Appendix - Tamu.edu


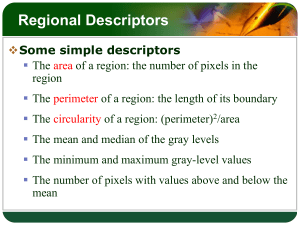

Appendix

This appendix describes the specific computational procedures used to extract the image features.

Gabor function and wavelets. Following Manjunath and Ma (1996), we employed Gabor filters to extract textures of different sizes and orientations (i.e. the Gabor-based texture feature). A Gabor filter (Fig. 5) is defined by a two-dimensional Gabor function, $g(x, y)$:

$$
g(x, y) = \frac{1}{2\pi\sigma_x\sigma_y} \exp\left[ -\frac{1}{2}\left( \frac{x^2}{\sigma_x^2} + \frac{y^2}{\sigma_y^2} \right) + 2\pi j W x \right]
\tag{1}
$$

where $\sigma_x$ and $\sigma_y$ denote the scaling parameters of the filter in the horizontal ($x$) and vertical ($y$) directions, and $W$ denotes the central frequency of the filter. The Fourier transform of the Gabor function $g(x, y)$ is:

$$
G(u, v) = \exp\left\{ -\frac{1}{2}\left[ \frac{(u - W)^2}{\sigma_u^2} + \frac{v^2}{\sigma_v^2} \right] \right\}
\tag{2}
$$

where $\sigma_u = 1/(2\pi\sigma_x)$ and $\sigma_v = 1/(2\pi\sigma_y)$. The set of Gabor filters (Fig. 5) is obtained by dilating and rotating the mother function $g(x, y)$ according to the class of functions defined by Manjunath and Ma (1996):

$$
\begin{aligned}
g_{mn}(x, y) &= a^{-m}\, g(x', y'), \qquad a > 1,\; m, n \text{ integers},\\
x' &= a^{-m}(x\cos\theta + y\sin\theta), \qquad y' = a^{-m}(-x\sin\theta + y\cos\theta)
\end{aligned}
\tag{3}
$$

where $\theta = n\pi/K$ is the orientation of the wavelet and $K$ is the total number of orientations. We generated Gabor filters at six orientations ($K = 6$). The size of each filter is determined by $a$ and $m$ in Equation 3: because the coordinates are scaled by $a^{-m}$ with $a > 1$, larger values of $m$ yield spatially larger filters with a lower central frequency ($W a^{-m}$). We generated Gabor filters at four sizes (Fig. 5), giving 24 filters in total (6 × 4 = 24). Each filter transforms an animal face image $I(x, y)$ into $X_{mn}(x, y)$:

$$
X_{mn}(x, y) = \iint I(x_1, y_1)\, g_{mn}^{*}(x - x_1, y - y_1)\, dx_1\, dy_1
\tag{4}
$$

where $*$ denotes the complex conjugate. Assuming that local texture regions are spatially homogeneous, we used the mean, $\mu_{mn}$, and the standard deviation, $\sigma_{mn}$, of the magnitude of the transformed image as textural features.
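The filter bank and the mean/standard-deviation features above can be sketched with NumPy. This is a minimal sketch, not the authors' implementation: the values of $a$, $W$, $\sigma_x$, $\sigma_y$ and the truncation of each kernel at about three effective standard deviations are assumptions, because the text does not report them, and Equation 5 is evaluated in its discrete, area-normalized form (pixel mean and standard deviation of $|X_{mn}|$). All function names are illustrative.

```python
import math
import numpy as np

# Minimal sketch of the Gabor filter bank (Eqs. 1, 3, 4) and the mean/std
# texture features (discrete form of Eq. 5). Parameter defaults are ASSUMED.

def gabor_kernel(sigma_x, sigma_y, W, a, m, theta):
    """Spatial filter g_mn(x, y) = a^-m g(x', y') sampled on a square grid."""
    scale = a ** (-m)
    # Truncate at ~3 effective standard deviations (assumed choice);
    # the effective width grows as a^m, so larger m gives a larger kernel.
    half = int(math.ceil(3 * max(sigma_x, sigma_y) / scale))
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotated and dilated coordinates x', y' (Eq. 3).
    xp = scale * (x * np.cos(theta) + y * np.sin(theta))
    yp = scale * (-x * np.sin(theta) + y * np.cos(theta))
    # Mother Gabor function g evaluated at (x', y') (Eq. 1).
    g = np.exp(-0.5 * (xp**2 / sigma_x**2 + yp**2 / sigma_y**2)
               + 2j * np.pi * W * xp) / (2 * np.pi * sigma_x * sigma_y)
    return scale * g


def convolve_same(image, kernel):
    """Linear 2-D convolution via zero-padded FFTs, cropped to image size."""
    h, w = image.shape
    kh, kw = kernel.shape
    shape = (h + kh - 1, w + kw - 1)
    full = np.fft.ifft2(np.fft.fft2(image, shape) * np.fft.fft2(kernel, shape))
    top, left = kh // 2, kw // 2
    return full[top:top + h, left:left + w]


def gabor_features(image, K=6, S=4, a=2.0, W=0.4, sigma_x=1.0, sigma_y=1.0):
    """Return [mu_00, sigma_00, mu_01, sigma_01, ...] of |X_mn| (2*K*S values)."""
    image = np.asarray(image, dtype=float)
    feats = []
    for m in range(S):              # S sizes
        for n in range(K):          # K orientations, theta = n*pi/K
            g = gabor_kernel(sigma_x, sigma_y, W, a, m, n * np.pi / K)
            X = convolve_same(image, np.conj(g))  # Eq. 4
            mag = np.abs(X)
            feats += [mag.mean(), mag.std()]       # mu_mn, sigma_mn
    return np.array(feats)


rng = np.random.default_rng(0)
face = rng.random((64, 64))  # stand-in for a grayscale face image I(x, y)
print(gabor_features(face).shape)  # (48,)
```

With $K = 6$ and four sizes, the returned vector has the 48 entries described in the text; convolving with the conjugated kernel is what makes `convolve_same` implement Equation 4 rather than a plain correlation.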
$$
\mu_{mn} = \iint \left| X_{mn}(x, y) \right| dx\, dy, \qquad
\sigma_{mn} = \sqrt{\iint \left( \left| X_{mn}(x, y) \right| - \mu_{mn} \right)^2 dx\, dy}
\tag{5}
$$

Using the 24 Gabor filters defined at six orientations and four sizes, the two textural features ($\mu_{mn}$ and $\sigma_{mn}$) derived from Equations 1 to 5 yield a 48-dimensional feature vector (24 × 2 = 48). We then employed principal component analysis (PCA) to reduce these high-dimensional feature vectors to one dimension (i.e. the component corresponding to the largest variance).¹

Co-occurrence. In addition to the Gabor-based texture features, we computed the spatial dependency among pixels (i.e. the co-occurrence-based texture feature) using the Gray-Level Co-occurrence Matrix (GLCM) defined by Haralick, Shanmugam, and Dinstein (1973). A GLCM counts how often adjacent pixels take grayscale values $i$ and $j$. For example, let matrix $I$ contain the grayscale values of image $I$, and let $(i, j)$ denote a possible pair of horizontally adjacent pixel values, with $i$ on the left and $j$ on the right:

$$
I = \begin{bmatrix} 0 & 0 & 1 & 1 \\ 0 & 2 & 1 & 1 \\ 2 & 2 & 2 & 2 \\ 0 & 2 & 1 & 0 \end{bmatrix},
\qquad \text{where} \quad
(i, j) = \begin{bmatrix} (0,0) & (0,1) & (0,2) \\ (1,0) & (1,1) & (1,2) \\ (2,0) & (2,1) & (2,2) \end{bmatrix}
\tag{6}
$$

The GLCM records the frequency of every possible pair of adjacent pixel values over the entire image. For instance, in the GLCM of image $I$ (i.e. $\mathrm{GLCM}_I$), the pair $(0, 0)$ occurs only once, whereas the pair $(0, 2)$ occurs twice, and so forth.

$$
\mathrm{GLCM}_I = \begin{bmatrix} 1 & 1 & 2 \\ 1 & 2 & 0 \\ 0 & 2 & 3 \end{bmatrix}
\tag{7}
$$

From the resulting GLCM, we estimated the probability $P(i, j)$ that a pair of pixel values $(i, j)$ occurs in each image by dividing each count by the total number of adjacent pairs (12 for image $I$: four rows of three horizontal pairs). For example, the probability of the pair $(0, 0)$ in image $I$ is 1/12, and the probability of the pair $(0, 2)$ is 2/12. Several features can be extracted from the $P(i, j)$ estimated for each animal face. Following Howarth and Rüger (2004), we extracted three: GLCM contrast, GLCM homogeneity, and GLCM energy.
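The worked GLCM example can be checked with a short NumPy sketch that counts horizontally adjacent pairs (left value $i$, right value $j$) and normalizes the counts to $P(i, j)$. The function name `glcm_horizontal` is illustrative, and the sketch handles only the horizontal, distance-1 offset used in the example; scikit-image's `graycomatrix` supports arbitrary distances and angles if more offsets are needed.

```python
import numpy as np

def glcm_horizontal(image, levels):
    """Count pairs (I[r, c], I[r, c + 1]) of horizontally adjacent pixels."""
    image = np.asarray(image)
    left = image[:, :-1].ravel()   # value i of each pair
    right = image[:, 1:].ravel()   # value j of each pair
    glcm = np.zeros((levels, levels), dtype=int)
    np.add.at(glcm, (left, right), 1)  # unbuffered, so repeated pairs accumulate
    return glcm


# 4 x 4 example image with grayscale levels 0, 1, 2 (Eq. 6).
I = np.array([[0, 0, 1, 1],
              [0, 2, 1, 1],
              [2, 2, 2, 2],
              [0, 2, 1, 0]])
G = glcm_horizontal(I, levels=3)
P = G / G.sum()        # 12 horizontal pairs in a 4 x 4 image
print(G)               # same counts as Eq. 7
print(P[0, 0], P[0, 2])
```

`np.add.at` is used instead of `glcm[left, right] += 1` because fancy-index assignment would count a repeated pair such as (2, 2) only once.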
$$
\begin{aligned}
\text{GLCM Contrast} &= \sum_i \sum_j (i - j)^2 P(i, j)\\
\text{GLCM Homogeneity} &= \sum_i \sum_j \frac{P(i, j)}{1 + |i - j|}\\
\text{GLCM Energy} &= \sum_i \sum_j P^2(i, j)
\end{aligned}
\tag{8}
$$

GLCM contrast measures the variation in grayscale level between adjacent pixels across the image, whereas GLCM homogeneity measures the similarity of adjacent grayscale levels. Thus, the larger the changes in grayscale, the higher the GLCM contrast and the lower the GLCM homogeneity. Finally, GLCM energy measures the overall probability of having distinctive, repeated grayscale patterns in the image; it reaches its maximum of 1 when a single pair of values accounts for all adjacent pixels, as in an image of constant gray level.

Brightness. Given an $n \times n$ image $I(x, y)$, we defined brightness as its average grayscale value:

$$
c_{\text{mean}} = \frac{1}{n^2} \sum_{x=1}^{n} \sum_{y=1}^{n} I(x, y)
\tag{9}
$$

Size. To represent size, we counted the number of pixels whose grayscale value exceeds a threshold $T$ ($T = 157$):

$$
p = \sum_x \sum_y B(x, y), \qquad \text{where} \quad
B(x, y) = \begin{cases} 0, & I(x, y) \le T \\ 1, & I(x, y) > T \end{cases}
\tag{10}
$$

In addition, we computed the ratio of width ($W$) to height ($H$) for each animal face:

$$
\text{WH ratio} = \frac{W}{H}
\tag{11}
$$

Contour. The contour of an animal's face can also be very important for discriminating between animal faces (Palmer, 1999). To extract contour features, we first thresholded the image to identify foreground pixels, with the threshold set to 0.95 times the largest pixel value in the image. Then, in angular increments of 1 degree, we identified the outermost foreground pixel relative to the foreground's center of mass, and stored the distances between the center of mass and these radially spaced outermost pixels in a 360-dimensional feature vector. Finally, we employed PCA to identify the directions of maximum variance in the 360-dimensional contour vectors, and kept the top three principal components as contour features.² Fig. 6 shows the resulting average contour (solid) superimposed on the contours +3 (dotted) and −3 (dashed) standard deviations away from the average along each of the three principal components.
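The scalar features in Equations 8 to 11 translate directly into NumPy. In this minimal sketch the function names are illustrative; the strict inequality $I(x, y) > T$ follows "above a threshold"; and, because the text does not say how $W$ and $H$ are measured, `wh_ratio` ASSUMES they are the width and height of the bounding box of the above-threshold pixels, which is a hypothetical choice rather than the authors' definition.

```python
import numpy as np

def glcm_props(P):
    """Contrast, homogeneity and energy of a normalized GLCM P (Eq. 8)."""
    i, j = np.indices(P.shape)
    contrast = np.sum((i - j) ** 2 * P)
    homogeneity = np.sum(P / (1.0 + np.abs(i - j)))
    energy = np.sum(P ** 2)
    return contrast, homogeneity, energy


def brightness(image):
    """Average grayscale value (Eq. 9); also valid for non-square images."""
    return float(np.mean(image))


def size_feature(image, T=157):
    """Number of pixels with I(x, y) > T (Eq. 10)."""
    return int(np.count_nonzero(np.asarray(image) > T))


def wh_ratio(image, T=157):
    """W / H (Eq. 11), ASSUMING W and H are the bounding-box width and
    height of the pixels above T; the text does not define them."""
    rows, cols = np.nonzero(np.asarray(image) > T)
    width = cols.max() - cols.min() + 1
    height = rows.max() - rows.min() + 1
    return width / height


# GLCM counts of the worked example (Eq. 7), normalized to P(i, j).
P = np.array([[1, 1, 2], [1, 2, 0], [0, 2, 3]]) / 12
print(glcm_props(P))  # values ≈ 1, 13/18 ≈ 0.722, 1/6 ≈ 0.167
```

For the example GLCM, the contrast of 1 comes from 12/12 weighted off-diagonal counts, and the energy of 1/6 is well below the maximum of 1, reflecting that no single pair of values dominates.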

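The contour signature and the PCA step can be sketched as follows. Several details are assumptions rather than the authors' procedure: foreground means pixels at or above 0.95 times the image maximum (the text does not say whether the comparison is strict); the "outermost pixel" in each direction is found by marching along a ray from the center of mass in half-pixel steps, a hypothetical implementation choice; and PCA is computed from an SVD of the mean-centered data. The helper `pca_project` (an illustrative name) with `k = 1` would equally give the one-dimensional summary of the 48-dimensional Gabor vectors.

```python
import numpy as np

def radial_contour(image, frac=0.95, n_angles=360, step=0.5):
    """Distance from the foreground centre of mass to the outermost
    foreground pixel along each of n_angles equally spaced rays."""
    image = np.asarray(image, dtype=float)
    fg = image >= frac * image.max()          # ASSUMED: >= 0.95 x max is foreground
    ys, xs = np.nonzero(fg)
    cy, cx = ys.mean(), xs.mean()             # centre of mass of the foreground
    h, w = fg.shape
    r = np.arange(0.0, np.hypot(h, w), step)  # sample radii along each ray
    radii = np.zeros(n_angles)
    for k in range(n_angles):
        t = np.deg2rad(k * 360.0 / n_angles)  # 1-degree increments by default
        px = np.rint(cx + r * np.cos(t)).astype(int)
        py = np.rint(cy + r * np.sin(t)).astype(int)
        inside = (px >= 0) & (px < w) & (py >= 0) & (py < h)
        hit = np.zeros(r.shape, dtype=bool)
        hit[inside] = fg[py[inside], px[inside]]
        if hit.any():
            radii[k] = r[np.flatnonzero(hit)[-1]]  # last foreground sample
    return radii


def pca_project(X, k):
    """Project rows of X onto the top-k principal components (via SVD).

    Returns scores (N x k), components (k x D), the mean row, and the
    standard deviation of the scores along each component.
    """
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    Xc = X - mean
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    comps = Vt[:k]
    std = s[:k] / np.sqrt(len(X) - 1)
    return Xc @ comps.T, comps, mean, std


yy, xx = np.mgrid[0:41, 0:41]
disk = ((xx - 20) ** 2 + (yy - 20) ** 2 <= 100).astype(float)
radii = radial_contour(disk)  # every entry is close to the disk radius of 10
```

Given a matrix of contour vectors (one row per face), the ±3 SD contours along component `c` in the style of Fig. 6 would be `mean + 3 * std[c] * comps[c]` and `mean - 3 * std[c] * comps[c]`. SVD component signs are arbitrary, so a sign flip only swaps which of the two curves is the +3 SD one.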