
advertisement

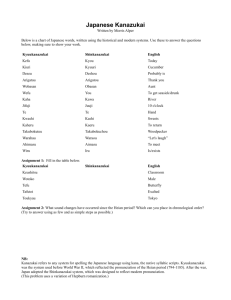

JEIDA Standard of Symbols for Japanese Text-to-Speech Synthesizers

TANAKA Kazuyo (1), AKABANE Makoto (2), MINOWA Toshimitsu (3), ITAHASHI Shuichi (4)

(1) Electrotechnical Laboratory, 1-1-4 Umezono, Tsukuba, 305-8568, Japan. ktanaka@etl.go.jp
(2) SONY Corporation, 2-15-3 Konan Minato-ku, Tokyo, 108-6201, Japan. akabane@pdp.crl.sony.co.jp
(3) Matsushita Communication Ind. Co., Ltd., Yokohama, 224-8539, Japan. minowa@adl.mci.mei.co.jp
(4) University of Tsukuba, 1-1-1 Tennodai, Tsukuba, 305-8573, Japan. itahashi@milab.is.tsukuba.ac.jp

ABSTRACT

This paper presents a standard of symbols commonly used for Japanese text-to-speech synthesizers. The standard was discussed in the Speech Input/Output Systems Expert Committee of the Japan Electronic Industry Development Association (JEIDA) and was announced by JEIDA as the JEIDA Standard "JEIDA-62-2000" in March 2000. We describe here its basic policy and an outline of the symbol system with several examples.

1. INTRODUCTION

Text-to-speech (TTS) synthesizers have recently spread rapidly into a variety of applications and services, and Japanese manufacturers have put many TTS engines on the market. These engines, however, employ individual, machine-dependent interfaces and symbol systems, which is a serious obstacle for users who implement application systems incorporating any of them. To help resolve this problem, the JEIDA Committee began in 1995 to discuss standardization of the symbols and input format used for TTS engines and, in March 2000, released the first concluding version as the JEIDA Standard "JEIDA-62-2000" [1]. At the same time, the Committee also presented guidelines for speech synthesis system performance evaluation as the "JEIDA Guideline" [2].

In the following sections, we describe the outline of the symbol system with several examples and comments. In the "JEIDA-62-2000" version, the Committee determined the symbols and description format that every TTS engine needs to accept as its input; that is, the TTS engine synthesizes speech by reading a character sequence written in the standardized symbols and description format. Through the discussion in the Committee, we concluded that the symbols should have the following characteristics: (1) they should not depend on any specific application or platform, such as hardware architecture, operating system, programming language, or character code, and (2) they should be usable in a wide range of applications.

2. CLASSIFICATION OF THE SYMBOLS

First, to make clear the characteristics of the symbols for TTS synthesizers, we classified them into the categories shown in Table 1, where the vertical axis indicates the level of description and the horizontal axis indicates the features conveyed by the symbols. Fig. 1 shows this more specifically: it indicates the configuration of each symbol category in relation to a TTS system and gives typical examples of the text format.

Table 1: Classification of the Symbols for Japanese TTS Synthesizers

Level             Pronunciation feature    Prosodic feature    Control tag
Text-level        ----                     ----                Text-embedded tags
Kana-level        Pronunciation symbols    Prosodic symbols
Allophone-level   Pronunciation symbols    Prosodic symbols

(The pronunciation and prosodic symbols at the Kana level form the Kana-level representation; those at the Allophone level form the Allophone-level representation.)

Fig. 1: JEIDA Symbols in relation to a TTS system. Processing stages shown in the figure: Input Text, Japanese Text Analysis, Allophone-level Analysis, Synthesizer Engine. Example representations shown in the figure:

Text-level representation: <PRON SYM="キョ'-">今日</PRON>は天気がよい。
Kana-level representation (Kana): キョウ'ワ|_テンキカ゜/ヨ'イ.
Kana-level representation (Alphabetic): kyo'-wa|_te'nnkinga/yo'i.
Allophone-level representation (SAMPA): kjo!:wa||te!NkiNa|jo!i

3. KANA-LEVEL REPRESENTATION

3.1 Pronunciation symbols

The Kana-level Representation is used at the stage where the basic pronunciation of a word sequence is given, based on the result of text analysis. Input Japanese texts are usually written with Chinese characters, Hiragana and Katakana characters, Arabic numerals, etc., so these texts need to be transformed into pronunciation symbol sequences using the text analysis result. The pronunciation symbols represent the phonetic aspect of words, excluding their prosodic features, and are uniquely defined for every Japanese monosyllable (see Appendix-I).

In Japan, pronunciation is usually written in Kana or alphabetic characters, so we accepted both character sets for the representation symbols. Thus, a monosyllable is written as 1) a single Kana character or Kana character string (e.g. キョー), or 2) a single alphabetic character or alphabetic character string (e.g. kyo-), as indicated in the Kana-level representation shown in Fig. 1. While these character representations are uniquely defined, their coding methods are not specified here, so that the standard remains independent of application platforms.

The representation departs from standard Japanese orthography in several respects. No characters are provided for the devoicing of vowels, because devoicing is usually predictable from the phonetic context. On the other hand, the nasalization of /g/, which is phonetically distinct but not recognized as a phonemic unit in the Japanese language, is explicitly represented by a different character, because this type of phonetic alternation is derived from the text analysis result.

3.2 Prosodic symbols

The prosodic symbols are defined for the following prosodic features contained in Japanese texts: accent position, accent phrase boundary, phrase boundary, sentence end and its intonation type (e.g., normal, interrogative, or exclamatory), and pause insertion (see Appendix-II). They are defined on the assumption that they represent the Tokyo dialect. We did not define symbols for fine prosodic phenomena, because such symbols might be troublesome when implementing various applications. Several more detailed definitions are given in the Allophone-level Representation.

4. ALLOPHONE-LEVEL REPRESENTATION

The Allophone-level Representation is defined for specifying pronunciations in more detail. It is based on the IPA representation of Japanese syllables. However, the IPA representation of Japanese itself still includes several ambiguous items, so we referred to a report by Oonishi et al. [3] in determining this standard. For ease of use in computer programs, we adopted XSAMPA (eXtended Speech Assessment Methods Phonetic Alphabet [5,6]) as the ASCII code expression corresponding to the IPA.

Prosodic features are also represented using symbols defined among the suprasegmentals of the IPA. To represent accentuation, we adopted symbols indicating rise and fall positions (see Appendix-III), so that the representation can also cover several dialects other than the Tokyo dialect. Since the IPA is not intended to express general prosodic characteristics, the current set of symbols for prosodic features is not yet sufficient to express details. We consider that the symbols for prosodic features need further investigation in order to compose a more convenient representation.

5. TEXT-EMBEDDED CONTROL-TAGS

The Text-Embedded Control-Tags are defined to specify, for example, the language, speaking rate, volume level, and part of speech of the input text in which they are embedded, and are written in XML format. We determined them by referring to several documents already published, such as the Microsoft Speech API (SAPI) [7] and the Aural Style Sheet of the W3C (World Wide Web Consortium) [8]. At the same time, we also considered the characteristics of the Japanese language and of TTS engines for Japanese. Thus, most of the Control-Tags that overlap with those already defined in SAPI were adopted in the JEIDA Standard, and several tags were added specifically for Japanese TTS synthesizers.

5.1 Examples of Control-Tags

The Text-Embedded Control-Tags are categorized as those for controlling TTS systems, those for indicating pronunciations, and those for helping text analysis. Examples are shown in the following.

(1) Tag examples for controlling TTS systems:
BOOKMARK: inserting a bookmark.
SPEECH: specifying the scope of the Tags defined in this standard.
LANG: specifying a language.
VOICE: specifying a voice font, such as the voice tone of a specified person.
RESET: resetting values in the section corresponding to "SPEECH".

(2) Tag examples for indicating pronunciations:
SILENCE: inserting a pause.
EMPH: indicating an emphasized phrase, word, etc.
SPELL: indicating that a word is to be read by pronouncing its spelling letter by letter.
RATE: specifying a speaking rate.
VOLUME: specifying the volume of speech.
PITCH: specifying the average pitch level.

(3) Tag examples for helping text analysis:
PARTOFSP: specifying a part of speech in the text.
CONTEXT: specifying prior information in the scope.
REGWORD: registering a pronunciation and/or part of speech, etc., for a specified word.

5.2 Attributes Specification

Two ways are acceptable for specifying attributes of the Tags, such as speaking rate, pitch level, and volume of speech. One is to express the attribute by an absolute value (or level), and the other is to express it by a value (or level) relative to the preceding one. In both cases, a closing tag restores the value that was in effect before the matching opening tag, as shown in the following.

(1) Using the absolute value (the name of the attribute is "AbsAttr"):

<TagName AbsAttr="5">          (the value is set to 5)
  <TagName AbsAttr="6">        (the value is set to 6)
  </TagName>                   (the value is reset to 5)
</TagName>                     (the value is reset to the one in effect before 5)

(2) Using the relative value (the name of the attribute is "RelAttr", and the reference value is 0):

<TagName RelAttr="+5">         (the value is set to 5)
  <TagName RelAttr="+6">       (the value is set to 11)
  </TagName>                   (the value is reset to 5)
</TagName>                     (the value is reset to 0)

6. CONCLUDING REMARKS

The JEIDA Standard of Symbols "JEIDA-62-2000" is the first version, which defines the primary part of the symbol system for Japanese TTS synthesizers. It will be revised in the future to keep up with the progress of TTS engines and their application systems.

ACKNOWLEDGMENT

The authors wish to thank all the members of the Speech Input/Output Systems Expert Committee of JEIDA for their intensive effort and discussion to establish this Standard of Symbols.

REFERENCES

[1] Japan Electronic Industry Development Association (JEIDA) Standard: Standard of symbols for Japanese text-to-speech synthesizer (in Japanese), JEIDA-62-2000 (March, 2000).
[2] JEIDA: Guidelines for speech synthesis system performance evaluation methods (in Japanese), JEIDA-G-2000 (March, 2000). S. Itahashi, "Guidelines for Japanese speech synthesizer evaluation," Proc. LREC2000, pp.xxx-xxx, May, 2000.
[3] M. Oonishi, S. Toki, M. Dantsuji, "Research report on phonetic symbol representation of Japanese," Proceedings 1995 Meeting, Phonetic Association of Japan, pp.11-17, 1995.
[4] Dafydd Gibbon, Roger Moore, Richard Winski, "Handbook of Standards and Resources for Spoken Language Systems," Mouton de Gruyter, 1997.
[5] SAMPA http://www.phon.ucl.ac.uk/home/sampa/home
[6] Microsoft Speech SDK http://research.microsoft.com/stg/
[7] W3C - http://www.w3.org/TR/WD-CSS2/
[8] Voice XML - http://www.voicexml.org/

Appendix-I: Kana-level Symbols for Japanese Monosyllable Pronunciation.
Table 2-1: Pronunciation symbols (each entry gives the Kana symbol followed by its alphabetic symbol)

a-series: ア a, カ ka, サ sa, タ ta, ナ na, ハ ha, マ ma, ラ ra, ガ ga, ザ za, ダ da, バ ba, パ pa, ヴァ va, カ゜ nga
i-series: イ i, キ ki, シ shi, チ chi, ニ ni, ヒ hi, ミ mi, リ ri, ギ gi, ジ ji, ディ di, ビ bi, ピ pi, ティ ti, ヴィ vi, キ゜ ngi, スィ si, ズィ zi
u-series: ウ u, ク ku, ス su, ツ tsu, ヌ nu, フ hu, ム mu, ル ru, グ gu, ズ zu, ドゥ du, ブ bu, プ pu, トゥ tu, ヴ vu, ク゜ ngu
e-series: エ e, ケ ke, セ se, テ te, ネ ne, ヘ he, メ me, レ re, ゲ ge, ゼ ze, デ de, ベ be, ペ pe, ヴェ ve, ケ゜ nge
o-series: オ o, コ ko, ソ so, ト to, ノ no, ホ ho, モ mo, ロ ro, ゴ go, ゾ zo, ド do, ボ bo, ポ po, ヴォ vo, コ゜ ngo
ya-series: ヤ ya, キャ kya, シャ sha, チャ cha, ニャ nya, ヒャ hya, ミャ mya, リャ rya, ギャ gya, ジャ ja, デャ dya, ビャ bya, ピャ pya, テャ tya, ヴャ vya, キ゜ャ ngya, フャ fya
yu-series: ユ yu, キュ kyu, シュ shu, チュ chu, ニュ nyu, ヒュ hyu, ミュ myu, リュ ryu, ギュ gyu, ジュ ju, デュ dyu, ビュ byu, ピュ pyu, テュ tyu, ヴュ vyu, キ゜ュ ngyu, フュ fyu
ye-series: イェ ye, キェ kye, シェ she, チェ che, ニェ nye, ヒェ hye, ミェ mye, リェ rye, ギェ gye, ジェ je, ディェ dye, ビェ bye, ピェ pye, ティェ tye, ヴィェ vye, キ゜ェ ngye, フィェ fye
yo-series: ヨ yo, キョ kyo, ショ sho, チョ cho, ニョ nyo, ヒョ hyo, ミョ myo, リョ ryo, ギョ gyo, ジョ jo, デョ dyo, ビョ byo, ピョ pyo, テョ tyo, ヴョ vyo, キ゜ョ ngyo, フョ fyo
wa-series: ワ wa, クァ kwa, スァ swa, ツァ tsa, ヌァ nwa, ファ fa, ムァ mwa, ルァ rwa, グァ gwa, ズァ zwa, ドゥァ dwa, ブァ bwa, プァ pwa, トゥァ twa, ヴゥァ vwa, ク゜ァ ngwa
wi-series: ウィ wi, クィ kwi, スゥィ swi, ツィ tsi, ヌィ nwi, フィ fi, ムィ mwi, ルィ rwi, グィ gwi, ズィ zwi, ドゥィ dwi, ブィ bwi, プィ pwi, トゥィ twi, ヴゥィ vwi, ク゜ィ ngwi
we-series: ウェ we, クェ kwe, スェ swe, ツェ tse, ヌェ nwe, フェ fe, ムェ mwe, ルェ rwe, グェ gwe, ズェ zwe, ドゥェ dwe, ブェ bwe, プェ pwe, トゥェ twe, ヴゥェ vwe, ク゜ェ ngwe
wo-series: ウォ wo, クォ kwo, スォ swo, ツォ tso, ヌォ nwo, フォ fo, ムォ mwo, ルォ rwo, グォ gwo, ズォ zwo, ドゥォ dwo, ブォ bwo, プォ pwo, トゥォ two, ヴゥォ vwo, ク゜ォ ngwo
Other symbols: ン nn, ッ q, ー -

Appendix-II: Kana-level Symbols for Prosodic Features.

Function                                         Symbol   ASCII code
Accent position                                  '        039
Accent phrase boundary                           /        047
Phrase boundary                                  |        124
End of sentence, Normal/assertive intonation     .        046
End of sentence, Interrogative intonation        ?        063
End of sentence, Exclamatory intonation          !        033
1-mora pause insertion                           _        095

(The ASCII codes are given in decimal.)

Appendix-III: Allophone-level Symbols for Prosodic Features.

Function                                         XSAMPA   ASCII code
Accent, Upstep                                   ^        094
Accent, Downstep                                 !        033
Accent phrase boundary, Minor (foot) group       |        124
Phrase boundary, Major (intonation) group        ||       124 124
End of sentence, Global fall                     <F>      060 070 062
End of sentence, Global rise                     <R>      060 082 062
1-mora pause insertion                           ...      046 046 046

(The IPA symbols corresponding to the XSAMPA symbols are omitted here.)
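
As an illustration of how the Kana-level prosodic symbols of Appendix-II separate pronunciation symbols from prosodic marks, the following minimal Python sketch splits the alphabetic example string of Fig. 1 into tokens. The function name `tokenize_kana_level`, the token layout, and the descriptive labels are assumptions made for this sketch; they are not part of the JEIDA standard.

```python
# Kana-level prosodic symbols as listed in Appendix-II.
# The descriptive labels are illustrative, not defined by the standard.
PROSODIC_SYMBOLS = {
    "'": "accent position",
    "/": "accent phrase boundary",
    "|": "phrase boundary",
    ".": "end of sentence (normal)",
    "?": "end of sentence (interrogative)",
    "!": "end of sentence (exclamatory)",
    "_": "1-mora pause",
}


def tokenize_kana_level(text):
    """Split a Kana-level string into ("pron", chunk) and ("prosody", label) tokens."""
    tokens = []
    buffer = []  # pronunciation characters collected since the last prosodic symbol
    for ch in text:
        if ch in PROSODIC_SYMBOLS:
            if buffer:
                tokens.append(("pron", "".join(buffer)))
                buffer = []
            tokens.append(("prosody", PROSODIC_SYMBOLS[ch]))
        else:
            buffer.append(ch)
    if buffer:
        tokens.append(("pron", "".join(buffer)))
    return tokens


# Alphabetic Kana-level example from Fig. 1.
tokens = tokenize_kana_level("kyo'-wa|_te'nnkinga/yo'i.")
for kind, value in tokens:
    print(kind, value)
```

The sketch treats every character that is not a prosodic symbol as part of a pronunciation chunk, so the long-vowel symbol `-` stays with the pronunciation symbols. Splitting the chunks further into the monosyllables of Appendix-I would need a lookup against that table.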
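
The nesting behavior of tag attributes described in Section 5.2 can be modeled with a stack: an opening tag pushes a new value, and the matching closing tag pops it, restoring the previous one. The Python sketch below reproduces the two examples of that section under this reading. The class `AttributeScope` and its method names are hypothetical and do not come from the standard.

```python
class AttributeScope:
    """Tracks one numeric tag attribute (e.g. rate or volume) across nested tags."""

    def __init__(self, reference=0):
        # The bottom of the stack is the value in effect outside all tags.
        self._stack = [reference]

    @property
    def value(self):
        """Value currently in effect."""
        return self._stack[-1]

    def open_absolute(self, value):
        """Opening tag with an absolute value, e.g. <TagName AbsAttr="5">."""
        self._stack.append(value)
        return self.value

    def open_relative(self, delta):
        """Opening tag with a relative value, e.g. <TagName RelAttr="+5">."""
        self._stack.append(self.value + delta)
        return self.value

    def close(self):
        """Closing tag: restore the value in effect before the matching open."""
        if len(self._stack) == 1:
            raise ValueError("closing tag without a matching opening tag")
        self._stack.pop()
        return self.value


# Relative example from Section 5.2, with reference value 0.
scope = AttributeScope(reference=0)
trace = [
    scope.open_relative(+5),  # <TagName RelAttr="+5">
    scope.open_relative(+6),  # <TagName RelAttr="+6">
    scope.close(),            # </TagName>
    scope.close(),            # </TagName>
]
print(trace)  # [5, 11, 5, 0]
```

A stack is used here because it makes the "reset to the previous value" rule hold for any nesting depth and for any mixture of absolute and relative settings.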
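
Because the Text-Embedded Control-Tags are written in XML format, the text-level example of Fig. 1 can be read with a general-purpose XML parser. The Python sketch below uses the standard-library `xml.etree.ElementTree` module to pair each stretch of text with the pronunciation given by a PRON tag, if any. Wrapping the sentence in a SPEECH element to obtain a single root, and the helper name `iter_segments`, are assumptions of this sketch; the exact document structure required by the standard is not described in this paper.

```python
import xml.etree.ElementTree as ET

# Text-level example from Fig. 1, wrapped in a SPEECH element (an assumption
# of this sketch) so that the input has a single XML root.
text = "<SPEECH><PRON SYM=\"キョ'-\">今日</PRON>は天気がよい。</SPEECH>"


def iter_segments(root):
    """Yield (surface_text, pronunciation_or_None) pairs in document order."""
    if root.text:
        yield (root.text, None)
    for child in root:
        if child.tag == "PRON":
            # The SYM attribute carries the Kana-level pronunciation.
            yield (child.text or "", child.get("SYM"))
        elif child.text:
            # Other tags are passed through without a pronunciation here.
            yield (child.text, None)
        if child.tail:
            yield (child.tail, None)


root = ET.fromstring(text)
segments = list(iter_segments(root))
for surface, pron in segments:
    print(surface, pron)
```

Only one level of nesting is handled, which is enough for the Fig. 1 example. A fuller reader would recurse into child elements and dispatch on the other tag names listed in Section 5.1.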