ParalleX Execution Model - Center for Research in Extreme Scale

INDIANA UNIVERSITY

ParalleX Execution Model

version 3.1

Thomas Sterling

12/17/2012

ParalleX is an execution model being developed to meet the objectives of the DOE Exascale Program. ParalleX is an evolving model that is intended to guide the co-design of the extreme-scale architecture, programming models, and operating and runtime system software. It is driven by DOE mission-critical problems. ParalleX is being employed by the XPRESS Project under the ASCR X-stack Program and by the ASCR Modeling Execution Models Project as well. This early technical report offers a discussion of the ParalleX model and its primary foundation concepts. It represents a work in progress and will be continuously expanded, refined, clarified, and otherwise improved to guide the evolution of the Exascale system architecture and software.


1. Introduction

An innovative execution model is required to address the challenges impeding progress toward effective Exascale computing before the end of this decade, and to accelerate problems that demand strong scaling even today. The strategic challenges include time and energy efficiency, scalability, programmability, and reliability. Designs for Exascale systems based on evolutionary extensions of current conventional methods are likely to fail to meet these challenges, at least for some crucial application problem domains. An execution model provides a holistic definition of a class of systems and a conceptual framework for the codesign, operation, and interoperability of the many hardware and software layers that together make up a complete parallel computing system. ParalleX is an innovative experimental execution model under development to address these challenges and enable extreme-scale computation. ParalleX serves as the guiding execution model for the DOE ASCR X-stack Program XPRESS Project and the DOE ASCR Modeling Execution Models Project.

This technical report, “ParalleX Execution Model, version 3.1”, provides a description of the ParalleX model in its current instantiation. It presents the overall strategy and describes the primary conceptual components of ParalleX. Its content is offered to foster collaboration on the development of a new execution model, to provide guidance in the development of the stack of new system software compliant with the needs of ParalleX, and to support the codesign of the hardware and software system layers, including core and system hardware architecture, system software of operating systems and runtime systems, and programming models, languages, and tools. It also informs the development of new parallel applications and algorithms to take advantage of these systems. Unlike previous execution models, ParalleX facilitates not only basic application performance through scalability but also other critical factors of practical operation, including reliability, protection, and power consumption, while exploiting new self-aware control methods.

1.1 ParalleX Execution Model

1.1.1 Goals and Objectives

The goal of ParalleX is to replace the Communicating Sequential Processes (CSP) parallel execution model in order 1) to enable future practical systems capable of performance levels beyond an Exaflops, 2) to accelerate scaling-constrained applications through improved methods of strong scaling, and 3) to facilitate efficient processing of dynamic directed-graph-based applications that exhibit memory-intensive computation and heavy system-wide communication and control. ParalleX is intended to dramatically improve scalability, temporal and energy efficiency, reliability, and programmability.


The objectives of ParalleX include providing the means to expose and exploit a high degree of currently untapped parallelism, to hide latency, to significantly decrease overhead, and to diminish the impact of logical and physical resource access contention, thereby significantly improving efficiency in the use of both physical resources and energy. An additional objective is to exploit runtime methods to contribute to the efficiency challenge above and to significantly improve programmability by reducing programmer responsibility for explicit resource management and task scheduling. Another objective is to enable new solutions to the problems of fault tolerance and protection. A final objective is to devise a framework for applications emphasizing metadata processing, such as dynamic directed graphs.

1.1.2 Strategy

The strategy of ParalleX is based on the idea that HPC phase changes are both reflected in and facilitated by a corresponding change in execution model, which provides a conceptual framework or paradigm that permits codesign of the system layers and application workloads within the scope of enabling technology properties. ParalleX was first and foremost intended to shift system operation from static resource management and task scheduling of coarse-grain parallelism to dynamic allocation of tasks to resources at the level of medium-grain parallelism through lightweight user multithreading. This strategy was combined with message-driven computation to achieve split-phase transaction execution and intrinsic system-wide latency hiding. The effect sought is to eliminate resource blocking even when task elements are logically blocked.

To expose more parallelism, ParalleX eliminates global barriers and instead employs a rich set of local synchronization mechanisms for dynamic adaptive control in the presence of widely varying task granularity. To maintain control locality, continuations are defined to migrate throughout the system rather than being tied to fixed program counters, stack pointers, and the occasional global barrier. ParalleX is defined to support a global address space with a hierarchical logical name space. ParalleX must also provide means for fault tolerance, protection, and runtime energy use management.

A philosophy of simple design for highly repeated components is adopted, so that complexity of operation arises as an emergent property of dynamic behavior while development cost remains low. Policies are deliberately left unspecified to allow flexibility of implementation and portability across different systems, across systems of the same type but varied scale, and across systems of different generations. Such diversity of policy is supported through a set of specified invariants of operation that guarantee compatibility among ParalleX-compliant systems.

1.1.3 Major Components

ParalleX comprises a set of constructs, each of which may be realized in many ways, from pure software to pure hardware or a mix of the two. To introduce the ParalleX model, the small set of primary components required to achieve the goals and objectives through the strategy described above is presented here.


1.1.3.1 Compute Complexes

The actual work is performed by small, first-class (named) executing objects that combine code with private state, or ParalleX Variables. Compute Complexes have limited lifetimes, and each exists only within a local physical resource called a “Synchronous Domain”. Flow control within a complex is a combination of static dataflow or functional execution, using single-assignment semantics on the ParalleX Variables, and operations on global mutable data, including synchronization objects (discussed below). Complexes may be preempted, suspended, and resumed for dynamic resource usage.
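To make single-assignment semantics and suspension concrete, here is a minimal Python sketch, not a ParalleX implementation. It assumes a complex can be modeled as a Python generator that suspends whenever an operand it needs is not yet assigned, with a simple round-robin loop standing in for the runtime scheduler; cooperative suspension is only a stand-in for true preemption. `PXVariable`, `complex_body`, `producer`, and `run` are hypothetical names introduced for illustration.

```python
from collections import deque


class PXVariable:
    """Hypothetical single-assignment variable: it may be written exactly once."""

    _UNSET = object()

    def __init__(self, name):
        self.name = name
        self._value = PXVariable._UNSET

    def is_set(self):
        return self._value is not PXVariable._UNSET

    def assign(self, value):
        if self.is_set():
            raise ValueError(f"{self.name} is single-assignment and already set")
        self._value = value

    def value(self):
        if not self.is_set():
            raise LookupError(f"{self.name} has not been assigned yet")
        return self._value


def complex_body(x, y, out):
    """A complex that suspends (yields) until each operand it needs exists."""
    while not x.is_set():
        yield "suspended on x"
    while not y.is_set():
        yield "suspended on y"
    out.assign(x.value() + y.value())


def producer(x, y):
    """Another complex that produces the operands in two separate steps."""
    x.assign(2)
    yield "suspended after x"
    y.assign(3)


def run(complexes, max_steps=1000):
    """Round-robin scheduler: resume each complex until all have finished."""
    ready = deque(complexes)
    steps = 0
    while ready:
        if steps == max_steps:
            raise RuntimeError("complexes still suspended; possible deadlock")
        current = ready.popleft()
        steps += 1
        try:
            next(current)          # resume until the next suspension point
            ready.append(current)  # not finished yet: requeue it
        except StopIteration:
            pass                   # the complex terminated; its lifetime ends
    return steps


x, y, z = PXVariable("x"), PXVariable("y"), PXVariable("z")
steps = run([complex_body(x, y, z), producer(x, y)])
print(z.value(), steps)  # 5 5
```

Calling `z.assign(...)` a second time would raise `ValueError`, which is the single-assignment rule at work; shared mutable data, by contrast, would need the synchronization objects discussed below.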

1.1.3.2 ParalleX Processes

ParalleX Processes are contexts that contain one or more executing complexes, their code objects, ParalleX Variables, and relatively near global state. They maintain the mapping of their state across one or more synchronous domains, as well as referenceable operating-quality state and control actors. They may also include child processes.

1.1.3.3 Local Control Objects

Advanced synchronization objects are used to manage asynchrony of operation, expose parallelism, protect global mutable variables, control eager versus lazy evaluation, support migration of continuations, handle anonymous producer-consumer computation and much more.

A local control object (LCO) generally incorporates a semantic structure that manages incident events, updates its control state, compares that state against a predicate, and, under appropriate conditions, triggers new actions such as a compute complex. While LCOs can be used for primitive conventional synchronization constructs such as semaphores and mutexes, they can be much more powerful, as with dataflow templates and futures. They are local in that any one such object must exist in only one synchronous domain at a time (although it may migrate between domains). This differs from distributed control operations, which may be spread across multiple synchronous domains and are formed from a collection of local control objects.
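The event, state, predicate, and action structure described above can be sketched in a few lines of Python. This is a hypothetical illustration, not a ParalleX API: the class `LCO` and its `signal` method are invented names, and a `threading.Lock` stands in for the atomic update that a synchronous domain would guarantee. The example configures the generic object as a dataflow-style join that fires once three operands have arrived.

```python
import threading


class LCO:
    """Hypothetical generic local control object.

    Incident events update a control state; when the predicate becomes true,
    the LCO triggers its action exactly once.
    """

    def __init__(self, state, update, predicate, action):
        self._lock = threading.Lock()  # models atomic update in one synchronous domain
        self.state = state
        self._update = update
        self._predicate = predicate
        self._action = action
        self.triggered = False

    def signal(self, event):
        with self._lock:
            if self.triggered:
                raise RuntimeError("LCO has already triggered")
            self.state = self._update(self.state, event)
            self.triggered = bool(self._predicate(self.state))
            fire = self.triggered
        # Launch the new action (e.g. a compute complex) outside the critical section.
        return self._action(self.state) if fire else None


results = []
# Dataflow-style join: fires once three operands have arrived, passing on their sum.
join = LCO(state=(),
           update=lambda s, e: s + (e,),
           predicate=lambda s: len(s) == 3,
           action=lambda s: results.append(sum(s)))
for operand in (1, 2, 3):
    join.signal(operand)
print(results)  # [6]
```

Swapping in a different `update` and `predicate` (for example, a counter that fires at zero) gives a semaphore-like behavior from the same structure, which is the sense in which conventional primitives are a special case.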

1.1.3.4 Parcels

A parcel is a class of active message provided to allow message-driven computation and the moving of work to the data when appropriate, rather than always moving the data to the work. A parcel can cause an action to occur remotely (in a different synchronous domain), applied to a named logical or physical entity such as a program variable. Parcels specify the destination object to which they are to be applied, the action they are to perform, additional operand values required to complete the action, and the continuation defining what is to happen upon completion of the specified action. Block data moves are among the actions that parcels can perform.
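The four parts of a parcel (destination, action, operands, continuation) map naturally onto a small record. The following Python sketch is an illustration under stated assumptions, not the ParalleX parcel format: `Parcel`, `Domain`, and `send` are hypothetical names, and the linear search in `send` merely stands in for name resolution through the global address space. The continuation in the example is itself a new parcel sent to a second domain.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Tuple


@dataclass
class Parcel:
    destination: str                  # global name of the target object
    action: Callable[..., Any]        # work to perform at the destination
    operands: Tuple[Any, ...] = ()    # extra values the action needs
    continuation: Optional[Callable[[Any], Any]] = None  # what happens next


class Domain:
    """Hypothetical synchronous domain owning some named objects."""

    def __init__(self, objects):
        self.objects = dict(objects)

    def receive(self, parcel):
        # Move the work to the data: apply the action to the local object.
        target = self.objects[parcel.destination]
        result = parcel.action(target, *parcel.operands)
        if parcel.continuation is not None:
            return parcel.continuation(result)
        return result


def send(domains, parcel):
    """Deliver a parcel to whichever domain holds its destination object."""
    for domain in domains:
        if parcel.destination in domain.objects:
            return domain.receive(parcel)
    raise KeyError(parcel.destination)


a = Domain({"vec": [1, 2, 3, 4]})
b = Domain({"log": []})
domains = [a, b]


def record(result):
    # The continuation forwards the result as a new parcel to another domain.
    return send(domains, Parcel("log", list.append, (result,)))


send(domains, Parcel("vec", lambda v, lo, hi: sum(v[lo:hi]), (1, 3), record))
print(b.objects["log"])  # [5]
```

The summation runs where `vec` lives; only the small result travels onward, which is the "move work to the data" case.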

1.1.3.5 Percolation

A variation of parcels, percolation offers the means to move work to a physical resource such as a GPU in order to hide latency and offload overhead from costly units. Percolation moves all the information needed to perform a complex, possibly highly parallel, task to a separate execution component, and moves its results back to main memory.
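A percolated task bundles its code with all the data it needs so that the target component never has to reach back into main memory. The sketch below assumes a Python `ThreadPoolExecutor` as a stand-in for an accelerator such as a GPU; `PercolatedTask` and `percolate` are hypothetical names, and real percolation would involve staging into device memory and overhead offloading that this sketch does not model.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class PercolatedTask:
    kernel: Callable[[List[float]], List[float]]  # code to run on the separate component
    inputs: List[float]                            # everything the kernel needs


def percolate(accelerator, task, main_memory: Dict[str, List[float]], key: str):
    """Ship a self-contained task to the accelerator and copy its results back."""
    staged = list(task.inputs)  # private copy: the kernel never touches main memory

    def run_and_copy_back():
        main_memory[key] = task.kernel(staged)

    # Returns immediately; the caller can keep working while the task runs.
    return accelerator.submit(run_and_copy_back)


main_memory = {}
with ThreadPoolExecutor(max_workers=1) as gpu:  # a thread pool standing in for a GPU
    job = percolate(gpu, PercolatedTask(lambda xs: [x * x for x in xs], [1.0, 2.0, 3.0]),
                    main_memory, "squares")
    # ... other useful work could overlap with the percolated task here ...
    job.result()  # wait for completion (re-raises any kernel exception)
print(main_memory["squares"])  # [1.0, 4.0, 9.0]
```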


1.1.3.6 Micro-checkpointing

The model incorporates a means of locally managing copies of designated variable values until the follow-on results for which they are precedents have been validated. It provides a framework within which fault-tolerance policies can be developed and included in a given application execution. These methods scale and can employ multiple methods of fault detection, isolation, correction, and recovery.
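The retain-until-validated idea can be illustrated with a small Python sketch. It assumes a validation predicate as a stand-in for fault detection and simulates a transient fault on the first attempt; `MicroCheckpoint` and `checked_step` are hypothetical names, and the retry-from-copy recovery shown is only one of the many policies the framework is meant to admit.

```python
import copy


class MicroCheckpoint:
    """Hypothetical store that keeps copies of precedent values until the
    results computed from them have been validated."""

    def __init__(self):
        self._saved = {}

    def save(self, name, value):
        self._saved[name] = copy.deepcopy(value)

    def restore(self, name):
        return copy.deepcopy(self._saved[name])

    def release(self, name):
        del self._saved[name]

    def held(self):
        return sorted(self._saved)


def checked_step(ckpt, name, value, compute, validate, max_retries=3):
    ckpt.save(name, value)
    for _ in range(max_retries):
        result = compute(ckpt.restore(name))  # recompute from the retained copy
        if validate(result):
            ckpt.release(name)                # precedent no longer needed
            return result
    raise RuntimeError(f"step on {name!r} failed after {max_retries} attempts")


faults = iter([True, False])  # simulated: the first attempt suffers a transient fault


def compute(v):
    out = [x * 2 for x in v]
    if next(faults):
        out[0] = -1  # corrupt one element
    return out


ckpt = MicroCheckpoint()
result = checked_step(ckpt, "v", [1, 2, 3], compute, lambda r: all(x >= 0 for x in r))
print(result, ckpt.held())  # [2, 4, 6] []
```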

1.2 Execution Model Concept and Tool

An execution model (or model of computation) is a paradigm that provides the governing principles to guide the co-design and cooperative operation of the vertical layers comprising a parallel computing system. Execution models are devised to respond to changes in enabling technologies and needs of new classes of applications to exploit the opportunities afforded by such new technologies while addressing the challenges they impose to effective exploitation.

Throughout the history of high performance computing (HPC), there have been at least five mainstream scalable execution models, including the vector model and communicating sequential processes (CSP), the latter dominating the last two decades of MPPs and commodity clusters. As the Petaflops era begins, powered by multicore and GPU technologies, a new model of computation is required to permit the development and application of future Exaflops parallel systems.

An execution model incorporates key attributes required to organize the system elements and guide their cooperation in fulfilling the application tasks. These are:

Holistic system representation – provides a framework to describe and consider an entire system rather than a set of separate system layers,

Strategies – the approach and fundamental ideas that constitute the paradigm embodied by the execution model,

Semantics – the set of referenced objects, the action classes performed on the objects, the means of representing parallelism and supporting synchronization, and name space management,

Policies – provides a set of invariants that have to be satisfied but whose implementation is unspecified, providing flexibility,

Resource Control – the practical management of system resources for fault tolerance, security protection, and power management,

Abstract to Physical – the methods and means for resource management, data distribution, and task scheduling,

Commonality – support for portability across similar systems of different scale, across different contemporary systems, and across systems of successive generations.


1.3 Codesign through ParalleX

A goal of ParalleX is to enable codesign of system layers and of system design with application design. As an execution model, ParalleX allows the use of the conceptual approach of the “decision chain”, which addresses the question: how does each layer of a system contribute to determining when and where an operation is to be performed? ParalleX may, at least ideally, simplify the problem of codesign from $O(n^2)$ to $O(n)$ by allowing each layer to be designed with respect to the execution model and its neighboring layers rather than with every other layer in the system simultaneously. The following discussion suggests a possible breakdown of how every layer in the system design supports the functionality and capability of each of the key ParalleX components.
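One way to read the $O(n^2)$ versus $O(n)$ claim, assuming that without a common execution model every pair of the $n$ layers must be co-designed against each other, is to count design interfaces:

```latex
\underbrace{\binom{n}{2} = \frac{n(n-1)}{2}}_{\text{every pair of layers}} = O(n^2)
\qquad\text{versus}\qquad
\underbrace{n}_{\text{layer--model}} + \underbrace{(n-1)}_{\text{adjacent layers}} = 2n - 1 = O(n).
```

For the six layers enumerated in each subsection below (programming model, compilation, runtime system, operating system, system architecture, core architecture), this gives 15 pairwise interfaces versus 11; the gap widens as layers are added.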

1.3.1 Complexes Codesign

1.3.1.1 Programming model

Modularity, encapsulation, and composability of executable work

1.3.1.2 Compilation

Complex modules for encapsulation of local work. Aggregation of very lightweight complexes to balance granularity with overhead.

1.3.1.3 Runtime System

Complex scheduling manager with preemption. Depleted threads treated as local control objects.

1.3.1.4 Operating System

Provides basic thread execution resources to the stream of runtime threads.

1.3.1.5 System Architecture

First-class object support. Relies on complexes for intra-domain latency hiding.

1.3.1.6 Core Architecture

ISA extensions. Rapid creation and context switching. Priority scheduling. Execution with ILP. Preemption of user complexes.

1.3.2 ParalleX Processes Codesign

1.3.2.1 Programming model

Primary modularity, encapsulation, and composability of program organization. Coarse grain parallelism. Strict and eager evaluation.

1.3.2.2 Compilation

Inheritance for processes as objects. Stores all components within processes. Hierarchical name space.


1.3.2.3 Runtime System

Create and terminate processes

1.3.2.4 Operating System

Serves as protection barrier via capability-based addressing. Allocates physical resources (synchronous domains) to processes. I/O from “main”.

1.3.2.5 System Architecture

NIC communication within and between processes. Mapping support.

1.3.2.6 Core Architecture

ISA extensions

1.3.3 Local Control Objects Codesign

1.3.3.1 Programming model

Rich semantics of fine-grain synchronization (e.g. dataflow, futures); Hidden at high level; Exposed at low level (e.g. XPI).

1.3.3.2 Compilation

Distributed continuations; Eager versus lazy evaluation; Out of order asynchronous control.

1.3.3.3 Runtime System

Management of Depleted Threads. Carry out basic primitive DMA operations on LCOs. Update Local Control Objects, including depleted threads.

1.3.3.4 Operating System

Locate.

1.3.3.5 System Architecture

NICs directly process updates from parcels; Atomic updates.

1.3.3.6 Core Architecture

ISA extensions; Rapid update and consequent action instantiation

1.3.4 Parcels Codesign

1.3.4.1 Programming model

Medium of global access, control, and action at a distance; Hidden at high level; Exposed at low level (e.g. XPI).

1.3.4.2 Compilation

Formulate work requirements in specified data-struct for conversion to parcels


1.3.4.3 Runtime System

Conversion of data-struct to parcel; Conversion of parcel to Computational Abstract Complex; Carry out basic primitive DMA operations; Update Local Control Objects, including depleted threads.

1.3.4.4 Operating System

Manages all system wide parcel transport, delivery, buffering

1.3.4.5 System Architecture

Transport layer, Physical buffers, Lots of smarts in the NICs.

1.3.4.6 Core Architecture

ISA extensions; Rapid creation

1.4 Report Organization

The “Report on the ParalleX Execution Model” is presented to describe and explain the ParalleX model of computation. It is intended to inform those who wish to acquire a professional understanding of the model concepts, computational scientists who will apply ParalleX related system software and programming environments and tools, and computer scientists who will implement ParalleX system hardware or software. This report is organized simply to provide easy access and understanding to the concepts and elements of the ParalleX execution model.

The next section, Section 2, provides a brief overview of the model to give a complete high-level picture to which more detailed, focused discussions can be related. With this understanding, Section 3 establishes the fundamental elements at the lowest level of abstraction of any ParalleX-based system. These currently include the synchronous domains upon which a compute complex is performed and the active global address space (AGAS), onto which the hierarchical programmer name space is mapped and which in turn maps to the system physical address space. Section 4 begins the description of the principal elements and their principles. The first, given in this section, is the ParalleX Process, which provides the hierarchical name space and contexts for data, computation, and mapping, as well as protection barriers. Section 5 describes Compute Complexes, which serve the role of threads of physical systems in performing the actual actions of computation. Section 6 describes the semantics and abstract mechanisms for lightweight but sophisticated synchronization to manage asynchrony, support distributed continuations, permit anonymous producer-consumer computation, and provide means of controlling the rate of work generation (lazy versus eager evaluation). These are the class of Local Control Objects, which include but are not limited to dataflow representations of precedence constraints and futures constructs. Parcels are described in Section 7 to explain the form, function, and very low-level API of message-driven computation. This is followed by a special case of parcels, Percolation, in Section 8, for heterogeneous computing and optimized use of precious resources. At Exascale, new methods to achieve reliability may be required, and Section 9 describes the principles of Fault Management through the concept of micro-checkpointing. The final three sections deal with the UHPC advanced requirements of Protection, Self-Aware, and Energy, respectively.


2. Overview

The ParalleX model of computation is very different from the conventional Communicating Sequential Processes model that dominates scalable MPPs and commodity clusters today, at the beginning of the Petaflops era. ParalleX incorporates a global shared name space rather than the CSP distributed or fragmented memory. ParalleX exposes program-wide parallelism at three levels: coarse-grain parallel processes that may each span multiple, even shared, nodes; medium-grain computational abstract complexes (or just “complexes”), which are a kind of thread; and fine-grain (operation-level) dataflow parallelism. All are ephemeral, unlike static MPI processes. Communication is message-driven rather than CSP message-passing. Synchronization is lightweight with sophisticated semantics, rather than heavyweight global barriers or ghost-cell copying, which has the same effect. ParalleX also deals with key issues that are not addressed by the CSP model (although there is research in some of these areas), such as reliability, security, energy efficiency, and self-aware adaptivity.

2.1 Hardware Operation Properties

ParalleX incorporates a model of the underlying hardware. The system hardware is an ensemble of interconnected “synchronous domains”. Each synchronous domain 1) executes complexes, 2) ephemerally stores data of distinct forms, 3) performs operations in bounded time, 4) accesses hardware addresses for thread control, scratch-pad fast memory, and low-level counter and actuator bits/flags, 5) performs global virtual-to-physical address translation, 6) sends and receives parcels, 7) incorporates hardware fault detection, 8) provides reconfiguration flags, 9) supports energy-sensitive control, 10) connects to external I/O for mass storage, and 11) guarantees compound atomic sequences of operations on local data.

2.2 Active Global Address Space

The ParalleX model incorporates a global address space. Any executing complex (such as a thread) can access any object within the domain of the parallel application with the caveat that it must have appropriate access privileges. The model does not assume that global addresses are cache coherent; all loads and stores will deal directly with the site of the target object. All global addresses within a Synchronous Domain are assumed to be cache coherent for those processor cores that incorporate transparent caches.

The Active Global Address Space used by ParalleX differs from research PGAS models. Partitioned Global Address Space (PGAS) models are passive in their means of address translation, whereas AGAS allows named objects to move in physical space without changing their names. Copy semantics, distributed compound operations, and affinity relationships are some of the global functionality supported by the AGAS capability.


2.3 Split-phase transactions

ParalleX is devised to enable a new modality of execution and a new relationship between the logical and physical system. Split-phase transactions are tasks that are performed in a sequence of segments, each of which is performed on the computing subsystem closest to, or containing, the memory state upon which its actions are to be performed. This, in turn, makes possible a key form of operation, the work queue model. This method performs work only on local state and, given a steady stream of such work packets, incurs no blocking or idle time from remote accesses. Thus, latency hiding is achieved, and energy as well as time is reduced for the computation.
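A split-phase transaction can be sketched as a chain of work packets, each executed on purely local state at the locality that owns the data it touches. In this hypothetical Python sketch, `Locality`, `run`, `phase1`, and `phase2` are invented names, and a single-threaded round-robin loop stands in for concurrent hardware. The transaction computes `x + y`, where `x` lives on locality A and `y` on locality B; instead of waiting on a remote read, each phase enqueues the next phase where the next operand lives, while B keeps doing unrelated local work.

```python
from collections import deque


class Locality:
    """Hypothetical synchronous domain with local state and a work queue."""

    def __init__(self, name, state):
        self.name = name
        self.state = dict(state)
        self.queue = deque()


def run(localities):
    """Each round, every locality runs one queued packet on local data only.
    A packet may enqueue follow-on packets elsewhere instead of blocking."""
    rounds = 0
    while any(loc.queue for loc in localities.values()):
        rounds += 1
        for loc in list(localities.values()):
            if loc.queue:
                packet = loc.queue.popleft()
                packet(loc, localities)
    return rounds


def phase1(loc, locs):
    partial = loc.state["x"]  # local read only
    # The next segment runs where "y" lives; carry the partial result along.
    locs["B"].queue.append(lambda l, ls: phase2(l, ls, partial))


def phase2(loc, locs, partial):
    total = partial + loc.state["y"]
    # The final segment returns to A and stores the result locally.
    locs["A"].queue.append(lambda l, ls: l.state.__setitem__("result", total))


locs = {"A": Locality("A", {"x": 10}), "B": Locality("B", {"y": 32, "count": 0})}
locs["A"].queue.append(phase1)
for _ in range(2):  # unrelated local work already queued on B
    locs["B"].queue.append(lambda l, ls: l.state.__setitem__("count", l.state["count"] + 1))

rounds = run(locs)
print(locs["A"].state["result"], locs["B"].state["count"], rounds)  # 42 2 4
```

Locality B is never idle waiting on A: it runs its independent packets first and picks up the second phase when that packet arrives.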

2.4 Message-driven computation

Parcels are a form of active message that permits the instigation of remote work and thus enables message-driven computation. Parcels are compound messages that carry data, target address, action, and continuation information. Parcels permit the migration of continuations to create a new dynamic global control space that can adapt at runtime for locality management through self-aware low-level mechanisms and high-level situational awareness. In support of heterogeneous computing and optimal use of precious resources through latency hiding and overhead offloading, parcels support a medium- to coarse-grained “percolation” methodology (see Section 8).

2.5 Multi-threaded operation

ParalleX is a multithreaded model that supports three levels of parallelism, with complexes (threads) serving as the intermediate level. Complexes are first-class objects and can be both referenced and manipulated by user applications. Complexes are ephemeral in that they exhibit finite lifetimes. While logically they can migrate between physical domains, this is not preferred, as doing so could incur egregious overhead. However, self-consistency of the model demands this capability. Complexes incorporate a static-dataflow fine-grain parallel control model to provide a simple means of representing operation-level parallelism. This is a more abstract representation that permits easy and diverse transformation to employ different classes of processor cores. The dataflow operates on transient values, while global mutable side effects still require synchronization or control protection.

2.6 Local (lightweight) control objects

A key element of the ParalleX model is its dependence on a set of sophisticated synchronization constructs referred to as “local control objects” or LCOs. These are lightweight objects whose entire state exists in a single contiguous logical block and physical memory bank, so that it can be acquired, modified, and restored atomically at low temporal and energy cost. While LCOs can perform traditional synchronization primitives such as semaphores and mutexes, their innovation is in bringing powerful constructs such as dataflow and futures into general usage for the first time. For example, an entire static dataflow program could be represented with a series of LCOs. In certain cases, this might even be a useful way to organize a computation, although it is not recommended for general use.
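Following the example in the text, a static dataflow graph can be expressed purely as a network of dataflow-template LCOs. The following Python sketch is illustrative only: `DataflowLCO`, `connect`, and `feed` are hypothetical names. Each LCO has single-assignment input slots, fires once when all are filled, and forwards its result to downstream slots. The graph computes `(a + b) * (c - d)`, and the inputs are fed in an arbitrary order to show that firing depends only on data availability.

```python
import operator

_EMPTY = object()  # sentinel for an unfilled input slot


class DataflowLCO:
    """Hypothetical dataflow template: fires its operation once every input
    slot is filled, then forwards the result to its consumers."""

    def __init__(self, op, arity):
        self.op = op
        self.slots = [_EMPTY] * arity
        self.consumers = []  # list of (downstream LCO, input slot index)
        self.value = _EMPTY

    def connect(self, downstream, slot):
        self.consumers.append((downstream, slot))

    def feed(self, slot, value):
        if self.slots[slot] is not _EMPTY:
            raise ValueError("single-assignment slot written twice")
        self.slots[slot] = value
        if all(s is not _EMPTY for s in self.slots):
            # Predicate satisfied: fire exactly once and propagate the result.
            self.value = self.op(*self.slots)
            for downstream, dslot in self.consumers:
                downstream.feed(dslot, self.value)


add = DataflowLCO(operator.add, 2)
sub = DataflowLCO(operator.sub, 2)
mul = DataflowLCO(operator.mul, 2)
add.connect(mul, 0)
sub.connect(mul, 1)

# Operands arrive out of program order: d, a, c, b.
sub.feed(1, 4)
add.feed(0, 3)
sub.feed(0, 10)
add.feed(1, 5)
print(mul.value)  # 48
```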

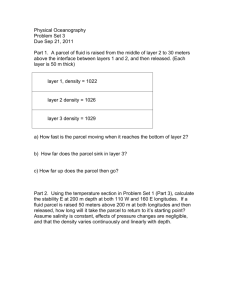

The diagram above provides a graphic of the ParalleX execution model. The shaded boxes represent synchronous domains, while the larger bounded boxes represent logical parallel processes, which are capable of employing more than one system node at a time and of sharing one or more nodes with other processes. The complexes are represented by the wavy black lines, while the directed green arcs indicate message-driven computation using parcels. Other features of ParalleX are also displayed in this picture.


3. Fundamental Elements

Independent of the implementation details of a system supporting the ParalleX execution model, every such system must incorporate a set of specific fundamental elements. Here they are partitioned into two general classes: synchronous domains and the active global address space.

3.1 Synchronous Domains

A “synchronous domain” is a contiguous physical domain that guarantees compound atomic operations on local state. It manages intra-locality latencies and exposes diverse temporal locality attributes. The set of synchronous domains comprises the functional capability of the entire system and divides the world into the synchronous and the asynchronous. Thus a system comprises a set of mutually exclusive, collectively exhaustive domains. Synchronous domains, although they are hardware, are themselves first-class objects with the same attributes as other objects, modulated by the type “synchronous-domain”. Such domains need not be identical and, as long as specific inter-domain protocols and invariants are satisfied, can support heterogeneous system architectures. However, all synchronous domains must exhibit specific inalienable properties.

3.2 Active Global Address Space

The active global address space (AGAS) of a system provides a unified reference space on a distributed physical system. AGAS assumes no coherence between localities. It makes it possible to move virtual named elements in physical space without changing their names, as would be necessary in PGAS systems. Examples include user variables, synchronization variables and objects, parcel sets (but not parcels!), threads as first-class objects, and processes, which are also first-class objects specifying a broad task. AGAS defines a distributed environment that spans multiple localities and need not be contiguous.

Active Global Address Space maps globally unique addresses to local virtual addresses. Global addresses are immutable. They allow objects to be moved to other localities and are a precondition for efficient dynamic flow control and load balancing.
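The essential property, immutable global names resolved through a translation table to changeable physical locations, can be sketched as follows. This is a hypothetical, centralized Python illustration (`AGAS`, `allocate`, `resolve`, `load`, and `migrate` are invented names); a real AGAS would distribute the translation across localities.

```python
import itertools


class AGAS:
    """Hypothetical sketch: immutable global IDs resolve through a table
    to a (locality, local key) pair that may change over time."""

    def __init__(self, n_localities):
        self.memory = [dict() for _ in range(n_localities)]  # per-locality storage
        self.table = {}                                      # gid -> (locality, local key)
        self._ids = itertools.count(1)
        self._local_keys = itertools.count(1)

    def allocate(self, locality, value):
        gid = next(self._ids)
        key = next(self._local_keys)
        self.memory[locality][key] = value
        self.table[gid] = (locality, key)
        return gid

    def resolve(self, gid):
        return self.table[gid]

    def load(self, gid):
        locality, key = self.table[gid]
        return self.memory[locality][key]

    def migrate(self, gid, new_locality):
        locality, key = self.table[gid]
        value = self.memory[locality].pop(key)
        new_key = next(self._local_keys)
        self.memory[new_locality][new_key] = value
        self.table[gid] = (new_locality, new_key)  # the global name is unchanged


agas = AGAS(n_localities=2)
g = agas.allocate(0, "particle block")
before = agas.resolve(g)
agas.migrate(g, 1)  # e.g. for load balancing
print(g, before, agas.resolve(g), agas.load(g))  # 1 (0, 1) (1, 2) particle block
```

Any holder of `g` keeps a valid reference across the move; in a PGAS scheme, where the partition is part of the address, the object would instead have to be renamed.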


4. Parallel Processes

The concept of the “process” in ParalleX is extended beyond that of either sequential execution or communicating sequential processes. While the notion of process suggests action (as do “function” or “subroutine”), it has a further responsibility of context, that is, the logical container of program state. It is in this sense that ParalleX employs the term process. Furthermore, referring to “parallel processes” in ParalleX designates the presence of parallelism within the context of a given process, as well as the coarse-grained parallelism achieved through concurrency of multiple processes of an executing user job. ParalleX processes provide a hierarchical name space within the framework of the active global address space and support multiple means of internal state access from external sources. They also incorporate capability-based access rights for protection and security.

4.1 Process Parallelism

The ParalleX process is the main abstraction encapsulating parallel execution. It does so by using three levels of physical parallel resources. First, it can distribute its state and multiple actions across multiple synchronous domains (nodes). Second, within any one synchronous domain, a parallel process may employ multiple physical threads or cores to support multiple concurrent ParalleX user threads within the process. Third, within a physical thread, a user thread of a ParalleX process may exploit instruction-level parallelism (ILP) or dataflow parallelism.

More than one ParalleX process can share a synchronous domain. The relationship between the abstract process parallelism and the hardware physical parallelism may change over time, either because the object of parallelism is ephemeral (a finite lifetime) or because an existing executable object (e.g., process or complex) shifts the physical resources allocated to it for load balancing or fault tolerance.

4.2 First Class Object

A ParalleX process is a first-class object. It is identified by a name in the same name space as application variables (e.g., x or y, or i or j) and can be manipulated by a set of associated operations in the same instruction stream as conventional primitive operations. A process may be manipulated by other processes: created, terminated, suspended, moved, queried with regard to status or properties, etc. It may be applied to an argument object. A process is defined by its corresponding procedure. A procedure is a representation of the state to be created and manipulated and the actions to be applied to that state, and it also defines other attributes. A procedure is also a first-class object that may itself be manipulated and mutated.

4.3 Comprising Elements

The procedure defines the active processes that are its instances. It contains a number of elements of different type classes. These elements include action entities, including child processes, computational abstract complexes (e.g., threads), and primitive operations. Other elements are data: either individual scalar variables or structures of such variables, such as sets, vectors, arrays, and graphs. A process includes a map of its elements to its physical resources. As resource allocation or element assignment changes, the content of the resource map is updated to reflect this. As discussed below, a process includes its access list for capability-based addressing for protection. The process also contains observable parameters, including measures of power, reliable operation, utilization, and other factors.

4.4 Accessing Process Data

The threads of a process can access any data within their resident process. Threads can also access data contained by a second, target process, but the means of access varies with the relative positions of the resident process and the target process in the process hierarchy.

4.4.1 Tree Namespace Hierarchy of Processes

A process may create child processes by instantiating procedures. These child processes may in turn create their own child processes. The result is that a user ParalleX computation is organized as a tree of processes, with the root process, “main”, the ancestor of all other descendant processes. This tree of processes defines the hierarchical name space within the global address space. An object in an application is identified by its ordering within its resident process and the sequence of process descent from “main” to the resident process. This provides a unique and dynamic naming of all program objects.
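One way to picture this naming scheme is sketched below in Python, under the assumption that an object's local name can stand in for its ordering within the resident process. `Process`, `path`, `global_name`, and `lookup` are hypothetical names introduced for illustration.

```python
class Process:
    """Hypothetical node in a ParalleX process tree (illustration only)."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = {}  # child processes, by name
        self.objects = {}   # data owned directly by this process
        if parent is not None:
            parent.children[name] = self

    def path(self):
        """Sequence of process descent from the root ("main") to this process."""
        names, node = [], self
        while node is not None:
            names.append(node.name)
            node = node.parent
        return tuple(reversed(names))

    def global_name(self, obj):
        """A program object is named by its process path plus its local name."""
        return self.path() + (obj,)


def lookup(root, global_name):
    """Walk down the tree from the root to find the named object."""
    *procs, obj = global_name
    if not procs or procs[0] != root.name:
        raise KeyError(global_name)
    node = root
    for name in procs[1:]:
        node = node.children[name]
    return node.objects[obj]


main = Process("main")
solver = Process("solver", main)
mesh = Process("mesh", solver)
mesh.objects["x"] = 1.5
name = mesh.global_name("x")
print(name, lookup(main, name))  # ('main', 'solver', 'mesh', 'x') 1.5
```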

4.4.2 Direct Access

A thread can directly access any data contained by any child process of its resident process, or by that child's descendant processes. Direct access refers to the ability to read or write the value content of a memory location without intervening software such as a method. Except for the hierarchical naming, it is identical to accessing data in the thread's own resident process.

4.4.3 Indirect Access

A thread seeking access to the state of a process that is a sibling or cousin of its resident process must do so through intermediary methods. Direct access is not possible, and the abstractions of the access methods guarantee isolation of separately designed underlying structures while providing correct semantic operation. This also permits a transparent layer of protection through access rights provided by capability-based addressing.

4.4.4 An Open Issue

One relationship has not been resolved: access by a thread of a descendant process to the data of an ancestor process. One approach is to give such threads direct access to the data of the processes above them. Another is to limit them to indirect access. This question is currently being explored.
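To make the access rules of Sections 4.4.1 through 4.4.4 concrete, the following Python sketch names each process by its path of descent from “main” and classifies how a thread in one process may reach data in another. The tuple encoding of names, the function names, and the `Access` categories are hypothetical illustrations rather than part of the ParalleX model; the ancestor case is reported as unresolved because the model leaves it open.

```python
from enum import Enum


class Access(Enum):
    DIRECT = "direct"          # same process or a descendant (4.4.2)
    INDIRECT = "indirect"      # sibling or cousin: only via methods (4.4.3)
    UNRESOLVED = "unresolved"  # descendant reaching up to an ancestor (4.4.4)


def is_prefix(a: tuple, b: tuple) -> bool:
    """True if path a equals path b or is an ancestor path of b."""
    return len(a) <= len(b) and b[:len(a)] == a


def object_name(process_path: tuple, ordinal: int) -> tuple:
    """Hypothetical hierarchical name: descent from main plus position in process."""
    return process_path + (ordinal,)


def access_mode(resident: tuple, target: tuple) -> Access:
    """Classify access from a thread in `resident` to data held by `target`."""
    if is_prefix(resident, target):
        return Access.DIRECT
    if is_prefix(target, resident):
        return Access.UNRESOLVED
    return Access.INDIRECT


solver = ("main", "solver")
mesh = ("main", "solver", "mesh")
io = ("main", "io")
assert access_mode(solver, mesh) is Access.DIRECT
assert access_mode(mesh, io) is Access.INDIRECT
assert access_mode(mesh, solver) is Access.UNRESOLVED
```

Because names are paths, the same comparison that yields a unique name also determines the access relationship, which mirrors how the process tree doubles as the name space.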


4.5 Instantiation

A process is ephemeral, except for the process “main”, which exists throughout the lifetime of the application program's execution. A process is therefore created, potentially at any time during application execution, as an instance of a called procedure applied to a set of operand values.

ParalleX employs higher-order procedures, permitting procedures to be passed as operands of calls to other procedures. ParalleX also permits both a conventional strict calling sequence and an eager mechanism; there is also a lazy evaluation mode. The strict calling sequence will not instantiate a parallel procedure until all of the procedure arguments are available; that is, until they have been determined and are local to the site of the calling thread.

Alternatively, an eager mode of procedure instantiation will create a new process when any of the argument values are available, and will initiate those parts of the process computation that require only the available operands while deferring other internal computation until its required operands also become available. It is also valid to pass a future as an operand to a procedure. A future represents the equivalent of an IOU for a value yet to be derived, and it permits a form of calculation that is both eager and lazy. The called procedure may proceed without the actual operand value, holding the future instead, which is a form of eager evaluation. At the same time, the future permits its own calculation to begin only when the using process is instantiated, which is a form of lazy evaluation.
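The difference between strict and eager instantiation can be illustrated with Python's `concurrent.futures`, used here only as an analogy for ParalleX futures. In this sketch the helper names `force`, `strict_call`, and `eager_call` are hypothetical: the strict call resolves every operand before the procedure body starts, while the eager call starts the body at once and waits only at the point where a still-missing operand is actually needed.

```python
import threading
from concurrent.futures import Future, ThreadPoolExecutor


def force(x):
    """Return the value of x, waiting only if x is an unresolved future."""
    return x.result() if isinstance(x, Future) else x


def strict_call(pool, proc, *args):
    """Strict: the body starts only after every operand is available."""
    def body():
        values = [force(a) for a in args]  # resolve all operands first
        return proc(*values)
    return pool.submit(body)


def eager_call(pool, proc, *args):
    """Eager: the body starts now, receiving futures for missing operands."""
    return pool.submit(proc, *args)


def scaled_sum(progress, x, y):
    partial = force(x) * 2     # this part needs only x
    progress.set()             # signal that the partial work has been done
    return partial + force(y)  # deferred until y has been produced


with ThreadPoolExecutor(max_workers=2) as pool:
    y = Future()               # an "IOU" for a value not yet derived
    progress = threading.Event()
    result = eager_call(pool, scaled_sum, progress, 5, y)
    progress.wait()            # partial work finished before y exists
    y.set_result(1)
    print(result.result())     # 11
```

With `strict_call` the same procedure would not set `progress` until `y` had been resolved, because the body does not begin until all operands are in hand.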

[Figure: structure of a ParalleX process descending from Main, showing operands (strict and eager), data, methods, codes, resource configuration, I/O, child processes, and computation abstract complexes (CACs).]

5. Computation Abstract Complexes

5.1 Complexes are Advanced Threads

Almost all computation is performed through the mechanism of the Computation Abstract Complex (also referred to as a “CAC” or simply a “complex”). For all intents and purposes, the complex can be thought of as a thread in the conventional lexicon. This is not exactly correct because a complex permits, but does not demand, certain forms of internal control flow not ordinarily employed by threads. Nevertheless, a complex in its abstraction can be targeted to a physical thread of a conventional processor. Complexes do not constitute all of the computation because, for some very lightweight functions such as remote basic compound atomic operations, the overhead of thread instantiation, even with hardware support, would significantly exceed that of the useful work itself; alternative mechanisms are therefore required to achieve the same result.

5.2 Local Objects

Complexes provide the functionality of their resident parallel process. An important distinction between complexes and processes is that complexes are local while processes are potentially distributed. The control state of a complex is entirely contained within its resident physical synchronous domain, as are its register state and, if used, its stack frame. Therefore, operations within a complex can anticipate that the majority of their operands will be locally available and will therefore exhibit bounded latency.

5.3 First Class Objects

Complexes are first class objects. They are individually identified by an address within the global address space and are referenced uniquely in the hierarchical name space of the application. They not only perform actions on data but also allow actions to be performed on them as a whole. This is critical for the development of runtime systems, schedulers, prioritizers, and dynamic load balancing.

Within a complex, state that would ordinarily be hidden or private is also accessible externally.

The abstraction of registers within a complex is an example. Under certain conditions, registers may be accessed from outside their complex. This involves not only the internal data value state of a register but also its control state. The abstraction of a complex assumes single-assignment semantics for registers, which implies a write-once, read-many side-effect model. This is motivated by the advantage of working without anti-dependencies, so that both compilation and hardware runtime control have the flexibility to extract fine grain parallelism and to allocate physical register resources. Single-assignment methods permit dataflow semantics for exposing the potential parallelism, even on a sequential-issue processor core, for determining issue order, mitigating pipeline latency, and exploiting instruction-level parallelism.

5.4 Variable Granularity

ParalleX complexes permit encapsulation of local parallelism at variable granularity, which can either improve available concurrency through lighter-weight complexes or mitigate software overheads through heavier-weight complexes. Aggregation at compile time can be used to construct coarser grain complexes from the specification of fine grain complexes, transparently to the user or DSL (Domain Specific Language) layer, thereby adapting granularity to the operational properties of the system.

The opportunity to exploit the near-fine grain parallelism possible with ParalleX complexes will ultimately demand low-overhead mechanisms throughout the system, in both hardware and software. For example, reasonable scaling requires that the useful work of a complex, measured as time along the critical path, exceed the overhead time needed to manage the complex. Recent experiments with the experimental HPX runtime system show a full-lifetime overhead for a complex on the order of 1 microsecond, with additional overheads incurred for the likely multiple context switches. Experiments with other user-level multithreaded software runtime systems suggest that fine tuning and optimization may reduce this by a factor of four or more. Further improvements will demand specific hardware functionality to reduce the overheads further.
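The scaling requirement above can be made concrete with a simple model, assumed here for illustration, in which each complex pays a fixed management overhead in addition to its useful work, so its efficiency is work / (work + overhead). The Python sketch below computes that ratio and the smallest grain size that reaches a chosen target efficiency. The 1 microsecond overhead is the HPX figure cited above and 0.25 microsecond reflects the suggested factor-of-four reduction; the 90% target is an arbitrary example value, and context-switch costs are ignored.

```python
def efficiency(work_us: float, overhead_us: float) -> float:
    """Fraction of a complex's lifetime spent on useful work."""
    return work_us / (work_us + overhead_us)


def min_grain_us(overhead_us: float, target: float) -> float:
    """Smallest useful work (in microseconds) that reaches `target` efficiency.

    From w / (w + o) >= e it follows that w >= o * e / (1 - e).
    """
    if not 0.0 < target < 1.0:
        raise ValueError("target efficiency must lie strictly between 0 and 1")
    return overhead_us * target / (1.0 - target)


for overhead in (1.0, 0.25):  # measured HPX overhead, and a 4x reduction
    print(f"overhead {overhead} us -> grain >= {min_grain_us(overhead, 0.9):.2f} us")
```

Under these assumptions a complex must perform about 9 microseconds of useful work at 1 microsecond of overhead, but only about 2.25 microseconds once the overhead is cut by a factor of four, which is why lower overheads open up finer grain parallelism.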

5.5 Internal Structure

A complex is a partially ordered set of actions to be performed on first class objects, ranging from simple scalar variables, through structures of data, to other complexes and processes. The ordering is determined by establishing precedence constraints: which operations must be completed, or which variable values must be produced and provided (locally), prior to and sufficient for a described action to be conducted. This is equivalent to the dataflow graph of the computation.
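Because the precedence constraints of a complex form a dataflow graph, any topological traversal of that graph is a legal execution order, and actions with no mutual constraints may proceed concurrently. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9 or later) to group a small, hypothetical set of actions into successive “waves” of ready operations; the action names are illustrative only.

```python
from graphlib import TopologicalSorter

# Each action maps to the set of actions that must complete before it.
precedence = {
    "load_a": set(),
    "load_b": set(),
    "mul": {"load_a", "load_b"},
    "add": {"mul", "load_a"},
    "store": {"add"},
}


def ready_waves(graph):
    """Return groups of actions; every action in a group is ready at once."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the constraints are cyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


print(ready_waves(precedence))
# [['load_a', 'load_b'], ['mul'], ['add'], ['store']]
```

The two loads share a wave because nothing orders them, which is exactly the fine grain parallelism the partial order exposes.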

Every complex has a set of locally named data elements that, in conformance with conventional practice, are referred to here as “registers” but which are used differently from those of conventional processor cores. ParalleX registers are single-assignment objects that may be written once and read many times. This is equivalent to a functional computing model. As an abstraction, it implies that the number of registers of a ParalleX complex is variable. The register names themselves are computable, i.e., they may be indexed to create higher-order register structures.

The motivation for this, as previously mentioned, is to convey the fine grain parallelism of the computation represented by a given complex through a single simple but powerful model (dataflow). These registers are used for all local and private intermediate calculation results.

One form of complex can use only this technique, thus satisfying the requirements to operate purely functionally, i.e., in a value-oriented manner. Like a process, a complex upon instantiation can be applied to a set of operand values. If the complex requires no additional input data, such as loads from global mutable data, then it can operate functionally. The results of a complex may be conveyed in four ways: 1) the result value may be stored in a register of the parent thread, 2) the result may be conveyed, either directly or indirectly, by a parcel to the site of instantiation of a new thread, 3) the result may be deposited with a local control object, or 4) the result may be stored at the site of a global mutable object. The first two methods extend the functional execution style. The third can also be used in a functional style when the LCO is of the dataflow type.
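The single-assignment register model described above can be sketched as a register file that grows on demand, accepts exactly one write per register name, and allows any number of reads. Register names are arbitrary hashable keys, so a tuple such as `("sq", i)` plays the role of an indexed, computed register name. The class and function names are hypothetical, and `try_fire` is a simplified version of a dataflow firing rule in which an operation runs only once all of its input registers are full.

```python
class SingleAssignmentError(RuntimeError):
    """Raised when a register is written a second time."""


class RegisterFile:
    """Write-once, read-many registers; the number of registers is unbounded."""

    def __init__(self):
        self._values = {}

    def is_full(self, name) -> bool:
        return name in self._values

    def write(self, name, value):
        if name in self._values:
            raise SingleAssignmentError(f"register {name!r} already assigned")
        self._values[name] = value

    def read(self, name):
        if name not in self._values:
            raise LookupError(f"register {name!r} not yet produced")
        return self._values[name]


def try_fire(regs, out, fn, *inputs) -> bool:
    """Run fn and write `out` only if every input register is already full."""
    if regs.is_full(out) or not all(regs.is_full(r) for r in inputs):
        return False
    regs.write(out, fn(*(regs.read(r) for r in inputs)))
    return True


regs = RegisterFile()
for i in range(4):
    regs.write(("sq", i), i * i)  # computed (indexed) register names
names = [("sq", i) for i in range(4)]
assert try_fire(regs, "total", lambda *v: sum(v), *names)
print(regs.read("total"))  # 14
```

Since no register is ever overwritten, there are no anti-dependencies: the only ordering that matters is whether an input has been produced yet.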

Complexes engage several classes of operations, more than one of which may be used within a single complex. These major classes are briefly defined in the following subsections.

5.5.1 Primitive scalar

The largest set of operations is the familiar scalar operations performed on the contents of complex registers, producing one or more result values that are stored in complex registers.

5.5.2 Compound atomic specified operations

These are short sets of primitive operations to be performed on tightly bound data, likely stored in the same memory bank. This class of operations may provide very efficient compound operations, potentially without requiring software locks if proper hardware support is incorporated within the system.

5.5.3 Complex and process calls

Both computation abstract complexes and processes are created through calls from complexes themselves. While there are additional means by which these important activities can be instantiated, calls by complexes are by far the most common.

5.5.4 Parcel management

A small set of parcel creation and management semantic constructs is included in the model to elicit work at a distance or to move blocks of data. Parcels require a destination, an action, possibly some operand values, and an indication of what should happen afterwards. This information is provided by a data structure that is applied to the parcel-set object. There, transparently to the user program, it is transformed into a parcel and encapsulated in a data transport packet. Other useful instructions solicit such data structures from the parcel set for parcels that have arrived within the local subset of the parcel set but have not yet been delivered. Such parcels can be acquired by requesting properties such as a particular source, a particular kind of parcel, or a specific priority.
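The acquisition of arrived but undelivered parcels by their properties can be sketched as a queue with a filtered removal operation. The `ArrivedParcel` and `ParcelSet` classes, their `arrive` and `acquire` methods, and the three matching fields are hypothetical; in ParalleX the parcel set is a system object whose internals remain transparent to the user program.

```python
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass
class ArrivedParcel:
    source: str
    kind: str
    priority: int
    payload: Any = None


class ParcelSet:
    """Local subset of the parcel set: parcels arrived but not yet delivered."""

    def __init__(self):
        self._pending: List[ArrivedParcel] = []

    def arrive(self, parcel: ArrivedParcel) -> None:
        self._pending.append(parcel)

    def acquire(self, source=None, kind=None, priority=None) -> Optional[ArrivedParcel]:
        """Remove and return the oldest parcel matching every given property."""
        for i, p in enumerate(self._pending):
            if ((source is None or p.source == source)
                    and (kind is None or p.kind == kind)
                    and (priority is None or p.priority == priority)):
                return self._pending.pop(i)
        return None  # nothing matching has arrived yet

    def __len__(self):
        return len(self._pending)


ps = ParcelSet()
ps.arrive(ArrivedParcel("node0", "data", 1, "a"))
ps.arrive(ArrivedParcel("node1", "spawn", 5, "b"))
print(ps.acquire(priority=5).payload)  # b
```

Properties left as `None` act as wildcards, so a request may constrain any combination of source, kind, and priority.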

5.6 Complex Lifecycle

A complex is ephemeral: it is created at some point in the lifetime of its resident process, performs its assigned function, and terminates at or before the conclusion of its host process.

The lifecycle represents the sequence of operational states through which the complex transitions. It is characterized by a state diagram such as the one below. Upon instantiation of a complex, its beginning state is initialized, and when initialization is complete the complex is taken over by the scheduling methods of the system to issue it for processing. If resources are immediately available, the complex issues its operations for execution. If no resources are available, the complex, which is logically capable of continuing, is transitioned to the pending state, joining other complexes waiting for access to physical resources. When the complex runs out of operations ready to be performed but expects to be able to proceed within a modest time span, it transitions to the waiting state. Upon completion of a precedent operation, the complex may be returned to the pending or even the issued state. Other states, such as blocked or depleted, provide different modes of suspension, permitting different system responses. Upon completion of its allocated functional responsibilities, the complex is retired, first cleaning up its footprint in memory and finally being eliminated from the system.
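The lifecycle above can be encoded as a table of permitted state transitions. The Python sketch below is a hypothetical reading of the prose: the transitions out of the initialized, pending, waiting, and issued states follow the description, while the rules for leaving the blocked and depleted states, and the move from issued back to pending, are assumptions marked in the comments.

```python
from enum import Enum, auto


class State(Enum):
    INITIALIZED = auto()
    PENDING = auto()   # runnable, waiting for physical resources
    ISSUED = auto()    # issuing operations for execution
    WAITING = auto()   # no ready operations, expects to resume soon
    BLOCKED = auto()   # further suspension modes; resume rules assumed below
    DEPLETED = auto()
    RETIRED = auto()   # footprint cleaned up; terminal state


TRANSITIONS = {
    State.INITIALIZED: {State.ISSUED, State.PENDING},
    State.PENDING: {State.ISSUED},
    State.ISSUED: {State.PENDING,  # assumed: complex loses its resources
                   State.WAITING, State.BLOCKED, State.DEPLETED, State.RETIRED},
    State.WAITING: {State.PENDING, State.ISSUED},
    State.BLOCKED: {State.PENDING},   # assumed
    State.DEPLETED: {State.PENDING},  # assumed
    State.RETIRED: set(),
}


class ComplexLifecycle:
    """Tracks one complex's state and rejects transitions not in the table."""

    def __init__(self):
        self.state = State.INITIALIZED
        self.history = [self.state]

    def move(self, new: State) -> None:
        if new not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {new.name}")
        self.state = new
        self.history.append(new)


c = ComplexLifecycle()
for s in (State.PENDING, State.ISSUED, State.WAITING, State.ISSUED, State.RETIRED):
    c.move(s)
print([s.name for s in c.history])
# ['INITIALIZED', 'PENDING', 'ISSUED', 'WAITING', 'ISSUED', 'RETIRED']
```

Keeping the transitions in a table rather than in scattered conditionals makes it easy for a scheduler to refine the diagram without changing the enforcement logic.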


6. Local Control Objects

Local Control Objects (LCOs) provide semantic constructs for globally distributed synchronization. They complement the internal synchronization state of the active threads, together comprising the parallel control state of the executing program. An LCO is a finite state machine (FSM) whose local control and data state evolve in response to the incidence of program events. The LCO establishes a predicate that defines, in terms of the control state, the criteria under which it will invoke an action that may affect any part of the program. An LCO is local in the sense that its entire being exists within a single locality.

6.1 Goals of the LCO Construct

The Local Control Object is a seminal construct of the ParalleX model of computation, taking many forms but in essentially all cases serving the need for dynamic global synchronization and migration of continuations. The principal goal of the LCO is to enable the exploitation of parallelism in a diversity of forms and granularities for extreme scalability, such as exploiting the implicit parallelism of meta-data in dynamic directed graphs. Important attributes of the LCO include the following:

Exposure of Parallelism – greatly expand the kinds of parallelism that can be represented for scalability

Eliminate global barriers – provide (near) fine grain synchronization of small parts of flow control to mitigate effects of variable thread lengths within fork-join boundaries

Migration of continuations – enable transfer of flow control across the distributed computation and system to place it near the data being operated on

Facilitate Global Control Operations – serve to implement distributed global atomic operations

Eager-lazy evaluation – facilitate scheduling policies that balance eager and lazy methods for time-domain load balancing

Dynamic Adaptive Resource Management – enable global resource management at runtime for both space and time domain load balancing and critical path execution optimization including mitigating effects of contention (e.g., shared resource access conflicts)

6.2 LCO Concept

A Local Control Object is a small object-oriented structure and associated set of constructs that implement a finite state machine (FSM) for flexible synchronization and control. As an object, an LCO comprises data and control state as well as a set of related methods that operate on both.


An LCO is a first class object, which means it exists within the user variable name space and may be addressed and accessed by any thread within its context hierarchy. An LCO's protected state is accessed by calls to its designated methods, which manage all incident event accesses, assimilate input data as required, modify the control state, determine satisfiability of a predicate constraint, and, if it is satisfied, initiate the intended action of the LCO.

The LCO is a family of synchronization functions potentially representing many classes of synchronization constructs, each with many possible variations and multiple instances. The LCO is sufficiently general that it can subsume the functionality of conventional synchronization primitives such as spinlocks, mutexes, semaphores, and global barriers. However, due to the LCO's rich structural substrate, it can also represent powerful synchronization and control functionality not widely employed, such as dataflow and futures among others, which opens up enormous opportunities for a rich diversity of distributed control and operation.

The LCO supports lightweight synchronization: the synchronizing of a few, rather than essentially all, active threads of action. It provides for management of global control flow in the presence of asynchrony: the non-deterministic partial ordering of events due to variable latencies, contention, and scheduling policies of a global system beyond the control of user code.

Lightweight synchronization, within the practical limits of overhead costs, may remove the overconstrained scheduling imposed by conventional techniques such as global barriers and critical sections.

Here is a list of some of the possible synchronization classes, many well known, all of which may be implemented within the LCO framework:

Mutexes

Semaphores

Events

Full-empty bits

Dataflow

Futures

Depleted threads (suspended)

LCOs as a family may also be customized for very specific usages unique to a given application code while still maintaining the properties of all LCOs. Such customization may be achieved by refining the generic LCO form, with its intrinsic inherited form and functions (methods).

The concept of the event is a general way of capturing the asynchronous incidence of a communication with the LCO that represents the completion of some other computation or a requirement for organized action. The organization usually implies coordination with one or more other action stages, each an event in its own right. From the perspective of ParalleX, an event is manifest as the incidence (arrival at the LCO) of a parcel from anywhere in the system or of an access request by a thread in the same locality. It may also be a system state from the runtime or operating system (OS) or even the hardware architecture (usually the OS serves as an intermediary between the user code and the hardware architecture). All LCOs react to incident events by modifying their control state and possibly their data state.

The basic operation of an LCO is to perform a consequent action upon the satisfaction of a predicate criterion specified in terms of the LCO control state. Upon each incident event, the control state, once updated by the internal FSM, is checked against the LCO predicate.

Only when the predicate is true (the criterion is satisfied) is the LCO consequent action performed. Upon completion of its action, an LCO may be terminated (ephemeral) or be reset to continue operation towards the next in a sequence of synchronization functions (persistent).
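The event, predicate, and action cycle described above can be sketched as a small base class. The following Python code is a hypothetical, single-threaded illustration rather than a ParalleX interface: incident events are queued and assimilated one at a time, the predicate is tested after each update of the control state, and the consequent action then either terminates the LCO (ephemeral) or resets it (persistent). A barrier-like persistent LCO is derived from it to show customization by inheritance.

```python
from collections import deque


class GenericLCO:
    """Hypothetical generic LCO: event queue, data/control state, predicate, action."""

    def __init__(self, persistent: bool = False):
        self.queue = deque()   # input event queue
        self.data = []         # data state
        self.control = 0       # control state (here simply an event count)
        self.persistent = persistent
        self.terminated = False

    # Methods that a derived LCO type customizes.
    def assimilate(self, event):
        self.data.append(event)
        self.control += 1

    def predicate(self) -> bool:
        raise NotImplementedError

    def action(self):
        raise NotImplementedError

    def reset(self):
        self.data.clear()
        self.control = 0

    # Inherited generic behaviour.
    def incident(self, event):
        """Accept one incident event; return the results of any actions fired."""
        if self.terminated:
            raise RuntimeError("event sent to a terminated ephemeral LCO")
        self.queue.append(event)
        fired = []
        while self.queue and not self.terminated:
            self.assimilate(self.queue.popleft())
            if self.predicate():
                fired.append(self.action())
                if self.persistent:
                    self.reset()
                else:
                    self.terminated = True
        return fired


class BarrierLCO(GenericLCO):
    """Persistent LCO that releases each group of n arrivals."""

    def __init__(self, n: int):
        super().__init__(persistent=True)
        self.n = n

    def predicate(self) -> bool:
        return self.control >= self.n

    def action(self):
        return list(self.data)  # stand-in for resuming the waiting threads


barrier = BarrierLCO(2)
print(barrier.incident("t1"))  # []
print(barrier.incident("t2"))  # [['t1', 't2']]
```

Only `predicate` and `action` had to be written for the barrier; queueing, assimilation, and the ephemeral or persistent epilogue are inherited from the generic form.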

6.3 Generic LCO

The generic LCO is a set of general structural and operational properties shared by all LCO objects, from which specialized predefined LCO classes or user custom-defined LCOs are derived. Equivalently, such classes inherit a common set of attributes from the generic LCO.

The generic LCO is an embodiment of the concepts described previously. In summary: an LCO is a persistent or ephemeral first class object that instantiates new threads or performs other actions in response to a set of directed incident events according to pre-established criteria codified as the LCO predicate in terms of the LCO control state.

The family of ParalleX LCOs is partitioned into a set of classes, each of which may include a set of types. The Generic LCO represents the entire family of ParalleX LCOs. LCO classes include dataflow, futures, depleted threads, producer-consumer, and others. A type for any one of these classes is a special case with specific parameters, predicate, control finite state machine, etc.

The Generic LCO is reactive. It is quiescent except when it is the destination object of a requesting agent such as a thread, a parcel, or some special mechanism. Such a request is referred to as an incident event. When quiescent, the Generic LCO consumes no execution resources and is embodied as a data structure in memory identified by a global address.

The Generic LCO is described in terms of its basic elements and the basic operations performed on and by them. The following two subsections describe the Generic LCO in terms of each of these.

6.3.1 Basic Elements

The Generic LCO consists of data and control state buffers, a control finite state machine, a predicate definition, and multiple methods defining input processing and output actions. These basic elements are inherited by all LCO types and can take diverse forms. Each basic element is introduced here.


6.3.2 Input Event Queue

An LCO includes a means of temporarily holding an incident event until the destination LCO is available to assimilate that event. This ensures that each preceding event is processed atomically, for deterministic operation. The event queue can hold a number of such events.

6.3.3 Data State

The data state of an LCO represents the aggregation of input events sufficient to provide the necessary consequent actions upon satisfaction of the predicate criterion. The data buffer is updated upon the incidence of an event. The contents of the buffer may be comprehensive copies of the content of the incoming accesses, or as limited as a count of such events. Other filters applied to the input events may assert mappings that produce and store some intermediary data representation. In a small subset of LCO types, the input data may be the null set, with all event sequence effects limited to the LCO control state.

Event data state may be either fixed in size, as specified by the LCO type, or variable in length, as determined at runtime. An example of a fixed size data state buffer is that of the Dataflow LCO, with one entry for each of a set number of operands. An example of a variable length data state buffer is the part of a Future LCO that stores an indeterminate number of access requests.
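The two buffer styles can be contrasted in a short sketch. The hypothetical `DataflowLCO` below has a fixed-length data state with one slot per operand and applies its function once all slots are filled, while the hypothetical `FutureLCO` keeps a variable-length list of requests that arrive before the value exists and satisfies them all when the value is set. Both are single-threaded Python illustrations, not ParalleX interfaces.

```python
class DataflowLCO:
    """Fixed-length data state: one slot per operand; fires exactly once."""

    _EMPTY = object()  # sentinel marking an unfilled operand slot

    def __init__(self, n_operands: int, fn):
        self.slots = [self._EMPTY] * n_operands
        self.fn = fn
        self.fired = False

    def put(self, index: int, value):
        """Deliver one operand; return fn's result when the last one arrives."""
        if self.slots[index] is not self._EMPTY:
            raise ValueError(f"operand {index} already supplied")
        self.slots[index] = value
        if not self.fired and all(s is not self._EMPTY for s in self.slots):
            self.fired = True
            return self.fn(*self.slots)
        return None


class FutureLCO:
    """Variable-length data state: requests queued until the value exists."""

    def __init__(self):
        self.waiting = []      # grows with every early request
        self.has_value = False
        self.value = None

    def get(self, continuation):
        """Run continuation(value) now, or remember it until the value is set."""
        if self.has_value:
            continuation(self.value)
        else:
            self.waiting.append(continuation)

    def set_value(self, value):
        if self.has_value:
            raise ValueError("future value already set")
        self.value, self.has_value = value, True
        pending, self.waiting = self.waiting, []
        for continuation in pending:
            continuation(value)


df = DataflowLCO(2, lambda a, b: a - b)
df.put(1, 3)          # still waiting for operand 0
print(df.put(0, 10))  # 7

out = []
fut = FutureLCO()
fut.get(out.append)
fut.get(out.append)
fut.set_value(9)
print(out)            # [9, 9]
```

The dataflow buffer's size is known when the LCO is created, whereas the future's waiting list depends on how many requests happen to arrive early, which cannot be known in advance.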

6.3.4 Control State

The control state of the LCO reflects the history of incident events and preceding actions of the LCO. It is the state of the finite state machine and is modified upon the incidence of input events or the initiation or completion of consequent actions. The control state is employed by the predicate criterion to determine when the LCO can perform its specified consequent action.

6.3.5 Predicate Criterion

The predicate criterion determines when the LCO can perform its specified consequent action. It is an encoding of a logical relation based on the control state of the LCO. When the predicate evaluates to true, the LCO will initiate its specified consequent action.

6.3.6 Methods

Every LCO has associated with it a set of methods, some of which are shared among all LCOs and others of which are adjusted or entirely custom for the specific functional requirements of the particular LCO.

The inherited generic methods are the low level functionality required of all LCOs. These include the basic create and terminate, event queue, action control, parcel constructors, garbage collection, and heap management among others.

The event assimilation methods manage the specific input processing of acquired incident events, including the initial input data processing, state buffer management, and resulting control state update. The control method manages the internal finite state machine, the predicate criterion and its test with respect to the control state, and the initiation of the consequent action. The thread method is optional and is used when the consequent action involves the creation of a new thread, either directly or through the mediation of a parcel for remote thread instantiation.

[Figure: Generic LCO structure, showing incident events, event buffer, event assimilation method, control state, control method, predicate, thread method (create complex), new complex, and inherited generic methods.]

7. Parcels

7.1 Introduction

7.1.1 Objectives

The Parcel is a component of the ParalleX execution model that communicates data, asserts action at a distance, and distributes flow control through the migration of continuations. Parcels bridge the gap of asynchrony between synchronous domains while maintaining symmetry of semantics between local and global objects. Parcels enable message-driven computation and may be classed as a form of “active messages”. Other important forms of message-driven computation predating active messages include dataflow tokens, the J-machine support for remote method instantiation, and, at the coarse grained end, variations of Unix remote procedure calls, among others. Message-driven computation enables work to be moved to the data, in addition to the more common get action of bringing data to the work. A parcel can cause actions to occur remotely and asynchronously, among which is the instantiation of computational complexes (a generalized form of threads) at different system nodes or synchronous domains. Optimizing the Parcel protocol is important for efficient scalable parallel computation, by minimizing communication overhead and latency effects, avoiding communication contention, and exploiting increased parallelism.

This section describes Parcels and provides their specification as part of the broader ParalleX specification. It is a working document and will experience frequent and substantial changes, refinements, and clarifications to reflect knowledge gained through research.

7.1.2 Overview

A Parcel is a semantic construct intended to serve as an abstraction of an asynchronous message between two distant but integrated resources, both logical and physical, in a parallel computer system. It is distinguished from conventional send/receive data transfers in that it can target any first class object as a destination, and can specify both an action to be performed and the data and control side-effects to assert upon its completion. The Parcel has four major components, or fields, that provide a flexible operator for asynchronous execution control: the destination field, action field, data payload field, and continuation field. Parcels are expected to be manifest in a few different sizes, with short parcels optimized for minimal latency and long data movement parcels optimized for data throughput.
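The four parcel fields can be modelled as an immutable record. In this illustrative Python sketch, the destination is an opaque integer address, the action is a name, the payload is raw bytes, and the continuation is itself a parcel template whose payload is filled with the result once the action completes. The class and helper names are hypothetical, and the 64-byte boundary between short and long parcels is an assumed value, since this section does not fix parcel sizes.

```python
from dataclasses import dataclass, replace
from typing import Optional

SHORT_LIMIT = 64  # assumed payload size (bytes) separating short from long parcels


@dataclass(frozen=True)
class Parcel:
    destination: int                         # global virtual address of the target
    action: str                              # operation to perform at the target
    payload: bytes = b""                     # data field: operands or object contents
    continuation: Optional["Parcel"] = None  # template for the follow-on parcel


def size_class(p: Parcel) -> str:
    return "short" if len(p.payload) <= SHORT_LIMIT else "long"


def follow_on(p: Parcel, result: bytes) -> Optional[Parcel]:
    """After the action completes, hand its result to the continuation."""
    if p.continuation is None:
        return None
    return replace(p.continuation, payload=result)


store = Parcel(destination=0x2A, action="store")
read = Parcel(destination=0x10, action="read", continuation=store)
print(size_class(read), follow_on(read, b"\x07"))
```

Carrying the continuation inside the parcel is what lets control flow migrate: whatever executes the action at the destination can emit the next parcel without returning to the sender.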

7.1.3 Organization of this Section

This subsection briefly introduces the semantic construct, Parcel, as a critical component of the ParalleX execution model, and gives an overview of its function. Section 7.2 describes the Destination Field of the Parcel, which identifies the object to which the parcel action is to be applied. Section 7.3 presents the set of possible Actions that can be represented in a Parcel. Section 7.4 discusses the Data field of the parcel and its many types, forms, and roles. Section 7.5 presents the Continuation field, which determines the follow-on task performed upon completion of the parcel-specified action at the destination object.

Section 7.6 presents some ideas on a basic programming interface that would invoke the fundamental functionality represented by the specified parcels. Finally, Section 7.7 explores possible formats to derive sizing factors and constraints.

7.1.4 Open Issues

As this document represents continued progress in research on message-driven computation, it will keep track of issues considered important that remain unresolved at any point in time.

7.2 Destinations

7.2.1 Destination classes

The Parcel destination field uniquely identifies any ParalleX object, such as a process, LCO, computational complex, synchronous domain, or hardware entity represented in the AGAS address space. Such a first class object is the logical target of the action conveyed by the designated parcel. The parcel destination field provides the context for executing the parcel action; this execution is analogous to, but not necessarily implemented by, a method invocation applied to the destination object. The actual arguments of the method are data components of the parcel, including the complete destination descriptor and the contents of the parcel data field.

All first class objects have a unique virtual address (name) assigned by the AGAS. This global virtual address space is flat. It is not possible to derive ancestor or descendant information in global object hierarchy based solely on the object’s virtual address. The virtual addresses are integers of fixed size in a given ParalleX implementation and cannot be explicitly decomposed into meaningful components.

Using first class objects as parcel destinations is sufficient to implement and perform arbitrary operations on ParalleX objects. However, this may result in excessively large type descriptors required to describe and access their immediate subcomponents. While AGAS permits assignment of names to arbitrary entities represented in the global address space, doing so for many of them would frequently result in exhaustion of the address space and cause additional inefficiencies. Therefore, parcels can access immediate subcomponents of the first-class objects; this is accomplished by adding an optional target descriptor to the parcel data field (presence of this information is indicated by a flag in the parcel descriptor). The supported subcomponent targets include:


process data members,

complex registers,

functional blocks of hardware entities.

The target field is a number uniquely identifying a subcomponent or member of a specific object type. The component enumeration must be consistent across all synchronous domains in the system.
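One possible realization of the optional target descriptor is sketched below: a flag bit records whether a subcomponent number has been prepended to the data field, and the receiver strips it off before processing the payload. The flag value, the 32-bit little-endian encoding of the target number, the 64-bit address width, and the function names are all assumptions chosen for illustration, since the document leaves field lengths open (see Section 7.7).

```python
import struct
from typing import Optional, Tuple

HAS_TARGET = 0x1   # hypothetical flag bit in the parcel descriptor
ADDRESS_BITS = 64  # hypothetical fixed width of a global virtual address


def with_target(address: int, data: bytes,
                target: Optional[int] = None) -> Tuple[int, int, bytes]:
    """Build (flags, destination, data field), prepending a target number if given."""
    if not 0 <= address < 2 ** ADDRESS_BITS:
        raise ValueError("address does not fit the fixed address width")
    if target is None:
        return 0, address, data
    return HAS_TARGET, address, struct.pack("<I", target) + data


def split_target(flags: int, address: int,
                 field: bytes) -> Tuple[int, Optional[int], bytes]:
    """Recover (destination, subcomponent number or None, remaining data)."""
    if flags & HAS_TARGET:
        (target,) = struct.unpack_from("<I", field)
        return address, target, field[4:]
    return address, None, field


parcel_fields = with_target(0xABC, b"xy", target=3)  # e.g. register 3 of a complex
print(split_target(*parcel_fields))                  # (2748, 3, b'xy')
```

The address itself stays opaque, as AGAS requires: the subcomponent is conveyed alongside it rather than encoded inside it.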

7.2.2 Open Issues

The specific binary length of the destination field is as yet unspecified but is discussed further in Section 7.7.

7.3 Actions

The Parcel Action field defines the type of operation to be performed upon parcel arrival at the synchronous domain containing the instance of the parcel destination object. This operation typically involves processing the contents stored in the parcel data field; therefore the format of the latter is strictly determined by the action type. The operation may be performed by any execution resource physically available within the domain, in particular intelligent NICs or custom function accelerators, as long as they possess the necessary capabilities to decode the data payload (when present), transfer the data between the parcel buffer and system memory (when required), and support the computation to be performed. The exceptions to this rule include percolation and parcels targeting explicit physical destinations (such as hardware registers).

Parcel actions are not explicitly typed; instead, the type of operation is derived from the types of the action arguments. The type information is strongly associated with the carried payload (operands, objects) and encoded in the parcel data field.

The action field is subdivided into two subfields: action class and action subtype. The first describes the major categories of operations, while the second specifies the actual operation to be performed or its additional parameters. Execution resources advertise their capabilities to the root parcel dispatcher in a synchronous domain, indicating which types of actions each of them can handle; support for a whole action class implies that all its action subtypes are supported.
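The capability rule in the last sentence can be illustrated with a toy dispatcher. In this hypothetical Python sketch, each execution resource advertises either a whole action class or a list of subtypes within it; routing prefers a resource that advertised the specific subtype (for instance an intelligent NIC) and otherwise falls back to one that supports the entire class. The preference order, the first-advertiser-wins rule, and all names are assumptions, not part of the specification.

```python
class Dispatcher:
    """Hypothetical root parcel dispatcher of one synchronous domain."""

    def __init__(self):
        self.whole_class = {}  # action class -> resource supporting every subtype
        self.subtype = {}      # (action class, subtype) -> resource

    def advertise(self, resource, action_class, subtypes=None):
        """Register a resource for a whole class, or only for listed subtypes.

        The first resource to advertise a capability keeps it.
        """
        if subtypes is None:
            self.whole_class.setdefault(action_class, resource)
        else:
            for s in subtypes:
                self.subtype.setdefault((action_class, s), resource)

    def route(self, action_class, subtype):
        """Pick a resource able to execute the given action."""
        specific = self.subtype.get((action_class, subtype))
        if specific is not None:
            return specific
        if action_class in self.whole_class:
            return self.whole_class[action_class]  # class support covers all subtypes
        raise LookupError(f"no resource handles {action_class}/{subtype}")


d = Dispatcher()
d.advertise("cpu0", "amo")                   # whole atomic-memory-operation class
d.advertise("nic0", "amo", ["scalar_read"])  # offload of one subtype only
print(d.route("amo", "scalar_read"), d.route("amo", "cas"))  # nic0 cpu0
```

Because a class-wide advertisement implies every subtype, the dispatcher never has to enumerate subtypes for a general-purpose core, only for specialized resources.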

Certain actions are launched with an explicit intent to retrieve the contents of remote memories or other computational state. Note that these actions do not specify how or where their results should be transmitted, or even what should happen to the results; this part of a parcel-driven transaction is governed entirely by the continuation associated with the parcel. The result produced by such an action, represented as a return value from a function implementing the action, is passed as one of the arguments to the continuation.

Parcels support the following action classes:


7.3.1 Atomic memory operation

This action class applies to the globally accessible portion of a synchronous domain's memory. It represents the smallest parcel format and should therefore promote latency-optimized implementations. Its argument is at most one scalar value, up to 64 bits in size, stored in the parcel data field.

Supported subtypes:

Simple scalar read: read a 1, 2, 4 or 8-byte scalar from the memory location identified by the destination field.

Simple scalar write: write a 1, 2, 4 or 8-byte scalar to the memory location identified by the destination field.

Atomic test-and-set, with operand width derived from the type information stored in the data field; the action completes when the set operation succeeds.

Atomic fetch-and-add, using the datum supplied in the data field as the addend and its type to determine the size of the operation in bits; the return value is equal to the incremented value stored in memory as a result of the operation.

Atomic compare-and-swap, using the first argument supplied in the data field as the comparison value and the second argument as the intended memory value upon successful comparison; the operands' type determines the size of the operation in bits.

Compare-and-swap returns a Boolean value indicating whether the operation was successful.
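The subtypes listed above can be sketched against a simple model of one domain's memory. In this hypothetical Python illustration a lock stands in for the hardware atomicity that real atomic memory operations would rely on, and an explicit `width` argument (in bytes) stands in for the type information carried in the parcel data field. Test-and-set is interpreted here as retrying until it finds the location clear; fetch-and-add returns the incremented value, as the list above specifies.

```python
import threading
import time

MASKS = {1: 0xFF, 2: 0xFFFF, 4: 0xFFFFFFFF, 8: 0xFFFFFFFFFFFFFFFF}


class DomainMemory:
    """Hypothetical globally accessible memory of one synchronous domain."""

    def __init__(self):
        self._cells = {}
        self._lock = threading.Lock()

    def read(self, addr: int, width: int) -> int:
        with self._lock:
            return self._cells.get(addr, 0) & MASKS[width]

    def write(self, addr: int, value: int, width: int) -> None:
        with self._lock:
            self._cells[addr] = value & MASKS[width]

    def test_and_set(self, addr: int, width: int) -> None:
        """Complete only when the location was clear and has been set to 1."""
        while True:
            with self._lock:
                if (self._cells.get(addr, 0) & MASKS[width]) == 0:
                    self._cells[addr] = 1
                    return
            time.sleep(0)  # yield, then retry

    def fetch_and_add(self, addr: int, addend: int, width: int) -> int:
        """Add with wrap-around at the operand width; return the incremented value."""
        with self._lock:
            new = (self._cells.get(addr, 0) + addend) & MASKS[width]
            self._cells[addr] = new
            return new

    def compare_and_swap(self, addr: int, expected: int, new: int, width: int) -> bool:
        """Store `new` only if the current value equals `expected`."""
        with self._lock:
            current = self._cells.get(addr, 0) & MASKS[width]
            if current != (expected & MASKS[width]):
                return False
            self._cells[addr] = new & MASKS[width]
            return True


mem = DomainMemory()
print(mem.fetch_and_add(0x20, 5, 4), mem.compare_and_swap(0x20, 5, 42, 4))  # 5 True
```

Each operation touches a single location and returns a scalar, which is what makes this class suitable for the smallest, latency-optimized parcel format.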

7.3.2 Compound atomic memory operation

A compound atomic memory operation consists of a sequence of one or more AMOs. The effects of this action are memory updates or retrievals (or both) carried out as if all component AMOs were executed simultaneously and without interruption. If any of the component AMOs fails, the entire action fails with no memory updates. The actual order of execution of the individual AMOs is undetermined and implementation specific.

The effects of compound AMOs are explicitly restricted to the memory or state of a single synchronous domain. The entire operation can be executed to completion as a result of a single incoming parcel. This stands in direct contrast to another class of compound atomic operations, called Distributed Control Objects (DCOs), which are not directly implemented through parcels and operate atomically on object sets with arbitrary distributions.
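One way to obtain the all-or-nothing behavior within a single synchronous domain is a two-phase approach under a domain-wide lock: validate every comparison first, then apply every update. This is a sketch under stated assumptions, not the specified mechanism: it assumes a single hypothetical `domain_lock` that all other accesses to these words also take, and the component kinds and names are illustrative. Components are applied in array order, which is one permissible choice given that the order is implementation specific.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { C_WRITE, C_ADD, C_CAS } comp_kind;

typedef struct {
    comp_kind kind;
    uint64_t *addr;
    uint64_t  a;   /* write value, addend, or CAS comparison value */
    uint64_t  b;   /* CAS replacement value (unused otherwise) */
} amo_component;

/* Assumed: one lock per synchronous domain guarding all AMO-visible words. */
static pthread_mutex_t domain_lock = PTHREAD_MUTEX_INITIALIZER;

bool compound_amo(amo_component *ops, size_t n) {
    pthread_mutex_lock(&domain_lock);
    /* Phase 1: validate; any failed comparison aborts with no memory updates. */
    for (size_t i = 0; i < n; i++) {
        if (ops[i].kind == C_CAS && *ops[i].addr != ops[i].a) {
            pthread_mutex_unlock(&domain_lock);
            return false;
        }
    }
    /* Phase 2: apply every component while still holding the lock. */
    for (size_t i = 0; i < n; i++) {
        switch (ops[i].kind) {
        case C_WRITE: *ops[i].addr = ops[i].a;  break;
        case C_ADD:   *ops[i].addr += ops[i].a; break;
        case C_CAS:   *ops[i].addr = ops[i].b;  break;
        }
    }
    pthread_mutex_unlock(&domain_lock);
    return true;
}
```

Because the lock and the memory both live in one domain, a single incoming parcel suffices to run the whole operation, which is exactly what distinguishes compound AMOs from distributed DCOs.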

7.3.3 Block data movement

The block move action performs bandwidth-optimized, large-volume data transfers. The raw bytes representing object contents are stored in the parcel data field, accompanied where needed by related type information. Note that some runtime system implementations may be sensitive to the way copies of data are handled (for example, due to implicit reference counting); such environments must specify an additional flag that prevents the hardware layer from executing the action. This flag is bitwise OR-ed with the action subtype.
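The flag handling can be sketched as follows. The bit position is not given in the text; this sketch assumes, purely for illustration, that the subtype occupies the low seven bits and that bit 7 is the hypothetical `FLAG_NO_HW` flag.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumed layout: subtype in bits 0-6, "do not execute in hardware" in bit 7. */
#define SUBTYPE_MASK 0x7Fu
#define FLAG_NO_HW   0x80u

typedef enum { LAYER_HARDWARE, LAYER_SOFTWARE } layer_t;

/* Strip the flag to recover the plain subtype. */
static inline uint8_t subtype_of(uint8_t field) { return (uint8_t)(field & SUBTYPE_MASK); }

/* Choose the parcel-stack layer allowed to process a block move. */
layer_t block_move_layer(uint8_t subtype_field, bool hw_supports_subtype) {
    if ((subtype_field & FLAG_NO_HW) || !hw_supports_subtype)
        return LAYER_SOFTWARE;   /* flagged, or hardware cannot do it anyway */
    return LAYER_HARDWARE;
}
```

Keeping the flag inside the subtype field means a hardware handler can reject the parcel with a single mask test, without parsing the data field.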


This specification does not explicitly name the entities or layers responsible for the serialization of objects or their members. Implementers should have the flexibility to choose the best-performing implementation for a specific system and application profile. While these tasks could be carried out at multiple levels of the parcel stack, the software layer is expected by default to exercise the most control over serialization formats. However, certain data movement operations, specifically those involving POD (plain old data), could be accomplished using relatively unsophisticated hardware, thereby improving overall efficiency.

Supported subtypes:

Opaque block put : write a block of bytes of the specified size to the location identified by the destination.

Opaque block get : read a block of bytes of the specified size from the location identified by the destination; return the size and contents of the memory block.

Object data member put : update the contents of a data member within an object using the supplied serialized contents accompanied by type information; the destination identifies the containing object and the accessed data member.

Object data member get : obtain a copy of a data member using the type information supplied in the data field; the parcel destination identifies the target object along with the accessed data member.

Object restore : recreate a ParalleX object’s state as a child of the process given as the parcel destination; the data field contains the serialized object data. The object may be a process, an inactive LCO, or a depleted thread. The serialized state may include the object’s AGAS name, in which case the association continues to be maintained after the object is recreated on the other end; otherwise a new name is obtained from AGAS and assigned to the restored object.

Object migrate : force migration of the object given as the parcel target to the destination process or synchronous domain specified in the data field. This action results in an object put parcel with the destination field set to the target domain or new parent process, and with the serialized object state and name in the data field. Name associations referring to the previous location of the object must be updated. If the object is relocated to an explicitly identified synchronous domain, it becomes a child of the main process of the same application. This action operates on the same object kinds as the object put parcel.

Object clone : replicate the internal state of the object specified in the parcel destination, assigning it a new AGAS name. If a new parent object or host domain is specified in the data field, transfer the clone there as described for the object migrate subtype; otherwise make the clone a sibling of the destination. The supported object types are the same as for object put.
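The naming rules of the restore, migrate, and clone subtypes can be illustrated with a toy name table. This sketch is not AGAS: it assumes a hypothetical in-memory array mapping names to a (domain, parent) placement, and omits serialization and the actual transfer of object state. Restore keeps a serialized name when present, migrate reuses the existing name and updates its association, and clone always obtains a new name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t agas_name;                /* 0 means "no name" */
typedef struct { int domain; int parent; } placement;

#define MAX_NAMES 64
static placement agas_table[MAX_NAMES];    /* toy table: name -> placement */
static agas_name next_name = 1;

/* Obtain a fresh name from the toy table and bind it to a placement. */
agas_name agas_bind(placement p) {
    assert(next_name < MAX_NAMES);
    agas_table[next_name] = p;
    return next_name++;
}

/* Object restore: keep a serialized name if one was sent, else get a new one. */
agas_name object_restore(agas_name serialized_name, placement where) {
    if (serialized_name != 0) {
        agas_table[serialized_name] = where;   /* association now points here */
        return serialized_name;
    }
    return agas_bind(where);
}

/* Object migrate: recreate at the new placement under the same name. */
void object_migrate(agas_name obj, placement where) {
    (void)object_restore(obj, where);
}

/* Object clone: always a new name; with no target given, the clone shares
   the original's placement, i.e. becomes its sibling. */
agas_name object_clone(agas_name obj, const placement *where) {
    return agas_bind(where != NULL ? *where : agas_table[obj]);
}
```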


7.3.4 Remote Thread Instantiation (methods)

The RTI action creates and initiates the execution of a thread at the synchronous domain hosting the object specified in the parcel destination field. The parcel data field contains an optional sequence of thread attributes and their values defining the characteristics of the created thread, and a portable description of the method to invoke along with its marshaled argument values. This helps decouple the software domain, which may provide arbitrarily complex descriptions of methods and their input arguments, from the processing at lower layers of the parcel stack. For example, parcel hardware may still be used to accelerate thread creation and/or scheduling whenever possible, even if only by allocating memory for the thread state or performing early initialization of thread registers. The created thread executes a predefined function that accepts the parcel destination and method description as its arguments.

The RTI class has no subtypes.
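The "predefined function" pattern can be sketched with POSIX threads standing in for ParalleX threads. The method description format (an index into a method table plus a small buffer of marshaled bytes), the thread attributes being omitted, and all names here are assumptions for illustration only.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical portable method description: method index plus marshaled args. */
typedef struct { unsigned method_id; uint8_t args[32]; } method_desc;
typedef struct { uint64_t destination; method_desc desc; } rti_request;
typedef void (*method_fn)(uint64_t target, const uint8_t *args);

/* Example method: records its target and unmarshals one int argument. */
static uint64_t last_target;
static int last_arg;
static void method_record(uint64_t target, const uint8_t *args) {
    last_target = target;
    memcpy(&last_arg, args, sizeof last_arg);
}
static const method_fn method_table[] = { method_record };

/* Predefined entry function run by every RTI-created thread: it receives the
   parcel destination and the method description and invokes the method. */
static void *rti_entry(void *p) {
    const rti_request *req = p;
    if (req->desc.method_id < sizeof method_table / sizeof method_table[0])
        method_table[req->desc.method_id](req->destination, req->desc.args);
    return NULL;
}

/* Create and start the thread; the request must outlive the thread. */
int rti_spawn(rti_request *req, pthread_t *tid) {
    return pthread_create(tid, NULL, rti_entry, req);
}
```

Because the entry function is fixed, lower layers only need to allocate the thread and hand it the request; interpreting the method description is left entirely to software.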

7.3.5 Thread state manipulation

This class of parcel performs operations on thread state. The parcel destination field must contain a thread name.

Supported subtypes:

Thread attribute get : obtain the values of the thread attributes named in the data field; the retrieved name-value pairs are returned.

Thread attribute set : update the values of thread attributes using name-value pairs supplied in the data field.

Modify thread status : attempt to resume, suspend or deplete a thread. Returns status of the operation.

Thread register write : a special case of the LCO fire method (discussed later) permitting a low-level update of a thread register. The destination must be a suspended thread. The data field contains a typed scalar value, which is implicitly converted to the thread register type. Note that this action is semantically different from an object data member update (7.3.3), because it explicitly causes rescheduling of the modified thread.

7.3.6 LCO access

The LCO access class permits control of the LCO state using predefined interface methods (consult the XPI Specification document for details). Since the operation semantics and the format of the argument list are fixed for each fundamental LCO type, this opens the possibility of low-overhead, hardware-assisted LCO manipulation.

Supported subtypes:

LCO register : add the object names specified in the data field to the notification list associated with the LCO identified in the parcel destination. The data field may also contain an optional typed value used as an argument to the register method.


LCO unregister : removes object names stored in the parcel data field from the LCO notification list.

LCO wait : waits for the destination LCO to fire, with a timeout value specified in the data field. When that occurs, a typed value optionally produced by the LCO may be returned as the action’s result.

LCO fire : supply a trigger event to the target LCO, with an associated typed value as its input argument.
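The four LCO access subtypes can be sketched on the simplest kind of LCO, a one-shot event carrying a value, using a POSIX mutex and condition variable. The structure, the fixed-size notification list, and the names are hypothetical; a real runtime would also send notification parcels to each registered name when the LCO fires, which this sketch omits.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <time.h>

#define MAX_LISTENERS 8

typedef struct {
    pthread_mutex_t mu;
    pthread_cond_t  cv;
    bool            fired;
    int64_t         value;
    uint64_t        listeners[MAX_LISTENERS];  /* names on the notification list */
    size_t          n_listeners;
} lco_t;

#define LCO_INIT { PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER, false, 0, {0}, 0 }

bool lco_register(lco_t *l, uint64_t name) {
    pthread_mutex_lock(&l->mu);
    bool ok = l->n_listeners < MAX_LISTENERS;
    if (ok) l->listeners[l->n_listeners++] = name;
    pthread_mutex_unlock(&l->mu);
    return ok;
}

void lco_unregister(lco_t *l, uint64_t name) {
    pthread_mutex_lock(&l->mu);
    for (size_t i = 0; i < l->n_listeners; i++) {
        if (l->listeners[i] == name) {        /* swap-remove the entry */
            l->listeners[i] = l->listeners[--l->n_listeners];
            break;
        }
    }
    pthread_mutex_unlock(&l->mu);
}

/* Fire: record the input value and wake every waiter. */
void lco_fire(lco_t *l, int64_t value) {
    pthread_mutex_lock(&l->mu);
    l->fired = true;
    l->value = value;
    pthread_cond_broadcast(&l->cv);
    pthread_mutex_unlock(&l->mu);
}

/* Wait with a timeout; returns true and stores the value if the LCO fired. */
bool lco_wait(lco_t *l, long timeout_ms, int64_t *out) {
    struct timespec deadline;
    clock_gettime(CLOCK_REALTIME, &deadline);
    deadline.tv_sec += timeout_ms / 1000;
    deadline.tv_nsec += (timeout_ms % 1000) * 1000000L;
    if (deadline.tv_nsec >= 1000000000L) { deadline.tv_sec++; deadline.tv_nsec -= 1000000000L; }
    pthread_mutex_lock(&l->mu);
    int rc = 0;
    while (!l->fired && rc == 0)              /* loop absorbs spurious wakeups */
        rc = pthread_cond_timedwait(&l->cv, &l->mu, &deadline);
    bool ok = l->fired;
    if (ok && out) *out = l->value;
    pthread_mutex_unlock(&l->mu);
    return ok;
}
```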

7.3.7 Execute code

Parcels may carry explicitly executable objects, which serve two purposes. First, they implement percolation, which attempts to harness non-standard execution resources, such as accelerators, coprocessors, or dedicated functional units. Second, they allow simplified micro-programs to be executed directly by parcel handler logic; this may be useful for system bootstrap, introspection, and fault handling, complementing hardware-level register access.

Type 1 (self-contained) percolation : the data field supplies an executable object to the percolation target identified in the parcel destination. The executable object must conform to a prearranged format understood by the percolation target (e.g., ELF).

Parcel handler microcode : execute an inline code segment expressed in a simplified ISA interpreted by all parcel processing hardware in the system. The code, along with its associated data, is stored in the parcel data field.

7.3.8 Hardware register access

This class of actions enables direct access to hardware registers. It applies to any device or unit with a physically addressable memory space or register file disjoint from the main address space of the synchronous domain. The parcel destination identifies the target hardware unit, while the sequence of physical addresses and bitwise arguments is stored in the data field. The data access operations are carried out strictly in the order specified.

Supported subtypes:

Register read : perform the read sequence using the addresses and bit sizes from the data field. Return a sequence of tuples containing the address, size in bits, and value for each access.

Register write : perform a sequence of bitwise write operations using the addresses, sizes, and values stored in the parcel data field. Return the operation status.

Type 2 (low level) percolation. (This will be expanded upon.)
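The ordered read and write sequences can be sketched against a simulated register file; a real handler would access physically addressed device registers instead of the hypothetical `regfile` array. The tuple layout and names are illustrative. The sketch also assumes, since the text does not say, that writes already performed stay in effect when a later address in the sequence is invalid.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define REG_COUNT 16
static uint64_t regfile[REG_COUNT];   /* simulated device register file */

/* One access tuple: address, width in bits, and value. */
typedef struct { uint32_t addr; uint8_t bits; uint64_t value; } reg_access;

static uint64_t mask_bits(uint8_t bits) {
    return bits >= 64 ? UINT64_MAX : ((1ull << bits) - 1);
}

/* Register read: fill in each tuple's value strictly in order; returns the
   number of tuples processed (stops at the first invalid address). */
size_t reg_read_seq(reg_access *seq, size_t n) {
    for (size_t i = 0; i < n; i++) {
        if (seq[i].addr >= REG_COUNT) return i;
        seq[i].value = regfile[seq[i].addr] & mask_bits(seq[i].bits);
    }
    return n;
}

/* Register write: update only the low `bits` bits of each register, in
   order; returns 0 on success, -1 on an invalid address. */
int reg_write_seq(const reg_access *seq, size_t n) {
    for (size_t i = 0; i < n; i++) {
        if (seq[i].addr >= REG_COUNT) return -1;
        uint64_t m = mask_bits(seq[i].bits);
        regfile[seq[i].addr] = (regfile[seq[i].addr] & ~m) | (seq[i].value & m);
    }
    return 0;
}
```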

7.3.9 I/O

<TBD>


7.3.10 Parcel tunnel

Parcel tunneling provides an encapsulation mechanism for parcels. The primary application of this protocol is to enable easy handoff of parcel content to functions operating on parcel arguments, such as optimized AGAS forwarding. The destination object must export a predefined method that accepts the metadata of the wrapper parcel and the wrapped parcel as its arguments.

There are no explicitly specified subtypes; however, implementations are free to use the subtype field to define application-specific behavior.
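Encapsulation and delivery can be sketched by storing an inner parcel's header and payload as the wrapper's data, then unpacking them and calling the destination's predefined method with the wrapper metadata and the wrapped parcel. The fixed `parcel_hdr` layout, the raw in-memory copy (which is not portable across architectures), and the AGAS-style forwarding method are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical fixed parcel header; the payload follows it in the data field. */
typedef struct {
    uint64_t destination;
    uint32_t action;
    uint32_t data_len;
} parcel_hdr;

/* Predefined method: receives wrapper metadata and the unpacked inner parcel. */
typedef void (*tunnel_method)(const parcel_hdr *wrapper, parcel_hdr *inner,
                              const uint8_t *payload);

/* Encapsulate: the inner header plus payload become the wrapper's data. */
size_t tunnel_wrap(const parcel_hdr *inner, const uint8_t *payload,
                   uint8_t *out, size_t cap) {
    size_t need = sizeof *inner + inner->data_len;
    if (need > cap) return 0;
    memcpy(out, inner, sizeof *inner);
    memcpy(out + sizeof *inner, payload, inner->data_len);
    return need;
}

/* Unpack the wrapped parcel, invoke the method, write back header changes. */
int tunnel_deliver(const parcel_hdr *wrapper, uint8_t *data, tunnel_method m) {
    if (wrapper->data_len < sizeof(parcel_hdr)) return -1;
    parcel_hdr inner;
    memcpy(&inner, data, sizeof inner);
    if (sizeof inner + inner.data_len > wrapper->data_len) return -1;
    m(wrapper, &inner, data + sizeof inner);
    memcpy(data, &inner, sizeof inner);
    return 0;
}

/* Example method: AGAS-style forwarding that retargets the inner parcel. */
static uint64_t new_location = 0x2000;
static void agas_forward(const parcel_hdr *wrapper, parcel_hdr *inner,
                         const uint8_t *payload) {
    (void)wrapper; (void)payload;
    inner->destination = new_location;
}
```

The forwarding method touches only the inner header, which illustrates why tunneling makes handoff cheap: the payload is never copied or reinterpreted.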

7.3.11 Open Issues

Among the important innovative claims of the ParalleX model are the action classes of its Parcels.

New advances are being made in this area, and while much has been accomplished, key specifics still require refinement. Some of these are briefly mentioned here.

a. For atomic compare-and-swap, would it be better to let the operation retry until it succeeds?

b. Double-word atomics may be useful, if they can be implemented correctly.

c. Would a more generic fetch-and-op function be useful instead of limiting the operation to addition?

d. Should atomic fetch-and-add operate only on a single byte?

e. AMOs require substantial refinement of their semantics, including detailed functionality.

f. Percolation should either be defined here or included elsewhere in the ParalleX specification.

g. I/O requires a system-wide strategy, including support from Parcels.

7.4 Data

The parcel data field has to accommodate many kinds of payloads and therefore has the most flexible format of all parcel components. The possible variants include:

data ranging from as little as one byte to as large as multiple memory pages,

both anonymous (opaque) and fully typed datasets,

bitwise values representing hardware register contents, scalars, structures, arrays, as well as serialized ParalleX objects,

additional metadata required to execute parcel action (types, addresses, sizes, etc.),

purely passive data and executable code streams, and mixes of both,

other (embedded) parcels,

optional specifiers.


The data field has a variable length, specified in the parcel descriptor. In addition to raw payload data, in many cases it is necessary to provide sufficiently descriptive metadata (such as type information) for unambiguous decoding of the field contents; this is illustrated in more detail in Section 7.7. The field format is strictly determined by the parcel action class and its subtype. The possible formats of the parcel data field are enumerated below, along with lists of the related actions.

For readability, and to avoid an overly restrictive format specification, count and type information is not listed explicitly for data values, identifiers, sizes, and addresses; it is mentioned only where needed to identify a required component that is not associated with a value in the data field.

7.4.1 Null

The data field does not contain any information. Its length in the parcel descriptor must be zero.

Used by: object_clone (variant), LCO_wait (variant), LCO_fire (variant)

7.4.2 Scalar type

Format: scalar_type

Used by: simple_scalar_read, test_and_set

7.4.3 Scalar operand

Format: scalar_value

Used by: simple_scalar_write, fetch_and_add, object_migrate, object_clone (variant), modify_thread_status, thread_register_write, LCO_wait (variant), LCO_fire (variant)

7.4.4 Scalar pair

Format: scalar_value, scalar_value

Used by: compare_and_swap

7.4.5 Untyped memory size

A fixed-length integer representing a size in bytes; its length must be sufficient to express the maximum permitted payload size.

Format: size

Used by: block_get

7.4.6 Untyped memory block

Format: byte_0, …, byte_(size-1)

Used by: block_put, type1_percolation, NIC_microcode, parcel_tunnel

In addition, if the action field contains flags preventing processing by lower layers of the parcel stack, the following requests degenerate to this data format:


Note: the size is known from the parcel descriptor.
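The formats enumerated so far can be sketched as a tagged union decoded against the length given in the parcel descriptor. The wire encodings are assumptions made only for this illustration: a scalar type is one type-code byte, a typed scalar is a type-code byte followed by 8 value bytes in host byte order, and a size is an 8-byte integer. The null format enforces the zero-length rule from 7.4.1, and an untyped memory block takes its size from the descriptor.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef enum { FMT_NULL, FMT_SCALAR_TYPE, FMT_SCALAR, FMT_SCALAR_PAIR,
               FMT_SIZE, FMT_BLOCK } data_format;

typedef struct { uint8_t type_code; uint64_t bits; } typed_scalar;
#define WIRE_SCALAR 9u   /* assumed: 1 type byte + 8 value bytes */

typedef struct {
    data_format fmt;
    union {
        uint8_t      scalar_type;
        typed_scalar scalar;
        typed_scalar pair[2];
        uint64_t     size;
        struct { const uint8_t *bytes; size_t len; } block;
    } u;
} data_field;

static const uint8_t *read_scalar(const uint8_t *p, typed_scalar *s) {
    s->type_code = p[0];
    memcpy(&s->bits, p + 1, sizeof s->bits);   /* host byte order assumed */
    return p + WIRE_SCALAR;
}

/* Check the descriptor length against the format, then decode. */
bool decode_data(data_format fmt, const uint8_t *raw, size_t len, data_field *out) {
    out->fmt = fmt;
    switch (fmt) {
    case FMT_NULL:
        return len == 0;
    case FMT_SCALAR_TYPE:
        if (len != 1) return false;
        out->u.scalar_type = raw[0];
        return true;
    case FMT_SCALAR:
        if (len != WIRE_SCALAR) return false;
        read_scalar(raw, &out->u.scalar);
        return true;
    case FMT_SCALAR_PAIR:
        if (len != 2 * WIRE_SCALAR) return false;
        read_scalar(read_scalar(raw, &out->u.pair[0]), &out->u.pair[1]);
        return true;
    case FMT_SIZE:
        if (len != sizeof(uint64_t)) return false;
        memcpy(&out->u.size, raw, sizeof(uint64_t));
        return true;
    case FMT_BLOCK:   /* size comes from the parcel descriptor */
        out->u.block.bytes = raw;
        out->u.block.len = len;
        return true;
    }
    return false;
}
```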

7.4.7 Compound type descriptor

Compound type descriptor expresses the structural type information describing an arbitrary