Comparing Naive Bayesian and k-NN algorithms for automatic email

advertisement

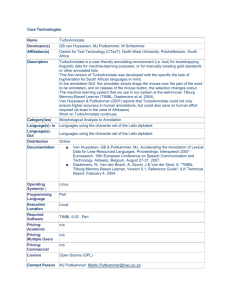

Comparing Naïve Bayesian and k-NN algorithms for automatic email classification

Louis Eisenberg
Stanford University M.S. student
PO Box 18199
Stanford, CA 94309
650-269-9444
louis@stanfordalumni.org

ABSTRACT

The problem of automatic email classification has numerous possible solutions; a wide variety of natural language processing algorithms are potentially appropriate for this text classification task. Naïve Bayes implementations are popular because they are relatively easy to understand and implement, they offer reasonable computational efficiency, and they can achieve decent accuracy even with a small amount of training data. This paper compares the performance of an existing Naïve Bayesian system, POPFile [1], to that of a hand-tuned k-nearest neighbors system. Previous research has generally shown that k-NN should outperform Naïve Bayes in text classification. My results fail to support that trend: POPFile significantly outperforms the k-NN system. The likely explanation is that POPFile is a system specifically tuned to the email classification task and refined by numerous people over a period of years, whereas my k-NN system is a crude attempt at the problem that fails to exploit the full potential of the general k-NN algorithm.

1. INTRODUCTION

Using machine learning to classify email messages is an increasingly relevant problem as the rate at which Internet users receive emails continues to grow. Though classification of desired messages by content is still quite rare, many users benefit from machine learning algorithms that attempt to distinguish spam from non-spam (e.g. SpamAssassin [2]). In contrast to the relative simplicity of spam filtering, which is a binary decision, filing messages into many folders can be fairly challenging. The most prominent non-commercial email classifier, POPFile, is an open-source project that wraps a user-friendly interface around the training and classification of a Naïve Bayesian system.
My personal experience with POPFile suggests that it can achieve respectable results but leaves considerable room for improvement. The conventional wisdom in NLP research holds that k-NN classifiers (and many other types of algorithms) should be able to outperform a Naïve Bayes system in text classification. In light of this, I adapted TiMBL [3], a freely available k-NN package, to the email filing problem and sought to surpass the accuracy obtained by POPFile.

2. DATA

I created the experimental dataset from my own inbox. The candidates were the more than 2000 non-spam messages that I received in the first quarter of 2004. Within that group, I selected approximately 1600 messages that I felt confident classifying into one of the twelve "buckets" that I arbitrarily enumerated (see Table 1). I then split each bucket, allocating half of its messages to the training set and half to the test set. As input to POPFile, I kept the messages in Eudora mailbox format. For TiMBL, I had to convert each message to a feature vector, as described in section 4.

| Code | Size (training + test) | Description |
|------|-----------------------:|-------------|
| ae | 86 | academic events, talks, seminars, etc. |
| bslf | 63 | buy, sell, lost, found |
| c | 145 | courses, course announcements, etc. |
| hf | 43 | humorous forwards |
| na | 37 | newsletters, articles |
| p | 415 | personal |
| pa | 53 | politics, advocacy |
| se | 134 | social events, parties |
| s | 426 | sports, intramurals, team-related |
| ua | 13 | University administrative |
| w | 164 | websites, accounts, e-commerce, support |
| wb | 36 | work, business |

Table 1. Classification buckets

3. POPFILE

POPFile implements a Naïve Bayesian algorithm. Naïve Bayesian classification depends on two crucial assumptions. Both follow from the single Naïve Bayes assumption of conditional independence among features, as described in Manning and Schutze [4]:

1. each document can be represented as a bag of words, i.e. the order and syntax of words are completely ignored;
2. in a given document, the presence or absence of a given word is independent of the presence or absence of any other word.

Naïve Bayes is therefore incapable of capturing conditional dependencies between words, which guarantees a certain level of imprecision. In many cases, however, this flaw is relatively minor and does not prevent the classifier from performing well.

To train and test POPFile, I installed the software on a Windows system and then used a combination of Java and Perl to perform the necessary operations. To train the classifier, I fed the mbx files (separated by category) directly to the provided utility script insert.pl. For testing, I first split each test set mbx file into its individual messages. I then used a simple Perl script to feed the messages one at a time to the provided script pipe.pl. This script reads in a message and outputs the same message with POPFile's classification decision prepended to the Subject header and/or added in a new header called X-TestClassification. After all of the messages had been classified, a separate Java program, popfilescore, tabulated the results and generated a confusion matrix.

4. k-NN

To implement my k-NN system I used the Tilburg Memory-Based Learner, a.k.a. TiMBL. I installed and ran the software on various Unix-based systems. TiMBL is an optimized version of the basic k-NN algorithm. It classifies a new instance by seeking "votes" from the k existing instances that are closest (most similar) to it. The TiMBL reference guide [5] explains:

"Memory-Based Learning (MBL) is based on the idea that intelligent behavior can be obtained by analogical reasoning, rather than by the application of abstract mental rules as in rule induction and rule-based processing. In particular, MBL is founded in the hypothesis that the extrapolation of behavior from stored representations of earlier experience to new situations, based on the similarity of the old and the new situation, is of key importance."
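A Naïve Bayes classifier needs no hand-built feature vectors, so its decision rule is easy to sketch. Below is a minimal multinomial Naïve Bayes classifier in Python. It assumes messages have already been tokenized into word lists and uses add-one (Laplace) smoothing. It is meant only to illustrate the bag-of-words and independence assumptions from section 3, and it is not POPFile's implementation, whose tokenizer and smoothing may differ. The class and method names are hypothetical.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Hypothetical minimal multinomial Naive Bayes (not POPFile's code)."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha                        # add-alpha smoothing constant
        self.doc_counts = Counter()               # bucket -> number of training messages
        self.word_counts = defaultdict(Counter)   # bucket -> word -> count
        self.vocab = set()

    def train(self, tokens, bucket):
        self.doc_counts[bucket] += 1
        self.word_counts[bucket].update(tokens)
        self.vocab.update(tokens)

    def classify(self, tokens):
        n_docs = sum(self.doc_counts.values())
        v = len(self.vocab)
        best, best_score = None, -math.inf
        for bucket, n in self.doc_counts.items():
            counts = self.word_counts[bucket]
            total = sum(counts.values())
            # log P(bucket) + sum of log P(word | bucket): word order is ignored
            # (bag of words) and every word contributes independently.
            score = math.log(n / n_docs)
            for word in tokens:
                score += math.log((counts[word] + self.alpha) / (total + self.alpha * v))
            if score > best_score:
                best, best_score = bucket, score
        return best


nb = NaiveBayes()
nb.train("tickets for the game tonight".split(), "s")
nb.train("seminar talk on parsing".split(), "ae")
print(nb.classify("parsing seminar tomorrow".split()))  # ae
```

The sketch sums log probabilities instead of multiplying raw probabilities. This avoids floating-point underflow when a long message contributes many small factors.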
Preparing the messages to serve as input to the k-NN algorithm was considerably more difficult than in the Naïve Bayes case. A major challenge in using this algorithm is deciding how to represent a text document as a vector of features. I chose to consider five separate sections of each email: the attachments, the From, To and Subject headers, and the body. For attachments, each feature was a different file type, e.g. jpg or doc. For the other four sections, each feature was an email address, a hyperlink URL, or a stemmed and lowercased word or number. I discarded all other headers. I also ignored any words shorter than 3 letters or longer than 20 letters, as well as any words that appeared on POPFile's brief stopwords list. Altogether, this resulted in each document in the data set being represented as a vector of 15,981 features.

For attachments, subject, and body, I used tf.idf weighting according to the equation

$$
\mathrm{weight}(i,j) =
\begin{cases}
(1 + \log \mathrm{tf}_{i,j}) \, \log \dfrac{N}{\mathrm{df}_i} & \text{if } \mathrm{tf}_{i,j} \ge 1 \\
0 & \text{if } \mathrm{tf}_{i,j} = 0
\end{cases}
$$

where i is the term index, j is the document index, N is the number of documents, and df_i is the number of documents that contain term i. For the To and From fields, each feature was a binary value indicating the presence or absence of a word or email address.

The Java program mbx2featurevectors parses the training or test set and generates a file containing all of the feature vectors, represented in TiMBL's Sparse format. TiMBL processes the training and test data in response to a single command. It has a number of command-line options, and I experimented with them in an attempt to improve accuracy.
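The sketch below shows roughly what this feature-extraction step does, in Python. It is not the mbx2featurevectors program itself, and several parts are assumptions. The stopword set is a small placeholder rather than POPFile's list. Stemming is omitted. The regular expressions for addresses and URLs are simplifications. Natural logarithms are assumed, because the paper does not state a base. All function names are hypothetical. The code applies the length and stopword filters and the tf.idf formula above to the tokens of one message section. It uses binary features for the To and From fields.

```python
import math
import re
from collections import Counter

# Placeholder stopwords (assumption); POPFile's actual list is not reproduced here.
STOPWORDS = {"the", "and", "for", "are", "with", "this", "that", "you"}
# Simplified patterns (assumption): URLs and email addresses are kept whole.
ADDR_OR_URL = re.compile(r"https?://\S+|[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
WORD = re.compile(r"[a-z0-9]+")


def tokenize(text):
    """Lowercase; keep addresses/URLs whole; keep 3-20 character words not in STOPWORDS.

    A real pipeline would also stem each word; that step is omitted here.
    """
    text = text.lower()
    special = ADDR_OR_URL.findall(text)
    words = WORD.findall(ADDR_OR_URL.sub(" ", text))
    return special + [w for w in words if 3 <= len(w) <= 20 and w not in STOPWORDS]


def document_frequencies(docs):
    """df_i: the number of training documents containing term i at least once."""
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))
    return df


def tfidf_vector(tokens, df, n_docs):
    """weight(i,j) = (1 + log tf_ij) * log(N / df_i) for each term with tf_ij >= 1.

    Terms absent from the training vocabulary (df_i = 0) are skipped, and
    zero-weight features are simply left out of the sparse dictionary.
    """
    tf = Counter(tokens)
    return {
        term: (1 + math.log(count)) * math.log(n_docs / df[term])
        for term, count in tf.items()
        if df[term] > 0
    }


def binary_vector(tokens):
    """To/From headers: 1.0 marks presence; absent features are omitted."""
    return {t: 1.0 for t in set(tokens)}


print(tokenize("Talk at 4pm: see http://cs.stanford.edu or mail louis@stanfordalumni.org"))
```

The next step would merge the per-section dictionaries and map each term to a feature index. That would yield sparse vectors of the kind TiMBL consumes. The exact Sparse file syntax is documented in the TiMBL reference guide [5]. TiMBL then classifies such vectors under a range of command-line options.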
Among the options I experimented with:

1. k, the number of neighbors to consider when classifying a test point. The literature suggests that anywhere from one to a handful of neighbors may be optimal for this type of task.
2. w, the feature weighting scheme. The classifier attempts to learn which features are relatively more important in determining the classification of an instance. Weighting can be absent (all features get equal weight) or based on information gain or related measures such as gain ratio and shared variance.
3. m, the distance metric: how to calculate the nearness of two points based on their features. The options I tried were overlap (a basic equal/not-equal test for each feature), the modified value difference metric (MVDM), and Jeffrey divergence.
4. d, the class vote weighting scheme for neighbors. This can be simple majority (all neighbors have equal weight) or alternatives such as Inverse Linear and Inverse Distance, which assign higher weight to neighbors that are closer to the instance.

For distance metrics, MVDM and Jeffrey divergence are similar. On this task, with its numeric feature vectors, both are clearly preferable to basic overlap, which draws no distinction between two values that are almost but not quite equal and two values that are very far apart. The other options have no clearly superior setting a priori, so I relied on the advice of the TiMBL reference guide and the results of my various trial runs.

5. RESULTS/CONCLUSIONS

The confusion matrices for the most successful TiMBL run and for POPFile are reproduced in Tables 2 and 3, respectively. Figure 1 compares the accuracy scores of the two algorithms on each category. Table 4 lists accuracy scores for various combinations of TiMBL options. The number of possible TiMBL runs was limited considerably by the length of time each run takes: up to several hours even on a fast machine, depending greatly on the exact options specified.
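Overall and per-category accuracy follow directly from a confusion matrix, because the diagonal holds the correctly filed messages. The Python sketch below applies this to the POPFile matrix in Table 3. It assumes that rows are the true buckets and columns are POPFile's decisions. Here, per-category accuracy means the fraction of a bucket's test messages that were filed correctly. Whether Figure 1 uses exactly this definition is an assumption. The function names are hypothetical.

```python
BUCKETS = ["ae", "bs", "c", "hf", "na", "p", "pa", "se", "s", "ua", "w", "wb"]

# POPFile confusion matrix (Table 3). Assumed layout: rows = true bucket,
# columns = bucket chosen by POPFile, both in BUCKETS order.
POPFILE = [
    [38, 0, 1, 0, 0, 0, 0, 0, 2, 0, 2, 0],
    [0, 10, 0, 0, 0, 0, 0, 0, 21, 0, 0, 0],
    [8, 3, 51, 0, 0, 4, 1, 0, 2, 1, 0, 0],
    [0, 0, 0, 7, 0, 7, 1, 1, 4, 0, 0, 0],
    [0, 0, 0, 1, 32, 0, 0, 0, 0, 0, 0, 0],
    [0, 10, 3, 8, 0, 140, 2, 7, 20, 0, 4, 4],
    [3, 1, 0, 0, 0, 0, 18, 0, 2, 0, 1, 0],
    [0, 5, 2, 1, 0, 3, 0, 33, 20, 0, 0, 0],
    [0, 14, 3, 2, 0, 15, 0, 2, 173, 0, 0, 3],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 6, 0, 0],
    [1, 0, 7, 0, 0, 4, 1, 2, 4, 2, 59, 0],
    [0, 0, 0, 1, 0, 2, 0, 0, 0, 0, 0, 14],
]


def overall_accuracy(matrix):
    """Correctly filed messages (the diagonal) divided by all test messages."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_bucket_accuracy(matrix, buckets):
    """Fraction of each bucket's test messages that were filed correctly."""
    return {
        bucket: row[i] / sum(row) if sum(row) else 0.0
        for i, (bucket, row) in enumerate(zip(buckets, matrix))
    }


print(f"{overall_accuracy(POPFILE):.1%}")  # 72.7%
```

The diagonal of this matrix sums to 581 of 799 test messages, which gives the 72.7% overall accuracy reported for POPFile.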
Table 2. Confusion matrix for best TiMBL run

| | ae | bs | c | hf | na | p | pa | se | s | ua | w | wb |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ae | 38 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 2 | 0 |
| bs | 0 | 10 | 0 | 0 | 0 | 0 | 0 | 0 | 21 | 0 | 0 | 0 |
| c | 8 | 3 | 51 | 0 | 0 | 4 | 1 | 0 | 2 | 1 | 0 | 0 |
| hf | 0 | 0 | 0 | 7 | 0 | 7 | 1 | 1 | 4 | 0 | 0 | 0 |
| na | 0 | 0 | 0 | 1 | 32 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| p | 0 | 10 | 3 | 8 | 0 | 140 | 2 | 7 | 20 | 0 | 4 | 4 |
| pa | 3 | 1 | 0 | 0 | 0 | 0 | 18 | 0 | 2 | 0 | 1 | 0 |
| se | 0 | 5 | 2 | 1 | 0 | 3 | 0 | 33 | 20 | 0 | 0 | 0 |
| s | 0 | 14 | 3 | 2 | 0 | 15 | 0 | 2 | 173 | 0 | 0 | 3 |
| ua | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 |
| w | 1 | 0 | 7 | 0 | 0 | 4 | 1 | 2 | 4 | 2 | 59 | 0 |
| wb | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 14 |

Table 3. Confusion matrix for POPFile (rows: actual bucket; columns: bucket assigned by POPFile)

As the tables and figure indicate, POPFile clearly outperformed even the best TiMBL run. POPFile's overall accuracy was 72.7%, compared to only 61.1% for the best TiMBL trial. In addition, POPFile's accuracy was well over 60% in almost all of the categories, whereas the k-NN system performed well in only three. Interestingly, the k-NN system performed best in the two largest categories, personal and sports, and in both of them it was more accurate than POPFile. Apparently it succeeded in distinguishing those categories from the rest of the buckets and from each other, but failed to pick up on most of the other important differences across buckets.

Figure 1. Accuracy by category (per-bucket accuracy of TiMBL and POPFile, 0% to 100%)

The various TiMBL runs suggest a few minor insights about how to get the most out of the k-NN algorithm. The overwhelming conclusion is that shared variance is far superior to the other weighting schemes for this task.
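The Python sketch below makes the w and d options concrete with a toy k-NN classifier over symbolic features. It uses a weighted overlap distance. Per-feature weights come from either gain ratio or the chi-squared statistic. The votes of the k nearest neighbors are combined by simple majority, by inverse distance, or by inverse linear weighting in the Dudani form w_j = (d_k - d_j) / (d_k - d_1). This is a simplified illustration, not TiMBL. It omits MVDM and Jeffrey divergence and does not apply TiMBL's shared-variance normalization of chi-squared. The epsilon in inverse distance is an arbitrary choice. TiMBL's neighbor selection and tie-breaking may also differ. All names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def gain_ratio(column, labels):
    """Information gain of one symbolic feature divided by its split info."""
    n = len(labels)
    groups = defaultdict(list)
    for value, label in zip(column, labels):
        groups[value].append(label)
    cond = sum(len(g) / n * entropy(g) for g in groups.values())
    split_info = -sum(len(g) / n * math.log2(len(g) / n) for g in groups.values())
    gain = entropy(labels) - cond
    return gain / split_info if split_info > 0 else 0.0


def chi_squared(column, labels):
    """Pearson chi-squared statistic of the feature-value x class table."""
    n = len(labels)
    value_counts, class_counts = Counter(column), Counter(labels)
    observed = Counter(zip(column, labels))
    return sum(
        (observed[(v, c)] - nv * nc / n) ** 2 / (nv * nc / n)
        for v, nv in value_counts.items()
        for c, nc in class_counts.items()
    )


def overlap_distance(a, b, weights):
    """Weighted overlap: every mismatching feature adds its weight."""
    return sum(w for x, y, w in zip(a, b, weights) if x != y)


def vote_weights(dists, scheme):
    """Vote weights for neighbors sorted by increasing distance."""
    if scheme == "majority":
        return [1.0] * len(dists)
    if scheme == "inverse_distance":
        return [1.0 / (d + 1e-6) for d in dists]  # epsilon avoids division by zero
    if scheme == "inverse_linear":
        nearest, farthest = dists[0], dists[-1]
        if farthest == nearest:
            return [1.0] * len(dists)
        return [(farthest - d) / (farthest - nearest) for d in dists]
    raise ValueError(f"unknown scheme: {scheme}")


def knn_classify(train_x, train_y, query, k=3,
                 feature_weight=gain_ratio, scheme="inverse_linear"):
    """Classify `query` by weighted votes of its k nearest training instances."""
    weights = [feature_weight(col, train_y) for col in zip(*train_x)]
    neighbors = sorted(
        (overlap_distance(x, query, weights), y) for x, y in zip(train_x, train_y)
    )[:k]
    dists = [d for d, _ in neighbors]
    votes = defaultdict(float)
    for w, (_, label) in zip(vote_weights(dists, scheme), neighbors):
        votes[label] += w
    return max(votes, key=votes.get)


train_x = [("seminar", "cs"), ("seminar", "ee"), ("game", "cs"), ("game", "team")]
train_y = ["ae", "ae", "s", "s"]
print(knn_classify(train_x, train_y, ("seminar", "team")))  # ae
```

In this toy data the second feature takes three values, and gain ratio divides its information gain by a larger split info. Swapping in chi_squared changes how features are weighed relative to each other, which is the kind of difference the w option controls.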
Based on the explanation given in the TiMBL documentation, the advantage of shared variance likely reflects its ability (shared with chi-squared, which is very similar) to avoid a bias toward features with more values. That bias is a significant problem with gain ratio. The results also suggest that k should be small, since the highest values of k gave the worst results. The effect of the m and d options is unclear, though simple majority voting seems to perform worse than inverse distance and inverse linear weighting.

It is also important to recognize the impact of how the feature vectors were originally constructed. Perhaps the k-NN system's poor performance resulted from unwise choices in mbx2featurevectors. Possible examples include focusing on the wrong headers, not parsing symbols and numbers as elegantly as possible, not trying a bigram or trigram model on the message body, and choosing a poor tf.idf formula.

| m | w | k | d | accuracy |
|---|---|--:|---|---------:|
| MVDM | gain ratio | 9 | inv. dist. | 51.0% |
| overlap | none | 1 | majority | 54.9% |
| overlap | inf. gain | 15 | inv. dist. | 53.7% |
| MVDM | shared var | 3 | inv. linear | 61.1% |
| Jeffrey | shared var | 5 | inv. linear | 60.2% |
| overlap | shared var | 9 | inv. linear | 58.9% |
| MVDM | gain ratio | 21 | inv. dist. | 49.4% |
| MVDM | inf. gain | 7 | inv. linear | 57.4% |
| MVDM | shared var | 1 | inv. dist. | 61.0% |
| MVDM | shared var | 5 | majority | 54.6% |

Table 4. Sample of TiMBL trials

6. OTHER RESEARCH

A vast amount of research already exists on this and similar topics. Some researchers, e.g. Rennie et al. [6], have investigated ways to overcome the faulty Naïve Bayesian assumption of conditional independence. Kiritchenko and Matwin [7] found that support vector machines are superior to Naïve Bayesian systems when much of the training data is unlabeled. Other researchers have attempted to use semantic information to improve accuracy [8]. Beyond the two models discussed in this paper, many other options exist for text classification, for example support vector machines, maximum entropy and logistic models, decision trees, and neural networks.

7. REFERENCES

[1] POPFile: http://popfile.sourceforge.net
[2] SpamAssassin: http://www.spamassassin.org
[3] TiMBL: http://ilk.kub.nl/software.html#timbl
[4] Christopher Manning and Hinrich Schutze. Foundations of Statistical Natural Language Processing. 2000.
[5] TiMBL reference guide: http://ilk.uvt.nl/downloads/pub/papers/ilk0310.pdf
[6] Jason D. M. Rennie, Lawrence Shih, Jaime Teevan and David R. Karger. Tackling the Poor Assumptions of Naive Bayes Text Classifiers. Proceedings of the Twentieth International Conference on Machine Learning. 2003.
[7] Svetlana Kiritchenko and Stan Matwin. Email classification with co-training. Proceedings of the 2001 conference of the Centre for Advanced Studies on Collaborative Research. 2001.
[8] Nicolas Turenne. Learning Semantic Classes for Improving Email Classification. Biométrie et Intelligence. 2003.