Resit

advertisement

Machine Learning (Extended)

Resit paper for August 2014.

Answer all questions.

Non-alpha calculator may be used.

Question 1 – Probabilistic generative classifiers

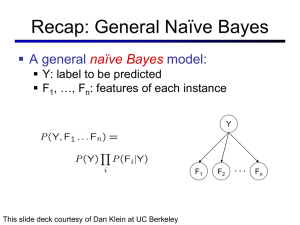

a) What is the Naive Bayes assumption and why is it called Naive? [5%]

b) State an advantage and a disadvantage of using the the Naive Bayes assumption in

classification. [5%]

c) How many parameters do you need to specify P(A,B|C) if A, B and C are discrete random

variables that can take on a, b and c different values respectively? [5%]

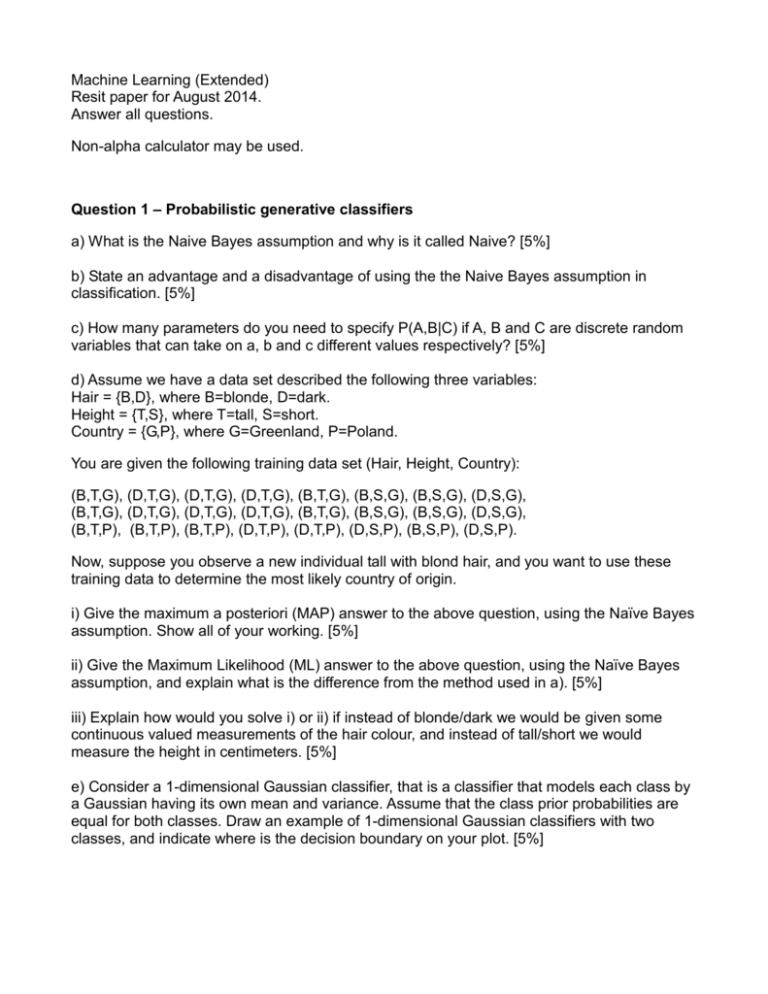

d) Assume we have a data set described the following three variables:

Hair = {B,D}, where B=blonde, D=dark.

Height = {T,S}, where T=tall, S=short.

Country = {G,P}, where G=Greenland, P=Poland.

You are given the following training data set (Hair, Height, Country):

(B,T,G), (D,T,G), (D,T,G), (D,T,G), (B,T,G), (B,S,G), (B,S,G), (D,S,G),

(B,T,G), (D,T,G), (D,T,G), (D,T,G), (B,T,G), (B,S,G), (B,S,G), (D,S,G),

(B,T,P), (B,T,P), (B,T,P), (D,T,P), (D,T,P), (D,S,P), (B,S,P), (D,S,P).

Now, suppose you observe a new individual tall with blond hair, and you want to use these

training data to determine the most likely country of origin.

i) Give the maximum a posteriori (MAP) answer to the above question, using the Naïve Bayes

assumption. Show all of your working. [5%]

ii) Give the Maximum Likelihood (ML) answer to the above question, using the Naïve Bayes

assumption, and explain what is the difference from the method used in a). [5%]

iii) Explain how would you solve i) or ii) if instead of blonde/dark we would be given some

continuous valued measurements of the hair colour, and instead of tall/short we would

measure the height in centimeters. [5%]

e) Consider a 1-dimensional Gaussian classifier, that is a classifier that models each class by

a Gaussian having its own mean and variance. Assume that the class prior probabilities are

equal for both classes. Draw an example of 1-dimensional Gaussian classifiers with two

classes, and indicate where is the decision boundary on your plot. [5%]

Question 2 – Non-probabilistic classifiers

a) Consider the following training data from 2 categories:

Class1: (1,1)’

Class 2: (-1,-1)’, (1,0)’, (0,1)’

i) Plot these four points, draw the linear separation boundary that SVM would give for these

data, and list the support vectors. [5%]

ii) Consider training data of 1-dimensional points from two classes:

Class 1: -5,5

Class 2: -2,1

A) Consider the transformation f: RR2, f(x)=(x,x2). Transform the data and plot these

transformed points. Are these linearly separable? [5%]

B) Draw the optimal separating hyper-plane in the transformed space, and explain in one or

two sentences how does this linear boundary help us to separate the original data points.

[5%]

b) Consider the following data set with two real-valued inputs x (i.e. the coordinates of the

points) and one binary output y (taking values + or -). We want to use k-nearest neighbours

(K-NN) with Euclidean distance to predict y from x.

i) Calculate the leave-one-out cross-validation error of 1-NN on this data set. That is, for each

point in turn, try to predict its label y using the rest of the points, and count up the number of

misclassification errors. [5%]

ii) Calculate the leave-one-out cross-validation error of 3-NN on the same data set. [5%]

iii) Describe how would you choose the number of neighbours K in K-NN in general? [5%]

c) Suppose you have a data set to classify and you have several classification methods that

you can try. Explain how do you decide which of these methods to choose [5%]

Question 3 – Learning theory

a) State three questions that are studied by learning theory. [5%]

b) A learning theory framework is the PAC model of learning, where PAC stands for Probably

Approximately Correct. Explain in plain English when do we say that a concept class is PAClearnable. [5%]

Question 4 – Unsupervised learning: Clustering

a) What methods to you know for data clustering? [5%]

b) Describe how you would use a clustering method to do image segmentation. [5%]

c) Describe two limitations of the K-means clustering algorithm. [5%]

d) Suppose you have run K-means clustering on a data set and later you get more data point

into the same data set. How would you cluster the new points without re-running the algorithm

? [5%]

Link to learning the outcomes assessed by examination

1. Demonstrate a knowledge and understanding of the main approaches to machine learning.

Q1 a,c,e; Q2 a, b; Q3; Q4 a.

2. Demonstrate the ability to apply the main approaches to unseen examples.

Q1 d; Q2 b; Q3; Q4 d.

3. Demonstrate an understanding of the differences, advantages and problems of the main

approaches in machine learning.

Q1 d iii; Q2 c.

4. Demonstrate an understanding of the main limitations of current approaches to machine

learning, and be able to discuss possible extensions to overcome these limitations.

Q1 b; Q2 c; Q4 c.