Data Structures, Algorithms, & Applications in Java

advertisement

Data Structures, Algorithms, & Applications in Java

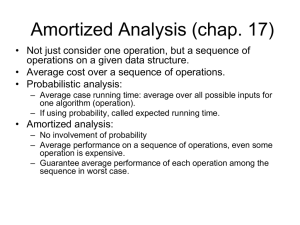

Amortized Complexity

Copyright 1999 Sartaj Sahni

What is Amortized Complexity?

Examples

Maintenance Contract

The McWidget Company

Subset Generation

Simulated Pointers

Array Stacks and Queues

Union-Find

Rearranging Railroad Cars

Exercises

Further Reading

What is Amortized Complexity?

The complexity of a method or operation, as defined in Chapter 2 of the text, is the actual

complexity of the method/operation. The actual complexity of an operation is determined by the

step count for that operation, and the actual complexity of a sequence of operations is determined

by the step count for that sequence. The actual complexity of a sequence of operations may be

determined by adding together the step counts for the individual operations in the sequence.

Typically, determining the step count for each operation in the sequence is quite difficult, and

instead, we obtain an upper bound on the step count for the sequence by adding together the

worst-case step count for each operation.

Example Consider the method insert of Program 2.10. This method inserts an element into a

sorted array, and its step count ranges from a low of 4 to a high of 2n+4, where n is the number of

elements already in the array. Suppose we perform 5 insert operations beginning with n = 0.

Further, suppose, that the actual step counts for these insert operations are 4, 4, 6, 10, and 8,

respectively. The actual step count for the sequence of insert operations is 4 + 4 + 6 + 10 + 8 = 32.

If we did not know the actual step count for the individual operations, we could obtain an upper

bound on the actual step count for the operation sequence using one of the following two

approaches.

1

1. Since the worst-case step count for an insert operation is 2n 4 ,

4

(2i 4) = 4 + 6 + 8 +

i 0

10 + 12 = 40

is an upper bound on the step count for the sequence of 5 inserts.

2. The maximum number of elements already in the array at the time an insert operation

begins is 4. Therefore, the worst-case step count of an insert operation is 2*4+4 = 12.

Therefore, 5*12 = 60 is an upper bound on the step count for the sequence of 5 inserts.

In the preceding example, the upper bound obtained by the first approach is closer to the actual

step count for the operation sequence. We say that the count obtained by the first approach is a

tighter (i.e., closer to the real count) upper bound than that obtained by the second approach.

When determining the complexity of a sequence of operations, we can, at times, obtain tighter

bounds using amortized complexity rather than worst-case complexity. Unlike the actual and

worst-case complexities of an operation, which are closely related to the step count for that

operation, the amortized complexity of an operation is an accounting artifact that often bears no

direct relationship to the actual complexity of that operation. The amortized complexity of an

operation could be anything. The only requirement is that the sum of the amortized complexities

of all operations in the sequence be greater than or equal to the sum of the actual complexities.

That is

n

(1)

amortized (i)

i 1

n

actual(i)

i 1

where amortized(i) and actual(i), respectively, denote the amortized and actual complexities of the

ith operation in a sequence of n operations. Because of this requirement on the sum of the

amortized complexities of the operations in any sequence of operations, we may use the sum of

the amortized complexities as an upper bound on the complexity of any sequence of operations.

You may view the amortized cost of an operation as being the amount you charge the operation

rather than the amount the operation costs. You can charge an operation any amount you wish so

long as the amount charged to all operations in the sequence is at least equal to the actual cost of

the operation sequence.

Relative to the actual and amortized costs of each operation in a sequence of n operations, we

define a potential function P(i) as below

(2) P(i) = amortized(i) - actual(i) + P(i-1)

That is, the ith operation causes the potential function to change by the difference between the

amortized and actual costs of that operation. If we sum Equation (2) for 1 <= i <= n, we get

2

n

P (i )

i 1

n

amortized (i) actual(i) P(i 1)

i 1

or

n

P(i) P(i 1)

i 1

n

amortized (i) actual(i)

i 1

or

n

P(n) P(0) amortized (i ) actual (i) P(n) - P(0) = sum 1 <= i <= n(amortized(i) - actual(i))

i 1

From Equation (1), it follows that

(3) P(n) - P(0) >= 0

Under the assumption that P(0) = 0, the potential P(i) is the amount by which the first i operations

have been overcharged (i.e., they have been charged more than their actual cost).

Generally, when we analyze the complexity of a sequence of n operations, n can be any

nonnegative integer. Therefore, Equation (3) must hold for all non negative integers.

The preceding discussion leads us to the following three methods to arrive at amortized costs for

operations:

1. Aggregate Method

In the aggregate method, we determine an upper bound UpperBoundOnSumOfActualCosts(n)

for the sum of the actual costs of the n operations. The amortized cost of each operation is

set equal to UpperBoundOnSumOfActualCosts(n)/n. You may verify that this assignment of

amortized costs satisfies Equation (1) and is, therefore, valid.

2. Accounting Method

In this method, we assign amortized costs to the operations (probably by guessing what

assignment will work), compute the P(i)s using Equation (2), and show that P(n)-P(0) >= 0.

3. Potential Method

Here, we start with a potential function (probably obtained using good guess work) that

satisfies Equation (3), and compute the amortized complexities using Equation (2).

Although, at this time, you may feel most comfortable with the aggregate method. This method is

often the hardest to use because it is often quite difficult to obtain a bound on the aggregate actual

cost that is smaller than the bound obtained by using the worst-case cost of each operation in the

sequence. The accounting method is intuitive (we simply verify that the sum of the amortized

costs is at least equal to the sum of the actual costs) and often results in tight bounds on the

complexity of a sequence of operations. The potential method is often the hardest to use (because

3

of the difficulty of determining the proper potential function to use), but for some applications

remains the only way to obtain tight complexity bounds.

Examples

1. Maintenance Contract

Problem Definition

In January, you buy a new car from a dealer who offers you the following maintenance contract:

$50 each month other than March, June, September and December (this covers an oil change and

general inspection), $100 every March, June, and September (this covers an oil change, a minor

tune-up, and a general inspection), and $200 every December (this covers an oil change, a major

tune-up, and a general inspection). We are to obtain an upper bound on the cost of this

maintenance contract as a function of the number of months.

Worst-Case Method

Using the ideas developed in Chapter 2, we can bound the contract cost for the first n months by

taking the product of n and the maximum cost incurred in any month (i.e., $200). This would be

analogous to the traditional way to estimate the complexity--take the product of the number of

operations and the worst-case complexity of an operation. Using this approach, we get $200n as

an upper bound on the contract cost. The upper bound is correct because the actual cost for n

months does not exceed $200n.

Aggregate Method

To use the aggregate method for amortized complexity, we first determine an upper bound on the

sum of the costs for the first n months. As tight a bound as is possible is desired. The sum of the

actual monthly costs of the contract for the first n months is

200*floor(n/12) + 100*(floor(n/3) - floor(n/12)) + 50*(n - floor(n/3))

= 100*floor(n/12) + 50*floor(n/3) + 50*n

<= 100*n/12 + 50*n/3 + 50*n

= 50n(1/6 + 1/3 + 1)

= 50n(3/2)

4

= 75n

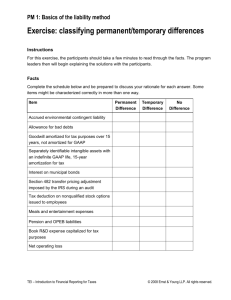

The amortized cost for each month is set to $75. The table below shows the actual costs, the

amortized costs, and the potential function value (assuming P(0) = 0) for the first 16 months of the

contract.

month

| 1

actual cost

| 50 50 100 50

amortized cost | 75

P()

2

75

| 25 50

3

75

4

5

6

7

50 100

75 75

25 50

75

8

9

10

11

50

50 100

50

75

75

75

15

16

50 200 50

50 100

50

75

75

75

75

75

75

75

50

75 100 75 100 125

0

25

50

25

50

75

12 13

14

Notice that some months are charged more than their actual costs and others are charged less than

their actual cost. The cumulative difference between what the operations are charged and their

actual costs is given by the potential function. The potential function satisfies Equation (3) for all

values of n. When we use the amortized cost of $75 per month, we get $75n as an upper bound on

the contract cost for n months. This bound is tighter than the bound of $200n obtained using the

worst-case monthly cost.

Accounting Method

When we use the accounting method, we must first assign an amortized cost for each month and

then show that this assignment satisfies Equation (3). We have the option to assign a different

amortized cost to each month. In our maintenance contract example, we know the actual cost by

month and could use this actual cost as the amortized cost. It is, however, easier to work with an

equal cost assignment for each month. Later, we shall see examples of operation sequences that

consist of two or more types of operations (for example, when dealing with lists of elements, the

operation sequence may be made up of search, insert, and remove operations). When dealing with

such sequences we often assign a different amortized cost to operations of different types

(however, operations of the same type have the same amortized cost).

To get the best upper bound on the sum of the actual costs, we must set the amortized monthly

cost to be the smallest number for which Equation (3) is satisfied for all n. From the above table,

we see that using any cost less than $75 will result in P(n) - P(0) < 0 for some values of n.

Therefore, the smallest assignable amortized cost consistent with Equation (3) is $75.

Generally, when the accounting method is used, we have not computed the aggregate cost.

Therefore, we would not know that $75 is the least assignable amortized cost. So we start by

assigning an amortized cost (obtained by making an educated guess) to each of the different

operation types and then proceed to show that this assignment of amortized costs satisfies

Equation (3). Once we have shown this, we can obtain an upper bound on the cost of any

operation sequence by computing

5

k

f (i) amortized (i)

i 1

where k is the number of different operation types and f(i) is the frequency of operation type i (i.e.,

the number of times operations of this type occur in the operation sequence).

For our maintenance contract example, we might try an amortized cost of $70. When we use this

amortized cost, we discover that Equation (3) is not satisfied for n = 12 (for example) and so $70

is an invalid amortized cost assignment. We might next try $80. By constructing a table such as

the one above, we will observe that Equation (3) is satisfied for all months in the first 12 month

cycle, and then conclude that the equation is satisfied for all n. Now, we can use $80n as an upper

bound on the contract cost for n months.

Potential Method

We first define a potential function for the analysis. The only guideline you have in defining this

function is that the potential function represents the cumulative difference between the amortized

and actual costs. So, if you have an amortized cost in mind, you may be able to use this

knowledge to develop a potential function that satisfies Equation (3), and then use the potential

function and the actual operation costs (or an upper bound on these actual costs) to verify the

amortized costs.

If we are extremely experienced, we might start with the potential function

P(0) = 0

P(n) = 0 for n mod 12 = 0

P(n) = 25 for n mod 12 = 1 or 3

P(n) = 50 for n mod 12 = 2, 4 or 6

P(n) = 75 for n mod 12 = 5, 7 or 9

P(n) = 100 for n mod 12 = 8, or 10

P(n) = 125 for n mod 12 = 11

Without the aid of the table constructed for the aggregate method, it would take quite some

ingenuity to come up with this potential function. Having formulated a potential function and

verified that this potential function satisfies Equation (3) for all n, we proceed to use Equation (2)

to determine the amortized costs.

From Equation (2), we obtain

amortized(i) = actual(i) + P(i) - P(i-1)

Therefore, amortized(1) = actual(1) + P(1) - P(0) = 50 + 25 - 0 = 75, amortized(2) = actual(2) + P(2) - P(1)

= 50 + 50 - 25 = 75, amortized(3) = actual(3) + P(3) - P(2) = 100 + 25 - 50 = 75, and so on. Therefore,

6

the amortized cost for each month is $75. So, the actual cost for n months is at most $75n.

2. The McWidget Company

Problem Definition

The famous McWidget company manufactures widgets. At its headquarters, the company has a

large display that shows how many widgets have been manufactured so far. Each time a widget is

manufactured, a maintenance person updates this display. The cost for this update is $c + dm,

where c is a fixed trip charge, d is a charge per display digit that is to be changed, and m is the

number of digits that are to be changed. For example, when the display is changed from 1399 to

1400, the cost to the company is $c + 3d because 3 digits must be changed. The McWidget

company wishes to amortize the cost of maintaining the display over the widgets that are

manufactured, charging the same amount to each widget. More precisely, we are looking for an

amount $e = amortized(i) that should levied against each widget so that the sum of these charges

equals or exceeds the actual cost of maintaining/updating the display ($e*n >= actual total cost

incurred for first n widgets for all n >= 1). To keep the overall selling price of a widget low, we wish

to find as small an e as possible. Clearly, e > c + d because each time a widget is made, at least

one digit (the least significant one) has to be changed.

Worst-Case Method

This method does not work well in this application because there is no finite worst-case cost for a

single display update. As more and more widgets are manufactured, the number of digits that

need to be changed increases. For example, when the 1000th widget is made, 4 digits are to be

changed incurring a cost of c + 4d, and when the 1,000,000th widget is made, 7 digits are to be

changed incurring a cost of c + 7d. If we use the worst-case method, the amortized cost to each

widget becomes infinity.

Aggregate Method

Let n be the number of widgets made so far. As noted earlier, the least significant digit of the

display has been changed n times. The digit in the tens place changes once for every ten widgets

made, that in the hundreds place changes once for every hundred widgets made, that in the

thousands place changes once for every thousand widgets made, and so on. Therefore, the

aggregate number of digits that have changed is bounded by n(1 + 1/10 + 1/100 + 1/1000 + ...) =

(1.11111...)n. So, the amortized cost of updating the display is c + d(1.11111...)n/n < c + 1.12d. If the

McWidget company adds $c + 1.12d to the selling price of each widget, it will collect enough

money to pay for the cost of maintaining the display. Each widget is charged the cost of changing

1.12 digits regardless of the number of digits that are actually changed. The table given below

shows the actual cost, as measured by the number of digits that change, of maintaining the

display, the amortized cost (i.e., 1.12 digits per widget), and the potential function. The potential

function gives the difference between the sum of the amortized costs and the sum of the actual

costs. Notice how the potential function builds up so that when it comes time to pay for changing

7

two digits, the previous potential function value plus the current amortized cost exceeds 2. From

our derivation of the amortized cost, it follows that the potential function is always nonnegative.

widget

|

1

2

3

4

5

6

7

8

9 10 11 12 13 14

actual cost

|

1

1

1

1

1

1

1

1

1

2

1

1

1

1

amortized cost | 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12

P()

| 0.12 0.24 0.36 0.48 0.60 0.72 0.84 0.96 1.08 0.20 0.32 0.44 0.56 0.68

================================================================================

===

widget

| 15 16 17 18 19 20 21 22 23 24 25 26 27 28

actual cost

|

1

1

1

1

1

2

1

1

1

1

1

1

1

1

amortized cost | 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12 1.12

P()

| 0.80 0.92 1.04 1.16 1.28 0.40 0.52 0.64 0.76 0.88 1.00 1.12 1.24 1.36

Accounting Method

We begin by assigning an amortized cost to the individual operations, and then we show that

these assigned costs satisfy Equation (3). Having already done an amortized analysis using the

aggregate method, we see that Equation (3) is satisfied when we assign an amortized cost of $c +

1.12d to each display change. Typically, however, the use of the accounting method is not

preceded by an application of the aggregate method and we start by guessing an amortized cost

and then showing that this guess satisfies Equation (3).

Suppose we assign a guessed amortized cost of $c + 2d for each display change.

P(n) - P(0) = sum 1 <= i <= n(amortized(i) - actual(i))

= (c + 2d)n - sum1 <= i <= nactual(i)

= (c + 2d)n - (c + (1 + 1/10 + 1/100 + ...)d)n

>= (c + 2d)n - (c + 1.12d)n

>= 0

This analysis also shows us that we can reduce the amortized cost of a widget to $c + 1.12d.

An alternative proof method that is useful in some analyses involves distributing the excess

charge P(i) - P(0) over various accounting entities, and using these stored excess charges (called

credits) to establish P(i+1) - P(0) >= 0. For our McWidget example, we use the display digits as

the accounting entities. Initially, each digit is 0 and each digit has a credit of 0 dollars. Suppose

we have guessed an amortized cost of $c + (1.111...)d. When the first widget is manufactured, $c +

d of the amortized cost is used to pay for the update of the display and the remaining $(0.111...)d

8

of the amortized cost is retained as a credit by the least significant digit of the display. Similarly,

when the second through ninth widgets are manufactured, $c + d of the amortized cost is used to

pay for the update of the display and the remaining $(0.111...)d of the amortized cost is retained as

a credit by the least significant digit of the display. Following the manufacture of the ninth

widget, the least significant digit of the display has a credit of $(0.999...)d and the remaining digits

have no credit. When the tenth widget is manufactured, $c + d of the amortized cost are used to

pay for the trip charge and the cost of changing the least significant digit. The least significant

digit now has a credit of $(1.111...)d. Of this credit, $d are used to pay for the change of the next

least significant digit (i.e., the digit in the tens place), and the remaining $(0.111...)d are

transferred to the ten's digit as a credit. Continuing in this way, we see that when the display

shows 99, the credit on the ten's digit is $(0.999...)d and that on the one's digit (i.e., the least

significant digit) is also $(0.999...)d. When the 100th widget is manufactured, $c + d of the

amortized cost are used to pay for the trip charge and the cost of changing the least significant

digit, and the credit on the least significant digit becomes $(1.111...)d. Of this credit, $d are used to

pay for the change of the tens digit from 9 to 0, the remaining $(0.111...)d credit on the one's digit

is transferred to the ten's digit. The credit on the ten's digit now becomes $(1.111...)d. Of this

credit, $d are used to pay for the change of the hundred's digit from 0 to 1, the remaining

$(0.111...)d credit on the ten's digit is transferred to the hundred's digit.

The above accounting scheme ensures that the credit on each digit of the display always equals

$(0.111...)dv, where v is the value of the digit (e.g., when the display is 206 the credit on the one's

digit is $(0.666...)d, the credit on the tens's digit is $0, and that on the hundred's digit is $(0.222...)d.

From the preceding discussion, it follows that P(n) - P(0) equals the sum of the digit credits and

this sum is always nonnegative. Therefore, Equation (3) holds for all n.

Potential Method

We first postulate a potential function that satisfies Equation (3), and then use this function to

obtain the amortized costs. From the alternative proof used above for the accounting method, we

can see that we should use the potential function

P(n) = (0.111...)d sumivi

where vi is the value of the ith digit of the display. For example, when the display shows 206 (at

this time n = 206), the potential function value is (0.888...)d. This potential function satisfies

Equation (3).

Let q be the number of 9s at the right end of j (i.e., when j = 12903999, q = 3). When the display

changes from j to j+1, the potential change is (0.111...)d(1-9q) and the actual cost of updating the

display is $c + (q+1)d. From Equation (2), it follows that the amortized cost for the display change

is

9

actual cost + potential change

= c + (q+1)d + (0.111...)d(1-9q)

= c + (1.111...)d

3. Subset Generation

Problem Definition

The subsets of a set of n elements are defined by the 2n vectors x[1:n], where each x[i] is either 0 or

1. x[i] = 1 iff the ith element of the set is a member of the subset. The subsets of a set of three

elements are given by the eight vectors 000, 001, 010, 011, 100, 101, 110, 111, for example. A

recursive method to generate all subsets was developed as the solution to Exercise 1.29. We shall

now develop an enumerator for the subsets. For convenience, we shall actually enumerate only

the nonempty subsets (i.e., all subsets other than 000...000). Further, we shall deviate from the

syntax of Java enumerators and provide only two methods--a constructor and a method

nextSubset, which returns null when there is no next subset and returns the next subset otherwise.

The code is given below.

public class SubsetEnumerator

{

// data member

int n;

// set size

int [] x; // subset array

/** constructor */

public SubsetEnumerator(int theN)

{

n = theN;

x = new int [n + 1];

}

/** @return next subset

* @return null iff there is no next subset */

public int [] nextSubset()

{

// generate next subset

int i = n;

while (i > 0 && x[i] == 1)

10

{

x[i] = 0;

i--;

}

if (i == 0)

return null;

else

{

x[i] = 1;

return x;

}

}

/** test program */

public static void main(String [] args)

{

int n = 4;

int [] x;

SubsetEnumerator sv = new SubsetEnumerator(n);

// output all subsets other than the null subset

while (true)

{

x = sv.nextSubset();

if (x == null) break;

for (int i = 1; i <= n; i++)

System.out.print(x[i] + " ");

System.out.println();

}

}

}

We wish to determine how much time it takes to generate the first m, 1 <= m < 2n subsets.

Worst-Case Method

The complexity of nextSubset is Theta(c), where c is the number of xs that change. Since all n of

11

the xs could change in a single invocation of nextSubset, the worst-case complexity of nextSubset

is Theta(n). Using the worst-case method, the time required to generate the first m subsets is

O(mn).

Aggregate Method

The complexity of nextSubset equals the number of x[i]s that change. When nextSubset is invoked

m times, x[n] changes m times; x[n - 1] changes floor(m/2) times; x[n - 2] changes floor(m/4) times; x[n

- 3] changes floor(m/8) times; and so on. Therefore the sum of the actual costs of the first m

invocations is

sum0 <= i <= floor(log2m)floor(m/2i) < 2m

Therefore, the complexity of generating the first m subsets is actually O(m), a tighter bound than

obtained using the worst-case method.

The amortized complexity of nextSubset is (sum of actual costs)/m < 2m/m = O(1).

Accounting Method

We first guess the amortized complexity of nextSubset, and then show that this amortized

complexity satisfies Equation (3). Suppose we guess that the amortized complexity is 2. To verify

this guess, we must show that

P(m) - P(0) >= 0

; for all m.

We shall use the alternative proof method used in the McWidget example. In this method, we

distribute the excess charge P(i) - P(0) over various accounting entities, and use these stored

excess charges to establish P(i+1) - P(0) >= 0. We use the x[j]s as the accounting entities. Initially,

each x[j] is 0 and has a credit of 0. When the first subset is generated, 1 unit of the amortized cost

is used to pay for the single x[j] that changes and the remaining 1 unit of the amortized cost is

retained as a credit by x[n], which is the x[j] that has changed to 1. When the second subset is

generated, the credit on x[n] is used to pay for changing x[n] to 0 in the while loop, 1 unit of the

amortized cost is used to pay for changing x[n-1] to 1, and the remaining 1 unit of the amortized

cost is retained as a credit by x[n-1], which is the x[j] that has changed to 1. When the third subset

is generated, 1 unit of the amortized cost is used to pay for changing x[n] to 1, and the remaining 1

unit of the amortized cost is retained as a credit by x[n], which is the x[j] that has changed to 1.

When the fourth subset is generated, the credit on x[n] is used to pay for changing x[n] to 0 in the

while loop, the credit on x[n-1] is used to pay for changing x[n-1] to 0 in the while loop, 1 unit of the

amortized cost is used to pay for changing x[n-2] to 1, and the remaining 1 unit of the amortized

cost is retained as a credit by x[n-2], which is the x[j] that has changed to 1. Continuing in this way,

12

we see that each x[j] that is 1 has a credit of 1 unit on it. This credit is used to pay the actual cost

of changing this x[j] from 1 to 0 in the while loop. One unit of the amortized cost of nextSubset is

used to pay for the actual cost of changing an x[j] to 1 in the else clause, and the remaining one

unit of the amortized cost is retained as a credit by this x[j].

The above accounting scheme ensures that the credit on each x[j] that is 1 is exactly 1, and the

credit on each x[j] that is 0 is 0.

From the preceding discussion, it follows that P(m) - P(0) equals the number of x[j]s that are 1.

Since this number is always nonnegative, Equation (3) holds for all m.

Having established that the amortized complexity of nextSubset is 2 = O(1), we conclude that the

complexity of generating the first m subsets equals m * amortized complexity = O(m).

Potential Method

We first postulate a potential function that satisfies Equation (3), and then use this function to

obtain the amortized costs. Let P(j) be the potential just after the jth subset is generated. From the

proof used above for the accounting method, we can see that we should define

P(j) = number of x[i]s in the jth subset that are equal to 1.

By definition, the 0th subset has all x[i] equal to 0. Since P(0) = 0 and P(j) >= 0 for all j, this

potential function P satisfies Equation (3).

Consider any subset x[1:n]. Let q be the number of 1s at the right end of x[] (i.e., x[n], x[n-1], ..., x[nq+1], are all 1s). Assume that there is a next subset. When the next subset is generated, the

potential change is 1-q because q 1s are replaced by 0 in the while loop and a 0 is replaced by a 1

in the else clause. The actual cost of generating the next subset is q+1. From Equation (2), it

follows that, when there is a next subset, the amortized cost for nextSubset is

actual cost + potential change

=q+1+1-q

=2

When there is no next subset, the potential change is -q and the actual cost of nextSubset is q.

From Equation (2), it follows that, when there is no next subset, the amortized cost for nextSubset

is

actual cost + potential change

13

=q-q

=0

Therefore, we can use 2 as the amortized complexity of nextSubset. Consequently, the actual cost

of generating the first m subsets is O(m).

4. Simulated Pointers

Problem Definition

Section 7.3 of the text discusses how we can simulate Java pointers using integers. The

discussion of Section 7.3 resulted in the development of the class SimulatedSpace1 which has a

constructor and the methods allocateNode and deallocateNode. We wish to determine the time it

takes to perform any sequence of a allocateNode and d deallocateNode operations.

The code for the class SimulatedSpace1 For the analysis, we assume that the cost of changeSize1D

is node.length. is given below.

public class SimulatedSpace1

{

// data members

private int firstNode;

SimulatedNode [] node; // package visible

// constructor

public SimulatedSpace1(int numberOfNodes)

{

node = new SimulatedNode [numberOfNodes];

// create nodes and link into a chain

for (int i = 0; i < numberOfNodes - 1; i++)

node[i] = new SimulatedNode(i + 1);

// last node of array and chain

node[numberOfNodes - 1] = new SimulatedNode(-1);

// firstNode has the default initial value 0

}

public int allocateNode(Object element, int next)

{// Allocate a free node and set its fields.

14

if (firstNode == -1)

{ // double number of nodes

node = (SimulatedNode []) ChangeArraySize.

changeSize1D(node, 2 * node.length);

// create and link new nodes

for (int i = node.length / 2;

i < node.length - 1; i++)

node[i] = new SimulatedNode(i + 1);

node[node.length] = new SimulatedNode(-1);

firstNode = node.length / 2;

}

int i = firstNode;

// allocate first node

firstNode = node[i].next; // firstNode points to

// next free node

node[i].element = element;

node[i].next = next;

return i;

}

public void deallocateNode(int i)

{// Free node i.

// make i first node on free space list

node[i].next = firstNode;

// remove element reference so that space can be garbage collected

node[i].element = null;

firstNode = i;

}

}

Worst-Case Method

Since the worst-case complexity of allocateNode is Theta(a) (because of the array doubling done

by the invocation of changeSize1d) and that of deallocateNode is Theta(1), the complexity of a

sequence of a allocateNode and d deallocateNode operations is O(a2+d).

Aggregate Method

The total time spent on a allocateNode operations is O(a + time spent in array doubling). The time

15

spent in array doubling is O(sum0 <= i <= k2i), where k = ceil(log2a). Therefore, the time spent in array

doubling is O(a), and the aggregate time for a allocateNode operations is O(a). The amortized

complexity of allocateNode is therefore O(a/a) = O(1).

The aggregate time for d deallocateNode operations is O(d), and the amortized complexity of

deallocateNode is O(d/d) = O(1).

Consequently, the actual complexity of a sequence of a allocateNode and d deallocateNode

operations is O(a+d). This is a tighter bound than obtained using the worst-case method.

Accounting Method

For the deallocate method, we assign an amortized complexity that equals its worst-case

complexity, that is O(1). For the allocate method, we assign an amortized complexity of 3, which

is less than its worst-case complexity. To verify the correctness of this assignment, we must show

that

P(a) - P(0) >= 0;

for all a.

We shall use the alternative proof method introduced in the McWidget example. We use the

node[i]s as the accounting entities. Initially, each node[i] has a credit of 0. When a node, node[i], is

allocated, we use 1 unit of the amortized cost to pay for the actual cost of the node allocation

(excluding any array doubling that may occur), and the remaining 2 units of the amortized cost

are retained as a credit by node[i]. When array doubling occurs for the first time, all nodes have

been allocated and so have a credit of 2 units on them. The total available credits are

2*node.lengthBeforeDoubling. These credits are used to pay for the array doubling (the cost of

doubling the array size is node.lengthBeforeDoubling). When any subsequent array doubling

occurs, the nodes node[j], node.lengthAfterDoubling/2 <= j < node.lengthAfterDoubling, have a credit of

at least 2 units on them (other nodes may also have a nonzero credit). Therefore, the total credits

on the nodes is at least node.lengthAfterDoubling. These credits are adequate to pay for the

subsequent array doubling.

From the preceding discussion, it follows that P(a) - P(0) is always nonnegative. Therefore,

Equation (3) holds for all a.

Having established that the amortized complexity of both allocateNode and deallocateNode is O(1),

we conclude that the actual complexity of a sequence of a allocateNode and d deallocateNode

operations is O(a+d).

16

Potential Method

We first postulate a potential function that satisfies Equation (3), and then use this function to

obtain the amortized complexity of allocateNode. Let P(i) be the potential following the ith allocate

node operation. Since we assign deallocateNode an amortized complexity that equals its actual

complexity, the potential is not affected by deallocate operations. The potential function that we

shall use is

P(i)

= 2

* (number

of allocate

node operations

since the

most

recent array

doubling)

We see that P(0) = 0 and P(i) >= 0 for all i. So, this potential function satisfies Equation (3).

If the ith allocate node operation does not require array doubling, the actual cost of this operation

is 1 unit. Also, P(i) - P(i-1) = 2. Therefore, the amortized cost of the operation is

actual cost + potential change

=1+2

=3

If the ith allocate node operation requires array doubling, the actual cost of this operation is 1 +

node.length units. Following the node allocation, the potential P(i) is 2. Further, since at least

node.length/2 allocate node operations (excluding the current one) must have taken place since the

last time the array size was doubled, P(i-1) >= node.length. Therefore, the amortized cost of the

operation is

actual cost + potential change

<= (1 + node.length) + (2 - node.length)

=3

Consequently, we may assign each allocate node operation an amortized cost of 3.

Since the amortized complexity of both allocateNode and deallocateNode is O(1), we conclude that

the actual complexity of a sequence of a allocateNode and d deallocateNode operations is O(a+d).

5. Array Stacks and Queues

The classes ArrayStack and ArrayQueue were developed in Chapters 9 and 10, respectively. For

both classes, because it may be necessary to double the array size, the actual complexity of the

17

insert operation (ArrayStack.push and ArrayQueue.put) is O(s), where s is the current size of the

stack or queue. The actual complexity of the remove operation (ArrayStack.pop and

ArrayQueue.remove) is O(1). Therefore, using the worst-case method, the complexity of a sequence

of i insert and r remove operations is O(i2+r). Using developments identical to those used for the

case of simulated pointers, we can show that the amortized complexity of the insert operation is

O(1). This enables us to obtain the tighter bound of O(i + r) on the complexity of any sequence of i

insert and r remove operations performed on an initially empty stack or queue. Using these

amortized complexities, we can establish the time complexities of the stack and queue

applications considered in Chapters 9 and 10 without appealing to Theorem 5.1.

6. Union-Find

Problem Definition

Consider the class UnionFindSecondSolution developed in Section 7.7.4 of the text. Suppose that u

< n union operations and f find operations are performed. The complexity of an individual union

is O(u) and that of an individual find is O(1). In the text, we showed that the aggregate complexity

of u union operations is O(u log u), and then concluded that the actual complexity of a sequence of

u < n union operations and f find operations is O(u log u + f). Had we used the worst-case method,

we would have arrived at the complexity O(u2+f). Since the aggregate complexity of u union

operations is O(u log u), the amortized complexity of the method union is O(log u). The amortized

complexity of the method find is the same as its actual complexity, that is O(1). Let us see how we

can arrive at the amortized complexity of union using the accounting and potential function

methods.

Accounting Method

To the method find, we assign an amortized complexity that equals its worst-case complexity, that

is O(1). To the method union, we first assign an amortized complexity of L = ceil(log2 (u+1)), which

is less than its worst-case complexity. To verify the correctness of this assignment, we must show

that

P(u) - P(0) >= 0

for all u, where P(i) denotes the potential following the ith union operation. Since the actual and

amortized complexities of the method find are the same, the find operations do not affect the

potential.

It is easy to verify that, at all times, each set contains exactly one element that has never moved

(see the text for the definition of moved). Our credit assignment scheme will have the following

properties (except, possibly, following the last union operation):

18

1. The element in each set that has never been moved has 0 credits.

2. The credits on an element that has moved at least once is at least L - (number of times this

element has moved).

For example, when the number u of union operations is 6, L = 3. Suppose that the initial sets are

{1}, {2}, ..., {10}. Initially, no element has moved, each set has exactly one element that has never

moved, and each element has 0 credits. If the first union unites the sets {1} and {2} by regarding

the first set as the smaller set, then following the union we have the set {1,2}. The credits on the

element 1 will be at least L-1 = 2 (note that the element 1 has been moved once) and the credits on

the element 2, which is the single element in the set {1,2} that has never moved, will be zero.

Suppose that a union operation unites the sets A and B, where A is the smaller set. Let the

resulting set be C. The set C contains exactly one element that has never moved. This is the single

element of B that has never moved. This element has 0 credits. 1 unit of the amortized cost of L

units associated with this union operation is used to pay for moving the never moved element a of

A. The remaining L-1 units of the amortized cost are assigned as credits to the element a. These

credits are adequate to pay for the at most L-1 future moves that element a may make. The

remaining portion of the actual cost of uniting sets A and B is paid for by reducing the number of

credits on each of the elements A-{a} by 1. Note that each of these elements must have a credit

that exceeds 0, because the first time an element is moved it is assigned L-1 credits which are

enough to pay for all future moves of the element.

It follows that, after the u union operations, no element has a negative credit. Therefore, P(u) P(0) >= 0, and L = floor(log2(u+1)) = O(log u) is the amortized complexity of a union operation.

Hence, the complexity of any sequence of u < n union operations and f find operations is O(u log u

+

f).

Potential Method

Let P(i) be the potential following the ith union operation. Let L = floor(log2 (u+1)). For each

element i, let p(j) be 0 if element j has never moved; otherwise, let p(j) equal L - (number of times

element j has moved). We define P(i) to be the sum of the p(j)s. Since no element can move more

than L times, no p(j) is ever negative. We see that P(0) = 0 and P(i) >= 0. Therefore, the potential

function satisfies Equation (3).

When two sets are united, the change in the potential function is L - (number of elements that

moved), and the actual cost of the operation equals the number of elements that moved. Therefore,

the amortized complexity of the union method is

19

actual cost + potential change

= (number of elements that moved) + (L - number of elements that moved)

=L

= O(log u)

Consequently, the complexity of any sequence of u < n union operations and f find operations is

O(u log u + f).

7. Rearranging Railroad Cars

Problem Definition

Section 9.5.3 of the text developed a solution to the railroad cars rearranging problem in which

the holding tracks operate as stacks. We shall analyze the complexity of the method

RailroadWithStacks.railroad (Program 9.10). For convenience, a simplified version of this method is

given below.

/** rearrange railroad cars beginning with the initial order

* inputOrder[1:theNumberOfCars]

* @return true if successful, false if impossible. */

public static boolean railroad(int [] inputOrder,

int theNumberOfCars, int theNumberOfTracks)

{

// code of complexity O(numberOfTracks) comes here

// rearrange cars

for (int i = 1; i <= numberOfCars; i++)

if (inputOrder[i] == nextCarToOutput)

{// send car inputOrder[i] straight out

System.out.println("Move car " + inputOrder[i] + " from input "

+ "track to output track");

nextCarToOutput++;

// output from holding tracks

while (smallestCar == nextCarToOutput)

{

20

outputFromHoldingTrack();

nextCarToOutput++;

}

}

else

// put car inputOrder[i] in a holding track

if (!putInHoldingTrack(inputOrder[i]))

return false;

return true;

}

The method outputFromHoldingTrack removes and outputs a railroad car from a holding track, and

the method putInHoldingTrack puts a car into a holding track using the rule given in Section 9.5.3.

This latter method returns the value false iff there is no holding track that satisfies the stated rule.

For purposes of our analysis, we assume that the actual complexity of both

outputFromHoldingTrack and putInHoldingTrack is O(numberOfTracks). In reality, this assumption is

valid only for the method outputFromHoldingTrack. The actual complexity of putInHoldingTrack is

O(numberOfCars+numberOfTracks) because of the cost of array doubling when adding an element

to a

stack. The amortized complexity of putInHoldingTrack is, however, O(numberOfTracks).

The complexity of the code outside of the for loop is O(numberOfTracks), and our analysis here

will focus on the complexity of the for loop.

Worst-Case Method

In examining the if statement, we see that the worst-case complexity of the else clause is

Theta(numberOfTracks). The then clause consists of the statements executed when the if conditional

(inputOrder[i] == nextCarToOutput) is true. The worst-case complexity of the then clause is

Theta(numberOfCars * numberOfTracks) because the holding tracks may have Theta(numberOfCars)

on them and we may have have to output all of these. Therefore, the worst-case complexity of the

if statement is Theta(numberOfCars * numberOfTracks).

Using the worst-case method, we arrive at O(numberOfCars2 * numberOfTracks) as the complexity

of the for loop (and of the method railroad). Although this analysis is correct, we have not obtained

a tight bound on the complexity. A tighter bound is obtained using amortized complexity

methods.

21

Aggregate Method

Since p < numberOfCars cars are put into the holding tracks in the else clause, exactly p cars are

removed from the holding tracks in the then clause. Therefore, the aggregate number of iterations

of the while loop is p, and the aggregate complexity of the while loop is O(p * numberOfTracks).

Therefore, the aggregate complexity of the for loop (and also of the method railroad) is

O(numberOfCars * numberOfTracks). Using the aggregate method, we have arrived at a tighter

bound

on

the

complexity

than

obtained

using

the

worst-case

method.

Since the aggregate complexity of the for loop is O(numberOfCars * numberOfTracks), and the

number of times the for loop is entered is numberOfCars, the amortized complexity of each

iteration of the for loop is O(numberOfCars * numberOfTracks / numberOfCars) = O(numberOfTracks) .

Accounting Method

To simplify the analysis, we use numbeOfTracks (rather than O(numberOfTracks)) as the

complexity of the methods putInHoldingTrack and outputFromHoldingTrack. We assign the then

clause an amortized complexity of 1, and the else clause is assigned an amortized complexity

equal to 2 * numberOfTracks + 2. To verify the correctness of this complexity assignment, we must

show that the sum of the amortized complexities is greater than or equal to the sum of the actual

complexities (the sum is taken over all executions of the then and else clauses). This is equivalent

to showing that Equation (3) holds.

When the else clause is entered, numberOfTracks units of its amortized complexity are used to pay

for the execution of the method putInHoldingTrack, 1 unit is used to pay for testing the value

returned by putInHoldingTrack and exceuting the return false statement (if executed). The remaining

numberOfTracks + 1 units of the amortized complexity are held as a credit by the railroad car that

is

put into a holding track.

When the then clause is entered, the single unit of amortized cost assigned to the then clause is

used to pay for the statements that print a line and increment nextCarToOutput, and for one test of

the while loop conditional. If the while loop is entered, then the numberOfTracks + 1 credits on

each car output from a holding track are used in the following way: numberOfTracks units are used

to pay for the execution of the method outputFromHoldingTrack, and the remaining 1 unit is used to

pay for the statement that increments nextCarToOutput as well as for one test of the while loop

conditional. Therefore, the amortized costs are adequate to pay for all of the actual costs.

Since the amortized cost of the then clause is 1 and that of the else clause is 2 * numberOfTracks +

2, and since the number of times each clause is executed is O(numberOfCars), the actual

22

complexity of the for loop (and hence of the entire method) is O(numberOfCars * (2 *

numberOfTracks + 2 + 1)) = O(numberOfCars * numberOfTracks) .

Potential Method

Let P(j) denote the potential following the iteration i = j of the for loop. We define, P(j) =

(numberOfTracks + 1)n, where n is the number of railroad cars in the holding tracks after the for

loop iteration with i = j completes. Also, P(0) = 0 is the initial potential (note that n = 0 initially

because the holding tracks are empty when we start the method railroad). Since this potential

function is nonnegative for all j, Equation (3) is satisfied.

Suppose that when i = j + 1, the else clause is entered. Since a car is added to the holding tracks,

P(j+1) - P(j) = numberOfTracks + 1. Therefore, the amortized complexity of the else clause is

actual cost + potential change

= (numberOfTracks + 1) + (numberOfTracks + 1)

= 2 * (numberOfTracks + 1)

Next, suppose that when i = j + 1, the then clause is entered. If the while loop iteraties p times, p

cars are removed from the holding tracks. Therefore, P(j+1) - P(j) = -p * (numberOfTracks + 1). The

actual complexity of the then clause is 1 + p * (numberOfTracks + 1). So, the amortized complexity

of the then clause is

actual cost + potential change

= 1 + p * (numberOfTracks + 1) - p * (numberOfTracks + 1)

=1

Having established that the amortized complexities of the then and if clauses are 1 and 2 *

(numberOfTracks + 1), resepctively, we conclude that the actual complexity of the for loop (and

hence of the entire method) is O(numberOfCars * numberOfTracks).

Exercises

1. What is the relationship between a correct assignment of amortized complexities to

operations and the actual complexities of these operations?

2. How are potential function, amortized complexity, and actual complexity related?

23

3. The McWidget company has been bought out by a computer manufacturer who insists

that all displays be in binary. Rework the McWidget example using a binary display.

4. Suppose that a sequence of tasks is performed. The actual complexity of the ith task is 1

when i is not a power of 2. When i is a power of 2, the complexity of the ith task is i. Use

each of the methods (a) aggregate, (b) accounting, and (c) potential function to show that

the amortized complexity of a task is O(1).

5. Imagine that a data structure is represented as an array whose initial length is 1. The data

structure operations are insert and remove. An insert takes 1 time unit except when the

number of elements in the data structure prior to the insert equals the array length n; at

this time, the insert takes n time units because we double the array length. A remove takes

1 time unit except when the number of elements left in the array is less than (array

length)/4. When the number of elements left in the array is less than (array length)/4, the

array length is halved and the remove takes (array length)/2 time units. Use each of the

methods (a) aggregate, (b) accounting, and (c) potential function to show that the

amortized com plexity of each data structure operation is O(1).

Further Reading

A survey of amortized complexity appears in the paper ``Amortized computational complexity,''

by Robert Tarjan, SIAM Journal on Algebraic and Discrete Methods, 6, 2, 306-318, 1985.

24