Purpose of Report & Summary - College of Education

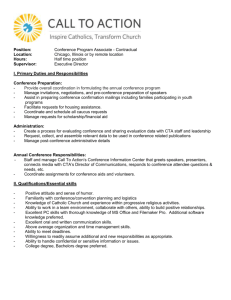
