Nitin Bahadur and Florentina Irina Popovici

advertisement

Typed MPI - A Data Type Tool for MPI

Nitin Bahadur and Florentina Irina Popovici

{ bnitin, pif } @cs.wisc.edu

Abstract

This paper describes our work on typed MPI and automatic generation of MPI datatypes. We have

developed an interface that provides type checking for MPI programs written in C. This interface passes

data between two processes only if their types are identical, however complex may they be. The sender

is informed in case if an error occurred on the receiver side when receiving the data. This way the sender

can also take corrective action on its side. Another feature of our interface is the automatic generation of

MPI datatypes, corresponding to the user datatypes. Our interface provides a set of MPI_Wrapper

functions through which the user can send and receive data. These functions are preprocessed and C

code is generated to perform the construction of MPI datatypes, type-checking and error handling. We

provide wrapper functions for blocking send and receive. Our measurements show that the overhead

introduced by our interface is very low ( compile time overhead of type inference is ~120 millisec,

automatic generation of MPI datatypes for programs with simple structures ~90 millisec).

1

Introduction

Message passing is one of the paradigms used in distributed computing and MPI is one of the

standard interfaces widely used to write message-passing programs. Some of the features MPI offers are:

portability, point-to-point communication (with several semantics for send and receive operations),

collective operations, process groups and communication domains [References 1,2,3]. The above

features offer a powerful set of operations to the programmer. However, MPI does not perform type

checking and provides a fairly crude interface for sending and receiving user defined datatypes. MPI

being a library has no knowledge of the layout of program data and it is up to the user to construct MPI

datatypes corresponding to user datatypes. This section presents a motivation for implementing type

checking and automatic MPI type generation and a summary of our objectives.

1

1.1

Motivation

MPI is a library specification for message passing. MPI offers various function calls and variables

that have to be used by a user to transfer user-defined data over the network. So, the user needs to be

aware of the various MPI data types and also needs to convert to MPI derived / struct types manually

whenever required. Multiple ( five or more ) MPI calls are required for packing a structure into an MPI

derived type before sending it over the network (see Figure 1). This manual work on part of the user is

error-prone and should be avoided. The user should be left just to concentrate on the logic of the

application.

Struct Partstruct {

int block[3] = {1, 6, 7};

int class;

MPI_Aint

double d[6];

MPI_Address( particle, disp);

char b[7]; };

MPI_Address( particle.d, disp+1);

struct Partstruct particle;

disp[3];

MPI_Address( particle.b, disp+2);

/* build datatype describing first array MPI_Type_struct( 3, block, disp, type,

entry */

&Particletype);

MPI_Datatype Particletype;

MPI_Type_commit( &Particletype);

MPI_Datatype

type[3]

=

{MPI_INT, MPI_Send( MPI_BOTTOM, 1, Particletype,

MPI_DOUBLE, MPI_CHAR};

dest, tag, comm);

Figure 1. Example of the steps the user has to perform before the user can actually send / receive a C

structure. [Reference 2]

Another inconvenience with MPI is the fact that it does not perform any type checking and a

receive operation is satisfied if the receive buffer is large enough to fit the incoming message and the

source and tag fields in the receive call match. MPI does not inform the sender if any error occurred at the

receiver end while receiving. Thus the sender keeps executing oblivious of the fact that the receiver did

2

not receive the data correctly. Again, if a user wants to keep track of such errors for safe programming,

the user needs to handle all error conditions that might occur. Also, the receiver has to send an

acknowledgement to the sender informing about the correct receipt / error condition at the receiver end.

Besides, a process may terminate if an error handler is not installed and that process receives a message

that is larger than its receive buffer.

An MPI receive call completes if the receiver buffer is large enough to accommodate the sender’s

data irrespective of the type of data sent by the sender. So it might happen that the sender sent a char

and receiver expected an integer. The successful completion of the MPI receive will lead the receiver into

believing that the receiver received an integer, which is incorrect. Thus MPI provides no form of type

checking when receiving data.

In order to send arbitrary number of data in one MPI call, the user needs to explicitly pack the

data into an MPI derived type { 2-4 MPI calls (+ number of elements * 3, in case of a structure)} before

sending it. This is often inconvenient, as the user needs to be aware of packing semantics and has to do

the packing oneself. It would have been nice if the user was able to just specify multiple data arguments

in one MPI call and MPI would do all the packing and sending of data.

1.2

Objectives

The goal of this project is to provide a simple, high-level interface for typed message passing and

automatic generation of MPI datatypes. We have made this interface as seamless as possible with the

user application. This should help the programmer to concentrate on the logic of the application and not

be concerned with the subtleties of MPI types and packing details. We provide a solution to the above

mentioned shortcomings of MPI without actually modifying MPI. We also do not make any assumptions

about the underlying hardware. This makes our code portable to any MPI supported platform. Our

interface provides the following facilities to the user:

Automatic generation of MPI datatypes

Type checking and error reporting to both sender and receiver

Seamless integration within a C program

Facility to send user-defined datatypes such as structures

3

Facility to send multiple data in one send call (allowing arbitrary grouping of data to be sent)

The outline of the rest of the paper is as follows: Section 2 presents the design of our interface, the

methodology for type checking, the interface functions and some design issues. Section 3 is dedicated to

implementation issues (preprocessing, signature generation and error handling). Results of preprocessing

and type checking overhead are presented in Section 4. We discuss related work in Section 5 and

conclude in Section 6.

2

Design

This section explains our design in detail and the philosophy for making certain design related

decisions. Section 2.1 presents an overview of the design while section 2.2 gives an overall description of

type-checking. Section 2.3 presents the interface functions provided by us for send and receive and

section 2.4 discusses other design-related aspects.

Our project provides a high-level interface, more like the remote procedure call interface

[Reference 9]. Our solution is similar to RPC with respect to automatic generation of code (stub functions)

1.User

Application

1.User

Application

2. Wrapper

call code

2. Wrapper

call code

3. Typed MPI

Runtime

support

4. MPI

Internals

3. Typed MPI

Runtime

support

4. MPI

Internals

Network Communication

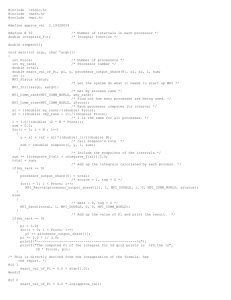

Figure 2. General overview of an application that uses our interface

for transmitting data by analyzing the user code. The user interface consists of wrapper calls, derived

from a subset of MPI calls, related to typed message passing. The user invokes our wrapper calls that will

4

be preprocessed and will be expanded to code that does the packing of data, type checking, error

handing and invocation of MPI functions for sending / receiving data. The user is not aware of the above

steps; the only parts visible to the user are the wrapper function calls of our interface. Figure 2 gives an

overview of the structure of a program that uses our interface. The grayed areas represent the two parts

of our interface. The second layer will change from application to application (this is the part that will be

automatically generated by preprocessing the user code), and the third layer represents the runtime part

of Typed MPI that is common for all applications. Both layers are discussed in the following sections.

2.1 Design Overview

In order to send / receive a typed message, the user has to use the interface function specialized

for that operation (eg. MPI_Wrapper_Send for a blocking send / MPI_Wrapper_Recv for a blocking

receive). Before the source code is compiled to an executable, we preprocess it. In this step we will

generate the code that represents the second layer (Figure 2). We use a preprocessor to parse the user

code and determine the type of the data to be sent / received. In the preprocessing step, using this type

information we construct a signature that represents the type of data going to be sent. We then generate

the code to construct the MPI data type corresponding to the data the user wants to send and to package

the signature and data into an MPI derived type and send it over the network. All these steps take place

during the preprocessing stage.

Point to be noted here is that at compile time we generate code that does construction of derived

Receiver

Construct signature

Time

Sender

Receive data (MPI_Recv)

Construct signature

Unpack data

Pack data

Compare received signature

with generated one

Send data/report error to

receiver

Send data (MPI_Send)

Receive ack. from receiver

Figure 3. Logical sequence of steps performed for completion of a

MPI_Wrapper_Send-MPI_Wrapper_Recv operation

5

Time

datatypes, type-checking and error handling1. The actual execution of the generated code (e.g. sending /

receipt of data) takes place at run-time. The runtime layer (layer 3 in Figure 2) does the type checking

and error handling.

2.2 Type Checking

In deciding if two datatypes are identical we have adopted the following algorithm. Two simple

types (primitive types: integer, long, float, double, character and their unsigned counterparts), T1 and T2

are identical if they are the same primitive type. Two arrays are considered to be identical if they have the

same base type and the same size (for example, int p[10] has base type integer and size 10). Two

structures are considered to be identical if all their members have the same type. We do not allow

passage of unions since we do not know the element of the union the user wants to send / receive and

hence cannot construct the signature.

At both the sender and the receiver end we compute a signature for all the data to be sent /

received in one call using data type information. While preprocessing the user source code when we

detect that a sender has posted a send, we determine the types of data to be sent and this type

information is used to generate a signature (sig_send). At the receiver end the situation is similar. When

the user posts a receive2, the types of the data to be received are determined by parsing the user source

and a corresponding signature is generated (sig_recv). The generated signature depends on the type and

size of the data. Thus two integer arrays with different sizes will have different signatures.

This signature (sig_send) will be sent over the network along with the actual data, and will be

used at the receiver end to check if the received and generated signature (sig_send and sig_recv) match.

If the two match, then it indicates that the types of received data and expected data match and the data

will be given to the receiver. In case of mismatch, the user will be returned an error code. In both cases a

message will be sent to the sender to inform if type matching successfully took place at the receiver end.

This message will be returned to the sender in the form of a success / error code. The protocol used for

type checking and error detection is presented in Figure 4. Note that type checking cannot be done

statically since we cannot determine at compile time which receive will be satisfied by which send.

1

The code for all the steps in Fig. 3 is generated at compile time

6

Sender

Receiver

Time

Receive Data

Send Data

Check Type

Receive Error/Success

Code

Return Error/Success Code

Figure 4. Send / Receive Protocol

A new signature is computed every time a send / receive is encountered. So, if we will send data

of the same type twice, the signature will be sent twice over the network. Another alternative would have

been to keep track of the data types encountered and to send only an identifier that will identify the

signature. Even though the last approach reduces overload, it has the drawback that all the processes

have to maintain a database of the data types sent and received. We consider that the overhead in

managing this is not justified.

2.3 Interface Functions

This section illustrates our interface functions:

Synchronous communication:

Send calls:

MPI Call: int MPI_Send (void* buf, int count, MPI_Datatype datatype, int dest, int tag,

MPI_Comm comm)

Our Call: int MPI_Wrapper_Send (int dest, int tag, MPI_Comm comm, (void*) buf1, int count1,…)

Receive calls:

MPI Call: int MPI_Recv (void* buf, int count, MPI_Datatype datatype, int source, int tag,

MPI_Comm comm, MPI_Status *status)

Our Call: int MPI_Wrapper_Recv (int source, int tag, MPI_Comm comm, MPI_Status *status,

(void*)buf1, int count1, . . .)

2

Type checking is done only if our Wrapper calls are used and not ordinary MPI calls.

7

Asynchronous communication:

Send calls:

MPI Call: int MPI_Isend (void *buf, int count, MPI_Datatype datatype, int dest, int tag,

MPI_Comm comm, MPI_Request *request);

Our Call: int MPI_Wrapper_Isend (int dest, int tag, MPI_Comm comm, MPI_Request *request,

(void*)buf1, int count1, ...)

Receive calls:

MPI Call: int MPI_Irecv (void *buf, int count, MPI_Datatype datatype, int dest, int tag,

MPI_Comm comm, MPI_Request *request)

Our Call: int MPI_Wrapper_Irecv (int source, int tag, MPI_Comm comm, MPI_Request *request,

(void*)buf1, int count1, ...)

Call for waiting for completion of an asynchronous activity

MPI Call: int MPI_Wait (MPI_Request *request, MPI_Status *status)

Our Call: int MPI_Wrapper_Wait (MPI_Request *request, MPI_Status *status)

Besides the functions for receive and send [Reference 1], MPI offers functions for building

communicators and setting topology [Reference 2,3]. The user can still use these functions in user

programs. We have only replaced functions used for sending / receiving data. As one can see from the

format of the interface function calls, the user still has to specify a communicator, a tag and a destination /

receive process number for send / receive calls. Also the definition of our Wait call is identical to that of

MPI. Thus, we have preserved the message-passing abstraction represented by MPI functions, modifying

only the part related to specifying the MPI data types and support for multiple data arguments.

2.4 Design Issues

We allow the user to pass a part of an array starting from some index, as well as dynamically

allocated memory. In case of the latter we assume that the user has allocated count units of memory. We

allow passing of partial arrays (eg. MPI_Wrapper_Send(1, 1, MPI_COMM_WORLD, &b[50], 10) where b

is declared int b[100]) because MPI programs generally involve large computations that use huge data

structures (e.g. matrices) and many times it is not required to pass the whole data type to another

8

process. Also, it may be required to receive the incoming data in some part of an array (e.g. Parallel

matrix multiplication where parts of the final result calculated by different processes are collected by a

root process). In such cases, passing the entire array is simply inefficient. We also allow sending of

dynamically allocated data structures because at times the size of the data type might not be known

statically. Our calls expect the user data in the form (void*)buf i.e. we require the user to pass the address

of the data type to be sent. This is because we allow the user to pass any kind of datatype as an

argument in the wrapper calls.

We also explored another way by which we could have designed the construction of derived

datatypes: the use of pragma directives [Reference 11] (or other constructs that will be preprocessed) to

describe the data that have to be sent. This approach would imply that the user has to learn another set

of rules for describing data types. The approach is described in more detail in Section 5.

3. Implementation

Our interface allows the user to be oblivious of all the intricacies related to construction of MPI

derived data types and also automatically generates code for type checking and error handling. A function

call from our interface looks like a regular C function call and unlike MPI calls [Reference 5], the user

does not provide any information about the type of data the user wants to send / receive.

MPI_Wrapper_Send (dest, tag, comm, status, &buffer1, count1, &buffer2, count2);

Sections 3.1 deals with the construction of signature (that is used to check the type of data),

Section 3.2 explains the preprocessing stage. In section 3.3 asynchronous communication is discussed

while and Section 3.4 deals with the error handling mechanism.

3.1

Construction of Signature

The signature consists of each type present in the data and its corresponding count. Types can

be broadly divided into two categories : primitive and complex. Primitive types are char, short, int, long,

float, double and their unsigned counterparts, complex types include arrays and structures.

For a simple variable such as int p the signature is represented as

{ START, VAR_START, INT, 1, END }

9

When we have complex types such as a structure or structure containing structures, we parse the

full complex type and the signature consists of each basic type and its count within the complex type.

Eg 1: struct example1 {

int a;

float b[5]; }

will have a signature of { START, VAR_START, STRUCT_START, TYPE_INT, 1 , TYPE_ARRAY,

TYPE_FLOAT, 5, ST_UN_END, END }

Eg 2. struct example2 {

int a;

struct example1 x;

}

will have a signature of { START, VAR_START,

STRUCT_START,

TYPE_INT, 1,

STRUCT_START,

TYPE_INT, 1,

TYPE_ARRAY,

TYPE_FLOAT, 5,

ST_UN_END,

ST_UN_END,

END }

This type of a variable is broken down recursively to generate the signature. We use a depth-first

approach to signature construction.

VAR_START

STRUCT

TYPE_INT

STRUCT

1

TYPE_INT

1

TYPE_ARRAY

TYPE_FLOAT

5

10

Figure 5. Recognition of a complex structure

If the user passes two data arguments ( int and char[5] ) then the signature would look like

START, VAR_START,

TYPE_INT, 1

VAR_START,

TYPE_ARRAY

TYPE_CHAR, 5

END

One can pass a char *p by specifying a size of 5, which means that the user wants to send 5

chars i.e MPI_Wrapper_Send ( destn, tag, MPI_COMM_WORLD, p, 5) is allowed.

But consider,

Eg 3 struct example3 {

char *p;

float q;

} st;

MPI_Wrapper_Send (dest, tag, MPI_COMM_WORLD, &st, 1);

In this case, we do not know the size of p, so we do not allow the user to pass this structure. If the

user wants to still pass the structure, we provide an alternative way.

MPI_Wrapper_Send (dest, tag, MPI_COMM_WORLD, st.p, 5, &st.q, 1);

The user needs to specify all the elements of the structure in this case. This may be tedious for

the user but this allows us to do strict type checking and size determination.

Thus we encapsulate the entire type of the variable in our signature and this helps us in

performing strict type checking. As mentioned before, we do not allow the user to pass a union since it is

ambiguous as to which element of the union the user wants to send / receive.

In some cases, the size of the signature can become big (such as sending of multiple datatypes

in one call). This may cause an increase in the overhead during sending data and signature over the

network. To deal with this situation we hash the signature to a 20 bytes string (using RIPEMD-160 hash

function [Reference 12]). The use of this hash function will be triggered by a command-line option during

11

preprocessing of the code. Thus the user can specify if the signature should be hashed or not. In the

default case we do not hash the signature.

3.2 Preprocessing

The C code provided by the user is first compiled, to be sure that the user eliminates all errors.

After that it is fed to our preprocessor for generation of the stub (wrapper) functions. The modified source

code that results after preprocessing and the generated MPI_Wrapper functions will be compiled and

linked, resulting in the final executable. The user is not aware of these steps and they appear as a simple

compile step3. The modified source code that results after preprocessing is stored in a different file

leaving the user’s original source code unmodified. To develop the parser we have used the Lex

specification and Yacc grammar for C grammar posted on the net at [Reference 6].

Our preprocessor parses the C source code, gathering information about all type definitions and

variable definitions. When we encounter a function call that belongs to our interface we determine the

type of the actual parameters used in the function call, and we call the appropriate function of our library

to automatically generate the function that will be used at run-time to send data.

In order to provide type checking and automatic generation of MPI data types we need to

preprocess the user source code, to find out the types of the data that the user wants to send. The

preprocessor keeps track of the types of various variables within a scope. For this we build a symbol table

[Reference 8].

To gather information about data types and variables we also have to parse all the files included

by the user in the user C code4. All defined types and variables are stored in a hash table [Reference 8],

for fast access and determination of type of datum. The preprocessing time increases as the number of

include files increase.

For every MPI_Wrapper call we generate a call to a different function. This function is generated

during this preprocessing stage based on the type of data the user wants to send / receive. Since we

have to call different functions every time, we replace the actual call from the code source with a new

function call every time. The name of each new function is made unique by using a unique numeric

sequence (based on time).

3

The linking of the wrapper files is transparent and they do not need to be linked explicitly.

12

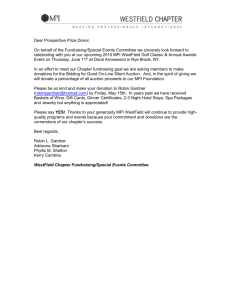

For example (see Figure 6.), the call MPI_Wrapper_Send (dest, tag, comm, &a, count1, &b,

count2) in user source code will be replaced by: MPI_Wrapper_Send 79205397130821 (dest, tag, comm,

status, &a, count1, &b, count2); and the body of the function will be generated in a separate file.

3.3 Asynchronous Communication

One can improve performance on many systems by overlapping communication and computation

non-blocking communication. A non-blocking send call initiates the send operation, but does not complete

it. Similarly, a non-blocking

receive call initiates the receive operation, but does not complete it. A

separate complete call ( MPI_Wait ) is needed to complete the communication [Reference 2]. A handle is

associated with each asynchronous operation and this is used in the completion of that call. We keep

track of handles and associated calls (send/receive) by constructing a mapping of these at run-time.

/* Initial user source code

*/

....

MPI_Wrapper_Send (dest,

tag, comm, &a, 1, &b, 2);

....

MPI_Wrapper_Recv (src,

tag, comm, &status, &a,

1, &b, 2);

...

Preprocessor

and generator

/* Modified user source code */

....

Compilation

MPI_Wrapper_Send79205397130821

(dest, tag, comm, &a, 1, &b, 2);

....

MPI_Wrapper_Recv79205397345131(

src, tag, comm, &status, &a, 1, &b,

2);

...

/* generated file 1 */

int

MPI_Wrapper_Send79205397130821

(int, int, MPI_Comm, void *, int, void *

int){

.....

/* signature data packaging, error

reporting*/

}

/* generated file 2 */

int

MPI_Wrapper_Recv792053971345131

(int, int, MPI_Comm, MPI_Status *, void

*, int, void *, int){

.....

/* signature data packaging, error

reporting*/

}

4

We analyze the code produced by running a C preprocessor (e.g. cpp) on user source code.

Figure 6. Files resulting after preprocessing and source code generation

13

Executable

3.4 Error Handling

We do not want the user code to terminate on MPI call failures. Failures can occur due to various

reasons. One of the cases in which failure occurs at the receiver end is if the sender sends more data

than what the receiver expects. In this case the receiver would terminate if the user had not installed an

appropriate error handler. On the other hand if the sender had sent less data than what the user

expected, the receive would have completed with undefined data in the receive buffer. To deal with

receiver termination, when a message comes we use MPI_Probe / MPI_Iprobe [Reference 2,5] to probe

the incoming message and determine the size of incoming data using MPI_Get_count [Reference 2,5]

before receiving it. If the size is more than what the receiver has posted a receive for, (larger size than

expected directly implies that the message type is incorrect) we install our own error handler to prevent

the process from termination. We also save the previous installed error handler and restore it after

receiving the data. In case of any kind of error while receiving the message (such as signature mismatch,

less / more data than expected ) we send back an error code to the sender. Otherwise we send back a

success code. Thus the sender knows for sure whether or not the receiver received correctly what the

receiver was expecting.

4

Performance Evaluation

We evaluated the overhead of preprocessing and runtime type checking. The measurements

were performed on 300 MHz Sun Ultra10Sparc machines having 256MB RAM. The results of the tests

are presented below.

Ping Pong

This measurement involved sending data to one node and receiving the same amount from it

back. This was done for various data sizes ( ranging from 450 bytes to 22800 bytes) for 50000 sends and

receives using both blocking and non-blocking modes of communication. The data sent was a C structure

of the following form

struct cool_buf {

char a[ ];

int b[ ];

float c[ ]; }

14

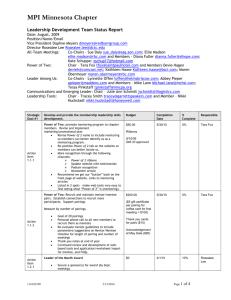

The graphs below (Figure 7 and Figure 8) shows that our MPI_Wrapper calls are almost as

efficient as ordinary MPI calls. The overhead due to type checking gets suppressed as more data is

transmitted.

Ping Pong for blocking communication

Time in microsec

10000

1000

Ordinary MPI

100

Wrapper Calls

10

1

50

100 200

400

800 1600 3200

Data size

Figure 7. Ping Pong for blocking communication

Ping Pong for non-blocking communication

Time in microsec

10000

1000

Ordinary MPI

100

Wrapper Calls

10

1

100

200

400

800

1600

3200

Data size

Figure 8. Ping Pong for non blocking communication

15

Preprocessing Overhead

We measured the time to preprocess the user C-source program. We parse the output of the C

preprocessor on feeding it the user C source. This step expands all the include files and is necessary to

recognize all types utilized in the user-program. The size of the file containing the expanded include files

can be quite big (e.g. 800 lines of code for a simple MPI program that includes <stdio.h>, <mpi.h> and

<string.h>). Table 1 presents the time to preprocess the user-file to synthesize the type of data and the

time to generate the code for signature generation, data packaging and data transfer.

Operation

Time (in

millisec)

Preprocessing (without call to generate the code)

120

Generation of code for Send / Receive of a primitive data

80

Generation of code for Send / Receive of a structure

90

Table 1 Time to preprocess and generate code corresponding to Wrapper calls

5

Related Work

Work on construction of derived datatypes has been done by Werner Augustin at the University of

Edinburgh, UK. (described in Reference 11). He constructed a compiler level tool and a library for

construction of derived MPI types. The data types to be sent / received are described using special

constructs inserted in the C code. The compiler-tool extracted the data type from the source code and the

library was used to generate the MPI derived type. However, he did not focus on type checking. Our

functions that generate the derived type also generate the code for type checking (see Appendix I and II).

Werner’s tool modifies the user source code to quite some extent by introducing new variables before the

function body. We choose to keep modifications to user code minimum for simplicity and to speed up

parsing.

16

6

Conclusion and Future Extensions

The main aim of this project is to remove the burden of packing and unpacking of data by the

programmer during communication using MPI and to provide a tool for development of a type-safe MPI

application. We provide an interface which does all this work and leaves the programmer to the deal with

the logic of the application. Our interface offers support for synchronous and asynchronous data transfer

between processes. Extensions related to group communication and vector operations can be added to

our current work. We believe that these extensions are not difficult to integrate in our current

implementation. We need to research and experiment more with hash functions to confirm their viability

to this project and their efficiency (collision rate).

Our limitations include inability to pass arrays of structures. Complex multiple MPI derived types

need to be constructed to pass arrays of structures along with the signature. We also do not handle

expressions in MPI_Wrapper function calls. An expression tree can be constructed to handle this.

We are able to recognize and send most kinds of C user defined datatypes and we recognize

typedefs. Given this base and the encouraging performance results we feel that extending our project to

all communication related MPI calls would be extremely beneficial.

Acknowledgements

We acknowledge the use of test programs for mpich written by the implementers. These test

programs have been taken from the MPI repository at NSF Engineering Research Center and

Department of Computer Science, Mississippi State University [Reference 4].

ftp://ftp.mcs.anl.gov/pub/mpi/mpich.tar.Z

References:

1. Marc Snir, Steve Otto, et al., MPI: The Complete Reference, 1996

2. www.mpi-forum.org/docs/mpi-11.html/, MPI: A Message Passing Interface Standard, June 1995.

3. http://www.tc.cornell.edu/UserDoc/Software/PTools/mpi/, (Cornell Theory Center), MPI: Message

Passing Interface Library

17

4. http://www.ERC.MsState.Edu/labs/hpcl/projects/mpi/, (Mississippi State Univ.), Message Passing

Interface

5. William Gropp and Ewing Lusk, User’s Guide for mpich, A portable implementation of MPI, 1996.

6. Jeff Lee, “ANSI C Grammar: Lex specification and Yacc grammar”, April 1985

7. Brian W. Kernighan and Dennis M. Ritchie, The C Programming Language, 1988

8. Alfred V. Aho, Ravi Sethi and Jeffrey D. Ullman, Compilers: Principles, Techniques and Tools, 1986

9. A.D.Birrell and B.J.Nelson, Implementing Remote Procedure Calls, ACM Transactions on Computer

Science, Vol. 2, No. 1, Feb. 1984, pp. 39-59

10. Sun Microsystems, XDR: External Data Representation Standard, June 1987, Network Working

Group, Request for Comments: 1014.

11. Werner Augustin, MPI Datatypes Toolset, Edinburgh Parallel Computing Centre, 1996.

12. Hans Dobbertin, Antoon Bosselaers and Bart Preneel, The hash function: RIPEMD-160,

http://www.esat.kuleuven.ac.be/~bosselae/ripemd160.html

18

Appendix I : Code Generated for a Synchronous Send

/* synchronous send of strict example {

int a;

float b;

} */

int MPI_Wrapper_Send_79205397130821 (int destn, int tag, MPI_Comm comm, void *var1, int count1)

{

MPI_Datatype data, *type;

signature[6] = 1;

MPI_Aint *disp;

signature[7] = 10;

int *blocklen;

signature[8] = 1;

int result, result2, recv_code;

signature[9] = 104;

unsigned char *signature;

signature[10] = 200;

MPI_Status status_4294967294;

type[0] = 2;

type[1] = 6;

struct cool_buf1 { int x;

type[2] = 10;

float y;

blocklen[0] = 11;

} * buf1 = var1;

blocklen[1] = 1;

blocklen[2] = 1;

int sig_pos = 11;

int num_data = 3;

/* constuction of MPI derived type for the struct */

MPI_Address(signature, disp);

type = (MPI_Datatype*)malloc(num_data);

MPI_Address(&buf1->x, disp + 1);

blocklen = (int*)malloc(num_data);

MPI_Address(&buf1->y, disp + 2);

disp = (MPI_Aint*)malloc(num_data);

signature = (unsigned char*)malloc(sizeof(unsigned

MPI_Type_struct(num_data, blocklen, disp, type,

char)*sig_pos);

&data);

MPI_Type_commit(&data);

if((type==0)||(blocklen==0)||(disp==0)||(signature==0))

{

/* send data */

printf("Unable to allocate memory\n");

result = MPI_Send(MPI_BOTTOM, 1, data, destn,

exit(1);

tag, comm);

}

if(result != MPI_SUCCESS) return result;

/* constructed signature sig_send */

/* receive success/error code from receiver */

signature[0] = 100;

result2 = MPI_Recv(&recv_code, 1, MPI_INT, destn,

signature[1] = 101;

tag, comm, &status_4294967294);

signature[2] = 26;

if(result2 != MPI_SUCCESS) return result2;

signature[3] = 20;

return recv_code;

signature[4] = 1;

signature[5] = 6;

}

19

Appendix II : Code Generated for a Synchronous Receive

/* synchronous recv of struct example {

int a;

float b;

} */

int MPI_Wrapper_Recv_79205397180821 (int source, int tag, MPI_Comm comm, void *var1, int count1, MPI_Status

*status_1)

/* constructed signature sig_recv */

{

MPI_Datatype data, *type;

signature[0] = 100;

MPI_Aint *disp;

signature[1] = 101;

MPI_Errhandler old_handler;

signature[2] = 26;

int *blocklen;

signature[3] = 20;

int result, result2, recv_code, count, total_size;

signature[4] = 1;

unsigned char *signature, *recd_signature;

signature[5] = 6;

char *tmp_data;

signature[6] = 1;

struct cool_buf1 { int x;

signature[7] = 10;

float y;

signature[8] = 1;

} * buf1 = var1;

signature[9] = 104;

signature[10] = 200;

int sig_pos = 11;

type[0] = 2;

int num_data = 3;

type[1] = 6;

type[2] = 10;

type = (MPI_Datatype*)malloc(num_data);

blocklen[0] = 11;

blocklen = (int*)malloc(num_data);

blocklen[1] = 1;

disp = (MPI_Aint*)malloc(num_data);

blocklen[2] = 1;

signature = (unsigned

char*)malloc(sizeof(unsigned)*sig_pos);

total_size = 0;

recd_signature = (unsigned

total_size += blocklen[0]*1;

char*)malloc(sizeof(unsigned char)*sig_pos);

total_size += blocklen[1]*4;

total_size += blocklen[2]*8;

if((type==0)||(blocklen==0)||(disp==0)||(signature==0)||(r

/* constuction of MPI derived type for the struct */

ecd_signature==0))

MPI_Address(recd_signature, disp);

{

MPI_Address(&buf1->x, disp + 1);

printf("Unable to allocate memory\n");

MPI_Address(&buf1->y, disp + 2);

exit(1);

}

MPI_Type_struct(num_data, blocklen, disp, type,

&data);

MPI_Type_commit(&data);

20

/* check size of incoming data */

MPI_Probe(source, tag, comm, status_1);

MPI_Get_Count(status_1, MPI_BYTE, &count);

/* set up errhandler if data cannot be received at all */

/* return error if data size is greater than expected */

if(count > total_size)

{

if((tmp_data = (char*)malloc(count)) == NULL)

{

MPI_Errhandler_get(comm, &old_handler);

MPI_Errhandler_set(comm, MPI_ERRORS_RETURN);

MPI_Recv(MPI_BOTTOM, 1, data, source, tag, comm, status_1);

MPI_Errhandler_set(comm, old_handler);

}

else

MPI_Recv(tmp_data, count, MPI_BYTE, source, tag, comm, status_1);

recv_code = FAILURE;

MPI_Send(&recv_code, 1, MPI_INT, source, tag, comm);

}

/* receive data */

else

{

result2 = MPI_Recv(MPI_BOTTOM, 1, data, source, tag, comm, status_1);

if(result2 != MPI_SUCCESS) return result2;

/* compare signatures */

recv_code = check_signature(signature, recd_signature);

if(recv_code == MPI_SUCCESS) printf("Signatures match\n");

else printf("Signatures do not match\n");

result2 = MPI_Send(&recv_code, 1, MPI_INT, source, tag, comm);

if(recv_code != MPI_SUCCESS) return recv_code;

}

}

21

22