ALPHABETICS

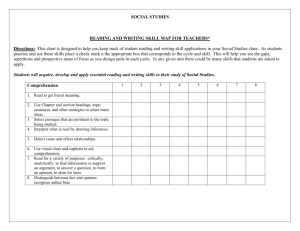

DIAGNOSTIC READING ASSESSMENT (DRA)
Challenges (Participant or Trainer) and Possible Solutions

Challenge: How do we maintain consistency in testing and results?
Possible solutions:
1. Be sure that all testers (STAR administrators or teachers, educational assistants, and volunteers) have participated in DRA training AND observed several assessments from beginning to end.
2. Consider specialization: have one tester always administer alphabetics, another always administer fluency, and so on. Some programs set up DRA stations where students move from one test and tester to the next.
3. Bring up DRA questions or problems at your STAR Team meetings, and decide together how you will ensure consistency among testers.
4. Accept that there are gray areas in DRA. Even so, the results give us much more information about students' reading strengths and weaknesses than CASAS or TABE Reading scores alone.
5. Remember that when Mastery or Instructional Levels are off, they are usually off by only 1-2 grade levels. The STAR teacher can always adjust grouping and level selection.

Challenge: Is alphabetics assessment about word reading or pronunciation?
Possible solution: The purpose of alphabetics assessment is to measure word recognition in isolation in order to determine who needs alphabetics instruction. If students can't read or pronounce words quickly and accurately, this affects their fluency, vocabulary, and comprehension. Alphabetics instruction helps them improve decoding and pronunciation so that they can focus on understanding the meaning of words and text.

Challenge: There is so much subjectivity in the fluency assessment and fluency scale!
Possible solutions:
1. A more objective measure, such as words correct per minute, is not recommended for adults. There are no adult norms for scoring, and adults tend to get stressed by timed readings and sacrifice accuracy and prosody for rate (speed).
2. Scoring with the fluency scale does get easier. As you become more familiar with the passages, you can concentrate more on judging accuracy, rate, and prosody.

Challenge: Some BADER higher-level readings seem easier than lower-level readings.
Possible solutions:
1. Each level offers three passages, identified by interest: Child (C), Child/Adult (C/A), and Adult (A). Select the more consistent passages for fluency and comprehension assessment, but do not use the same passage for both: pick one for fluency and a different one for comprehension.
2. Some passages have a narrative (fiction) structure and others an expository (non-fiction) structure. Our students are typically more familiar with narrative text, so expository passages may seem harder to them (and to us).

Challenge: Some of my students are uncomfortable reading aloud for fluency assessment.
Possible solution: Start fluency assessment with a passage 1-2 levels below the Alphabetics Mastery Level. This can serve as a warm-up before the passages that will be scored, although it adds assessment time.

Developed by STAR 11 Participants and Trainers

Challenge: I get very confused by the purposes of the Fluency Mastery Level, the Instructional Level for Rate/Prosody, and the Instructional Level for Accuracy-in-Context.
Possible solution: Remember that the Fluency Mastery Level determines who needs to participate in fluency instruction (anyone scoring below Level 8+). The Instructional Level for Accuracy determines who needs to improve alphabetics-in-context; at this level students will not know all of the words, and the teacher models accuracy in decoding. The Instructional Level for Rate/Prosody determines who needs to improve speed and phrasing; at this level students will know most or all of the words, and the teacher models rate and prosody.

Challenge: During vocabulary assessment, some of my students use the word in their definition.
Possible solution: Try using the prompt "Tell me more" to elicit a definition in other words. The definition does not have to be dictionary quality, but it should establish familiarity with the word's meaning.
Challenge: During vocabulary assessment, some of my students use the words in sentences.
Possible solutions:
1. Try repeating the prompt "Tell me what ___ means" to elicit a meaning in addition to a sentence.
2. Students can either use the word in a sentence OR give a definition (see above).
3. If the word is used correctly in context, count it as correct.

Challenge: Sometimes a student asks to see the words during vocabulary assessment.
Possible solutions:
1. According to research, vocabulary is best assessed with tests that require no reading. If students have to read the words, weaknesses in alphabetics may lower the vocabulary score.
2. The Word Meaning Test is a measure of expressive vocabulary. Showing the words changes its purpose: it becomes a measure of reading vocabulary.

Challenge: Sometimes my prior knowledge of students influences my comprehension scoring.
Possible solutions:
1. Adhere to the answer key from the Examiner's Manual or scoring form.
2. Use testers who don't know the students and therefore tend to be more objective.
3. Remember that the purpose of comprehension assessment is to find out who will benefit from comprehension strategy instruction. Scoring too generously may exclude students from instruction that would benefit them.

Challenge: How much can students look back at the passages during comprehension assessment?
Possible solution: Decide as a STAR Assessment Team whether students can look back at passages for detail answers (names, dates, places), and for how long. Make sure they are skimming or scanning, not rereading. Comprehension questioning and scoring are based on a single reading of the passage.

Challenge: I have trouble judging students' comprehension answers as correct or incorrect when they stray from the BADER answer key.
Possible solutions:
1. Students' answers do not have to be "by the BADER," but they should indicate understanding of the passage. Straying from the suggested answers or rambling usually indicates prior knowledge of the topic rather than comprehension of the passage.
2. Comprehension Mastery means students can answer most of the questions quickly and correctly. If you are struggling to score question by question, ask yourself: "Are they able to answer most of the questions (>75%: mastery) or just some of the questions (<75%: not mastery) at this level?"