
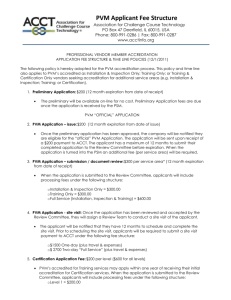


A Design and an Implementation of Parallel Batch-mode Neural Networks on Parallel Virtual Machines

Adang Suwandi Ahmad (1, 2), Arief Zulianto (1)
(1) Intelligent System Research Group, Department of Electrical Engineering, Institut Teknologi Bandung
E-mail: asa@ISRG.ITB.ac.id, madzul@ITB.ac.id

Abstract

The computation of an Artificial Neural Network (ANN) is inherently parallel and is best carried out on parallel processors, but such hardware is very expensive. To reduce the cost, this paper proposes a method that emulates the ANN computation in a parallel mode. The method implements a virtual parallel machine from several sequential machines operated concurrently. It uses UNIX operating system capabilities to manage inter-processor communication and other computation resources. The method develops a sequential feed-forward algorithm into a parallel algorithm on virtual parallel machines based on PVM, developed by Oak Ridge National Laboratory, and adapts it to the ITB network environment.

KEYWORDS: artificial neural network; backpropagation; parallel algorithm; PVM

1. Introduction

Artificial Neural Networks (neural-nets) have successfully solved many real-world problems, but training these networks is time-consuming. One way to reduce the training time is to implement parallelism. However, there are many constraints on implementing parallelism. One major constraint is the unavailability of parallel processing architectures, which makes parallelism expensive. Another major constraint is the difficulty of porting code among the available architectures.

These constraints have limited research opportunities but, fortunately, this is changing. Advances in hardware and software are slowly allowing us to tap into the parallel programming paradigm, for example through the availability of PVM (Parallel Virtual Machine) [5]. PVM is a mature parallel library consisting of macros and subroutines for programming a variety of parallel machines. It also supports combining a number of sequential machines into a large virtual parallel architecture.

2. Conceptual Overview

2.1. Artificial Neural Network

An Artificial Neural Network (ANN) is a computation model that simulates a real biological nervous system. Based on its learning process, an ANN is categorized into:

1. Supervised learning, in which the training data set consists of many pairs of input-output training patterns.
2. Unsupervised learning, in which the training data set consists only of input training patterns, so the network learns to adapt based on the experience collected from previous training patterns.

A well-known and widely used training algorithm in the supervised learning category is the backpropagation (BP) algorithm. The algorithm has two learning phases. During the first phase, a training pattern is presented to the input and then propagated forward through the network. The output value $o_r$ of neuron $r$ is computed using equations (1) and (2).

$$ o_q = f\Big(\sum_p w_{qp}\, o_p\Big) \tag{1} $$

where $o_q$ is the output activation of each neuron in the hidden layer, $w_{qp}$ is the connection weight between the p-th input neuron and the q-th hidden neuron, and $o_p$ is the p-th component of the input feature vector.

$$ o_r = f\Big(\sum_q w_{rq}\, o_q\Big) \tag{2} $$

where $o_r$ is the output activation of the r-th output neuron, $w_{rq}$ is the connection weight between the q-th hidden neuron and the r-th output neuron, $o_q$ is the hidden layer activation, and $f$ is the activation transfer function.

The output is compared with the target output values, resulting in an error value $\delta_r$ for each output neuron. The second phase is a backward pass, in which the error signal is propagated from the output layer back toward the input layer. The $\delta$'s are computed recursively and then used as the basis for the weight changes.

$$ \delta_r = o_r (1 - o_r)(t_r - o_r) \tag{3} $$

where $o_r$ is the activation of the r-th output neuron and $t_r$ is the target value associated with the feature vector.

$$ \delta_q = o_q (1 - o_q) \sum_r w_{rq}\, \delta_r \tag{4} $$

where $\delta_r$ is the error of the r-th output neuron and $w_{rq}$ is the connection weight between the q-th hidden layer neuron and the r-th output layer neuron.

The gradients for the input-to-hidden and hidden-to-output weights are computed using equations (5) and (6).

$$ \nabla_{pq} = \delta_q\, o_p \tag{5} $$

where $\delta_q$ is the hidden layer's $\delta$ for the q-th neuron and $o_p$ is the p-th component of the input feature vector.

$$ \nabla_{rq} = \delta_r\, o_q \tag{6} $$

where $\delta_r$ is the output layer's $\delta$ for the r-th neuron and $o_q$ is the activation of the q-th hidden neuron.

2.2. Parallel Processing

A variety of computer taxonomies exist. Flynn's taxonomy classifies computer architectures based on the number of instruction and data streams [6], [7]. Its categories are:

1. SISD (Single Instruction Single Data stream)
2. SIMD (Single Instruction Multiple Data stream)
3. MISD (Multiple Instruction Single Data stream)
4. MIMD (Multiple Instruction Multiple Data stream)

Furthermore, based on the level of interaction among processors, MIMD systems are divided into two sub-categories: tightly coupled architectures and loosely coupled architectures. These architectural models produce two different programming paradigms:

1. Shared memory programming. It uses constructions such as semaphores, monitors, and buffers. It is normally associated with tightly coupled systems.
2. Message passing programming. It uses a conventional message-passing method to communicate and synchronize. It is normally associated with loosely coupled systems.

The performance measure of a parallel algorithm is speedup. Speedup is defined as the ratio of the elapsed time of the sequential algorithm on a single processor to the elapsed time of the parallel algorithm on m processors for the same task.

One factor that affects the performance of a parallel algorithm is granularity. Granularity is the average process size, measured in the number of instructions executed. In most parallel computations the overhead associated with communication and synchronization is relatively high, so it is advantageous to have coarse granularity, i.e., a high computation-to-communication ratio (a large number of computation instructions between communication instructions).

2.3. Possibilities for Parallelization

There are many methods for parallelization [9], such as:

1. mapping every node to a processor
2. dividing the weight matrix amongst the processors
3. placing a copy of the entire network on each processor

Mapping every node to a processor makes the parallel machine a physical model of the network. This is impractical for large networks, except perhaps on massively parallel architectures, since the number of nodes (and even the number of nodes per layer) can be significantly greater than the number of processors.

Dividing the weight matrix amongst the processors allows an appropriate segment of the input vector to be operated on at any particular time. This approach is feasible on a SIMD, shared-memory architecture that uses a data-parallel programming paradigm.

Placing a copy of the entire network on each processor allows a full sequential training of the network on a portion of the training set. The training results are then averaged to give the overall attributes of the network, i.e., the final weights.

Figure 1. Placing a copy of the entire network on each processor

In acquiring the final weights, this method achieves a linear speedup. It could be pushed beyond a linear speedup by using the error terms collected from the feed-forward passes for a single backpropagation step. This procedure is known as batch updating of the weights. However, the more aggressively this potential speedup is exploited, the further the method strays from a true parallelization of the sequential method, and the more it tends to taint the results.

2.4. PVM (Parallel Virtual Machine)

Although the UNIX operating system provides routines for inter-process communication, these routines are low-level and therefore difficult to use. To overcome this difficulty, PVM (Parallel Virtual Machine) [5] combines these routines into a collection of high-level subroutines that allow users to communicate between processes, synchronize processes, and spawn and kill processes on various machines using a message-passing construction. To be used, these routines are collected in a user library that is linked with the user's source code. The high-level subroutines run on a wide range of architectures; consequently, different architectures can be used concurrently to solve a single problem. PVM can therefore solve a huge computational problem by using the aggregate power of many computers of different architectures.

PVM runs a daemon on each node (host) of the virtual machine. The daemons, which are controlled by a user process, are responsible for spawning tasks and for communication and synchronization between tasks.

The daemons communicate with each other using UDP (User Datagram Protocol) sockets. A reliable datagram delivery service is needed on top of UDP to ensure delivery, whereas TCP (Transmission Control Protocol) provides reliable stream delivery between hosts. TCP sockets are used for communication between a daemon and its local PVM tasks, and between tasks on the same host or on different hosts when PVM is advised to do so. Communication between two tasks on different hosts normally comprises the sending task talking to its local daemon using TCP, the daemons communicating with each other using UDP, and finally the remote daemon delivering the message to the destination task using TCP.

Direct task-to-task communication is also possible. It is achieved by advising PVM through a special function call in the source code, after which the communication uses TCP sockets between the tasks. This direct mode ought to be faster, but it limits scalability because the number of sockets available per task is limited by the operating system.

3. Implementation

3.1. PVM Implementation

The main steps to implement PVM in a live network are:

1. Selecting hosts, taking into account each host's CPU resource utilization.
2. Porting the source code to each host's architecture.

The common problems in using PVM are:

1. Varying CPU resource utilization of PVM nodes. PVM provides no dynamic load balancing, so a bottleneck occurs when one machine is severely loaded compared with the others in the virtual machine.
2. Varying network load. High network utilization decreases performance, because communication time increases when less bandwidth is available to users.

3.2. Batch-Mode Training Algorithm

Instead of the conventional pattern-mode training method, we use a batch-mode training method to update the weights. The two methods differ in the number of training examples propagated through the net before the weights are updated. The pattern-mode method propagates only one training pattern before each update, while the batch-mode method propagates the whole training set.

Figure 2. Comparison between batch-mode training and online-mode training

As mentioned above, the overhead of communication and synchronization is relatively high in most parallel computations. For that reason, the batch-mode method, which communicates once per pass over the training set rather than once per pattern, performs better than the pattern-mode method.

3.3. A Weight Updating Scheme

In the weight updating scheme of the batch-mode method, the forward pass is carried out concurrently in the PVM slave processes to obtain the training errors, and the master process then uses these errors to adjust the neural-net weights (Figure 3).

Figure 3. Parallelizing scheme using batch-updating (flowchart labels: Start; Initialize architecture; Initialize initial weights; Initialize training set; Forward pass, several in parallel; Backpropagation; error <= max_error, Y/N; Update weights; Stop)

3.4. Putting it Together

The implementation of the parallel algorithm on PVM is based on a standard master-slave model. The complete parallel algorithm using batch-mode updating (Figure 4) is as follows:

1. The master initializes the neural-net architecture, its weights, and the training set.
2. The master spawns the slaves and sends a copy of the neural-net architecture and its initial weight values to each slave.
3. The master sends each slave a part of the training set.
4. The master waits for the slaves' output. Each slave concurrently propagates its part of the training set forward through the net and returns its output values to the master. The master accumulates all partial values.
5. The master propagates the errors backward and adapts the weight values.
6. The algorithm is repeated from step 3 until the weights converge.
7. The master kills the slaves.

Figure 4. Implementation of parallel batch-updating on PVM (flowchart labels: Spawn slaves; Initialize architecture; Initialize initial weights; Initialize training set; Initialize with parameters from master, on each slave; Forward pass, on each slave; PVM; Backpropagation; error <= max_error, Y/N; Update weights)

4. Results

Figure 5 illustrates the PVM experiment. It depicts a parallel virtual machine that consists of heterogeneous computers (various architectures and operating systems) connected in a network.

Figure 5. Parallel Virtual Machine

The speedup curve is shown in Figure 6. The speedup is a function of:

1. the number of slaves
2. the number of iterations
3. the size of the training set

Figure 6. Speedup vs. number of slaves

5. Concluding Remarks

1. PVM can be implemented in a live network. However, it requires consideration of resource sharing (in a multi-user environment), security, and load balancing.
2. The parallel batch-mode neural-net on PVM performs better than a sequential implementation.
3. The optimum performance of the batch-mode method is obtained for a neural-net with a sufficiently large training set, since it then has a larger grain size.

Acknowledgment

We are indebted to many people in the Intelligent System Research Group (especially Mr. Eto Sanjaya) for their support of this research. We particularly thank Ms. Lanny Panjaitan, Miss Elvayandri, Mr. Erick Perkasa, and Miss Sofie for their assistance in the preparation of this paper.

References

[1] Freeman, J.A., and Skapura, D.M., "Neural Networks: Algorithms, Applications, and Programming Techniques", Addison Wesley, 1991
[2] http://www.GNU.org/, "GNU's Not UNIX", The GNU Project and The Free Software Foundation (FSF), Inc., Boston, August 27, 1998
[3] Hunt, Craig, "TCP/IP Network Administration", O'Reilly and Associates, Second Edition, December 1997
[4] Geist, A., Beguelin, A., Dongarra, J., Jiang, W., Manchek, R., and Sunderam, V., "PVM: Parallel Virtual Machine: A Users' Guide and Tutorial for Networked Parallel Computing", Oak Ridge National Laboratory, May 1994
[5] Coetzee, L., "Parallel Approaches to Training Feedforward Neural Nets", A Thesis Submitted in Partial Fulfillment for the Degree of Philosophiae Doctor (Engineering), Faculty of Engineering, University of Pretoria, February 1996
[6] Lester, Bruce P., "The Art of Parallel Programming", Prentice Hall International Editions, New Jersey, 1993
[7] Hwang, Kai, "Advanced Computer Architecture: Parallelism, Scalability and Programmability", McGraw-Hill International Editions, New York, 1985
[8] Purbasari, Ayi, "Studi dan Implementasi Adaptasi Metoda Eliminasi Gauss Parallel Berbantukan Simulator Multipascal", 2:1-39, Tugas Akhir Jurusan Teknik Informatika, Institut Teknologi Bandung, 1997
[9] http://www.mines.colorado.edu/students/f/fhood/pllproj/main.htm, "Parallelization of Backpropagation Neural Network Training for Hazardous Waste Detection", Colorado School of Mines, December 12, 1995
[10] http://www.tc.cornell.edu/Edu/Talks/PVM/Basics/, "Basics of PVM Programming", Cornell Theory Center, August 5, 1996
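As an illustration of the master-slave batch-mode scheme of Section 3.4, the following is a minimal Python sketch, not the implementation used in the paper. Several points are assumptions made only for this example: Python threads stand in for PVM slave tasks, the transfer function f is taken to be the logistic sigmoid, a constant input of 1.0 plays the role of a bias, and each slave returns the partial gradient sums of equations (3) to (6) together with its squared error as its "partial values". The names `forward`, `slave`, `train`, `eta`, `DATA`, `W_HID`, and `W_OUT` are hypothetical.

```python
import copy
import math
from concurrent.futures import ThreadPoolExecutor


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(w_hid, w_out, x):
    """Equations (1) and (2): hidden and output activations for one pattern."""
    o_q = [sigmoid(sum(w * v for w, v in zip(row, x))) for row in w_hid]
    o_r = [sigmoid(sum(w * v for w, v in zip(row, o_q))) for row in w_out]
    return o_q, o_r


def slave(w_hid, w_out, chunk):
    """Accumulate gradient terms and squared error over one part of the set."""
    g_hid = [[0.0] * len(row) for row in w_hid]
    g_out = [[0.0] * len(row) for row in w_out]
    sq_err = 0.0
    for x, t in chunk:
        o_q, o_r = forward(w_hid, w_out, x)
        # Equation (3): output-layer deltas.
        d_r = [o * (1 - o) * (tr - o) for o, tr in zip(o_r, t)]
        # Equation (4): hidden-layer deltas.
        d_q = [oq * (1 - oq) * sum(w_out[r][q] * d for r, d in enumerate(d_r))
               for q, oq in enumerate(o_q)]
        for q, dq in enumerate(d_q):          # equation (5)
            for p, xp in enumerate(x):
                g_hid[q][p] += dq * xp
        for r, dr in enumerate(d_r):          # equation (6)
            for q, oq in enumerate(o_q):
                g_out[r][q] += dr * oq
        sq_err += sum((tr - o) ** 2 for o, tr in zip(o_r, t))
    return g_hid, g_out, sq_err


def train(w_hid, w_out, data, n_slaves, eta=0.5, max_error=0.01, max_epochs=500):
    """Master loop: scatter chunks, gather partial sums, update once per epoch."""
    w_hid, w_out = copy.deepcopy(w_hid), copy.deepcopy(w_out)
    chunks = [data[i::n_slaves] for i in range(n_slaves)]
    history = []
    with ThreadPoolExecutor(max_workers=n_slaves) as pool:
        for epoch in range(max_epochs):
            # All slaves see the same weights; list() waits for every result.
            parts = list(pool.map(lambda c: slave(w_hid, w_out, c), chunks))
            error = 0.5 * sum(part[2] for part in parts)
            history.append(error)
            if error <= max_error:
                break
            # Batch update: apply the gradients summed over all slaves.
            for g_hid, g_out, _ in parts:
                for q, row in enumerate(g_hid):
                    for p, g in enumerate(row):
                        w_hid[q][p] += eta * g
                for r, row in enumerate(g_out):
                    for q, g in enumerate(row):
                        w_out[r][q] += eta * g
    return w_hid, w_out, history


# Hypothetical toy problem: logical OR, with a constant 1.0 input as a bias.
DATA = [([0.0, 0.0, 1.0], [0.0]), ([0.0, 1.0, 1.0], [1.0]),
        ([1.0, 0.0, 1.0], [1.0]), ([1.0, 1.0, 1.0], [1.0])]
W_HID = [[0.1, -0.2, 0.3], [-0.3, 0.2, 0.1]]   # 2 hidden neurons x 3 inputs
W_OUT = [[0.2, -0.1]]                          # 1 output neuron x 2 hidden neurons

if __name__ == "__main__":
    _, _, errors = train(W_HID, W_OUT, DATA, n_slaves=2)
    print(f"epochs: {len(errors)}, first error: {errors[0]:.4f}, "
          f"last error: {errors[-1]:.4f}")
```

Because every slave works from the same weights and the master applies the summed gradients once per epoch, the result should match a sequential batch-mode run up to floating-point rounding, whatever the number of slaves. Threads in CPython do not give a real speedup for this workload, so the sketch shows only the data flow. In a PVM version the `pool.map` call would presumably be replaced by spawning slave tasks and exchanging messages with them.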