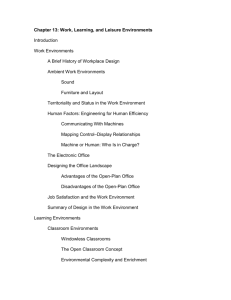

Topic Outlines - Michigan State University
