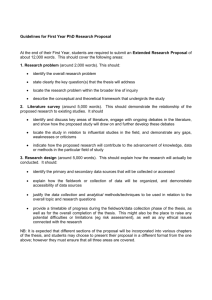

requiring donors
