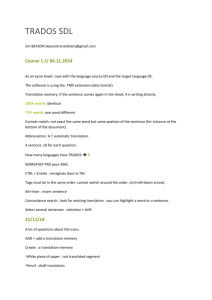

Test - Localisation Research Centre

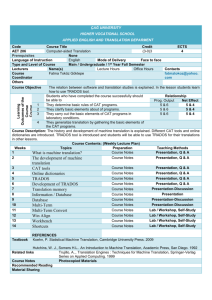