Evolution of Reinforcement Learning in Foraging Bees

advertisement

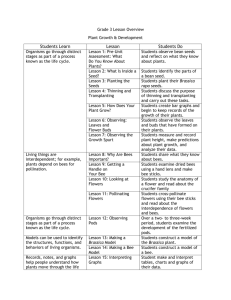

Evolution of Reinforcement Learning in Foraging Bees: A Simple Explanation for Risk Averse Behavior Yael Niv - Department of Psychology, Tel-Aviv University, Israel Email: yaeln@cns.tau.ac.il Daphna Joel - Department of Psychology, Tel-Aviv University, Israel Email: djoel@post.tau.ac.il Isaac Meilijson - School of Mathematical Sciences, Tel-Aviv University, Israel Email: isaco@math.tau.ac.il Eytan Ruppin - School of Computer Sciences & Department of Physiology and Pharmacology, Tel-Aviv University, Israel Email: ruppin@math.tau.ac.il Corresponding Author: Yael Niv Department of Psychology Tel-Aviv University Ramat-Aviv, Tel-Aviv 69978 Israel Tel. +972-51-883178 Fax. +972-3-6421889 Abstract Reinforcement learning is a fundamental process by which organisms learn to achieve a goal from interactions with the environment. We use Evolutionary Computation techniques to derive (near-)optimal neuronal learning rules in a simple neural network model of decision-making in simulated bumblebees foraging for nectar. The resulting bees exhibit efficient reinforcement learning. The evolved synaptic plasticity dynamics give rise to varying exploration/exploitation levels and to well-documented animal foraging strategies. These behaviors are shown to emerge directly from optimal reinforcement learning, which provides a biologically founded, parsimonious and novel explanation of risk-aversion. Our results are corroborated by a rigorous mathematical analysis and by experiments in mobile robots. Preferred mode of presentation: Oral presentation Introduction Reinforcement learning (RL) is a process by which organisms learn from their interactions with the environment to achieve a goal1, and has been indicated as a fundamental means by which experience changes behavior in both vertebrates and invertebrates2. In RL, learning is contingent upon a scalar reinforcement signal that provides evaluative information about how good an action is, without providing an instructive cue to the most rewarding behavior. RL has been demonstrated and studied excessively in the ethology of foraging bumble-bees. Real3,4 showed that when foraging for nectar in a field of blue and yellow artificial flowers, bees not only exhibit efficient RL, but also show a strong preference for landing on constant rewarding flowers, as opposed to variably rewarding flowers yielding the same mean reward. Such risk-averse behavior5 has classically been accounted for by hypothesizing the existence of a subjective non-linear concave “utility function” for nectar6,7. RL has attracted ample attention in computational neuroscience, yet a fundamental question regarding the underlying mechanism has not been sufficiently addressed, namely, what are the optimal neuronal learning rules for maximizing reward in RL? And, given such rules, what foraging strategies emerge from optimal RL? In a previous neural network (NN) model, Montague et al.8 simulated bee foraging in a 3D arena of flowers, based on a neurocontroller modelled after an identified interneuron in the honeybee suboesophogeal ganglion9,10. While this model replicates Real’s foraging results and provides a basic and simple NN architecture to solve RL tasks, many aspects of the model, first and foremost the handcrafted synaptic learning rule, are arbitrarily specified and their optimality questionable. Towards this end, we use a generalized and parameterized version of this model in order to determine the optimal synaptic learning rules for RL (with respect to maximizing nectar intake) using a genetic algorithm11. Previous attempts at evolving neuronal learning rules have used heavily constrained network dynamics and very limited sets of learning rules12-15, or evolved only a subset of the learning rule parameters16. We define a general framework for evolving learning rules, which encompasses all heterosynaptic Hebbian learning rules, along with other characteristics of the learning dynamics, such as learning dependencies between modules. Preferred mode of presentation: Oral presentation (a) Regular Input Module B Y Differential Input Module N B Reward Module Y W ij R N (b) P (c) 7 6 2 Synapses Initial Weights m b 8 1 4 Hebb DependCoefficients encies Figure 1: (a) The bee's neural network controller. (b) The bee's action function. (c) The genome sequence of the bee. The Model A simulated bee flies above a 3D patch of 60x60 randomly scattered blue and yellow flowers. In each trial the bee descends from height ~10, advancing in steps of 1 unit in any downward heading direction. The bee views the world through a cyclopean eye (10 cone view), and in each timestep decides whether to maintain the current heading direction or to change direction randomly, according to the visual inputs. Upon landing, the bee consumes the nectar in the chosen flower and another trial begins. A bee’s life consists of 100 trials. The evolutionary goal (the fitness criterion) is to maximize nectar intake. In the NN controlling the bee's flight (Fig. 1a), three modules contribute their input via synaptic weights to a linear neuron P, whose continuous-valued output is P(t) = R(t) + (iregular)Wi(t)Xi(t) + (jdifferential)Wj(t)*[Xj(t) - Xj(t-1)]. (1) The bee's action is determined according to the output P(t) using Montague et al's probabilistic action function6,8 (Fig. 1b) p(change direction) = 1 / [1 + exp(m*P(t) + b)] . (2) During a bee's "lifetime" the synaptic weights are modified via a generalized heterosynaptic Hebb learning rule of the form Wi = [AXi(t)P(t) + BXi(t) + CP(t) + D], (3) where is a global learning rate parameter, Xi(t) and P(t) are the presynaptic and the postsynaptic values respectively, Wi their connection weight, and A-D are real-valued evolvable parameters. In addition, we allow for learning in one module to be dependent on the activity of another module via axo-axonical connections simulating gating of synaptic plasticity. The simulated bee's “genome” (Fig. 1c) consists of a string of 28 genes, each representing a parameter governing the network architecture or learning dynamics. Using a genetic algorithm11, the bees were “evolved” and the optimal gene values determined. In each generation one of the two flower types was randomly assigned as a constant-yielding flower (containing 0.7l nectar), and the other a variable-yielding flower (1l nectar in 1/5th of the flowers and zero otherwise). Reward contingencies were switched between the flower types in a randomly chosen trial during each bee's life. Evolution of Reinforcement Learning About half of the evolutionary runs were successful runs, in which rewarddependent choice behavior was evolved. These runs reveal that an interaction between eight genes governing the network structure and learning dependencies is essential for producing efficient learning in the bee's uncertain environment. We find that in our framework only a network architecture similar to that used by Montague et al. 8 can produce above-random foraging behavior, supporting their choice as an optimal one. However, our optimized networks utilize a heterosynaptic learning rule different from that used by Montague et al., which gives rise to several important behavioral strategies. Examining the behavior of the evolved bees, we find that there are individual differences between the bees in their degree of exploitation of the high-rewarding flowers versus exploration of the other flowers (Figure 2(a,b)). This can be explained by differences in the Hebb rule coefficients of the evolved bees: In the common case when upon landing the bee sees only one color, the update rule for the corresponding differential synapse is Wi = [(A-C)*(-1)*(R(t)-Wi) + (D-B)] (4) leading to an effective monosynaptic coefficient of (A-C), and a general weight decay coefficient (D-B). For the rest of the differential synapses, the learning rule is Wj = [(C)(R(t)-Wi) + D]. (5) Thus, positive C and D values result in spontaneous strengthening of competing synapses, leading to an exploration-inclined bee, while negative values result in exploitation-inclined behavior. (a) Preference for blue flowers 0.6 0.4 0.2 0 25 50 75 0.8 0.6 0.4 0.2 0 0 100 25 (b) 1 0.8 0.6 0.4 0.2 0 0 25 50 75 Trials 100 Preference for constant rewarding flowers Preference for blue flowers 0.8 0 (c) 1 1 50 75 100 (d) 0.7 0.6 0.5 0.4 0 0.25 0.5 0.75 1 Learning rate Figure 2: (a,b) Preference for blue flowers for two different bees from the last generation of two successful runs, averaged over 40 test bouts, 100 trials each. Blue is the initial constantrewarding flower, reward contingencies are switched at trial 50. (c) Previously evolved bees tested in a risk-aversion scenario show a marked preference for the constant-yielding flower. (d) Risk aversion is ordered according to learning rate. Emergence of Risk Aversion A prominent strategy exhibited by the evolved bees is risk-aversion. Figure 2(c) shows that when tested in a new environment, where the mean rewards of the two kinds of flowers are identical, the previously evolved bees pronouncedly prefer the constantrewarding flower. In contradistinction to the conventional explanations of risk aversion, our model does not include a non-linear utility function. What hence brings about risk- averse behavior? Corroborating previous numerical results17, we prove analytically that this is a direct consequence of Hebbian learning dynamics in an armed-bandit-like RL situation. The bee's stochastic foraging decisions can be formally modeled as choices between a variable-rewarding(v) and a constant-rewarding(c) flower, based on memory (synaptic weights). We consider the bee's long-term choice dynamics as a sequence of N cycles, each choice of (v) beginning a cycle. The frequency fv of visits to (v) can be determined (via Birkhoff's Ergodic theorem18) by the expected number of visits to (c) in a cycle, an is fv = 1/E[1/pv(Wv,Wc)] (6) where pv(Wv,Wc) is the probability of choosing (v) in a trial in which the synaptic weights are Wv and Wc. We show that if pv(Wv,Wc) is a positive increasing choice function such that [1/pv(Wv,Wc)] is convex, the risk order property of Wv() always implies risk-averse behavior, i.e. for every learning rate, the frequency of visits to the variable flower is less than 50%, further decreasing under higher learning rates. Our simulations corroborate this analytical result (Figure 2d). (b) Yellow synapse Blue synapse (a) 1 0.5 0 -0.5 -1 1 0.5 0 -0.5 -1 BBBBBYYBBBBBBYYYYYYY 0 5 10 Trials 15 20 Figure 3: Synaptic weights of a mobile robot incorporating a NN controller of one of the previously evolved bees, performing 20 foraging trials (blue flowers - ½l nectar, yellow - 1l in half the flowers, contingencies switched at trial 10). Top – flower color chosen in each trial. In order to assess the robustness of the evolved RL algorithm, we implemented it in a mobile Khepera mini-robot. Moving with continuous speed and performing calculations in real-time in a noisy real-world environment, the foraging robot exhibited behavior fully analogous to that of the simulated bees (Figure 3). In summary, we present a novel model of evolved embodied reinforcement learning agents, which enables their concomitant study on both the macro-behavioral and micro-dynamic levels. We further show that risk-averse behavior emerges directly from the evolved learning rule dynamics, making additional assumptions conventionally used to explain this behavior redundant. References: 1. Sutton R.S. and Barto A.G., Reinforcement learning: An Introduction (MIT Press, 1998) 2. Donahoe J.W. and Packard-Dorsel V. eds., Neural Network Models of Cognition: Biobehavioral Foundations (Elsevier Science, 1997) 3. Real L.A., Animal Choice Behavior and the Evolution of Cognitive Architecture, Science 253, 980-986 (1991) 4. Real L.A ., Paradox, Performance and the Architecture of Decision Making in Animals, American Zoologist 36, 518-529 (1996) 5. Kacelnik A. and Bateson M., Risky Theories - The effects of Variance on Foraging Decisions, American Zoologist 36, 402-434 (1996) 6. Harder L.D. and Real L.A., Why are Bumble-bees Risk Averse? Ecology 68(4), 1104-1108 (1987) 7. Smallwood P.D., An introduction to Risk Sensitivity: The use of Jensen's Inequality to clarify Evolutionary arguments of Adaptation and Constraint, American Zoologist 36, 392-401 (1996) 8. Montague P.R., Dayan P., Person C. and Sejnowski T.J., Bee foraging in Uncertain Environments using Predictive Hebbbian Learning, Nature 377, 725-728 (1995) 9. Hammer M., An Identified Neuron mediates the Unconditioned Stimulus in Associative Olfactory Learning in Honeybees, Nature 366, 59-63 (1993) 10. Hammer M., The Neural Basis of Associative Reward Learning in Honeybees, Trends in Neuroscience 20(6), 245-252 (1997) 11. Mitchell T,. Machine Learning (McGraw Hill, 1997) 12. Chalmers D.J., The Evolution of Learning: An Experiment in Genetic Connectionism, in: Proceedings of the 1990 Connectionist Models Summer School, San-Mateo, CA (eds Touretzky D.S., Elman J.L., Sejnowski T.J. and Hinton G.E.) 8190 (Morgan Kaufmann, 1990) 13. Fontanari J.F. and Meir R., Evolving a Learning Algorithm for the Binary Perceptron, Network: Computation in Neural Systems 2(4), 353-359 (1991) 14. Baxter J., The Evolution of Learning Algorithms for Artificial Neural Networks, in: Complex Systems (eds Green D. and Bossomaier T., IOS Press, 1992) 15. Floreano D. and Mondada F., Evolutionary Neurocontrollers for Autonomous Mobile Robots, Neural Networks 11,1461-1478 (1998) 16. Unemi T., Nagayoshi M., Hirayama N., Nade T., Yano K. and Masujima Y., Evolutionary Differentiation of Learning Abilities - a case study on optimizing parameter values in Q-learning by a genetic algorithm, in: Artificial Life IV (eds Brooks R.A., Maes P.) 331-336 (MIT Press, 1994) 17. March J. G., Learning to be Risk Averse. Psychological Review 103(2), 301-319 (1996) 18. Breiman L., Probability (Addison-Wesley, 1968)