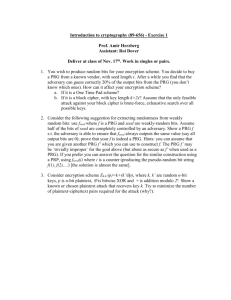

>>: It's a pleasure to have Nishanth Chandran speaking today. Nishanth is from UCLA. He's a student of [inaudible] and Rafail Ostrovsky. And he's going to be speaking about a new and interesting paradigm for cryptography.

>> Nishanth Chandran: Thanks. So some references in case you're interested. This appears in Crypto this year. There's also a full version that I'll give you the link to later. It's joint work with Vipul Goyal, Ryan Moriarty and Rafail Ostrovsky.

Okay. So typically in cryptography, every party has some sort of identity. So, for example, it could be a public key given through some public key infrastructure. It could be a biometric that identifies you in the network, or, for example, in the case of identity-based encryption it could be your e-mail ID. So if someone knows your public key they can encrypt a message and send it to you, or if they know your e-mail address they can encrypt a message to you so that only you can decrypt the message. So the question is, can we have any other kind of identity? What else can we use as an identity? So a question we ask over here is: how about where you are? In other words, three-dimensional coordinates. Can we use this to do cryptography? The fact that I'm standing at this particular place gives me some sort of an identity, and I'm here at this particular point of time, so that gives me some identity. So can we do cryptography out of this kind of notion?

First, where might something like this be useful? Let's consider a situation where you have a military base in the United States that wants to communicate with a military base in Iraq. So in a traditional crypto setting, the military bases would share a secret key, exchange it between themselves, and then they can exchange messages back and forth and everything's good. But then what happens? You have, say, an honest officer who is captured by terrorists and he's tortured and he finally reveals the secret key.
Ideally, what do we want to have? Maybe we trust physical security more. Right? So maybe we're able to guarantee somehow through some physical means that those who are inside a particular geographical region are good. So maybe we can guarantee that if someone has entered the military base in Iraq, then he's a good guy. So we want to be able to send a message to anyone who is geographically present inside this military base in Iraq. So ideally we want to send a message encrypted to the military base in Iraq, and only someone who is at a particular geographical position, someone who possesses maybe some XYZ coordinates, can decrypt the message, and no one else can decrypt this message.

One can think of other applications as well. You can have position-based authentication. So, for example, you want to receive a message from this military base in Iraq and you want to have the guarantee that this message actually physically originated from the military base in Iraq. Some other examples could be position-based access control. So, for example, I want to authorize someone to use a printer that's inside a room, but if he goes a little farther away he no longer has access to print in this location. And you can think of many more such examples.

So the problem informally is as follows: Say we have a set of verifiers who are present at various geographical positions in space. And we have a prover who is present at some geographical position; it will become clear shortly why I use the terms verifier and prover. And the goal is to exchange a key with the prover if and only if the prover is at position P. So the verifiers want to talk to this prover, and if and only if this prover is at this position P, which has some coordinates XYZ, then a key must be exchanged with the prover. Otherwise nothing should happen. Now, before we look at that task, let's look at a related task which is called secure positioning, which is a bit more basic.
Again, we have a set of verifiers who now wish to simply verify the position claim of a prover at position P. So there is a prover again at position P, and now we're not interested in a key exchange; simply, the prover says, hey, I'm at XYZ, and the verifiers want to verify that in fact the prover is at XYZ and he's not lying. And the verifiers can run an interactive protocol with the prover at P in order to verify this claim. So this problem has actually been studied in a bunch of works in wireless security. But there is an inherent problem with all of them. We'll get to that in a bit.

So what is a previous technique that's been used for secure positioning? First, the assumption is that all messages travel at the speed of light. Radio waves achieve this; GPSs use this in their current operation. We actually don't require that they travel at the speed of light; we just require that we can transmit messages as fast as an adversary can. If an adversary can transfer something at some particular speed, then we can do so at that particular speed too. That's the only thing we need over here. But it's convenient to just say speed of light.

So now we have a verifier and a prover, and they can use time of response. How do they do this? The verifier sends a random nonce R to the prover and the prover responds with this random nonce. And the verifier measures how long it takes for this whole protocol to take place. And then, just based on the speed at which the message travels, when the prover responds back you get sort of an estimate of the distance. What is the advantage of the protocol? The advantage is that the prover cannot claim to be closer to the verifier than he actually is. So he can be very close by and claim to be extremely far away. That's possible. He can just simply delay his response such that it looks like it's coming from far away. But if he's in fact far away he cannot claim to be very close by.
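[Editorial sketch] The time-of-response check described above can be illustrated in a few lines of Python. This is a minimal sketch under the talk's idealized assumptions (signals travel at the speed of light, computation is instantaneous); the function names `distance_upper_bound` and `claim_is_feasible` and the `tolerance_m` parameter are hypothetical and do not come from the talk or the paper.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def distance_upper_bound(round_trip_seconds: float) -> float:
    """Largest distance the responder can be from the verifier.

    The nonce travels to the prover and back, so the one-way distance is
    at most (speed * round-trip time) / 2. A prover may add delay, which
    only makes this bound larger, never smaller.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def claim_is_feasible(claimed_distance_m: float,
                      round_trip_seconds: float,
                      tolerance_m: float = 0.0) -> bool:
    """True if a response this fast could have come from the claimed distance
    or farther; False means the response was too slow for the claim."""
    return distance_upper_bound(round_trip_seconds) <= claimed_distance_m + tolerance_m
```

With these definitions, a prover who is really 600 m away cannot pass as being 300 m away, because his reply takes too long, while a prover at 300 m can pass as being at 600 m simply by waiting before he answers.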
So one can think about generalizing this, and in fact that's what Capkun and Hubaux did in 2005, using triangulation. So basically you have three verifiers, V1, V2 and V3, and say you have a prover P. So now each of these verifiers sends out random nonces R1, R2 and R3 to the prover at P. And the prover has to respond back with the random nonces to each of the verifiers. Again, the three verifiers measure the time of response and verify the position claim. And you can show just by triangulation that this is secure. It's secure, but it assumes a single adversary.

So where is the problem? Suppose you have a position P and you did have multiple colluding provers. In fact, there's an attack. What is the attack? Say you have three provers over here, P1, P2 and P3, such that P1 is close to V1, P2 is close to V2 and so on. Now when V1 sends out nonce R1, P1 is closer to V1 than P is. So P1 can respond back in time. And that's the same case with all the other adversarial provers as well. So they can forge their responses as if they were coming from a single prover at position P.

So the rest of the talk outline is going to be as follows: I'll first describe our basic model in a little bit more detail. We call it the vanilla model. It's basically the model I was talking about so far, with a bit more formalization. Then we'll talk about the problem of secure positioning. I'll first show that the attack that I just described can be generalized, and in fact secure positioning is impossible in the vanilla model; even if you allow any kind of computational assumptions, any crypto assumptions you may be willing to make, it's impossible in the model. So given that we have a strong impossibility result, the question is, can we do position-based cryptography in any other model? And it turns out that we can, in the bounded retrieval model, which is a model that's been in use quite a lot recently. I'll talk about it in detail.
That gives rise to positive information-theoretic --

>>: Would the model preclude one beacon sending out, one verifier sending out a message to a prover that has to then send it to three provers?

>> Nishanth Chandran: Sure, sure. Our impossibility result holds in that case as well.

>>: That might be a viable way of --

>> Nishanth Chandran: Our impossibility result. You can make any assumption like that. That also, it rules out. It takes into account that the prover could get information from multiple verifiers and have to send it to multiple verifiers as well, and even then there's an explicit attack that adversaries can carry out.

>>: I think that might get around the multiple prover example you had before, because the provers have to communicate between each other in order to send the response out.

>> Nishanth Chandran: Sure, but there is a way that they can do it. And I'll just get to that. And then after that we'll get to what we really started off with, what we wanted to do: position-based key exchange. So we actually exchange a key with this particular prover at position P. And again we present positive information-theoretic results in the model.

So first an outline of the vanilla model. You have multiple verifiers available: V1, V2 and V3 in this diagram. You have a prover P, and for now let's just assume that P lies inside the convex hull of the verifiers, although we can avoid that in some cases. We'll assume that the verifiers can send messages at any point of time to the prover at the speed of light. Next we'll assume that verifiers can record the time at which they send the messages, the time at which they receive the messages and so on. We'll assume that verifiers share a private channel between themselves that they can use to communicate before the protocol, during the protocol, after the protocol. And finally we'll assume that we have multiple coordinating adversaries, and since we're showing a lower bound we'll even assume that they're computationally bounded.
Even if they're computationally bounded our lower bound holds.

>>: Could you assume that the verifiers have synchronized clocks, too?

>> Nishanth Chandran: Yes.

>>: Just --

>> Nishanth Chandran: Yes. So the first theorem we show is that there does not exist any protocol to achieve secure positioning in the vanilla model. And as a direct corollary of this we get that position-based key exchange is impossible in the vanilla model: any protocol for position-based key exchange can easily be seen to be a protocol for secure positioning also.

So I'll just present a high-level sketch of our lower bound. Say we have verifiers V1, V2, V3, V4 and we have a position P. And now we have multiple colluding provers, P1, P2, P3 and P4, and we're going to describe the attack in the following way. We're going to say, let there be any protocol that achieves secure positioning for a prover at position P, and we'll show how these adversarial provers P1, P2, P3 and P4 can simulate the exact same thing, exactly as if there was someone at position P responding to the verifiers. So that's a generalization of the attack I presented earlier. So basically any P_i can run an exact copy of the prover. That's what we're going to show. And P_i is going to respond to V_i. So P1 responds to V1, P2 responds to V2, P3 to V3 and so on. And all the P_i's will run basically the exact same copy as the prover. We'll show that.

Basically what happens is that P_j can -- so any prover at position P will be getting information from, say, V3 and V2, and say he has to respond back to V1. Right? So the total time taken for something like this is the time taken for a message to travel from V3 to P, because P has to make use of that information in the first place, and then it has to go to V1. Right? But then the adversary -- so in other words the blue part is the path taken if there were to be an honest prover over here at position P.
But then an adversary always has a shortcut like this. And you can basically show through some triangle inequalities that this is always possible. And no matter from where it comes, if it comes from here, the same thing can be done. So it can be shown that P_i can simulate exactly the same copy as P, maybe at a slightly later point in time, but since P_i is closer to V_i than position P is, he can respond back in time and we're done.

Okay. So the implication of the lower bound is that secure positioning and, hence, position-based cryptography is impossible in the vanilla model, even with computational assumptions. Note that in the lower bound we never made use of any sort of computational power: whatever the prover was doing, the adversaries can do the same thing as well, so it doesn't rely on any computational assumption. The question is, are there alternate models where we can do position-based cryptography? And it turns out we can. Here are some constructions and proofs.

The bounded retrieval model is an extension of the bounded storage model that was introduced by Maurer in '92. What does the model say? It assumes that there's a long string X, which is of, say, length N, and it has some high min-entropy. And it's either generated by some party or just comes up in the sky. The original bounded storage model said that X just comes up in the sky; assume that there's this string out there in the sky that has some high min-entropy that everybody can see. Furthermore, it assumes that all parties, including honest parties, have some retrieval bound beta for some zero less than beta less than one. So in other words the adversaries can look at the entire X and store any arbitrary function of this X, but then the amount that they can retrieve is bounded. It cannot be everything; it's only some constant fraction of the total length of the string. And a bunch of works have studied this model in great detail.
But I won't get into all of them; we'll just talk about how this fits into our setting. So what do we mean by the BRM in the context of position-based cryptography? Again we have V1, V2 and V3. We have multiple colluding provers. And it's like the vanilla model, but now, since we're going to present information-theoretic results, we do not even assume that the adversaries are computationally bounded. So the adversaries can run in exponential time, for example. We assume that the verifiers can broadcast some huge X, which is some large string X that has some high min-entropy. And adversaries and provers, honest provers as well, can store only a small f of X as X passes by. So they have some retrieval bounds. They cannot download the whole X as it passes by. And a restriction of the BRM and the BSM is that adversaries cannot reflect X. In other words, they cannot send X back and forth; this would just violate the BSM as well as the BRM framework.

So to make things a little bit clearer, here are some assumptions we make. We assume first that computation is instantaneous. Modern GPSs do perform some computation while using this speed-of-light kind of assumption. We can relax it, but we'll get a slight error in the position. We may not be able to say that a person is at XYZ, but maybe only be able to say that he's within some particular region, if we start allowing that. We also assume that X travels in its entirety, and not as a stream. Again, we can relax this, but again we'll get a small error in the region for which we get secure positioning.

So the question is, how can one physically realize the BRM? Is it possible? We have some thoughts on it. It seems reasonable that an adversary can only retrieve some small amount of information as the string passes by. We're assuming that this is a huge burst of information that's coming by.
It seems reasonable that he can look at it, but then he has time only to look at some parts of it and only store a small fraction of it. Another way they could do it is that the verifiers could split X into different parts and broadcast them on separate frequencies. And maybe it's hard for an adversary to listen in on all frequencies simultaneously; he can listen only to certain frequencies and gets only part of the information.

Okay. So now going to our constructions, what are the primitives that we need? We require these tools called locally computable generators, from the work of Salil Vadhan. What are they? Basically, the PRG takes an input string X with high min-entropy, like what I was just saying, a large string X with high min-entropy. It takes a short seed K chosen from the uniformly random distribution. And the output of the PRG, PRG of X comma K, is guaranteed to be statistically close to uniform even given the seed K and some A of X for an arbitrary bounded-output function A. And the most important thing over here for us is that it's locally computable. So what do I mean by locally computable? In order to compute PRG of X comma K, one doesn't need to look at the entire string. It basically uses the sample-and-extract paradigm. So basically it says, if I have the string X then all I need to do is look at a few bits of this X, randomly selected, and then I can apply the extractor, and I will get something that's statistically close to uniform. So it's good for us because honest parties also cannot download the whole thing. They can just look at a little bit of it and compute on that fraction.

Okay. So as a warm-up, let's just first present secure positioning in one-dimensional space. It's an easy thing to do for now. So we have V1 and V2, who are two verifiers on a straight line. And now we have a position P that's on the straight line, and we simply wish to verify that a prover is at position P.
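[Editorial sketch] The sample-and-extract idea behind locally computable generators, as described above, can be mimicked with a toy function. This is only an illustration of the access pattern (the seed chooses a few positions of X, and only those positions are read); it is not the construction from the literature and carries none of its statistical guarantees. The name `toy_locally_computable_prg`, the use of Python's `random.Random` to pick positions, and the use of SHA-256 as a stand-in for the extractor are all assumptions made for this sketch.

```python
import hashlib
import random


def toy_locally_computable_prg(x: bytes, seed: bytes,
                               samples: int = 64, out_len: int = 16) -> bytes:
    """Toy sample-then-extract: read only `samples` bytes of the long string x.

    Step 1 (sample): the seed deterministically selects positions in x.
    Step 2 (extract): the sampled bytes are compressed to a short output.
    SHA-256 is a stand-in for a real randomness extractor (an assumption
    of this sketch, not something the talk specifies).
    """
    if not x:
        raise ValueError("x must be non-empty")
    rng = random.Random(seed)  # illustrative position selection, not cryptographic
    positions = [rng.randrange(len(x)) for _ in range(samples)]
    sampled = bytes(x[i] for i in positions)
    return hashlib.sha256(seed + sampled).digest()[:out_len]
```

The point of the design is that a party holding the seed touches only `samples` positions of X, which is what lets an honest prover with a small retrieval bound compute the output while X passes by.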
So how can they do this? V1 and V2 preshare a short seed K, which is a seed to the PRG. V1 is going to sample this X, a long string that comes from a distribution with high min-entropy. And X and K are going to be broadcast such that they reach the position P at exactly the same time. What does a prover at position P do? He computes PRG of X comma K and responds to V1 with PRG of X comma K. Again, V1 measures the time of response and accepts if the response is correct and if it was received at the right time.

Now, just quickly, the correctness of the protocol is quite easy to see. Note that a prover at P can compute PRG of X comma K: he gets both X and K, so he can compute PRG of X comma K. V1 can compute PRG of X comma K when broadcasting X: when broadcasting X, V1 already knows the key K, so he can compute PRG of X comma K and can verify the response correctly. The response from the prover at P will be on time because X and K are such that they arrive at P at the same time, so it's just the total round-trip time from V1.

Now, why is this a secure positioning protocol? I'll just give you some proof intuition for this. So note that there are two kinds of adversaries over here. Broadly you can look at them as two classes of adversaries: adversaries who are between V1 and P, call them P1, and adversaries who are between P and V2, call them P2. Now when these two streams travel past, what does each of these adversaries get to see? P1 can store some A of X, for some arbitrary function A. Right? P2 can store K. Right? And then these streams still travel, and P1 gets to see K afterwards. P2 gets to see X afterwards. So what can P1 do? P1 can respond to V1 in time. He's closer, much closer to V1 than P is, so he can respond in time. But then he has only A of X and K. Right? He got the X first, so he can compute A of X. And then he got to see K. So he just got A of X and K. Right?
So by the property of the pseudorandom generator, the output is statistically close to uniform, so he cannot guess the response better than just guessing at random. So P1 is okay. What about P2? P2 can compute PRG of X comma K because he gets to see K first, and then he gets to see X. So he can compute PRG of X comma K. But then he's farther away from V1 than P is. So just by distance bounding, for him to respond back is going to take longer than for someone over here. He's not going to be able to respond back in time. Okay. So this gives our secure positioning protocol in one-dimensional space.

We can sort of generalize this to three-dimensional space. How do we do this? For now I'll just make an unreasonable assumption and then we'll show how to get rid of it. So again you have V1, V2, V3, V4. You have a prover at position P. Now, V1 is going to pick K1, which is a short seed to the PRG, and preshare it with all the other verifiers. V2 is going to sample X1. V3 is going to sample X2. And V4 is going to sample X3. So each of them samples a particular X. And for now let's make the assumption that V_i can store the Xs. So assume for now that V3 can store X2, V2 can store X1 and V4 can store X3, which I'll show you in a minute. So now they can all broadcast their respective streams such that they reach position P at the same time. And the prover does an iterative application of the PRG. He takes K1 and X1: PRG of X1 comma K1 to get K2, PRG of X2 comma K2 to get K3, and PRG of X3 comma K3 to get K4. And K4 is broadcast to all four verifiers. Again, the verifiers check the response and the time of response.

Now, the security of this protocol will follow from the security of position-based key exchange that I'll present a bit later. But for now let's just look at the correctness of the protocol. So this is what each of the verifiers began with. And then they required a prover at position P to be able to compute K4.
But in order to verify the response K4, the verifiers themselves need to be able to compute K4. How do they compute K4? Actually it turns out the verifiers cannot compute K4 if they don't store the Xs. Why is that the case? Remember the K_i's were applied iteratively. In order to compute K4 I needed K3, in order to compute K3 I needed K2, and so on. So V3 might need K2 before broadcasting X2 in order to compute K3. Because if he broadcasts X2 then there's no way for him to compute K3 if he doesn't know K2 at that particular point in time. And nobody else can as well. But it just might be the case that V3 has to broadcast X2 before, or at the same time as, V2 broadcasts X1. At this point K2 is not even fixed, no one knows the value of K2, but at that time V3 might already have had to broadcast X2. So it seems that the verifiers might not be able to compute K4 themselves. So how do we get around this?

We get around this as follows: We basically let the verifiers pick K1, K2, K3, K4 at random beforehand; basically we fix every key that goes into the application of the PRG. Now clearly the verifiers know K4 because they picked it themselves, but they must help the prover compute it. So as before, V1 broadcasts K1. V2 broadcasts X1 and in addition broadcasts PRG of X1 comma K1 exclusive-ORed with K2. In other words, what they're doing is they're fixing the keys K1, K2, K3, K4 and making one of the shares be from the iterations, so when you apply PRG of X1 comma K1 you get one of the shares. And the other share is broadcast. So it's sort of a secret sharing of the keys. And the other verifiers do a similar kind of thing: the verifiers basically secret share the K_i's and broadcast one share. And you can show that, coupled with the security of the regular protocol and of secret sharing, this protocol is also secure. So the protocol is basically: V1 broadcasts K1. V2 broadcasts X1 and K2 prime, which is a share. V3 broadcasts X2 and K3 prime, and so on.
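[Editorial sketch] The secret-sharing trick described above can be written out in Python. The verifiers fix all keys in advance and broadcast, for each step i, the share K'(i+1) = PRG(X_i, K_i) XOR K(i+1); anyone who computes PRG(X_i, K_i) can then recover K(i+1). This is a sketch of the key bookkeeping only: the `prg` function below is a hash-based stand-in that reads all of X, which is an assumption made for brevity and is neither locally computable nor the generator used in the paper. The function names are hypothetical.

```python
import hashlib


def prg(x: bytes, k: bytes) -> bytes:
    """Stand-in for PRG(X, K); an assumption of this sketch (16-byte output)."""
    return hashlib.sha256(k + x).digest()[:16]


def xor(a: bytes, b: bytes) -> bytes:
    """Byte-wise exclusive OR of two equal-length strings."""
    if len(a) != len(b):
        raise ValueError("inputs must have equal length")
    return bytes(p ^ q for p, q in zip(a, b))


def verifier_shares(keys: list[bytes], xs: list[bytes]) -> list[bytes]:
    """Given pre-chosen keys [K1..K(n+1)] and strings [X1..Xn], return the
    broadcast shares [K2'..K(n+1)'] with K(i+1)' = PRG(X_i, K_i) XOR K(i+1)."""
    if len(keys) != len(xs) + 1:
        raise ValueError("need exactly one more key than strings")
    return [xor(prg(xs[i], keys[i]), keys[i + 1]) for i in range(len(xs))]


def prover_recover(k1: bytes, xs: list[bytes], shares: list[bytes]) -> bytes:
    """Prover side: walk the chain, unmasking each next key with its share."""
    k = k1
    for x, share in zip(xs, shares):
        k = xor(prg(x, k), share)
    return k
```

Because the keys are fixed before anything is broadcast, each verifier can compute its share without waiting for an earlier key to be derived, which is the ordering problem the original iterative protocol ran into.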
And how does the prover compute it? The prover computes PRG of X1 comma K1, which basically gives a share of the next key. Exclusive-ORing that with K2 prime gives K2 itself; then he computes PRG of X2 comma K2 and goes forward in the process. So the bottom line is that we can do secure positioning in the bounded retrieval model. Even if there's a small variance in delivery time, or in the measurement of the times at which you get the messages, that can be tolerated, but you'll get a small positioning error.

So the question is, what else can we do in this model? The whole power of this can be realized only if we can actually get some sort of a key exchange with the prover at position P. And in fact we can do that. Let's look again at information-theoretic key exchange in one-dimensional space. As a reminder, in the secure positioning protocol we had V1 and V2 along a straight line and you had a position P. There were two types of adversaries, P1 and P2. If you viewed the response as a key, it was already statistically close to uniform, so that's a great property for a key to have. Furthermore, we noted that P1 could not compute the key. So it seems like we're halfway done. P2 could compute the key but could not respond in time. That was the problem that we had. So this cannot be directly used for key exchange, because there exists an adversary over here who can compute the key. But we seem to have already taken care of half the problem. So maybe we can repeat it in the other direction and couple it in some way and get key exchange.

In fact, that's what we do. So V1 picks K1 and shares it with V2 -- sends it to V2 as well. V1 also samples X2. V2 samples this long string X1. And they're both broadcast such that they arrive at P at the same time. And you do PRG of X1 comma K1 to get K2 and PRG of X2 comma K2 to get K3. So again you have two types of adversaries, P1 and P2.
P1 can store A of X2 comma K1, and you could give them K1 for free, and P2 can store A of X1 comma K1. So it seems like no adversary gets to learn K2 before or at the same time that he sees X2. That's the only way he can compute PRG of X2 comma K2. In fact that is true, and this is in fact a secure key exchange protocol in one-dimensional space.

In order to get a key exchange protocol in three-dimensional space we generalize it the same way we did the generalization of positioning. Again, for now assume the verifiers can store the Xs. V1 would broadcast K1 and X4, V2 would broadcast X1 and X5, V3 would broadcast X2, V4 would broadcast X3. Do an iterative application of the PRG, and K6 is the final key.

Now, one of the subtleties that arises in the proof is that there could be multiple colluding provers everywhere in space. You could have one guy over here computing A of X4 comma K1 and sending it across to this guy, who computes A of X3 and combines it in some way, sends the result to P4 and so on. It could be endless, because we're looking at key exchange, so there's no restriction on when he can respond back. He can work on it forever later on and so on. And we wanted information-theoretic security. So it seems like there are some of these issues over here that creep up.

So the proof idea is as follows. It works in two parts. We first have a geometric argument. We first need a lemma ruling out any adversary simultaneously receiving all messages of the verifiers. So we know that the prover does receive all messages from the verifiers simultaneously; how do we know there doesn't exist some other point in space where all messages of the verifiers reach simultaneously? This in fact characterizes the regions within the convex hull where position-based key exchange is possible. Here is a sample region [inaudible], and the red region shows where we can get position-based key exchange. We can show that the region is very large.
And then we need to combine the geometric arguments to actually characterize the information that adversaries at different positions get: what information does each adversary learn and what can he send across to someone else? Finally, we need to put that all together, and that's where the extractor arguments come in. We build on techniques from recent work on intrusion-resilient random secret sharing by Dziembowski and Pietrzak. And we prove the security of our protocol through a slight generalization of DP07 that allows multiple adversaries working in parallel.

So just a reminder about the intrusion-resilient random secret sharing scheme, IRRSS [inaudible]. Basically you have N parties S1, S2, ..., SN. And they have long strings X1, X2, X3, ..., XN. K1 is chosen at random and given to S1. And S1 computes PRG of X1 comma K1, gets K2, sends it to S2, who computes PRG of X2 comma K2 and gets K3, sends it to S3 and so on. Basically iterative application of the PRG. And the scheme outputs the key K N plus 1.

Now, what can an adversary do over here? Basically, this models something like a virus that can get into these processes and learn a little bit of information and get out. So an adversary can corrupt any sequence of players: an adversary can go to S3 and learn a little bit of information, and then go to S5, learn a little bit more information, and get back to S3 and learn some more information and so on. So a bounded adversary can corrupt a sequence of players, with repetition, as long as the sequence is valid. What do I mean by valid over here? A valid sequence must not contain the exact reconstruction sequence as a subsequence. So here the way to reconstruct K N plus 1 would be to do S1, S2, S3 and so on up to SN. If you're able to look at S1 and S2 and go all the way to SN in that order, then you can learn K N plus 1, simply by doing whatever the honest parties would do.
So as long as the sequence does not contain S1 to SN as a subsequence, they guarantee that K N plus 1 is statistically close to uniform. So over here in this example the first sequence is invalid because it contains one two three four five as a subsequence, but the second sequence is valid because it does not include one two three four five as a subsequence. So Dziembowski and Pietrzak show that K N plus 1 is close to uniform as long as you prevent adversaries from corrupting exactly the reconstruction sequence.

So at a high level the reduction to IRRSS would be as follows. We can model again S1, S2, S3, S4, S5 as having the different Xs. We can give all adversaries K1 for free, and then basically for any adversary in our scheme over here, any adversary who computes any sort of information, we can construct a corresponding adversary in their scheme that has a valid corruption sequence. So we can translate any adversary in our scheme to an adversary in their scheme that has some sort of valid corruption sequence. We'll call that adversary the corresponding adversary. But this was just one single adversary. So if you have multiple adversaries, how do you combine them in order to represent their views? We need a theorem to show that you can combine two adversaries that have valid corruption sequences in order to get a single adversary that again has a valid corruption sequence, and so on. And we need to show that this is possible for any adversary in our setting, and there we need to make use of the geometric lemmas.
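[Editorial sketch] The validity condition on corruption sequences described above is a plain subsequence test: a sequence of corrupted players is valid as long as it does not contain 1, 2, ..., N in that order (not necessarily contiguously). The helper names below are hypothetical, and the example sequences in the usage note are made up for illustration; they are not the ones shown on the speaker's slide.

```python
from collections.abc import Iterable, Sequence


def contains_subsequence(sequence: Iterable[int], pattern: Sequence[int]) -> bool:
    """True if `pattern` appears in `sequence` in order, gaps allowed."""
    it = iter(sequence)
    # Each `any` consumes the iterator up to the next match, so later
    # pattern elements can only match later positions in the sequence.
    return all(any(item == p for item in it) for p in pattern)


def is_valid_corruption_sequence(sequence: Iterable[int], n: int) -> bool:
    """Valid means the reconstruction order S1, S2, ..., Sn is NOT a subsequence."""
    return not contains_subsequence(sequence, list(range(1, n + 1)))
```

For example, with five players the sequence 3, 1, 5, 2, 3, 1, 4, 5 is invalid, since 1, 2, 3, 4, 5 can be picked out of it in order, whereas 1, 3, 2, 4, 3, 5 is valid because no 4 occurs after the only 3 that follows a 2.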
So to conclude, some other results that we've shown in the paper: we get position-based key exchange in the bounded retrieval model for the entire convex region, not just the red region I was talking about, but for that we need to make use of some computational assumptions as well. We also show how we can extend our techniques to get a protocol for position-based public key infrastructure. So if I want to develop a public key infrastructure just based on position, then we can do that. Or I might want to do some sort of position-based multi-party computation, where my input should be based on the position, and that needs to come with some trust guarantee that indeed I am at this particular position and I can give my input into the multi-party computation.

So open questions remain. Are there other models where we can do position-based key exchange? We've got the bounded retrieval model. Computational assumptions don't help too much, except for this particular thing. Are there any other models? In joint work with Serge Fehr, Vipul Goyal and Rafail Ostrovsky we have initial results in the quantum model, where we can show that even without making any assumption on bounded storage or anything like that, just in the regular quantum model, we can get position-based cryptography. Finally, could there be any other applications of position-based cryptography? Thanks a lot. And the full version of the paper is available on ePrint.

[Applause]

>>: How does this interact with, say, revocation, when you revoke a position?

>> Nishanth Chandran: Well, the position is sort of tied to the time. So the verifiers broadcast something and they know that at that particular time this is the key that would have resulted from the protocol. And if they want to do the protocol again, if they want to send a message to somebody else at that position at a later instance of time, they need to run the whole thing again.

>>: Everything is revoked automatically.
>> Nishanth Chandran: Everything is revoked automatically because the key is tied to the time. >>: The same question, you show your impossibility result in the plain model with basically no setup at all. If you get some very small setup, something more -- >> Nishanth Chandran: So any kind of -- so unless you assume that you have tamper-proof hardware that cannot be cloned, any sort of setup also doesn't help. We show that as well. But that requires a strong assumption: if it's possible for all parties who want their position to be verified, or anything like that, to meet with the verifiers beforehand at some point in time, and the verifiers give them some trusted hardware that cannot be tampered with, then you can do position-based key exchange. We didn't find that interesting because we don't want everyone to come to the verifier in the first place. The idea is to use only position as sort of an identity. But any other kind of setup assumption, any kind of keys, anything like that, none of them work. We can show that. >>: 300 people are moving in the airplane, doesn't it get confused? >> Nishanth Chandran: You mean the provers are moving or? >>: They're all passengers, crossing their legs. >> Nishanth Chandran: Oh. If you want to verify their positions? Well, if they're in motion, yeah, I guess you can do it by sort of repeated application of the protocol. So measure their position at some particular point of time, then measure their position again, and then you can make sure that they're following some sort of -- I mean, we thought about this in terms of satellites. You can sort of make sure they're following their orbit by measuring their positions at different points of time and that would be -- >>: Air travel isn't [Inaudible] still quite a bit slower? >> Nishanth Chandran: Quite a bit slower.
>>: Verifiers moving -- >> Nishanth Chandran: In fact, actually there is some work that talks about the following two cases. So our impossibility [inaudible] doesn't involve the following two: if verifiers were moving, or if verifiers were hidden, so if the adversaries did not know the positions of the verifiers. Although this impossibility doesn't directly hold there, we have attacks on both of the protocols that exist, and in fact we do believe that most of our work does result in a general impossibility for those models. I don't know of any other model where the impossibility doesn't go through other than the bounded retrieval model and the quantum model. >>: One question. So did you consider this sort of the [Inaudible] problem so maybe save it [Inaudible] positive result in terms of privacy? What if I want to hide my location? So I have the iPhone or some device and it can be triangulated by [Inaudible] but I might not want that to happen. So even sort of a more military base application, a soldier somewhere, and I don't want to be triangulated by [Inaudible] for some reason. [Indiscernible] so what do I need to do, to show that my position can be pinpointed -- >> Nishanth Chandran: Even though I'm responding back to all these verifiers? >>: Yes. In terms of -- okay, so maybe this is a situation, so if you think of like a plane, for example. I don't know exactly how radar works, but I'm assuming you send a signal and it bounces off the plane somehow and maybe something like that, where you don't have a choice. You can basically [Inaudible]. >> Nishanth Chandran: Okay. Basically pinged and then -- >>: What can you do in order to protect yourself or is there -- maybe if you have multiple planes, now can -- so the multiple planes, figure out a way so they cannot be so -- >> Nishanth Chandran: Well -- >>: Pinpointed it exactly. >> Nishanth Chandran: Well, if the verifiers can make sure that these colluding provers don't exist, then sort of you cannot guarantee anything.
I mean, you would require -- if I as the verifier need to be confused as to where this guy is, right, then to use what we have, I need to believe that there are adversarial, or by design there are, provers also who will be giving these responses. So I don't know if any of this can be used, but maybe there might be something over there of interest. >>: Thank you. [Applause]