The Faculty of Language: What's Special about It?

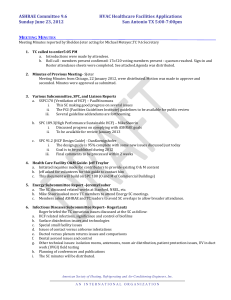
