
advertisement

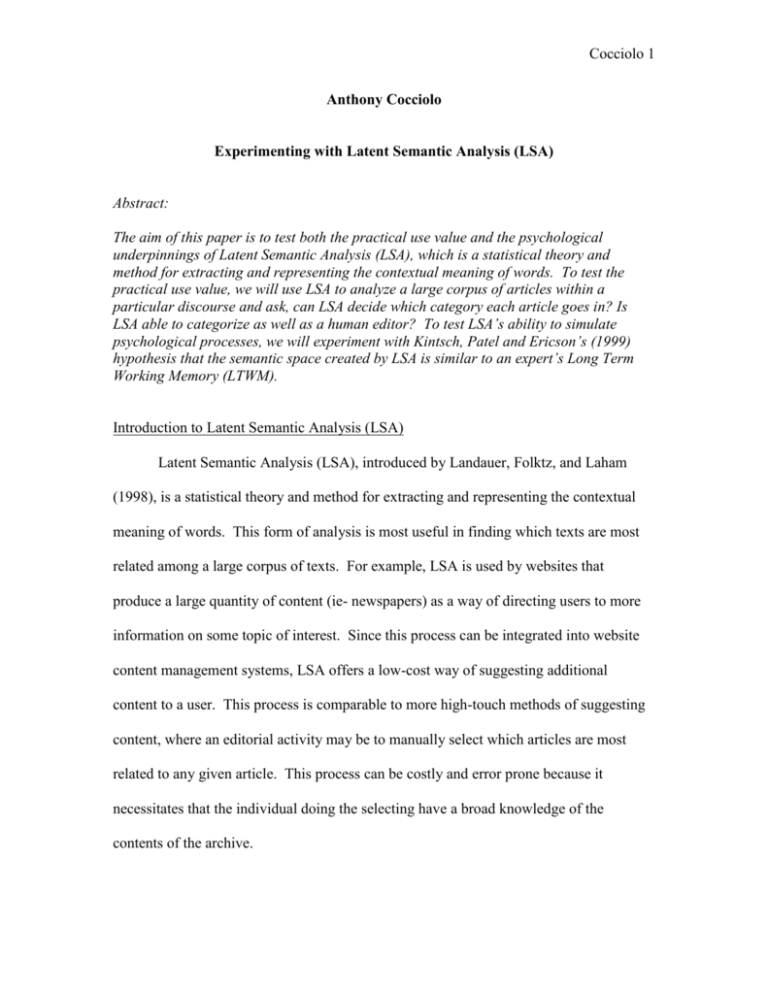

Cocciolo 1

Anthony Cocciolo

Experimenting with Latent Semantic Analysis (LSA)

Abstract: The aim of this paper is to test both the practical use value and the psychological underpinnings of Latent Semantic Analysis (LSA), a statistical theory and method for extracting and representing the contextual meaning of words. To test the practical use value, we will use LSA to analyze a large corpus of articles within a particular discourse and ask: can LSA decide which category each article belongs in, and can it categorize as well as a human editor? To test LSA’s ability to simulate psychological processes, we will examine Kintsch, Patel, and Ericsson’s (1999) hypothesis that the semantic space created by LSA is similar to an expert’s Long Term Working Memory (LTWM).

Introduction to Latent Semantic Analysis (LSA)

Latent Semantic Analysis (LSA), as presented by Landauer, Foltz, and Laham (1998), is a statistical theory and method for extracting and representing the contextual meaning of words. This form of analysis is most useful for finding which texts are most closely related within a large corpus. For example, LSA is used by websites that produce a large quantity of content (e.g., newspapers) as a way of directing users to more information on a topic of interest. Since this process can be integrated into website content management systems, LSA offers a low-cost way of suggesting additional content to a user. It stands in for more high-touch methods of suggesting content, in which an editor manually selects the articles most related to any given article. The manual approach can be costly and error-prone because it requires the person doing the selecting to have a broad knowledge of the contents of the archive.

In addition to text relatedness, LSA can be used to create keyword-searchable indexes. Such keyword searches would differ from those of some of the more prominent search engines.
For example, it would differ from Google in that it would not depend on hyperlinks as a ranking mechanism. It would also differ from cruder search engines, such as many online library catalogs (OPACs), which only return results based on keyword matches and do little to rank them. For example, most OPACs do not distinguish how much of a book is about a given subject. One 500-page book could be entirely concerned with the philosophy of John Dewey, while another 500-page book may devote only 100 pages to it. However, because most OPACs do not have the full text of the books at their disposal, they will not give precedence to the more “Deweyian” of the two books. The value of having full text available is consequently fueling mass digitization projects by such Internet companies as Amazon and Google.

Given that full-text availability is important, how do searches that use LSA differ from the aforementioned searches? According to Landauer, Foltz, and Laham (1998), LSA is different in that it “represents the meaning of a word as a kind of average of the meaning of all the passages in which it appears, and the meaning of a passage as a kind of average of the meaning of all the words it contains” (6). Hence, the first item in one’s search results should be the text that is most about search term X relative to all other texts within the corpus. This does not depend on “simple contiguity frequencies, co-occurrence counts, or correlations in usage”, which would privilege longer texts that frequently use a given term. Rather, LSA captures “only how differences in word choice and differences in passage meaning are related” (5). For example, assume that we were concerned with finding documents about “violence”. An LSA-backed search would not necessarily return the document with the highest occurrence of the word “violence” in its text, but rather the document that is most about “violence” compared to the other texts within the corpus.
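
The idea that a word is a kind of average of its passages, and a passage a kind of average of its words, can be illustrated with a small sketch. The example below is not the software used in this paper; it is a minimal illustration that assumes NumPy is available, uses a made-up four-document corpus, and skips the term weighting that LSA implementations normally apply before the decomposition. The names `docs`, `rank_documents`, and `cosine` are hypothetical.

```python
import numpy as np

# Toy corpus (made up for illustration): two texts on school choice,
# two texts on literacy.
docs = [
    "voucher charter choice private school",
    "charter school choice public tax",
    "literacy reading writing children",
    "reading literacy children books",
]

vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}

# Term-by-document matrix of raw counts (rows: words, columns: documents).
A = np.zeros((len(vocab), len(docs)))
for j, d in enumerate(docs):
    for w in d.split():
        A[index[w], j] += 1.0

# Truncated SVD: keep only the k largest singular values.
k = 2
U, s, Vt = np.linalg.svd(A, full_matrices=False)
word_vecs = U[:, :k] * s[:k]      # one k-dimensional row per word
doc_vecs = Vt[:k, :].T * s[:k]    # one k-dimensional row per document


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom else 0.0


def rank_documents(query):
    """Return document indices ordered from most to least similar."""
    words = [w for w in query.split() if w in index]
    if not words:
        raise ValueError("no query word occurs in the corpus")
    # The query is represented as the average of its word vectors.
    q = np.mean([word_vecs[index[w]] for w in words], axis=0)
    scores = [cosine(q, dv) for dv in doc_vecs]
    return sorted(range(len(docs)), key=lambda j: -scores[j])


# "tax" occurs only in document 1, yet document 0 ranks alongside it
# because both texts share the same reduced dimension.
print(rank_documents("tax"))
```

In this toy space a query for “tax” places both school-choice texts ahead of the literacy texts even though only one of them contains the word, which is the behavior that separates an LSA-backed search from plain keyword matching.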
Although LSA “allows us to closely approximate human judgment of meaning similarity between words and to objectively predict the consequences of overall word-based similarity between passages”, there are certain inherent limitations (Landauer 4). The most striking limitation is that its results are “somewhat sterile and bloodless” in that none “of its knowledge comes directly from perceptual information about the physical world, from instinct, or from experimental intercourse with bodily functions, feelings and intentions” (4). It also does not make use of word order or the logical arrangement of sentences (5). Although its results work “quite well without these aids”, “it must still be suspected of resulting incompleteness or likely error on some occasions” (5). Landauer, Foltz, and Laham analogize LSA’s knowledge of the world in the following way:

One might consider LSA’s maximal knowledge of the world to be analogous to a well-read nun’s knowledge of sex, a level of knowledge often deemed a sufficient basis for advising the young (5).

Hence, LSA’s knowledge is based on word counts and vector arithmetic for very large semantic spaces, and is deprived of more sense-driven information.

In addition to viewing LSA as a practical means of obtaining text similarity and performing keyword searches, Landauer, Foltz, and Laham claim that LSA is also a “model of the [human] computational process and representations underlying substantial portions of the acquisition and utilization of knowledge” (4). Thus, along with having a practical component, LSA is hypothesized to underlie human cognitive processes. This claim does not seem to be based on neurological evidence, but rather on how well LSA works in practice:

It is hard to imagine that LSA could have simulated the impressive range of meaning-based human cognitive phenomena that it has unless it is doing something analogous to what humans do.
(33)

Hence, the authors claim that because LSA works most of the time, it must have some underlying basis in human cognitive processes. Although the authors admit that “LSA’s psychological reality is certainly still open”, it is difficult to see how it could represent human cognitive processes. Although Landauer, Foltz, and Laham concede that it is unlikely that the human brain performs the LSA/SVD algorithm, they do believe that the “brain uses as much analytic power as LSA to transform its temporally local experiences to global knowledge” (34). Could we conclude that all constructions, either mathematical or statistical, that mimic human behavior have a basis in the constitution of the human brain? To avoid the issue of psychological basis, which may require neurological investigation, this paper will instead look at LSA’s ability to simulate psychological phenomena. The relationship between LSA and Long Term Working Memory (LTWM) will be dealt with in the “Psychology and LSA” section.

Rationale

To test the practical applicability of LSA, we will perform an experiment that tests its ability to aid in information search and retrieval. This experiment will use the Teachers College Record, a journal of education research, as its corpus of text. The experiment will begin with a set of categories or topics of keen interest to educators, such as “Charters” and “Literacy”, and ask whether LSA is able to decide which article should go in which category. The result will then be compared against the categories that have been assigned by the editors. The question is thus: can LSA decide which articles go in which categories?

Methodology

1) The first step in performing the LSA is to prepare the full text that will be read into the analyzer. This case involves 1508 distinct articles, book reviews, commentaries, and editorials that were available in HTML format. Although there are 8528 full-text HTML articles available, only 1508 of those have been manually categorized.
Since we are only interested in comparing LSA’s results to those that were manually categorized, we must set aside the articles that are not among the 1508. These 1508 articles represent a wide range of years (Table 1). Together they carry 2825 category assignments, meaning that some texts were placed in more than one category.

| Decade | Articles |
|---|---|
| 2000s | 756 |
| 1990s | 240 |
| 1980s | 40 |
| 1970s | 267 |
| 1960s | 24 |
| 1950s | 9 |
| 1940s | 51 |
| 1930s | 12 |
| 1920s | 18 |
| 1910s | 50 |
| 1900s | 33 |
| unknown | 8 |
| Total | 1508 |

Table 1: Breakdown by Years

2) The next step was to prepare and install the LSA software. The software used is Infomap NLP, developed at Stanford University, which is a variation of LSA based on Landauer, Foltz, and Laham’s model. It was installed on a Windows 2000 platform running Cygwin (a Unix-like environment for Windows).

3) After installing Infomap, it was necessary to modify the stop-list. This list contains the words that the analyzer will ignore in its analysis. Such words include numeric characters, punctuation, and very common words, such as “and” and “this”. The stop-list also includes words that are often used in conjunction with other words and that alone do not carry clear semantic value. For example, the word “studies” was on the stop-list because it could be perceived as ambiguous (studies as a verb, social studies, studies about some subject area, etc.). A selection of words on the original stop-list had to be removed because they are critical terms for the field of education. The stop-list included with Infomap was used primarily for analyzing psychology texts, where such words as “learning”, “schools”, “students”, “social”, and “studies” are not part of the core vocabulary. These words were removed from the stop-list because in education they could indeed represent the subject matter at hand, unlike in psychology, where they may be peripheral to some other issue.
4) After modifying the stop-list, I ran the infomap-build command, which reads in all the text files within the corpus and builds a model. This process took about 2 hours to run; the time will vary depending on the amount of text being read and the speed of the computer. The infomap-build program creates a series of word vectors and indexes that provide a basis for rapid searches.

5) The next step is to install the model so that it is permanently accessible. This is accomplished by running the infomap-install program.

6) After installing the model, one may begin making keyword searches against the word-vector indexes. For each topic area, I searched for the documents most related to the topic phrase, making sure to return the same number of results as were assigned manually. For example, if 92 articles are manually classified under “language arts” by the editors, I ask Infomap to return only 92 entries. This makes it possible to compare how similar the two sets of 92 entries are, if at all. The search is performed by running the associate program and redirecting the results into a text file.

7) The results of the analysis were fed back into the SQL Server database that contained the articles and the manually selected categorizations. SQL commands were then run to compare which documents were included manually by the editors and which documents the analysis decided to include.

Certain topics could not be included in the analysis because hyphenated words are not supported. These include “high-stakes” and “at-risk”. The topic “special needs” was also omitted because each word analyzed singly would not produce any meaningful results.

Results

From the 1508 distinct articles that formed the semantic space, 2825 results were requested from the Infomap software (which matches the number of manual category assignments). Of these 2825, Infomap correctly matched 750 documents to their proper category (26.5%).
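
The comparison described in steps 6 and 7 can be sketched in a few lines. The study itself used SQL commands against a SQL Server database; the Python below is only an illustrative stand-in, and every name in it (`percent_correct`, `evaluate`, `fake_search`, the article ids) is hypothetical. It assumes that for each category the search returns exactly as many articles as the editors assigned, and counts how many of those coincide.

```python
def percent_correct(returned_ids, manual_ids):
    """Return (hits, percent), where hits counts articles in both lists."""
    manual = set(manual_ids)
    if not manual:
        return 0, 0.0
    hits = len(manual & set(returned_ids))
    return hits, 100.0 * hits / len(manual)


def evaluate(manual_by_category, search):
    """Score every category, requesting as many results as the editors assigned.

    `search(category, n)` is a hypothetical callable standing in for a
    query against the LSA model; it must return a list of n article ids.
    """
    rows, total_hits, total_manual = [], 0, 0
    for category, manual_ids in manual_by_category.items():
        returned = search(category, len(manual_ids))
        hits, pct = percent_correct(returned, manual_ids)
        rows.append((category, hits, len(manual_ids), round(pct, 2)))
        total_hits += hits
        total_manual += len(manual_ids)
    overall = 100.0 * total_hits / total_manual if total_manual else 0.0
    return rows, round(overall, 2)


# Made-up article ids that reproduce the counts reported for one category:
# 56 manual assignments, of which the search recovers 42.
manual = {"Charters & Vouchers": list(range(56))}


def fake_search(category, n):
    # 42 ids the editors also chose, then ids that no editor assigned.
    return (list(range(42)) + list(range(1000, 2000)))[:n]


rows, overall = evaluate(manual, fake_search)
print(rows)      # [('Charters & Vouchers', 42, 56, 75.0)]
print(overall)   # 75.0
```

Because the number of results requested equals the number of manual assignments, the share of returned articles that are correct and the share of manual articles that are recovered are the same figure, which is why a single “% Correct” value per category is sufficient.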
The most successful categories include “Charters & Vouchers” (75%), “Race and Ethnicity” (62%), and “Libraries” (56%) (see Table 2).

| Name | LSA | Manual | % Correct |
|---|---|---|---|
| Charters & Vouchers | 42 | 56 | 75.00 |
| Race and Ethnicity | 79 | 127 | 62.20 |
| Philanthropy | 5 | 9 | 55.56 |
| Libraries | 10 | 18 | 55.56 |
| Tracking | 2 | 4 | 50.00 |
| Supply and Demand | 8 | 16 | 50.00 |
| Online Publishing | 2 | 4 | 50.00 |
| Standardized Testing | 19 | 39 | 48.72 |
| Teaching Profession | 67 | 148 | 45.27 |
| Philosophy | 50 | 114 | 43.86 |
| Language Arts | 39 | 92 | 42.39 |
| Literacy | 17 | 41 | 41.46 |
| Reform | 54 | 145 | 37.24 |
| Publishing and Communication | 6 | 17 | 35.29 |
| Qualitative Methods | 9 | 26 | 34.62 |
| War and Education | 22 | 64 | 34.38 |
| Politics | 59 | 179 | 32.96 |
| Distance Learning | 9 | 28 | 32.14 |
| Gender and Sexuality | 15 | 47 | 31.91 |
| Leadership | 14 | 44 | 31.82 |
| Early Childhood Development | 13 | 42 | 30.95 |
| Faculty | 15 | 50 | 30.00 |
| Religion | 4 | 14 | 28.57 |
| Standards | 15 | 53 | 28.30 |
| Science | 4 | 15 | 26.67 |
| Parental Influence | 10 | 39 | 25.64 |
| Technology in the Classroom | 9 | 37 | 24.32 |
| Arts | 8 | 35 | 22.86 |
| Cognition | 7 | 31 | 22.58 |
| Pre-School and Child Care | 2 | 9 | 22.22 |
| Transformative Learning | 6 | 28 | 21.43 |
| Program Evaluation | 14 | 66 | 21.21 |
| Health and Nutrition | 2 | 10 | 20.00 |
| Professional Development | 13 | 66 | 19.70 |
| Comparative Education | 7 | 39 | 17.95 |
| Athletes and Academics | 1 | 6 | 16.67 |
| History of Schooling | 11 | 68 | 16.18 |
| Experimental Research | 3 | 21 | 14.29 |
| Urban Education | 9 | 64 | 14.06 |
| Educational Psychology | 12 | 91 | 13.19 |
| Educational Development | 8 | 61 | 13.11 |
| Student Teaching | 1 | 8 | 12.50 |
| Sociology of Education | 20 | 169 | 11.83 |
| Violence | 2 | 18 | 11.11 |
| Alternative Assessment | 5 | 50 | 10.00 |
| Admissions and Tuition | 4 | 40 | 10.00 |
| Survey Research | 1 | 10 | 10.00 |
| Policy and Teaching Practice | 5 | 52 | 9.62 |
| Social Studies | 2 | 26 | 7.69 |
| Technology Education | 1 | 14 | 7.14 |
| Mathematics | 3 | 50 | 6.00 |
| Economics and School-to-work | 1 | 29 | 3.45 |
| Control | 1 | 29 | 3.45 |
| Working Conditions | 2 | 60 | 3.33 |
| Student and Community Life | 1 | 42 | 2.38 |
| Supervision | 0 | 12 | 0.00 |
| Corp. Training and Continuing Ed. | 0 | 12 | 0.00 |
| Grading | 0 | 7 | 0.00 |
| Rural Education | 0 | 5 | 0.00 |
| Secondary Schools | 0 | 11 | 0.00 |
| College Advising | 0 | 9 | 0.00 |
| Peace Studies | 0 | 32 | 0.00 |
| Dropouts | 0 | 7 | 0.00 |
| Independent Schools | 0 | 4 | 0.00 |
| Schools of Education | 0 | 46 | 0.00 |
| Writing for Publication | 0 | 9 | 0.00 |
| Post-Industrial Education | 0 | 11 | 0.00 |
| Totals | 750 | 2825 | |

Table 2: Results of LSA

What might the results matrix indicate about LSA, both in its practical use value and in its plausible psychological foundations? We will first address its use value. It seems clear that LSA works best with an explicit vocabulary. Many of the tests of LSA were conducted using introductory psychology texts, which tend to have a more defined vocabulary than the education discourse. However, in the cases where education’s most explicit vocabulary was in use, as illustrated by the “Charters & Vouchers” category, high match rates resulted (75%). This coincides with other high matches, such as “Philanthropy” (56%), “Libraries” (56%), “Tracking” (50%), and “Supply and Demand” (50%). “Race and Ethnicity” had the second-highest match rate (62%, 79/127); however, one would not necessarily conclude that “Race and Ethnicity” is particular to the education discourse, at least not in the same way that “Tracking” may be. This may illustrate divisions among authors’ conceptual viewpoints: one either writes a paper that is richly laden with issues of ethnicity and diversity, or one avoids the issue altogether. Hence, within the education discourse, “Race and Ethnicity” is a subject unto itself, much in the same way that “Charters and Vouchers” is for the education discourse, or “Operant Conditioning” is for psychology.

The poor performance of the categories with the fewest matches seems to be related to both vocabulary and phraseology. For example, categories that combine two or more words, where each word alone is highly general, such as “Independent Schools”, resulted in low matches.
Because of the way the analysis software decomposes the semantic space into word vectors, it is highly likely that “schools” and “independent” were too general to turn up significant results. The conclusion could be drawn that LSA works best where individual words are specific and do not require phrasing (combining multiple words) to derive the correct semantic meaning. Combining multiple words where each word is specific, such as “Charters and Vouchers” or “Race and Ethnicity”, is not as important as the specificity of a single word (i.e., the specificity of “Charter” or “Voucher”). If LSA were to be used in a practical context, the algorithm would need to be altered so that it treated general words that constitute a phrase (e.g., “Independent Schools”) as a single whole.

Psychology and LSA

In considering the experiment conducted, can we draw any conclusion as to the psychological basis of LSA? Although we can draw no strong conclusions because of a lack of neurological evidence, there are some interesting interpretations one could make. One particularly compelling idea is introduced by Kintsch, Patel, and Ericsson (1999), who relate LSA to long-term working memory (LTWM). Their work concerns the ways in which experts employ their long-term working memory, which is available within a domain of expertise, in conjunction with short-term working memory, which is capacity limited. The authors argue that “superior memory in expert domains is due to LTWM, whereas in non-expert domains LTWM can be of no help” (4). How is LSA related to LTWM? The authors argue that LSA space and LTWM are similar:

We assume that the LSA space is the long-term working memory structure within which automatic knowledge activation in comprehension takes place.
Thus, the memory structure that is responsible for the generation of LTWM is the semantic space: items close in the semantic space are accessible in LTWM, whereas items removed in the semantic space require more elaborate and resource consuming retrieval operations. (10)

The authors extend this notion by introducing the concept of the semantic neighborhood: the set of closely related concepts in the semantic space that are most readily recalled in LTWM. They argue that “the semantic space itself functions like a retrieval structure, making close neighbors of newly generated text propositions automatically available in LTWM, as long as they can be successfully integrated with the existing episodic structure” (15).

In considering the education semantic space that we have constructed using the TC Record corpus, can we see correlations between the ways that semantic neighbors are arranged and the ways in which an education expert would recall such information? To answer this question, we must look at some of the semantic neighborhoods. Because we know that “Charters and Vouchers” has high semantic value owing to the atomicity and specificity of each word, let us view its semantic neighborhood. The neighborhood is retrieved by running the command associate -c all2 voucher charter, which yields the following results:

| Word | Relevance |
|---|---|
| vouchers | 0.85533 |
| voucher | 0.8553 |
| charter | 0.8553 |
| choice | 0.82545 |
| hoxby | 0.7766 |
| cleveland | 0.73171 |
| tax | 0.72868 |
| private | 0.72352 |
| public | 0.66732 |
| foul | 0.66232 |
| competition | 0.65939 |
| charters | 0.65783 |
| cost | 0.64018 |
| operate | 0.63993 |
| opponents | 0.63858 |
| cps | 0.63445 |
| diversion | 0.63192 |
| moe | 0.62014 |
| metcalf | 0.61714 |
| viteritti | 0.61088 |

Table 3: The Semantic Neighborhood for “Charters and Vouchers”

The semantic space for “Charters and Vouchers” is highly compelling, especially when one considers the ways in which an expert would use LTWM in relation to this topic.
For example, the most relevant term after the variations of “charter” and “voucher” is the word “choice”, which is really the core of the issue. The next word is “hoxby”, the last name of Caroline M. Hoxby, a professor at Harvard University who specializes in school choice. This is followed by “cleveland”, which refers to the Cleveland Voucher Study, and then by “tax”, “private”, and “public”, all highly relevant terms in this area. Hence, it does seem that the semantic space derived from LSA mimics the way that an expert would use LTWM. For example, if someone asked an expert about charters and vouchers, she would be quick to recall issues of choice, taxation, and public versus private schooling, as well as important research (the Cleveland study) and important people in the field (Caroline M. Hoxby). The same holds for “Race and Ethnicity”, which creates a highly relevant semantic space with many related concepts (identity, stereotypes, status, ethnocultural):

| Word | Relevance |
|---|---|
| ethnicity | 0.931 |
| race | 0.931 |
| identity | 0.81423 |
| racial | 0.80893 |
| differences | 0.73978 |
| dominant | 0.72314 |
| native | 0.70456 |
| stereotypes | 0.69805 |
| racism | 0.69333 |
| ethnic | 0.68762 |
| status | 0.68694 |
| inferiorized | 0.68104 |
| pseudo | 0.68065 |
| african | 0.67231 |
| identities | 0.66333 |
| culture | 0.66236 |
| disadvantage | 0.6622 |
| ses | 0.65739 |
| feinberg's | 0.65686 |
| ethnocultural | 0.65322 |

Table 4: The Semantic Neighborhood for “Race and Ethnicity”

For categories where LSA did not closely correspond with manual selection, such as “Schools of Education”, the semantic neighborhood was much weaker and less relevant:

| Word | Relevance |
|---|---|
| public | 0.81857 |
| education | 0.80009 |
| schools | 0.80009 |
| advocates | 0.6241 |
| private | 0.62262 |
| rugby | 0.60444 |
| marshaling | 0.58259 |
| unaccountable | 0.57646 |
| mightily | 0.56911 |
| popular | 0.55268 |
| nonrepression | 0.54957 |
| subsidize | 0.54627 |
| lenin | 0.54432 |
| school | 0.54382 |
| privatization | 0.54269 |
| schooling | 0.54218 |
| borrowman | 0.53971 |
| proponents | 0.53948 |
| secondary | 0.53218 |
| campaigns | 0.53132 |

Table 5: The Semantic Neighborhood for “Schools of Education”

The semantic neighborhood is weak because the word “of” is not considered, nor is the word ordering, which clearly makes a large difference in this case. This limitation is acknowledged by Landauer, Foltz, and Laham, who note that LSA does not take word order into account (5).

Conclusion

In conclusion, LSA has a practical use value and can simulate psychological processes, particularly long-term working memory. The operative term in this description is “simulate”: although LSA can create semantic spaces that mirror an expert’s LTWM in certain cases, this should not imply that LSA has a true psychological basis. Uncovering such a basis would at least require neurological study, which is beyond the scope (and expertise) of this writer. Both its use value and its ability to simulate an expert’s LTWM are affected by many factors, most notably vocabulary and phraseology. For example, words that are specific and do not require phrasing to derive semantic value tend to yield better semantic neighbors and to organize documents within categories more accurately (e.g., “Charters & Vouchers”, “Race and Ethnicity”). Phrases in which the individual words are highly general, in which word arrangement is critical, or which use connectors (e.g., “of”), such as “Schools of Education”, produce less compelling results.

References

Kintsch, W., Patel, V. L., & Ericsson, K. A. (1999). The role of long-term working memory in text comprehension. Psychologia, 42, 186-198.

Landauer, T. K., Foltz, P. W., & Laham, D. (1998). Introduction to Latent Semantic Analysis. Discourse Processes, 25, 259-284.