Protocols for Cognitive Task Analysis

by

Robert R. Hoffman

Florida Institute for Human and Machine Cognition

Beth Crandall & Gary Klein

Klein Associates Division of ARA

including-

Goal Directed Task Analysis

by

Debra G. Jones and Mica R. Endsley

SA Technologies

This document is intended to empower people in the conduct of Cognitive Task Analysis and

Cognitive Field Research.

All rights reserved. Copyright 2008 by R. R. Hoffman, B. Crandall, G. Klein, D. G. Jones

and M. R. Endsley. Individuals or organizations wishing to use or cite this material

should request permission from:

Robert R. Hoffman, Ph.D.,

Institute for Human & Machine Cognition

40 South Alcaniz St.

Pensacola, FL 32502-6008

rhoffman@ihmc.us

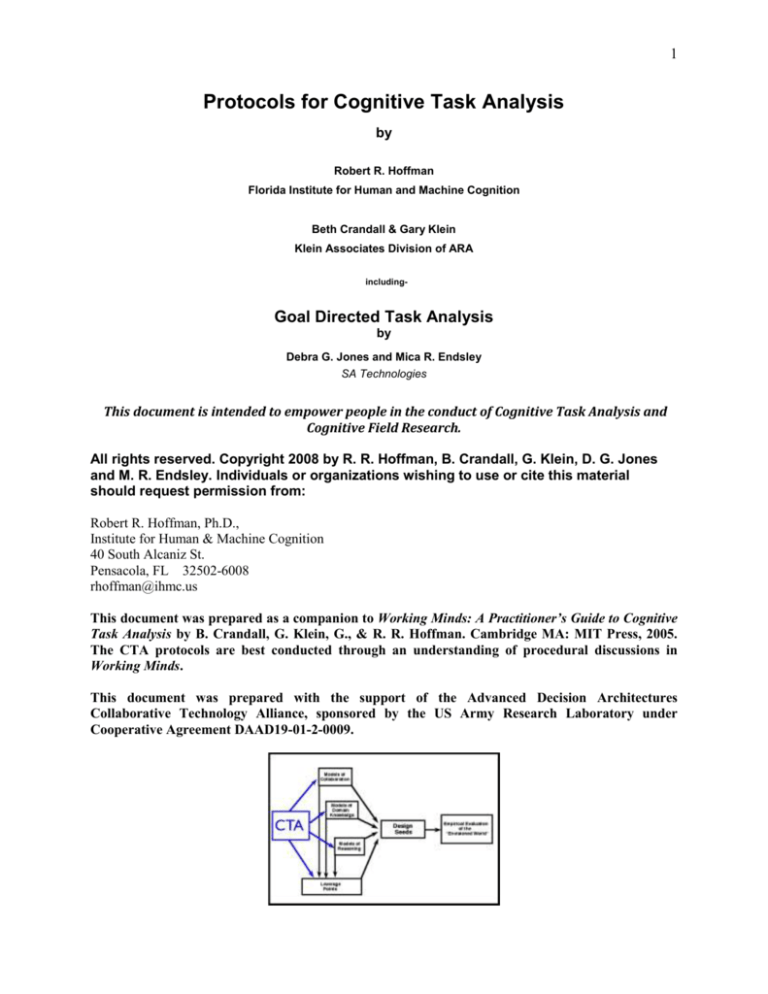

This document was prepared as a companion to Working Minds: A Practitioner’s Guide to Cognitive

Task Analysis by B. Crandall, G. Klein, & R. R. Hoffman. Cambridge MA: MIT Press, 2006.

The CTA protocols are best conducted with an understanding of the procedural discussions in

Working Minds.

This document was prepared with the support of the Advanced Decision Architectures

Collaborative Technology Alliance, sponsored by the US Army Research Laboratory under

Cooperative Agreement DAAD19-01-2-0009.

1. Introduction

2. Bootstrapping Methods

2.1. Documentation Analysis

2.2. The Recent Case Walkthrough

2.3. The Knowledge Audit

2.4. Client Interviews

3. Proficiency Scaling

3.1. General Introduction

3.2. Career Interviews

3.3. Sociometry

3.3.1. General Protocol Notes

3.3.2. General Forms

3.3.3. Ratings Items

3.3.4. Sorting Items

3.3.5. Communication Patterns

3.3.5.1. Within-groups

3.3.5.2. Within-domains

3.4. Cognitive Styles Analysis

4. Workplace Observations and Interviews

4.1. General Introduction

4.2. Workspace Analysis

4.3. Activities Observations

4.4. Locations Analysis

4.5. Roles Analysis

4.6. Decision Requirements Analysis

4.7. Action Requirements Analysis

4.8. SOP Analysis

5. Modeling Practitioner Reasoning

5.1. Protocol Analysis

5.1.1. General Introduction

5.1.2. Materials

5.1.3. Coding Schemes

5.1.4. Effort

5.1.5. Coding Schemes Examples

5.1.6. Coding Verification

5.1.7. Procedural Variations and Short-cuts

5.1.8. A Final Word

5.2. Cognitive Modeling Procedure

5.2.1. Introduction and Background

5.2.2. Protocol Notes

5.2.2.1. Preparation

5.2.2.2. Round 1

5.2.2.3. Round 2

5.2.2.4. Round 3

5.2.2.5. Products

5.2.3. Templates

5.2.3.1. Round 1

5.2.3.2. Round 2

5.2.3.3. Round 3

6. The Critical Decision Method

6.1. General Description of the CDM Procedure

6.1.0. Preparation

6.1.1. Step One: Incident Selection

6.1.2. Step Two: Incident Recall

6.1.3. Step Three: Incident Retelling

6.1.4. Step Four: Timeline Verification and Decision Point Identification

6.1.5. Step Five: Deepening

6.1.6. Step Six: "What-if" Queries

6.2. Protocol Notes for the Researcher

6.3. Boiler Plate Data Collection Forms

6.3.0. Cover Form

6.3.1. Step One: Incident Selection

6.3.2. Step Two: Incident Recall

6.3.3. Step Three: Incident Retelling

6.3.4. Step Four: Timeline Verification and Decision Point Identification

6.3.5. Step Five: Deepening

6.3.6. Step Six: "What-if" Queries

6.3.7. Final Integration

6.3.8. Decision Requirements Table

7. Goal-Directed Task Analysis

7.1. Introduction

7.2. Elements of the GDTA

7.2.1. Goals

7.2.2. Decisions

7.2.3. Situation Awareness Requirements

7.3. Documenting the GDTA

7.3.1. Goal Hierarchy

7.3.2. Relational Hierarchy

7.3.3. Identifying Goals

7.3.4. Defining Decisions

7.3.5. Delineating SA requirements

7.4. GDTA Methodology

7.4.1. Step 1: Review Domain

7.4.2. Step 2: Initial interviews

7.4.3. Step 3: Develop the goal hierarchy

7.4.4. Step 4: Identify decisions and SA requirements

7.4.5. Step 5: Additional interviews / SME review of GDTA

7.4.6. Step 6: Revise the GDTA

7.4.7. Step 7: Repeat steps 5 & 6

7.4.8. Step 8: Validate the GDTA

7.5. Simplifying the Process

7.6. Conclusion

8. References

1. Introduction

The purpose of this book is to lay out guidance, including sample forms and instructions, for

conducting a variety of Cognitive Task Analysis and Cognitive Field Research methods

(including both knowledge elicitation and knowledge modeling procedures) that have a proven

track record of utility in service of both expertise studies and the design of new technologies.

Our presentation of guidance includes protocol notes. These are ideas, lessons learned, and

cautionary tales for the researcher. We also present Templates—prepared forms for various

tasks.

We describe methods useful in bootstrapping, which involves learning about the domain and the

needs of the clients. We describe methods used in proficiency scaling and procedures for

modeling knowledge and reasoning. We describe methods coming from the academic laboratory

(e.g., Protocol Analysis), methods coming from applied research (e.g., Sociometry) and methods

coming from Cognitive Anthropology (e.g., Workpatterns Observations).

Along the way we present lessons learned and rules of thumb that arose in the course of

conducting a number of projects, both small-scale and large-scale. The guidance offered here

spans the entire gamut—going all the way from general advice about records-keeping, to detailed

advice about audio recording knowledge elicitation interviews; going all the way from general

issues involved in protocol analysis, to detailed advice about the problems and obstacles

involved in managing large numbers of multi-media resources for knowledge models.

Supporting material, including general guidance for the conduct of interviews and detailed

guidance for the Critical Decision Method, can be found in: Crandall, B., Klein, G., & Hoffman,

R. R. (2006). Working Minds: A Practitioner’s Guide to Cognitive Task Analysis. Cambridge,

MA: MIT Press.

2. CTA Bootstrapping Methods Protocols and Templates

In many projects that use Cognitive Work Analysis methodologies, one of the first steps is

bootstrapping, in which the analysts familiarize themselves with the domain.

2.1. Documentation Analysis

Bootstrapping typically involves an analysis of documents (manuals, texts, technical reports,

etc.). The literature of research and applications of knowledge elicitation converges on the notion

that documentation analysis can be a critically important method, one that should be utilized as a

matter of necessity (Hoffman, 1987; Hoffman, Shadbolt, Burton & Klein, 1995). Documentation

analysis is relied upon heavily in the Work Domain Analysis phase of Cognitive Work Analysis

(see Hoffman & Lintern, 2005; Vicente, 1999).

Documentation Analysis can be a time-consuming process, but can sometimes be indispensable

in knowledge elicitation (Kolodner, 1983). In a study of aerial photo interpreters (Hoffman,

1987), interviews about the process of terrain analysis began only after an analysis of the readily available basic knowledge of concepts and definitions. To take up the expert's time by asking

questions such as "What is limestone?" would have made no sense.

Although it is usually considered to be a part of bootstrapping, documentation analysis invariably

occurs throughout the entire research programme. For example, in the weather forecasting case

study (Hoffman, Coffey, & Ford, 2000), the bootstrapping process focused on an analysis of

published literatures and technical reports that made reference to the cognition of forecasters.

That process resulted in the creation of the project guidance document. However, documentation

analyses of other types occurred throughout the remainder of the project—analysis of records of

weather forecasting case studies, analysis of Standard Operating Procedures documents, analysis

of the Local forecasting Handbooks, etc.

Analysis of documents may range from underlining and note-taking to detailed propositional or

content analysis according to functional categories. Documentation Analysis can be conducted

for a number of purposes or combinations of purposes. Rather than just having information flow

from documents into the researcher's understanding, the analysis of the documents can involve

specific procedures that generate records or analyses of the knowledge contained in the

documents. Documentation Analysis can:

- Be suggestive of the reasoning of domain practitioners, and hence contribute to the forging of reasoning models.

- Be useful in the attempt to construct knowledge models, since the literature may include both useful categories for domain knowledge and important specific "tid-bits" of domain knowledge. In some domains, texts and manuals contain a great deal of specific information about domain knowledge.

- Be useful in the identification of leverage points, that is, aspects of the work where even a modest infusion of or improvement in technology might result in a proportionately greater improvement in the work.

Often, "Standard Operating Procedures" documents are a source of information for

bootstrapping. However, if one wants to use an analysis of SOP documents for other purposes,

(e.g., as a way of verifying the Decision Requirements analysis), one may hold off on even

looking at SOP documents during the bootstrapping phase. Therefore, we include the protocol and

template for SOP analysis later in this document, in the category of "Workplace Observations

and Interviews."

The uses and strengths of documentation analysis notwithstanding, the limitations must also be

pointed out. Practitioners possess knowledge and strategies that do not appear in the documents

and task descriptions and, in some cases at least, could not possibly be documented. Even in

Work Domain Analysis, which is heavily oriented towards Documentation Analysis, interactions

with experts are used to confirm and refine the Abstraction-Decomposition Analyses. In the

weather forecasting project (Hoffman, Coffey, & Carnot, 2000) an expert told how she predicted

the lifting of fog. She would look out toward the downtown and see how many floors above

ground level she could count before the floors got lost in the fog deck. Her reasoning relied on a

heuristic of the form, "If I cannot see the 10th floor by 10AM, pilots will not be able to take off

until after lunchtime." Such a heuristic had great value, but is hardly the sort of thing that would

be allowed in a formal standard operating procedure document. Many observations have been

made of how engineers in process control bend rules and deviate from mandated procedures so

that they can more effectively get their jobs done (see Koopman & Hoffman, 2003). We would

hasten to generalize by saying that all practitioners who work in complex sociotechnical contexts

possess knowledge and reasoning strategies that are not captured in existing procedures or

documents, many of which represent (naughty) departures from what those experts are supposed

to do or to believe (Johnston, 2003; McDonald, Corrigan, & Ward, 2002).

2.2. The Recent Case Walkthrough

2.2.1. Protocol Notes

The Recent Case Walkthrough is an abbreviated version of the Critical Decision Method, and is

primarily aimed at:

- Bootstrapping the Analyst into the domain

- Establishing rapport with the Participant

The method can also lead to the identification of potential leverage points, a tentative notion of

practitioner styles, or tentative ideas about other aspects of cognitive work.

2.2.2. Template

"The Recent Case Walkthrough"

Interviewer

Participant

Start time

Finish time

Instructions

Please walk me through your most recent problem.

When was it?

What were the goals you had to achieve?

What did you do, step by step?

Narrative

(enlarge this cell as needed)

Build a timeline

| TIME | EVENT |
|---|---|
|  |  |

(Duplicate and expand these rows as needed)

Revise the Timeline

Instructions:

Now let's go over this timeline. I'd like you to suggest corrections and additions.

| Time | Event |
|---|---|
|  |  |

(Duplicate and expand these rows as needed)

Deepening

Instructions:

Now I'd like to go through this time line a step at a time and ask some questions.

Information

- What information did you need or use to make this judgment?
- Where did you get this information?
- What did you do with this information?

Mental Modeling

- As you went through the process of understanding this situation, did you build a conceptual model of the problem scenario?
- Did you try to imagine the important causal events and principles?
- Did you make a spatial picture in your head?
- Can you draw me an example of what it looks like?

Knowledge Base

- In what ways did you rely on your knowledge of this sort of case?
- How did this case relate to typical cases you have encountered?
- How did you use your knowledge of typical patterns?

Guidance

- At what point did you look at any sort of guidance?
- Why did you look at the guidance?
- How did you know if you could trust the guidance?
- How do you know which guidance to trust?
- Did you need to modify the guidance?
- When you modify the guidance, what information do you need or use?
- What do you do with that information?

Legacy Procedures

- What forms do you have to complete, or what specific products do you have to produce?

Deepening Form

| Timeline Entry | Probe | Response |
|---|---|---|
|  |  |  |

(Duplicate and expand these rows as needed)
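For analysts who keep their notes electronically, the template above can be mirrored in a small record structure. The following is only a sketch under stated assumptions: the class names, field names, and sample entries are hypothetical and are not part of the protocol. It shows one way to keep the timeline, the deepening probes, and the responses together, and to list timeline entries that have not yet been deepened.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ProbeResponse:
    category: str  # probe category from the template, e.g. "Information"
    probe: str     # the question asked
    response: str  # the Participant's answer, as noted by the Interviewer


@dataclass
class TimelineEntry:
    time: str      # kept as free text; Participants may give only rough times
    event: str
    deepening: List[ProbeResponse] = field(default_factory=list)


@dataclass
class WalkthroughRecord:
    interviewer: str
    participant: str
    narrative: str = ""
    timeline: List[TimelineEntry] = field(default_factory=list)

    def add_event(self, time: str, event: str) -> TimelineEntry:
        """Append a new timeline row and return it for later deepening."""
        entry = TimelineEntry(time, event)
        self.timeline.append(entry)
        return entry

    def entries_without_probes(self) -> List[TimelineEntry]:
        """Timeline entries that have not yet been deepened."""
        return [e for e in self.timeline if not e.deepening]


# Hypothetical session data, for illustration only.
record = WalkthroughRecord(interviewer="Analyst A", participant="Participant 1")
first = record.add_event("08:00", "Received the request")
record.add_event("08:30", "Reviewed available guidance")
first.deepening.append(
    ProbeResponse("Information", "Where did you get this information?", "From the shift log")
)
remaining = [e.event for e in record.entries_without_probes()]
print(remaining)
```

Keeping the probes attached to individual timeline entries makes it easy to see, during the session, which steps still need to be revisited.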

2.3. The Knowledge Audit

2.3.1. Template

"The Knowledge Audit"

Interviewer

Participant

Start time

Finish time

Your work involves a need to analyze cases in great detail. But we know that experts are also able to

maintain a sense of the "big picture." Can you give me an example of what is important about the big

picture for (your, this) task? What are the major elements you have to know and keep track of?

(Expand these rows, as needed)

When you are conducting (this) task, are there ways of working smart, or accomplishing more with

less--ways that you have found especially useful?

What are some examples when you have improvised on this task, or noticed an opportunity to do

something more quickly or better, and then followed up on it?

Have there been times when the data or the equipment pointed in one direction, but your own judgment

told you to do something else? Or when you had to rely on experience to avoid being led astray by

equipment or the data?

Can you think of a situation when you realized that you had to change what you were doing in order to

get the job done?

Can you recall an instance where you spotted a deviation from the norm or recognized that something

was amiss?

Have you ever had experiences where part of a situation just "popped" out at you?--a time when you

walked into a situation and knew exactly how things got there and where they were headed?

Experts are able to detect cues and see meaningful patterns that novices cannot see. Can you tell me

about a time when you predicted something that others did not, or where you noticed things that others

did not catch? Were you right? Why (or why not)?

Experts can predict the unusual. I know this happens all the time, but my guess is that the more

experience you have, the more accurate your seemingly unusual judgments become. Can you remember

a time when you were able to detect that something unusual happened?

2.4. Client Interviews

2.4.1. Protocol Notes

The "clients" are the individuals or organizations that use the products that come from the

domain practitioners (or their organizations). For example, new technologies might be designed

to assist weather forecasters (who would be the "end-users" of the systems) whose job it is to

produce forecasts to assist aviators. The aviators would be the "clients."

One might make a great tool to help weather forecasters, but if it does not help the forecasters

help their clients, it may end up an instance of how good intentions can lead to hobbled

technologies.

For many projects in which CTA plays a role, it is important to identify Clients' needs (for

information, planning, decision-making, etc.), and understand the differing needs and

requirements of different Clients.

Some “Tips”

Keep an eye out for inadequacies in the standard forms, formats, and procedures used in the User-Client exchanges. Keep an eye out for adaptation (including local kluges and work-arounds).

It can be useful to use Knowledge Audit probes in the Client Interviews. One need not use all of

the probe questions that are included in the Template, below.

2.4.2. Template

"Client Interviews"

Analyst:

Participant

Date

Start time

Finish time

Participant Age

Participant Job designation (or duty assignment), and title (or rank)

How many years have you been ___________________ ?

What kinds of projects have you been involved in?

(Expand the cells, as needed)

Have you had any training in (provider's domain)?

What is the range of experience you have had at (provider's domain or products)?

What is your current (job/project)?

Can you give me an example of a project where (provider information/services/products) had a

big impact?

Can you give me an example of a project where the provider's (products/information/services)

made a big difference in success or failure?

What is it that you like about the (products/information/services) that the provider gives you?

Can you give me an example of a project where you were highly confident in the provider's

(products/services/information)?

Can you give me an example of a project where you were highly skeptical of the provider's

(products/services/information)?

How do you use the provider's (products/services/information) during your projects?

Have you ever been in a situation where you felt that you understood the (provider's domain)

better than the provider?

Have you ever felt that you needed a kind of (product/service/information) that you are not

given?

In your opinion, what characterizes a good provider?

What is the value of having providers who are highly skilled?

Can you think of any things you could do to help the providers get better?

3. Proficiency Scaling Methods Protocols and Templates

3.1. General Introduction

The psychological and sociological study of interpersonal judgments and attributions dates to the

early days of both fields (e.g., Thorndike, 1918; see Mieg, 2001). In the past two decades, the

areas of “Knowledge Elicitation” and “Expertise Studies” have been focused specifically on

proficiency scaling, primarily for the purpose of identifying experts. The rich understanding of

expert-level knowledge and reasoning provides a "gold standard" for domain knowledge and

reasoning strategies. The rich understanding of journeymen, apprentices, and novices informs the

researcher concerning training needs, the adaptation of software systems to individual user

models, etc.

In parallel, the areas of the Sociology of Scientific Knowledge and Cognitive Anthropology

have been focusing some effort on studies of work in the modern technical workplace and thus

have brought to bear methods of ethnography. This has also entailed the study of proficient skill.

This paradigm has been referred to as the study of "everyday cognition" and "cognition in the

wild" (Hutchins, 1995a,b; Lave, 1988; Scribner, 1984; Suchman, 1993). Researchers have

studied a great many domains of proficiency, including traditional farming methods used in Peru,

photocopier repair, the activities of military command teams, the social interactions of

collaborative teams of designers, financiers, climatologists, physicists, and engineers, and so on

(Collins, 1985; Cross, Christians, and Dorst, 1996; Fleck and Williams, 1996; Greenbaum and

Kyng, 1991; Hutchins, 1995; Lynch, 1991; Orr, 1985). Classic studies in this paradigm include:

- Hutchins' (1990) studies of navigation (by both individuals and teams, using both culturally traditional methods and modern technology-based methods), which address the interplay of navigation tools and collaborative activity in maritime navigation,

- Hutchins' (1995b) study of flight crew collaboration and team work,

- Orr's study of how photocopier repair technicians acquire knowledge and skill by sharing their "war stories" with one another,

- Lave's (1988) studies of traditional methods used in crafts such as tailoring, and the nature of skills used in everyday life (e.g., math skills used during supermarket shopping).

Research in the area of the "sociology of scientific knowledge" (e.g., Collins, 1997; Fleck and

Williams, 1996; Knorr-Cetina, 1981; Knorr-Cetina and Mulkay, 1983) also falls within this

paradigm, and has involved detailed analyses of science as it is practiced, e.g., the history of the

development of new lasers and of gravity wave detectors, the role of consensus in decisions

about what makes for proper practice, breakdowns in the attempt to transmit skill from one

laboratory to another, failures in science or in the attempt to apply new technology, etc.

In psychology and cognitive science, concern with the question of how to define expertise

(Hoffman, 1998) led to an awareness that determination of who is an expert in a given domain

can require its own empirical effort. Proficiency scaling is the attempt to forge a domain- and

organizationally-appropriate scale for distinguishing levels of proficiency. Some alternative

methods are described in Table 3.1.

Table 3.1. Some methods that can contribute data for the creation of a proficiency scale.

| Method | Yield | Example |
|---|---|---|
| In-depth career interviews about education, training, etc. | Ideas about breadth and depth of experience; estimate of hours of experience. | Weather forecasting in the armed services, for instance, involves duty assignments having regular hours and regular job or task assignments that can be tracked across entire careers. Amount of time spent at actual forecasting or forecasting-related tasks can be estimated with some confidence (Hoffman, 1991). |
| Professional standards or licensing | Ideas about what it takes for individuals to reach the top of their field. | The study of weather forecasters involved senior meteorologists from the US National Oceanic and Atmospheric Administration and the National Weather Service (Hoffman, Coffey, and Ford, 2000). One participant was one of the forecasters for Space Shuttle launches; another was one of the designers of the first meteorological satellites. |
| Measures of performance at the familiar tasks | Can be used for convergence on scales determined by other methods. | Weather forecasting is again a case in point since records can show for each forecaster the relation between their forecasts and the actual weather. In fact, this is routinely tracked in forecasting offices by the measurement of "forecast skill scores" (see Hoffman and Trafton, forthcoming). |
| Social Interaction Analysis | Proficiency levels in some group of practitioners or within some community of practice (Mieg, 2000; Stein, 1997). | In a project on knowledge preservation for the electric power utilities (Hoffman and Hanes, 2003), experts at particular jobs (e.g., maintenance and repair of large turbines, monitoring and control of nuclear chemical reactions, etc.) were readily identified by plant managers, trainers, and engineers. The individuals identified as experts had been performing their jobs for years and were known among company personnel as "the" person in their specialization: "If there was that kind of problem I'd go to Ted. He's the turbine guy." |

If research or domain practice is predicated on any notion of expertise (e.g., a need to build a

new decision support system that will help experts but can also be used to teach apprentices),

then it is necessary to have some sort of empirical anchor on what it really means for a person to

be an "expert," say, or an "apprentice." A proficiency scale should be based on more than one

method, and ideally should be based on at least three. We refer to this as the “three legs of a

tripod.”

Social Interaction Analysis, the result of which is a sociogram, is perhaps the lesser known of the

methods. A sociogram, which represents interaction patterns between people (e.g., frequent

interactions), is used to study group clustering, communication patterns, and workflows and

processes. For Social Interaction Analysis, multiple individuals within an organization or a

community of practice are interviewed. Practitioners might be asked, for example, "If you have a

problem of type x, who would you go to for advice?" or they might be asked to sort cards

bearing the names of other domain practitioners into piles according to one or another skill

dimension or knowledge category.
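As a rough illustration of how answers to a question such as "who would you go to for advice?" might be turned into a sociogram, the sketch below builds a directed edge list and counts how many distinct colleagues nominate each person. The function names, the tuple layout, and the participant labels are hypothetical, introduced only for this example; they are not a prescribed analysis procedure.

```python
from collections import Counter, defaultdict


def build_sociogram(nominations):
    """Build directed edges (asker -> advisor) from interview answers.

    nominations: iterable of (asker, advisor, problem_type) tuples.
    Returns a dict mapping (asker, advisor) to the set of problem types
    for which the asker named that advisor. Self-nominations are ignored.
    """
    edges = defaultdict(set)
    for asker, advisor, problem_type in nominations:
        if asker != advisor:
            edges[(asker, advisor)].add(problem_type)
    return dict(edges)


def nomination_counts(edges):
    """Count how many distinct colleagues nominate each person."""
    return Counter(advisor for (_asker, advisor) in edges)


# Hypothetical interview answers, for illustration only.
answers = [
    ("P1", "P3", "problem type x"),
    ("P2", "P3", "problem type x"),
    ("P4", "P3", "problem type y"),
    ("P1", "P2", "problem type y"),
    ("P3", "P3", "problem type x"),  # self-nomination, dropped
]
edges = build_sociogram(answers)
counts = nomination_counts(edges)
print(counts.most_common())
```

A high nomination count is only one input to a proficiency scale; it indicates who colleagues turn to, not why, so it is best read alongside the other methods.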

Hoffman, Ford and Coffey (2000) suggested that proficiency scaling for a given project should

be based on at least three of the methods listed in Table 3.1. It is important to create a scale that

is both domain- and organizationally-appropriate, and that considers the full range of proficiency.

A rule of thumb in psychology is that it takes 10 years of intensive practice to become an expert

(Ericsson, Charness, Feltovich, and Hoffman, 2005). Assuming six hours of concentrated

practice per day, five days per week, for 40 weeks per year, this would mean 12,000 hours of practice at domain

tasks. While convenient, such rules of thumb are just that, rules of thumb. In the project on

weather forecasting (Hoffman, Coffey and Ford, 2000), the proficiency scale distinguished three

levels: experts, journeymen, and apprentices, each of which was further distinguished by three

levels of seniority, up to the senior experts who had as much as 50,000 hours of practice and

experience at domain tasks.
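The rule-of-thumb arithmetic can be checked with a short sketch. It treats the practice week as five days, the value under which ten years at six hours per day and 40 weeks per year comes to 12,000 hours. The function names are illustrative, and the second calculation (how long 50,000 hours would take at the same rate) is an extrapolation added here, not a figure from the weather forecasting project.

```python
def practice_hours(years, weeks_per_year, days_per_week, hours_per_day):
    """Total hours of concentrated practice accumulated over a career span."""
    return years * weeks_per_year * days_per_week * hours_per_day


def years_needed(total_hours, weeks_per_year, days_per_week, hours_per_day):
    """Years required to accumulate total_hours at a fixed practice rate."""
    return total_hours / (weeks_per_year * days_per_week * hours_per_day)


# Ten years, 40 weeks per year, an assumed five days per week, six hours per day.
rule_of_thumb = practice_hours(10, 40, 5, 6)

# At the same assumed rate, how many years would 50,000 hours take?
senior_expert_years = years_needed(50_000, 40, 5, 6)

print(rule_of_thumb)                  # 12000
print(round(senior_expert_years, 1))  # 41.7
```

The second figure shows that hour totals of this size imply a practice rate higher than the rule of thumb, a longer career, or both, which is one more reason to treat such estimates as rough guides.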

Following Hoffman (1988; Hoffman, Shadbolt, Burton, and Klein, 1995; Hoffman, 1998) we

rely on a modern variation on the classification scheme used in the craft guilds (Renard, 1968).

Table 3.2. Basic proficiency categories (adapted from Hoffman, 1998).

| Category | Description |
|---|---|
| Naive | One who is totally ignorant of a domain. |
| Novice | Literally, someone who is new: a probationary member. There has been some (“minimal”) exposure to the domain. |
| Initiate | Literally, someone who has been through an initiation ceremony: a novice who has begun introductory instruction. |
| Apprentice | Literally, one who is learning: a student undergoing a program of instruction beyond the introductory level. Traditionally, the apprentice is immersed in the domain by living with and assisting someone at a higher level. The length of an apprenticeship depends on the domain, ranging from about one to 12 years in the craft guilds. |
| Journeyman | Literally, a person who can perform a day’s labor unsupervised, although working under orders. An experienced and reliable worker, or one who has achieved a level of competence. It is possible to remain at this level for life. |
| Expert | The distinguished or brilliant journeyman, highly regarded by peers, whose judgments are uncommonly accurate and reliable, whose performance shows consummate skill and economy of effort, and who can deal effectively with certain types of rare or “tough” cases. Also, an expert is one who has special skills or knowledge derived from extensive experience with subdomains. |
| Master | Traditionally, a master is any journeyman or expert who is also qualified to teach those at a lower level. Traditionally, a master is one of an elite group of experts whose judgments set the regulations, standards, or ideals. Also, a master can be that expert who is regarded by the other experts as being “the” expert, or the “real” expert, especially with regard to sub-domain knowledge. |

This is a useful first step, in that it adds some explanatory weight to the category labels, but needs

refinement when actually applied in particular projects. In the weather forecasting project

(Hoffman, Coffey and Ford, 2000) it was necessary to refer to Senior and Junior ranks within the

expert and journeyman categories. (For details, see Hoffman, Trafton, and Roebber, 2009).

The Table entries fall a step short of being operational definitions. They are suggestive, however,

and this is where the specific methods of proficiency scaling come in: Career evaluations (hours

of experience, breadth and depth of experience), social evaluations and attributions (sociograms),

and performance evaluations.

Mere years of experience do not guarantee expertise, however. Experience scaling can also

involve an attempt to gauge the practitioners' breadth of experience—the different contexts or

sub-domains they have worked in, the range of tasks they have conducted, etc. Deeper

experience (i.e., more years at the job) affords more opportunities to acquire broader experience.

Hence, depth and breadth of experience should be correlated. But they are not necessarily

correlated, and so examination of both breadth and depth of experience is always wise.

Thus, one comes to a resolution that age and proficiency are generally related, that is, partially

correlated. This is captured in Table 3.3.

Table 3.3. Age and proficiency are, generally speaking, partially correlated.

| Stage | Notes, ordered from earlier to later in the lifespan (the original chart spans ages 10 to 60) |
|---|---|
| Novice | Learning can commence at any age (e.g., the school child who is avid about dinosaurs; the adult professional who undergoes job retraining). Achievement of expertise in significant domains may not be possible. |
| Intern and Apprentice | Individuals less than 18 yrs of age are rarely considered appropriate as Apprentices. Can commence at any age. Achievement of expertise in significant domains may not be possible. |
| Journeyman | It is possible to achieve journeyman status (e.g., chess, computer hacking, sports). Is typically achieved in mid- to late-20s, but development may go no further. |
| Expert | It is possible to achieve expertise (e.g., chess, computer hacking, sports). Most typical for 35 yrs of age and beyond. |
| Master | Is rarely achieved early in a career. It is possible to achieve midcareer. Most typical of seniors. |

To summarize, a rich understanding of expert-level knowledge and reasoning provides a "gold

standard" for domain knowledge and reasoning strategies. The rich understanding of

journeymen, apprentices, and novices informs the researcher concerning training needs, the

adaptation of software systems to individual user models, etc.

A number of CTA methods can support the process of proficiency scaling. Proficiency scaling is

the attempt to forge a domain- and organizationally-appropriate scale for distinguishing levels of

proficiency. A proficiency scale can be based on:

1. Estimates of experience extent, breadth, and depth based on results from a Career

Interview,

2. Evaluations of performance based on performance measures,

3. Ratings and skill category sortings from a sociogram.
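One way to keep track of how many of these sources support a given participant's placement is a simple evidence record. The sketch below is an assumption-laden illustration: the class name, field names, level labels, and the convergence rule (all available methods suggest the same level) are hypothetical and are not the scoring procedure of any project cited here.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ProficiencyEvidence:
    participant: str
    career_interview: Optional[str] = None  # level suggested by experience estimates
    performance: Optional[str] = None       # level suggested by performance measures
    sociogram: Optional[str] = None         # level suggested by ratings and sortings

    def legs(self) -> Dict[str, str]:
        """Methods that have actually contributed a level for this participant."""
        candidates = {
            "career_interview": self.career_interview,
            "performance": self.performance,
            "sociogram": self.sociogram,
        }
        return {name: level for name, level in candidates.items() if level is not None}

    def converges(self) -> bool:
        """True when at least two methods are available and all of them agree."""
        levels = self.legs()
        return len(levels) >= 2 and len(set(levels.values())) == 1


# Hypothetical participants, for illustration only.
a = ProficiencyEvidence("Participant 1", "expert", "expert", "expert")
b = ProficiencyEvidence("Participant 2", "journeyman", None, "expert")

print(len(a.legs()), a.converges())
print(len(b.legs()), b.converges())
```

When the methods disagree, as for the second participant, the disagreement itself is informative and usually calls for a closer look rather than an averaged score.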

If research is predicated on any notion of expertise (e.g., a need to build a new decision support

system that will help experts but can also be used to teach apprentices), then it is necessary to

have some sort of empirical anchor on what it really means for a person to be an "expert," say, or

an "apprentice." Ideally, a proficiency scale will be based on more than one method.

3.2. Career Interviews

3.2.1. General Protocol Notes

Important things the Interviewer needs to accomplish:

- Convey respect for the Participant for their job and the contribution their job represents.

- Convey respect for the Participant for their skill level and accomplishments.

- Convey a desire to help the Participant by learning about their goals and concerns.

- Mitigate any concerns that the Participant might have that the Interviewer is there to finger-point or disrupt the work, or broker people's agendas.

- Prove that the Interviewer has done some homework, and knows something about the Participant’s domain.

Important things the Interviewer needs to learn:

What it takes for a practitioner in the domain to achieve expertise.

The range of kinds of experience (breadth and depth) that characterize the most highly experienced practitioners.

Acquire a copy of the participant's vita or resume and append it to the interview data.

Selection of Participants can depend on a host of factors, ranging from travel and availability

issues to any need or requirement there might be to identify and interview experts. Whatever the

criteria or constraints, it is generally useful to interview personnel who represent a range of job

assignments, levels of experience, and levels of “local” knowledge. In most CTA procedures, it

is necessary to have at least some Participants who conduct domain tasks on a daily basis. In

most CTA procedures it is highly valuable to have Participants who can talk openly about their

processes and procedures, seem to love their jobs, and have had sufficient experience to have

achieved expertise at using the newest technologies that are in the operational context.

Lesson Learned

Practitioners who are verbally fluent and who seem to love their domain can sometimes be too

talkative and can jump around, telling stories and going off on tangents, especially since in some

cases they rarely have opportunities to talk about their domain and their experiences to

interested listeners. They sometimes need to be kept on track by saying, e.g., "that's an

interesting story—let's come back to that…"

Some “Tips”


Until or unless each interviewer has achieved sufficient skill or has had sufficient practice

with Participants in the domain at hand, interviews should be conducted by pairs of

interviewers, who take separate notes.

Expect that the interview guides will have to be revised, even several times, during the

course of the data collection phase.

Think twice about audiotape recording the interviews—it can take a great deal of time to

transcribe and code the data.

For interviews that are audio recorded, do not conduct the interview in a room with ANY

background noise (e.g., air conditioners, equipment, etc.) as this will significantly degrade

the quality of the recordings. Consider using a y-connector allowing input from two lapel

microphones, one clipped to the collar of the Participant, one to the collar of the

Interviewer.

After the recorder is started, preface the recording with a clear statement of the day, date, time,

Participant name, Researcher name, project name, and interview type.

Be sure to ask the Participant to speak clearly and loudly.

It is often valuable, and sometimes necessary, to attempt to determine how much time a

Participant has spent engaged in activities that arguably fall within the domain. One might focus

on the amount of time the Participant has spent at some single activity. For instance, how much

time do weather forecasters spend trying to interpret infrared satellite images? One might

focus on something broader, such as how much time a forecaster has spent engaged in all

forecasting-related activities.

The rule of thumb from expertise studies is that to qualify as an expert one must have had 10

years full time experience. Assuming 40 hours per week and 40 weeks per year, this would

amount to 16,000 hours. Another rule of thumb, based on studies of chess, is 10,000 hours of

task experience (Chase and Simon, 1973). These rules of thumb will in all likelihood not apply in

many applied research contexts. For instance, in the weather forecasting case study (Hoffman,

Coffey, and Ford, 2000), the most senior expert forecasters had logged in the range of 40,000 to 50,000 hours of experience. The experience scale must always be appropriate for the domain, and

also for the particular organizational context.
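The arithmetic behind these rules of thumb can be sketched as follows. This is a minimal illustration rather than part of the protocol: the function names are hypothetical, and the defaults (40 hours per week, 40 weeks per year) are simply the working figures assumed above.

```python
def hours_of_experience(years, hours_per_week=40, weeks_per_year=40):
    """Total hours for a given number of full-time years (defaults are assumptions)."""
    return years * hours_per_week * weeks_per_year


def years_to_reach(target_hours, hours_per_week=40, weeks_per_year=40):
    """Full-time years needed to accumulate a target number of hours."""
    return target_hours / (hours_per_week * weeks_per_year)


ten_year_total = hours_of_experience(10)        # 10 * 40 * 40 = 16000
years_for_10k = years_to_reach(10_000)          # 10000 / 1600 = 6.25
print(ten_year_total, years_for_10k)
```

Changing the defaults shows how sensitive the benchmark is to the assumed work schedule, which is one reason the scale has to be fitted to the domain at hand.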

When retrospecting about their education, training, and career experiences, the reflective

Participant will recall details, but there will be some gaps and uncertainties. One strategy that has

proven useful is to apply the “Gilbreth Correction Factor,” which is simply the estimated total

divided by two. In his classic "time and motion" studies of the details of various jobs, Gilbreth

(1911, 1914) found that the amount of time people spend at tasks that are directly work-related is

about half of the amount of time they spend "on the job." Gilbreth also found if the schedule of

work and rest periods could be made, as we would say today, more human-centered, that work

output could double. Gilbreth studied tasks that had a major manual component (e.g., the

assembly of radios), and the "factor of 2" kept cropping up (e.g., estimated time savings if a bin

holding a part could be moved closer to the worker). The GCF serves as a reasonable enough

anchor, a conservative estimate of time-on-task, in comparison to the raw estimate from the

Career Interview, which is likely to be an overestimate. Research in a number of domains

has shown that even with the GCF applied, estimates of time spent at domain tasks by the most


senior practitioners often fall well above the 10,000 hour benchmark set for the achievement of

expertise (see Hoffman and Lintern, 2005).
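Applying the correction to Career Interview estimates can be sketched as below. This is an illustration under stated assumptions: the function names are hypothetical, the input figures are invented for the example, and the raw total is assumed to be built from the hours-per-day, days-per-week, and weeks-per-year entries of the interview template, multiplied by the number of years.

```python
BENCHMARK_HOURS = 10_000  # rule-of-thumb benchmark cited in the text


def raw_task_hours(hours_per_day, days_per_week, weeks_per_year, years):
    """Raw (uncorrected) estimate of time spent at a task or activity."""
    return hours_per_day * days_per_week * weeks_per_year * years


def gilbreth_corrected(raw_hours):
    """Gilbreth Correction Factor: the estimated total divided by two."""
    return raw_hours / 2


# Hypothetical senior practitioner: 8 h/day, 5 days/week, 45 weeks/year, 25 years.
raw = raw_task_hours(8, 5, 45, 25)          # 45000
corrected = gilbreth_corrected(raw)         # 22500.0
exceeds_benchmark = corrected > BENCHMARK_HOURS
print(raw, corrected, exceeds_benchmark)
```

Recording both the raw and the corrected figure keeps the conservative estimate traceable to what the Participant actually reported.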

Mere years of experience do not guarantee expertise, however. Experience scaling also

involves an attempt to gauge the practitioners' breadth of experience—the different contexts or

sub-domains they have worked in, the range of tasks they have conducted, etc. Deeper

experience (i.e., more years at the job) affords more opportunities to acquire broader experience.

Hence, depth and breadth of experience should be correlated. But they are not necessarily

correlated, and so examination of both breadth and depth of experience is always wise.

Thus, one comes to a resolution that age and proficiency are generally related. This is captured in

Table 3.2.


Table 3.2. Age and proficiency are, generally speaking, partially correlated. Entries for each stage run along a LIFESPAN axis marked at ages 10, 20, 30, 40, 50, and 60.

Novice: Learning can commence at any age (e.g., the school child who is avid about dinosaurs; the adult professional who undergoes job retraining).

Intern and Apprentice: Individuals less than 18 yrs of age are rarely considered appropriate as Apprentices. Can commence at any age.

Journeyman: It is possible to achieve journeyman status (e.g., chess, computer hacking, sports). Is typically achieved in mid- to late-20s, but development may go no further.

Expert Stage: Achievement of expertise in significant domains may not be possible. It is possible to achieve expertise (e.g., chess, computer hacking, sports). Most typical for 35 yrs of age and beyond.

Master Stage: Achievement of expertise in significant domains may not be possible. Is rarely achieved early in a career. It is possible to achieve midcareer. Most typical of seniors.


3.2.2. Template

"Career Interviews"

Analyst:

Participant

Date

Start time

Finish time

Name

Age

Title or rank or job designation or duty assignment

High-school and college education

(Expand these cells, as needed)

Early interest in this domain?

Training/College/Graduate Studies

Post-Schoolhouse Experience

Attempt To Estimate Hours Spent At The Tasks


EXPERIENCE: WHERE, WHEN, WHAT, TIME

For each task/activity:

Number of hours per day

Number of days per week

Number of weeks per year

TOTAL

Notes

Determination:

Qualification on the Determination:

Notes on Salient experiences and skills


3.3. Sociometry

3.3.1. General Protocol Notes

In the field of psychology there is a long history of studies attempting to evaluate interpersonal

judgments of abilities and competencies (e.g., Thorndike, 1920). In recent times, this has

extended to research on the extent to which expert judgments of the competencies of other

experts conform to evidence from experts’ retrospections about previously-encountered critical

incidents, and the extent to which competency judgments, and competencies shown in incident

records, can be used to predict practitioner performance (McClelland, 1998).

In the field of advertising there has been a similar thread, focusing on perceptions of the

expertise of individuals who endorse products, and the relations of those perceptions to

judgments of product quality and intention to purchase (e.g., Ohanian, 1990).

There is a similar thread in the field of sociology. Sociometry is a branch of sociology in which

attempts are made to measure social structures and evaluate them in terms of the measures.

Psychiatrist Jacob Moreno first put the idea of a “sociogram” forward in the 1930s (see Fox,

1987) as a proposal of a means for measuring and visualizing interpersonal relations within

groups.

In the context of cognitive task analysis and its applications, Sociometry involves having domain

practitioners make evaluative ratings about other domain practitioners (see Stein, 1992, 1997).

The method is simple to use, especially in the work setting. In its simplest form (see Stein, 1992,

Appendix 2), the sociogram is a one-page form on which the participant simply checks off the

names of other individuals from whom the participant obtains information or guidance.

Stein (1992, Appendix 2) provides guidance on using techniques from social network analysis in

the analysis of sociometric data (e.g., how to calculate who is “central” in a communication

network).
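One simple way to see who is "central" in check-off data of this kind is to count how many colleagues named each person. The sketch below uses that in-degree count as an assumption for illustration; it is not necessarily the measure Stein describes, and the participant IDs and function name are hypothetical.

```python
from collections import Counter


def in_degree(checkoffs):
    """Count, for each person, how many others checked them off as a source.

    checkoffs maps a participant ID to the list of IDs that participant
    reports going to for information or guidance.
    """
    counts = Counter({name: 0 for name in checkoffs})
    for rater, consulted in checkoffs.items():
        for name in set(consulted):      # ignore duplicate check marks
            if name != rater:            # ignore self-nominations
                counts[name] += 1
    return dict(counts)


# Hypothetical one-page sociogram forms from four participants.
checkoffs = {
    "P1": ["P2", "P3"],
    "P2": ["P3"],
    "P3": ["P2"],
    "P4": ["P3", "P1"],
}
counts = in_degree(checkoffs)
most_central = max(counts, key=counts.get)
print(counts, most_central)
```

Using anonymized IDs rather than names in the data file is consistent with the confidentiality concerns raised for sociometric ratings.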

Results from a sociogram can include information that can be useful in proficiency scaling,

leverage point identification, and even cognitive modeling. They can be useful in exploring

hypotheses about expertise attributions and communication patterns within organizations. For

instance, one study (Stein, 1992) in a particular organization found that the number of years

individuals had been in an organization correlated with the frequency with which they were

approached as an information resource. However, the individual who was most often approached

for information was not the individual with the most years in the organization.

A sociogram can be designed for use with practitioners who know one another and work together

(e.g., as a team, within an organization, etc.) or it can be designed for use in the study of a

“community of practice” in which each practitioner knows something about many others,

including others with whom the practitioner has not worked.

Most sociograms, like social network analyses, focus on a single relation: Whom do you trust?

From whom do you get information? With whom do you meet? And so on. There is no

principled reason why a sociogrammetric interview, in the context of cognitive task analysis,

might not ask about a variety of social factors that contribute to proficiency attributions. The listing

of possible sociogram probe questions we present here is intended to illustrate a range of

possibilities.

Questions in sociograms can ask for self-other comparisons in terms of knowledge and

experience level, in terms of reasoning styles, in terms of ability, in terms of subspecialization,

etc. On those same topics, questions in a sociogram can ask about patterns of consultation and

advice-seeking.

Questions in a sociogram can involve a numerical ratings task, or a task involving the sorting of

cards with practitioner names written upon them.
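Data from a numerical ratings task can be summarized per rated practitioner, for example with the median of the ratings received. The sketch below is only one possible summary, offered under assumptions: the 1 to 7 scale matches the ratings items in this section, the IDs and function name are hypothetical, and because each rating is made relative to the rater, the medians are a rough cue rather than an absolute proficiency score.

```python
from statistics import median


def summarize_ratings(ratings):
    """Median rating received by each ratee.

    ratings is a list of (rater_id, ratee_id, score) tuples, with score on
    the 7-point scale (7 = much more than me, 1 = much less than me).
    """
    by_ratee = {}
    for rater, ratee, score in ratings:
        if not 1 <= score <= 7:
            raise ValueError(f"score out of range: {score}")
        by_ratee.setdefault(ratee, []).append(score)
    return {ratee: median(scores) for ratee, scores in by_ratee.items()}


# Hypothetical ratings on the "level of experience" item.
ratings = [
    ("A", "C", 6), ("B", "C", 7), ("D", "C", 5),
    ("A", "B", 3), ("C", "B", 4),
]
summary = summarize_ratings(ratings)
print(summary)
```

The median is used here because it is less affected than the mean by a single rater who uses the scale very differently from the others.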

The template we present is intended as suggestive. A sociogram need not precisely follow the

guidance that we present here. Questions can be combined in many ways, as appropriate to the

goals of the research and other pertinent project constraints.

Asking practitioners who work together in an organization to make comparative ratings of one

another can be socially awkward and can have negative repercussions. The Participant needs to

feel that they can present their most honest and candid judgments while at the same time

preserving confidentiality and anonymity. A solution is to have the Researcher who facilitates

the interview procedure remain “blind” to the name and identity of the co-worker(s) that the

Participant is rating.

Ideally, before the sociogram is conducted, the Researchers already have some idea of who the

experts in an organization are. Ideally, the sociogram should involve all of the experts as well as

journeymen and apprentices.

If the number of participants to be involved in a sociogram is greater than about seven, the

conduct of a full Sociogram can become tedious for the Participant. (A glance ahead will show

that each of the sociograms involves having each Participant answer a number of questions about

each of the other Participants).

One solution to this problem is to have each Participant conduct the sociogram for only a subset

of the other Participants, with the constraint that each participant is assessed by at least two

others. Another solution is to divide the pool of Participants into two sets, conduct the

sociograms within each of the two sets, and then randomly select n Participants from set 1 and n

Participants from set 2, and conduct another sociogram in which they rate individuals whom they

had not rated in the first sociogram. Ideally, the same proficiency scale assignments should arise

out of the converging data sets.
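The first solution, in which each Participant rates only a subset of the others, can be set up with a simple rotation. The sketch below is one assumed way of doing this, not a prescribed procedure; the function name and participant IDs are hypothetical. Each Participant rates the next few people in the list, wrapping around at the end, so that everyone is rated by the same number of colleagues and no one rates themselves.

```python
def assign_ratees(participants, per_rater=2):
    """Rotation design: each participant rates the next `per_rater` people.

    Every participant ends up rated by exactly `per_rater` others.
    """
    n = len(participants)
    if not 1 <= per_rater < n:
        raise ValueError("per_rater must be at least 1 and less than the group size")
    return {
        participants[i]: [participants[(i + k) % n] for k in range(1, per_rater + 1)]
        for i in range(n)
    }


# Hypothetical group of nine, each rating two colleagues.
group = [f"P{i}" for i in range(1, 10)]
plan = assign_ratees(group, per_rater=2)

# Count how many raters each person has.
times_rated = {p: 0 for p in group}
for rater, ratees in plan.items():
    for ratee in ratees:
        times_rated[ratee] += 1
print(plan["P9"], times_rated)
```

Shuffling the participant list before calling the function would randomize who rates whom while keeping the same coverage guarantee.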

As for all of the Templates we present, we suggest that the first page of the sociogram data

collection form include the following:

3.3.2. General Forms Templates


Interviewer

Participant

Date

Start time

Finish time

For Within-Group Sociograms

We would like to ask you some questions regarding:

(Name or ID number)

How long (months, years) have you known/worked with one another?


3.3.3. Ratings Scale Items

We would now like you to answer a set of specific questions that use a numerical rating scale.

Please utilize the full range of the scale.

Do not hesitate to say things that reflect either positively or negatively on you or your

colleagues.

We are not here to point fingers, or challenge anyone’s skill or authority.

All the data we collect will remain anonymous and confidential. That is, no one’s name will be

associated with particular results.

Please be open, honest, and candid.

Compared to you, what is their level or extent of experience? Check one of the boxes below.

7 = They have had much more experience
6 = They have had more experience
5 = They have had somewhat more experience
4 = Our levels of experience are about the same
3 = They have had somewhat less experience
2 = They have had less experience
1 = They have had much less experience

Comments

(Expand the cells, as needed)

Compared to your own knowledge of your domain, what is their level of knowledge? Check

one of the boxes below.

7 = They know much more
6 = They know more
5 = They know somewhat more
4 = Our levels of knowledge are about the same
3 = They know somewhat less
2 = They know less
1 = They know much less

Comments

Compared to your level of ability at conducting your domain tasks, what is their level of ability? Check one of the boxes below.

7 = Their work is consistently better
6 = Their work is usually better
5 = Their work is often better
4 = Their work is about as good as mine
3 = Their work is often not as good
2 = Their work is usually not as good
1 = Their work is consistently not as good

Comments

Is s/he better at particular tasks within your domain than at others?

What types?

_____________________________________________________________________

_____________________________________________________________________

Compared to your level of ability at conducting those kinds of tasks, what is their ability level?

Circle one of the numbers below

7 = Their work is consistently better
6 = Their work is usually better
5 = Their work is often better
4 = Their work is about as good as mine
3 = Their work is often not as good
2 = Their work is usually not as good
1 = Their work is consistently not as good

Comments

Compared to your level of ability at rapidly integrating data and quickly sizing up a situation,

what is their level of ability? Check one of the boxes below.

7 = Much greater skill
6 = Greater skill
5 = Moderately greater skill
4 = About the same skill level as me
3 = Somewhat lower skill
2 = Lower skill
1 = Much lower skill

Comments

Compared to the general accuracy of your own products or results, what is the general accuracy

of their products or results? Check one of the boxes below.

7 = Much greater
6 = Greater
5 = Moderately greater
4 = About the same as mine
3 = Somewhat lower
2 = Lower
1 = Much lower

Comments

Compared to your own degree of ability at communicating about your domain with others, what

is their level of ability? Check one of the boxes below.

7 = Much greater skill
6 = Greater skill
5 = Moderately greater skill
4 = About the same skill level as me
3 = Somewhat lower skill level
2 = Lower skill
1 = Much lower skill

Comments

Using traditional craft guild terminology, what would you say is their degree of expertise?

Circle one of the numbers below.

1 = Master: Sets the standards for performance in the profession.
2 = Expert: Highly competent, accurate, and skillful. Has specialized skills.
3 = Journeyman: Can work competently without supervision or guidance.
4 = Apprentice: Needs guidance or supervision; shows a need for experience and on-the-job training, but also some need for additional formal training.
5 = Initiate: Still shows a need for formal training.

Comments

Working Style

What seems to be their “style" of reasoning or working? Can you place them somewhere on the

continuum below? Use a check mark


Understand the domain events

as a dynamic causal system;

form conceptual models of

what’s going on.

They conduct their work on the basis

of standard procedures, checklists,

etc. rather than a deep understanding

of the domain

--------------------------------------------------------------------

Comments

Reflective Practitioner or Journeyman?

What seems to be their “style" of reasoning or working? Can you place them somewhere on the

continuum below? Use a check mark.

Are reflective, and question

their own reasoning and

assumptions, and are even

critical of their own ideas.

Do not seem to be a deep thinker. They

do not think about their own reasoning

or question their strategies or

assumptions.

--------------------------------------------------------------------

Comments

Leader or Follower?

What seems to be their “style" of reasoning or working? Can you place them somewhere on the

continuum below? Use a check mark

Are they one of the visionaries

or innovators?

They seem to refine and extend the

pathfinding ideas of others.

--------------------------------------------------------------------

Comments


3.3.4. Sorting Task Items

Experimenter's Protocol

Participant is invited to write the names of 12 domain practitioners on 3x5 cards.

Names can be selected by instruction or by Participant choice.

“People whose names come to mind, people you know or know of.”

“People you have worked with.”

“People who represent a range of experience in the field.”

A card bearing the Participant’s own name can be added into the pile of cards.

Using traditional craft guild terminology, what would you say is their degree of expertise? Sort

into piles.

Master: Sets the standards for performance in the profession.

Expert: Highly competent, accurate, and skillful. Has specialized skills.

Journeyman: Can work competently without supervision or guidance.

Apprentice: Needs guidance or supervision; shows a need for experience and on-the-job training, but also some need for additional formal training.

Novice: Still shows a need for formal training.

Comments


Place the cards into two piles, according to these two general styles.

“Reflective Practitioner”

They understand the domain at a deep

conceptual level.

They understand the important issues.

“Proceduralist”

They conduct their work on the basis of

standard procedures and have a superficial

rather than a deep understanding of the

domain. They do not seem to care much about

underlying or outstanding issues.

Comments

For each name, note any specialization or specialized areas of subdomain knowledge or skill.

Comments

Who are the real pathfinders or founders of the field? Sort into piles.

Founders and pathfinders

Those who refine and extend the seminal

ideas of others

Comments

Who would you say are the real visionaries in the field? Sort into piles.

Visionaries

Those who refine and extend the innovations and visions of others

Comments


Compared to you, what is their level of skill at conducting domain procedures? Sort into piles.

Much higher skill

Higher skill

About the same

as me

Less skill

Much less skill

Comments

Compared to you, what is their level or extent of experience? Sort into piles.

Much more

experience

More experience

About the same

level of

experience as me

Less experience

Much less

experience

Comments

Compared to your own knowledge of your domain or field, what is their level of knowledge?

Sort into piles.

They know much

more

They know more

Our levels of

knowledge are

about the same

They know less

They know much

less

Comments

Compared to the general quality or accuracy of your own products or results, what is the

general quality or accuracy of their products or results?

Greater

Somewhat

greater

About the same

as mine

Somewhat less

Less

Comments

Compared to your own degree of ability at communicating about your field both to people within the field and those outside of the field, what is their level of ability?

Much greater

Greater skill

About the same

skill level as me

Lower skill

Much lower skill

Comments


3.3.5. Communications Patterns Items

3.3.5.1. Within-Groups, Teams, or Organizations

We would like to ask you some questions regarding:

(Name or ID number)

How long (months, years) have you known/worked with one another?

How often do you collaborate in the conduct of tasks?

How often do you conduct separate tasks, but at the same time?

How many times in the last month have you gone to them for guidance or an opinion

concerning the interpretation of data? (If “rarely” or “never,” is this because of rank?)

Does s/he ever come to YOU for guidance or an opinion?

How many times in the last month have you gone to them for guidance or an opinion

concerning the understanding of causes or events?

How many times in the last month have you gone to them for guidance or an opinion

concerning the equipment you use or the operations you perform using the technology?

If “rarely” or “never,” is this because of rank or status?

Does s/he ever come to YOU for guidance or an opinion?

How many times in the last month have you gone to them for guidance or an opinion

concerning organizational operations and procedures? (If “rarely” or “never,” is this because of

rank?)

Does s/he ever come to YOU for guidance or an opinion?


3.3.5.2. Within-Domains

Who in the field of ______________________________________ has come to you for

guidance or an opinion?

On what topics or issues has your opinion been solicited?

Do you know of_________________________________________?

How do you know of _____________________________________?

Do you personally know ___________________________________?

If so, how long have you known _____________________________?

Have you worked or collaborated in any way with ______________________________?

Have you gone to them for guidance or an opinion?

Does s/he ever come to you for guidance or an opinion?


3.4. Cognitive Styles Analysis

3.4.1. Introduction

In any given domain, one should expect to find individual differences not only in skill level but also in such

things as motivation and style. Different practitioners prefer to work problems in different ways at

different points, for any of a host of reasons.

Proficiency and style can be related in many ways. Proficiency can be achieved via differing

styles. Proficiency can be exercised via differing styles. On the other hand, proficiency takes

time to achieve and thus the styles that characterize experts should be at least partly coincident

with skill level.

The analysis of cognitive styles, as a part of Proficiency Scaling, can be conducted with reliance

on more than one perspective. Ideas concerning cognitive style come from:

The proficiency scale of the craft guilds. Cognitive style can be evaluated from the

perspective that style is partly correlated with skill level. Some designations and

descriptions are presented in Table 3.3.

Experiences of applied researchers who have conducted CTA procedures, and research in

the paradigm of Naturalistic Decision Making (Reference). Some style designations and

descriptions are presented in Table 3.4.

The ideas presented in these Tables are offered as suggestions. The categorizations are not

intended to be inclusive or exhaustive—not all practitioners will fall neatly into one or another of

the categories. Any analysis of reasoning styles will have to be crafted so as to be appropriate to

the domain at hand. Any given project may find itself in need of these, some of these, or other

appropriate designations.


Table 3.3. Likely reasoning style as a function of skill level. (For the definitions of the levels, see

Table 3.1.)

Low Skill Levels (Naïve, Initiate, Novice)

Their performance standards focus on satisfying literal job requirements.

They rely too much on the guidance (e.g., outputs of computer models), and take the

guidance at

They use a single strategy or a fixed set of procedures--they conduct tasks in a highly

proceduralized manner using the specific methods taught in the schoolhouse.

Their data-gathering efforts are limited but they tend to say that they look at everything.

They do not attempt to link everything together in a meaningful way—they do not form

visual, conceptual models or do not spend enough time forming and refining models.

They are reactive, and "chase the observations."

They are insensitive to contextual factors or local effects.

They cannot do multi-tasking without suffering from overload, becoming less efficient and

more prone to error.

They are uncomfortable when there is any need to improvise.

They are unaware of situations in which data can be misleading, and do not adjust their

judgments appropriately.

They are less adroit.

Things that are difficult for them to do are easy for the expert.

They are less aware of actual needs of the end-users.

They are not strategic opportunists.

Medium Skill Levels (Apprentice, Journeyman)

Despite motivation, it is possible to remain at this level for life and not progress to the

expert level.

They begin by formulating the problem, but then, like individuals at the Low Skill Levels,

they tend to follow routine procedures: they tend to seek

information in a proceduralized or routine way, following the same steps every time.

Some time is spent forming a mental model.

Their reasoning is mostly at the level of individual cues, with some ability to recognize cue

configurations within and across data types.

They tend to place great reliance on the available guidance.

They tend to treat guidance as a whole, and not look to sub-elements—they focus on

determining whether the guidance is "right" or "wrong."

Journeymen can issue a competent product without supervision but both Apprentices and

Journeymen have difficulty with tough or unfamiliar scenarios.


High Skill Levels (Expert, Master)

They have high personal standards for performance.

Their process begins by achieving a clear understanding of the problem situation.

They are always skeptical of guidance and know the biases and weaknesses of each of the various

guidance products.

They look at agreement of the various types of guidance--features or developments on which the

guidances agree.

They are flexible and inventive in the use of tools and procedures, i.e., they conduct action sequences

based on a reasoned selection among action sequence alternatives.

They are able to take context and local effects into account.

They are able to adjust data in accordance with data age.

They are able to take into account data source location.

They do not make wholesale judgments that guidance is either "right" or "wrong."

They reason ahead of the data.

They can do multi-tasking and still conduct tasks efficiently without wasting time or resources.

They are comfortable improvising.

They can tell when equipment or data or data type is misleading; know when to be skeptical.

They create and use strategies not taught in school.

They form visual, conceptual models that depict causal forces.

They can use their mental model to quickly provide information they are asked for.

Things that are difficult for them to do may be either very difficult for the novice to do, or may be

cases in which the difficulty or subtleties go totally unrecognized by the novice.

They can issue high-quality products for tough as well as easy scenarios.

They provide information of a type and format that is useful to the client.

They can shift direction or attention to take advantage of opportunities.


Table 3.4. Some Cognitive Styles Designations (based on Pliske, Crandall, and Klein, 2000).

SCIENTIST

The "reflective practitioner": thinks about their own strategies and reasoning, engages in "what if?"

reasoning, questions their own assumptions, and is freely critical of their own ideas.

AFFECT

STYLE

ACTIVITIES

CORRELATED SKILL OR PROFICIENCY LEVEL

They are often "lovers" of the domain.

They like to experience domain events and watch patterns develop.

They are motivated to improve their understanding of the domain.

They are likely to act like a Mechanic when stressed or when problems are

easy.

They actively seek out a wide range of experience in the domain, including

experience at a variety of scenarios.

They show a high level of flexibility.

They spend proportionately more time trying to understand problems, and

building and refining a mental model.

They are most likely to be able to engage in recognition-primed decision making.

They spend relatively little time generating products since this is done so

efficiently.

They possess a high level of pattern-recognition skill.

They possess a high level of skill at mental simulation.

They possess skill at using a wide variety of tools.

They understand domain events as a dynamic system.

Their reasoning is analytical and critical.

They possess an extensive knowledge base of domain concepts, principles

and reasoning rules.

PROCEDURALIST

Seem to lack the intrinsic motivation to improve their skills and expand their knowledge, so they do not

move up to the next level. They do not think about their own reasoning or question their strategies or

assumptions. However, in terms of performance and/or experience, they are journeymen on the

proficiency scale.

AFFECT

STYLE

ACTIVITIES

CORRELATED SKILL OR PROFICIENCY LEVEL

They sometimes are lovers of the domain.

They like to experience domain events and see patterns develop.

They are motivated to improve their understanding of the domain.

They spend proportionately less time building a mental model.

They engage in recognition-primed decision making less often.

They are proficient with the tools they have been taught to use.

They are less likely to understand domain events as a complex dynamic

system.

They see their job as having the goal of completing a fixed set of

procedures, although these are often reliant on a knowledge base.

They sometimes rely on a knowledge base of principles or rules, but this

tends to be limited to types of events they have worked on in the past.

Typically, they are younger and less experienced.

MECHANIC

They conduct their work on the basis of standard procedures and have a superficial rather than a deep

understanding of the domain. They do not seem to care much or wonder about underlying concepts or

outstanding issues.

AFFECT

STYLE

ACTIVITIES

CORRELATED SKILL OR PROFICIENCY LEVEL

They are not interested in knowing more than what it takes to do the job;

not highly motivated to improve.

They are likely to be unaware of factors that make problems difficult.

They see their job as having the goal of completing a fixed set of

procedures, and these are often not knowledge-based.

They spend proportionately less time building a mental model.

They rarely are able to engage in recognition-primed decision making.

They sometimes have years of experience.

They possess a limited ability to describe their reasoning.

They are skilled at using tools with which they are familiar, but changes in

the tools can be disruptive.

DISENGAGED

The most salient feature is emotional detachment and disengagement with the domain or organization.

AFFECT

STYLE

ACTIVITIES

CORRELATED SKILL OR PROFICIENCY LEVEL

They do not like their job.

They do not like to think about the domain.

They are very likely to be unaware of factors that make problems difficult.

They spend most of the time generating routine products or filling out

routine forms.

They spend almost no time building a mental model and proportionately

much more time examining the guidance.

They cannot engage in recognition-primed decision making.

They possess a limited knowledge base of domain concepts, principles, and

reasoning rules.

Skill is limited to scenarios they have worked in the past.

Their products are of minimally acceptable quality.

In the Cognitive Styles analysis, the Researchers conduct a card-sorting task that categorizes the

domain Practitioners according to perceived cognitive style. The procedure presupposes that all

of the Researchers who will engage in the sorting task have had sufficient opportunity to interact

with each of the Participants (e.g., through opportunistic, unstructured interviews or initial

workplace observations). That is, the Researchers will have bootstrapped to the extent that they

feel they have some idea of each Practitioner's style.

The result of the procedure is a consensus sorting of Practitioners according to a set of cognitive

styles. Individually, the styles should seem both appropriate and necessary. As a set, the styles

should seem appropriate and complete, at least complete with regard to the set's functionality in

helping the Researchers achieve the project purposes or goals.

3.4.2. Protocol For Cognitive Styles Analysis

1. The Analysis is conducted after the Researchers have become familiar with each of the

domain practitioners who are serving as Participants in the project. That is, the

Researchers have already had opportunities to discuss the domain with the

Participants whose styles will be categorized.

2. The Researchers formulate a set of what seem to be reasonable and potentially useful

styles categories, having short descriptive labels. These may be developed by the

Researchers, or may be adapted from the listing provided in this document.

3. Each Practitioner's name is written on a file card. Working independently, all of the

Researchers sort cards according to style.

4. The Researchers review and discuss each other's sorts. They converge on an agreed-upon

set of categories and descriptive labels.

5. The Researchers re-sort. The results are examined for consensus or rate of agreement.

6. The Researchers come to agreement that those Practitioners attributed the same style are

similar with regard to the style definition.

7. The Researchers come to agreement that there are no Practitioners who seem to manifest

more than one of the styles. That is, the final set of styles categories allows for a

satisfactory partitioning of the set of Participants.

8. Steps 3-6 may be repeated.
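
The protocol does not specify how "rate of agreement" in Step 5 is to be computed. One simple possibility, offered here only as an illustrative sketch, is the mean proportion of Practitioners that each pair of Researchers placed in the same style category. The Researcher and Practitioner identifiers (R1, P1, and so on), the example data, and the function names are all hypothetical.

```python
from itertools import combinations

def pairwise_agreement(sorts):
    """Mean proportion of Practitioners that each pair of Researchers
    assigned to the same style. Assumes at least two Researchers who
    have sorted at least one Practitioner in common."""
    rates = []
    for a, b in combinations(sorted(sorts), 2):
        shared = sorts[a].keys() & sorts[b].keys()
        same = sum(1 for p in shared if sorts[a][p] == sorts[b][p])
        rates.append(same / len(shared))
    return sum(rates) / len(rates)

def disputed(sorts):
    """Practitioners who did not receive the same style from every Researcher."""
    names = set().union(*(s.keys() for s in sorts.values()))
    return sorted(p for p in names
                  if len({s.get(p) for s in sorts.values()}) > 1)

# Hypothetical sorts: Researcher -> {Practitioner: style}
sorts = {
    "R1": {"P1": "Reflective Practitioner", "P2": "Proceduralist",
           "P3": "Mechanic", "P4": "Disengaged"},
    "R2": {"P1": "Reflective Practitioner", "P2": "Proceduralist",
           "P3": "Proceduralist", "P4": "Disengaged"},
    "R3": {"P1": "Reflective Practitioner", "P2": "Mechanic",
           "P3": "Mechanic", "P4": "Disengaged"},
}

print(f"{pairwise_agreement(sorts):.2f}")  # 0.67
print(disputed(sorts))                     # ['P2', 'P3']
```

The list of disputed Practitioners gives the Researchers a concrete agenda for the discussion in Steps 6 and 7.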

3.4.3. Protocol for Emergent Cognitive Styles Analysis

A variation on this task involves having the Researchers conduct the sorting task without

worrying about creating any short descriptive labels for the perceived styles, and without

worrying about devising a clear description of the styles. The procedure lets the categories

"emerge" from the individual Researcher's perceptions and judgments without influence from a

set of pre-determined styles descriptors.

1. Each Practitioner's name is written on a file card. Working independently, each of the

Researchers deliberates concerning the style of each of the Practitioners.

2. The Researchers sort the cards according to those judgments. An individual Practitioner

may be in a category alone, or may fall in a category with one or more others.

3. The Researchers review and discuss each other's sorts. They converge on an agreed-upon

set of categories. These are expressed in terms of the theme or themes of each

category.

4. Working independently, the Researchers re-sort. The results are examined for consensus

or rate of agreement.

5. Only then are the categories given descriptive labels.

6. The Researchers confirm that those Practitioners attributed the same style are similar with

regard to the style definition.

7. The Researchers confirm that there are no Practitioners who seem to manifest more than

one of the styles. That is, the final set of styles categories allows for a satisfactory

partitioning of the set of Participants.
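
Because the emergent sorts carry no labels until Step 5, agreement between two Researchers cannot be scored by matching category names. One way to compare unlabeled sorts, shown here as a sketch and not as the authors' procedure, is the Rand index: the proportion of Practitioner pairs that both Researchers treat the same way (both place the pair together, or both place it apart). The pile contents and function names below are hypothetical.

```python
from itertools import combinations

def pile_of(piles):
    """Map each Practitioner name to the index of the pile it was placed in."""
    return {name: i for i, pile in enumerate(piles) for name in pile}

def rand_index(piles_a, piles_b):
    """Proportion of Practitioner pairs on which two unlabeled sorts agree.
    Assumes the two sorts share at least two Practitioners."""
    a, b = pile_of(piles_a), pile_of(piles_b)
    names = sorted(a.keys() & b.keys())
    pairs = list(combinations(names, 2))
    agree = sum(1 for x, y in pairs if (a[x] == a[y]) == (b[x] == b[y]))
    return agree / len(pairs)

# Hypothetical sorts: each Researcher's piles, with no labels attached
r1 = [{"P1", "P2"}, {"P3"}, {"P4", "P5"}]
r2 = [{"P1", "P2", "P3"}, {"P4", "P5"}]

print(rand_index(r1, r2))  # 0.8
```

Here the two Researchers differ only on whether P3 belongs with P1 and P2, which is the kind of disagreement the discussion in Step 3 is meant to resolve.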

3.4.4. Protocol for Cognitive Styles-Proficiency/Skill Levels Comparison

Another use of Cognitive Styles Analysis is to aid in proficiency scaling. In this use, Cognitive

Styles are compared to proficiency levels, since the two should be related. For example,

individuals who are potentially designated as expert on the basis of a sociogram, a career

interview, or a performance evaluation would be more likely to manifest

the style of the "Reflective Practitioner" than that of the "Proceduralist."

Proficiency data for comparison to those from a Cognitive Styles Analysis can themselves come

from a sorting procedure.

1. Working individually, the Researchers sort cards containing the names of the practitioners

according to expertise level (see Table 4.1) or along a continuum.

2. The Researchers compare each individual to an individual in the next lower level and an

individual in the next higher level, to confirm the level assignments.

3. The Researchers compare the results of the Levels sort and the Styles sort to determine

whether there is any consensus on a possible relation of style to skill level.
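
The comparison in Step 3 can be laid out as a simple cross-tabulation of style against level. The following is a minimal sketch; the Practitioner identifiers, the assignments, and the function name are hypothetical, and the level labels are placeholders for whatever scale the project has adopted.

```python
from collections import Counter

def crosstab(styles, levels):
    """Count Practitioners in each (style, level) cell, using only
    Practitioners who appear in both sorts."""
    return Counter((styles[p], levels[p]) for p in styles if p in levels)

# Hypothetical consensus results of the Styles sort and the Levels sort
styles = {"P1": "Reflective Practitioner", "P2": "Proceduralist",
          "P3": "Reflective Practitioner", "P4": "Mechanic"}
levels = {"P1": "Expert", "P2": "Journeyman",
          "P3": "Expert", "P4": "Journeyman"}

table = crosstab(styles, levels)
for (style, level), n in sorted(table.items()):
    print(f"{style:25s} {level:12s} {n}")
```

Cells that are heavily populated (or conspicuously empty) suggest where a relation between style and skill level may hold. With the small numbers of Practitioners typical of such projects, the table is an aid to discussion and not a statistical test.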

3.4.5. Validation

Researcher(s) who did not participate in the sorting task can be provided with the Styles categories' labels

and definitions and can engage in the styles sorting (and the skill levels comparison). Their styles sorting

should match the sorting arrived at in the final group consensus. Their skill levels sorting should affirm

the consensus on the relation of styles to skill level.

4. Workplace Observations and Interviews

4.1. General Introduction

Workplace analysis can focus on:

The workspace in which groups work,

The spaces in which individuals work,

The activities in which workers engage,

The roles or jobs,

The requirements for decisions,

The requirements for activities,

The "standard operating procedures."

These alternative ways of looking at workplaces will overlap. For example, at a particular work

group, the activities might be identified with particular locations where particular individuals