Application Recovery: Advances Toward an Elusive Goal

David Lomet

Microsoft Research

Redmond, WA 98052

lomet@microsoft.com

Introduction

Persistent savepoints are the model that has usually

dominated previous thinking on application recovery.

"A persistent savepoint is a savepoint where the state of the

transaction's resources is made stable …, and enough control

state is saved to stable storage so that on recovery the

application can pick up from this point in its execution. …

If the system should fail and subsequently recover, it can

restart any transaction at … its last persistent savepoint

operation. It doesn't have to run an exception handler

because the transaction has its state and can simply pick up

where it left off."{BeNe97}

The value of persistent savepoints is more than reducing

lost work. Persistent savepoints simplify application

programming compared to more explicit methods for

coping with failures.

"… the code is not only shorter than the [prior] solution…

but simpler. … everything related to the maintenance of

persistent context is now taken care of by the Save_Work

function, whereas the [prior] solution had to do the

maintenance all by itself…" {GrRe93}

Traditionally, a savepoint has been viewed as the

capture of an application’s state in stable storage at the

time a savepoint operation is executed.

However, this is not necessary, any more than it is

necessary for database recovery to materialize the final

states of all pages of all committed transactions in stable

storage. Instead, we recover via replay from the log.

"Each resource manager participating in the transaction with

the persistent savepoint is brought up in the state as of the

most recent persistent savepoint. For that to work, the

run-time system of the programming language has to be a

resource manager, too; consequently, it also recovers its

state to the most recent persistent savepoint. Its state

includes the local variables, the call stack, and the program

counter, the heap, the I/O streams, and so forth." {GrRe93}

Phoenix Goals

At Microsoft Research, we have started a project called

Phoenix. “We suggest transactions using persistent savepoints be

called Phoenix transactions, because they are reborn from

the ashes.”{GrRe93}

One technology we pursue in Phoenix is the recovery of

applications from system failures, specifically applications

that interact with a database. We call

these Phoenix applications. Our intent is to exploit

database redo recovery techniques and infrastructure to

reestablish application state, e.g. for simple applications

like stored procedures. Thus, the database system

becomes the resource manager for the application.

This has a number of benefits:

1. Application recovery increases application

availability. Current recovery is limited to database

state. Work can then resume on the database, but

lack of "application consistency" can greatly delay

application resumption. Application recovery

decreases the lengths of these outages.

2. The application programmer does not need to deal

directly with system failures. The application can be

written as a simple sequential program that is

unaware of the crash “… unless it keeps [a] timer… and finds out that

[execution] took surprisingly long to complete.”

{GrRe93}

3. A user of the application, end user or other

software, may likewise be unaware that a crash has

occurred, except for some delay. An end user need

not re-enter input data. Client software can simply

continue to execute.

4. Operations people, who are responsible for ensuring

that applications execute correctly, need only initiate

recovery, a process that they are already familiar with

for database recovery, and the recovered system state

will include the recoverable application state.

The Technology Challenges

There has been prior research directed to achieving the

Phoenix aims. A problem has been that the technology

has been expensive in its impact on normal execution.

We have addressed how to enable application recovery

without serious impact on normal application

performance. That is, we reduce the amount of data

and state that has to be captured and written to stable

storage, either to the "database" or to the log. And we

exploit database recovery infrastructure, which is

well-tested, for this purpose.

We treat application program state in the same manner

as a database page. Thus, an application's state is one

of the objects in the database cache. Flushing it is

controlled by the cache manager. As with database

pages, we post to the log operations that change

application state. Like any good redo scheme, we

accumulate changes to an application's state in the

cache, and only flush it to stable storage to control the

length of the redo log and hence the cost of redo

recovery. These flushes will be rare, and sometimes

entirely unnecessary, as when the application terminates

prior to the need to truncate the log. For example, we

anticipate that most stored procedures can execute and

complete within a single checkpoint interval, and hence

many application states needn't be flushed to stable

storage.
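
The flushing policy described above can be illustrated with a minimal sketch. It is not the Phoenix implementation: the class `RedoCache`, its fields, and the use of Python callables as stand-ins for log records are all assumptions made for illustration. The sketch shows application state living in the cache beside other objects, being flushed only when the log must be truncated, and never being flushed if the application terminates first.

```python
class RedoCache:
    """Toy cache in which application state is one more cached object.

    Hypothetical sketch: a real log would hold operation descriptions,
    not Python callables.
    """

    def __init__(self):
        self.next_lsn = 0
        self.log = []       # (lsn, obj_id, operation) redo records
        self.cache = {}     # obj_id -> volatile current state
        self.stable = {}    # obj_id -> last flushed state
        self.rec_lsn = {}   # dirty obj_id -> LSN where its redo must start
        self.flushes = 0

    def apply(self, obj_id, operation):
        """Log an operation, then apply it to the cached state only."""
        lsn = self.next_lsn
        self.next_lsn += 1
        self.log.append((lsn, obj_id, operation))
        self.cache[obj_id] = operation(self.cache.get(obj_id, 0))
        self.rec_lsn.setdefault(obj_id, lsn)
        return lsn

    def terminate(self, app_id):
        """A terminated application needs no recovery, so nothing is flushed."""
        self.cache.pop(app_id, None)
        self.rec_lsn.pop(app_id, None)

    def truncate_log(self, keep_from_lsn):
        """Flush only objects that still need records older than keep_from_lsn."""
        for obj_id, lsn in list(self.rec_lsn.items()):
            if lsn < keep_from_lsn:
                self.stable[obj_id] = self.cache[obj_id]
                del self.rec_lsn[obj_id]
                self.flushes += 1
        self.log = [rec for rec in self.log if rec[0] >= keep_from_lsn]


cache = RedoCache()
cache.apply("short_app", lambda state: state + 1)
cache.apply("long_app", lambda state: state + 10)
cache.terminate("short_app")          # finished within the checkpoint interval
cache.truncate_log(keep_from_lsn=2)   # only long_app has to be flushed
```

In this toy run the short application never reaches stable storage, which mirrors the expectation that most stored procedures complete within a single checkpoint interval.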

How exactly does one log operations for

applications without their help? Application state can

only be changed in two ways: via interactions with the

world outside of itself, and by its own internal

execution. We capture both as loggable operations.

Since our applications are unaware of our efforts to

make them recoverable, we seize upon the times when

the application interacts with the rest of the recoverable

resource manager (e.g. a database system) to capture

state transitions as log operations. This has the

significant benefit of not requiring the separate logging

of any internal state changes caused solely by

application execution.

There are a number of ways to log the effects of

"normal" execution. For database pages, we can log

entire page state or partial page state, or we can log

what {GrRe93} calls "physiological" operations, as in

ARIES {MHLPS92}. Given the size of log records, we

point ourselves toward the latter. But, in fact, in order

to do really well in keeping log records small, we

cannot restrict ourselves to physiological operations.

Cache management is also impacted by the inclusion of

applications as objects being managed. Application

state is much larger than a page, and that by itself poses

a problem because flushing needs to be atomic. In

addition, application operations execute for unknown

periods, making it difficult to seize a suitable

operation-consistent state to flush. Most dramatically, the very

application operations that make logging efficient

introduce flush order dependencies between objects in

the cache.

Finally, in addition to application program state, a

database will commonly hold state that is related to the

application. This state is not a single entity, but can be

both scattered and complicated. We need to capture

and log changes for each of the several pieces involved.

For example, temporary tables can be logged so as to

make them stable. Other state is more intimately held

in volatile database system data structures. Much of

this state needs to be recoverable, e.g., database session

state.

Phoenix Technology

This short overview cannot describe all the technology

we have in mind. Phoenix is a research project, so

some of the technology is in the process of being

created. We sketch here our work that shows (i) how

an application can be treated as a recoverable database

object and its interactions with the system and database

objects can be logged; (ii) how this can control

frequency of application checkpointing; and (iii) how

logging costs can be greatly reduced by logging only the

identities of objects read and not their data values. This

is more completely described in {Lo97,Lo97a}.

There are two kinds of application operation, one for an

application's execution between interactions, and the

second for its interactions. Our intent is to replay the

application from an earlier state to re-create the

pre-crash state. Thus, we must:

1. Re-execute an application between interactions.

When replaying an application from state A1, we

reproduce the same state A2 at the next application

interaction as was produced originally. This requires

deterministic execution. Non-deterministic behavior

is captured by the sequence of log records for the

application.

2. Reproduce the effect of each application interaction.

As an example, if an application read object X, we

could include in the log record its value x1 at the time

it was originally read. During recovery, when the

read is encountered, we use the logged value x1 to

update the application's input buffers.

We intercept every application interaction, as only

interactions change an application's execution trajectory.

Our resource manager wraps itself around the

application, trapping its external calls. At each call

point, it logs the nature of the call and its effect on the

application.
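
The two replay requirements and the interception of external calls can be sketched as follows. This is a minimal illustration under stated assumptions, not the Phoenix mechanism: the application is modelled as a Python generator that yields at each interaction, and `total_app`, `run_and_log`, and `replay` are hypothetical names. Reads are logged by value here, the simple scheme described above.

```python
def total_app():
    """Hypothetical application: reads X and Y, then returns their sum."""
    x = yield ("read", "X")      # interaction 1
    y = yield ("read", "Y")      # interaction 2
    return x + y                 # deterministic execution between interactions


def run_and_log(app, store, log):
    """Normal execution: the wrapper serves each call and logs its effect."""
    gen = app()
    try:
        request = next(gen)                  # run to the first interaction
        while True:
            op, name = request
            value = store[name]
            log.append((op, name, value))    # log the call and the value returned
            request = gen.send(value)        # resume until the next interaction
    except StopIteration as done:
        return done.value


def replay(app, log):
    """Recovery: re-execute the application, feeding it the logged values."""
    gen = app()
    try:
        request = next(gen)
        for op, name, value in log:
            if request != (op, name):
                raise RuntimeError("replay diverged from the log")
            request = gen.send(value)
    except StopIteration as done:
        return done.value
    # Log exhausted before the application finished; a real system would
    # resume normal execution from this point.
    return None


store = {"X": 1, "Y": 2}
log = []
original = run_and_log(total_app, store, log)
store["X"] = 100                 # later update; replay must not observe it
recovered = replay(total_app, log)
```

Because the replay feeds the application the logged values rather than the current contents of `store`, `recovered` equals `original` even though X has since changed.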

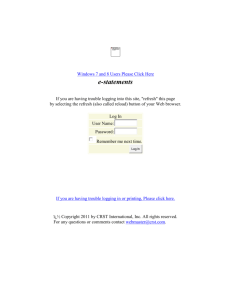

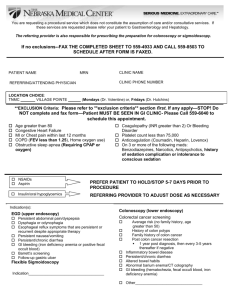

Figure 1: (A) Traditional view of application execution: Application A (Initiate A, Execute A, Term. A) and its interactions with the Resource Manager (Init A, Write O, Read O, Term A). (B) New view of application execution as logged operations: Init(A), Ex(A), WP(O), Ex(A), R(A,O), Ex(A), Cmp(A), with application state IDs S0 through S5 marking application state changes.

The resource manager logs the application execution

between these calls so that the application itself can be

re-invoked to replay the transition to the next

interaction. The resource manager does this by

returning to the application after its system call, and the

operation finishes via its next system call. This turns

the execution call tree on its head and permits the

resource manager to orchestrate the replay of the

application. This is illustrated in Figure 1.

Executions between interactions, i.e. Ex(A) operations,

are thus logged as "physiological" operations. An

Ex(A) reads application state A_i as of interaction i and

transforms it by re-execution of the application into A_{i+1}

as of interaction i+1, which is "written" by Ex(A).

Ex(A) can be logged very compactly since we need only

name the application that produces the change in state,

and whatever parameters were returned to it at

interaction i. That is, we replay Ex(A) by this return of

control to the application.

Logging interactions can be much more costly. The

read above stored in its log record the entire value of the

object. If many pages of a multi-megabyte file are

read, we have a log space problem, a write bandwidth

problem, and an instruction path problem. It is far

better to replay the actual read operation during

recovery, meaning we log the name of the object instead

of its value. That requires, when the read is replayed,

that the named object have the original value. The

reduction in logged data is potentially enormous, so it is

worth going to some effort to make this possible.

Here is the problem. After application A reads object

X with value x1, X may be updated to x2. Should A's

read of X be replayed later, x2 would be returned as the

result. But recovery needs to re-create x1 so that it

is available when A's read is replayed. This seeming

contradiction can be overcome with sophisticated cache

management.

The important observation is that a resource manager

has two versions of actively updated objects, a cached

volatile current version and a stable earlier version.

The stable version enables us to re-create the x1 read by

A. To guarantee this, our cache manager ensures that

x2 is not flushed until we no longer need to replay A's

read of X. Replaying the read is no longer required

when we have flushed a later state of A, or when A

terminates. Recovery of A need continue only from the

flushed, later state or need not be done (if A is

terminated).
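
A minimal sketch of this flush rule follows. It is an illustration under assumptions, not the cache manager of {Lo97}: `FlushOrderCache`, `recover`, and the use of log positions as LSNs are hypothetical. Reads are logged by object identity only, writes are logged by value, and redo skips a write that the stable version already reflects.

```python
class FlushOrderCache:
    """Toy cache manager; all names here are hypothetical."""

    def __init__(self, initial):
        # name -> (value, LSN of last applied write); -1 marks the initial version
        self.stable = {k: (v, -1) for k, v in initial.items()}
        self.cache = dict(self.stable)
        self.log = []          # redo log, assumed to survive a crash
        self.readers = {}      # name -> apps that read the current cached version
        self.blocked_by = {}   # name -> apps whose logged read needs an older version

    def read(self, app, name):
        self.log.append(("read", app, name))      # identity only, no data value
        self.readers.setdefault(name, set()).add(app)
        return self.cache[name][0]

    def write(self, name, value):
        self.log.append(("write", name, value))
        self.cache[name] = (value, len(self.log) - 1)
        # Earlier readers now depend on the older version staying re-creatable.
        self.blocked_by.setdefault(name, set()).update(self.readers.pop(name, set()))

    def app_done(self, app):
        """Call when app terminates or a later state of app has been flushed."""
        for apps in list(self.readers.values()) + list(self.blocked_by.values()):
            apps.discard(app)

    def flush(self, name):
        if self.blocked_by.get(name):
            return False       # flushing now would lose the value a replayed read needs
        self.stable[name] = self.cache[name]
        return True


def recover(stable, log):
    """Redo pass: returns recovered object state and the values each app re-reads."""
    state = dict(stable)
    seen = {}
    for lsn, record in enumerate(log):
        if record[0] == "write":
            _, name, value = record
            if state[name][1] < lsn:              # redo test: skip installed writes
                state[name] = (value, lsn)
        else:
            _, app, name = record
            seen.setdefault(app, []).append(state[name][0])
    return state, seen


cache = FlushOrderCache({"X": 1})
cache.read("A", "X")             # A reads x1 = 1; only the name X is logged
cache.write("X", 2)              # X becomes x2 = 2 in the cache
refused = cache.flush("X")       # False: A's read must remain replayable
state, seen = recover(cache.stable, cache.log)   # simulated crash and redo
```

In this run the replayed read sees 1, the value A originally read, and redo then brings X forward to 2. Once `cache.app_done("A")` is called, `cache.flush("X")` succeeds.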

The cache manager enforces flush order dependencies

on cached objects so that the more powerful operations

(e.g. a "read" that reads X and application state A and

writes A, i.e. A’s input buffers) can be replayed. This

requires keeping a flush dependency graph, called a

Write Graph in {LoTu95}. The cache manager data

structures needed when reads are the only such "logical"

operation are quite simple {Lo97}. When writes are

treated as logical operations to avoid logging data

values written, we must cope with circular

dependencies.

Circular dependencies are not just a bookkeeping

problem. Naively, all objects involved in a cycle must

be flushed atomically together. Avoiding this requires, as

before, that the right versions be available when

needed. By clever sleight of hand, these versions can be

on the log instead of re-created from earlier stable

versions in the database {Lo97a}. We can then

"unwind" each circular dependency and flush one object

at a time.
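
As a rough illustration of flush order dependencies, the sketch below treats them as a directed graph and uses the Python standard library's `graphlib` (Python 3.9 or later) to derive a legal flush order or report a cycle. It is not the Write Graph of {LoTu95}, and it does not implement the unwinding technique of {Lo97a}; the function `flush_plan` and the object names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def flush_plan(must_flush_first):
    """Return (order, None) if a one-at-a-time flush order exists,
    or (None, cycle) if the dependencies are circular.

    must_flush_first maps an object to the set of objects that have to
    be flushed (or terminated) before it may be flushed.
    """
    try:
        return list(TopologicalSorter(must_flush_first).static_order()), None
    except CycleError as err:
        return None, err.args[1]      # the nodes forming the cycle


# Logical read only: X may be flushed only after application state A.
order, cycle = flush_plan({"X": {"A"}})

# Logical read and logical write between A and O: a circular dependency.
no_order, found_cycle = flush_plan({"A": {"O"}, "O": {"A"}})
```

With reads as the only logical operation the graph stays acyclic and a simple order exists. Once writes are also logical, a cycle such as the one between A and O can appear, which is the case that would naively force an atomic flush of every object in the cycle.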

Discussion

We log reads/writes of objects local to the resource
manager that manages (provides recovery for) an
application as logical operations. This dramatically
reduces logging cost for these operations. We needn't
log the data values. Cache management is more
complex, but reduced logging cost outweighs this. This
is how we “advance toward an elusive goal”, i.e. to
recover applications with low normal execution cost.
But there is surely more to do, both in understanding the
issues and in augmenting the recovery infrastructure.
Some of the issues are described below.

Not fully captured at the current level of abstraction is
how to characterize all interactions in terms of reads
and writes and how to capture the perhaps diffuse state
associated with the application, parts of which are
traditionally held in resource manager volatile data
structures. One needs to be very careful about the
boundary for application state so that the log accurately
captures all non-determinism. And one must decide
exactly what to include in an application checkpoint.

Further, some applications are not easily “wrapped” by
a resource manager. Client applications are at
arm's length from server resource managers. Our read
and write optimizations are not possible then. Indeed,
some interactions are inherently non-replayable even
when local, e.g. reading the real-time clock. Is there a
substitute for logging the data values that have been
read or written?

Distributed applications deal with multiple resource
managers. This means partial failures are possible,
which are more difficult to handle than monolithic
failures. {StYe85} describes application recovery in a
distributed system. They do substantial logging and
subtle log management involving logs at each site. It is
highly desirable to make this activity cheaper and
simpler.

Bibliography

{BeNe97} P. Bernstein and E. Newcomer, Principles of
Transaction Processing for the Systems Professional.
Morgan Kaufmann (1997) San Mateo, CA.

{GrRe93} J. Gray and A. Reuter, Transaction
Processing: Concepts and Techniques. Morgan
Kaufmann (1993) San Mateo, CA.

{Lo97} D. Lomet, Persistent Applications Using
Generalized Redo Recovery. Technical Report, March
1997.

{Lo97a} D. Lomet, Application Recovery with Logical
Write Operations. Technical Report, April 1997.

{LoTu95} D. Lomet and M. Tuttle, Redo recovery from
system crashes. VLDB Conf. (Zurich, Switzerland)
Sept. 1995.

{LoTu97} D. Lomet and M. Tuttle, A Formal
Treatment of Redo Recovery with Pragmatic
Implications. DEC Cambridge Research Lab Technical
Report (in prep).

{MHLPS92} C. Mohan, D. Haderle, B. Lindsay, H.
Pirahesh, and P. Schwarz, ARIES: A transaction
recovery method supporting fine-granularity locking
and partial rollbacks using write-ahead logging. ACM
Trans. On Database Systems 17,1 (Mar. 1992) 94-162.

{StYe85} R. Strom and S. Yemini, Optimistic
Recovery in Distributed Systems. ACM Trans. On
Computer Systems 3,3 (Aug. 1985) 204-226.