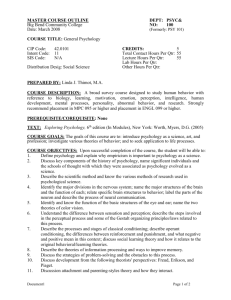

Beyond Psychological Theory: Getting Data that Improves Games

By Bill Fulton
User-Testing Lead
One Microsoft Way
Redmond WA 98052
billfu@microsoft.com

Article originally published in the Game Developers Conference 2002 Proceedings, and reprinted on the front page of Gamasutra.com on 3.21.02 at: http://www.gamasutra.com/gdc2002/features/fulton/fulton_01.htm

How can I make my game more fun for more gamers? This is THE question for those who want to make games that are popular, not just critically acclaimed. One (glib) response is to "design the games better." Recently, the idea of applying psychological theories as a way of improving game design has been an increasingly popular topic in various industry publications and conferences. Given the potential of applying psychological theory to game design, I expect these ideas to become more frequent and more developed.

While I think using psychological theories as aids to think about games and gamers is certainly useful, I think that psychology has much more to offer than theory. An enormous part of the value of psychology to games lies in psychological research methods (collecting data), not the theories themselves.

I should clarify some terms here. When I talk about "psychology," I do not mean the common perception of psychology--talking to counselors, lying on the Freudian couch, mental illness, etc. In academia, this kind of psychology is called "clinical psychology." In this paper, "psychology" refers to experimental psychology, which employs the scientific method in studying "normal populations functioning normally."

But before I talk about how psychological research methods can help improve games, I need to first explain more about how psychological theories are helpful, and the limitations they have with respect to game design.

Psychological theories can be useful, but data are more useful

All designers think about what people like, hate and want.
Some designers may be consciously using theories from psychology as part of the process to evaluate what people want, but most designers probably just rely on their intuitive theories of what they perceive gamers want.

The risks of relying on intuitive psychology. What I call "intuitive psychology" is the collection of thoughts, world views, 'folk wisdom' etc. that people use to try to understand and predict others. Some examples might make this clearer--one common intuitive psychological belief about attraction is that "opposites attract." However, many people (and many of the same people) also believe the opposite, that "birds of a feather flock together." Both of these ideas have some merit and are probably true in some ways for most people. But given that they are clearly conflicting statements, it is unclear which statement to believe and act on--which statement is true? Or, more likely, when is each statement more likely to be true? Does the degree of truthfulness for these statements vary by people? By situation? By both? The problem with intuitive psychology is that many intuitions disagree with each other, and it is unclear which world view is more likely to be right, if either of them is true at all. With intuitive psychology, you're just trusting that the designer's intuitive theories are close enough to reality that the design will be compelling.

The insufficiency of formal theories of psychology. Formal theories of psychology have been subjected to rigorous testing to see when they map onto reality and when they do not. In order for a theory of psychology to gain any kind of acceptance, its advocates have to have battled with some success against peers who are actively attempting to show it to be incorrect or limited. This adversarial system of determining "truth" and reliable knowledge employs the scientific method of running experiments and collecting data.
Because of this adversarial system, formal theories of psychology are more trustworthy than intuitive theories of psychology--you know that they are more than just one person's unsubstantiated opinion about what people want.

So while theories of psychology from academia can be quite useful as a lens to examine your game, their limitation is that they are typically too abstract to provide concrete action items at the level of specificity that designers need. This lack of specificity in psychological theories hasn't really hurt designers too much, because for the most part designers (and people in general) have a decent enough idea of how to please people without needing formal theories. I think very few people had light bulbs go on when they learned that Skinner's theory of conditioning stipulates that people will do stuff for rewards. But the work that Skinner and others did on how to use rewards and punishers well in terms of acquisition and maintenance of behaviors can be enlightening. Still, academic theories of psychology don't (and probably won’t ever) get granular enough to tell us whether gamers find the handling of the Ferrari a bit too sensitive.

An example of why academic theories of psychology aren't specific is in order. Skinner's Behaviorism is probably one of the most well-defined and supported theories, and the easiest to apply to games. (In fact, John Hopson wrote an excellent article in Gamasutra in April 2001 demonstrating how to analyze your game through behaviorism's lens.) One of Hopson's examples is about how players in an RPG behave differently depending upon how close they are to reinforcement (e.g., going up a level, getting a new item, etc.). He talks about how if reinforcers are too infrequent, the player may lose motivation to get that next level. However, how often is not often enough? Or too often? (Who wants to level up every 5 seconds?) Both "too often" and "not often enough" will de-motivate the player.
Designers need to find a 'sweet spot' between too often and not often enough that provides the optimal (or at least a sufficient) level of motivation for the player to keep trying to level up. Theory may help designers begin to ask the more pertinent questions, but no theory will tell you exactly how often a player should level up in 3 hours of play in a particular RPG.

Beyond Theory: the value of collecting data with psychological methods

So I've argued that psychological theories (both intuitive and academic) have limitations that prevent them from being either trustworthy or sufficiently detailed. Now I'm going to talk about what IS sufficiently trustworthy AND detailed--collecting data with psychological methods. Feedback gleaned via psychological testing methods can be an invaluable asset in refining game design.

As I said at the beginning of this paper, the central question for a designer who wants to make popular games is "how do I make my game more fun for more gamers?" and a glib response is to "design the games better." Taking the glib answer seriously for a moment, how do you go about doing that? Presumably, designers are doing the best they can already. The Dilbertian "work smarter, not harder" is funny, but not helpful. The way to help designers is the same way you help people improve their work in all other disciplines--you provide them feedback that helps them learn what is good and not so good about their work, so that they can improve it.

Of course, designers get feedback all the time. In fact, I'm sure that many designers sometimes feel that they get too much feedback--it seems that everyone has an opinion about the design, that everyone is a "wannabe" designer (disguised as artists, programmers, publishing execs, everyone's brother, etc.). But the opinions from others often contradict each other, and sometimes go against the opinions of the designer.
So the designer is put in the difficult situation of knowing that their design isn't perfect, wanting to get feedback to improve it, and encountering feedback that makes sense, yet is often contradictory both with itself and with the designer's own judgment. This makes it difficult to know what feedback to act on. So the problem for many designers is not a lack of feedback, but an epistemological problem--whose opinion is worth overruling their own judgment? Whose opinion really represents what more gamers want?

Criteria for good feedback and a good feedback delivery system

Before launching into a more detailed analysis of common feedback loops and my proposed "better" one, I need to make my criteria explicit for what I consider "good" feedback and a good feedback delivery system. The addition of "delivery system" is necessary to provide context for the value (not just accuracy) of the feedback. The criteria are:

1. The feedback should accurately represent the opinions of the target gamers. By "target gamers," I mean the group of gamers that the game is trying to appeal to (e.g., driving gamers, RTS gamers, etc.). If your feedback doesn't represent the opinion of the right group of users, then it may be misleading. This is absolutely critical. Misleading feedback is worse than no feedback, the same way misleading road signs are worse than no signs at all. Misleading signs can send folks a long way down the wrong road.

2. The feedback should arrive in time for the designer to use it. If the feedback is perfect, but arrives too late (e.g., after the game is on the shelf, or after that feature is locked down), the feedback isn't that helpful.

3. The feedback should be sufficiently granular for the designer to take action on it. The information that "gamers hate dumb-sounding weapons" or that "some of the weapons sound dumb" isn't nearly as helpful as "Weapon A sounds dumb, but Weapons B, C, and D sound great."

4. The feedback should be relatively easy to get.
This is a pragmatic issue--teams won't seek information that is too costly or too difficult to get. Teams don't want to pay more money or time than the information is worth ($100k and 20 person hours to learn that people slightly prefer the fire-orange Alpha paint job to the bright red one is hardly a good use of resources.)

The first criterion is about the accuracy of the feedback, which is critical; the rest are about how that feedback needs to be delivered if it is going to be useful, not merely true.

Common game design feedback systems and their limitations

There are many feedback systems that designers use (or, in some cases, have been subjected to). Most designers, like authors, recognize that they need feedback on their work in order to improve it--few authors have reason to believe that their work is of publishable quality without some revision based on feedback. I'm going to list the feedback systems of which I am aware, and discuss how good a feedback delivery system each one is. There are two main categories of feedback loops: feedback from professionals in the games industry, and feedback from non-professionals (i.e., gamers). While these sources obviously affect each other, it is easier to talk about them separately.

Feedback from Professionals in the games industry. There are two main sources of this kind of feedback:

1. Feedback from those on the development team. This is the primary source of feedback for the designer--people working on the game say stuff like "that character sucks" or "That weapon is way too powerful." This system is useful because it ably meets criteria 2 through 4 (the feedback is very timely, granular enough, and easy to get), but still leaves the designer with a question mark on criterion 1--how many gamers will agree that that weapon is way too powerful?

2. Feedback from gaming industry experts. Game design consultants ("gurus"), management at publishers, game journalists, etc. can also provide useful feedback.
While their feedback can often meet criterion 3 (sufficiently granular), criterion 2 (timely) is sometimes a problem--long periods can go by between rounds of feedback, and recommendations can come too late for you to use them. And the designer is still left with questions about criterion 1 (accurately represents gamers), although some could argue that these experts may represent gamers more accurately because they have greater exposure to more games in development.

So while feedback from professionals is the current bread and butter for most teams and generally does well on criteria 2, 3 and 4, it operates a great deal on faith and hope on criterion 1--that the feedback from industry professionals accurately maps onto gamers' opinions. The reason this assumption is questionable is perhaps best illuminated by a simple thought experiment--how many games do you think a typical gamer tries or sees in a year? How many do you think a gaming industry professional tries or sees? They are probably different by a factor of 10 or more. Gaming industry professionals are in the top 1% in knowledge about games, and their tastes may simply be way more developed (and esoteric) than typical gamers' tastes. While some professionals in the industry are probably amazingly good at predicting what gamers will like, which ones are they? How many think they are great at it, when others disagree? So while feedback from industry professionals is necessary when designing the game, they may not be the best at evaluating whether gamers will like something. In the end, they can only speak for themselves.

Feedback from Non-professionals. Game teams are not unaware of the problem of their judgment not always mapping onto what most gamers really want. Because of this, they often try to get feedback from those who are more likely to give them more accurate feedback, and the obvious people to talk to are the gamers themselves.
Some common ways that this is done are listed below, along with some analysis of how good a feedback system each is according to the 4 criteria.

1. News group postings/Beta testing/fan mail. This is reading the message boards to see what people say about the game. The main problem with this as a feedback system is with criterion 2 (timely). The game has to be fairly far along (at least beta, if not shipped) before it can be put in front of people; typically, that feedback arrives too late to make any but the most cosmetic of changes. Also, the feedback often is not sufficiently granular to take action on ("The character sux!"). But at least this kind of feedback is relatively cheap in both time and money.

2. Acquaintance testing. This is where you try to get people (typically relatives, neighbors' kids, etc.) from outside the industry to play your game and give you feedback. This feedback is often sufficiently granular and may be relatively accurate, but it is often not that timely due to scheduling problems, and can be costly in time.

3. Focus groups/Focus testing. This kind of feedback system is typically run by the publisher, and involves talking to small groups (usually 4-8 gamers) in a room about the game. They may get to see or play demos of the game, but not always. One typical problem with focus groups is that they often tend to happen very late in the process, when feedback is hard to act on (not timely), and the feedback is not sufficiently granular. The costs for focus groups can also be quite high.

Getting feedback from non-professionals has the potential to be useful, in that it involves listening to gamers who aren't in the industry.
However, there are many pitfalls to this--it is often dubious how accurately the feedback represents gamers, due to the situations themselves (only certain kinds of people post messages, people feel pressured to say positive things, the people running the test often lack sufficient training in how to avoid biasing the participants, etc.) and the relatively small number of people involved. How to minimize these concerns and create a feedback system that works on all 4 criteria is discussed in the next section.

Designing a better feedback system

Up to this point, I've mostly been criticizing what is done. Now I need to show that I have a better solution. I'm going to outline some of the key factors that have allowed Microsoft to develop a feedback system that we think meets the 4 criteria that I set up for a "good" feedback system. We call this process of providing designers with feedback from real users on their designs "user-testing," and the people who do this job "user-testing specialists."

The importance of using principles of psychological testing. Experimental psychology has been studying how to get meaningful, representative data from people for over 70 years, and the process we use adheres to the main principles of good research. This is not to say that all psychological research is good research, any more than all code is good code; researchers vary in their ability to do good research the same way that programmers vary in their ability to write good code. But there are accepted tenets of research methodology that have been shown to yield information worth relying on, and our processes have been designed with those in mind. (For the sake of not boring you senseless, I'm not going to attempt to summarize 70 years of research on how to do research in this paper.) What I'm going to do instead is describe the day-to-day work that the user-testing group at Microsoft does for its dev teams (both 1st and 3rd party).

The actual testing methods we use.
The user-testing group provides 3 major services: usability testing, playtesting, and reviews. These services are described in detail below.

1. Usability research is typically associated with small sample observational studies. Over the course of 2-3 days, 6-9 participants come to Microsoft for individual 2-hour sessions. In a typical study, each participant spends some unstructured time exploring the game prior to attempting a set of very specific tasks. Common measures include: comments, behaviors, task times and error rates. Usability is an excellent method to discover problems that the dev team was unaware of, and to understand the thoughts and beliefs of the participant and how they affect their interaction with the game. This form of testing has been a part of the software industry for years and is a staple of the HCI (Human-Computer Interaction) field more so than psychology. However, methods used in HCI can be traced to psychological research methods and can essentially be characterized as a field of applied psychology.

2. Playtest research is typically associated with large, structured questionnaire studies that focus on the first hour of game play. The sample sizes are relatively large (25-35 people) in order to be able to compute reliable percentages. Each person gets just over 60 minutes to play the game and answer questions individually on a highly structured questionnaire. Participants rate the quality of the game and provide open-ended feedback on a wide variety of general, genre-specific, and product-specific questions. Playtest methods are best used to gauge participants' attitudes, preferences, and some kinds of behavior, like difficulty of levels. This form of testing has a long history in psychology in the fields of attitudinal research and judgment and decision-making.

3. Reviews are just another version of feedback from a games industry professional.
However, these reviews are potentially more valuable because the reviewers are user-testing specialists, who arguably have more direct contact with real gamers playing games than game professionals in other disciplines. A user-testing specialist’s entire job is to watch users play games and listen to their complaints and praises. Furthermore, teams often repeat mistakes that other games have made, and thus experienced user-testing specialists can help teams avoid "known" mistakes.

The result of each of these services is a report sent to the team, which meticulously documents the problems along with recommendations on how to fix them. Our stance is that the dev teams are the ones who decide if and how to fix the problems.

One noticeable absence in our services is "focus groups." Our belief (supported by research on focus groups) is that focus groups are excellent tools for generation (e.g., coming up with new ideas, processes, etc.), but are not very good for evaluation (e.g., whether people like something or not). The group nature of the task interferes with getting individual opinions, which is essential for the ability to quantify the evaluations.

How this feedback system fares on the 4 criteria for a good feedback system. So, how does the way we do user-testing at Microsoft stack up against the 4 criteria? Pretty well (in my humble opinion, else I wouldn’t be writing this article). A recap of the criteria, and my evaluation of how we do on them, is given below.

1. The feedback should accurately represent the opinions of the target gamers. We supply reasonably accurate, trustworthy feedback to teams, because:
a. We have a large database of gamers (~12,000) in the Seattle metro area, who play every kind of game. So we can almost always bring in the right kind of gamers for each kind of game.
b. We hire only people with strong backgrounds in experimental or applied psychology (typically 2+ years of graduate training) in order to ensure that the user-testing specialist knows how to conduct research that minimizes biases. We also have a rigid review process for all materials that get presented to the user.
c. We thoroughly document our findings and recommendations, and test each product repeatedly, which allows us to check the validity of both our work and the team's fixes over multiple tests and different participants.

2. The feedback should arrive in time for the designer to use it. We are relatively fast at supplying feedback. The entire process takes about 6 days to get some initial feedback, and about 11-14 days for a full report. If the tests are well planned, they can happen at key milestones to maximize the timeliness of the feedback.

3. The feedback should be sufficiently granular for the designer to take action on it. The level of feedback in the reports can be extremely specific, because the tests are designed to yield granular, actionable findings. The user-testing specialist typically comments at the level of which cars or which tracks caused problems, or what wording in the UI caused problems. The recommendations are similarly specific. Usability tests typically yield more than 40 recommendations, whereas playtests tend to have anywhere from 10 to 30 items to address.

4. The feedback should be relatively easy to get. The feedback is relatively easy for the dev team to get--they have a user-testing lead on their game, and that person sets up tests for them and funnels them the results. The feedback is also relatively inexpensive when compared to the multi-million dollar budgets of modern games. The total cost of our operation is "substantial," but economies of scale make the cost per game relatively small.
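To put a rough number on what "reliable percentages" can mean at the playtest sample sizes quoted earlier (25-35 participants), the sketch below computes the margin of error of a percentage. It is an illustration under stated assumptions, not a description of the group's actual analysis: the 95% confidence level, the function names, and the example of 21 out of 30 participants are all hypothetical, and the formulas are the standard normal-approximation and Wilson score intervals for a proportion.

```python
import math

Z_95 = 1.96  # two-sided 95% normal quantile (assumed confidence level)


def margin_of_error(n, p=0.5, z=Z_95):
    """Half-width of the normal-approximation interval for a proportion.

    p=0.5 gives the worst case (widest interval) for a sample of size n.
    """
    return z * math.sqrt(p * (1.0 - p) / n)


def wilson_interval(successes, n, z=Z_95):
    """Wilson score interval, usually preferred over the normal
    approximation when the sample is small."""
    p_hat = successes / n
    denom = 1.0 + z * z / n
    centre = (p_hat + z * z / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


if __name__ == "__main__":
    # 8 is a usability-sized sample; 25-35 is the playtest range in the text.
    for n in (8, 25, 30, 35):
        moe = margin_of_error(n) * 100
        print(f"n={n:2d}: worst-case margin of +/- {moe:.1f} percentage points")

    # Hypothetical result: 21 of 30 participants rate a level "too hard".
    low, high = wilson_interval(21, 30)
    print(f"21/30 (70%): 95% Wilson interval {low * 100:.0f}% to {high * 100:.0f}%")
```

Under these assumptions, a percentage from 25-35 participants carries a worst-case margin of roughly 17 to 20 percentage points. That is enough to separate a clear majority from a small minority, but not to distinguish between close results, which is one reason repeated testing over different participants matters.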
Vital statistics on the user-testing group at Microsoft

Group history: the usability portion of the user-testing group has been around in a limited fashion since Microsoft entered the games business in earnest, in 1995. Funding was at a very low level (one usability contractor) until the Games Group began investing more heavily in 1998 with the introduction of the Playtest group. The usability and playtest groups merged to form the user-testing group in 2000. The current user-testing processes have been relatively stable since 1997 (usability) and 1998 (playtest).

Current composition of user-testing group: 15 FT user-testing specialists, 3-5 contract specialists, 3 FT support staff. Almost all user-testing specialists have either 2+ years of graduate training in experimental psychology or equivalent experience in applied psychology, and are gamers. All 4 founding members of the user-testing group are still with the group.

Amount of work: In 2001, we tested approximately 6500 participants in 235 different tests, on about 70 different games. 23 of those games were non-Microsoft products. In 2002, we expect to produce about 50% more than we did in 2001. From 1997 to Jan 2002, the group has produced 658 reports on 114 products (53 Microsoft, and 61 non-Microsoft products).
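As a quick sanity check on the workload figures above, the following sketch derives a few averages from them. Only the input numbers come from the text; the variable names are illustrative, and because the participant and game counts are themselves approximate, the derived averages should be read as rough figures.

```python
# Figures quoted in the vital statistics above.
participants_2001 = 6500   # "approximately 6500 participants"
tests_2001 = 235
games_2001 = 70            # "about 70 different games"
reports_total = 658        # 1997 to Jan 2002
products_total = 114
microsoft_products = 53
non_microsoft_products = 61

# Derived averages (approximate, since some inputs are rounded).
avg_participants_per_test = participants_2001 / tests_2001
avg_tests_per_game = tests_2001 / games_2001
avg_reports_per_product = reports_total / products_total

# Consistency check: the product split should add up to the total.
assert microsoft_products + non_microsoft_products == products_total

if __name__ == "__main__":
    print(f"participants per test (2001): {avg_participants_per_test:.1f}")
    print(f"tests per game (2001):        {avg_tests_per_game:.1f}")
    print(f"reports per product (total):  {avg_reports_per_product:.1f}")
```

The averages mix usability studies (6-9 participants each) with playtests (25-35 participants each), so they describe the overall workload rather than any single kind of test.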