A baseline speaker recognition system working over the wireless

advertisement

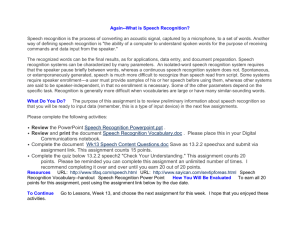

A baseline speaker recognition system working over the wireless telephone network H. Greige, C. Mokbel, University Of Balamand Po Box 100 Tripoli Balamand, Lebanon G. Chollet ENST, dept. TSI 46, rue Barrault 75634 Paris cedex 13, France Abstract Numerous applications may or currently use speaker recognition to control the access of a user to a service. This speech technology permits and may be essential to improve the human-machine interface. The work presented in the present paper concerns textindependent speaker recognition and more specifically speaker verification over wireless telephone network. State of the art speaker recognition systems are based on GMM modelling of the speech signal. A new GMM-based speaker recognition system has been developed. The system makes use of cepstral normalization in order to reduce the telephone line effects in the signal. It also uses a particular adaptation technique that permits to estimate the speakers’ GMM parameters from the world model parameters. The system has been tested in the speaker detection task of the NIST’2002 speaker recognition evaluation and has achieved 22% as equal error rate (EER). I Introduction Speaker recognition is one of the speech technologies that finds a lot of applications in real-life services. It permits to largely improve the security of the access to the services. With the important development of telecommunications, securing the access is highly appreciated in today remotely accessed services. Speaker recognition has been studied for several decades [4]. Several systems have been proposed with different variants. State of the art systems may be distinguished following application and/or technology criteria. At the application level, we may distinguish between speaker identification and speaker verification. In the first case, the system is asked to identify a speaker from a set of known speakers. In the second figure, a speaker declares his identity, one of those known by the system, and the system should verifies this identity. Speaker identification can be done in a closed set or an open set, i.e. the speaker is mandatory one of the speakers known by the system or may not be respectively. In the current work we are particularly interested in speaker verification system. Actually, a speaker identification system may be considered as the association of n speaker verification systems. Speaker recognition systems may also be classified following the mode of recognition. Text independent speaker recognition bases its decision on a certain amount of speech from one speaker independently of what has been uttered. In opposite, text dependent speaker recognition systems expect the speaker to utter a particular word or expression that is considered as a password. An intermediate solution consists in asking the speaker to utter few words chosen randomly by the system. This is called text prompted system. In this work we are mainly interested in text independent speaker recognition which needs by far more complex techniques than the other approaches. Several techniques have been proposed for text independent speaker verification [1][2][3][4][5][9]. These techniques generally derive from statistical modeling of the speech signal given a specific speaker. The system may be based on the computation over a specific speech period of the second order statistics (or the covariance matrix) and to compare these statistics to the speaker statistics computed during a training period. Such approaches are described in [1][2] for example. Statistical pattern recognition techniques may also be used in building speaker recognition systems. Neural networks have been successfully used for this purpose. One of the most successful and popular approaches consists in estimating the distribution of speech for a given speaker. The distribution of speech for any speaker is also estimated. When a new utterance is presented to the system the likelihood ratio between the announced speaker model and the general model is computed and compared to a predefined threshold. The claimed speaker is verified if the likelihood ratio is greater than the threshold. Otherwise the speaker is rejected. Gaussian mixture models (GMM) [9] have been successfully used to model the speech. The general speech model is generally trained using speech provided by a set of speakers. This model is considered to model the speech in general and is adapted to a particular speaker using few minutes of speech from that speaker. In the present work we propose to use the adaptation technique proposed in [6] to perform the estimation of the speaker model. Besides a new GMM speaker recognition system has been developed and is presented in the following. The paper is organised as follows. The next section recalls the GMM modeling in speaker recognition. Section III describes the new system architecture. This system has been experimented in the framework of a NIST’2002 speaker recognition evaluation. The obtained results are shown in the section IV. Finally, conclusions and few perspectives are provided. II GMM modeling This section describes the use of statistical modeling to perform speaker recognition. At the input of the system, the speech signal is processed and relevant features are extracted. These features are chosen since they are more relevant than simply the speech samples. Mel Frequency Cepstral Coefficients (MFCC) together with their first-order and secondorder derivatives are used. At the output of the feature extraction module the speech is T represented by a sequence of feature vectors X 1 . This sequence will be used to verify the claimed identity of the speaker. This is a simple hypothesis test. Let H0 represents the hypothesis that the claimed identity is valid. This hypothesis is to be tested against the null hypothesis H1 H 0 that the claimed identity is not the true identity of the speaker. Under the Bayesian framework, the most probable hypothesis will be chosen. This consists in comparing the probabilities of the two hypotheses given the observed sequence of vectors. Pr(H 0 / X 1 ) Pr(H1 / X 1 ) T T (1) Using the Bayes rule we can deduct: p( X 1 / H 0 ) Pr(H 0 ) p( X 1 / H1 ) Pr(H1 ) T T (2) T where p( X 1 / H i ) is the likelihood of the observed data given an hypothesis and Pr(Hi) is the a priori probability of the hypothesis Hi. Rearranging Eq. 2 in order to use the likelihood ratio leads to: T p( X 1 / H 0 ) T p( X 1 / H1 ) Pr(H1 ) Pr(H 0 ) (3) Eq. 3 shows that if the likelihood ratio is greater than a given threshold, the claimed identity is accepted. Otherwise, it is rejected. Unfortunately, the conditional distributions of the speech vectors given an hypothesis are not available. Parametric models are used to approximate these distributions. In this paper we are interested in modeling the speech vectors using a Gaussian Mixture Model (GMM) [9]. Using GMM, the likelihood of the speech vectors is given by: T p( X 1 where / Hi ) T p( X 1 T / i ) M m(i ) N ( X t , m , m ) (i ) (i ) (4) t 1 m 1 m(i ) , (mi ) , (mi ) are the weight, the mean and the covariance matrix of the mth Gaussian distribution in the mixture for the ith hypothesis. Based on a training set these parameters are estimated using the Expectation-Maximization algorithm [8]. The estimation of the GMM model parameters is constrained by the available amount of data. Typically, the dimension of the feature vector space is larger than 30 and the Gaussian mixture has more than 512 components. This means that more 30 000 parameters are to be estimated if we consider diagonal covariance matrix. Large amount of training data is necessary to train the GMM models for both hypotheses. For the null hypothesis, the corresponding model is called world model and large amount of speech data may be collected off-line from several speakers providing sufficient sample size to do the training. For the speaker model, the problem is quite different since a user cannot be asked to speak for more than 1 hour in order to get sufficient speech frames to train his own model. For practical reasons, only few minutes can be collected from a speaker to train his own model. Thus, training the speaker model is a problem of training a statistical model using small amount of data and often in an unsupervised mode. This problem is well known in speech recognition as an adaptation problem [6]. The main idea is to start from a model trained to represent speech in a general context and to use data from a particular context to adjust the model’s parameters to better represent this new context. In this framework, the speaker model is obtained by adjusting the world model to better represent the speech samples collected from a given user. Several adaptation solutions are proposed in the past decade. A unified adaptation framework is proposed in [6] and is applied in the current work. This unified framework combines the respective advantages of Bayesian adaptation and transformation-based adaptation. In the Bayesian framework, an a priori distribution for the world model parameters is determined. Given few minutes of speech from the target speaker the world model are adjusted to satisfy the maximum a posteriori criterion. Transformation based adaptation suggests to use a transformation function in order to adjust the parameters of the world model to better represent the speech of the target speaker. The parameters of the transformation function are estimated using a maximum likelihood criterion. The unified approach proposes to build a binary tree of the world model Gaussian distributions. Cutting at certain level of the tree defines a partition of the Gaussian components of the world GMM. Given the few minutes of the speaker data, the optimal partition is determined and for every subset of Gaussian distributions a transformation function is associated for which the parameters are estimated using maximum a posteriori criterion. III System architecture The different phases of determining the speaker recognition model is presented in the figure 1: world model estimation, target speaker model estimation, and z-normalization. The speech signal is first analyzed and feature vectors are determined. In our experiments, the feature vector is formed of the energy on logarithmic scale with 12 MFCC coefficients together with their first- and second-order derivatives. Cepstral mean normalization [7] is also applied to make the feature vector robust to telephone effects. a) World Speakers Speech signals Feature Extraction EM estimation Feature Extraction Adaptation b) Target Speaker Speech signal World model Speaker model World model c) Pseudo Impostors Speech signal Feature Extraction World model Estimation of z-norm Speaker model Figure 1: Different steps of training of the speaker recognition model: a) training of the world model, b) training of the speakers’ models and c) z-normalization. To train the world model, speech from different speakers are generally available. In our experiments, gender dependent world models are built. GMM with 256 Gaussian distribution components are considered. First the LBG algorithm is applied to initialize the GMM parameters’ values. Afterwards, the EM algorithm is applied to estimate those parameters. Given the world model and few minutes of speech from a target speaker, the speaker model is estimated by adapted the worl model parameters as described in the previous section. It has been also noted in the previous section that when approximating the likelihood of data conditional to a certain hypothesis with a GMM model the inequality of Eq. 3 stops to be exact. Thus, the decision threshold on the likelihood ratio will depend on the precision of the GMM models. An approach to make the decision threshold independent is to normalize the likelihood ratio. Let us call the log-likelihood ratio the score of a target speaker model for a speech utterance. If speech from a set of pseudo impostors are available the distribution of the impostors scores is computed. This distribution is normalized. The same normalisation will be applied on the scores corresponding to this particular target speaker. This normalization is called z-norm. When a new speaker claims an identity heprovides few minutes of speech to the system. The speech signal is analyzed and the feature vectors are extracted. Based on these feature vectors a log-likelihood ratio score is computed and normalized. Finally the normalized score is compared to a fixed threshold to decide on the identity of the speaker. IV Experiments and results The system has been experimented on the data provided by NIST’2002 speaker recognition evaluation-1speaker detection-cellular data task. The year 2002 speaker recognition evaluation is part of an ongoing series of yearly evaluations conducted by NIST. The experiments are conducted over an ensemble of speech segments selected to represent a statistical sampling of conditions of interest. For each of these segments a set of speaker identities will be assigned as test hypotheses. Each of these hypotheses must be independently judged as “true” or “false” by computing a decision score. This decision score will be used to produce detection error tradeoff curves, in order to see how misses may be traded off against false alarms. The curves are plotted by varyingthe decision threshold on the whole experimental set. The database used for the evaluation is formed of around 400 male and 400 female speakers. Training data for each speaker will consist of about two minutes of speech from a single conversation. The actual duration of the training data used will vary slightly from this nominal value so that whole turns may be included whenever possible. Actual durations will, however, be constrained to lie within the range of 110-130 seconds. Each test segment will be extracted from a 1-minute excerpt of a single conversation and will be the concatenation of all speech from the subject speaker during the excerpt. The duration of the test segment will therefore vary, depending on how much the segment speaker spoke. Besides the users (speakers) and impostors, i.e. the evaluation data, speech data to train the world model and pseudo impostors and pseudo users are needed. These last data form what is called the development data. The evaluation data for the different parts of year 2001 evaluation serve as the development data for corresponding parts of the year 2002 evaluation. The results obtained by the baseline system presented in this paper are shown in the figure 2, 3, and 4. In the figure 2, the global results show that an EER of 22% is achieved by the system. In the figure 3, the results per speaker gender are plotted. We can see that better performance are achieved for male speakers than for female speakers. Finally, the results per condition are plotted in the figure 4. Here, we can see that equivalent results are obtained for the different conditions (in for inside, out for outside, and car for call from a car). Even if better performance can be achieved, the baseline system has the advantage of providing similar results for the different calls conditions. Figure 2: Global detection curve. Figure 3: Per gender detection curve. Figure 4: Per condition detection curve. V Conclusions and perspectives As a conclusion, this paper describes a baseline speaker verification system. The system is designated to be text independent. The system has been experimented on the NIST’2002 cellular data and has provided an equal error rate of 22%. Even if better performance may be achieved, the system has the advantage of providing similar performances in the different conditions. Several perspectives are drawn for this work. It is necessary to include a speech/nonspeech detector in order to remove the nonspeech segments from the signal while doing the recognition. Besides, we do believe that different weights should be given to the different parts of the signal while computing the final score. This direction will be further explored in the future. Acknowledgment: This work has been done within the ELISA consortium and is partly supported by the grant from the CEDRE project no. 2001 T F 49 /L 42. References [1] F. Bimbot and L. Mathan, “Text-free speaker recognition using an arithmeticharmonic sphericity measure,” Eurospeech, 1993. [2] F. Bimbot and L. Mathan, “Second-order statistical measures for text-independent speaker identification,” ESCA Workshop on Automatic Speaker Recognition Identification and Verification, pages 51-54, 1994. [3] F. Bimbot, M. Blomberg, L. Boves, G. Chollet, C. Jaboulet, B. Jacob, J. Kharroubi, J.W. Koolwaajj, J. Lindberg, J. Mariethoz, C. Mokbel & H. Mokbel, “An overview of the Picasso project research activities in speaker verification for telephone applications,” Eurospeech 1999. [4] H. Gish, M. Schmidt, “Text Independent Speaker Identification,” IEEE Signal Processing magazine, p 18, October 1994. [5] J. Mariethoz, D. Genoud, F. Bimbot, C. Mokbel, “Client/world model synchronous alignment for speech verification,” Eurospeech, 1999. [6] C. Mokbel, “On-line Adaptation of HMMs to Real-Life Conditions: A Unified Framework,’’ IEEE Trans on Speech and Audio Processing, May 2001. [7] C. Mokbel, D. Jouvet & J. Monné, ‘‘Deconvolution of Telephone Line Effects for Speech Recognition,’’ Speech Communication, Vol. 19, n° 3, pp. 185-196, September 1996. [8] L.R. Rabiner and B.H. Juang, Fundamentals of Speech Recognition, Prentice-Hall, Englewood Cliffs, 1993. [9] D. Reynolds and R. Rose, “Robust text independent speaker identification systems,” IEEE Trans. on Speech and Audio Processing, january 1995.