Full project report

advertisement

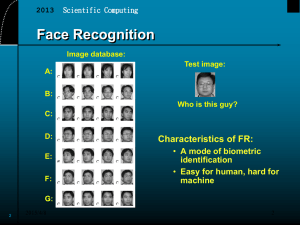

OCR using PCA By Ohad Klausner 1. Introduction Optical character recognition usually abbreviated to OCR, involves a computer system designed to translate images of typewritten text (usually captured by a scanner) into machine editable text or to translate pictures of characters into a standard encoding scheme representing them. OCR began as a field of research in artificial intelligence and computational vision. In this project I decided to implement OCR using the appearance based recognition technique. Formally, the problem can be stated as follows: given a training dataset x, and an object o, find object xj, within the dataset, most similar to o. PCA (defined below) is a popular technique in apperance based recognition. 2. Pricipal Components Analysis In statistics, principal components analysis (PCA) is a technique that can be used to simplify a dataset., more formally it is a linear transformation that chooses a new coordinate system for the data set such that the greatest variance by any projection of the data set comes to lie on the first axis (then called the first principal component), the second greatest variance on the second axis, and so on. PCA can be used for reducing dimnesionalty in a dataset while retaining those characteristics of the dataset that contribute most to its varaince by eliminating the later principal components (by a more or less heuristic decision). These characteristics may be the "most important", but this is not necessarily the case, depending on the application. PCA has the speciality of being the optimal linear transformation subspace that has largest variance. However this comes at the price of greater computational requirement. Unlike other linear transforms, the PCA does not have a fixed set of basis vectors, Its basis vectors depend on the data set. Assuming zero empirical mean (the empirical mean of the distribution has been subtracted from the data set), the principal component wi of a dataset x can be calculate by finding the eigenvalues and eigenvectors of the covariance matrix of x, we find that the eigenvectors with the largest eigenvalues correspond to the dimensions that have the strongest correaltion in the dataset. The original measurements are finally projected onto the reduced vector space. 3. Appearance Based Recognition Using PCA. 1. Obtian a trianing set of images of all objects of interset (in our case: the ABC or the aleph-beth) under variable conditions (in our case: different fonts, bold, italic, etc.). 2. Create a eigenspace from the training set using PCA. 3. Recognize the given image: o Project both the image and the training set to the PCA subspace (eigenspace). o Compute the distances to the training set images in the eigenspace (in our case: using L2 norm) o Find object oj with minimal distance from the given image o Return oj name. 4. The Algorithm 4.1 Creating PCA subspace (eigenspace). o Organize the image database into colunm vectors. The vector size eqauls the image height multiplied by the image width. All the database images must be of the same size, 64 x 48 in our case (equivalent to a 36 size font). The result is a vector_size x database_size matrix, ColumnVectors. o Find the empirical mean vector. Find the empirical mean along each dimension. The result is a vector_size x 1 vector, EmpiricalMean. o Subtract the empirical mean vector EmpiricalMean from each column of the data matrix ColumnVectors. Store the mean-subtracted data in a vector_size x database_size matrix, MeanSubracted. o Compute the eigenvectors and eigenvalues of the covariance matrix of MeanSubracted. In this phase I deviated form the original PCA algorithm. Finding the eigenvectors and eigenvalues of the covariance matrix of MeanSubracted, which is a vector_size x vector _size matrix, was more then Matlab could handle. When I tried to do so, I got an “out of virtual memory” error. Therefore I used a method (that I found on the web), of obtaining the covariance matrix of the transposed matrix of MeanSubracted is a database_size x database_size matrix, which is a smaller matrix but gives similar eigenvalues and eigenvectors as the original matrix. In order to convert these eigenvectors to the eigenvectors required I multiplied the eigenvectors by the MeanSubracted matrix. o Sort the eigenvectors by decreasing eigenvalue. o Create a k dimensional subspace. Save the first k eigenvectors as a matrix, SubSpace. Eigenvector with normalized eigenvalue close to zero will not be saved even though it is in the k largest eigenvalues. The end result is a k (or less) dimensional subspace. 4.2 Recognizing a given image o Transform the image into a colunm vector. Resize the image to the database images size and set it as a colunm vector, ImageColumVec. o Find the distance from the image colunm vector to each of the database colunm vectors in the subspace. Project both the image vector and the training set (database) vector to the PCA subspace, multiply SubSpaceT by ImageColumVec and by ColumnVectors and compute the distance using the L2 norm method. o Return the name (label) of the colunm vector with the minimal distance. 5. The Program The program consists of 9 files and 2 main functions, written in Matlab. Main functions: 1. CreateDB(): loads the database, trains it and saves the data for the OCR use. 2. OCR (image_file_name): loads the image and recognizes it. Files: 1. database.zip – an archive of the database images. Unzip it before database creation and training. 2. imread2.m – this function loads an image and creates its negative. 3. getImages.m – a script used for loading the database images. This file is the one needed to be changed in order to update/add images to the database. 4. pca.m – this function trains the dataset with the images loaded by the script, using the PCA algorithm described above. 5. CreateDB.m – holds the CreateBD() function. 6. PCAdateBase.mat – if created, holds the PCA saved data. 7. im_resize.m – resizes the image to the desired size 8. getCharId.m - this function preforms the recognizing algorithm described above. 9. OCR.m - holds the OCR (image_file_name) function. 6. Results I ran the program using a full hebrew Aleph Beth database, 270 images (10 different images per letter). After running a few tests, the recognition success rate was about 50% due to two main reasons: location sensitivity and similar letters with small differences between them. Location sensitivity: character recognition via apperance based recognition is location sensitive because charatcers come in different sizes (using the same font size) and can not always by at center of the image. In addition, in different fonts, the same letter can by placed in different locations within the “letter box”. When a given letter is located in a different location then the correct dataset images the recognition algorithm will return a long distance under the L2 norm method and a shorter distance can be found to an incorrect letter. For example: the following image was recognized correctly as but the same image (letter) moved aside within the “letter box” incorrectly recognized as was . Similar letters: among the hebrew Aleph Beth one can find very similar letters with very small differences between them. For example VAV “ ”וand NUN SOFIT “”ן, can be called a “similar couple”. This kind of similarity can cause mistakes when writing with a specific font in which the letter resembles its “similar couple partner”. For example: this Kaph Sofit . was incorrectly recognized as Resh 7. Discussion The goal of my project was to create a reliable OCR using the PCA method. After testing this method and ending up with a poor recognition rate as described above, one may think that I failed reaching that goal, but with a few enhancements (maybe a project for next year) one can correct the problems described above. Creating a whole word OCR (instand of one letter at a time) would minimize the similar letters mistake. This can be done by loading a whole word image, dividing it into letters using some clustering or/and a edge detecting techniques and sending the letter to my OCR with a flag indicating the letter location in the word (last letter or not). Because most of the “similar couples”, described above, contains one final (Sofit) letter, ignoring the final letters when checking a letter form the beginning or the middle of the word would minimize those mistakes. Centering the letters. By centering the letters within the “image box” (both the dataset and the given character) the location sensitivity problem would be solved, because all the letters will be in the same location within the “image box”. This can be done again by using clustering or/and a edge detecting techniques in order to find the location of the letter within the “image box” and moving it to the center. Choosing k. k is the dimension of subspace. k represents the trade-off between the recognition accuracy and the amount of compution required. A large k requires more compution time but results in better accuracy. In this case k was choosen arbitrarily to be 32. Obviously, a higher k would have resulted in more accurate results. Exhaming the eigenvalues can proved a method for a better choise of k. 8. References 1. Wikipedia web encyclopedia: http://en.wikipedia.org/wiki/Main_Page 2. Matt's Matlab Tutorial Source Code Page: http://joplin.ucsd.edu/Tutorial/matlab.html 3. Lecture Notes form “Introduction to Computational and Biological Vision” course: Object Identification and Recognition III - Apearance Based Recognition