Fundamental concepts of software reuse

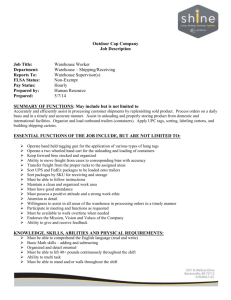
