Band-Pass Signals & Systems: Representation and Analysis


4 Characterization of Communication Signals and Systems

4.1 Representation of band-pass signals and systems

(1). Signals and channels (systems) that satisfy the condition that their bandwidth is much

smaller than the carrier frequency are termed narrowband band pass signals and

channels (systems).

(2). With no loss of generality and for mathematical convenience, it is desirable to reduce all

band pass signals and channels to equivalent low pass signals and channels.

4.1.1 Representation of band pass signals

(1). A real-valued signal s (t ) is called a band pass signal if it has a frequency content

concentrated in a narrow band of frequencies in the vicinity of a frequency f c .

(2). Consider a signal $s_+(t)$ that contains only the positive frequencies in $s(t)$; it is called the analytic signal or the pre-envelope of $s(t)$.

(Why are only the positive frequencies considered? For a real-valued signal the spectrum is conjugate-symmetric, $S(-f) = S^*(f)$, so the negative-frequency half carries no additional information.)

(3). Viewed from the frequency domain, the Fourier transform of $s_+(t)$ is

$$S_+(f) = F[s_+(t)] = 2u(f)S(f) \tag{4.1-1}$$

where

$$S(f) = F[s(t)]; \qquad u(f) = \begin{cases} 1, & f > 0 \\ 0, & f < 0 \end{cases}$$

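Then the pre-envelope of $s(t)$ is expressed by

$$s_+(t) = \int_{-\infty}^{\infty} S_+(f) e^{j2\pi ft}\,df = F^{-1}[2u(f)] \star F^{-1}[S(f)] \tag{4.1-2}$$

where $\star$ denotes convolution. But since

$$F^{-1}[2u(f)] = \delta(t) + \frac{j}{\pi t} \tag{4.1-3}$$

then

$$s_+(t) = \left[\delta(t) + \frac{j}{\pi t}\right] \star s(t) = s(t) + j\,\frac{1}{\pi t} \star s(t) \tag{4.1-4}$$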

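Define the Hilbert transform of $s(t)$ as

$$\hat{s}(t) = \frac{1}{\pi t} \star s(t) = \frac{1}{\pi}\int_{-\infty}^{\infty} \frac{s(\tau)}{t-\tau}\,d\tau \tag{4.1-5}$$

where

$$h(t) = \frac{1}{\pi t}, \qquad -\infty < t < \infty \tag{4.1-6}$$

The filter $h(t)$ is called the Hilbert transformer.

The frequency response of this filter is

$$H(f) = F[h(t)] = \int_{-\infty}^{\infty} h(t) e^{-j2\pi ft}\,dt = \frac{1}{\pi}\int_{-\infty}^{\infty} \frac{1}{t} e^{-j2\pi ft}\,dt = \begin{cases} -j, & f > 0 \\ 0, & f = 0 \\ j, & f < 0 \end{cases} \tag{4.1-7}$$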

The observations about this filter are as follows:

(1). $|H(f)| = 1, \quad f \neq 0$

(2). $\Theta(f) = \begin{cases} -\pi/2, & f > 0 \\ \pi/2, & f < 0 \end{cases}$

That is, this filter acts as a $90^\circ$ phase shifter for all frequencies in the input signal.

An equivalent low pass representation of the band pass analytic signal $s_+(t)$ is defined as

$$S_l(f) = S_+(f + f_c) \tag{4.1-8}$$

and

$$s_l(t) = s_+(t) e^{-j2\pi f_c t} = [s(t) + j\hat{s}(t)] e^{-j2\pi f_c t} \tag{4.1-9}$$

or equivalently,

$$s(t) + j\hat{s}(t) = s_l(t) e^{j2\pi f_c t} \tag{4.1-10}$$

In general, $s_l(t)$ is complex-valued:

$$s_l(t) = x(t) + jy(t) \tag{4.1-11}$$

Substituting (4.1-11) into (4.1-10) and equating real and imaginary parts gives

$$s(t) = x(t)\cos(2\pi f_c t) - y(t)\sin(2\pi f_c t) \tag{4.1-12}$$

$$\hat{s}(t) = x(t)\sin(2\pi f_c t) + y(t)\cos(2\pi f_c t) \tag{4.1-13}$$

Notes:

(1). $x(t), y(t)$ may be viewed as amplitude modulations impressed on the carrier components $\cos(2\pi f_c t)$ and $\sin(2\pi f_c t)$.

(2). Since the two carriers are in phase quadrature, $x(t), y(t)$ are called the quadrature components of $s(t)$.

$$s(t) = \mathrm{Re}\{[x(t) + jy(t)] e^{j2\pi f_c t}\} = \mathrm{Re}[s_l(t) e^{j2\pi f_c t}] \tag{4.1-14}$$

The low pass signal $s_l(t)$ is usually called the complex envelope of the real signal $s(t)$.

The polar representation of the low pass signal $s_l(t)$ is

$$s_l(t) = a(t) e^{j\theta(t)} \tag{4.1-15}$$

where

$$a(t) = \sqrt{x^2(t) + y^2(t)} = |s_l(t)| \tag{4.1-16}$$

$$\theta(t) = \tan^{-1}\frac{y(t)}{x(t)} \tag{4.1-17}$$

Therefore, the signal $s(t)$ can be expressed as

$$s(t) = \mathrm{Re}[s_l(t) e^{j2\pi f_c t}] = \mathrm{Re}[a(t) e^{j(2\pi f_c t + \theta(t))}] = a(t)\cos(2\pi f_c t + \theta(t)) \tag{4.1-18}$$

where

$a(t)$ is the envelope of $s(t)$.

$\theta(t)$ is the phase of $s(t)$.
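The three equivalent forms (4.1-12), (4.1-14) and (4.1-18) can be checked numerically at a single time instant. The sketch below is only an illustration: the function names and the sample values are assumptions, not part of the notes, and the phase is computed with the four-quadrant `cmath.phase` rather than a plain arctangent of $y/x$.

```python
import cmath
import math

def s_quadrature(x, y, fc, t):
    """Quadrature form (4.1-12): x cos(2 pi fc t) - y sin(2 pi fc t)."""
    w = 2 * math.pi * fc * t
    return x * math.cos(w) - y * math.sin(w)

def s_complex_envelope(x, y, fc, t):
    """Complex-envelope form (4.1-14): Re[s_l e^{j 2 pi fc t}], s_l = x + jy."""
    return (complex(x, y) * cmath.exp(2j * math.pi * fc * t)).real

def s_polar(x, y, fc, t):
    """Envelope/phase form (4.1-18): a cos(2 pi fc t + theta)."""
    sl = complex(x, y)
    a = abs(sl)               # envelope a = |s_l|
    theta = cmath.phase(sl)   # four-quadrant phase of s_l
    return a * math.cos(2 * math.pi * fc * t + theta)

# Hypothetical sample values of x(t), y(t) at one time instant
x, y, fc, t = 0.3, -0.8, 10.0, 0.0137
print(s_quadrature(x, y, fc, t),
      s_complex_envelope(x, y, fc, t),
      s_polar(x, y, fc, t))
```

All three printed values should agree to within floating-point rounding.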

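The Fourier transform of $s(t)$ can be written as

$$S(f) = F[s(t)] = \int_{-\infty}^{\infty} \{\mathrm{Re}[s_l(t) e^{j2\pi f_c t}]\} e^{-j2\pi ft}\,dt \tag{4.1-19}$$

Use of the identity

$$\mathrm{Re}(\xi) = \tfrac{1}{2}(\xi + \xi^*) \tag{4.1-20}$$

gives

$$S(f) = \frac{1}{2}\int_{-\infty}^{\infty} [s_l(t) e^{j2\pi f_c t} + s_l^*(t) e^{-j2\pi f_c t}] e^{-j2\pi ft}\,dt = \frac{1}{2}[S_l(f - f_c) + S_l^*(-f - f_c)] \tag{4.1-21}$$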

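Another important quantity is the energy of the signal $s(t)$:

$$E = \int_{-\infty}^{\infty} s^2(t)\,dt = \int_{-\infty}^{\infty} \{\mathrm{Re}[s_l(t) e^{j2\pi f_c t}]\}^2\,dt \tag{4.1-22}$$

or

$$E = \frac{1}{2}\int_{-\infty}^{\infty} |s_l(t)|^2\,dt + \frac{1}{2}\int_{-\infty}^{\infty} |s_l(t)|^2 \cos[4\pi f_c t + 2\theta(t)]\,dt \tag{4.1-23}$$

Because $|s_l(t)|^2$ varies slowly relative to the double-frequency term $\cos[4\pi f_c t + 2\theta(t)]$, the second term on the right-hand side is negligible for a narrowband signal. Therefore, the energy of $s(t)$ is

$$E = \frac{1}{2}\int_{-\infty}^{\infty} |s_l(t)|^2\,dt = \frac{1}{2}\int_{-\infty}^{\infty} a^2(t)\,dt \tag{4.1-24}$$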

4.1.2 Representation of linear band pass systems

A band pass linear filter or system with real-valued impulse response $h(t)$ has

$$H^*(-f) = H(f) \tag{4.1-25}$$

where $H(f) = F[h(t)]$.

Define

$$H_l(f - f_c) = \begin{cases} H(f), & f > 0 \\ 0, & f < 0 \end{cases} \tag{4.1-26}$$

then

$$H_l^*(-f - f_c) = \begin{cases} 0, & f > 0 \\ H^*(-f), & f < 0 \end{cases} \tag{4.1-27}$$

Hint: from (4.1-26), $H_l(f - f_c) = H(f)$ for $f > 0$ implies $H_l(-f - f_c) = H(-f)$ for $f < 0$; taking the complex conjugate of both sides gives (4.1-27).

From (4.1-25), we have

$$H(f) = H_l(f - f_c) + H_l^*(-f - f_c) \tag{4.1-28}$$

and

$$h(t) = F^{-1}[H(f)] = h_l(t) e^{j2\pi f_c t} + h_l^*(t) e^{-j2\pi f_c t} = 2\,\mathrm{Re}[h_l(t) e^{j2\pi f_c t}] \tag{4.1-29}$$

Discussion:

Note that (4.1-28) has no factor of one half, whereas (4.1-21) does. The difference stems from the definition of the analytic signal in (4.1-1): the Fourier transform of $s_+(t)$ is intentionally twice that of $s(t)$ on the positive frequencies, so when $S(f)$ is evaluated in terms of $S_l(f)$ the doubling must be undone by dividing by two. The system function $H_l(f)$ in (4.1-26) is defined without this doubling.

4.1.3 Response of a band pass system to a band pass signal

Let the output of the band pass system be expressed in terms of its equivalent low pass form as

$$r(t) = \mathrm{Re}[r_l(t) e^{j2\pi f_c t}] \tag{4.1-30}$$

where

$$r(t) = s(t) \star h(t) = \int_{-\infty}^{\infty} s(\tau) h(t - \tau)\,d\tau \tag{4.1-31}$$

Viewed from the frequency domain,

$$R(f) = S(f) H(f) \tag{4.1-32}$$

From (4.1-21) and (4.1-28), we obtain

$$R(f) = \frac{1}{2}[S_l(f - f_c) + S_l^*(-f - f_c)][H_l(f - f_c) + H_l^*(-f - f_c)] \tag{4.1-33}$$

Since both the input signal and the impulse response are narrowband, $S_l(f - f_c) \approx 0$ and $H_l(f - f_c) = 0$ for $f < 0$. As a result, the cross terms vanish:

$$S_l(f - f_c) H_l^*(-f - f_c) = 0, \quad \text{and} \quad S_l^*(-f - f_c) H_l(f - f_c) = 0$$

Finally, (4.1-33) can be simplified as

$$R(f) = \frac{1}{2}[S_l(f - f_c) H_l(f - f_c) + S_l^*(-f - f_c) H_l^*(-f - f_c)] = \frac{1}{2}[R_l(f - f_c) + R_l^*(-f - f_c)] \tag{4.1-34}$$

where

$$R_l(f) = S_l(f) H_l(f) \tag{4.1-35}$$

The counterpart of $R_l(f)$ in the time domain is

$$r_l(t) = s_l(t) \star h_l(t) = \int_{-\infty}^{\infty} s_l(\tau) h_l(t - \tau)\,d\tau \tag{4.1-36}$$

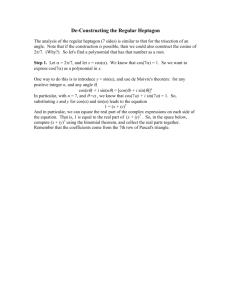

To illustrate the correspondence between the band pass and low pass representations, the relations derived so far are summarized below.

Bandpass representation: input $s(t)$; system $h(t), H(f)$; output $r(t) = s(t) \star h(t)$

Equivalent lowpass representation: input $s_l(t)$; system $h_l(t), H_l(f)$; output $r_l(t) = s_l(t) \star h_l(t)$

Time domain:

$s(t) = \mathrm{Re}[s_l(t) e^{j2\pi f_c t}]$ (4.1-18)

$h(t) = 2\,\mathrm{Re}[h_l(t) e^{j2\pi f_c t}]$ (4.1-29)

$r(t) = \mathrm{Re}[r_l(t) e^{j2\pi f_c t}]$ (4.1-30)

$s_+(t) = \int_{-\infty}^{\infty} S_+(f) e^{j2\pi ft}\,df$ (4.1-2)

$s_l(t) = s_+(t) e^{-j2\pi f_c t}$

Frequency domain:

$S_+(f) = F[s_+(t)] = 2u(f)S(f)$ (4.1-1)

$S_l(f) = S_+(f + f_c)$

$S(f) = \frac{1}{2}[S_l(f - f_c) + S_l^*(-f - f_c)]$ (4.1-21)

$H(f) = H_l(f - f_c) + H_l^*(-f - f_c)$ (4.1-28)

$R(f) = \frac{1}{2}[R_l(f - f_c) + R_l^*(-f - f_c)]$ (4.1-34)

4.1.4 Representation of band pass stationary stochastic processes

Suppose $n(t)$ is a sample function of a zero-mean WSS process with PSD $\Phi_{nn}(f)$. It is related to its equivalent low pass form by

$$n(t) = a(t)\cos[2\pi f_c t + \theta(t)] \tag{4.1-37}$$

$$\phantom{n(t)} = x(t)\cos(2\pi f_c t) - y(t)\sin(2\pi f_c t) \tag{4.1-38}$$

$$\phantom{n(t)} = \mathrm{Re}[z(t) e^{j2\pi f_c t}] \tag{4.1-39}$$

where

(1). $z(t)$ is the complex envelope of the real-valued random process $n(t)$.

(2). $x(t), y(t)$ are the quadrature components of $n(t)$.

(3). $E[n(t)] = 0$; therefore, $E[x(t)] = E[y(t)] = 0$.

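Since $n(t)$ is WSS, its quadrature components satisfy the following properties:

$$\phi_{xx}(\tau) = \phi_{yy}(\tau) \tag{4.1-40}$$

$$\phi_{xy}(\tau) = -\phi_{yx}(\tau) \tag{4.1-41}$$

To see this, write the autocorrelation of $n(t)$ as

$$\begin{aligned} \phi_{nn}(\tau) &= E[n(t) n(t+\tau)] \\ &= E\{[x(t)\cos 2\pi f_c t - y(t)\sin 2\pi f_c t]\,[x(t+\tau)\cos 2\pi f_c(t+\tau) - y(t+\tau)\sin 2\pi f_c(t+\tau)]\} \\ &= \phi_{xx}(\tau)\cos 2\pi f_c t \cos 2\pi f_c(t+\tau) + \phi_{yy}(\tau)\sin 2\pi f_c t \sin 2\pi f_c(t+\tau) \\ &\quad - \phi_{xy}(\tau)\sin 2\pi f_c t \cos 2\pi f_c(t+\tau) - \phi_{yx}(\tau)\cos 2\pi f_c t \sin 2\pi f_c(t+\tau) \end{aligned} \tag{4.1-42}$$

Use of the trigonometric identities

$$\begin{aligned} \cos A \cos B &= \tfrac{1}{2}[\cos(A - B) + \cos(A + B)] \\ \sin A \sin B &= \tfrac{1}{2}[\cos(A - B) - \cos(A + B)] \\ \sin A \cos B &= \tfrac{1}{2}[\sin(A - B) + \sin(A + B)] \end{aligned} \tag{4.1-43}$$

then gives

$$\begin{aligned} \phi_{nn}(\tau) &= \tfrac{1}{2}[\phi_{xx}(\tau) + \phi_{yy}(\tau)]\cos 2\pi f_c \tau + \tfrac{1}{2}[\phi_{xx}(\tau) - \phi_{yy}(\tau)]\cos 2\pi f_c(2t + \tau) \\ &\quad - \tfrac{1}{2}[\phi_{yx}(\tau) - \phi_{xy}(\tau)]\sin 2\pi f_c \tau - \tfrac{1}{2}[\phi_{yx}(\tau) + \phi_{xy}(\tau)]\sin 2\pi f_c(2t + \tau) \end{aligned} \tag{4.1-44}$$

Because $n(t)$ is stationary, the right-hand side cannot depend on $t$, so the two terms in $2t + \tau$ must vanish; this is exactly (4.1-40) and (4.1-41). Hence

$$\phi_{nn}(\tau) = E[n(t) n(t+\tau)] = \phi_{xx}(\tau)\cos(2\pi f_c \tau) - \phi_{yx}(\tau)\sin(2\pi f_c \tau) \tag{4.1-45}$$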

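The equivalent low pass process is

$$z(t) = x(t) + jy(t) \tag{4.1-46}$$

Its autocorrelation function is defined as

$$\phi_{zz}(\tau) = \tfrac{1}{2} E[z^*(t) z(t+\tau)] \tag{4.1-47}$$

Keep in mind that many books define this autocorrelation without the factor 1/2.

$$\phi_{zz}(\tau) = \tfrac{1}{2}[\phi_{xx}(\tau) + \phi_{yy}(\tau) - j\phi_{xy}(\tau) + j\phi_{yx}(\tau)] \tag{4.1-48}$$

Using (4.1-40) and (4.1-41),

$$\phi_{zz}(\tau) = \phi_{xx}(\tau) + j\phi_{yx}(\tau) \tag{4.1-49}$$

Combining (4.1-49) with (4.1-45),

$$\phi_{nn}(\tau) = \mathrm{Re}[\phi_{zz}(\tau) e^{j2\pi f_c \tau}] \tag{4.1-50}$$

and the PSD relationship is

$$\Phi_{nn}(f) = \int_{-\infty}^{\infty} \{\mathrm{Re}[\phi_{zz}(\tau) e^{j2\pi f_c \tau}]\} e^{-j2\pi f\tau}\,d\tau = \frac{1}{2}[\Phi_{zz}(f - f_c) + \Phi_{zz}(-f - f_c)] \tag{4.1-51}$$

Note that $\phi_{zz}^*(\tau) = \phi_{zz}(-\tau)$; accordingly, $\Phi_{zz}(f)$ is a real-valued function of $f$.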

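Summary of the band pass and low pass representations of the process:

Band pass representation | Low pass representation | Relationship
$n(t)$ | $z(t)$ | $n(t) = \mathrm{Re}[z(t) e^{j2\pi f_c t}]$
$\phi_{nn}(\tau)$ | $\phi_{zz}(\tau)$ | $\phi_{nn}(\tau) = \mathrm{Re}[\phi_{zz}(\tau) e^{j2\pi f_c \tau}]$
$\Phi_{nn}(f)$ | $\Phi_{zz}(f)$ | $\Phi_{nn}(f) = \frac{1}{2}[\Phi_{zz}(f - f_c) + \Phi_{zz}(-f - f_c)]$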

Properties of quadrature components:

Any cross-correlation function satisfies

$$\phi_{yx}(\tau) = \phi_{xy}(-\tau) \tag{4.1-52}$$

then from (4.1-41)

$$\phi_{xy}(\tau) = -\phi_{xy}(-\tau) \tag{4.1-53}$$

That is, $\phi_{xy}(\tau)$ is an odd function of $\tau$, and therefore $\phi_{xy}(0) = 0$. In other words, $x(t)$ and $y(t)$ are uncorrelated at $\tau = 0$. Besides,

(1). If $\phi_{xy}(\tau) = 0$ for all $\tau$, then $\phi_{zz}(\tau) = \phi_{xx}(\tau)$ is real and the PSD satisfies

$$\Phi_{zz}(f) = \Phi_{zz}(-f) \tag{4.1-54}$$

so that $\Phi_{zz}(f)$ is symmetric about $f = 0$.

(2). If $n(t)$ is Gaussian, then $x(t)$ and $y(t)$ are jointly Gaussian; hence, at $\tau = 0$, they are statistically independent with joint pdf

$$p(x, y) = \frac{1}{2\pi\sigma^2} e^{-(x^2 + y^2)/2\sigma^2} \tag{4.1-55}$$

with $\sigma^2 = \phi_{xx}(0) = \phi_{yy}(0) = \phi_{zz}(0)$.

Representation of white noise

White noise $w(t)$ is an idealized concept; it is defined as a stochastic process that has a flat (constant) power spectral density over the entire frequency range. Statistically,

$$\Phi_{ww}(f) = \tfrac{1}{2} N_0, \quad \text{or equivalently in the time domain,} \quad \phi_{ww}(\tau) = \tfrac{1}{2} N_0\,\delta(\tau).$$

Note: Because of its wideband property, white noise cannot be expressed in terms of quadrature components, which apply to narrowband signals or systems only.

Consider a band pass white noise $n(t)$ with PSD $\Phi_{nn}(f)$, confined to a bandwidth $B$. Its equivalent low pass noise $z(t)$ has PSD

$$\Phi_{zz}(f) = \begin{cases} N_0, & |f| \le \tfrac{1}{2}B \\ 0, & |f| > \tfrac{1}{2}B \end{cases} \tag{4.1-56}$$

From (4.1-56), we can obtain the autocorrelation of $z(t)$ as

$$\phi_{zz}(\tau) = F^{-1}[\Phi_{zz}(f)] = N_0 \frac{\sin(\pi B\tau)}{\pi\tau} \tag{4.1-57}$$

and

$$\phi_{zz}(\tau) \to N_0\,\delta(\tau), \quad \text{as } B \to \infty \tag{4.1-58}$$

Here one must keep in mind that the constant is $N_0$, not $\tfrac{1}{2}N_0$.

Recall that the PSDs for white noise and band pass white noise are symmetric about $f = 0$; therefore, $\phi_{yx}(\tau) = 0$ for all $\tau$, and

$$\phi_{zz}(\tau) = \phi_{xx}(\tau) = \phi_{yy}(\tau) \tag{4.1-59}$$

Notes:

(1). $x(t), y(t)$ are uncorrelated for all time shifts.

(2). The autocorrelation functions of $z(t)$, $x(t)$ and $y(t)$ are all equal.


4.2 Signal space representation

4.2.1 Vector space concepts

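A vector $\mathbf{v}$ is expressed in terms of a set of unit vectors (basis vectors) $\mathbf{e}_i$ as

$$\mathbf{v} = \sum_{i=1}^{n} v_i \mathbf{e}_i \tag{4.2-1}$$

The inner product of two n-dimensional vectors $\mathbf{v}_1, \mathbf{v}_2$ is defined as

$$\mathbf{v}_1 \cdot \mathbf{v}_2 = \sum_{i=1}^{n} v_{1i} v_{2i} \tag{4.2-2}$$

Two vectors $\mathbf{v}_1, \mathbf{v}_2$ are orthogonal if $\mathbf{v}_1 \cdot \mathbf{v}_2 = 0$, and a set of m vectors is orthogonal if

$$\mathbf{v}_i \cdot \mathbf{v}_j = 0, \quad i \neq j \tag{4.2-3}$$

The norm of a vector $\mathbf{v}$ is denoted by $\|\mathbf{v}\|$ and is defined as

$$\|\mathbf{v}\| = (\mathbf{v} \cdot \mathbf{v})^{1/2} = \sqrt{\sum_{i=1}^{n} v_i^2} \tag{4.2-4}$$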

A set of m vectors is said to be orthonormal if the vectors are orthogonal and each vector

has a unit norm.

A set of m vectors is said to be linearly independent if no one vector can be represented as

a linear combination of the remaining vectors.

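Two n-dimensional vectors $\mathbf{v}_1, \mathbf{v}_2$ satisfy the triangle inequality

$$\|\mathbf{v}_1 + \mathbf{v}_2\| \le \|\mathbf{v}_1\| + \|\mathbf{v}_2\| \tag{4.2-5}$$

with equality if $\mathbf{v}_1, \mathbf{v}_2$ are in the same direction, i.e., $\mathbf{v}_1 = a\mathbf{v}_2$, $a > 0$, $a \in R$.

From the triangle inequality, there follows the Cauchy-Schwarz inequality

$$|\mathbf{v}_1 \cdot \mathbf{v}_2| \le \|\mathbf{v}_1\|\,\|\mathbf{v}_2\| \tag{4.2-6}$$

with equality if $\mathbf{v}_1 = a\mathbf{v}_2$, $a \in R$.

The norm square of the sum of two vectors can be expressed as

$$\|\mathbf{v}_1 + \mathbf{v}_2\|^2 = \|\mathbf{v}_1\|^2 + \|\mathbf{v}_2\|^2 + 2\,\mathbf{v}_1 \cdot \mathbf{v}_2 \tag{4.2-7}$$

If $\mathbf{v}_1, \mathbf{v}_2$ are orthogonal, then $\mathbf{v}_1 \cdot \mathbf{v}_2 = 0$ and hence,

$$\|\mathbf{v}_1 + \mathbf{v}_2\|^2 = \|\mathbf{v}_1\|^2 + \|\mathbf{v}_2\|^2 \tag{4.2-8}$$

A linear transformation of a vector $\mathbf{v}$ into another vector $\mathbf{v}'$ is performed by the transformation matrix $\mathbf{A}$:

$$\mathbf{v}' = \mathbf{A}\mathbf{v} \tag{4.2-9}$$

In the special case $\mathbf{v}' = \lambda\mathbf{v}$, i.e., the two vectors are collinear,

$$\mathbf{A}\mathbf{v} = \lambda\mathbf{v}, \quad \lambda \in R \tag{4.2-10}$$

The vector $\mathbf{v}$ is said to be an eigenvector of the transformation $\mathbf{A}$ and $\lambda$ is the corresponding eigenvalue.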

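The Gram-Schmidt orthogonalization procedure

Suppose we have m n-dimensional vectors and want to construct a set of orthonormal vectors from them. Arbitrarily select a vector, say $\mathbf{v}_1$, and normalize it:

$$\mathbf{u}_1 = \frac{\mathbf{v}_1}{\|\mathbf{v}_1\|} \tag{4.2-11}$$

Next, select $\mathbf{v}_2$ and subtract its projection onto $\mathbf{u}_1$:

$$\mathbf{u}_2' = \mathbf{v}_2 - (\mathbf{v}_2 \cdot \mathbf{u}_1)\mathbf{u}_1 \tag{4.2-12}$$

After normalizing the vector $\mathbf{u}_2'$, we obtain the second unit-length vector $\mathbf{u}_2$, which is orthogonal to $\mathbf{u}_1$:

$$\mathbf{u}_2 = \frac{\mathbf{u}_2'}{\|\mathbf{u}_2'\|} \tag{4.2-13}$$

The procedure carries on in the same manner. For example, for the vector $\mathbf{v}_3$ we first obtain $\mathbf{u}_3'$:

$$\mathbf{u}_3' = \mathbf{v}_3 - (\mathbf{v}_3 \cdot \mathbf{u}_1)\mathbf{u}_1 - (\mathbf{v}_3 \cdot \mathbf{u}_2)\mathbf{u}_2 \tag{4.2-14}$$

The normalized vector $\mathbf{u}_3$, which is orthogonal to both $\mathbf{u}_1$ and $\mathbf{u}_2$, is

$$\mathbf{u}_3 = \frac{\mathbf{u}_3'}{\|\mathbf{u}_3'\|} \tag{4.2-15}$$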
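The procedure of (4.2-11) to (4.2-15) can be sketched in a few lines of Python. This is a minimal illustration, not a numerically robust routine: the function names and the tolerance `tol` used to discard linearly dependent vectors are assumptions introduced here.

```python
import math

def dot(u, v):
    """Inner product of two real vectors, as in (4.2-2)."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal basis built from `vectors`, in the given order.

    Each new vector has its projections onto the already accepted basis
    vectors subtracted and is then normalized. A vector whose residual
    norm is below `tol` is treated as linearly dependent and skipped.
    """
    basis = []
    for v in vectors:
        residual = list(v)
        for u in basis:
            c = dot(v, u)  # projection coefficient (v . u)
            residual = [r - c * ui for r, ui in zip(residual, u)]
        norm = math.sqrt(dot(residual, residual))
        if norm > tol:
            basis.append([r / norm for r in residual])
    return basis

# The third vector is the sum of the first two, so only two basis vectors result.
basis = gram_schmidt([[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [2.0, 1.0, 1.0]])
print(len(basis))
```

The number of basis vectors returned can be smaller than the number of input vectors, which mirrors the remark $N \le M$ made later for signal waveforms.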

4.2.2 Signal space concepts

Consider a set of signals defined on some interval $[a, b]$.

The inner product of two complex-valued signals $x_1(t), x_2(t)$ is denoted by $\langle x_1(t), x_2(t) \rangle$ and is defined as

$$\langle x_1(t), x_2(t) \rangle = \int_a^b x_1(t) x_2^*(t)\,dt \tag{4.2-16}$$

The signals are orthogonal if their inner product is zero, i.e., $\langle x_1(t), x_2(t) \rangle = 0$.

The norm of a signal is defined as

$$\|x(t)\| = \left(\int_a^b |x(t)|^2\,dt\right)^{1/2} \tag{4.2-17}$$

A set of m signals is orthonormal if they are orthogonal and their norms are all unity.

A set of m signals is linearly independent if no signal can be represented as a linear combination of the remaining signals.

The triangle inequality for two signals is

$$\|x_1(t) + x_2(t)\| \le \|x_1(t)\| + \|x_2(t)\| \tag{4.2-18}$$

and the Cauchy-Schwarz inequality is

$$\left|\int_a^b x_1(t) x_2^*(t)\,dt\right| \le \left(\int_a^b |x_1(t)|^2\,dt\right)^{1/2} \left(\int_a^b |x_2(t)|^2\,dt\right)^{1/2} \tag{4.2-19}$$

with equality when $x_1(t) = a x_2(t)$, $a \in C$ (the set of complex numbers).

4.2.3 orthogonal expansions of signals

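Let $s(t)$ be a deterministic, real-valued signal with finite energy

$$E_s = \int_{-\infty}^{\infty} [s(t)]^2\,dt \tag{4.2-20}$$

Suppose there exists a set of orthonormal functions $\{f_n(t), n = 1, 2, \ldots, K\}$, i.e.,

$$\int_{-\infty}^{\infty} f_n(t) f_m(t)\,dt = \begin{cases} 0, & m \neq n \\ 1, & m = n \end{cases} \tag{4.2-21}$$

The signal can be approximated by a weighted linear combination of these functions, i.e.,

$$\hat{s}(t) = \sum_{k=1}^{K} s_k f_k(t), \quad \{s_k, 1 \le k \le K\} \tag{4.2-22}$$

The approximation error incurred is

$$e(t) = s(t) - \hat{s}(t) \tag{4.2-23}$$

Our objective is to choose the coefficients $s_k$ that minimize the error energy $E_e$, i.e.,

$$E_e = \int_{-\infty}^{\infty} [s(t) - \hat{s}(t)]^2\,dt = \int_{-\infty}^{\infty} \left[s(t) - \sum_{k=1}^{K} s_k f_k(t)\right]^2 dt \tag{4.2-24}$$

Based on the mean-square criterion, the optimum coefficients are obtained when the error is orthogonal to each of the functions in the series expansion. Thus,

$$\int_{-\infty}^{\infty} \left[s(t) - \sum_{k=1}^{K} s_k f_k(t)\right] f_n(t)\,dt = 0, \quad n = 1, 2, \ldots, K \tag{4.2-25}$$

Since the functions $\{f_n(t), n = 1, 2, \ldots, K\}$ are orthonormal, the above equation reduces to

$$s_n = \int_{-\infty}^{\infty} s(t) f_n(t)\,dt, \quad n = 1, 2, \ldots, K \tag{4.2-26}$$

The coefficient $s_n$ is exactly the projection of $s(t)$ onto the function $f_n(t)$.

The minimum mean square approximation error is

$$\begin{aligned} E_{\min} &= \int_{-\infty}^{\infty} [s(t) - \hat{s}(t)]^2\,dt = \int_{-\infty}^{\infty} [s(t) - \hat{s}(t)]\,s(t)\,dt \\ &= \int_{-\infty}^{\infty} [s(t)]^2\,dt - \sum_{k=1}^{K} s_k \int_{-\infty}^{\infty} f_k(t) s(t)\,dt \\ &= E_s - \sum_{k=1}^{K} s_k^2 \ge 0 \end{aligned} \tag{4.2-27}$$

where the second equality holds because the error is orthogonal to $\hat{s}(t)$.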


When the minimum mean square approximation error $E_{\min} = 0$,

$$E_s = \sum_{k=1}^{K} s_k^2 = \int_{-\infty}^{\infty} [s(t)]^2\,dt \tag{4.2-28}$$

and

$$s(t) = \sum_{k=1}^{K} s_k f_k(t) \tag{4.2-29}$$

When every finite energy signal can be represented by a series expansion with $E_{\min} = 0$, the set of orthonormal functions $\{f_n(t), n = 1, 2, \ldots, K\}$ is said to be complete.

Example 4.2-1 Trigonometric Fourier series

A finite energy signal $s(t)$ that is zero everywhere except on $(0, T)$ can be expanded in a Fourier series as

$$s(t) = \frac{a_0}{2} + \sum_{k=1}^{\infty} \left( a_k \cos\frac{2\pi kt}{T} + b_k \sin\frac{2\pi kt}{T} \right) \tag{4.2-30}$$

The coefficients that minimize the MSE are

$$a_k = \frac{2}{T}\int_0^T s(t)\cos\frac{2\pi kt}{T}\,dt, \quad k \ge 0; \qquad b_k = \frac{2}{T}\int_0^T s(t)\sin\frac{2\pi kt}{T}\,dt, \quad k \ge 1 \tag{4.2-31}$$

Gram-Schmidt procedure

We can construct a set of orthonormal functions from a set of finite energy signal waveforms $\{s_i(t), i = 1, 2, 3, \ldots, M\}$.

Arbitrarily choosing a waveform, say $s_1(t)$ with energy $E_1$, we construct the first orthonormal function as

$$f_1(t) = \frac{s_1(t)}{\sqrt{E_1}} \tag{4.2-32}$$

The second orthonormal function is constructed from $s_2(t)$ by first removing its projection onto $f_1(t)$,

$$c_{12} = \int_{-\infty}^{\infty} s_2(t) f_1(t)\,dt \tag{4.2-33}$$

$$f_2'(t) = s_2(t) - c_{12} f_1(t) \tag{4.2-34}$$

and then normalizing its energy:

$$f_2(t) = \frac{f_2'(t)}{\sqrt{E_2}} \tag{4.2-35}$$

where $E_2$ represents the energy of $f_2'(t)$. Generally, the orthogonalization of the kth function leads to

$$f_k(t) = \frac{f_k'(t)}{\sqrt{E_k}} \tag{4.2-36}$$

where

$$f_k'(t) = s_k(t) - \sum_{i=1}^{k-1} c_{ik} f_i(t) \tag{4.2-37}$$

and

$$c_{ik} = \int_{-\infty}^{\infty} s_k(t) f_i(t)\,dt, \quad i = 1, 2, \ldots, k-1 \tag{4.2-38}$$

The number of resulting orthonormal functions is $N \le M$.

Accordingly, the M signals can be expressed as linear combinations of the $\{f_n(t)\}$:

$$s_k(t) = \sum_{n=1}^{N} s_{kn} f_n(t), \quad k = 1, 2, \ldots, M \tag{4.2-39}$$

and

$$E_k = \int_{-\infty}^{\infty} [s_k(t)]^2\,dt = \sum_{n=1}^{N} s_{kn}^2 = \|\mathbf{s}_k\|^2 \tag{4.2-40}$$

where $\mathbf{s}_k$ is the vector form of the signal $s_k(t)$, with $E_k$ here denoting the energy of $s_k(t)$:

$$\mathbf{s}_k = [s_{k1}\ s_{k2}\ \ldots\ s_{kN}] \tag{4.2-41}$$

That is, any signal can be represented geometrically as a point in the signal space spanned by the orthonormal functions $\{f_n(t)\}$.

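A bandpass signal can be written in terms of its equivalent lowpass signal as

$$s_m(t) = \mathrm{Re}[s_{lm}(t) e^{j2\pi f_c t}], \quad m = 1, 2, \ldots, M \tag{4.2-42}$$

Recall that the signal energy is

$$E_m = \int_{-\infty}^{\infty} s_m^2(t)\,dt = \frac{1}{2}\int_{-\infty}^{\infty} |s_{lm}(t)|^2\,dt \tag{4.2-43}$$

The similarity between any two signals, say $s_m(t)$ and $s_k(t)$, can be measured in two ways.

(1). The normalized cross correlation

$$\frac{1}{\sqrt{E_m E_k}} \int_{-\infty}^{\infty} s_m(t) s_k(t)\,dt = \mathrm{Re}\left\{ \frac{1}{2\sqrt{E_m E_k}} \int_{-\infty}^{\infty} s_{lm}(t) s_{lk}^*(t)\,dt \right\} \tag{4.2-44}$$

The complex-valued cross correlation coefficient $\rho_{km}$ is defined as

$$\rho_{km} = \frac{1}{2\sqrt{E_m E_k}} \int_{-\infty}^{\infty} s_{lk}^*(t) s_{lm}(t)\,dt \tag{4.2-45}$$

Then

$$\mathrm{Re}(\rho_{km}) = \frac{1}{\sqrt{E_m E_k}} \int_{-\infty}^{\infty} s_m(t) s_k(t)\,dt \tag{4.2-46}$$

or, equivalently,

$$\mathrm{Re}(\rho_{km}) = \frac{\mathbf{s}_m \cdot \mathbf{s}_k}{\|\mathbf{s}_m\|\,\|\mathbf{s}_k\|} = \frac{\mathbf{s}_m \cdot \mathbf{s}_k}{\sqrt{E_m E_k}} \tag{4.2-47}$$

(2). The Euclidean distance

$$d_{km}^{(e)} = \|\mathbf{s}_m - \mathbf{s}_k\| = \left\{ \int_{-\infty}^{\infty} [s_m(t) - s_k(t)]^2\,dt \right\}^{1/2} = \left\{ E_m + E_k - 2\sqrt{E_m E_k}\,\mathrm{Re}(\rho_{km}) \right\}^{1/2} \tag{4.2-48}$$

If all signals have equal energy, i.e., $E_i = E$ for all $i$, then

$$d_{km}^{(e)} = \{2E[1 - \mathrm{Re}(\rho_{km})]\}^{1/2} \tag{4.2-49}$$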

For digitally, linearly modulated signals, it is convenient to express the signal in terms of two orthonormal basis functions:

$$f_1(t) = \sqrt{\frac{2}{T}}\cos 2\pi f_c t, \qquad f_2(t) = -\sqrt{\frac{2}{T}}\sin 2\pi f_c t \tag{4.2-50}$$

If the equivalent lowpass signal is denoted as $s_{lm}(t) = x_l(t) + jy_l(t)$, then the corresponding bandpass signal $s_m(t)$ can be expressed in terms of $f_1(t)$ and $f_2(t)$ as

$$s_m(t) = x_l(t) f_1(t) + y_l(t) f_2(t) \tag{4.2-51}$$

where $x_l(t)$ and $y_l(t)$ are the signal modulations.

4.3 Representation of digitally modulated signals

Modulator: A modulator is the interface device that maps the digital information into

analog waveforms that match the characteristics of the channel.

Memoryless modulator: When the mapping from the digital sequence $\{a_n\}$ to waveforms $\{s_m(t)\}$ is performed without any constraint on previously transmitted waveforms, the modulator is called memoryless.

Modulator with memory: A modulator is said to have memory if the mapping from the digital sequence $\{a_n\}$ to waveforms $\{s_m(t)\}$ is performed under the constraint that a waveform transmitted in any time interval depends on one or more previously transmitted waveforms.

Linear/nonlinear modulator: A modulator is said to be linear if the mapping of the digital

sequence into successive waveforms satisfies the principle of superposition,

otherwise, the modulator is said to be nonlinear.

Modulator characteristic

Linear, memoryless

Linear, with memory

Nonlinear, memoryless

Nonlinear, with memory

Modulation types

4.3.1 Memoryless modulation methods

Assume that the sequence of the binary digits at the input to the modulator occurs at a rate of

R bits/sec (bit rate).

PAM: Pulse-amplitude-modulated signals

In digital PAM, the signal waveforms may be represented as

$$s_m(t) = \mathrm{Re}[A_m g(t) e^{j2\pi f_c t}] = A_m g(t)\cos 2\pi f_c t, \quad m = 1, 2, \ldots, M, \quad 0 \le t \le T \tag{4.3-1}$$

$$A_m = (2m - 1 - M)d, \quad m = 1, 2, \ldots, M \tag{4.3-2}$$

Notes:

(1). $\{A_m, 1 \le m \le M\}$ denotes the set of M possible amplitudes corresponding to the $M = 2^k$ possible k-bit blocks of symbols.

(2). The shaping function $g(t)$ is a real-valued signal pulse whose shape influences the spectrum of the transmitted signal.

(3). $2d$ is the distance between adjacent signal amplitudes.

(4). The symbol rate for the PAM is $R/k$.

(5). The time interval $T_b = 1/R$ is called the bit interval.

(6). The time interval $T = k/R = kT_b$ is called the symbol interval.

The M PAM signals have symbol energies

$$E_m = \int_0^T s_m^2(t)\,dt = \frac{1}{2} A_m^2 \int_0^T g^2(t)\,dt = \frac{1}{2} A_m^2 E_g \tag{4.3-3}$$

where $E_g$ stands for the energy of the shaping function $g(t)$.

PAM signals are one-dimensional; therefore,

$$s_m(t) = s_m f(t) \tag{4.3-4}$$

with

$$f(t) = \sqrt{\frac{2}{E_g}}\,g(t)\cos 2\pi f_c t \tag{4.3-5}$$

and

$$s_m = A_m \sqrt{\tfrac{1}{2} E_g}, \quad m = 1, 2, \ldots, M \tag{4.3-6}$$

Digital PAM is also called amplitude-shift keying (ASK).

The mapping of k-bit blocks to PAM amplitudes can use Gray encoding, in which adjacent amplitudes differ by only one binary digit.

Gray encoding is important because the most likely errors caused by noise in the receiver involve the erroneous selection of an amplitude adjacent to the transmitted signal amplitude. In such a case, only a single bit error occurs in the k-bit sequence.

The Euclidean distance between any pair of signal points is

$$d_{mn}^{(e)} = \sqrt{(s_m - s_n)^2} = \sqrt{\tfrac{1}{2} E_g}\,|A_m - A_n| = d\sqrt{2E_g}\,|m - n| \tag{4.3-7}$$

Then the distance between a pair of adjacent signal points, i.e., the minimum Euclidean distance, is

$$d_{\min}^{(e)} = d\sqrt{2E_g} \tag{4.3-8}$$
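As a quick numerical check of (4.3-2), (4.3-6) and (4.3-8), the sketch below builds the one-dimensional PAM signal points and compares the smallest pairwise distance with $d\sqrt{2E_g}$. The function names and the chosen values of $M$, $d$ and $E_g$ are illustrative assumptions.

```python
import math

def pam_amplitudes(M, d):
    """Amplitudes A_m = (2m - 1 - M) d for m = 1..M, as in (4.3-2)."""
    return [(2 * m - 1 - M) * d for m in range(1, M + 1)]

def pam_points(M, d, Eg):
    """Signal points s_m = A_m sqrt(Eg / 2), as in (4.3-6)."""
    scale = math.sqrt(Eg / 2)
    return [A * scale for A in pam_amplitudes(M, d)]

def min_distance(points):
    """Smallest Euclidean distance over all distinct pairs of points."""
    return min(abs(p - q)
               for i, p in enumerate(points)
               for q in points[i + 1:])

M, d, Eg = 4, 1.0, 2.0
points = pam_points(M, d, Eg)
# Both printed values should agree, per (4.3-8)
print(min_distance(points), d * math.sqrt(2 * Eg))
```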

The carrier-modulated PAM can be double-sideband (PAM/DSB) or single-sideband (PAM/SSB). PAM/DSB is given by (4.3-1) and needs twice the channel bandwidth of the equivalent lowpass signal for transmission.

PAM/SSB, requiring half the channel bandwidth of PAM/DSB, is represented by

$$s_m(t) = \mathrm{Re}\{A_m[g(t) \pm j\hat{g}(t)] e^{j2\pi f_c t}\}, \quad m = 1, 2, \ldots, M \tag{4.3-9}$$

Remember that

$$g(t) \leftrightarrow G(f); \qquad \hat{g}(t) \leftrightarrow \hat{G}(f) = G(f)H(f)$$

$$g(t) \pm j\hat{g}(t) \leftrightarrow G(f) \pm jG(f)H(f)$$

(1). "+": $G(f) + jG(f)H(f) = \begin{cases} 2G(f), & f > 0 \\ 0, & f < 0 \end{cases}$ (upper sideband)

(2). "−": $G(f) - jG(f)H(f) = \begin{cases} 0, & f > 0 \\ 2G(f), & f < 0 \end{cases}$ (lower sideband)

where the Fourier transform $H(f)$ of the Hilbert transformer is

$$H(f) = \begin{cases} -j, & f > 0 \\ 0, & f = 0 \\ j, & f < 0 \end{cases}$$

The digital PAM signal can also be used for baseband transmission, in which the carrier is not required:

$$s_m(t) = A_m g(t), \quad m = 1, 2, \ldots, M \tag{4.3-10}$$

In the special case $M = 2$, the binary PAM waveforms have the property that

$$s_1(t) = -s_2(t)$$

Such signals are called antipodal.

Phase-modulated signals

In digital phase modulation, the M signal waveforms are represented as

$$\begin{aligned} s_m(t) &= \mathrm{Re}[g(t) e^{j2\pi(m-1)/M} e^{j2\pi f_c t}], \quad m = 1, 2, \ldots, M, \quad 0 \le t \le T \\ &= g(t)\cos\left[2\pi f_c t + \frac{2\pi(m-1)}{M}\right] \\ &= g(t)\cos\frac{2\pi(m-1)}{M}\cos 2\pi f_c t - g(t)\sin\frac{2\pi(m-1)}{M}\sin 2\pi f_c t \end{aligned} \tag{4.3-11}$$

where $\theta_m = 2\pi(m-1)/M$, $m = 1, 2, \ldots, M$, are the M possible phases of the carrier that convey the transmitted information.

Digital phase modulation is usually called phase-shift keying (PSK).

The PSK signal waveforms are two-dimensional and have equal energy,

$$E = \int_0^T s_m^2(t)\,dt = \frac{1}{2}\int_0^T g^2(t)\,dt = \frac{1}{2} E_g \tag{4.3-12}$$

$$s_m(t) = s_{m1} f_1(t) + s_{m2} f_2(t) \tag{4.3-13}$$

where

$$f_1(t) = \sqrt{\frac{2}{E_g}}\,g(t)\cos 2\pi f_c t \tag{4.3-14}$$

$$f_2(t) = -\sqrt{\frac{2}{E_g}}\,g(t)\sin 2\pi f_c t \tag{4.3-15}$$

and the two-dimensional vector $\mathbf{s}_m$ is

$$\mathbf{s}_m = [s_{m1}\ s_{m2}] = \left[ \sqrt{\frac{E_g}{2}}\cos\frac{2\pi(m-1)}{M} \quad \sqrt{\frac{E_g}{2}}\sin\frac{2\pi(m-1)}{M} \right], \quad m = 1, 2, \ldots, M \tag{4.3-16}$$

As mentioned before, the preferred assignment is Gray encoding, so that the most likely errors caused by noise result in a single bit error in the k-bit symbol.

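The Euclidean distance between signal points is

$$d_{mn}^{(e)} = \|\mathbf{s}_m - \mathbf{s}_n\| = \left\{ E_g\left[1 - \cos\frac{2\pi}{M}(m - n)\right] \right\}^{1/2} \tag{4.3-17}$$

and the minimum distance is

$$d_{\min}^{(e)} = \sqrt{E_g\left(1 - \cos\frac{2\pi}{M}\right)} \tag{4.3-18}$$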
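The PSK distance formulas (4.3-17) and (4.3-18) can be verified directly from the signal vectors of (4.3-16). The following sketch does so for an assumed 8-PSK constellation; the function names and the values of $M$ and $E_g$ are illustrative assumptions.

```python
import math

def psk_points(M, Eg):
    """Signal vectors [sqrt(Eg/2) cos(theta_m), sqrt(Eg/2) sin(theta_m)]
    with theta_m = 2 pi (m - 1) / M, as in (4.3-16)."""
    r = math.sqrt(Eg / 2)
    return [(r * math.cos(2 * math.pi * (m - 1) / M),
             r * math.sin(2 * math.pi * (m - 1) / M))
            for m in range(1, M + 1)]

def distance(p, q):
    """Euclidean distance between two 2-D signal points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def psk_distance_formula(M, Eg, m, n):
    """Closed form (4.3-17)."""
    return math.sqrt(Eg * (1 - math.cos(2 * math.pi * (m - n) / M)))

M, Eg = 8, 2.0
pts = psk_points(M, Eg)
# Adjacent points (m = 2, n = 1) give the minimum distance (4.3-18)
print(distance(pts[1], pts[0]), psk_distance_formula(M, Eg, 2, 1))
```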

A variant of 4-phase PSK, called $\pi/4$-QPSK, is obtained by introducing an additional $\pi/4$ phase shift in the carrier phase in each symbol interval. Such a phase shift facilitates symbol synchronization.

QAM: Quadrature amplitude modulation

The bandwidth efficiency of PAM/SSB can also be obtained by simultaneously impressing two separate k-bit symbols from the information sequence $\{a_n\}$ on two quadrature carriers $\cos 2\pi f_c t$ and $\sin 2\pi f_c t$; such a technique is called QAM or quadrature PAM.

$$\begin{aligned} s_m(t) &= \mathrm{Re}[(A_{mc} + jA_{ms}) g(t) e^{j2\pi f_c t}], \quad m = 1, 2, \ldots, M, \quad 0 \le t \le T \\ &= A_{mc} g(t)\cos 2\pi f_c t - A_{ms} g(t)\sin 2\pi f_c t \end{aligned} \tag{4.3-19}$$

$A_{mc}, A_{ms}$ are the information-bearing signal amplitudes of the quadrature carriers.

Alternatively, the QAM signal can be written as

$$\begin{aligned} s_m(t) &= \mathrm{Re}[V_m e^{j\theta_m} g(t) e^{j2\pi f_c t}], \quad m = 1, 2, \ldots, M, \quad 0 \le t \le T \\ &= V_m g(t)\cos(2\pi f_c t + \theta_m) \end{aligned} \tag{4.3-20}$$

Since $V_m = \sqrt{A_{mc}^2 + A_{ms}^2}$ and $\theta_m = \tan^{-1}(A_{ms}/A_{mc})$, QAM can be viewed as combined amplitude and phase modulation.

QAM is a two-dimensional modulated signal, i.e.,

$$s_m(t) = s_{m1} f_1(t) + s_{m2} f_2(t) \tag{4.3-21}$$

where

$$f_1(t) = \sqrt{\frac{2}{E_g}}\,g(t)\cos 2\pi f_c t, \qquad f_2(t) = -\sqrt{\frac{2}{E_g}}\,g(t)\sin 2\pi f_c t \tag{4.3-22}$$

and

$$\mathbf{s}_m = [s_{m1}\ s_{m2}] = \left[ A_{mc}\sqrt{\frac{E_g}{2}} \quad A_{ms}\sqrt{\frac{E_g}{2}} \right], \quad m = 1, 2, \ldots, M \tag{4.3-23}$$

The Euclidean distance between signal points is

$$d_{mn}^{(e)} = \|\mathbf{s}_m - \mathbf{s}_n\| = \sqrt{\frac{E_g}{2}\left[(A_{mc} - A_{nc})^2 + (A_{ms} - A_{ns})^2\right]} \tag{4.3-24}$$

A special case is that the amplitudes take the set of discrete values $\{(2m - 1 - M)d,\ m = 1, 2, \ldots, M\}$, and then the signal space diagram is rectangular. In such a case, the minimum Euclidean distance is the same as that for PAM,

$$d_{\min}^{(e)} = d\sqrt{2E_g} \tag{4.3-25}$$

Multidimensional signals

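Orthogonal multidimensional signals

Suppose there are M equal-energy orthogonal signal waveforms that differ in frequency, represented as

$$\begin{aligned} s_m(t) &= \mathrm{Re}[s_{lm}(t) e^{j2\pi f_c t}], \quad m = 1, 2, \ldots, M, \quad 0 \le t \le T \\ &= \sqrt{\frac{2E}{T}}\cos[2\pi f_c t + 2\pi m\,\Delta f\,t] \end{aligned} \tag{4.3-26}$$

where

$$s_{lm}(t) = \sqrt{\frac{2E}{T}}\,e^{j2\pi m\,\Delta f\,t}, \quad m = 1, 2, \ldots, M, \quad 0 \le t \le T \tag{4.3-27}$$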

This type of frequency modulation is called frequency-shift keying (FSK).

The cross correlation coefficients are

$$\rho_{km} = \frac{2E/T}{2E}\int_0^T e^{j2\pi(m-k)\Delta f\,t}\,dt = \frac{\sin \pi T(m-k)\Delta f}{\pi T(m-k)\Delta f}\,e^{j\pi T(m-k)\Delta f} \tag{4.3-28}$$

The real part of $\rho_{km}$ is

$$\rho_r = \mathrm{Re}(\rho_{km}) = \frac{\sin \pi T(m-k)\Delta f}{\pi T(m-k)\Delta f}\cos \pi T(m-k)\Delta f = \frac{\sin 2\pi T(m-k)\Delta f}{2\pi T(m-k)\Delta f} \tag{4.3-29}$$

Note that $\rho_r = \mathrm{Re}(\rho_{km}) = 0$ when $\Delta f = \frac{1}{2T}$ and $m \neq k$. Since $|m - k| = 1$ corresponds to adjacent frequency slots, $\Delta f = \frac{1}{2T}$ represents the minimum frequency separation between adjacent signals for orthogonality of the M signals.

Comparison: for adjacent signals, $|\rho_{km}| = 0$ only when $\Delta f = n/T$, $n = 1, 2, \ldots$

Hint: The cross-correlations of baseband signals and the cross-correlations of bandpass signals are listed in (4.2-44) to (4.2-46). For the bandpass signal, the most important quantity is $\rho_r = \mathrm{Re}(\rho_{km})$.

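The vector representation for M N-dimensional FSK signals (where $M = N$) is

$$\begin{aligned} \mathbf{s}_1 &= [\sqrt{E}\ \ 0\ \ 0\ \ \ldots\ \ 0\ \ 0] \\ \mathbf{s}_2 &= [0\ \ \sqrt{E}\ \ 0\ \ \ldots\ \ 0\ \ 0] \\ &\ \ \vdots \\ \mathbf{s}_M &= [0\ \ 0\ \ 0\ \ \ldots\ \ 0\ \ \sqrt{E}] \end{aligned} \tag{4.3-30}$$

The Euclidean distance between pairs of signals (also the minimum distance) is

$$d_{km}^{(e)} = \sqrt{2E}, \quad \text{for all } m \neq k \tag{4.3-31}$$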
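The closed form (4.3-28) can be compared against a direct numerical integration of $\frac{1}{T}\int_0^T e^{j2\pi(m-k)\Delta f\,t}\,dt$, which also makes the two orthogonality conditions easy to see. The sketch below is illustrative only: the function names, the midpoint-rule integrator and its step count are assumptions introduced here.

```python
import cmath
import math

def fsk_rho(m, k, delta_f, T):
    """Closed-form cross correlation coefficient (4.3-28)."""
    x = math.pi * T * (m - k) * delta_f
    if x == 0:
        return 1 + 0j
    return (math.sin(x) / x) * cmath.exp(1j * x)

def fsk_rho_numeric(m, k, delta_f, T, steps=2000):
    """Midpoint-rule approximation of (1/T) * integral over [0, T]
    of exp(j 2 pi (m - k) delta_f t) dt."""
    dt = T / steps
    total = sum(cmath.exp(2j * math.pi * (m - k) * delta_f * (i + 0.5) * dt)
                for i in range(steps))
    return total * dt / T

T = 1.0
half = fsk_rho(2, 1, 1 / (2 * T), T)   # separation 1/(2T)
full = fsk_rho(2, 1, 1 / T, T)         # separation 1/T
print(abs(half.real), abs(half))       # real part ~ 0, magnitude 2/pi
print(abs(full))                       # magnitude ~ 0
```

With separation $1/(2T)$ only the real part vanishes, while with separation $1/T$ the complex coefficient itself vanishes.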

Biorthogonal signals

A set of M biorthogonal signals can be constructed from $\tfrac{1}{2}M$ orthogonal signals by including the negatives of the orthogonal signals. Hence, $N = \tfrac{1}{2}M$ dimensions are needed, and the correlation is either $\rho_r = 0$ or $-1$. The Euclidean distances are $d = \sqrt{2E}$ or $2\sqrt{E}$, with $\sqrt{2E}$ being the minimum distance.

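Simplex signals

Suppose we have a set of M orthogonal waveforms $\{s_m(t)\}$ with vectors $\{\mathbf{s}_m\}$. Their mean is

$$\bar{\mathbf{s}} = \frac{1}{M}\sum_{m=1}^{M} \mathbf{s}_m \tag{4.3-32}$$

By subtracting the mean from each of the M orthogonal signals, we obtain another set of M signals, called simplex signals:

$$\mathbf{s}_m' = \mathbf{s}_m - \bar{\mathbf{s}} \tag{4.3-33}$$

Thus, the effect of the subtraction is to translate the origin of the M orthogonal signals to the point $\bar{\mathbf{s}}$.

Note:

(1). Simplex signals have equal energy, which is less than that of the orthogonal signals by the factor $1 - 1/M$:

$$\|\mathbf{s}_m'\|^2 = \|\mathbf{s}_m - \bar{\mathbf{s}}\|^2 = E - \frac{2}{M}E + \frac{1}{M}E = E\left(1 - \frac{1}{M}\right) \tag{4.3-34}$$

(2). The cross correlation of any pair of signals is the same:

$$\mathrm{Re}(\rho_{mn}) = \frac{\mathbf{s}_m' \cdot \mathbf{s}_n'}{\|\mathbf{s}_m'\|\,\|\mathbf{s}_n'\|} = \frac{-1/M}{1 - 1/M} = -\frac{1}{M - 1}, \quad m \neq n \tag{4.3-35}$$

(3). Since only the origin was translated, the distance between any pair of signal points is maintained at $d = \sqrt{2E}$.

(4). The signal dimensionality is $N = M - 1$.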
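The four properties listed above can be confirmed numerically by constructing a simplex set from orthogonal vectors. This is a minimal sketch under assumed values ($M = 4$, $E = 1$); the function names are hypothetical.

```python
import math

def dot(u, v):
    """Inner product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))

def orthogonal_set(M, E):
    """M orthogonal vectors of energy E, as in (4.3-30)."""
    a = math.sqrt(E)
    return [[a if i == m else 0.0 for i in range(M)] for m in range(M)]

def simplex_set(M, E):
    """Subtract the mean vector (4.3-32) from each orthogonal vector (4.3-33)."""
    s = orthogonal_set(M, E)
    mean = [sum(col) / M for col in zip(*s)]
    return [[x - mu for x, mu in zip(v, mean)] for v in s]

M, E = 4, 1.0
sp = simplex_set(M, E)
energy = dot(sp[0], sp[0])                                   # E (1 - 1/M)
rho = dot(sp[0], sp[1]) / energy                             # -1 / (M - 1)
dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(sp[0], sp[1])))  # sqrt(2E)
print(energy, rho, dist)
```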

Signal waveforms from binary codes

A set of M signaling waveforms can be generated from a set of M binary code words

$$\mathbf{C}_m = [c_{m1}\ c_{m2}\ \ldots\ c_{mN}], \quad m = 1, 2, \ldots, M \tag{4.3-36}$$

where $c_{mj} = 0$ or $1$ for all $m$ and $j$. Each component of a code word is mapped into an elementary binary PSK waveform as

$$\begin{aligned} c_{mj} = 1 &\Rightarrow s_{mj}(t) = \sqrt{\frac{2E_c}{T_c}}\cos 2\pi f_c t, \quad 0 \le t \le T_c \\ c_{mj} = 0 &\Rightarrow s_{mj}(t) = -\sqrt{\frac{2E_c}{T_c}}\cos 2\pi f_c t, \quad 0 \le t \le T_c \end{aligned} \tag{4.3-37}$$

where $T_c = T/N$ and $E_c = E/N$. $N$ is called the block length of the code, and it is the dimension of the M waveforms. Thus, the M code words $\{\mathbf{C}_m\}$ are mapped into a set of M waveforms $\{s_m(t)\}$.

The vector form of the waveform is

$$\mathbf{s}_m = [s_{m1}\ s_{m2}\ \ldots\ s_{mN}], \quad m = 1, 2, \ldots, M \tag{4.3-38}$$

where $s_{mj} = \pm\sqrt{E/N}$ for all $m$ and $j$.

Notes:

(1). There are $2^N$ possible waveforms that can be constructed from the $2^N$ possible binary code words. We may select a subset of $M < 2^N$ signal waveforms for transmission of the information.

(2). The $2^N$ possible signal points correspond to the vertices of an N-dimensional hypercube with its center at the origin.

(3). Each of the M waveforms has energy $E$. The cross correlation between any pair of waveforms depends on how we select the M waveforms from the $2^N$ possible waveforms.

Clearly, any adjacent signal points have a cross correlation coefficient (using (4.2-47) and (4.2-49))

$$\rho_r = \frac{E(1 - 2/N)}{E} = \frac{N - 2}{N} \tag{4.3-39}$$

and a corresponding distance of

$$d^{(e)} = \sqrt{2E(1 - \rho_r)} = \sqrt{4E/N} \tag{4.3-40}$$
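The mapping of (4.3-37) and (4.3-38) and the adjacent-point results (4.3-39) and (4.3-40) can be illustrated with two code words that differ in exactly one position. The sketch below uses an assumed block length $N = 4$ and energy $E = 1$; the function names are hypothetical.

```python
import math

def code_to_vector(codeword, E):
    """Map each bit to +sqrt(E/N) (bit 1) or -sqrt(E/N) (bit 0), per (4.3-38)."""
    N = len(codeword)
    a = math.sqrt(E / N)
    return [a if c == 1 else -a for c in codeword]

def correlation(u, v):
    """Normalized correlation (4.2-47) for equal-energy vectors."""
    energy = sum(x * x for x in u)
    return sum(x * y for x, y in zip(u, v)) / energy

def distance(u, v):
    """Euclidean distance between two signal vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))

E = 1.0
s1 = code_to_vector([1, 0, 1, 1], E)
s2 = code_to_vector([1, 0, 1, 0], E)   # differs from s1 in one bit
N = len(s1)
print(correlation(s1, s2), (N - 2) / N)        # both sides of (4.3-39)
print(distance(s1, s2), math.sqrt(4 * E / N))  # both sides of (4.3-40)
```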

4.3.2 Linear modulation with memory

In modulation with memory, dependence is intentionally introduced to the signals

transmitted in successive symbol intervals; the purpose is to shape the spectrum of the

transmitted signal so that it matches the spectral characteristics of the channel.

Signal dependence is usually accomplished by encoding data sequence at the input to the

modulator by means of a modulation code.

The memory characteristic will be confined to be of Markov chain, and the signals to be base

band.

In Figure 4.3-12, NRZ (non-return-to-zero) is memoryless and is equivalent to a binary PAM or a binary PSK signal in a carrier-modulated system. In NRZI (non-return-to-zero, inverted), also called NRZ-M (non-return-to-zero mark, where mark stands for one), transitions from one amplitude level to another occur only when a 1 is transmitted. This type of signal encoding is called differential encoding.

The encoding process is

$$b_k = a_k \oplus b_{k-1} \tag{4.3-41}$$

where $\{a_k\}$ is the input to the encoder, $\{b_k\}$ is the output, and $\oplus$ is the addition modulo 2 operator.
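The differential encoding rule (4.3-41) and its inverse can be sketched as follows. The initial encoder state $b_0 = 0$ and the function names are assumptions made for illustration; the notes do not specify an initial state.

```python
def nrzi_encode(bits, initial=0):
    """Differential (NRZI) encoding: b_k = a_k XOR b_{k-1}, as in (4.3-41).

    `initial` is the assumed encoder state before the first input bit.
    """
    out = []
    prev = initial
    for a in bits:
        prev = a ^ prev   # output toggles only when the input bit is 1
        out.append(prev)
    return out

def nrzi_decode(encoded, initial=0):
    """Recover a_k = b_k XOR b_{k-1} from the encoded sequence."""
    out = []
    prev = initial
    for b in encoded:
        out.append(b ^ prev)
        prev = b
    return out

data = [1, 0, 1, 1, 0]
encoded = nrzi_encode(data)
print(encoded)                 # [1, 1, 0, 1, 1]
print(nrzi_decode(encoded))    # [1, 0, 1, 1, 0]
```

The output level changes exactly at the positions where the input bit is 1, which is the defining property of NRZI stated above.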

Such an encoding operation can be represented in three ways:

(1). By a state diagram (a Markov chain).

Hint: in the state diagram, the left side of the slash represents the input digit; the right side represents the encoder's output waveform.

(2). By transition matrices: The state diagram can be described by two transition matrices corresponding to the two possible input bits $\{0, 1\}$. In each matrix, $t_{ij} = 1$ if the input $a_k$ results in a transition from state $i$ to state $j$, $i, j = 1, 2$.

(i). $a_k = 0$: The encoder stays in the same state, so the transition matrix is

$$\mathbf{T}_1 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \tag{4.3-42}$$

(ii). $a_k = 1$: The encoder goes to the other state, so the transition matrix is

$$\mathbf{T}_2 = \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix} \tag{4.3-43}$$

(3). By the trellis diagram: The trellis diagram provides exactly the same information

concerning the signal dependence as the state diagram, but also depicts a time evolution of

the state transitions.

Another baseband modulation with memory is delay modulation, in which the data sequence is encoded by a run-length-limited code, called a Miller code, and NRZI is then used to transmit the encoded data.

This type of digital modulation has been used extensively for digital magnetic recording and in carrier modulation systems employing binary PSK.

The signal may be described by a state diagram that has four states.

Because the input is binary, there are two transition matrices:

(i). When $a_k = 0$

$$\mathbf{T}_1 = \begin{bmatrix} 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 1 \\ 1 & 0 & 0 & 0 \\ 1 & 0 & 0 & 0 \end{bmatrix} \tag{4.3-44}$$

(ii). When $a_k = 1$

$$\mathbf{T}_2 = \begin{bmatrix} 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} \tag{4.3-45}$$

Modulation techniques with memory such as NRZI and Miller coding are generally characterized by a K-state Markov chain with stationary state probabilities $\{p_i,\ i = 1, 2, \ldots, K\}$ and transition probabilities $\{p_{ij},\ i, j = 1, 2, \ldots, K\}$.

Associated with each transition is a signal waveform $s_j(t),\ j = 1, 2, \ldots, K$.

$p_{ij}$ denotes the probability that $s_j(t)$ is transmitted in a given signaling interval after the transmission of the signal waveform $s_i(t)$ in the previous signaling interval.

The transition probability matrix P is

$$P = \begin{bmatrix} p_{11} & p_{12} & \cdots & p_{1K} \\ p_{21} & p_{22} & \cdots & p_{2K} \\ \vdots & \vdots & & \vdots \\ p_{K1} & p_{K2} & \cdots & p_{KK} \end{bmatrix} \tag{4.3-46}$$

P can be obtained from the transition matrices $\{T_i\}$ and the input-bit probabilities $q_1 = P(a_k = 0)$ and $q_2 = P(a_k = 1)$ as

$$P = \sum_{i=1}^{2} q_i T_i = P(a_k = 0)\,T_1 + P(a_k = 1)\,T_2 \tag{4.3-47}$$

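For the NRZI signal with equally likely input bits ($q_1 = q_2 = 1/2$, which gives equal state probabilities $p_1 = p_2 = 1/2$) and $T_1$, $T_2$ as in (4.3-42) and (4.3-43), the transition probability matrix is

$$P = \begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix} \tag{4.3-48}$$

For Miller-coded NRZI with equally likely symbols ($q_1 = q_2 = 1/2$ or, equivalently, $p_1 = p_2 = p_3 = p_4 = 1/4$), the transition probability matrix is

$$P = \begin{bmatrix} 0 & \tfrac12 & 0 & \tfrac12 \\ 0 & 0 & \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 & 0 & 0 \\ \tfrac12 & 0 & \tfrac12 & 0 \end{bmatrix} \tag{4.3-49}$$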

The transition probability matrix is useful in the determination of the spectral characteristics

of digital modulation techniques with memory.
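As a quick check of (4.3-47), the following sketch forms $P = q_1 T_1 + q_2 T_2$ for NRZI and for the Miller code with $q_1 = q_2 = 1/2$. It uses exact fractions; the helper name `mix_transition_matrices` is hypothetical and chosen only for this illustration.

```python
from fractions import Fraction


def mix_transition_matrices(q, matrices):
    """Return P = sum_i q[i] * matrices[i] as in (4.3-47)."""
    size = len(matrices[0])
    return [
        [sum(qi * T[r][c] for qi, T in zip(q, matrices)) for c in range(size)]
        for r in range(size)
    ]


# Transition matrices from (4.3-42) to (4.3-45).
T1_NRZI = [[1, 0], [0, 1]]
T2_NRZI = [[0, 1], [1, 0]]
T1_MILLER = [[0, 0, 0, 1], [0, 0, 0, 1], [1, 0, 0, 0], [1, 0, 0, 0]]
T2_MILLER = [[0, 1, 0, 0], [0, 0, 1, 0], [0, 1, 0, 0], [0, 0, 1, 0]]

half = Fraction(1, 2)
q = [half, half]  # equally likely input bits (assumption of this example)

P_nrzi = mix_transition_matrices(q, [T1_NRZI, T2_NRZI])
P_miller = mix_transition_matrices(q, [T1_MILLER, T2_MILLER])

for row in P_miller:
    print([str(entry) for entry in row])
```

Every row of each result sums to 1, as required of a transition probability matrix, and every column of the Miller matrix also sums to 1, which is consistent with the uniform stationary probabilities $p_i = 1/4$.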

4.3.3 Non-linear modulation methods with memory---CPFSK and CPM

In this section, consider a class of digital modulation methods in which the phase of the

signal is constrained to be continuous. This constraint results in a phase or frequency

modulator that has memory.

Continuous-phase FSK (CPFSK)

A conventional FSK signal is generated by shifting the carrier by an amount $f_n = \tfrac12 \Delta f\, I_n$, $I_n = \pm 1, \pm 3, \ldots, \pm(M-1)$, to reflect the digital information that is being transmitted, and it is memoryless.

The switching from one frequency to another may be accomplished by having $M = 2^k$ separate oscillators tuned to the desired frequencies and selecting one of the M frequencies according to the particular k-bit symbol that is to be transmitted in a signal interval of duration $T = k/R$ seconds.

The drawback is that such abrupt switching from one oscillator output to another in successive signaling intervals results in relatively large spectral side lobes outside the main spectral band of the signal, i.e., a large frequency band is needed to transmit the signal.

To avoid these large side lobes, the information-bearing signal frequency-modulates a single oscillator whose frequency is changed continuously. The resulting frequency-modulated signal is phase-continuous and, hence, it is called CPFSK.

This type of FSK signal has memory because the phase of the carrier is constrained to be

continuous.

Begin with a PAM signal, which is used to frequency-modulate the carrier:

$$d(t) = \sum_n I_n g(t - nT) \tag{4.3-50}$$

where

$\{I_n\}$: The sequence of amplitudes obtained by mapping k-bit blocks of binary digits from the information sequence $\{a_n\}$ into the amplitude levels $\pm 1, \pm 3, \ldots, \pm(M-1)$;

$g(t)$: A rectangular pulse of amplitude $1/2T$ and duration T seconds.

The equivalent lowpass waveform at the output of the modulator, $v(t)$, is

$$v(t) = \sqrt{\frac{2E}{T}} \exp\left\{ j\left[ 4\pi T f_d \int_{-\infty}^{t} d(\tau)\,d\tau + \phi_0 \right] \right\} \tag{4.3-51}$$

where $f_d$ is the peak frequency deviation and $\phi_0$ is the initial phase of the carrier.

The carrier-modulated signal corresponding to (4.3-51) may be expressed as

$$s(t) = \sqrt{\frac{2E}{T}} \cos[2\pi f_c t + \phi(t;\mathbf{I}) + \phi_0] \tag{4.3-52}$$

where $\phi(t;\mathbf{I})$ represents the time-varying phase of the carrier, defined as

$$\phi(t;\mathbf{I}) = 4\pi T f_d \int_{-\infty}^{t} d(\tau)\,d\tau = 4\pi T f_d \int_{-\infty}^{t} \left[ \sum_n I_n g(\tau - nT) \right] d\tau \tag{4.3-53}$$

Note: although $d(t)$ contains discontinuities, the integral of $d(t)$ is continuous; hence $v(t)$ is continuous-phase.

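The phase of the carrier in the interval $nT \le t \le (n+1)T$, using (4.3-53), is

$$\phi(t;\mathbf{I}) = 2\pi f_d T \sum_{k=-\infty}^{n-1} I_k + 2\pi f_d (t - nT) I_n = \theta_n + 2\pi h I_n q(t - nT) \tag{4.3-54}$$

where

$$h = 2 f_d T \tag{4.3-55}$$

represents the modulation index,

$$\theta_n = \pi h \sum_{k=-\infty}^{n-1} I_k \tag{4.3-56}$$

represents the accumulation (memory) of all symbols up to time $(n-1)T$, and

$$q(t) = \begin{cases} 0 & (t < 0) \\ t/2T & (0 \le t \le T) \\ 1/2 & (t > T) \end{cases} \tag{4.3-57}$$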
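The phase expression (4.3-54) can be evaluated directly. The sketch below is an illustration under stated assumptions: the symbol sequence starts at $n = 0$ (all earlier symbols are taken as zero, so the phase starts at 0), and the names `q_ramp` and `cpfsk_phase` are hypothetical.

```python
import math


def q_ramp(t, T):
    """Phase response q(t) of (4.3-57) for a rectangular pulse of duration T."""
    if t < 0:
        return 0.0
    if t <= T:
        return t / (2 * T)
    return 0.5


def cpfsk_phase(t, symbols, h, T):
    """Phase phi(t; I) of (4.3-54) for 0 <= t < len(symbols) * T.

    Assumes symbols[0] is I_0 and that no symbols were sent before t = 0.
    """
    n = int(t // T)                              # index of the current interval
    theta_n = math.pi * h * sum(symbols[:n])     # accumulated phase (4.3-56)
    return theta_n + 2 * math.pi * h * symbols[n] * q_ramp(t - n * T, T)


symbols = [1, -1, 1, 1]
for t in (0.5, 1.0, 2.0, 3.5):
    print(t, cpfsk_phase(t, symbols, h=0.5, T=1.0))
```

Evaluating the function just before and just after a symbol boundary gives nearly identical values, which illustrates the phase continuity noted after (4.3-53).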

Continuous-phase modulation (CPM)

In CPM, the phase of the carrier is

$$\phi(t;\mathbf{I}) = 2\pi \sum_{k=-\infty}^{n} I_k h_k q(t - kT), \qquad nT \le t \le (n+1)T \tag{4.3-58}$$

where

$\{I_k\}$: the sequence of the M-ary information symbols selected from the alphabet $\pm 1, \pm 3, \ldots, \pm(M-1)$;

$\{h_k\}$: a sequence of modulation indices;

$q(t)$: some normalized waveform shape.

It is easy to see that CPFSK is a special case of CPM.

(i). When $h_k = h$ for all k, the modulation index is fixed for all symbols.

(ii). When the modulation index varies from one symbol to another, the CPM signal is called multi-h. In such a case, the $\{h_k\}$ are made to vary in a cyclic manner through a set of indices.

$q(t)$ may be expressed as the integral of some pulse $g(t)$:

$$q(t) = \int_0^t g(\tau)\,d\tau \tag{4.3-59}$$

(a). If $g(t) = 0$ for $t > T$, the CPM signal is called full response CPM.

(b). If $g(t) \ne 0$ for $t > T$, the CPM signal is called partial response CPM.

Three popular pulse shapes are LREC, LRC, and GMSK:

LREC denotes a rectangular pulse of duration LT, and L is a positive integer.

(a): L = 1 gives CPFSK, with the pulse shown in Figure 4.3-16a.

(b): L = 2 gives the pulse shown in Figure 4.3-16c.

LRC denotes a raised cosine pulse of duration LT. The pulse shapes for L=1,2 are shown in

Figures 4.3-16b, 4.3-16d.

GMSK denotes a Gaussian minimum-shift keying pulse with bandwidth parameter B, which represents the –3 dB bandwidth of the Gaussian pulse. Figure 4.3-16e illustrates a set of GMSK pulses with time-bandwidth products BT ranging from 0.1 to 1.

In GMSK, the pulse duration increases as the bandwidth of the pulse decreases, as expected.

In practical applications, the pulse is usually truncated to some specified fixed duration.

GMSK with BT = 0.3 is used in the European digital cellular communication system, called GSM. When BT = 0.3, the GMSK pulse may be truncated at $|t| = 1.5T$ with a relatively small error incurred for $|t| > 1.5T$.

It is instructive to sketch the set of phase trajectories $\phi(t;\mathbf{I})$ generated by all possible values of the information sequence $\{I_n\}$. The resulting diagram is called a phase tree.

(i). Figure 4.3-17, binary CPFSK with $I_n = \pm 1$, shows the set of phase trajectories beginning at time $t = 0$.

(ii). Figure 4.3-18, quaternary CPFSK with $I_n = \pm 1, \pm 3$, shows the set of phase trajectories beginning at time $t = 0$.

Observations:

(a). The phase trees for CPFSK are piecewise linear because of the fact that the pulse g (t )

is rectangular.

(b). Smoother phase trajectories and phase trees are obtained by using pulses that do not

contain discontinuities, such as the class of raised cosine pulses.

(c). For comparison, a phase trajectory generated by the sequence (1, -1, -1, -1, 1, 1, -1, 1)

for a partial response, raised cosine pulse of the length 3T is shown in Fig. 4.3-19.

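When the modulation index is rational, $h = m/p$ with m and p relatively prime integers, a full response CPM signal has the terminal phase states at the times $t = nT$

$$\Theta_s = \left\{ 0, \frac{\pi m}{p}, \frac{2\pi m}{p}, \ldots, \frac{(p-1)\pi m}{p} \right\} \tag{4.3-60}$$

when m is even, and

$$\Theta_s = \left\{ 0, \frac{\pi m}{p}, \frac{2\pi m}{p}, \ldots, \frac{(2p-1)\pi m}{p} \right\} \tag{4.3-61}$$

when m is odd. For partial response CPM with a pulse extending over L symbol intervals, the number of states is

$$S_t = \begin{cases} p M^{L-1} & (\text{even } m) \\ 2p M^{L-1} & (\text{odd } m) \end{cases} \tag{4.3-62}$$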

Minimum-shift keying (MSK)

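MSK is a special form of binary CPFSK (and, therefore, CPM) in which the modulation index is $h = 1/2$. The phase of the carrier in the interval $nT \le t \le (n+1)T$ is

$$\phi(t;\mathbf{I}) = \frac{\pi}{2} \sum_{k=-\infty}^{n-1} I_k + \pi I_n q(t - nT) = \theta_n + \frac{\pi}{2} I_n \left( \frac{t - nT}{T} \right), \qquad nT \le t \le (n+1)T \tag{4.3-63}$$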

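The modulated carrier signal is

$$s(t) = A\cos\left[ 2\pi f_c t + \theta_n + \frac{\pi}{2} I_n \left( \frac{t - nT}{T} \right) \right] = A\cos\left[ 2\pi\left( f_c + \frac{1}{4T} I_n \right) t - \frac{n\pi}{2} I_n + \theta_n \right], \qquad nT \le t \le (n+1)T \tag{4.3-64}$$

Define two frequencies as

$$f_1 = f_c - \frac{1}{4T}, \qquad f_2 = f_c + \frac{1}{4T} \tag{4.3-65}$$

The binary CPFSK signal can be expressed as a sinusoid having one of the two possible frequencies in the interval $nT \le t \le (n+1)T$ as

$$s_i(t) = A\cos\left[ 2\pi f_i t + \theta_n + \frac{n\pi}{2} (-1)^{i-1} \right], \qquad i = 1, 2 \tag{4.3-66}$$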

The frequency separation is $\Delta f = f_2 - f_1 = 1/2T$. From (4.3-29), $\Delta f = 1/2T$ is the minimum frequency separation that is necessary to ensure the orthogonality of the signals $s_1(t)$ and $s_2(t)$ over a signaling interval of length T. That is the reason why binary CPFSK with $h = 1/2$ is called MSK.
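The orthogonality claim can be checked numerically. The sketch below integrates the product of two equal-phase cosines over one interval T with the midpoint rule. The carrier $f_c = 10/T$ and the function name `tone_correlation` are assumptions made only for this illustration, and the phase terms $\theta_n$ are ignored.

```python
import math


def tone_correlation(delta_f, fc=10.0, T=1.0, steps=20000):
    """Integrate cos(2*pi*f1*t) * cos(2*pi*f2*t) over [0, T] (midpoint rule).

    f1 and f2 are placed symmetrically around the assumed carrier fc.
    """
    f1 = fc - delta_f / 2
    f2 = fc + delta_f / 2
    dt = T / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * dt
        total += math.cos(2 * math.pi * f1 * t) * math.cos(2 * math.pi * f2 * t)
    return total * dt


print(tone_correlation(0.5))   # separation 1/(2T): the MSK tones
print(tone_correlation(0.25))  # separation 1/(4T): a smaller separation
```

With separation $1/2T$ the correlation is zero to within numerical error, while halving the separation leaves a clearly nonzero correlation, so the two tones are no longer orthogonal.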

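MSK may also be represented as a form of four-phase PSK. Its equivalent lowpass digitally modulated signal is

$$v(t) = \sum_{n=-\infty}^{\infty} \left[ I_{2n}\, g(t - 2nT) - j I_{2n+1}\, g(t - 2nT - T) \right] \tag{4.3-67}$$

where $g(t)$ is the sinusoidal pulse

$$g(t) = \begin{cases} \sin\dfrac{\pi t}{2T} & 0 \le t \le 2T \\ 0 & \text{otherwise} \end{cases} \tag{4.3-68}$$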

This type of signal is viewed as a four-phase PSK signal in which the pulse shape is one-half

cycle of a sinusoid:

(a). The even-numbered binary symbols, i.e., $\{I_{2n}\}$, are transmitted via the cosine of the carrier.

(b). The odd-numbered binary symbols, i.e., $\{I_{2n+1}\}$, are transmitted via the sine of the carrier.

The transmission rate on the two orthogonal carrier components is 1/2T bits/sec, so the total

transmission rate is 1/T bits/sec.

Note: The bit transitions on the sine and cosine carrier components are staggered or offset in time by T seconds. For this reason, the signal

$$s(t) = A\left\{ \left[ \sum_{n=-\infty}^{\infty} I_{2n}\, g(t - 2nT) \right] \cos 2\pi f_c t + \left[ \sum_{n=-\infty}^{\infty} I_{2n+1}\, g(t - 2nT - T) \right] \sin 2\pi f_c t \right\}$$

is called offset quadrature PSK (OQPSK) or staggered quadrature PSK (SQPSK).

Figure 4.3-23 illustrates the representation of an MSK signal as two staggered

quadrature-modulated binary PSK signals.

Note: The corresponding sum of the two quadrature signals is a constant amplitude,

frequency-modulated signal.
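The constant-amplitude property can be illustrated with the lowpass form (4.3-67). The sketch below is a minimal example assuming a finite symbol list that starts at $n = 0$; the names `half_sine` and `msk_lowpass` are hypothetical. The envelope is only checked where both quadrature components are active.

```python
import math


def half_sine(t, T):
    """Pulse g(t) of (4.3-68): half a sine cycle on [0, 2T]."""
    return math.sin(math.pi * t / (2 * T)) if 0 <= t <= 2 * T else 0.0


def msk_lowpass(t, symbols, T):
    """Equivalent lowpass signal (4.3-67) for a finite list of +/-1 symbols."""
    pairs = len(symbols) // 2
    in_phase = sum(symbols[2 * n] * half_sine(t - 2 * n * T, T) for n in range(pairs))
    quadrature = sum(symbols[2 * n + 1] * half_sine(t - 2 * n * T - T, T) for n in range(pairs))
    return complex(in_phase, -quadrature)


symbols = [1, -1, -1, 1, 1, 1, -1, -1]
T = 1.0
# Both components are present for T <= t <= 2 * (number of pairs) * T.
envelope = [abs(msk_lowpass(1.0 + 0.01 * k, symbols, T)) for k in range(701)]
print(min(envelope), max(envelope))
```

The two half-sine pulse trains are offset by T, so at any instant one component varies as a sine and the other as a cosine of the same argument, and their squares sum to one.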

It is interesting to compare the waveforms of: (1) MSK, (2) OQPSK with rectangular $g(t)$ over $0 \le t \le 2T$, and (3) QPSK with rectangular $g(t)$ over $0 \le t \le 2T$.

(a). All three methods have the identical data rate.

(b). The MSK signal has continuous phase.

(c). The OQPSK signal with a rectangular pulse is two binary signals for which the phase transitions are staggered in time by T seconds. That is, the signal contains phase jumps of $\pm 90^\circ$ that may occur as often as every T seconds.

(d). The conventional QPSK signal with constant amplitude will contain phase jumps of $\pm 180^\circ$ or $\pm 90^\circ$ every 2T seconds. See Fig. 4.3-24 for the illustration of these three signal types.

4.4 Spectral characteristics of digitally modulated signals


(1). The available channel bandwidth is limited for digital communications. From the point

of view of channel bandwidth efficiency, the required channel bandwidth for

transmission should be as small as possible.

(2). Hence, it is important to evaluate the spectral content of the digitally modulated signals.

(3). Since the information sequence is random, a digitally modulated signal is a stochastic

process.

4.4.1 Power spectra of linearly modulated signals

Recall that a bandpass signal is related to its equivalent lowpass signal by

$$s(t) = \mathrm{Re}\left[ v(t) e^{j2\pi f_c t} \right]$$

The autocorrelation function of $s(t)$ is

$$\phi_{ss}(\tau) = \mathrm{Re}\left[ \phi_{vv}(\tau) e^{j2\pi f_c \tau} \right] \tag{4.4-1}$$

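This equation can be derived as follows.

$$\begin{aligned}
\phi_{ss}(\tau) &= E[s(t+\tau)s(t)] = E\left\{ \mathrm{Re}\left[ v(t+\tau) e^{j2\pi f_c (t+\tau)} \right] \mathrm{Re}\left[ v(t) e^{j2\pi f_c t} \right] \right\} \\
&= \tfrac14 E\left\{ \left[ v(t+\tau) e^{j2\pi f_c (t+\tau)} + v^*(t+\tau) e^{-j2\pi f_c (t+\tau)} \right] \left[ v(t) e^{j2\pi f_c t} + v^*(t) e^{-j2\pi f_c t} \right] \right\} \\
&= \tfrac14 E\left\{ v(t+\tau)v(t) e^{j2\pi f_c (2t+\tau)} + v(t+\tau)v^*(t) e^{j2\pi f_c \tau} + v^*(t+\tau)v(t) e^{-j2\pi f_c \tau} + v^*(t+\tau)v^*(t) e^{-j2\pi f_c (2t+\tau)} \right\}
\end{aligned}$$

Assume $s(t)$ is WSS; then $E[v(t+\tau)v(t)] = E[v^*(t+\tau)v^*(t)] = 0$. Using the definition of the autocorrelation function of $v(t)$, $\phi_{vv}(\tau) = \tfrac12 E[v^*(t)v(t+\tau)]$,

$$\phi_{ss}(\tau) = \tfrac12\left[ \phi_{vv}(\tau) e^{j2\pi f_c \tau} + \phi_{vv}^*(\tau) e^{-j2\pi f_c \tau} \right] = \mathrm{Re}\left[ \phi_{vv}(\tau) e^{j2\pi f_c \tau} \right]$$

The power density spectrum $\Phi_{ss}(f)$ is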

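$$\Phi_{ss}(f) = \tfrac12\left[ \Phi_{vv}(f - f_c) + \Phi_{vv}(-f - f_c) \right] \tag{4.4-2}$$

which can be derived as below:

$$\begin{aligned}
\Phi_{ss}(f) &= F[\phi_{ss}(\tau)] = \tfrac12\left\{ F\left[ \phi_{vv}(\tau) e^{j2\pi f_c \tau} \right] + F\left[ \phi_{vv}^*(\tau) e^{-j2\pi f_c \tau} \right] \right\} \\
&= \tfrac12 \Phi_{vv}(f - f_c) + \tfrac12 \int_{-\infty}^{\infty} \phi_{vv}^*(\tau) e^{-j2\pi f_c \tau} e^{-j2\pi f \tau}\,d\tau \\
&= \tfrac12 \Phi_{vv}(f - f_c) + \tfrac12 \left[ \int_{-\infty}^{\infty} \phi_{vv}(\tau) e^{-j2\pi(-f - f_c)\tau}\,d\tau \right]^* \\
&= \tfrac12 \Phi_{vv}(f - f_c) + \tfrac12 \Phi_{vv}^*(-f - f_c) = \tfrac12 \Phi_{vv}(f - f_c) + \tfrac12 \Phi_{vv}(-f - f_c)
\end{aligned}$$

Here we use the fact that $\phi_{vv}^*(\tau) = \phi_{vv}(-\tau) \Rightarrow \Phi_{vv}^*(f) = \Phi_{vv}(f)$, i.e., $\Phi_{vv}(f)$ is real-valued.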

Now it suffices to determine the autocorrelation function and PSD of v (t ) .

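For the linear digital modulation methods,

$$v(t) = \sum_{n=-\infty}^{\infty} I_n g(t - nT) \tag{4.4-3}$$

The transmission rate is $1/T = R/k$ symbols/s. $\{I_n\}$ may be real-valued (PAM) or complex-valued (PSK, QAM).

$$\begin{aligned}
\phi_{vv}(t+\tau; t) &= \tfrac12 E[v^*(t)v(t+\tau)] = \tfrac12 E\left[ \sum_{n} I_n^* g^*(t - nT) \sum_{m} I_m g(t + \tau - mT) \right] \\
&= \tfrac12 \sum_{n} \sum_{m} E[I_n^* I_m]\, g^*(t - nT)\, g(t + \tau - mT)
\end{aligned} \tag{4.4-4}$$

Assume $\{I_n\}$ is WSS with mean $\mu_i$ and autocorrelation function

$$\phi_{ii}(m) = \tfrac12 E[I_n^* I_{n+m}] \tag{4.4-5}$$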

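Then (4.4-4) can be expressed as

$$\begin{aligned}
\phi_{vv}(t+\tau; t) &= \sum_{n} \sum_{m} \phi_{ii}(m - n)\, g^*(t - nT)\, g(t + \tau - mT) \\
&= \sum_{m} \phi_{ii}(m) \sum_{n} g^*(t - nT)\, g(t + \tau - nT - mT)
\end{aligned} \tag{4.4-6}$$

The inner sum over n is periodic in t with period T. Thus, $\phi_{vv}(t+\tau; t)$ is also periodic in t with period T:

$$\phi_{vv}(t + T + \tau; t + T) = \phi_{vv}(t+\tau; t) \tag{4.4-7}$$

The mean of $v(t)$ is

$$E[v(t)] = E\left[ \sum_{n} I_n g(t - nT) \right] = \sum_{n} E[I_n]\, g(t - nT) = \mu_i \sum_{n} g(t - nT) \tag{4.4-8}$$

That is, the mean of $v(t)$ is also periodic in t with period T.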

Both the mean and the autocorrelation function of $v(t)$ are periodic in t with period T; therefore, $v(t)$ is called a cyclostationary process, or a periodically stationary process in the wide sense.

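To make the PSD meaningful, the dependence of $\phi_{vv}(t+\tau; t)$ on t must first be averaged out. Since it is periodic in t, averaging over a single period suffices:

$$\begin{aligned}
\bar\phi_{vv}(\tau) &= \frac{1}{T} \int_{-T/2}^{T/2} \phi_{vv}(t+\tau; t)\,dt \\
&= \sum_{m} \phi_{ii}(m) \sum_{n} \frac{1}{T} \int_{-T/2}^{T/2} g^*(t - nT)\, g(t + \tau - nT - mT)\,dt \\
&= \sum_{m} \phi_{ii}(m) \sum_{n} \frac{1}{T} \int_{-T/2 - nT}^{T/2 - nT} g^*(t)\, g(t + \tau - mT)\,dt
\end{aligned} \tag{4.4-9}$$

Define the time-autocorrelation function of $g(t)$ as

$$\phi_{gg}(\tau) = \int_{-\infty}^{\infty} g^*(t)\, g(t + \tau)\,dt \tag{4.4-10}$$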

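Note: this definition differs from that in some other books, for example,

$$R_f(\tau) = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} f(t) f(t + \tau)\,dt$$

in F. G. Stremler, p. 180, and L. W. Couch II, p. 63.

Since the integrals over n in (4.4-9) together cover the whole real line, (4.4-9) becomes

$$\bar\phi_{vv}(\tau) = \frac{1}{T} \sum_{m} \phi_{ii}(m)\, \phi_{gg}(\tau - mT) \tag{4.4-11}$$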

Observe that the average autocorrelation function vv ( ) depends on the properties of both

the information sequence {I n } and the shaping function g (t ) .

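Taking the Fourier transform of both sides, we obtain the "averaged" PSD as

$$\begin{aligned}
\Phi_{vv}(f) &= \int_{-\infty}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau = \frac{1}{T} \sum_{m} \phi_{ii}(m) \int_{-\infty}^{\infty} \phi_{gg}(\tau - mT) e^{-j2\pi f \tau}\,d\tau \\
&= \frac{1}{T} \sum_{m} \phi_{ii}(m) \int_{-\infty}^{\infty} \phi_{gg}(\tau') e^{-j2\pi f (\tau' + mT)}\,d\tau' \\
&= \frac{1}{T} \underbrace{\left[ \sum_{m} \phi_{ii}(m) e^{-j2\pi f mT} \right]}_{\Phi_{ii}(f)} \int_{-\infty}^{\infty} \phi_{gg}(\tau) e^{-j2\pi f \tau}\,d\tau
\end{aligned}$$

To obtain $\Phi_{vv}(f)$, we need to evaluate the integral in the above equation:

$$\begin{aligned}
\int_{-\infty}^{\infty} \phi_{gg}(\tau) e^{-j2\pi f \tau}\,d\tau &= \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} g^*(t)\, g(t + \tau) e^{-j2\pi f \tau}\,dt\,d\tau \\
&= \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} g^*(t)\, g(t + \tau) e^{-j2\pi f (t + \tau - t)}\,dt\,d\tau \\
&= \int_{-\infty}^{\infty} g^*(t) e^{j2\pi f t}\,dt \int_{-\infty}^{\infty} g(t + \tau) e^{-j2\pi f (t + \tau)}\,d\tau \\
&= G^*(f) G(f) = |G(f)|^2
\end{aligned}$$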

As a result, we obtain the average PSD of $v(t)$ as

$$\Phi_{vv}(f) = \frac{1}{T} |G(f)|^2\, \Phi_{ii}(f) \tag{4.4-12}$$

where

$$\Phi_{ii}(f) = \sum_{m=-\infty}^{\infty} \phi_{ii}(m) e^{-j2\pi f mT} \tag{4.4-13}$$

is the PSD of the information sequence $\{I_n\}$.

--------------------------------------------------------------------------------------------------------------

Time-average autocorrelation, an example: because $G^*(f)G(f) \xleftrightarrow{\;F^{-1}\;} g^*(-t) \ast g(t)$, if the shaping function is a rectangular function, then the resulting autocorrelation function is triangular ($g^*(t) = g(t)$ because $g(t)$ is real). If we further assume that the unit-power data sequence is white, then

$$\bar\phi_{vv}(\tau) = \frac{1}{T} \sum_{m} \phi_{ii}(m)\, \phi_{gg}(\tau - mT) = \frac{1}{T} \phi_{gg}(\tau)$$

Write a program as a project to confirm this conclusion!

--------------------------------------------------------------------------------------------------------------
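One possible way to carry out the suggested project is a small simulation. The sketch below is only an illustration under stated assumptions: real $\pm 1$ data, a unit-amplitude rectangular pulse, 8 samples per symbol, and a plain time average $E[v(t)v(t+\tau)]$ without the factor 1/2 of the complex-envelope convention. The function names are hypothetical.

```python
import random


def estimate_autocorrelation(num_symbols=20000, samples_per_symbol=8, max_lag=16, seed=1):
    """Time-average autocorrelation of a random +/-1 rectangular-pulse waveform."""
    rng = random.Random(seed)
    x = []
    for _ in range(num_symbols):
        level = rng.choice((-1.0, 1.0))          # white, equiprobable data
        x.extend([level] * samples_per_symbol)   # rectangular pulse shaping
    n = len(x) - max_lag
    return [sum(x[i] * x[i + lag] for i in range(n)) / n for lag in range(max_lag + 1)]


def triangle(lag, samples_per_symbol):
    """Predicted value (T - |tau|) / T, with tau measured in samples."""
    return max(0.0, 1.0 - lag / samples_per_symbol)


est = estimate_autocorrelation()
for lag in (0, 2, 4, 6, 8, 12):
    print(lag, round(est[lag], 3), triangle(lag, 8))
```

The estimate follows the triangle $1 - |\tau|/T$ for $|\tau| \le T$ and stays near zero beyond one symbol interval, up to the statistical error of a finite record.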

From the previous discussion, the PSD of $v(t)$ depends only on (i) the information sequence $\{I_n\}$ and (ii) the shaping function $g(t)$. Therefore, by designing the shape of $g(t)$ and the autocorrelation characteristic of $\{I_n\}$, we can control the PSD of $v(t)$.

The PSD $\Phi_{ii}(f)$ is related to the autocorrelation function $\phi_{ii}(m)$ in the form of an exponential Fourier series with the $\{\phi_{ii}(m)\}$ as the Fourier coefficients:

$$\phi_{ii}(m) = T \int_{-1/2T}^{1/2T} \Phi_{ii}(f) e^{j2\pi f mT}\,df \tag{4.4-14}$$

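If $\{I_n\}$ is assumed to be real-valued and mutually uncorrelated, then

$$\phi_{ii}(m) = \begin{cases} \sigma_i^2 + \mu_i^2 & (m = 0) \\ \mu_i^2 & (m \ne 0) \end{cases} \tag{4.4-15}$$

where $\sigma_i^2$ denotes the variance of an information symbol. When (4.4-15) is substituted for $\phi_{ii}(m)$ in (4.4-13), we obtain

$$\Phi_{ii}(f) = \sigma_i^2 + \mu_i^2 \sum_{m=-\infty}^{\infty} e^{-j2\pi f mT} \tag{4.4-16}$$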

Note that the summation in (4.4-16) is periodic with period $1/T$. It may also be viewed as the exponential Fourier series of a periodic train of impulses, each impulse having area $1/T$. Thus,

$$\Phi_{ii}(f) = \sigma_i^2 + \frac{\mu_i^2}{T} \sum_{m=-\infty}^{\infty} \delta\left( f - \frac{m}{T} \right) \tag{4.4-17}$$

Substitution of (4.4-17) into (4.4-12) yields the desired result for the PSD of $v(t)$ when $\{I_n\}$ is uncorrelated. That is,

$$\Phi_{vv}(f) = \frac{\sigma_i^2}{T} |G(f)|^2 + \frac{\mu_i^2}{T^2} \sum_{m=-\infty}^{\infty} \left| G\left( \frac{m}{T} \right) \right|^2 \delta\left( f - \frac{m}{T} \right) \tag{4.4-18}$$

(1). The first term is a continuous spectrum whose shape depends only on $g(t)$.

(2). The second term is a discrete spectrum of lines separated by $1/T$, each spectral line having a power that is proportional to $|G(f)|^2$ evaluated at $f = m/T$. This term vanishes when $\mu_i = 0$, which is usually desirable for digital modulation techniques and is satisfied when the information symbols are (i) equally likely and (ii) symmetrically positioned in the complex plane.

The most important is that we can control the spectral characteristics of the digitally

modulated signal by proper selection of the characteristics of the information sequence to be

transmitted.

Example 4.4-1. Demonstration of the (energy) spectral shape of the rectangular pulse $g(t)$ of amplitude A and duration T.

$$G(f) = F[g(t)] = AT\, \frac{\sin \pi f T}{\pi f T}\, e^{-j\pi f T}$$

Hence

$$|G(f)|^2 = (AT)^2 \left( \frac{\sin \pi f T}{\pi f T} \right)^2 \tag{4.4-19}$$

It is worth noting that:

(i). It contains zeros at nonzero multiples of 1/T.

(ii). It decays inversely as $f^2$.

(iii). Because of the zeros in $G(f)$, all but one of the discrete spectral components in (4.4-18) vanish; only the component at $f = 0$ remains. Therefore, (4.4-18) reduces to

$$\Phi_{vv}(f) = \sigma_i^2 A^2 T \left( \frac{\sin \pi f T}{\pi f T} \right)^2 + A^2 \mu_i^2\, \delta(f) \tag{4.4-20}$$

Example 4.4-2. Demonstration of the (energy) spectral shape of the raised cosine pulse $g(t)$.

$$g(t) = \frac{A}{2} \left[ 1 + \cos \frac{2\pi}{T} \left( t - \frac{T}{2} \right) \right], \qquad 0 \le t \le T \tag{4.4-21}$$

$$G(f) = F[g(t)] = \frac{AT}{2}\, \frac{\sin \pi f T}{\pi f T (1 - f^2 T^2)}\, e^{-j\pi f T} \tag{4.4-22}$$

Comparing with the rectangular pulse case:

(i). Its spectrum has zeros at $f = n/T$, $n = \pm 2, \pm 3, \pm 4, \ldots$ As a result, all the discrete spectral components in (4.4-18) vanish except those at $f = 0$ and $f = \pm 1/T$.

(ii). It has a broader main lobe, but the tails decay inversely as $f^6$.
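The observations in Examples 4.4-1 and 4.4-2 can be checked by evaluating (4.4-19) and the squared magnitude of (4.4-22) at a few frequencies. This is a minimal sketch with $A = T = 1$ as assumed defaults; the function names are hypothetical, and the removable singularities at $f = 0$ and $f = \pm 1/T$ are handled by their limits.

```python
import math


def rect_energy_spectrum(f, A=1.0, T=1.0):
    """|G(f)|^2 for the rectangular pulse, eq. (4.4-19)."""
    x = math.pi * f * T
    sinc = 1.0 if x == 0 else math.sin(x) / x
    return (A * T * sinc) ** 2


def raised_cosine_energy_spectrum(f, A=1.0, T=1.0):
    """|G(f)|^2 for the raised cosine pulse, from eq. (4.4-22)."""
    x = f * T
    if x == 0:
        return (A * T / 2) ** 2            # limit at f = 0
    if abs(1 - x * x) < 1e-9:
        return (A * T / 4) ** 2            # limit at f = +/- 1/T
    value = math.sin(math.pi * x) / (math.pi * x * (1 - x * x))
    return (A * T / 2 * value) ** 2


for f in (0.0, 1.0, 2.0, 10.5):
    print(f, rect_energy_spectrum(f), raised_cosine_energy_spectrum(f))
```

At $f = 1/T$ the rectangular spectrum is zero while the raised cosine spectrum is not, which is why the discrete line at $f = \pm 1/T$ survives in Example 4.4-2. Far from the origin the raised cosine tail is several orders of magnitude lower.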

Example 4.4-3. The previous two examples concern the effect of the shaping function. This example discusses the influence of the information sequence.

Consider a binary sequence $\{b_n\}$ from which we form the symbols

$$I_n = b_n + b_{n-1} \tag{4.4-23}$$

The $\{b_n\}$ are assumed to be uncorrelated, with zero mean and unit variance. The correlation function of $\{I_n\}$ is

$$\phi_{ii}(m) = E(I_n I_{n+m}) = \begin{cases} 2 & (m = 0) \\ 1 & (m = \pm 1) \\ 0 & (\text{otherwise}) \end{cases} \tag{4.4-24}$$

Accordingly, the PSD of $\{I_n\}$ is

$$\Phi_{ii}(f) = 2(1 + \cos 2\pi f T) = 4\cos^2 \pi f T \tag{4.4-25}$$

The corresponding PSD of the lowpass modulated signal is

$$\Phi_{vv}(f) = \frac{4}{T} |G(f)|^2 \cos^2 \pi f T \tag{4.4-26}$$
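The correlation values in (4.4-24) can be confirmed by exact enumeration rather than simulation. The sketch below assumes the $b_n$ are independent and take the values $\pm 1$ with equal probability (one sequence with zero mean and unit variance); the function names are hypothetical.

```python
import math
from itertools import product


def phi_ii(m):
    """Exact E[I_n I_{n+m}] for I_n = b_n + b_{n-1}, m >= 0."""
    total = 0
    count = 0
    # b[0] = b_{n-1}, b[1] = b_n, ..., b[m + 1] = b_{n+m}
    for b in product((-1, 1), repeat=m + 2):
        total += (b[1] + b[0]) * (b[m + 1] + b[m])
        count += 1
    return total / count


def sequence_psd(f, T=1.0, max_lag=3):
    """Phi_ii(f) from (4.4-13), using phi_ii(-m) = phi_ii(m) for real symbols."""
    return phi_ii(0) + 2 * sum(
        phi_ii(m) * math.cos(2 * math.pi * f * m * T) for m in range(1, max_lag + 1)
    )


print([phi_ii(m) for m in range(4)])
for f in (0.0, 0.1, 0.37, 0.5):
    print(f, sequence_psd(f), 4 * math.cos(math.pi * f) ** 2)
```

The spectral null at $f = 1/2T$ comes entirely from the correlation introduced by the symbol mapping, independently of the pulse shape.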

In the following, we give a supplementary example (see Couch II, Digital and Analog Communication Systems, 6th ed., pp. 410-413). The result can be applied to Example 2.1, p. 56, in "Introduction to Spread Spectrum Communications," by Peterson, Ziemer and Borth.

Let $v(t)$ (called $x(t)$ in the following figures, $x(t) = \sum_{n=-\infty}^{\infty} I_n g(t - nT)$) be a polar signal with random binary data. The data is assumed independent from bit to bit and equally probable in any bit interval (that is, $P(I_n = 1) = P(I_n = -1) = 1/2$).

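To examine the autocorrelation characteristic of $v(t)$, we use the result obtained above.

Using (4.4-6) and

$$\phi_{ii}(m) = \begin{cases} 1, & m = 0 \\ 0, & m \ne 0 \end{cases}$$

we obtain

$$\phi_{vv}(t+\tau; t) = \sum_{m} \sum_{n} \phi_{ii}(m)\, g^*(t - nT)\, g(t + \tau - nT - mT) = \sum_{n} g^*(t - nT)\, g(t + \tau - nT)$$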

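To obtain the averaged autocorrelation, we need to average out the parameter t. Since $\phi_{vv}(t+\tau; t)$ is periodic in t with period T,

$$\begin{aligned}
\bar\phi_{vv}(\tau) &= \frac{1}{T} \int_{-T/2}^{T/2} \phi_{vv}(t+\tau; t)\,dt = \frac{1}{T} \int_{-T/2}^{T/2} \sum_{n} g^*(t - nT)\, g(t + \tau - nT)\,dt \\
&= \sum_{n} \frac{1}{T} \int_{-T/2}^{T/2} g^*(t - nT)\, g(t + \tau - nT)\,dt
\end{aligned}$$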

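Besides, the pulse is

$$g(t) = \begin{cases} 1, & |t| \le T/2 \\ 0, & \text{elsewhere} \end{cases}$$

We first assume that the offset satisfies $0 \le \tau \le T$. Then

$$g^*(t - nT)\, g(t + \tau - nT) = \begin{cases} 1, & nT - T/2 \le t \le nT + T/2 - \tau \\ 0, & \text{elsewhere} \end{cases}$$

If $\tau > T$, there is no overlap and the product is zero. Since the integration interval is $(-T/2, T/2)$, only the $n = 0$ term contributes to the integral:

$$\bar\phi_{vv}(\tau) = \sum_{n} \frac{1}{T} \int_{-T/2}^{T/2} g^*(t - nT)\, g(t + \tau - nT)\,dt = \frac{1}{T} \int_{-T/2}^{T/2 - \tau} 1\,dt = \frac{T - \tau}{T}$$

In the same manner, we can get the result for $-T \le \tau \le 0$. (Alternatively, we may use the fact that $\bar\phi_{vv}(-\tau) = \bar\phi_{vv}(\tau)$.) Accordingly, we conclude

$$\bar\phi_{vv}(\tau) = \frac{T - |\tau|}{T}, \qquad |\tau| \le T$$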

It has a triangular shape, and its PSD is the Fourier transform of $\bar\phi_{vv}(\tau)$.

It is very interesting to observe that a random information sequence with a rectangular pulse has a triangular autocorrelation function, which is exactly the correlation of the pulse with itself, $g(-\tau) \ast g(\tau)$, scaled by $1/T$. Therefore, the PSD, the Fourier transform of the autocorrelation function, is $1/T$ times the squared magnitude of the Fourier transform of the pulse:

If $g(t) \xleftrightarrow{\;F\;} G(f)$, then $\Phi_{vv}(f) = F\{\bar\phi_{vv}(\tau)\} = \frac{1}{T} F\{g(-\tau) \ast g(\tau)\} = \frac{1}{T} |G(f)|^2$, in agreement with (4.4-12) for $\Phi_{ii}(f) = 1$.

Correction: Review Example 2.1, p. 56, in the book by Peterson et al.

Since the data is assumed to be purely random, the adjacent-bit pairs (1, 1), (1, -1), (-1, 1) and (-1, -1) are equally probable. If $-T_c \le \tau \le T_c$, the resulting area is $T_c - |\tau|$, as above. On the other hand, if $|\tau| > T_c$, $c(t)c(t+\tau)$ is another purely random data waveform; hence, its average is just zero.

However, Figure 2.8 was wrongly illustrated. See the following explanation.

(1). The fractional area is the shaded area above zero.

(2). The area is $1 \cdot (T_c - \tau) = T_c - \tau$.

Then the autocorrelation function is obtained from the definition:

$$R_c(\tau) = \lim_{A \to \infty} \frac{1}{2A} \int_{-A}^{A} c(t)c(t+\tau)\,dt = \frac{1}{T_c} \int_{0}^{T_c} c(t)c(t+\tau)\,dt = \frac{T_c - \tau}{T_c}, \qquad 0 \le \tau \le T_c$$

Similarly,

$$R_c(\tau) = \frac{T_c + \tau}{T_c}, \qquad -T_c \le \tau \le 0$$

Accordingly,

$$R_c(\tau) = \frac{T_c - |\tau|}{T_c}, \qquad |\tau| \le T_c$$

Also, note that this is not the case for the m-sequence, which is periodic and is used in spread spectrum communication systems for spreading the transmission bandwidth.

4.4.2 Power spectra of CPFSK and CPM signals

The constant-amplitude CPM signal is expressed as

$$s(t;\mathbf{I}) = A\cos[2\pi f_c t + \phi(t;\mathbf{I})] \tag{4.4-27}$$

where

$$\phi(t;\mathbf{I}) = 2\pi h \sum_{k=-\infty}^{\infty} I_k q(t - kT) \tag{4.4-28}$$

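Assume:

(1). $I_n \in \{\pm 1, \pm 3, \ldots, \pm(M-1)\}$.

(2). The $I_n$ are iid with prior probabilities $P_n = P(I_k = n)$, $n = \pm 1, \pm 3, \ldots, \pm(M-1)$, where $\sum_n P_n = 1$.

(3). The pulse and the phase response satisfy

$$g(t) = \begin{cases} q'(t) & 0 \le t \le LT \\ 0 & \text{otherwise} \end{cases} \qquad \text{and} \qquad q(t) = \begin{cases} \tfrac12 & t > LT \\ 0 & t < 0 \end{cases} \tag{4.4-29}$$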

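The autocorrelation function of the equivalent lowpass signal $v(t) = e^{j\phi(t;\mathbf{I})}$ is

$$\phi_{vv}(t+\tau; t) = \frac12 E\left[ \exp\left( j2\pi h \sum_{k=-\infty}^{\infty} I_k [q(t + \tau - kT) - q(t - kT)] \right) \right] \tag{4.4-30}$$

(i). Expressing the sum in the exponent as a product of exponentials, we rewrite (4.4-30) as

$$\phi_{vv}(t+\tau; t) = \frac12 E\left[ \prod_{k=-\infty}^{\infty} \exp\{ j2\pi h I_k [q(t + \tau - kT) - q(t - kT)] \} \right] \tag{4.4-31}$$

(ii). Performing the expectation over the data symbols $\{I_k\}$, and using the fact that these symbols are statistically independent,

$$\phi_{vv}(t+\tau; t) = \frac12 \prod_{k=-\infty}^{\infty} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp\{ j2\pi h n [q(t + \tau - kT) - q(t - kT)] \} \right) \tag{4.4-32}$$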

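The derivation is as follows.

$$\begin{aligned}
\phi_{vv}(t+\tau; t) &= \tfrac12 E[v^*(t)v(t+\tau)] = \tfrac12 E\left[ \exp\left( j2\pi h \sum_{k} I_k [q(t + \tau - kT) - q(t - kT)] \right) \right] \\
&= \tfrac12 E\left[ \prod_{k} \exp\{ j2\pi h I_k [q(t + \tau - kT) - q(t - kT)] \} \right] \\
&= \tfrac12 \prod_{k} E\left[ \exp\{ I_k \cdot j2\pi h [q(t + \tau - kT) - q(t - kT)] \} \right] \\
&= \tfrac12 \prod_{k} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp\{ n \cdot j2\pi h [q(t + \tau - kT) - q(t - kT)] \} \right)
\end{aligned}$$

Hint: $E[X] = \sum_i x_i P(x_i)$, and $E[g(X)] = \sum_i g(x_i) P(x_i)$ (see footnote 1).

The average autocorrelation function is

$$\bar\phi_{vv}(\tau) = \frac{1}{T} \int_{0}^{T} \phi_{vv}(t+\tau; t)\,dt \tag{4.4-33}$$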

To further simplify (4.4-32), note that if $q(t + \tau - kT)$ and $q(t - kT)$ are both 0 or both 1/2, the exponent is zero and the corresponding factor equals $\sum_n P_n = 1$. Accordingly, only the finite number of factors with a nonzero exponent need to be evaluated.

Suppose $\tau = \xi + mT \ge 0$, $m = 0, 1, 2, \ldots$, $0 \le \xi < T$. Then

$$0 < q(t - kT) < 1/2 \iff 0 < t - kT < LT \iff \frac{t}{T} - L < k < \frac{t}{T}$$

and

$$0 < q(t + \xi + mT - kT) < 1/2 \iff 0 < t + \xi + mT - kT < LT \iff \frac{t + \xi}{T} + m - L < k < \frac{t + \xi}{T} + m$$

To exclude the situation in which $q(t + \tau - kT)$ and $q(t - kT)$ are both 0 or both 1/2, we take the maximum for the upper limit and the minimum for the lower limit. Remember that $0 \le \xi < T$ (not $\tau$) and $0 \le t \le T$:

$$\frac{t}{T} - L < k < \frac{t + \xi}{T} + m$$

From the above inequality, $-L < k < 2 + m$ (since $0 \le \xi/T < 1$).

Thus, k is confined to the interval $[1 - L, 1 + m]$, or $1 - L \le k \le 1 + m$.

Footnote 1: See A. Papoulis and S. U. Pillai, Probability, Random Variables and Stochastic Processes, 4th ed., pages 142-143, on the expected value of a function of a random variable for both continuous- and discrete-type random variables.

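$$\bar\phi_{vv}(\xi + mT) = \frac{1}{2T} \int_{0}^{T} \prod_{k=1-L}^{m+1} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp\{ j2\pi h n [q(t + \xi - (k - m)T) - q(t - kT)] \} \right) dt \tag{4.4-34}$$

Consider the case when $\xi + mT \ge LT$, which implies $m \ge L$ and $0 \le \xi < T$. (4.4-34) may then be written as

$$\bar\phi_{vv}(\xi + mT) = [\psi(jh)]^{m-L}\, \lambda(\xi), \qquad m \ge L,\ 0 \le \xi < T \tag{4.4-35}$$

where $\psi(jh)$ is the characteristic function of the random sequence $\{I_n\}$, defined as

$$\psi(jh) = E\left[ e^{j\pi h I_n} \right] = \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n e^{j\pi h n} \tag{4.4-36}$$

and

$$\lambda(\xi) = \frac{1}{2T} \int_{0}^{T} \prod_{k=1-L}^{0} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp\{ j2\pi h n [\tfrac12 - q(t - kT)] \} \right) \prod_{k=1-L}^{1} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp[ j2\pi h n\, q(t + \xi - kT) ] \right) dt, \qquad m \ge L \tag{4.4-37}$$

(Why choose LT? Recall the interval on which $g(t)$ is nonzero.)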

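(a). Since

$$0 < q(t - kT) < 1/2 \iff 0 < t - kT < LT \iff \frac{t}{T} - L < k < \frac{t}{T}$$

we obtain

$$t = 0:\ -L < k < 0; \qquad t = T:\ 1 - L < k < 1$$

Hence, the range of k for which $0 < q(t - kT) < 1/2$ is $-L < k < 1$, or $1 - L \le k \le 0$ (k integer).

Similarly,

(b). Since

$$0 < q(t + \xi + mT - kT) < 1/2 \iff 0 < t + \xi + mT - kT < LT \iff \frac{t + \xi}{T} + m - L < k < \frac{t + \xi}{T} + m$$

we obtain

$$t = 0:\ \frac{\xi}{T} + m - L < k < \frac{\xi}{T} + m; \qquad t = T:\ 1 + \frac{\xi}{T} + m - L < k < 1 + \frac{\xi}{T} + m$$

Hence, the range of k for which $0 < q(t + \xi + mT - kT) < 1/2$ is $\frac{\xi}{T} + m - L < k < 1 + \frac{\xi}{T} + m$, or $1 + m - L \le k \le 1 + m$ (k integer and $m \ge L$).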

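Their relationship can be shown graphically:

[Figure: $q(t - kT)$ and $q(t + \xi + mT - kT)$ plotted against k, each with level 1/2; the k-axis is marked at $1 - L$, $0$, $1 + m - L$, and $1 + m$. The shapes in the two transition intervals depend on the function $q(t)$.]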

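Consequently, the product inside the integral can be separated into three ranges of k:

$$\begin{aligned}
\bar\phi_{vv}(\xi + mT)\Big|_{m \ge L} = \frac{1}{2T} \int_{0}^{T} &\prod_{k=1-L}^{0} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp\{ j2\pi h n [\tfrac12 - q(t - kT)] \} \right) \\
\times &\prod_{k=1}^{m-L} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp\{ j2\pi h n [\tfrac12 - 0] \} \right) \\
\times &\prod_{k=m-L+1}^{1+m} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp\{ j2\pi h n [q(t + \xi + (m - k)T) - 0] \} \right) dt
\end{aligned}$$

Each factor of the middle product equals $\psi(jh)$. Hence, letting $k' = k - m$ in the last product,

$$\bar\phi_{vv}(\xi + mT)\Big|_{m \ge L} = [\psi(jh)]^{m-L}\, \frac{1}{2T} \int_{0}^{T} \prod_{k=1-L}^{0} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp\{ j2\pi h n [\tfrac12 - q(t - kT)] \} \right) \prod_{k'=1-L}^{1} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n \exp[ j2\pi h n\, q(t + \xi - k'T) ] \right) dt$$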

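The Fourier transform of $\bar\phi_{vv}(\tau)$ yields the average power density spectrum $\Phi_{vv}(f)$ as

$$\Phi_{vv}(f) = \int_{-\infty}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau = 2\,\mathrm{Re}\left[ \int_{0}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau \right] \tag{4.4-38}$$

(4.4-38) can be derived as below:

$$\begin{aligned}
\Phi_{vv}(f) = F[\bar\phi_{vv}(\tau)] &= \int_{-\infty}^{0} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau + \int_{0}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau \\
&= \int_{0}^{\infty} \bar\phi_{vv}(-\tau) e^{j2\pi f \tau}\,d\tau + \int_{0}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau
\end{aligned}$$

But, up to the factor 1/2 in the definition,

$$\phi_{vv}(\tau) = E[v^*(t)v(t+\tau)], \qquad \phi_{vv}(-\tau) = E[v^*(t)v(t-\tau)]$$

$$\phi_{vv}^*(\tau) = E[v(t)v^*(t+\tau)] = E[v^*(t)v(t-\tau)] = \phi_{vv}(-\tau)$$

so the first integral is the complex conjugate of the second, and

$$\Phi_{vv}(f) = 2\,\mathrm{Re}\left[ \int_{0}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau \right]$$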

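But

$$\int_{0}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau = \int_{0}^{LT} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau + \int_{LT}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau \tag{4.4-39}$$

With the aid of (4.4-35), the integral over the range $LT \le \tau < \infty$ may be written as

$$\int_{LT}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau = \sum_{m=L}^{\infty} \int_{mT}^{(m+1)T} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau \tag{4.4-40}$$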

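Let $\tau = \xi + mT$. Then

$$\begin{aligned}
\int_{LT}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau &= \sum_{m=L}^{\infty} \int_{0}^{T} \bar\phi_{vv}(\xi + mT) e^{-j2\pi f (\xi + mT)}\,d\xi \\
&= \sum_{m=L}^{\infty} \int_{0}^{T} \lambda(\xi)\, [\psi(jh)]^{m-L} e^{-j2\pi f (\xi + mT)}\,d\xi \\
&= \sum_{n=0}^{\infty} \psi^n(jh) e^{-j2\pi f nT} \int_{0}^{T} \lambda(\xi) e^{-j2\pi f (\xi + LT)}\,d\xi
\end{aligned} \tag{4.4-41}$$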

Note that the right side of the above equation is separable, which means that the summation and the integral may be evaluated separately. Since $|\psi(jh)| \le 1$, two cases are distinguished, depending on the absolute value of the characteristic function:

(A). When $|\psi(jh)| < 1$ for some h,

$$\sum_{n=0}^{\infty} \psi^n(jh) e^{-j2\pi f nT} = \frac{1}{1 - \psi(jh) e^{-j2\pi f T}} \tag{4.4-42}$$

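In this case, (4.4-41) reduces to

$$\begin{aligned}
\int_{LT}^{\infty} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau &= \frac{1}{1 - \psi(jh) e^{-j2\pi f T}} \int_{0}^{T} \lambda(\xi) e^{-j2\pi f (\xi + LT)}\,d\xi \\
&= \frac{1}{1 - \psi(jh) e^{-j2\pi f T}} \int_{0}^{T} \bar\phi_{vv}(\xi + LT) e^{-j2\pi f (\xi + LT)}\,d\xi
\end{aligned} \tag{4.4-43}$$

The second equality follows from (4.4-35), $\bar\phi_{vv}(\xi + mT) = [\psi(jh)]^{m-L} \lambda(\xi)$ for $\xi + mT \ge LT$: at $m = L$ the exponent $m - L$ is zero, so

$$[\psi(jh)]^{m-L} \lambda(\xi) = \lambda(\xi) = \bar\phi_{vv}(\xi + LT), \qquad 0 \le \xi < T$$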

By combining (4.4-38), (4.4-39), and (4.4-43), we obtain the PSD of the CPM signal as

$$\Phi_{vv}(f) = 2\,\mathrm{Re}\left[ \int_{0}^{LT} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau + \frac{1}{1 - \psi(jh) e^{-j2\pi f T}} \int_{LT}^{(L+1)T} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau \right] \tag{4.4-44}$$

Note:

(1). The PSD can be evaluated from (4.4-44) numerically.

(2). The average autocorrelation function for $0 \le \tau \le (L+1)T$ can be computed numerically from (4.4-34).

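(B). For values of h such that $|\psi(jh)| = 1$, e.g., $h = K$ with K an integer, we can set

$$\psi(jh) = e^{j2\pi\nu}, \qquad 0 \le \nu < 1 \tag{4.4-45}$$

Then the sum in (4.4-41) becomes

$$\sum_{n=0}^{\infty} e^{-j2\pi T (f - \nu/T) n} = \frac12 + \frac{1}{2T} \sum_{n=-\infty}^{\infty} \delta\left( f - \frac{\nu}{T} - \frac{n}{T} \right) - j\,\frac12 \cot \pi T \left( f - \frac{\nu}{T} \right) \tag{4.4-46}$$

Thus, the PSD now contains impulses located at the frequencies

$$f_n = \frac{n + \nu}{T}, \qquad 0 \le \nu < 1,\ n = 0, 1, 2, \ldots \tag{4.4-47}$$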

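(4.4-46) can be derived as below:

$$\sum_{n=0}^{\infty} e^{-j2\pi T (f - \nu/T) n} = \sum_{n=0}^{\infty} e^{-j2\pi f' n} = \sum_{n=-\infty}^{\infty} u(n) e^{-j2\pi f' n}, \qquad \text{with } f' = T(f - \nu/T)$$

Thus, we have viewed the sum as the discrete-time Fourier transform (DTFT) of the unit step sequence, i.e.,

$$u(n) = \begin{cases} 1 & n = 0, 1, 2, \ldots \\ 0 & n = -1, -2, \ldots \end{cases}$$

It is worth noting that the DTFT of $u(n)$ cannot be evaluated directly because the sum does not converge. Let

$$u(n) = \frac12 + \frac12 \mathrm{sgn}(n) \qquad \text{with} \qquad \mathrm{sgn}(n) = \begin{cases} 1 & n = 0, 1, 2, \ldots \\ -1 & n = -1, -2, \ldots \end{cases}$$

Then, with $\omega = 2\pi f'$,

$$F[u(n)] = F\left[ \tfrac12 + \tfrac12 \mathrm{sgn}(n) \right] = \frac12 \sum_{k=-\infty}^{\infty} \delta(f' - k) + \frac{1}{1 - e^{-j\omega}}$$

(Also see Oppenheim et al., Discrete-Time Signal Processing, 2nd ed., pp. 53-54, Examples 2.23-2.24, and equation 2.153.)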

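To find the DTFT of $\mathrm{sgn}(n)$, we let

$$x_1(n) = a^n u(n), \qquad x_2(n) = a^{-n} u(-n - 1), \qquad |a| < 1$$

Therefore

$$\mathrm{sgn}(n) = \lim_{a \to 1} [x_1(n) - x_2(n)] = \lim_{a \to 1} [a^n u(n) - a^{-n} u(-n - 1)]$$

(i). $\displaystyle \sum_{n=0}^{\infty} a^n e^{-j\omega n} = \sum_{n=0}^{\infty} \left( a e^{-j\omega} \right)^n = \frac{1}{1 - a e^{-j\omega}}$

and

(ii). $\displaystyle \sum_{n=-\infty}^{-1} a^{-n} e^{-j\omega n} = \sum_{n=1}^{\infty} \left( a e^{j\omega} \right)^n = \frac{a e^{j\omega}}{1 - a e^{j\omega}}$

Then

$$\text{(i)} - \text{(ii)} = \frac{1 + a^2 - 2a e^{j\omega}}{(1 - a e^{-j\omega})(1 - a e^{j\omega})}$$

The DTFT of $\mathrm{sgn}(n)$ is

$$F[\mathrm{sgn}(n)] = \lim_{a \to 1} [\text{(i)} - \text{(ii)}] = \frac{2}{1 - e^{-j\omega}} = \frac{e^{j\omega/2}}{j \sin(\omega/2)} = 1 - j\cot(\omega/2)$$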
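The limit above is easy to check numerically. The sketch below evaluates (i) − (ii) for a value of a just below 1 and compares it with $1 - j\cot(\omega/2)$ away from $\omega = 0$, where the impulse sits. The function names are hypothetical.

```python
import cmath
import math


def damped_difference(a, omega):
    """(i) - (ii): DTFT of a^n u(n) minus DTFT of a^(-n) u(-n-1), |a| < 1."""
    term_i = 1 / (1 - a * cmath.exp(-1j * omega))
    term_ii = a * cmath.exp(1j * omega) / (1 - a * cmath.exp(1j * omega))
    return term_i - term_ii


def sgn_dtft(omega):
    """Limiting value 1 - j*cot(omega/2), valid for omega not a multiple of 2*pi."""
    return complex(1.0, -1.0 / math.tan(omega / 2))


for omega in (0.5, 1.0, 2.0, 3.0):
    print(omega, damped_difference(1 - 1e-9, omega), sgn_dtft(omega))
```

The two columns agree to many digits, which supports the closed form used in the final step of the derivation.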

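Finally, with $f' = T(f - \nu/T)$ and $\omega = 2\pi f'$,

$$\begin{aligned}
U(f') &= \frac12 \sum_{k=-\infty}^{\infty} \delta\left( T\left( f - \frac{\nu}{T} \right) - k \right) + \frac12 \left[ 1 - j\cot\frac{\omega}{2} \right] \\
&= \frac12 + \frac{1}{2T} \sum_{k=-\infty}^{\infty} \delta\left( f - \frac{\nu}{T} - \frac{k}{T} \right) - \frac{j}{2} \cot \pi T \left( f - \frac{\nu}{T} \right)
\end{aligned}$$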

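Returning to the case for which $|\psi(jh)| < 1$: when the symbols are equally probable, i.e.,

$$P_n = \frac{1}{M} \quad \text{for all } n$$

the characteristic function simplifies to the form

$$\psi(jh) = \frac{1}{M} \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} e^{j\pi h n} = \frac{1}{M}\, \frac{\sin M\pi h}{\sin \pi h} \tag{4.4-48}$$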
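The closed form (4.4-48) can be compared with the defining sum (4.4-36). The following sketch assumes equally probable symbols unless a probability list is passed; the names `psi` and `psi_closed_form` are hypothetical.

```python
import cmath
import math


def psi(h, M, probabilities=None):
    """Characteristic function (4.4-36) over the levels -(M-1), ..., -1, 1, ..., M-1."""
    levels = range(-(M - 1), M, 2)
    if probabilities is None:
        probabilities = [1.0 / M] * M      # equally probable symbols
    return sum(p * cmath.exp(1j * math.pi * h * n) for p, n in zip(probabilities, levels))


def psi_closed_form(h, M):
    """Closed form (4.4-48); not defined when sin(pi*h) = 0."""
    return math.sin(M * math.pi * h) / (M * math.sin(math.pi * h))


print(psi(0.3, 4), psi_closed_form(0.3, 4))
print(abs(psi(0.5, 2)))   # binary, h = 1/2 (MSK)
print(abs(psi(1.0, 2)))   # binary, h = 1
```

For binary signaling with $h = 1/2$ the characteristic function is zero, and for integer h its magnitude is 1, which is the situation treated in case (B).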

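Note that in this case $\psi(jh)$ is real. The average autocorrelation function given by (4.4-34) also simplifies in this case to

$$\begin{aligned}
\bar\phi_{vv}(\tau) &= \frac{1}{2T} \int_{0}^{T} \prod_{k=1-L}^{m+1} \left( \sum_{\substack{n=-(M-1) \\ n\ \text{odd}}}^{M-1} P_n e^{j2\pi h n [q(t + \xi - (k - m)T) - q(t - kT)]} \right) dt \\
&= \frac{1}{2T} \int_{0}^{T} \prod_{k=1-L}^{[\tau/T]} \frac{1}{M}\, \frac{\sin\{ 2\pi h M [q(t + \tau - kT) - q(t - kT)] \}}{\sin\{ 2\pi h [q(t + \tau - kT) - q(t - kT)] \}}\,dt
\end{aligned} \tag{4.4-49}$$

where $\tau = \xi + mT$. It is also real.

Here we have used the expansion

$$\sum_{n=0}^{N} e^{j(\theta + n\varphi)} = \frac{\sin[(N + 1)\varphi/2]}{\sin(\varphi/2)}\, e^{j(\theta + N\varphi/2)}$$

which gives (4.4-48) with $N = M - 1$, $\theta = -(M - 1)\pi h$, and $\varphi = 2\pi h$.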

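The PSD reduces to

$$\begin{aligned}
\Phi_{vv}(f) = 2 \int_{0}^{LT} \bar\phi_{vv}(\tau) \cos 2\pi f \tau\,d\tau &+ 2\, \frac{1 - \psi(jh) \cos 2\pi f T}{1 + \psi^2(jh) - 2\psi(jh) \cos 2\pi f T} \int_{LT}^{(L+1)T} \bar\phi_{vv}(\tau) \cos 2\pi f \tau\,d\tau \\
&- 2\, \frac{\psi(jh) \sin 2\pi f T}{1 + \psi^2(jh) - 2\psi(jh) \cos 2\pi f T} \int_{LT}^{(L+1)T} \bar\phi_{vv}(\tau) \sin 2\pi f \tau\,d\tau
\end{aligned} \tag{4.4-50}$$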

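(4.4-50) can be derived as below:

$$\begin{aligned}
\Phi_{vv}(f) &= 2\,\mathrm{Re}\left[ \int_{0}^{LT} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau + \frac{1}{1 - \psi(jh) e^{-j2\pi f T}} \int_{LT}^{(L+1)T} \bar\phi_{vv}(\tau) e^{-j2\pi f \tau}\,d\tau \right] \\
&= 2 \int_{0}^{LT} \bar\phi_{vv}(\tau) \cos 2\pi f \tau\,d\tau + 2\,\mathrm{Re}\left[ \frac{1}{1 - \psi(jh) \cos 2\pi f T + j\psi(jh) \sin 2\pi f T} \int_{LT}^{(L+1)T} \bar\phi_{vv}(\tau) (\cos 2\pi f \tau - j \sin 2\pi f \tau)\,d\tau \right] \\
&= 2 \int_{0}^{LT} \bar\phi_{vv}(\tau) \cos 2\pi f \tau\,d\tau + 2\,\mathrm{Re}\left[ \frac{1 - \psi(jh) \cos 2\pi f T - j\psi(jh) \sin 2\pi f T}{1 + \psi^2(jh) - 2\psi(jh) \cos 2\pi f T} \int_{LT}^{(L+1)T} \bar\phi_{vv}(\tau) (\cos 2\pi f \tau - j \sin 2\pi f \tau)\,d\tau \right] \\
&= 2 \int_{0}^{LT} \bar\phi_{vv}(\tau) \cos 2\pi f \tau\,d\tau + 2\, \frac{1 - \psi(jh) \cos 2\pi f T}{1 + \psi^2(jh) - 2\psi(jh) \cos 2\pi f T} \int_{LT}^{(L+1)T} \bar\phi_{vv}(\tau) \cos 2\pi f \tau\,d\tau \\
&\quad - 2\, \frac{\psi(jh) \sin 2\pi f T}{1 + \psi^2(jh) - 2\psi(jh) \cos 2\pi f T} \int_{LT}^{(L+1)T} \bar\phi_{vv}(\tau) \sin 2\pi f \tau\,d\tau
\end{aligned}$$

Power density spectrum of CPFSK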

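When the pulse shape $g(t)$ is rectangular and zero outside the interval [0, T], $q(t)$ is linear for $0 \le t \le T$. The resulting PSD may be expressed as

$$\Phi_{vv}(f) = T \left[ \frac{1}{M} \sum_{n=1}^{M} A_n^2(f) + \frac{2}{M^2} \sum_{n=1}^{M} \sum_{m=1}^{M} B_{nm}(f) A_n(f) A_m(f) \right] \tag{4.4-51}$$

where

$$\begin{aligned}
A_n(f) &= \frac{\sin \pi [fT - \tfrac12 (2n - 1 - M) h]}{\pi [fT - \tfrac12 (2n - 1 - M) h]} \\
B_{nm}(f) &= \frac{\cos(2\pi f T - \alpha_{nm}) - \psi \cos \alpha_{nm}}{1 + \psi^2 - 2\psi \cos 2\pi f T} \\
\alpha_{nm} &= \pi h (m + n - 1 - M) \\
\psi \equiv \psi(jh) &= \frac{\sin M\pi h}{M \sin \pi h}
\end{aligned} \tag{4.4-52}$$

The PSD of CPFSK for M = 2, 4, and 8 is plotted in Figures 4.4-3 to 4.4-5 as a function of the normalized frequency $fT$, with the modulation index $h = 2 f_d T$ as a parameter.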

Notes:

(1). Only one-half of the bandwidth occupancy is shown in these graphs. The origin corresponds to the carrier, $f_c$.

(2). For $h < 1$, the graphs illustrate that the spectrum of CPFSK is relatively smooth and well confined.

(3). As h approaches unity, the spectra become very peaked, and for $h = 1$, when $|\psi| = 1$, we find that impulses occur at M frequencies.

(4). When $h > 1$, the spectrum becomes broader. Therefore, in communication systems where CPFSK is used, the modulation index is designed to conserve bandwidth, so that $h < 1$.

The special case of binary CPFSK with $h = \tfrac12$ (or $f_d = 1/4T$) and $\psi = 0$ corresponds to MSK. The spectrum of such a signal is

$$\Phi_{vv}(f) = \frac{16 A^2 T}{\pi^2} \left( \frac{\cos 2\pi f T}{1 - 16 f^2 T^2} \right)^2 \tag{4.4-53}$$

where the signal amplitude is $A = 1$ in (4.4-52). In contrast, the spectrum of OQPSK with a rectangular pulse $g(t)$ of duration T is

$$\Phi_{vv}(f) = A^2 T \left( \frac{\sin \pi f T}{\pi f T} \right)^2 \tag{4.4-54}$$

When we compare these spectral characteristics, we should normalize the frequency variable by the bit rate or the bit interval $T_b$. Since MSK is binary FSK, it follows that $T = T_b$ in (4.4-53). On the other hand, in OQPSK, $T = 2T_b$, so that (4.4-54) becomes

$$\Phi_{vv}(f) = 2 A^2 T_b \left( \frac{\sin 2\pi f T_b}{2\pi f T_b} \right)^2 \tag{4.4-55}$$

The spectra of the MSK and OQPSK signals are illustrated in Figure 4.4-6.

Observe that

(1). The main lobe of MSK is 50% wider than that of OQPSK. However, the side lobes in MSK fall off considerably faster.

(2). If we take the bandwidth W containing 99% of the total power, we find that $W = 1.2/T_b$ for MSK and $W \approx 8/T_b$ for OQPSK. Accordingly, MSK has a narrower spectral occupancy when viewed in terms of fractional out-of-band power above $fT_b = 1$. The fractional out-of-band power for OQPSK and MSK is shown in Figure 4.4-7.

(3). MSK is significantly more bandwidth-efficient than QPSK. This efficiency accounts for the popularity of MSK in many digital communication systems.

(4). Even greater bandwidth efficiency than MSK can be achieved by reducing the

modulation index. However, the FSK signals will no longer be orthogonal and there

will be an increase in the error probability.
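The main-lobe and side-lobe observations can be reproduced by evaluating (4.4-53) with $T = T_b$ and (4.4-55) directly. This is a minimal sketch with $A = T_b = 1$ as assumed defaults and hypothetical function names; the removable singularities are replaced by their limits.

```python
import math


def psd_msk(f, Tb=1.0, A=1.0):
    """MSK spectrum (4.4-53) with T = Tb."""
    x = f * Tb
    denominator = 1 - 16 * x * x
    if abs(denominator) < 1e-9:
        ratio = math.pi / 4                 # limit of cos(2*pi*x) / (1 - 16 x^2) at x = 1/4
    else:
        ratio = math.cos(2 * math.pi * x) / denominator
    return 16 * A * A * Tb / math.pi ** 2 * ratio ** 2


def psd_oqpsk(f, Tb=1.0, A=1.0):
    """OQPSK spectrum (4.4-55) for a rectangular pulse of duration 2*Tb."""
    x = 2 * math.pi * f * Tb
    sinc = 1.0 if x == 0 else math.sin(x) / x
    return 2 * A * A * Tb * sinc ** 2


print(psd_msk(0.75), psd_oqpsk(0.5))   # first spectral nulls
print(psd_msk(2.0), psd_oqpsk(2.25))   # side-lobe peaks further out
```

The first null of MSK is at $fT_b = 0.75$ and that of OQPSK at $fT_b = 0.5$, which is the 50% wider main lobe, while the MSK side lobes near $fT_b = 2$ are well below those of OQPSK.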

Spectral characteristics of CPM

(1). The bandwidth efficiency of CPM depends on the choice of the modulation index h, the

pulse shape g (t ) , and the number of signals M.

(2). In CPFSK, small indexes h result in CPM signals with relatively small bandwidth

occupancy, while large indexes h result in CPM signals with relatively large bandwidth

occupancy. This is also the case for the more general CPM signals.

(3). The use of smooth pulses such as raised cosine pulses of the form

$$g(t) = \begin{cases} \dfrac{1}{2LT} \left( 1 - \cos \dfrac{2\pi t}{LT} \right) & 0 \le t \le LT \\ 0 & \text{otherwise} \end{cases} \tag{4.4-56}$$

where $L = 1$ for full response and $L > 1$ for partial response, results in smaller bandwidth occupancy and, hence, greater bandwidth efficiency than the use of rectangular pulses.