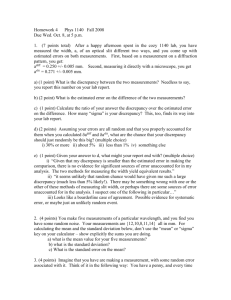

Lecture 4

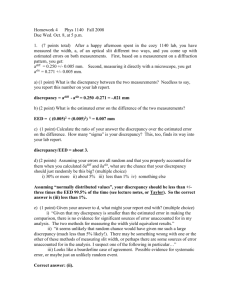

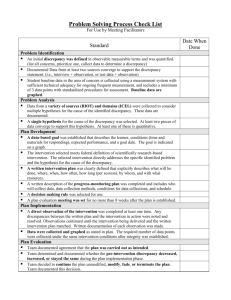

STATISTICAL METHODS FOR ASSESSING ERROR

Errors: small random, small systematic; large random, small systematic; small random, large systematic; large random, large systematic.

The Mobster wants to buy a new gun.

Errors. Assume no systematic error. Two cases: small random, large random.

You see one bullet hole (marked with an X), but you can’t see the target. If there is no systematic error, how well can you tell where the target is?

Think about the target in 1-d: bullets miss to the right or left. Make a bunch of measurements of $x$, each with some random error.

[Figure: a 1-d axis showing the target and the bullet holes $x_1$ through $x_5$.]

$$\langle x \rangle = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad \langle x \rangle = x_{\text{avg}} = x_{\text{mean}} = \bar{x}$$

If there is only random error, no systematic error, then in the limit of an infinite number of measurements, the average IS the “target”. Even if you only take five “shots”, the average is usually closer to the target than most of the typical individual shots.

Make 6 measurements ($N = 6$): $x_i = 2, -2, -1, 2, 5, 0$. What is $\langle x \rangle$? (What is the average? Or, what is the mean?)

$$\langle x \rangle = \frac{1}{N}\sum_{i=1}^{N} x_i = \frac{1}{6}(2 - 2 - 1 + 2 + 5 + 0) = \frac{6}{6} = 1$$

How much does point #3 differ from the average?

$$\Delta x_3 \equiv x_3 - \langle x \rangle$$

How much, on average, does each point differ from the average?

$$\frac{1}{N}\sum_{i=1}^{N} (x_i - \langle x \rangle) = 0$$

Always 0! Not so enlightening. Could use the average absolute value, but instead, use the root-mean-square difference:

$$\sigma_x \equiv \langle (\Delta x)^2 \rangle^{1/2} = \left[\frac{1}{N}\sum_{i=1}^{N} (x_i - \langle x \rangle)^2\right]^{1/2}$$

$\sigma_x$ is sometimes defined with an $N-1$ instead of an $N$. This is a little better, I think.

$$\sigma_x \equiv \langle (\Delta x)^2 \rangle^{1/2} = \left[\frac{1}{N-1}\sum_{i=1}^{N} (x_i - \langle x \rangle)^2\right]^{1/2}$$

$\sigma_x$, “sigma”, is the “standard deviation”. Roughly speaking, it tells you by about how much a typical measurement will deviate from the mean value. Really it is the square root of the average of the squared difference from the mean. $\sigma^2$ is called the “variance”.

$\sigma_x$ is a good value to use for $\delta x$. It is sometimes called “the standard error.”

By how much does a typical point differ from the average?
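(root-mean-square difference)

[Figure: the bullet holes $x_1$ through $x_5$ on the axis, with the range $\langle x \rangle \pm \sigma_x$ marked.]

If we measure just one point (call it $x_i$), the true value of $x$ is “probably” within about $1\sigma$ of $x_i$. Oftentimes by “$\delta x$” we mean “one standard deviation”, or “the standard error”, $\sigma_x$.

$$\sigma_x \equiv \langle (\Delta x)^2 \rangle^{1/2} = \left[\frac{1}{N-1}\sum_{i=1}^{N} (x_i - \langle x \rangle)^2\right]^{1/2}$$

Make 6 measurements ($N = 6$): $x_i = 2, -2, -1, 2, 5, 0$, so $\langle x \rangle = 1$. What is the standard deviation, $\sigma_x$?

$$\sigma_x = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N} (x_i - \langle x \rangle)^2}$$

$$= \sqrt{\frac{1}{N-1}\left[(2 - \langle x \rangle)^2 + (-2 - \langle x \rangle)^2 + (-1 - \langle x \rangle)^2 + (2 - \langle x \rangle)^2 + (5 - \langle x \rangle)^2 + (0 - \langle x \rangle)^2\right]}$$

$$= \sqrt{\frac{1}{N-1}\left[(1)^2 + (-3)^2 + (-2)^2 + (1)^2 + (4)^2 + (-1)^2\right]}$$

$$= \sqrt{\frac{1}{N-1}\left[1 + 9 + 4 + 1 + 16 + 1\right]} = \sqrt{\frac{32}{N-1}} = \sqrt{6.4} \approx 2.5$$

So for the 6 measurements $x_i = 2, -2, -1, 2, 5, 0$ we have $\langle x \rangle = 1$ and $\sigma_x = 2.5$.

If we pick any one point, for instance $x_3$, which was “-1”, and ask how far away it is likely to be from the true answer, the answer is “probably less than $\sigma_x$.” So if we make just the one measurement, we say $\delta x = \sigma_x$, or in this case, 2.5.

But we think that if we take the average of lots of points, that should have a smaller error. How much smaller? Let’s use the master rule. We can think of the average of six points as being a function of six points:

$$\langle x \rangle = f(x_1, x_2, x_3, x_4, x_5, x_6) = \frac{1}{N}(x_1 + x_2 + x_3 + x_4 + x_5 + x_6)$$

and the result we report is $f(x_1, \dots, x_6) \pm \delta f(x_1, \dots, x_6)$. What is $\delta f$?

$$(\delta f)^2 = \left(\frac{\partial f}{\partial x_1}\,\delta x_1\right)^2 + \left(\frac{\partial f}{\partial x_2}\,\delta x_2\right)^2 + \left(\frac{\partial f}{\partial x_3}\,\delta x_3\right)^2 + \dots$$

$$(\delta f)^2 = \left(\frac{1}{N}\,\delta x_1\right)^2 + \left(\frac{1}{N}\,\delta x_2\right)^2 + \left(\frac{1}{N}\,\delta x_3\right)^2 + \dots$$

The $\delta x_i$ are uncorrelated and all equal to $\sigma_x$, so

$$(\delta f)^2 = \left(\frac{1}{N}\,\sigma_x\right)^2 + \left(\frac{1}{N}\,\sigma_x\right)^2 + \dots = N\left(\frac{\sigma_x}{N}\right)^2 = \frac{\sigma_x^2}{N}$$

$$\delta f = \frac{\sigma_x}{\sqrt{N}}$$

We could write this as $\delta\langle x \rangle = \sigma_x/\sqrt{N}$, or $\sigma_{\langle x \rangle} = \sigma_x/\sqrt{N}$.

The “standard error on the mean” is smaller than the standard error on a single measurement by a factor of (number of points averaged together)$^{1/2}$.

The standard error on a single point is the standard deviation on a single measurement, $\sigma_x$. The standard error on the mean (if you are reporting an average value for your final result, this is the error on that average value) is $\sigma_x/\sqrt{N}$.

Say we make a measurement, and the true value of the thing we are measuring is “5”. But there is some purely random noise, so that on average, half the time we measure “0” and half the time we measure “10”.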
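Returning to the six-measurement example, the arithmetic for the mean, the standard deviation, and the standard error on the mean can be checked with a short script. This is only a minimal sketch: the function names are made up for illustration, and it assumes the $N-1$ definition of $\sigma_x$ and uncorrelated measurements, as in the derivation above.

```python
import math


def mean(values):
    """Average of the measurements: (1/N) * sum of x_i."""
    return sum(values) / len(values)


def standard_deviation(values):
    """Standard deviation using the N-1 definition (an assumption of this sketch)."""
    n = len(values)
    avg = mean(values)
    sum_of_squares = sum((x - avg) ** 2 for x in values)
    return math.sqrt(sum_of_squares / (n - 1))


def standard_error_on_mean(values):
    """Error on the average of N uncorrelated points: sigma_x / sqrt(N)."""
    return standard_deviation(values) / math.sqrt(len(values))


# The six measurements from the lecture example.
measurements = [2, -2, -1, 2, 5, 0]

print(mean(measurements))                              # 1.0
print(round(standard_deviation(measurements), 2))      # 2.53
print(round(standard_error_on_mean(measurements), 2))  # 1.03
```

The error on the six-point average, about 1.0, is smaller than the single-measurement error of about 2.5 by the factor $\sqrt{6}$.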
With measurements that come out “0” or “10”, weirdly, we never measure exactly “5”. But 5 is what we get on average. So $\langle x \rangle$ is 5, and the error on a single measurement is $\delta x = 5$. What if we average ten points? What will be the standard error on the average?

Histogram: $x = 5 \pm 5$; $\sigma_x = 5$.

Suppose we make ten measurements. We think the mean will be “5”, but if we make just ten measurements, we can’t be sure the mean will come out just right. What is the standard error on the mean?

Let’s do a mass experiment. Heads = “I measure 10”. Tails = “I measure 0”. Flip the coin ten times. Write down the answers (e.g. “THHHTTHTTT”). Calculate the average. For my example, the answer is “4”. (Hint: to do this fast, just add up the number of heads, multiply by ten, then divide by ten. Or, you could just add up the number of heads, and stop right there!)

Clicker questions 5.2a and 5.2b. (Don’t answer both questions!) What is the average of your ten measurements?

5.2a: a. 0, or 10; b. 1; c. 2; d. 3; e. 4

5.2b: a. 5; b. 6; c. 7; d. 8; e. 9

[Histogram: # of times each average value (0 through 10) came up.]

“Normal”, or “Gaussian” distribution.

If data points are “normally distributed”, one expects:

68%, about 2 out of 3, should be within $\pm 1\sigma$ of $\langle x \rangle$.

95%, about 19 out of 20, should be within $\pm 2\sigma$.

99.7%, about 299 out of 300, should be within $\pm 3\sigma$.

[Figure: the “Gaussian”, or “normal”, distribution. Expected # of times (probability) versus value, centered on $\langle x \rangle$, with the $\pm 1\sigma$, $\pm 2\sigma$, and $\pm 3\sigma$ ranges marked.]

When do we kill the courier?

Shipped quantity $= S \pm \delta S$

Received quantity $= R \pm \delta R$

Discrepancy $= R - S$

Estimated error on the difference: $\delta(R - S) = \left((\delta R)^2 + (\delta S)^2\right)^{1/2}$

If we are working with “one sigma” errors ($\delta x = 1 \cdot \sigma_x$), and assuming our courier is “honest”, and our measurements and our estimates of uncertainty are all accurate (i.e., no “systematic error” in the measurement, or in the courier), we expect:
One chance in 3 that the discrepancy will be more than $\delta(R - S)$ (a “one-sigma” discrepancy).

But only one chance in 20 that the discrepancy will be more than $2 \cdot \delta(R - S)$ (a “two-sigma” discrepancy).

Only one chance in 300 that the discrepancy will be more than $3 \cdot \delta(R - S)$ (a “three-sigma” discrepancy).
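The courier test can be sketched as a short calculation: express the discrepancy in units of its estimated error, then ask how often an honest courier would produce a discrepancy at least that large. This is a rough illustration only. The function names and the shipment numbers are hypothetical, and the probability assumes the errors are Gaussian and uncorrelated, with no systematic error.

```python
import math


def discrepancy_in_sigma(shipped, shipped_err, received, received_err):
    """Discrepancy R - S divided by its error sqrt(dR^2 + dS^2)."""
    diff_err = math.sqrt(received_err ** 2 + shipped_err ** 2)
    return (received - shipped) / diff_err


def chance_of_larger_discrepancy(n_sigma):
    """Two-sided Gaussian probability of a discrepancy beyond n_sigma (assumes normal errors)."""
    return math.erfc(abs(n_sigma) / math.sqrt(2))


# Hypothetical shipment: 100 +/- 3 shipped, 90 +/- 4 received.
n_sigma = discrepancy_in_sigma(100.0, 3.0, 90.0, 4.0)
print(n_sigma)  # -2.0

# Probabilities behind the "1 in 3", "1 in 20", "1 in 300" rules of thumb.
print(round(chance_of_larger_discrepancy(1), 4))  # 0.3173
print(round(chance_of_larger_discrepancy(2), 4))  # 0.0455
print(round(chance_of_larger_discrepancy(3), 4))  # 0.0027
```

In this made-up case the shortfall is a two-sigma discrepancy, which an honest courier would produce roughly one time in 20.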