Software Quality - Princeton High School


Software Quality

We want our software to be of high quality. But what does that mean? As is often the case, there are a variety of quality characteristics to consider.

| Quality Characteristic | Description |
|---|---|
| Correctness | The degree to which software adheres to its specific requirements. |
| Reliability | The frequency and criticality of software failure. |
| Robustness | The degree to which erroneous situations are handled gracefully. |
| Usability | The ease with which users can learn and execute tasks within the software. |
| Maintainability | The ease with which changes can be made to the software. |
| Reusability | The ease with which software components can be reused in the development of other software systems. |
| Portability | The ease with which software components can be used in multiple computer environments. |
| Efficiency | The degree to which the software fulfills its purpose without wasting resources. |

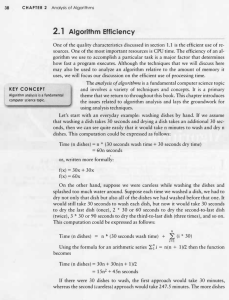

THE EFFICIENT USE OF RESOURCES

One of the most important resources is CPU time. The efficiency of the algorithm we use to accomplish a particular task is a major factor in how fast a program executes. Although we can also analyze an algorithm in terms of the amount of memory it uses, CPU time is usually the more interesting issue.

Let’s look at the issues related to algorithm analysis, and lay the groundwork for using analysis techniques, with an example: washing dishes by hand. If we assume that washing a dish takes 30 seconds and drying a dish takes an additional 30 seconds, then we can see quite easily that it would take n minutes to wash and dry n dishes. This computation could be expressed as follows:

$$
\text{Time}(n \text{ dishes}) = n\,(30 \text{ seconds wash time} + 30 \text{ seconds dry time}) = 60n \text{ seconds}
$$

On the other hand, suppose we were careless while washing the dishes and splashed too much water around. Suppose each time we washed a dish, we had to dry not only that dish but also all of the dishes we had washed before that one. It would still take 30 seconds to wash each dish, but now it would take 30 seconds to dry the last dish (once), 2 × 30 or 60 seconds to dry the second-to-last dish (twice), 3 × 30 or 90 seconds to dry the third-to-last dish (three times), and so on. This computation could be expressed as follows:

$$
\begin{aligned}
\text{Time}(n \text{ dishes}) &= n\,(30 \text{ seconds wash time}) + \sum_{i=1}^{n} (i \cdot 30) \\
&= 30n + \frac{30\,n(n+1)}{2} \\
&= 15n^2 + 45n \text{ seconds}
\end{aligned}
$$

If there were 30 dishes to wash, the first approach would take 30 minutes, whereas the second (careless) approach would take 247.5 minutes. The more dishes we wash, the worse that discrepancy becomes.
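The two dishwashing approaches can be checked with a small program. This is a sketch under our own assumptions: the class `Dishwashing` and its method names are hypothetical, and the careless approach is simulated by re-drying dishes 1 through k after washing dish k, then compared against the closed form 15n² + 45n.

```java
// Hypothetical helper class; names are illustrative, not from the original text.
class Dishwashing {
    static final long WASH_SECONDS = 30;
    static final long DRY_SECONDS = 30;

    // Careful approach: each dish is washed once and dried once (60n seconds).
    static long carefulSeconds(long n) {
        return n * (WASH_SECONDS + DRY_SECONDS);
    }

    // Careless approach, simulated: after washing dish k,
    // dishes 1..k must all be dried (again).
    static long carelessSecondsSimulated(long n) {
        long total = 0;
        for (long k = 1; k <= n; k++) {
            total += WASH_SECONDS;     // wash dish k once
            total += k * DRY_SECONDS;  // dry dishes 1..k
        }
        return total;
    }

    // Careless approach, closed form from the derivation: 15n^2 + 45n.
    static long carelessSecondsClosedForm(long n) {
        return 15 * n * n + 45 * n;
    }

    public static void main(String[] args) {
        long n = 30;
        System.out.println("careful:  " + carefulSeconds(n) / 60.0 + " minutes");
        System.out.println("careless: " + carelessSecondsSimulated(n) / 60.0 + " minutes");
        System.out.println("closed form agrees: "
            + (carelessSecondsSimulated(n) == carelessSecondsClosedForm(n)));
    }
}
```

For 30 dishes this should print 30.0 minutes for the careful approach and 247.5 minutes for the careless one, matching the figures above.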

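One might assume that, with the advances in the speed of processors and the availability of large amounts of inexpensive memory, algorithm analysis would no longer be necessary. However, nothing could be farther from the truth. Processor speed and memory cannot make up for the differences in efficiency of algorithms: a faster processor speeds up every step by the same constant factor, but it does not change how quickly the number of steps grows as the problem gets larger.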
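To see why a faster processor does not rescue an inefficient algorithm, the sketch below treats the CPU as able to perform a fixed budget of basic steps in the time available and asks how large a problem fits. The step budgets and the class name `SpeedupDemo` are hypothetical assumptions chosen for illustration; a ten-times-faster CPU is modeled as a ten-times-larger budget.

```java
import java.util.function.LongUnaryOperator;

// Hypothetical illustration: find the largest problem size n whose
// step count t(n) fits within a fixed budget of basic steps.
class SpeedupDemo {
    // Largest n >= 0 with t(n) <= budget, found by counting upward.
    // Assumes t is non-decreasing and t(0) <= budget.
    static long maxProblemSize(LongUnaryOperator t, long budget) {
        long n = 0;
        while (t.applyAsLong(n + 1) <= budget) {
            n++;
        }
        return n;
    }

    static void report(String name, LongUnaryOperator t, long budget, long fasterBudget) {
        System.out.println(name + ": largest n goes from "
            + maxProblemSize(t, budget) + " to " + maxProblemSize(t, fasterBudget));
    }

    public static void main(String[] args) {
        long budget = 1_000_000L;        // steps the original CPU can perform
        long fasterBudget = 10 * budget; // steps a ten-times-faster CPU can perform

        LongUnaryOperator linear = n -> n;
        LongUnaryOperator quadratic = n -> n * n;
        LongUnaryOperator exponential = n -> 1L << n; // 2^n; only valid for n < 63

        report("n", linear, budget, fasterBudget);
        report("n^2", quadratic, budget, fasterBudget);
        report("2^n", exponential, budget, fasterBudget);
    }
}
```

With these budgets the solvable problem size grows tenfold for the linear algorithm, from 1000 to 3162 for the quadratic one, and only from 19 to 23 for the exponential one.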

For every algorithm we want to analyze, we need to define the size of the problem. For our dishwashing example, the size of the problem is the number of dishes to be washed and dried. We also must determine the value that represents efficient use of time or space. For time considerations, we often pick an appropriate processing step that we’d like to minimize, such as our goal to minimize the number of times a dish has to be washed and dried. The overall amount of time spent at the task is directly related to how many times we have to perform that task. The algorithm’s efficiency can be defined in terms of the problem size and the processing step.

Consider an algorithm that sorts a list of numbers into increasing order. One natural way to express the size of the problem would be the number of values to be sorted.

The processing step we are trying to optimize could be expressed as the number of comparisons we have to make for the algorithm to put the values in order. The more comparisons we make, the more CPU time is used.
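As a concrete illustration of counting a processing step, here is a selection sort instrumented to count comparisons. Selection sort is our own choice of example (the text does not name a particular sorting algorithm), and the class and method names are hypothetical. For n values it always performs n(n − 1)/2 comparisons.

```java
import java.util.Arrays;

// Illustrative sketch: selection sort that counts element comparisons.
// The class and method names are hypothetical.
class ComparisonCounter {
    // Sorts values into increasing order in place and returns
    // the number of element-to-element comparisons performed.
    static long selectionSortCountingComparisons(int[] values) {
        long comparisons = 0;
        for (int i = 0; i < values.length - 1; i++) {
            int min = i;
            for (int j = i + 1; j < values.length; j++) {
                comparisons++;                  // one comparison per inner iteration
                if (values[j] < values[min]) {
                    min = j;
                }
            }
            int tmp = values[i];                // move the smallest remaining value into place
            values[i] = values[min];
            values[min] = tmp;
        }
        return comparisons;
    }

    public static void main(String[] args) {
        int[] data = {5, 3, 9, 1, 7};
        long c = selectionSortCountingComparisons(data);
        System.out.println(Arrays.toString(data) + " sorted with " + c + " comparisons");
    }
}
```

Running `main` prints `[1, 3, 5, 7, 9] sorted with 10 comparisons`, since 5 · 4 / 2 = 10.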

A growth function shows the relationship between the size of the problem (n) and the value we hope to optimize. This function represents the time complexity or space complexity of the algorithm.

The growth function for our second dishwashing algorithm is

$$
t(n) = 15n^2 + 45n
$$

However, it is not typically necessary to know the exact growth function for an algorithm. Instead, we are mainly interested in the asymptotic complexity of an algorithm. That is, we want to focus on the general nature of the function as n increases. This characteristic is based on the dominant term of the expression, the term that increases most quickly as n increases. As n gets very large, the value of the dishwashing growth function is dominated by its n² term, because n² grows much faster than n. The constants and the secondary term quickly become irrelevant as n increases.
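A quick numerical check of the dominant-term idea: the sketch below (hypothetical class name) divides the dishwashing growth function by its dominant term 15n². Algebraically the ratio is 1 + 3/n, so the secondary term's share shrinks toward zero as n grows.

```java
// Sketch: compare t(n) = 15n^2 + 45n with its dominant term 15n^2.
// The class name is illustrative only.
class DominantTerm {
    static double t(double n) {
        return 15 * n * n + 45 * n;
    }

    // Equals 1 + 3/n, which approaches 1 as n grows.
    static double ratioToDominantTerm(double n) {
        return t(n) / (15 * n * n);
    }

    public static void main(String[] args) {
        for (int n = 10; n <= 1_000_000; n *= 10) {
            System.out.printf("n = %,9d   t(n) / 15n^2 = %.6f%n", n, ratioToDominantTerm(n));
        }
    }
}
```

At n = 10 the ratio is 1.3; by n = 1,000,000 it is 1.000003, so the 45n term contributes almost nothing.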

The asymptotic complexity is called the order of the algorithm. Thus, our dishwashing algorithm is said to have order n² time complexity, written O(n²). This is referred to as Big-O or Big-Oh notation.
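For reference, the standard formal definition behind the notation (not stated in the text above) can be applied to the dishwashing growth function. The constants c = 60 and n₀ = 1 are one valid choice among many.

$$
t(n) \text{ is } O(g(n)) \iff \exists\, c > 0,\ n_0 \ge 1 \text{ such that } t(n) \le c\, g(n) \text{ for all } n \ge n_0
$$

$$
15n^2 + 45n \;\le\; 15n^2 + 45n^2 \;=\; 60n^2 \quad \text{for all } n \ge 1
$$

So with c = 60 and n₀ = 1, the dishwashing growth function t(n) = 15n² + 45n is O(n²).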

| Growth Function | Order |
|---|---|
| t(n) = 17 | O(1) |
| t(n) = 20n - 5 | O(n) |
| t(n) = 12n log n + 100n | O(n log n) |
| t(n) = 3n² + 5n - 2 | O(n²) |
| t(n) = 2ⁿ + 18n² + 3n | O(2ⁿ) |
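To connect a few rows of the table to actual code, the sketch below shows three hypothetical loop shapes with a step counter: a fixed amount of work (17 steps, echoing t(n) = 17), a single pass over n items, and a nested pass over n × n items. The class and method names are our own; real code would do useful work where `steps++` appears.

```java
// Illustrative sketch: loop shapes whose step counts are O(1), O(n), and O(n^2).
// Names are hypothetical; steps++ stands in for one constant-time operation.
class OrderExamples {
    // O(1): 17 steps no matter how large n is.
    static long constantSteps(long n) {
        long steps = 0;
        for (int k = 0; k < 17; k++) {
            steps++;
        }
        return steps;
    }

    // O(n): one pass over n items.
    static long linearSteps(long n) {
        long steps = 0;
        for (long i = 0; i < n; i++) {
            steps++;
        }
        return steps;
    }

    // O(n^2): for each of n items, another full pass over n items.
    static long quadraticSteps(long n) {
        long steps = 0;
        for (long i = 0; i < n; i++) {
            for (long j = 0; j < n; j++) {
                steps++;
            }
        }
        return steps;
    }

    public static void main(String[] args) {
        for (long n = 10; n <= 1000; n *= 10) {
            System.out.println("n = " + n + ": O(1) -> " + constantSteps(n)
                + ", O(n) -> " + linearSteps(n)
                + ", O(n^2) -> " + quadraticSteps(n));
        }
    }
}
```

Multiplying n by 10 leaves the first count unchanged, multiplies the second by 10, and multiplies the third by 100.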

Questions

1. What is the difference between the growth function of an algorithm and the order of that algorithm?

2. Why does speeding up the CPU not necessarily speed up the process by the same amount?

Exercises

1. What is the order of the following growth functions?

a. 10n² + 100n + 1000
b. 10n³ - 7
c. 2ⁿ + 100n³
d. n² log n

2. Arrange the growth functions of the previous exercise in ascending order of efficiency for n = 10 and again for n = 1,000,000.

3. Write the code necessary to find the largest element in an unsorted array of integers. What is the time complexity of this algorithm?

4. Determine the growth function and order of the following code fragment:

    for (int count = 0; count < n; count++)
    {
        for (int count2 = 1; count2 < n; count2 = count2 * 2)
        {
            /* some sequence of O(1) steps */
        }
    }