Comparing Forecasts for House Prices at the

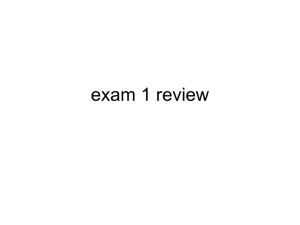

EVALUATING HOUSE PRICE FORECASTS

John M. Clapp and Carmelo Giaccotto
University of Connecticut
School of Business Administration
368 Fairfield Road, U-41RE
Storrs, CT 06269-2041
Phone (860) 486-5057
Fax (860) 486-0349
E-Mail JOHNC@SBA.UCONN.EDU
E-Mail CARMELO@SBA.UCONN.EDU

August 24, 2000

We thank the Center for Real Estate and Urban Economic Studies (CREUES), University of Connecticut for financial support, and Professor Dean Gatzlaff (Florida State University) for providing the data.

ABSTRACT

We develop a methodology for forecasting house prices at the individual property level; previous literature has focused on testing market efficiency. We model the rate of growth in local prices as an autoregressive process and use the Kalman Filter for estimation and prediction. A battery of tests is employed to compare predicted prices for two common price index models: repeat sales and hedonic. The model is fitted with housing transactions from Dade County, Florida, from 1976 through the second quarter of 1997. Both sets of prediction errors (PEs) show some departure from the desirable properties of one-step-ahead forecasts. Also, both show some informational inefficiency, but the hedonic is more efficient than the repeat. This points the way towards using local market information to improve forecasts. The hedonic method dominates the repeat over an important range of PEs; thus, a case can be made that many risk-averse agents would prefer a forecast based on the hedonic method.

INTRODUCTION

The literature on housing market efficiency has demonstrated that house prices exhibit some inertia over short-to-intermediate time frames. For example, Case and Shiller (1989) found that, contrary to weak-form efficiency, between 25% and 50% of a (real) price index change in one year persisted into the following year.
Kuo (1996) improved the Case-Shiller methodology for testing weak-form efficiency by jointly estimating the price index and the serial correlation parameters within the context of the repeat sales model. Kuo assumed a second-order autoregressive process to model the rate of change in the price index. Kuo's research implies some predictability up to four quarters ahead.

Interestingly, the semi-strong form of the efficient market hypothesis has not fared well either: Case and Shiller (1990), Clapp and Giaccotto (1994), and others used a number of macro and local economic variables to forecast prices and excess returns to housing for periods up to one year ahead. These variables include local unemployment, expected inflation, mortgage payments, income and population age. This evidence shows that house prices do not quickly impound publicly available information. Moreover, the research of Mankiw and Weil (1989) suggests that prices may be predicted up to twenty years into the future as a result of current and predictable changes in adult population. However, their hypothesis remains controversial; see DiPasquale and Wheaton (1994) and Hendershott (1991).

These papers are designed to test various kinds of market efficiency, not to examine forecasting per se. The models presented might be used for forecasting, but they have not been examined from the perspective of the considerable literature on the econometrics of forecasting.

This paper examines the forecastability of house prices one quarter into the future; it is the first to explicitly address house price forecasting for its own sake. We propose to examine forecasting accuracy at the individual house price level rather than at the metropolitan level. Thus, we use the characteristics of each house, including any previous sales price, as the raw information; the forecasts resulting from fitting this information to our time series model are compared to actual out-of-sample sales prices.
Accurate one-quarter-ahead forecasts at the individual property level would be useful to home buyers and sellers, developers, mortgage lenders (especially if dealing with construction loans), mortgage insurers, and secondary market investors. Macroeconomic forecasters could use this information to improve local, regional and national economic forecasts. Also, one-quarter forecasts could be a building block for methods that deal with longer time horizons.

Our forecasts are based on the inertia that has been documented by previous studies of housing markets. We hypothesize that the rate of growth in house prices follows a pth-order autoregressive process; i.e., we start with a basic time series approach. Two models are used to implement time series estimation: one following a hedonic price index framework, and the other following the repeat sales approach. These models allow for random variation in the coefficients on previous prices and on hedonic characteristics.

Our autoregressive process is a simple generalization of some of the previous research. For example, Shiller and Weiss ("Home Equity Insurance," 1994) fit an AR(1) process to price changes in the Los Angeles area. Kuo (1996) used an AR(2) with a unit root model to test for market efficiency. Hill, Sirmans and Knight (1999) and Englund, Gordon and Quigley (1999) tested the hypothesis of a unit root in the price level.

This paper focuses on a variety of empirical tests of the accuracy of these forecasts.¹

¹ This is an essential part of our strategy of evaluating the properties of the forecasts themselves; we do not intend to test market efficiency.

The criteria for accurate one-step-ahead forecasts include zero-mean prediction errors (PEs) and normally distributed PEs (i.e., well-behaved skewness and kurtosis).² The mean squared prediction error should be low relative to total variation in the prices to be predicted.
The forecasts should be informationally efficient in the sense that PEs are unrelated to predicted price; moreover, PEs should be unrelated to local-market information (e.g., age of the house) available at the time the prediction was made. This paper compares the two methods (repeat sales and hedonic models) using these criteria and several statistical tests.

² See Diebold and Lopez (1996), and Gershenfeld and Weigend (1994).

The remainder of this paper is organized as follows. In the next section we model the local price level as a stochastic process and show how the Kalman Filter is a convenient tool for estimation and prediction of individual house prices. In section 2 we outline a number of preferred properties for forecast errors and discuss the informational efficiency of forecasts using Theil's (1966) decomposition. Next, in section 3, we use a random sample of properties that sold from 1976 through the fourth quarter of 1995 in Dade County, Florida, to estimate the hedonic and repeat sales models; we then compute out-of-sample forecasts for a group of properties not used in estimation and analyze the prediction errors using the statistical methodologies outlined earlier. Section 4 concludes the paper.

1. METHODOLOGY

Suppose that we observe a cross-sectional time series sample of house prices plus a number of house characteristics. If the street address is available, then one may construct a repeat sales index à la Bailey, Muth and Nourse (1963) or Case and Shiller (1989); if matching of property sales is not possible, then a variation of Rosen's (1974) hedonic model may be estimated. In each of these cases the price index is treated as a fixed quantity to be estimated as the coefficients in a linear regression model. Hence, it is not possible to link the forecast of future individual sales prices to past and present values of the overall price level.
Standard methods for estimating house price indices ignore the evidence on forecastability of house prices reviewed in the preceding section. We treat the local (e.g., city-wide) price level as a time series process. We forecast the estimated index one period ahead and also obtain forecasts of individual house prices: the AR process is estimated simultaneously with forecasting at the property level.

House prices are likely to share time series characteristics with the consumer price index; in fact, housing constitutes about 40% of the CPI. This suggests that the house price level may contain a unit root and that the first differences display serial correlation, perhaps over one or two years. Hence, we propose an autoregressive model with a unit root in the level of the index.

Define $y_{i,t}$ as the (log) price of the $i$th house sold at time $t$. This price may be decomposed into three parts: (1) the city-wide price level, (2) the value of a set of locational and structural characteristics, and (3) a mean-zero house-specific error term. Case and Shiller (1987), Hill, Sirmans and Knight (1999) and Englund, Gordon and Quigley (1999) assume the second term follows a Gaussian random walk, but treat the city-wide price level as a deterministic constant. This specification is equivalent to a heteroscedastic model where the variance is proportional to time between sales.

Our forecasting model starts with the same decomposition of price; however, the emphasis is on the city-wide price level rather than the idiosyncratic noise term. The rate of growth in the price level is treated as a stochastic process with a large predictable component; once the model is estimated, individual house price forecasts may be obtained from the city-wide index. Thus, define $C_t$ to be the (log) level of the index at time $t$; the associated first difference (rate of growth) is $\Delta C_t = C_t - C_{t-1}$.
We hypothesize that this difference follows an autoregressive process of order $p$:

$$\Delta C_t = \alpha + \varphi_1 \Delta C_{t-1} + \dots + \varphi_p \Delta C_{t-p} + \varepsilon_t \tag{1.1}$$

where $t = 0, 1, \ldots, T$ and $T$ represents the total number of time periods. The innovation term $\varepsilon_t$ is assumed to behave like white noise with variance $\sigma^2 q_0$. The systematic part of this model, $\alpha + \varphi_1 \Delta C_{t-1} + \dots + \varphi_p \Delta C_{t-p}$, represents a sustainable trend, whereas the error term $\varepsilon_t$ has no lasting effect on prices. The autoregressive coefficient $\varphi_j$ represents the percentage of the growth rate in period $t-j$ that carries over to time $t$. This model is similar to the one proposed by Kuo (1996).

1.1 AUTOREGRESSIVE HEDONIC REGRESSION MODEL

In this section we first sketch how the AR($p$) process may be embedded in Rosen's hedonic model; second, we discuss estimation and forecasting for individual property values. Suppose that at each point in time $t$ we observe a sample of $n_t$ transactions. The hedonic model for this sample of properties may be written as:

$$Y_t = Z_t \beta_t + e_t \tag{1.2}$$

where $Y_t$ is an $n_t \times 1$ vector of (log) individual sales prices and $Z_t$ is the corresponding $n_t \times (T+2+K)$ matrix of housing attributes, structured as follows: the first $T+2$ columns are time dummies whose elements all equal zero, except for the $t$th column, which consists of 1s;³ the remaining $K$ columns represent observations on the $K$ hedonic characteristics. The residual error $e_t$ is assumed to be normal with zero mean and $n_t \times n_t$ variance-covariance matrix $\sigma^2 R$. If no heteroscedasticity is present, then $R$ may be set equal to the identity matrix. The column vector of stochastic hedonic coefficients $\beta_t$ is governed by the Markov process:

$$\beta_{t+1} = \Phi_{t+1} \beta_t + W_{t+1} \tag{1.3}$$

The vector error term $W_{t+1}$ is assumed to be normal with mean zero and variance-covariance matrix $\sigma^2 Q_{t+1}$. Furthermore, we assume independence between $W_{t+1}$ and the measurement error $e_s$ for all $t$ and $s$. The intercept term in the conditional estimate of beta follows an AR process (in levels).
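
As an illustration only, the Python sketch below shows how an AR(p) process for the growth rate, as in Equation (1.1), implies an AR(p+1) recursion for the (log) index level, which is the form carried in the state vector. The function names and all numerical values are hypothetical; they are not estimates from this paper.

```python
def growth_to_level_coeffs(phi):
    """Convert AR(p) coefficients on growth rates into AR(p+1) coefficients on levels.

    If dC[t+1] = alpha + phi[0]*dC[t] + ... + phi[p-1]*dC[t-p+1]
    and dC[t] = C[t] - C[t-1], then C[t+1] is a linear function of
    C[t], C[t-1], ..., C[t-p] with the weights returned here.
    """
    p = len(phi)
    coeffs = [1.0 + phi[0]]                               # weight on C[t]
    coeffs += [phi[j] - phi[j - 1] for j in range(1, p)]  # weights on C[t-1] .. C[t-p+1]
    coeffs.append(-phi[p - 1])                            # weight on C[t-p]
    return coeffs


def forecast_via_growth(alpha, phi, levels):
    """One-step-ahead level forecast computed from the growth-rate form."""
    growth = [b - a for a, b in zip(levels[:-1], levels[1:])]  # oldest first
    recent_first = growth[::-1][:len(phi)]
    next_growth = alpha + sum(f * g for f, g in zip(phi, recent_first))
    return levels[-1] + next_growth


def forecast_via_levels(alpha, phi, levels):
    """The same forecast computed directly from the implied level recursion."""
    coeffs = growth_to_level_coeffs(phi)
    recent_first = levels[::-1][:len(coeffs)]
    return alpha + sum(c * x for c, x in zip(coeffs, recent_first))


# Hypothetical AR(2) parameters and a short log-index history (oldest first).
alpha, phi = 0.01, [0.3, 0.2]
log_index = [4.60, 4.62, 4.65]

forecast_a = forecast_via_growth(alpha, phi, log_index)
forecast_b = forecast_via_levels(alpha, phi, log_index)
print(forecast_a, forecast_b)
```

Both routes give the same one-step-ahead level, which is why the growth-rate model can be written in levels inside the transition equation.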
For a second-order process:

$$C_{t+1} = \alpha + (1 + \varphi_1) C_t + (\varphi_2 - \varphi_1) C_{t-1} - \varphi_2 C_{t-2}$$

which is embedded in equation (1.3); i.e., the vector $\beta$ includes the $C$'s and the matrix $\Phi$ includes $\alpha$, $\varphi_1$ and $\varphi_2$. Details are in the Appendix.

³ The two "extra" columns are for a constant term and $t=0$.

We use the Kalman Filter (KF) to estimate this model. This method is especially useful if one is interested in "on-line" predictions of individual house prices. In the language of the KF, (1.2) is known as the "measurement" equation, and $\beta_t$ is the unobserved vector of state variables. The "transition" equation (1.3) specifies the probability model for the dynamic behavior of the city-wide index plus the hedonic coefficients. Engle and Watson (1987) note that the transition equation describes the long-run behavior of the state variables, whereas the data determine short-run behavior; this is captured by the measurement equation. Given a time series sample from 1 to $t$, the KF provides the optimal forecast of $\beta_{t+1}$ and, in turn, of individual house prices in the sample.

The price forecast of each house sold at time $t+1$ may be obtained as follows. Let $\hat\beta_{t|t}$ be the minimum mean square estimator of $\beta_t$, given information up to and including time $t$. Use Equation (1.3) to predict $\beta_{t+1}$, given information up to time $t$, as

$$\hat\beta_{t+1|t} = \Phi_{t+1} \hat\beta_{t|t} \tag{1.4}$$

Then the vector of predicted individual house prices is

$$\hat Y_{t+1} = Z_{t+1} \hat\beta_{t+1|t} \tag{1.5}$$

The vector of one-step-ahead prediction errors is given by $\hat e_{t+1|t} = Y_{t+1} - Z_{t+1} \hat\beta_{t+1|t}$; the covariance matrix of these errors is $\sigma^2 H_{t+1|t}$, with $H_{t+1|t} = Z_{t+1} V_{t+1|t} Z_{t+1}' + R_{t+1}$, where $V_{t+1|t}$ is the (scaled) covariance matrix of the state prediction $\hat\beta_{t+1|t}$. The unknown parameters $\alpha, \varphi_1, \varphi_2, \ldots, \varphi_p, \sigma^2, q_0, q_1, \ldots, q_K$ may be obtained by maximizing the (log) likelihood function.

1.2 AUTOREGRESSIVE REPEAT SALES MODEL

The simplest form of the repeat sales model (Bailey, Muth and Nourse, 1963) requires only the sales prices and dates at which a property was bought and then sold.
The methodology of the previous section may be applied here with just a few modifications. The vector $Y_t$ now represents rates of growth: for the $i$th property, with second sale at time $t_2$ and first sale at $t_1$, the $i$th element of $Y_t$ is defined as the difference in log prices between the first and second sale; suppose that there are $n_t$ such properties. The matrix $Z_t$ now has dimension $n_t \times (t+2)$. The $i$th row of this matrix will have $-1$ in column $s$ (the period of the first sale), $+1$ in column $t$ (the period of the second sale) and zero everywhere else. The corresponding beta vector has a transition matrix defined in a similar fashion to Equation (1.3).

Once the price index is estimated by maximum likelihood, forecasts of individual house prices may be obtained as follows. Consider a sample of houses with first sale at some earlier period $t_i$. Let $y^{(1)}_{i,t_i}$ ($y^{(2)}_{i,t+1}$) be the (log) first (second) sale price; then the predicted second sales price is:

$$\hat y^{(2)}_{i,t+1} = \hat C_{t+1|t} - \hat C_{t_i|t} + y^{(1)}_{i,t_i} \tag{1.7}$$

where $\hat C_{t_i|t}$ stands for the estimated (log) index level at the time of the first sale for the $i$th house. This is a prediction as if the second sale takes place at $t+1$, one time period (quarter) in the future.

2. EMPIRICAL METHODS

Empirical methods are designed to evaluate the out-of-sample forecasting accuracy for a cross-section of properties at a given point in time. These tests are conducted for a short time horizon. Thus, the tests focus on the accuracy of forecasts at the individual house level rather than on time series properties of the forecasts.⁴

2.1 PREFERRED PROPERTIES OF FORECAST ERRORS

We propose that forecast errors should possess a set of preferred distributional properties. At the very least, one would expect unbiased forecasts on average. Moreover, although a particular methodology might behave well in the middle of the distribution, it may display a tendency to overpredict (left skewness) or underpredict (right skewness), or make gross errors on both sides of the distribution (kurtosis).
Homogeneity of variance is another preferred property for prediction errors. In sum, the preferred distributional properties are zero mean, constant variance across properties, and no skewness or excess kurtosis; that is, forecast errors (PEs) should be approximately normally distributed and fairly homogeneous. The Gaussian assumption about PEs is not based on the theory of rational expectations. But it "provides a simple form that captures some desirable features of error weighting" (Gershenfeld and Weigend, 1994, p. 64). In practice, the normality of prediction errors for a given cross-section of properties may follow for this reason: if the prediction errors are caused by many small independent variables, then the central limit theorem implies that they will be asymptotically distributed according to the normal distribution. Thus, the normal distribution is one indication that large systematic components of the forecast have been included.⁵

⁴ As pointed out by Diebold and Lopez (1996), there is no reason to expect serially correlated forecasts when the time horizon of the forecast is one period into the future; that observation, plus the short forecasting period (6 quarters), obviates the need for serial correlation tests.

These criteria are important because analysis of forecast errors (in terms of bias, mean square errors and higher moments) can be performed more easily with parametric tests based on normality. If, on the other hand, prediction errors are non-normal, then one must rely on (often) less powerful nonparametric tests.

To check whether a set of forecast errors displays these preferred properties, we compute the first four moments of the empirical distribution. In large samples, the third moment (skewness) has a normal distribution with zero mean and standard deviation of $\sqrt{6/n_t}$; similarly, the fourth moment (kurtosis) is normal with mean of 3 and standard deviation of $\sqrt{24/n_t}$.
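
A minimal Python sketch of this moment check follows. It assumes the prediction errors for one quarter are held in a plain list and uses the large-sample standard deviations quoted above; the function name and the sample values are hypothetical.

```python
import math


def moment_diagnostics(errors):
    """Return the first four sample moments of a list of prediction errors,
    plus large-sample z-scores for skewness and kurtosis."""
    n = len(errors)
    mean = sum(errors) / n
    m2 = sum((e - mean) ** 2 for e in errors) / n  # second central moment
    m3 = sum((e - mean) ** 3 for e in errors) / n  # third central moment
    m4 = sum((e - mean) ** 4 for e in errors) / n  # fourth central moment
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2                        # equals 3 for a normal variable
    return {
        "mean": mean,
        "variance": m2,
        "skewness": skewness,
        "kurtosis": kurtosis,
        "z_skewness": skewness / math.sqrt(6.0 / n),
        "z_kurtosis": (kurtosis - 3.0) / math.sqrt(24.0 / n),
    }


# Hypothetical prediction errors (in log points) for a single quarter.
sample_errors = [-0.20, -0.10, 0.0, 0.10, 0.20]
diagnostics = moment_diagnostics(sample_errors)
print(diagnostics)
```

With only five observations the large-sample approximation is of course unreliable; the example is meant to show the arithmetic, not a realistic sample size.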
We also use a non-parametric test for normality of the PEs: the Shapiro-Wilk test (W). The W-statistic is a good omnibus test based on ordered errors and their variance. Details on this test may be found in Conover (1980, pp. 364-367).

Since the PEs may be non-normal in many time periods, we use Wilcoxon's signed rank test of the hypothesis that the mean prediction error is equal to zero, i.e., that price forecasts are unbiased. Let $T^+$ be the sum of the positive ranks; then by the central limit theorem $T^+$ is approximately normal with mean $n_t(n_t+1)/4$ and variance $n_t(n_t+1)(2n_t+1)/24$ (see Gibbons and Chakraborti, 1992).

⁵ We use several tests that do not require normal PEs. So normality is a preferred condition, not a required condition.

It is very difficult to check all possible ways that the variance of the PEs may vary across individual properties; for example, in the hedonic model the variance may be related to size of the property, to building age or to a latent variable. A solution to the problem is to use a variable which encompasses as many of the hedonic characteristics as possible; a good candidate would be the expected house value $E(y_{i,t+1})$. Hence, to check for constant variance across properties, we use the following regression:

$$\hat e_{i,t+1}^{\,2} = \gamma_0 + \gamma_1 E(y_{i,t+1}) + \gamma_2 \left[E(y_{i,t+1})\right]^2 + \nu_{i,t+1} \tag{2.1}$$

where $\hat e_{i,t}$ is the prediction error and $y_{i,t}$ is the log of house price for property $i$ at time $t$. If the variance is constant, we should find zero slopes; positive slopes imply greater forecast errors for more valuable homes. We will use the estimated predicted value $\hat y_{i,t+1}$ for $E(y_{i,t+1})$.

2.2 RELATIVE EFFICIENCY OF HEDONIC VS REPEAT FORECASTS

A statistically efficient forecast has, by definition, the lowest possible variance. Unfortunately, in practice this lower bound is unknown, so we cannot know whether a particular forecasting model is efficient in a statistical sense.
Since we are dealing with two competing methodologies, we will focus on whether forecasts based on the hedonic model are more or less efficient than repeat sales forecasts. The degree of efficiency can be judged from Theil's $U^2$ statistic, which is based on the Mean Squared Prediction Error (MSPE). Define the MSPE as

$$\mathrm{MSPE}_t = \frac{1}{n_t} \sum_{i=1}^{n_t} (y_{i,t} - \hat y_{i,t})^2 = \frac{1}{n_t} \sum_{i=1}^{n_t} \hat e_{i,t}^{\,2}$$

Then the $U^2$ statistic, for each period $t$, may be defined as:

$$U_t^2 = 1.0 - \frac{\sum_{i=1}^{n_t} (y_{i,t} - \hat y_{i,t})^2}{\sum_{i=1}^{n_t} (y_{i,t} - \bar y_t)^2} \tag{2.2}$$

In a similar fashion to the coefficient of determination, $R^2$, from linear regression, Theil's $U^2$ statistic may be used to measure "goodness of fit" between the actual and predicted house prices. Values of $U^2$ close to 1.0 imply highly efficient forecasts in the sense that the variance of the forecast error is small relative to the variance of actual prices. This statistic will be useful in ranking forecast performance of the repeat sales model versus the hedonic model.

To actually test the hypothesis that one methodology is more or less efficient than the other we use a statistic first proposed by Granger and Newbold (1986, ch. 9). Consider the regression

$$\hat e^{Rpt}_{i,t} - \hat e^{Hed}_{i,t} = \lambda_0 + \lambda_1 \left(\hat e^{Rpt}_{i,t} + \hat e^{Hed}_{i,t}\right) + \eta_{i,t} \tag{2.3}$$

The ordinary least squares estimator of $\lambda_1$ is equivalent to

$$\hat\lambda_1 = \frac{\mathrm{Var}(\hat e^{Rpt}_{i,t}) - \mathrm{Var}(\hat e^{Hed}_{i,t})}{\mathrm{Var}(\hat e^{Rpt}_{i,t} + \hat e^{Hed}_{i,t})} \tag{2.4}$$

Large and positive values of $\hat\lambda_1$ are consistent with the hypothesis that the repeat model is less accurate than the hedonic method. The test statistic for this hypothesis is:

$$\tau = \frac{r \sqrt{n_t - 2}}{\sqrt{1 - r^2}} \tag{2.5}$$

where $r$ is the correlation coefficient between the sum and difference of prediction errors. The statistic $\tau$ will follow a t-distribution with $n_t - 2$ degrees of freedom. Lehmann (1986, ch. 5) shows this test to be uniformly most powerful and unbiased even though the two prediction error series may be correlated with each other.
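
The two calculations in this section can be sketched in Python as follows. This is an illustration under simple assumptions (prediction errors and log prices held in plain lists, equal sample sizes for both methods); the function names and the sample values are hypothetical and are not results from this paper.

```python
import math


def theil_u2(actual, predicted):
    """Theil's U2 as in Equation (2.2): one minus the ratio of squared
    prediction errors to squared deviations of actual prices from their mean."""
    mean_actual = sum(actual) / len(actual)
    sse = sum((y - f) ** 2 for y, f in zip(actual, predicted))
    sst = sum((y - mean_actual) ** 2 for y in actual)
    return 1.0 - sse / sst


def correlation(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def sum_difference_test(e_repeat, e_hedonic):
    """Correlation between the sum and difference of the two error series,
    and the t-statistic of Equation (2.5) with n - 2 degrees of freedom."""
    n = len(e_repeat)
    total = [a + b for a, b in zip(e_repeat, e_hedonic)]
    diff = [a - b for a, b in zip(e_repeat, e_hedonic)]
    r = correlation(total, diff)
    t_stat = r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)
    return r, t_stat


# Hypothetical log prices and prediction errors for six properties.
actual = [11.2, 11.8, 12.1, 12.5, 11.5, 12.9]
e_repeat = [0.30, -0.20, 0.10, -0.25, 0.05, -0.10]
e_hedonic = [0.10, -0.10, 0.05, -0.05, 0.00, 0.02]

pred_hedonic = [y - e for y, e in zip(actual, e_hedonic)]
u2_hedonic = theil_u2(actual, pred_hedonic)
r, t_stat = sum_difference_test(e_repeat, e_hedonic)
print(u2_hedonic, r, t_stat)
```

A positive correlation here means the repeat errors have the larger variance, which is the direction described for Equation (2.4).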
2.3 INFORMATIONAL EFFICIENCY OF THE FORECASTS: THEIL'S DECOMPOSITION

A one-step-ahead forecasting methodology that uses all information available at time $t$ can be tested for informational efficiency. The errors of an efficient forecast should be uncorrelated with information available at the time the forecast was made. We can test orthogonality of forecast errors by checking whether $\mathrm{Cov}(\hat e_{t+1}, z_{k,t}) = 0$, where $z_k$ is a property characteristic. Once again there is a problem as to which characteristics one should use; as in the previous section we rely on the expected house price value $E(y_{i,t+1})$:

$$\hat e_{i,t+1} = \delta_0 + \delta_1 E(y_{i,t+1}) + \delta_2 \left[E(y_{i,t+1})\right]^2 + \xi_{i,t+1} \tag{2.6}$$

If the intercept and slope parameters are zero, then the forecasting methodology is not throwing away valuable information, and it is not possible to use any of the information available at time $t$ to improve the forecast. The innovation in this paper is to require informational efficiency for each of the cross-sections of properties that traded in each forecasted time period. Equation (2.6) can be tested in linear form ($\delta_2 = 0$) as well as with all parameters unconstrained.

If there is informational inefficiency, then it is desirable to know both its source and its economic importance. The source of inefficiency in the forecasting process may be revealed by decomposing the MSPE into its three main components: degree of bias, variability and unexplained random errors in the forecasting process (Theil, 1966). Consider the regression of actual sales prices on predicted prices during a given period $t$:

$$y_{i,t} = \beta_0 + \beta_1 \hat y_{i,t} + u_{i,t} \tag{2.7}$$

Let $\bar{\hat y}_t$ and $\bar y_t$ denote the average predicted and actual price, respectively, for period $t$.
Theil pointed out that the mean squared prediction error can be decomposed as follows:

$$\mathrm{MSPE} = \left(\bar{\hat y}_t - \bar y_t\right)^2 + \left(1 - \hat\beta_1\right)^2 \mathrm{Var}(\hat y) + \mathrm{ESS}/n_t \tag{2.8}$$

where $\hat\beta_1$ is the ordinary least squares estimator of $\beta_1$ in (2.7), $\mathrm{Var}(\hat y)$ is the cross-sectional variance of the predicted $y$'s from the forecasting model for period $t$, and ESS is the error sum of squares from (2.7). We can divide both sides of equation (2.8) by MSPE to decompose MSPE as follows:

$$1 = U^{Bias} + U^{Regression} + U^{Error} \tag{2.9}$$

An unbiased, informationally efficient forecasting model would have $\beta_0 = 0$ and $\beta_1 = 1$. Since regression equation (2.7) passes through the means, $\beta_0 = 0$ occurs when there is no bias: the mean predicted price is equal to the mean actual price. This corresponds to $U^{Bias}$, the first term in equations (2.8) and (2.9), being equal to zero. When $\beta_1 = 1$, cross-sectional variations in $\hat y_{i,t}$ are equal to variations in actual price. When this occurs, the second term in equation (2.9) (the regression bias term) goes to zero. Thus, when both $\beta_0 = 0$ and $\beta_1 = 1$, we have an informationally efficient and unbiased forecast with mean squared error being composed entirely of the residual from equation (2.7).

However, it is important to realize that the conditions $\beta_0 = 0$ and $\beta_1 = 1$ are just sufficient conditions for unbiased forecasts; they are not necessary. In fact, the necessary and sufficient condition for zero bias is that the two parameters satisfy $\beta_0 - (1 - \beta_1) E(\hat y_{i,t}) = 0$ (Holden and Peel, 1990). Hence, large deviations from the pair (0,1) may still be consistent with unbiased forecasts.

Equation (2.9) gives the economic importance of bias-in-means and regression bias relative to random prediction error. $U^{Bias}$ is the percentage of MSPE that is accounted for by bias in the mean, i.e., by $\beta_0 \neq 0$. $U^{Regression}$ is the percentage of MSPE accounted for by regression bias, i.e., by $\beta_1 \neq 1$.
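
As a numerical check on equations (2.7) through (2.9), the Python sketch below fits the regression of actual on predicted prices by ordinary least squares and splits the MSPE into its three parts. It assumes the cross-sectional variance uses divisor $n_t$, which is what makes the identity exact; the function name and the sample log prices are hypothetical.

```python
def theil_decomposition(actual, predicted):
    """Split the MSPE into bias, regression and error components.

    Returns (mspe, parts, shares), where parts sums to mspe and
    shares (each part divided by mspe) sums to one.
    """
    n = len(actual)
    mean_y = sum(actual) / n
    mean_f = sum(predicted) / n
    var_f = sum((f - mean_f) ** 2 for f in predicted) / n
    cov_fy = sum((f - mean_f) * (y - mean_y)
                 for f, y in zip(predicted, actual)) / n

    # OLS of actual on predicted: y = intercept + slope * f + u.
    slope = cov_fy / var_f
    intercept = mean_y - slope * mean_f
    ess = sum((y - intercept - slope * f) ** 2
              for f, y in zip(predicted, actual))

    mspe = sum((y - f) ** 2 for f, y in zip(predicted, actual)) / n
    parts = {
        "bias": (mean_f - mean_y) ** 2,
        "regression": (1.0 - slope) ** 2 * var_f,
        "error": ess / n,
    }
    shares = {name: value / mspe for name, value in parts.items()}
    return mspe, parts, shares


# Hypothetical actual and predicted log prices for six properties.
actual = [11.2, 11.8, 12.1, 12.5, 11.5, 12.9]
predicted = [11.3, 11.6, 12.2, 12.3, 11.7, 12.6]

mspe, parts, shares = theil_decomposition(actual, predicted)
print(mspe, shares)
```

The cross term between the regression and error parts vanishes because OLS residuals are orthogonal to the regressor, so the three parts add up to the MSPE exactly (up to rounding).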
Finally, $U^{Error}$ is the percentage of MSPE accounted for by the residual in equation (2.7), and by the third term in equation (2.8). An optimal forecast (i.e., one that is unbiased and informationally efficient) will have $U^{Error} = 1.0$. In this case, the PE is exactly equal to the residual from Equation (2.7): $\hat e_{i,t} = u_{i,t}$. This is a desirable property: no extra noise has been added by $\hat y$. An unbiased forecast with $U^{Error}$ less than 1.0 is less desirable because of the extra noise in the forecasted house price (i.e., $\beta_1 \neq 1$).

Another way to evaluate informational efficiency is to regress prediction errors on any information that is available at time $t$. The null hypothesis in these regressions is no relationship between prediction errors and explanatory variables; if there were a relationship, then these variables could be used to improve the forecast. Explanatory variables in this regression include locational or structural characteristics, or any explanatory variables used in the forecast. In the case of repeat sales regression, information available at time $t$ includes the first price of the repeat pair.

2.4 COMPARING THE TWO DISTRIBUTIONS OF PEs

Tests in the previous section focused on evaluating only the first and second moments of prediction errors for each of the two methods (repeat and hedonic) separately. In this subsection we evaluate the entire distribution of hedonic PEs and compare it to the repeat PEs. A graphical comparison of the Cumulative Distribution Functions (CDFs) of the PEs can determine which has more probability mass near zero and in the tails. We use kernel-smoothing methods to estimate the CDFs; this obviates the need to approximate the empirical probability distribution with a theoretical distribution.

We can test the significance of any differences between the two empirical distributions of the PEs in several ways.
Two of these tests are based on the sum of ranks of the absolute values of the prediction error (PE) for each forecast period: the first is the Wilcoxon-Mann-Whitney test, and the second is the Kruskal-Wallis test. Pool the two sets of PEs and rank them. Let $T_1$ be the rank sum for the repeat method and $T_2$ be the rank sum for the hedonic method. These ranks take on values of $1, 2, \ldots, N$, where $N$ is two times the number of observations in each forecasting period. Under the null hypothesis that the repeat and hedonic distributions are identical, the mean rank for both should be $(N+1)/2$. Each rank sum contains $N/2$ ranks, so the expected sum of the ranks under the null hypothesis is:

$$\mu_T = \frac{N(N+1)}{4} \tag{2.10}$$

The variance of the rank sums is based on choosing $N/2$ ranks from an urn where each ball has a number from 1 to $N$. Since the ranks are selected without replacement, the variance is:

$$\sigma_T^2 = \frac{N^2 (N+1)}{48} \tag{2.11}$$

We use equations (2.10) and (2.11) to form a t-statistic (Z score if $N > 30$).⁶

⁶ Equations (2.10) and (2.11) may look unfamiliar because we deal with the special case where exactly $N/2$ ranks are selected.

We also conduct a chi-square test based on the squared difference between the mean sum of the ranks and its expected value. These differences are weighted by the number of observations in the sample. This weighted sum is scaled by a factor proportional to its variance to obtain the Kruskal-Wallis statistic, $H$:

$$H = \frac{24 \left(T_1^2 + T_2^2\right)}{N^2 (N+1)} - 3(N+1) \tag{2.12}$$

$H$ is distributed chi-square with one degree of freedom.

A chi-square statistic for the null hypothesis of equal median prediction errors is based on the deviation between the observed number of ranks above the median and its expected value. This chi-square is approximated by a squared standard normal deviate, $Z^2$.

3. DATA AND ANALYSIS OF PREDICTION ERRORS

3.1 DATA AND ESTIMATION OF FORECASTING MODELS

The original database consists of all transactions in the Miami MSA (Dade County, Florida) from 1971 to the present as recorded by the Florida Department of Revenue; the total number of records is over three hundred thousand. The data have been screened to eliminate data errors or transactions that appear to be less than arm's length (e.g., a sales price of \$1). For each property we have the two most recent sales prices, date of sale (year and month), assessed value, age, and square feet.⁷

To keep the estimation problem manageable, we selected a random sample of 5,159 houses that sold twice during the interval from the first quarter of 1976 through the second quarter of 1997. Specifically, for the repeat model we have 4,372 properties with first sale during the period 1976Q1 through 1993Q4 (Q1 - Q72), and second sale from 1976Q2 through 1995Q4 (Q2 - Q80). These data are used to estimate the parameters of the forecasting model. The remaining 787 pairs, not included in the estimation sample, have second sale in the forecasting period 1996Q1 through 1997Q2 (Q81 - Q86), while the first sale is randomly distributed over the preceding 80 quarters.

For the hedonic model we treat each sale as a one-only sale and use property characteristics to control for quality. Thus, the sample size for the estimation period is 9,531 (4,372*2+787), and for the out-of-sample prediction interval we have 787 observations, exactly the same properties as for the repeat sample.

By the standards of traditional regression models the size of our database seems relatively small. However, the estimation of the unknown parameters by maximum likelihood requires substantial computer time (even on a large mainframe computer).
We used the subroutines KALMN in conjunction with BCONF from the IMSL stat/math library to maximize the likelihood function (subject to boundary constraints).

It is difficult to know, a priori, the order of the autoregressive process (Equation 1.1). Based on our own subjective experience with price indices, we hypothesized a fourth-order process ($p=4$), and then used sensitivity analysis to check whether a third- or fifth-order process led to better sample results. Similarly, in the spirit of exploratory data analysis, we decided to drop the square footage variable from the hedonic model and used assessed value and age for control variables. Part of the rationale for this specification is based on recent research, Clapp and Giaccotto (1998), which suggests that age contains substantial information on the state of local markets in addition to information about the rate of depreciation. The final estimated values for Equation (1.1) are:

$$\Delta C_{t+1} = 0.014 + 0.135\,\Delta C_t + 0.159\,\Delta C_{t-2} + 0.251\,\Delta C_{t-3}$$

for the hedonic model and

$$\Delta C_{t+1} = 0.012 + 0.08\,\Delta C_t + 0.152\,\Delta C_{t-3} + 0.155\,\Delta C_{t-4}$$

for the repeat model. Lastly, to speed up the rate of convergence in the estimation program, we treated the parameters of the hedonic model as fixed rather than as random walks. The final estimate on assessed value was 0.84 and on age -0.03.

⁶ (continued) A more general equation with derivations is contained on page 74 of Mosteller and Rourke (1973).

⁷ We thank Dean Gatzlaff, Florida State University, for providing the data. A more complete description of the database may be found in Gatzlaff and Ling, 1994.

3.2 ANALYSIS OF PREDICTION ERRORS

The first question of interest is whether the PEs are white noise: zero mean, no skewness or excess kurtosis and homogeneous variance. Table 1 displays the first four sample moments for each of the last six quarters and all six quarters together. The evidence appears to say that, on average, the repeat method does a slightly better job of forecasting than the hedonic model.
The average forecast error ranges from -0.027 to 0.024 for the repeat sample, and from -0.030 to 0.031 for the hedonic; for all quarters taken together, the average error is half of one percent for the repeat, and 9/10 of one percent for the hedonic. The standard deviation for all time periods implies that, under a traditional t-test for zero mean, both hedonic and repeat mean prediction errors are not significantly different from zero (i.e., they are unbiased). However, the estimated kurtosis (which we discuss below) is not consistent with normality; therefore the t-test will not be robust and conclusions based on it may be suspect. We will return to the question of bias when we analyze the results of Theil's decomposition of mean square errors.

The hedonic model appears to generate negatively skewed forecast errors. The repeat model's errors are generally symmetric: over the entire forecast sample period, the coefficient of skewness is only -0.044 with a p-value of 0.614. However, both models display heavy tails relative to the normal distribution; the sample kurtosis values are, roughly, 6.0 for the repeat and 8.2 for the hedonic. For a normal random variable these numbers should be much closer to 3.0. Similarly, the p-values for the Shapiro-Wilk W-statistic show significant departures from normality for both methods; the hedonic model shows larger departures in most sub-periods. However, in all quarters, the significance of these results appears to be driven by a smaller standard deviation for the distribution of hedonic PE's. Therefore, conclusions about the relative merits of the two methods depend on further tests.

Efficient use of information by the two forecasting methods can be evaluated by regressing prediction errors on predicted price (Equation 2.6). The first five columns of Table 2 report these results.
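The efficiency regression of prediction errors on predicted price can be sketched as an ordinary least squares fit with one regressor. The code below is an illustration only: `simple_ols` is a hypothetical helper, the data are simulated, and the assumption that predicted prices are measured in logs (centred near 11.5) is ours, not something stated in this section. A slope that differs significantly from zero would indicate that the forecast does not use all the information contained in the predicted price.

```python
import math
import random


def simple_ols(x, y):
    """OLS of y on a constant and x: returns intercept, slope, slope t-statistic, R^2."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    slope_se = math.sqrt(sse / (n - 2) / sxx)  # conventional OLS standard error
    return intercept, slope, slope / slope_se, 1.0 - sse / sst


# Simulated data (assumption): errors that fall as the predicted price rises.
rng = random.Random(1)
predicted = [rng.gauss(11.5, 0.5) for _ in range(787)]
errors = [-0.15 * (p - 11.5) + rng.gauss(0.0, 0.19) for p in predicted]

intercept, slope, t_slope, r_squared = simple_ols(predicted, errors)
print(intercept, slope, t_slope, r_squared)
```

The same helper could be applied quarter by quarter to mimic the layout of Table 2; the regression on two property characteristics would need a multiple-regression routine instead.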
From a statistical point of view, both methods display informational inefficiency over the entire forecasting time frame: the estimated slope coefficient is -0.15 for the repeat model and 0.043 for the hedonic. Both are significantly different from zero, but the repeat method shows greater departures from the null hypothesis of no relationship: it has higher R² and F values. The hedonic regression relationship is statistically significant in only two of the six sub-periods (quarters 81 and 86), whereas the repeat regression is statistically significant in all sub-periods. Therefore, these tests reveal a greater degree of informational inefficiency for the repeat method. We note with interest that, for this method, the estimated slope coefficient (see Equation 2.6) is always negative; this suggests that the repeat model tends to under-predict at the high end of house prices. Interestingly, just the opposite holds for the hedonic model.8

The last five columns of Table 2 evaluate the efficiency of the two forecasting methods by determining whether information available at the time of the forecast is correlated with the prediction errors. For the repeat method, prediction errors are significantly and negatively related to the first price of the repeat pair. For the hedonic method, the assessed value and age of the house are significantly related to the PE's over the whole period and in three of six sub-periods. These results are consistent with the regressions of PE's on predicted price; the hedonic method appears to be slightly more efficient than the repeat. In spite of the statistical significance of the results in Table 2, however, one suspects that the absolute values of the regression coefficients are too small to be of economic value, e.g., to set up a trading rule to earn abnormal returns from trading in the housing market.
8 When Equation (2.6) was estimated with the square term included, the results displayed substantial collinearity. The substantive findings were essentially the same as those reported in Table 2.

We turn to Theil's decomposition of the mean square prediction errors in order to evaluate the degree and economic significance of efficiency. The first five columns of Table 3 report the results of the regression of actual price on predicted price, Equation (2.7). The t-statistics in the first two columns of Table 3 evaluate the null hypotheses that (individually) the intercept is zero and the slope is one; columns four and five evaluate the joint hypothesis. The estimated intercept and slope, for the most part, differ significantly from the pair (0, 1) for the entire forecast period as well as for most quarterly sub-periods. The only exceptions occur for the hedonic model in quarters 83, 84 and 85, where the estimated values are (0.008, 1.000) in quarter 83, (-0.450, 1.040) in quarter 84, and (0.120, 0.990) in quarter 85. We note that the rejection of the null hypothesis is driven more by the intercept being different from zero than by the slope being different from unity. This result suggests that prediction errors may be biased. For additional evidence on this point, Table 3 displays the estimated value of Wilcoxon's signed rank test. The results suggest that the mean prediction errors for the hedonic method are significantly different from zero in all sub-periods except quarter 84, as well as over the entire forecasting horizon. The repeat mean PE's are never significantly different from zero; the smallest p-value of the signed rank test is 0.065, in quarter 84. Therefore, we conclude that forecasts based on the hedonic model display more bias than those based on the repeat sales method.
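The decomposition of the MSPE reported in Table 3 can be sketched as follows. This is a minimal illustration of the standard textbook form of Theil's decomposition, in which the MSPE splits into a bias term, a regression term and a residual term that sum to one when expressed as shares; we assume this is the form behind the UBias, URegression and UError columns. The function name `theil_decomposition` and the small data set are hypothetical.

```python
import math


def theil_decomposition(actual, predicted):
    """Shares of the MSPE due to bias, regression (slope not equal to 1) and residual error."""
    n = len(actual)
    a_bar = sum(actual) / n
    p_bar = sum(predicted) / n
    # Standard deviations and covariance with divisor n, so the identity holds exactly.
    s_a = math.sqrt(sum((a - a_bar) ** 2 for a in actual) / n)
    s_p = math.sqrt(sum((p - p_bar) ** 2 for p in predicted) / n)
    cov = sum((a - a_bar) * (p - p_bar) for a, p in zip(actual, predicted)) / n
    r = cov / (s_a * s_p)
    mspe = sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n
    u_bias = (a_bar - p_bar) ** 2 / mspe
    u_regression = (s_p - r * s_a) ** 2 / mspe
    u_error = (1.0 - r ** 2) * s_a ** 2 / mspe
    return u_bias, u_regression, u_error


# Hypothetical actual and predicted (log) prices for four properties.
actual = [10.0, 11.0, 12.0, 13.5]
predicted = [10.2, 10.9, 12.3, 13.0]
u_bias, u_regression, u_error = theil_decomposition(actual, predicted)
print(u_bias, u_regression, u_error, u_bias + u_regression + u_error)
```

A forecast with desirable properties puts nearly all of the MSPE into the residual share, which is the pattern the text looks for in the UError column.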
Theil's decomposition of this regression (see the last three columns of Table 3) shows that the hedonic method performs well over all quarters: 99% of the mean squared prediction error (MSPE) is due to the regression residual UError, whereas only 1% is due to bias and regression bias combined. This compares to the repeat method, where 92% is due to UError and 8% is due to URegression. The hedonic method has four sub-periods with UError greater than 95%, whereas the repeat method has only one sub-period where this is true. Thus, departures from desirable properties have greater economic significance in the case of the repeat model.

To analyze further the second order moments of prediction errors, in Table 4 we report Theil's U2 statistic. The U2 statistic is consistently higher for the hedonic model; it ranges from 0.80 to 0.86. The range for the repeat sales model is from 0.65 to 0.83. This indicates that the hedonic model explains a larger percentage of the variance in forecasted sales prices than the repeat method.

Table 4 reports substantial evidence of heteroscedasticity for the two methods. The repeat PE's display more evidence of variability in variance: over all quarters the repeat coefficients and their t-values are larger in absolute value. For the repeat method, significant heteroscedasticity is evident in each of quarters 83 through 86. The hedonic method shows significant heteroscedasticity only in quarters 83 and 85. For both methods, the statistically significant results indicate that higher predicted prices are associated with smaller error variance, over the range of the explanatory variable. The evidence in Table 4 says that local market indicators, as measured by property characteristics, have some power to predict future variances of house prices.
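The test for constant variance in Table 4 regresses squared prediction errors on the predicted price and its square. The sketch below illustrates that regression under stated assumptions: the helper names `solve_linear_system` and `variance_regression` are hypothetical, the data are simulated so that the error variance declines with price, and only coefficient estimates are computed (t-statistics would also require the inverse of the cross-product matrix).

```python
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(n):
            if row != col:
                factor = aug[row][col] / aug[col][col]
                aug[row] = [v - factor * p for v, p in zip(aug[row], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def variance_regression(errors, predicted):
    """OLS coefficients from regressing squared errors on 1, predicted, predicted^2."""
    rows = [[1.0, p, p * p] for p in predicted]
    target = [e * e for e in errors]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * t for r, t in zip(rows, target)) for i in range(3)]
    return solve_linear_system(xtx, xty)


# Simulated data (assumption): error standard deviation falls from 0.3 to 0.1
# as the predicted (log) price rises from 10.5 to 12.5.
rng = random.Random(2)
predicted = [rng.uniform(10.5, 12.5) for _ in range(787)]
errors = [rng.gauss(0.0, 0.1 + 0.1 * (12.5 - p)) for p in predicted]
coefficients = variance_regression(errors, predicted)
print(coefficients)
```

Because the price and its square are highly correlated over a narrow range, the individual coefficients are estimated imprecisely; in practice a library least squares routine would be a more robust choice than hand-written elimination.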
This implies that house characteristics must be among omitted variables that could be used to improve forecasting power; the use of these characteristics could produce PE's that are closer to the normal distribution.9 For repeat sales, this house-specific information is limited to the first price. The hedonic method displays less heteroscedasticity than the repeat method: more detailed housing characteristics can help to move the forecasts towards homogeneous variance. Since neighborhoods tend to have homogeneous housing characteristics, this implies that local information adds significantly to forecasts of the housing market, in the sense of removing variables that have systematic effects.

It is straightforward to use the results in Table 4 to re-estimate the forecasts with a correction for heteroscedasticity.10 More importantly for our purposes in this paper, Table 4 indicates that the two forecasting methods differ in their second moments. Thus, we need to look beyond just first and second order moments. The next section compares the entire distribution of PE's for the two methods. In particular, we would like to know how a decision-maker might choose between repeat and hedonic forecasts.

9 Table 2 shows that property characteristics can be used to improve the level of the house price forecasts.

10 However, reworking the model to remove bias is a difficult job, beyond the scope of this paper. In particular, we want to avoid fitting the model to the forecasting period.

3.3 COMPARING THE TWO DISTRIBUTIONS OF PREDICTION ERRORS

Figure 1 shows the empirical cumulative distribution functions for the prediction errors from the two methods.11 This gives more specificity to the higher standard deviation and lower skewness and kurtosis observed in Table 1 for the repeat method. Figure 1 reveals that the PE's from the repeat method are shifted to the left relative to those from the hedonic method. For PE's from -.06 through +.21, the hedonic distribution dominates: i.e., for every outcome there is a higher probability than in the repeat distribution. Furthermore, most of the probability is in this range: 60% of the hedonic probability and 46% of the repeat probability. Thus, use of the hedonic method would give the decision-maker a 14 percentage point greater probability of being within this range. The bottom panel of Figure 1 shows that the two CDF's cross only once. If the mean hedonic PE were closer to zero than (or the same as) the repeat mean, then the hedonic method would dominate the repeat method in the sense of second order stochastic dominance.12 But the mean hedonic PE is greater than the mean repeat PE.

11 These empirical CDFs were estimated using standard kernel density estimation techniques.

12 This would mean that any risk-averse decision-maker would prefer hedonic forecasts to repeat forecasts.

Despite the failure of second order stochastic dominance, a case can be made that a broad class of decision-makers would prefer the hedonic forecasts. Consider a developer or an investor who has decided to commit to a new development based on a price forecasted by one of the two methods. When this speculative development is finished, the actual sales price will differ from the forecasted price. With the hedonic method, 60% of the probability mass is concentrated in the range from -6% to +21%. This means that there is a good chance that any error will be neutral to positive: i.e., actual sales price will be above predicted by between zero and 21%, or that the error will be slightly negative. The positive errors imply a pleasant surprise in the sense that the developer and the investors will make more money than they expected. The repeat method has much more probability in the lower tail. In particular, there is much greater probability of large, negative surprises, ranging up to -50%.
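The comparison of probability mass within a range, and the count of CDF crossings, can be illustrated with plain empirical distribution functions. This is a simplified sketch: it uses step-function CDFs rather than the kernel-smoothed estimates described in footnote 11, the function names are hypothetical, and the two samples are simulated normal draws that only borrow the means and standard deviations from Table 1, so their shares will not reproduce the 60% and 46% reported in the text.

```python
import random


def share_within(errors, lower, upper):
    """Fraction of prediction errors that fall inside [lower, upper]."""
    return sum(lower <= e <= upper for e in errors) / len(errors)


def empirical_cdf(errors, grid):
    """Empirical CDF evaluated at each grid point."""
    n = len(errors)
    return [sum(e <= g for e in errors) / n for g in grid]


def cdf_crossings(cdf_a, cdf_b):
    """Count how many times cdf_a - cdf_b changes sign along the grid."""
    signs = [1 if a > b else -1 for a, b in zip(cdf_a, cdf_b) if a != b]
    return sum(1 for prev, cur in zip(signs, signs[1:]) if prev != cur)


# Simulated stand-ins for the two sets of PE's (not the paper's data).
rng = random.Random(3)
hedonic_pe = [rng.gauss(0.009, 0.157) for _ in range(787)]
repeat_pe = [rng.gauss(0.005, 0.198) for _ in range(787)]

hedonic_share = share_within(hedonic_pe, -0.06, 0.21)
repeat_share = share_within(repeat_pe, -0.06, 0.21)

grid = [round(-0.6 + 0.03 * k, 2) for k in range(41)]
crossings = cdf_crossings(empirical_cdf(hedonic_pe, grid), empirical_cdf(repeat_pe, grid))
print(hedonic_share, repeat_share, crossings)
```

A single crossing of the two CDFs is the pattern the text relies on when it discusses second order stochastic dominance; with unsmoothed empirical CDFs, sampling noise can produce additional spurious crossings near the point where the curves meet.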
Since these decision-makers are likely to attach a great deal of negative utility to actual price being substantially below forecasted price, they will maximize expected utility by using the hedonic method.

Table 5 presents statistical tests on the significance of the differences between the two CDF's. The Wilcoxon and Kruskal-Wallis tests show that the distribution of repeat PE's is significantly lower than the corresponding hedonic distribution. That is, the repeat distribution has significantly more density at lower values of the PE's. Furthermore, the binomial tests show that the median of the repeat distribution is significantly lower than the median of the hedonic distribution, confirming information on the mean PE's (Table 1). We conclude that the behavior in the tails of the two distributions is best understood with Figure 1: the different kurtosis and skewness measures in Table 1 would not lead one to suspect that the repeat PE's have so much probability mass at lower PE values compared to the hedonic method. The tests in Table 5 show that these differences are statistically significant.

4. CONCLUSION

This paper uses time series models to produce forecasts of individual house prices one quarter into the future. We hypothesize that the rate of growth in city-wide house prices follows a pth order autoregressive process. Two Kalman filter models are used to implement time series estimation: one following a basic hedonic price index framework and the other following the repeat sales approach. This paper focuses on a variety of empirical tests of the accuracy of these forecasts.

The hedonic and repeat sales forecasting models are estimated with housing transactions from Miami, Florida. Both sets of prediction errors (PEs) show significant departures from the desirable properties of any one-step-ahead forecast.
The repeat sales method performs better than the hedonic in terms of basic, descriptive statistics: repeat sales PEs have lower mean, skewness and kurtosis. But the repeat PEs have larger standard deviations in all forecasted quarters. Both forecasting methods show some informational inefficiency, but the hedonic is more efficient than the repeat. For example, the hedonic PEs are not as closely related to property characteristics available at the time the forecast was made. Also, the hedonic characteristics explain a lower percent of the variance of the PEs. Most importantly, the hedonic performed significantly better than the repeat method in terms of Theil's decomposition of the mean squared prediction error.

Local housing market information, as reflected in house-specific characteristics (the first sale in the case of the repeat model), is significantly related to future levels and variances of house prices. Specific hedonic characteristics (age and assessed value) produce forecasts that are more informationally efficient than the repeat method, with lower and less predictable error variance.

Kernel smoothing methods are used to estimate probability distribution functions (and CDFs) for the two distributions of PEs (see Figure 1). Neither method dominates in terms of second order stochastic dominance, but the hedonic method dominates over an important range of PEs: from about -6% to +23%, implying neutral to pleasant surprises for investors. The repeat method has much higher probability of a large, negative error. Thus, a case can be made that many risk-averse investors would prefer a forecast based on the hedonic method.

References

Bailey, M. J., Muth, R. F. and Nourse, H. O. (1963), "A Regression Method for Real Estate Price Index Construction," Journal of the American Statistical Association, 58, 933-942.

Case, K. E. and Shiller, R. J.
(1989), "The Efficiency of the Market for Single Family Homes," American Economic Review, 79(1), 125-137.

Case, K. E. and Shiller, R. J. (1990), "Forecasting Prices and Excess Returns in the Housing Market," AREUEA Journal, 18(3), 253-273.

Clapp, J. M. and Giaccotto, C. (1994), "The Influence of Economic Variables on Local House Price Dynamics," Journal of Urban Economics, 36, 161-183.

Clapp, J. M. and Giaccotto, C. (1998), "Residential Hedonic Models: A Rational Expectations Approach to Age Effects," Journal of Urban Economics, 44, 415-437.

Conover, W. J. (1980), Practical Nonparametric Statistics, 2nd Ed., Wiley, New York, U.S.A.

Diebold, F. X. and Lopez, J. A. (1996), "Forecast Evaluation and Combination," Statistical Methods in Finance, G. S. Maddala and C. R. Rao, Editors, Elsevier, New York, NY.

DiPasquale, D. and Wheaton, W. C. (1994), "Housing Market Dynamics and the Future of Housing Prices," Journal of Urban Economics, 35(1), 1-27.

Englund, P., Gordon, T. M. and Quigley, J. M. (1999), "The Valuation of Real Capital: A Random Walk down Kungsgatan," Journal of Housing Economics, 8, 205-216.

Gatzlaff, D. and Ling, D. (1994), "Measuring Changes in Local House Prices: An Empirical Investigation of Alternative Methodologies," Journal of Urban Economics, 35(2), 221-244.

Gershenfeld, N. A. and Weigend, A. S. (1993), "The Future of Time Series: Learning and Understanding," Sciences of Complexity, Proc. Vol. XV, 1-70, Addison-Wesley.

Gibbons, J. D. and Chakraborti, S. (1992), Nonparametric Statistical Inference, 3rd Ed., Marcel Dekker, Inc., New York.

Granger, C. W. J. and Newbold, P. (1986), Forecasting Economic Time Series, 2nd Ed., Academic Press, New York, NY.

Hendershott, P. H. (1991), "Are Real House Prices Likely to Decline by 47 Percent?" Regional Science and Urban Economics, 21(4), 553-563.

Hill, R. C., Sirmans, C. F. and Knight, J. R. (1999), "A Random Walk Down Main Street?" Regional Science and Urban Economics, 29(1), 89-103.

Holden, K. and Peel, D.
A. (1990), "On Testing for Unbiasedness and Efficiency of Forecasts," Manchester School of Economic and Social Studies, 58, 120-127.

Kuo, C. (1996), "Serial Correlation and Seasonality in the Real Estate Market," Journal of Real Estate Finance and Economics, 12, 139-162.

Lehmann, E. L. (1986), Testing Statistical Hypotheses, 2nd Ed., Wiley, New York, NY.

Lindgren, B. W. (1976), Statistical Theory, 3rd Ed., Macmillan, New York, U.S.A.

Mankiw, N. G. and Weil, D. N. (1989), "The Baby Boom, the Baby Bust and the Housing Market," Regional Science and Urban Economics, 19, 235-258.

Mosteller, F. and Rourke, R. E. (1973), Sturdy Statistics: Nonparametric and Order Statistics, Addison-Wesley, Reading, Mass.

Rosen, S. (1974), "Hedonic Prices and Implicit Markets: Product Differentiation in Pure Competition," Journal of Political Economy, 82, 34-55.

Theil, H. (1966), Applied Economic Forecasting, Rand McNally & Company.

Table 1
Descriptive Statistics for Prediction Errors (PE's) by Quarter. Hedonic and Repeat Sales Models

| Quarter | Number of Observations | Model | Mean | Standard Deviation | Skewness | Kurtosis | Shapiro-Wilk W Test |
|---|---|---|---|---|---|---|---|
| 81 | 111 | Repeat | -0.005 | 0.157 | 0.723 (0.002) | 4.169 (0.012) | 0.959 (0.012) |
| 81 | 111 | Hedonic | -0.030 | 0.140 | -1.269 (0.000) | 7.843 (0.000) | 0.925 (0.000) |
| 82 | 172 | Repeat | 0.023 | 0.208 | 0.135 (0.470) | 4.524 (0.000) | 0.969 (0.029) |
| 82 | 172 | Hedonic | 0.024 | 0.165 | -0.926 (0.000) | 5.439 (0.000) | 0.946 (0.000) |
| 83 | 165 | Repeat | 0.017 | 0.176 | 0.838 (0.000) | 6.258 (0.000) | 0.967 (0.017) |
| 83 | 165 | Hedonic | 0.014 | 0.157 | -1.292 (0.000) | 9.847 (0.000) | 0.946 (0.000) |
| 84 | 141 | Repeat | -0.022 | 0.201 | -0.046 (0.824) | 3.971 (0.019) | 0.973 (0.130) |
| 84 | 141 | Hedonic | -0.012 | 0.155 | -2.311 (0.000) | 14.360 (0.000) | 0.871 (0.000) |
| 85 | 116 | Repeat | 0.024 | 0.211 | 0.059 (0.795) | 5.729 (0.000) | 0.968 (0.066) |
| 85 | 116 | Hedonic | 0.031 | 0.151 | -1.197 (0.000) | 8.406 (0.000) | 0.945 (0.000) |
| 86 | 82 | Repeat | -0.027 | 0.232 | -1.467 (0.000) | 8.223 (0.000) | 0.904 (0.000) |
| 86 | 82 | Hedonic | 0.026 | 0.164 | -0.499 (0.065) | 3.470 (0.385) | 0.973 (0.272) |
| All Quarters | 787 | Repeat | 0.005 | 0.198 | -0.044 (0.614) | 5.969 (0.000) | 0.969 (0.000) |
| All Quarters | 787 | Hedonic | 0.009 | 0.157 | -1.209 (0.000) | 8.245 (0.000) | 0.938 (0.000) |

NOTES: Quarters 81 thru 86 correspond to 1996Q1 thru 1997Q2. All refers to all six quarters combined. Prediction Errors (PE's) are as a % of predicted price for all properties sold within the indicated forecasting time period. Numbers in parentheses are P-values for the statistics. For normal random variables skewness should be zero and kurtosis 3. Small P-values imply that the sample estimate differs from the population moment for a normal variable. The Shapiro-Wilk W statistic is a test for normality: a P-value less than 0.05 indicates rejection of normality at the 5% level.

Table 2
Informational Efficiency of PE's: Repeat Compared to Hedonic

Regression on predicted price: $\hat{e}_{i,t+1} = \beta_0 + \beta_1 E(y_{i,t+1}) + \varepsilon_{i,t+1}$

| Quarter | Model | $\beta_0$ | $\beta_1$ | R² | F Value | Prob>F |
|---|---|---|---|---|---|---|
| 81 | Repeat | 1.08 (2.41) | -0.092 (-2.42) | 0.04 | 5.85 | 0.02 |
| 81 | Hedonic | -1.29 (-2.72) | 0.108 (2.66) | 0.05 | 7.07 | 0.01 |
| 82 | Repeat | 1.78 (3.75) | -0.15 (-3.70) | 0.07 | 13.70 | 0.00 |
| 82 | Hedonic | -0.67 (-1.46) | 0.0596 (1.52) | 0.01 | 2.29 | 0.13 |
| 83 | Repeat | 1.58 (3.91) | -0.135 (-3.87) | 0.08 | 14.98 | 0.00 |
| 83 | Hedonic | 0.0082 (0.43) | 0.00045 (0.037) | -0.01 | 0.00 | 0.99 |
| 84 | Repeat | 1.16 (2.11) | -0.101 (-2.15) | 0.03 | 4.62 | 0.03 |
| 84 | Hedonic | -0.446 (-0.97) | 0.037 (0.94) | -0.00 | 0.90 | 0.35 |
| 85 | Repeat | 2.49 (4.41) | -0.212 (-4.37) | 0.14 | 19.10 | 0.00 |
| 85 | Hedonic | 0.12 (0.508) | -0.0077 (-0.17) | -0.01 | 0.03 | 0.86 |
| 86 | Repeat | 2.34 (3.77) | -0.2 (-3.82) | 0.14 | 14.60 | 0.00 |
| 86 | Hedonic | -1.33 (-2.22) | 0.11 (2.26) | 0.05 | 5.13 | 0.03 |
| All | Repeat | 1.75 (8.50) | -0.15 (-8.48) | 0.08 | 71.92 | 0.00 |
| All | Hedonic | -0.495 (-2.50) | 0.043 (2.54) | 0.01 | 6.47 | 0.01 |

Regression on property characteristics: $\hat{e}_{i,t+1} = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \varepsilon_{i,t+1}$

| Quarter | Model | $\beta_0$ | $\beta_1$ | $\beta_2$ | R² | F-test | Prob>F |
|---|---|---|---|---|---|---|---|
| 81 | Repeat | 0.88 (2.303) | -0.08 (-2.31) | | 0.05 | 5.34 | 0.02 |
| 81 | Hedonic | -1.35 (-3.30) | 0.11 (3.06) | 0.04 (1.87) | 0.10 | 5.68 | 0.00 |
| 82 | Repeat | 1.03 (2.86) | -0.09 (-2.80) | | 0.04 | 7.84 | 0.01 |
| 82 | Hedonic | -1.52 (-3.80) | 0.10 (3.19) | 0.11 (5.0) | 0.14 | 14.26 | 0.00 |
| 83 | Repeat | 1.14 (3.51) | -0.10 (-3.46) | | 0.07 | 11.97 | 0.00 |
| 83 | Hedonic | -0.61 (-1.64) | 0.03 (0.94) | 0.09 (3.89) | 0.09 | 7.60 | 0.01 |
| 84 | Repeat | 0.64 (1.47) | -0.06 (-1.52) | | 0.02 | 2.31 | 0.13 |
| 84 | Hedonic | -0.75 (-1.79) | 0.05 (1.51) | 0.04 (1.94) | 0.03 | 2.43 | 0.12 |
| 85 | Repeat | 1.80 (3.93) | -0.16 (-3.89) | | 0.12 | 15.13 | 0.00 |
| 85 | Hedonic | -0.08 (-0.179) | 0.00 (0.0684) | 0.03 (0.949) | 0.01 | 0.46 | 0.50 |
| 86 | Repeat | 1.15 (1.90) | -0.10 (-1.95) | | 0.05 | 3.80 | 0.05 |
| 86 | Hedonic | -1.42 (-2.70) | 0.11 (2.57) | 0.05 (1.56) | 0.09 | 3.97 | 0.05 |
| All | Repeat | 1.12 (6.61) | -0.098 (-6.59) | | 0.05 | 43.43 | 0.00 |
| All | Hedonic | -0.84 (-5.006) | 0.06 (4.071) | 0.06 (6.45) | 0.06 | 25.66 | 0.00 |

NOTES: Quarters 81 thru 86 correspond to 1996Q1 thru 1997Q2. All refers to all six quarters combined. The F-values are for a one tail test that all parameters (except for the intercept) are zero. In the regression on predicted price, we use the predicted value of each property as a proxy for the expected value. In the regression on property characteristics, X1 is the first sales price for repeats. For the hedonic regressions, X1 is assessed value and X2 is building age.

Table 3
Theil's Decomposition of Mean Square Prediction Errors (MSPE)

$y_{i,t+1} = \beta_0 + \beta_1 \hat{y}_{i,t+1} + u_{i,t+1}$

| Quarter | Model | $\beta_0$ | $\beta_1$ | R² | F-test for $\beta_0 = 0$ and $\beta_1 = 1$ | Prob>F | Wilcoxon Test | UBias | URegression | UError |
|---|---|---|---|---|---|---|---|---|---|---|
| 81 | Repeat | 1.076 (2.405) | 0.907 (2.419) | 0.840 | 6.090 | 0.02 | -1.183 (0.118) | 0.001 | 0.051 | 0.948 |
| 81 | Hedonic | -1.291 (-2.72) | 1.108 (2.660) | 0.870 | 12.430 | 0.00 | -1.886 (0.030) | 0.043 | 0.058 | 0.899 |
| 82 | Repeat | 1.781 (3.75) | 0.849 (3.70) | 0.720 | 16.150 | 0.00 | -1.099 (0.136) | 0.012 | 0.074 | 0.914 |
| 82 | Hedonic | -0.670 (-1.46) | 1.060 (1.52) | 0.810 | 5.870 | 0.02 | -3.002 (0.001) | 0.020 | 0.013 | 0.970 |
| 83 | Repeat | 1.590 (3.91) | 0.866 (3.87) | 0.790 | 16.840 | 0.00 | -0.880 (0.189) | 0.009 | 0.083 | 0.907 |
| 83 | Hedonic | 0.008 (0.019) | 1.000 (0.013) | 0.820 | 1.220 | 0.27 | -1.786 (0.037) | 0.007 | 0.000 | 0.990 |
| 84 | Repeat | 1.160 (2.11) | 0.899 (2.15) | 0.730 | 6.350 | 0.01 | -1.515 (0.065) | 0.011 | 0.032 | 0.957 |
| 84 | Hedonic | -0.450 (-0.97) | 1.040 (0.95) | 0.830 | 1.780 | 0.18 | -0.262 (0.397) | 0.006 | 0.006 | 0.990 |
| 85 | Repeat | 2.490 (4.41) | 0.790 (4.37) | 0.700 | 21.150 | 0.00 | -0.802 (0.211) | 0.013 | 0.142 | 0.850 |
| 85 | Hedonic | 0.120 (0.24) | 0.990 (0.175) | 0.820 | 4.870 | 0.03 | -2.904 (0.002) | 0.040 | 0.000 | 0.960 |
| 86 | Repeat | 2.340 (3.77) | 0.800 (3.82) | 0.740 | 16.300 | 0.00 | -0.594 (0.276) | 0.014 | 0.152 | 0.834 |
| 86 | Hedonic | -1.330 (-2.22) | 1.120 (2.26) | 0.860 | 7.460 | 0.01 | -1.801 (0.036) | 0.025 | 0.059 | 0.916 |
| All | Repeat | 1.750 (8.49) | 0.850 (8.47) | 0.750 | 72.400 | 0.00 | -0.053 (0.479) | 0.001 | 0.084 | 0.920 |
| All | Hedonic | -0.498 (-2.51) | 1.040 (2.56) | 0.830 | 9.110 | 0.00 | -3.480 (0.000) | 0.003 | 0.008 | 0.990 |

NOTES: Quarters 81 thru 86 correspond to 1996Q1 thru 1997Q2. All refers to all six quarters combined. Numbers in parentheses under the column headings $\beta_0$ and $\beta_1$ are t-statistics. The Wilcoxon Rank Sign test is for the null hypothesis of zero mean PE during the indicated forecasting time period. Numbers in parentheses following the Wilcoxon test are P-values. Small P-values indicate a non-zero mean.

Table 4
Analysis of Second Order Moments: Theil's U2 Statistic and Tests for Constant Variance Across Properties

$\hat{e}^2_{i,t+1} = \beta_0 + \beta_1 E(y_{i,t+1}) + \beta_2 [E(y_{i,t+1})]^2 + \varepsilon_{i,t+1}$

| Quarter | Model | U2 Statistic | $\beta_0$ | $\beta_1$ | $\beta_2$ | R² |
|---|---|---|---|---|---|---|
| All | Repeat | 0.730 | 18.926 (2.000) | -3.218 (-9.424) | 0.137 (9.398) | 0.101 |
| All | Hedonic | 0.860 | 6.965 (2.617) | -1.171 (-2.567) | 0.049 (2.526) | 0.015 |
| 81 | Repeat | 0.690 | 0.921 (0.33) | -0.140 (-0.293) | 0.005 (0.264) | 0.00 |
| 81 | Hedonic | 0.800 | 5.07 (0.812) | -0.824 (-0.770) | 0.033 (0.732) | 0.043 |
| 82 | Repeat | 0.770 | 6.912 (1.477) | -1.142 (0.156) | 0.047 (0.168) | 0.024 |
| 82 | Hedonic | 0.820 | 1.308 (0.263) | -0.219 (-0.256) | 0.009 (0.254) | 0.000 |
| 83 | Repeat | 0.710 | 7.417 (2.050) | -1.255 (-2.025) | 0.053 (2.008) | 0.015 |
| 83 | Hedonic | 0.830 | 19.765 (3.154) | -3.347 (-3.121) | 0.142 (3.091) | 0.066 |
| 84 | Repeat | 0.650 | 15.931 (3.170) | -2.697 (-3.142) | 0.114 (3.120) | 0.061 |
| 84 | Hedonic | 0.810 | -5.173 (-0.590) | 0.904 (0.603) | -0.039 (-0.612) | 0.00 |
| 85 | Repeat | 0.690 | 22.280 (3.535) | -3.723 (-3.459) | -0.156 (3.387) | 0.158 |
| 85 | Hedonic | 0.850 | 14.638 (2.438) | -2.486 (-2.411) | 0.106 (2.388) | 0.049 |
| 86 | Repeat | 0.830 | 53.465 (8.623) | -9.140 (-8.722) | 0.390 (8.826) | 0.535 |
| 86 | Hedonic | 0.860 | 8.859 (1.820) | -1.514 (-1.815) | 0.065 (1.815) | 0.016 |

r: 0.29 (1.29); 0.39 (3.41); 0.12 (1.58); 0.31 (3.90); 0.35 (4.00); 0.37 (3.53); 0.29 (1.29)

NOTES: Quarters 81 thru 86 correspond to 1996Q1 thru 1997Q2. All refers to all six quarters combined. Prediction Errors (PE's) are as a % of predicted price for all properties sold within the indicated forecasting time period. Numbers in parentheses are t statistics. In the regression model we use the predicted value of each property as a proxy for the expected value.

Table 5
Tests on the Differences Between Repeat and Hedonic Distributions

| Quarter | Number of Observations | Wilcoxon Rank Sums Test: W+, repeat | Wilcoxon: Z | Wilcoxon: Prob > \|Z\| | Kruskal-Wallis Test, repeat: Chi Square | Kruskal-Wallis: Prob>CHISQ | Points > Median, repeat: Sum | Median: Z | Median: Prob > \|Z\| |
|---|---|---|---|---|---|---|---|---|---|
| 81 | 111 | 12505 | 0.267 | 0.789 | 0.721 | 0.788 | 55 | -0.133 | 0.894 |
| 82 | 172 | 28633 | -1.124 | 0.261 | 1.266 | 0.261 | 78 | -1.720 | 0.085 |
| 83 | 165 | 26944 | -0.419 | 0.068 | 0.176 | 0.675 | 80 | -0.550 | 0.583 |
| 84 | 141 | 19054 | -1.310 | 0.190 | 1.720 | 0.190 | 65 | -1.310 | 0.191 |
| 85 | 116 | 12930 | -1.143 | 0.253 | 1.310 | 0.253 | 52 | -1.570 | 0.116 |
| 86 | 82 | 6292 | -1.560 | 0.120 | 2.430 | 0.120 | 39 | -0.623 | 0.533 |
| All | 787 | 600149 | -2.175 | 0.030 | 4.730 | 0.030 | 364 | -2.970 | 0.003 |

NOTES: Quarters 81 thru 86 correspond to 1996Q1 thru 1997Q2. All refers to all six quarters combined. Prediction Errors (PE's) are as a % of predicted price for all properties sold within the indicated forecasting time period. Test statistics are reported for the absolute values of repeat sales prediction errors; these are ranked, after combining with hedonic absolute PE's. See section 2.4 for details.

Figure 1: Repeat and Hedonic PE's. Panel a: Probability Distributions (PDF's) for the Repeat and Hedonic prediction errors. Panel b: Cumulative Probability Distribution for the Repeat and Hedonic prediction errors. In both panels the horizontal axis shows Prediction Errors (multiply by 100 to get approximate % error), running from about -1.06 to 0.82.