A Drowsy Driver Alert Interface using Facial Expression Recognition


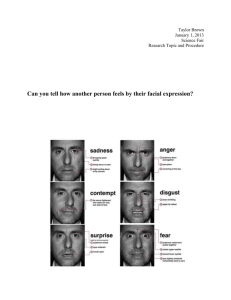

Paper Presenter: Sunilduth Baichoo, Dept. of Computer Science and Engineering

Author(s): Sunilduth Baichoo, Shakuntala Baichoo, Sundeep Ganeshe and Yadassen Sandiren Palaniandy (all Dept. of Computer Science and Engineering)

Extended Abstract

A facial expression is a visible manifestation of the affective state, cognitive activity, intention, personality, and psychopathology of a person (Fasel and Luettin, 2003). Facial expressions are a natural means of communication between humans: by looking at a person's facial expression, one can understand that person's emotional state. Research in facial expression recognition has considered seven basic expressions, namely anger, disgust, fear, happiness, sadness, surprise and neutral (Ekman and Friesen, 1971).

With advances in face recognition and face detection and the increased availability of relatively cheap computing power (Turk and Pentland, 1991), interest in automatic facial expression recognition (AFER) has grown over the last decade. While humans interpret facial expressions easily, doing so is a challenging task for computers. An AFER system automatically determines the expressions of a particular user. It consists of four main steps: face detection, pre-processing, facial feature extraction and, lastly, facial expression classification and recognition.

For the Driver Alert Interface, the basic expressions fear, disgust and anger are used, together with an additional expression, “sleepy”. The proposed system is built from the four AFER components as follows:

- Face detection: the face is located in an image or in a video stream from a webcam or camera.
  Here, face detection is carried out using local Successive Mean Quantization Transform (SMQT) features and the split-up Sparse Network of Winnows (SNoW) classifier developed by Mikael Nilsson, Jorgen Nordberg and Ingvar Claesson (Nilsson et al., 2007).
- Pre-processing: the quality of the image is improved.
- Facial feature extraction: the prominent features of the face (eyebrows, eyes, nose, mouth and chin) are extracted using the eigenface model developed by Matthew Turk and Alex Pentland (Turk and Pentland, 1991).
- Classification and recognition: a feed-forward neural network trained with the back-propagation algorithm categorizes the features extracted in the previous step. The corresponding weight vectors are fed to its input layer, and the network determines the expression conveyed by the face. The hidden layer has 20 neurons and the output layer has 4, one for each of the three basic expressions (fear, disgust and anger) and one for the sleepy state. Once the network has learned the four expressions, it can be applied to unseen data; a recognition result is counted as correct if it matches the user's true expression.

Two facial expression databases, JAFFE and MMI, were used to train the system, and accuracy was higher with JAFFE than with MMI. With JAFFE, the highest recognition rate was 85.7% on static images and 56% in real time. When integrated into the Drowsy Driver Alert Interface (DDAI), the system reached an acceptable overall accuracy of about 60%. The system was also tested on images with occlusions, with fairly good results. Although the system performed well, some expressions were confused with each other; for example, when a person was surprised with the mouth open, the identified expression was ‘happiness’.

References

Ekman, P., and Friesen, W. V., “Constants across cultures in the face and emotion”, Journal of Personality and Social Psychology, 17, pp. 124-129, 1971.

Fasel, B., and Luettin, J., “Automatic facial expression analysis: a survey”, Pattern Recognition, 36(1), pp. 259-275, 2003.

Nilsson, M., Nordberg, J., and Claesson, I., “Face Detection using Local SMQT Features and Split Up SNoW Classifier”, in Proc. IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Honolulu, 2007.

Turk, M., and Pentland, A., “Eigenfaces for recognition”, Journal of Cognitive Neuroscience, 3, pp. 71-86, 1991.
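The face-detection stage relies on the local SMQT features of Nilsson et al. (2007). As a minimal sketch of the idea, assuming a single quantization level (L = 1) over 3x3 neighbourhoods, each patch is reduced to a 9-bit pattern recording which pixels lie above the patch mean. The function names are hypothetical, and the split-up SNoW classifier that scores these patterns is not shown.

```python
# Assumption: one SMQT level (L = 1) applied to 3x3 neighbourhoods.


def smqt_level1_code(patch):
    """Return the 9-bit local SMQT code (one level, L = 1) of a 3x3 patch.

    A single SMQT level splits the patch values at their mean: each pixel
    becomes 1 if it lies above the mean and 0 otherwise. The nine bits are
    packed in row-major order into an integer in the range 0..511.
    """
    values = [v for row in patch for v in row]
    if len(values) != 9:
        raise ValueError("expected a 3x3 patch")
    total = sum(values)
    code = 0
    for v in values:
        # 9 * v > total is the same test as v > mean(values), without division
        code = (code << 1) | (1 if 9 * v > total else 0)
    return code


def local_smqt_features(image):
    """Compute the local SMQT code of every interior pixel of a grey-level image.

    `image` is a list of equal-length rows; border pixels, which lack a full
    3x3 neighbourhood, are skipped.
    """
    height, width = len(image), len(image[0])
    return [
        [smqt_level1_code([row[x - 1:x + 2] for row in image[y - 1:y + 2]])
         for x in range(1, width - 1)]
        for y in range(1, height - 1)
    ]
```

Because the code depends only on which pixels exceed the local mean, multiplying a patch by a positive gain or adding a constant offset leaves it unchanged; this insensitivity to illumination is the property that motivates SMQT-based features for face detection.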
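Feature extraction uses the model of Turk and Pentland (1991), whose cited work is the eigenface method, and the classifier is fed “weight vectors”. A minimal sketch, assuming those weights are eigenface projections, is shown below: training faces are mean-centred, the leading eigenvectors are found through the small M x M Gram matrix (the trick described by Turk and Pentland), and a face is then described by its projections onto them. Plain Python with power iteration keeps the example dependency-free; the function names and the choice of `k` are illustrative, not taken from the paper.

```python
import math
import random

# Assumption: the classifier input is the eigenface weight vector of a face.


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def train_eigenfaces(faces, k, iters=500, seed=0):
    """Return (mean_face, eigenfaces) for a list of flattened, equal-length faces.

    A holds one mean-centred face per row. Eigenvectors v of the small M x M
    matrix L = A A^T are found by power iteration with deflation, then mapped
    to unit-length image-space eigenfaces u = A^T v.
    """
    m, n = len(faces), len(faces[0])
    if not 0 < k < m:
        raise ValueError("k must satisfy 0 < k < number of faces")
    mean_face = [sum(f[j] for f in faces) / m for j in range(n)]
    A = [[f[j] - mean_face[j] for j in range(n)] for f in faces]
    L = [[_dot(A[i], A[j]) for j in range(m)] for i in range(m)]

    rng = random.Random(seed)
    vs = []  # orthonormal eigenvectors of L found so far
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(m)]
        for _ in range(iters):
            w = [_dot(row, v) for row in L]  # w = L v
            for u in vs:  # deflation: remove directions already found
                d = _dot(w, u)
                w = [a - d * b for a, b in zip(w, u)]
            norm = math.sqrt(_dot(w, w))
            if norm == 0.0:
                raise ValueError("k exceeds the rank of the training set")
            v = [a / norm for a in w]
        vs.append(v)

    eigenfaces = []
    for v in vs:
        u = [sum(v[i] * A[i][j] for i in range(m)) for j in range(n)]  # u = A^T v
        norm = math.sqrt(_dot(u, u))
        eigenfaces.append([x / norm for x in u])
    return mean_face, eigenfaces


def face_weights(face, mean_face, eigenfaces):
    """Project a face onto the eigenfaces, giving its weight vector."""
    centred = [a - b for a, b in zip(face, mean_face)]
    return [_dot(u, centred) for u in eigenfaces]
```

The abstract does not state how many eigenfaces were kept, so `k`, which fixes the input size of the classifier, would have to be chosen experimentally. In practice a library eigen-solver would replace the hand-written power iteration.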
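The classifier is specified only by its layer sizes (20 hidden and 4 output neurons) and its back-propagation training. The sketch below fills in the remaining details with assumptions: sigmoid units, squared-error loss, per-sample gradient descent with a fixed learning rate, and an arg-max decision over the outputs. `ExpressionNet`, `one_hot` and `recognition_rate` are hypothetical names; the last mirrors the abstract's definition of a correct recognition as one that matches the user's true expression.

```python
import math
import random

# Assumed: sigmoid units, squared error, online gradient descent.
# The output order below is also an assumption; the abstract only lists the classes.
EXPRESSIONS = ("fear", "disgust", "anger", "sleepy")


def _sigmoid(x):
    # numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def one_hot(label):
    """Target vector for a label: 1.0 at its output neuron, 0.0 elsewhere."""
    return [1.0 if e == label else 0.0 for e in EXPRESSIONS]


class ExpressionNet:
    """Feed-forward net: n_in inputs -> 20 sigmoid hidden units -> 4 sigmoid outputs."""

    def __init__(self, n_in, n_hidden=20, seed=0):
        rng = random.Random(seed)
        n_out = len(EXPRESSIONS)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [_sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        y = [_sigmoid(sum(w * hj for w, hj in zip(row, h)) + b) for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x, target, lr=0.5):
        """One back-propagation update on E = 0.5 * sum((y - t)^2); returns E before it."""
        h, y = self.forward(x)
        d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, target)]
        # hidden deltas use the output weights before they are updated
        d_hid = [hj * (1.0 - hj) * sum(dk * self.w2[k][j] for k, dk in enumerate(d_out))
                 for j, hj in enumerate(h)]
        for k, dk in enumerate(d_out):
            self.w2[k] = [w - lr * dk * hj for w, hj in zip(self.w2[k], h)]
            self.b2[k] -= lr * dk
        for j, dj in enumerate(d_hid):
            self.w1[j] = [w - lr * dj * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * dj
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))

    def predict(self, x):
        """Return the expression whose output neuron is most active."""
        _, y = self.forward(x)
        return EXPRESSIONS[max(range(len(y)), key=lambda k: y[k])]


def recognition_rate(net, samples):
    """Fraction of (features, true_label) pairs that the network labels correctly."""
    correct = sum(1 for x, label in samples if net.predict(x) == label)
    return correct / len(samples)
```

In use, each input `x` would be a face's feature (weight) vector. Repeated calls to `train_step(x, one_hot(label))` over the training set perform the learning, after which `recognition_rate` can be evaluated on held-out images.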