
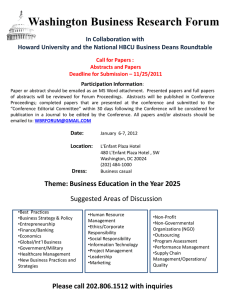


ICI 10 - 10TH INTERNATIONAL CONFERENCE ON INFORMATION AT DELTA UNIVERSITY FOR SCIENCE AND TECHNOLOGY
GAMASA, EGYPT, 4 – 6 DECEMBER 2010

Automatic abstract preparation

Dr. Tünde Molnár Lengyel
Associate professor, Eszterházy Károly College, Department of Informatics, Hungary
email: mtunde@ektf.hu

Abstract

As rapidly multiplying scientific information makes any researcher’s effort to keep up with the latest developments in his or her own field futile, the automation of content exploration gains increasing significance. Consequently, any research or project that helps to draw attention to, or point out, the essential information of a given scholarly text becomes indispensable. Although the automation of this task has not been fully achieved in Hungary, in part because of the intricate linguistic structures involved, the urgency of implementing such a project is not diminished. At present several programs help to distinguish essential information in English-language texts using quantitative or qualitative principles, and similar help is available for researchers of German, French, Greek, Spanish, Italian, and Chinese texts. My research project, which aims to develop an automated Hungarian-language text extraction program, attempts to remedy this situation. In my presentation I would like to examine the possibilities and limitations of automating research abstract preparation and to survey the procedures and technological implementations that facilitate the compilation of research abstracts in the Hungarian language.

Keywords: automatic abstract preparation; content analysis; quantitative programme; qualitative programme

1. Introduction

As a result of the ever-increasing availability of new information, the automation of documentary content exploration is steadily gaining importance.
Since the virtually unprocessable amount of information makes it rather difficult to keep up with the latest professional developments even in our own field, library science and information management, research projects that promote the selection and identification of appropriate information assume vital significance. As one possible solution to this quandary, automation can yield several benefits, including more efficient information search and retrieval systems, newly developed Internet search engines, and Internet-based news monitoring systems.

While information retrieval appears to be crucial, issues concerning information processing have to be addressed as well. Questions such as the following have to be answered: what should be done once the relevant information has been identified? After entering a given web page or obtaining the electronic version of a needed article or book, should the user be left on his or her own or be offered assistance? Reading a text thoroughly is rather time-consuming, and if we consider the ever-increasing number of publications, we can see that an instructor or researcher aiming to keep up with the latest developments in his or her field will be compelled to spend much more time reading these materials than is practically feasible.

The requirements of one’s profession and the expectations of society also lead to an increase in the number of articles, as the evaluation and potential advancement of a college instructor or academic researcher in any discipline are based upon the number of articles published and lectures held at scholarly conferences. Consequently, as the number of publications grows, the novelty content of the articles declines, and redundancies and overlaps increase.
This problem is not discipline-specific: in every field the ever-growing number of professional publications lowers the share of genuinely new information and raises the potential for overlaps and redundancies.

2. Methods

The role of research abstracts therefore becomes more and more significant: with their help the reader obtains the relevant, or (theoretically) the most important, information in a tenth of the time that reading the full text would take. Furthermore, the compilation of research abstracts also helps to eliminate redundancies. While in my opinion the significance of research abstracts is beyond dispute, no one can be expected to read through all the periodicals containing research abstracts and every excerpt in them. However, research abstracts allow one to gain more information relevant to one’s field, and another important benefit is that reading them helps one to decide whether a full reading of the given article is necessary or not.

So far we have examined the importance of research abstracts from the user’s point of view, and even after a brief glance we can safely conclude that keeping an overview of them poses a significant challenge in most disciplines. When we look at the other side of the coin, the compilation of research abstracts, we have to contend with difficulties as well. The ever-proliferating publications make the work of abstract and documentation compilers more and more arduous, as traditional methods cannot yield a comprehensive, or even approximate, coverage of the materials in the respective fields.
A greater reliance on the computer in the work of the documentalist appears to be the only solution, and more and more procedures should be elaborated to facilitate the presentation of the most important elements of articles, or possibly books. While leading scientific publications nowadays require authors to provide abstracts or summaries with their submitted articles, only very few articles are published in this format, and the abstract preparation capacity of documentation experts is limited by other professional commitments. Consequently, any research or project that helps to draw attention to, or point out, the essential information of a given scholarly text becomes indispensable. Although the automation of this task has not been fully achieved in Hungary, in part because of the intricate linguistic structures involved, the urgency of implementing such a project is not diminished. “In light of the widespread applicability and popularity of abstracts, the need to automate this process is all the more justified.” [1]

At present several programs help to distinguish essential information in English-language texts using quantitative or qualitative principles (Supplement 1), and similar help is available for researchers of German(1), French(2), Greek(3), Spanish(4), Italian(5), and Chinese(6) texts. My research project, which aims to develop an automated Hungarian-language text extraction program, attempts to remedy this situation. In the following section I would like to examine the possibilities and limitations of automating research abstract preparation and to survey the procedures and technological implementations that facilitate the compilation of research abstracts in the Hungarian language.

Content analysis

Several theoretical approaches have been put forth for content analysis, including the manual process developed in 1963 [2]. Nevertheless, despite the call of the 2001 edition of the Librarians’ Reference Book [3] for the elaboration of automatic Hungarian-language extraction programs, this goal has not yet been fully realized. Let us look at some options for automating extract preparation.

Content extraction software is usually grouped according to whether it facilitates quantitative or qualitative content analysis. However, no clear distinction can be made between the two approaches. All software capable of performing qualitative content analysis also provides quantity-based reports, and the qualitative approach is primarily indicated by the provision of memoranda and annotations. My research leads me to conclude that most programs do not go beyond statistical reporting, which helps researchers to draw conclusions or perform analyses, whereas very few applications facilitate content extraction. While my research effort belongs to the category of quantity-based content analysis, in addition to focusing on statistical data I aim to discern the essential components of particular texts.

The first step in automating abstract preparation is to ascertain how many times each word (treated as a separate unit) appears in the text. The resulting data are then ordered by frequency, which yields a statistical word profile of the text.

Frequency analysis

It was Zipf who discovered a certain regularity in the distribution of the words and structures of a text.
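
The counting and ordering step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the program discussed in this paper: the function names `word_frequencies` and `rank_frequency_table` are hypothetical, every surface form is counted as its own unit (no root identification is attempted), and the last column simply prints rank multiplied by frequency, the product that the usual statement of Zipf's regularity expects to stay roughly constant in large texts.

```python
import re
from collections import Counter


def word_frequencies(text):
    """Return (word, count) pairs ordered from most to least frequent."""
    # Lowercase the text and keep runs of letters only; the pattern is
    # Unicode-aware, so accented Hungarian letters stay inside words.
    words = re.findall(r"[^\W\d_]+", text.lower())
    return Counter(words).most_common()


def rank_frequency_table(text):
    """Return (rank, word, count, rank * count) rows for inspection.

    Words with equal counts still receive consecutive ranks here,
    which is a simplification made for this sketch.
    """
    return [
        (rank, word, count, rank * count)
        for rank, (word, count) in enumerate(word_frequencies(text), start=1)
    ]


if __name__ == "__main__":
    sample = "the cat saw the dog and the dog saw the cat run"
    for row in rank_frequency_table(sample):
        print(row)
```

On a text this short the product column is not expected to look constant; the sketch only shows how the frequency list itself may be obtained.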
Zipf examined Joyce’s Ulysses and, having arranged the words of the novel according to occurrence, asserted “that the product of the cumulative occurrence figures and the inclusive frequency values is constant.” [4] In order to perform a frequency analysis of a text, the roots of its words (types) and their different forms of occurrence (tokens) have to be identified, and the occurrences ranked by frequency. The identification of roots is a painstakingly long effort that calls for computer support, and for Hungarian texts it is the most difficult task.

One possible solution could be computer-assisted linguistics, which in Hungary started in 1960 with the introduction of mechanized translation. This first period was primarily characterized by the elaboration of the foundations of a mechanized translation algorithm from Russian to Hungarian. The second era (1967-71) is defined by the work of documentary linguistics experts, who elaborated a syntactic analysis method of their own. The third, lexicological phase (1972-78), responding to the needs of literary critics and philologists, included the development of software for language teaching and the compilation of frequency dictionaries, based on quantitative analysis, of colloquial and literary Hungarian. However, these research results were so closely associated with certain scholars that the disbanding of the Documentation Group in Budapest in 1972 all but eliminated linguistics research efforts. The fourth stage began in 1979 with an attempt to make up for the research experience lost in the 1970s. As a result of the dynamic development of language processing systems throughout Europe, Hungarian researchers elaborated the MI language, culminating in the arrival of a method for Hungarian morphological analysis.
The appearance of personal software in the 1990s led to rapid developments. The elaboration of a spelling and grammar checking system that takes the characteristics of the Hungarian language into consideration was a significant achievement of this period. In this system the composition of words, that is, the connection between the root and the suffix, is described by an algorithm. Morphologic, the firm responsible for its development, became one of the leading companies in computational linguistics after Microsoft purchased their program. The newer versions of this software examine the context and can eliminate irrelevant interpretations. [5]

Today more and more Hungarian institutions achieve a worldwide reputation through their computational linguistics efforts. For example, ILP (Inductive Logic Programming), developed in the Artificial Intelligence Research Laboratory of the Hungarian Academy of Sciences and of Szeged University, played a pioneering role in the introduction of experimental linguistic applications. While these results help in identifying the roots of Hungarian words, they have not yet been applied in the field of library science.

Following the identification of roots, the words contained in the text have to be counted for the frequency analysis, a task that can be carried out with simple programming commands. In order to perform a frequency analysis or prepare an abstract or excerpt, the significant expressions contained in the text have to be identified.

Methods

I therefore propose two solution methods. Significant words can be defined by means of Luhn’s principle. [6] “While the textual statistics approach utilizing the Luhn method has provided the most reliable results until now,” significant words can also be defined by the vocabulary or dictionary method.

1. Following Zipf’s laws, significant expressions constitute a given domain of the frequency list, which depends on the respective discipline. However, it is true in all disciplines that these expressions are found neither at the beginning nor at the end of the list. The list of significant words is obtained when the Gauss curve, defined empirically for the given discipline, is projected onto the frequency distribution function. [7] As far as Hungarian texts are concerned, few disciplines have elaborated a frequency dictionary that would allow the Gauss curve to be constructed. Although word frequency dictionaries have been developed in Hungary [8], these are not discipline-specific. Nevertheless, computational linguistics research and dictionary compilation efforts [9] promise solutions for the future.

2. According to Luhn’s theorem, non-forbidden words occurring more than three times are considered significant. According to Luhn’s notion, elaborated in 1951, the multiple occurrence of certain word pairs or triple word constructs can help in the computer-assisted identification of terms carrying relevant information. Once trivial expressions have been omitted, the weighting of adjacent words and triple word constructs helps to identify the relevant sections of the text. Consequently, non-trivial expressions containing two or more units receive a higher weight than their counterparts appearing only once in the text. Having established the weighting process, one has to define which units are to be retrieved as relevant locations: sentences or paragraphs. The automated process then starts: a numerical value is assigned to each chosen unit on the basis of the weighting, and the sentences or paragraphs with the highest values are retrieved as the result.
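
The second method can be sketched roughly as follows. This is a simplified illustration under several assumptions rather than the program described in this paper: the stop list `STOP_WORDS` is a hypothetical stand-in for the "forbidden" (trivial) words, the unit of retrieval is the sentence, and the scoring rule (one point per significant word plus one bonus point per pair of adjacent significant words) is only one possible reading of the weighting of adjacent words; triple word constructs and root identification are left out.

```python
import re
from collections import Counter

# Hypothetical stop list standing in for the "forbidden" (trivial) words;
# a real list would be language- and discipline-specific.
STOP_WORDS = {"the", "a", "an", "of", "and", "in", "is", "to", "are", "it", "by"}


def tokenize(text):
    """Lowercase the text and return its words (Unicode letters only)."""
    return re.findall(r"[^\W\d_]+", text.lower())


def significant_words(text, min_count=4):
    """Non-forbidden words occurring more than three times (count >= 4)."""
    counts = Counter(w for w in tokenize(text) if w not in STOP_WORDS)
    return {w for w, c in counts.items() if c >= min_count}


def score_sentence(sentence, significant):
    """One point per significant word, plus one per adjacent significant pair."""
    hits = [token in significant for token in tokenize(sentence)]
    adjacent_pairs = sum(1 for a, b in zip(hits, hits[1:]) if a and b)
    return sum(hits) + adjacent_pairs


def extract(text, n_sentences=2):
    """Return the n highest-scoring sentences in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    significant = significant_words(text)
    ranked = sorted(
        range(len(sentences)),
        key=lambda i: score_sentence(sentences[i], significant),
        reverse=True,
    )
    return [sentences[i] for i in sorted(ranked[:n_sentences])]
```

Calling `extract(document_text, n_sentences=3)` would return the three highest-scoring sentences in reading order. Restoring the original order is a design choice made here so that the extract stays readable; it is not something the method itself prescribes.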
In my view the combination of both approaches appears to be the best answer. Accordingly, for texts covering an area in which word frequency dictionaries are available, those dictionaries should be used, and where no such dictionary exists, Luhn’s method should be applied. The latter identifies and defines the significant expressions by analysing the frequency of the words in the given text.

It is vital to determine the structure or components of the resulting extract. While I recommend the preservation of the most important sentences, this method raises several problems as well. The references in the particular sentences have to be eliminated in order to guarantee the intelligibility of the independent extracts (if necessary, linguistic approaches should be used). It is important to focus on the intratextual position of the sentence. Attention should also be paid to potential introductory expressions [10] with which the author aimed to stress the given sentence (compiling these expressions into a dictionary is feasible if the examination of manual text extraction substantiates this hypothesis; that is why, in my view, the main features and principal dynamics of manual extraction should be examined and the results used in the automation).

From the very beginning of the research process I was compelled to search for an answer to one of the most intriguing quandaries: whether rules and guidelines can be established for human, non-automated abstract preparation. In order to answer this question I tested the summary preparation capability of students majoring in information management or library science informatics programs at various higher education institutions, of informatics experts, and of students majoring in Hungarian language and literature.
The participants of this survey were asked to prepare abstracts of professional articles focusing on various topics. The results suggest that this topic is worth researching. I chose scientific articles on different topics as the subject of the survey: one treatise followed a more interdisciplinary approach, while the other focused exclusively on library science. Moreover, in order to analyse the correlation between the subject of the article and the knowledge of the sample group, participants without an informatics background were also involved in the survey. For the abstracts of the article on library science I expected to discern a greater divergence between the outputs of the professional and lay sample groups than in the case of the interdisciplinary treatise. The group without an informatics background consisted of students majoring in Hungarian language and literature, which enabled me to examine which plays a more important role in abstract preparation: professional knowledge or procedural skills. In order to examine whether the different skills and professional levels of the sample groups lead to varying outputs in the abstract preparation process, not only students but also experts represented the Information Science-Library Science field in the survey. In order to examine whether the type of higher education institution in which a student is enrolled affects the abstract preparation process, I analysed the composition of the student sample groups.

3. Results

My results are as follows. For both articles a strong correlation can be established between the abstracts prepared by the student sample groups; compared with the professionally prepared abstracts this correlation is lower, but the results for the two articles on different subjects are similar here as well.
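
How such a correlation between groups might be computed is not spelled out in this paper, so the following is only a sketch under assumptions. It assumes that each group's abstracts are summarised as one weight per sentence of the article (for example, how many members of the group selected that sentence) and that the Pearson correlation coefficient is used. The function name `pearson` and the two weight lists are hypothetical, invented purely to show the calculation; they are not survey data.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equally long number lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length, at least 2 items")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant list")
    return covariance / (spread_x * spread_y)


if __name__ == "__main__":
    # Invented illustration values: one weight per sentence of an article,
    # e.g. how many members of each group marked that sentence.
    group_a = [9, 7, 1, 0, 5, 2]
    group_b = [8, 7, 2, 0, 6, 1]
    print(round(pearson(group_a, group_b), 3))
```

A value close to 1 would indicate that the two groups weight the sentences in nearly the same way, while a value near 0 would indicate no linear relationship between their choices.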

A significant difference supporting a correlation between the subject of the given article and the proficiency of the sample group could not be discerned. Content knowledge and non-content-specific procedural proficiency in condensing texts and identifying their essence do not result in significant differences within the student sample group.

According to the results of the analysis, significant differences can be discerned between the abstracts prepared by students and those prepared by professional experts, which further supports the hypothesis that the level of content knowledge affects the final result of the abstract preparation process. For students, content-specific knowledge does not lead to major differences: the abstracts of both articles prepared by the three student groups are very similar to each other, while differing markedly from the abstracts prepared by professional experts.

Students tend to prefer sentences located at the beginning of the text, while professional experts tend to draw the sentences of their abstracts from the whole text. Moreover, the student markings are more homogeneous than the rankings prepared by experts, who represent varying proficiency levels and differing professional backgrounds.

The identification of the crucial sentences of a text does not depend on the proficiency level of the student groups. The abstracts prepared by student groups majoring in library science and informatics programs at colleges and universities show no significant divergence. The close positive connection is indicated by a correlation value higher than 0.9 between the weighted numbers of the given sentences in the respective abstracts.

One of my priorities was the examination of the efficiency of the abstract preparation program.
Consequently, I had to develop clear guidelines concerning the appropriate output, and I hoped that several similar results would provide me with a frame of reference and comparison. The result I obtained, however, was that no two people prepare the same abstract.

4. Conclusion

In conclusion I would point out that the automation procedure presented in this article can only be used for discursive texts in which the author concentrates on one topic and uses objective statements with a consistent vocabulary, form, and structure instead of writing in a literary language. Generally, we can conclude that the Luhn method is more effective for scientific statements, publications and reports than it would be for a sophisticated literary work.

Whereas automated abstract preparation systems have not yet been implemented in Hungary, the demand for such a tool appears to be increasing, as it can be utilised in numerous areas.

Researchers and instructors can increase their familiarity with the respective professional texts. The search engines of the various web pages are continuously improved, as finding the right information is crucial. But what happens after locating the respective information, or when a user reaches a certain web page and gains access to a downloadable article or book? At this point the user is left to his or her own devices and is compelled to perform the time-consuming activity of reading through the given material.
If we take into consideration the continuously increasing number of published scholarly articles and texts, it becomes obvious that a researcher, instructor, or expert is forced to allocate more time to keeping up with the latest results of his or her discipline than is humanly possible. This is, however, not a discipline-specific problem, as the ever-increasing number of publications leads to a declining novelty value along with more frequent overlaps and redundancies. My program can provide much-needed help by enabling the researcher to peruse the content of the given article before deciding whether to read it thoroughly. Furthermore, the program helps to identify overlapping information as well.

While most integrated library systems store and provide extracts of the given documents, the limited capacity of library staff does not allow full use of this feature. Consequently, the program could be used in preserving senior theses and dissertations in an electronic format, and in expanding the ways in which they can be applied and processed.

Although more and more journals accessible online furnish extracts of their articles, there are still several publications that do not go beyond offering a table of contents from which the full text of the article can be reached. My program can contribute significantly to improving this situation.

Another potential use of the program concerns the publication activity of researchers and instructors, who are often required to prepare extracts or summaries of their own work. This, however, raises a significant theoretical dilemma, as there are several conflicting views regarding the propriety of an author preparing the summary or extract of his or her own work.
The self-prepared extracts usually do not meet the criteria of the genre, as authors tend to explain rather than summarize their own works. Consequently, if authors prepared a separate extract of their work with the help of the program and then refined it, they would not only save time but could also go a long way towards eliminating the errors of bias.

Furthermore, owing to the increasing popularity and availability of digitization projects, more and more documents become available in electronic format, which is not only encouraging but also appears to justify the need for the elaboration of an extracting program.

References

[1] Horváth T., Papp I., „Könyvtárosok kézikönyve 2” (“Librarians’ Reference Book 2”), Budapest, Osiris, (2001) p. 146.
[2] Szalai Sándor, „Gépi kivonatkészítés” (“Mechanized extract preparation”), Budapest, Országos Műszaki Könyvtár és Dokumentációs Központ, (1963) pp. 25-42.
[3] Horváth T., Papp I., „Könyvtárosok kézikönyve 2” (“Librarians’ Reference Book 2”), Budapest, Osiris, (2001) pp. 146-147.
[4] Horváth T., Papp I., „Könyvtárosok kézikönyve 1” (“Librarians’ Reference Book 1”), Budapest, Osiris, (1999) p. 107.
[5] Prószéki G., „Számítógépes nyelvészet” (“Computer Linguistics”), Budapest, (1989) pp. 489-492.
[6] Horváth T., Papp I., „Könyvtárosok kézikönyve 2” (“Librarians’ Reference Book 2”), Budapest, Osiris, (2001) p. 146.
[7] Horváth T., Papp I., „Könyvtárosok kézikönyve 1” (“Librarians’ Reference Book 1”), Budapest, Osiris, (1999) p. 56.
[8] Lengyelné Molnár Tünde, „Gyakorisági szótárak. Magyarországi helyzetkép.” (“An assessment of the availability of word frequency dictionaries in Hungary”), Könyvtári Figyelő, vol. 52, n. 1, pp. 45-58. (2006)
[9] Magyar Nemzeti Szövegtár; Szószablya szótár; Magyar Értelmező Kéziszótár gyakorisági adatai (Hungarian National Text Collection; Word Sabre Dictionary; frequency data acquired from the Hungarian Dictionary of Definitions).
[10] E.g. „Összefoglalva”; „A lényeg”; „Ne feledjük”, i.e. “in sum”; “the essence is”; “let us not forget”.

Multimedia Data:

1) TACT software, http://www.chass.utoronto.ca/tact/index.html
2) TACT software, http://www.chass.utoronto.ca/tact/index.html; T-LAB software, http://www.tlab.it
3) TACT software, http://www.chass.utoronto.ca/tact/index.html
4) TEXTPACK homepage, http://www.gesis.org/en/software/textpack/index.htm; T-LAB homepage, http://www.tlab.it
5) T-LAB homepage, http://www.tlab.it
6) CONCORDANCE homepage, http://www.concordancesoftware.co.uk/