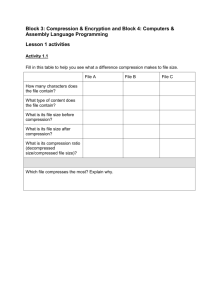

1) Intuitive Compression

advertisement

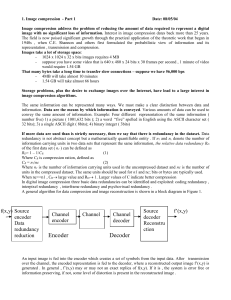

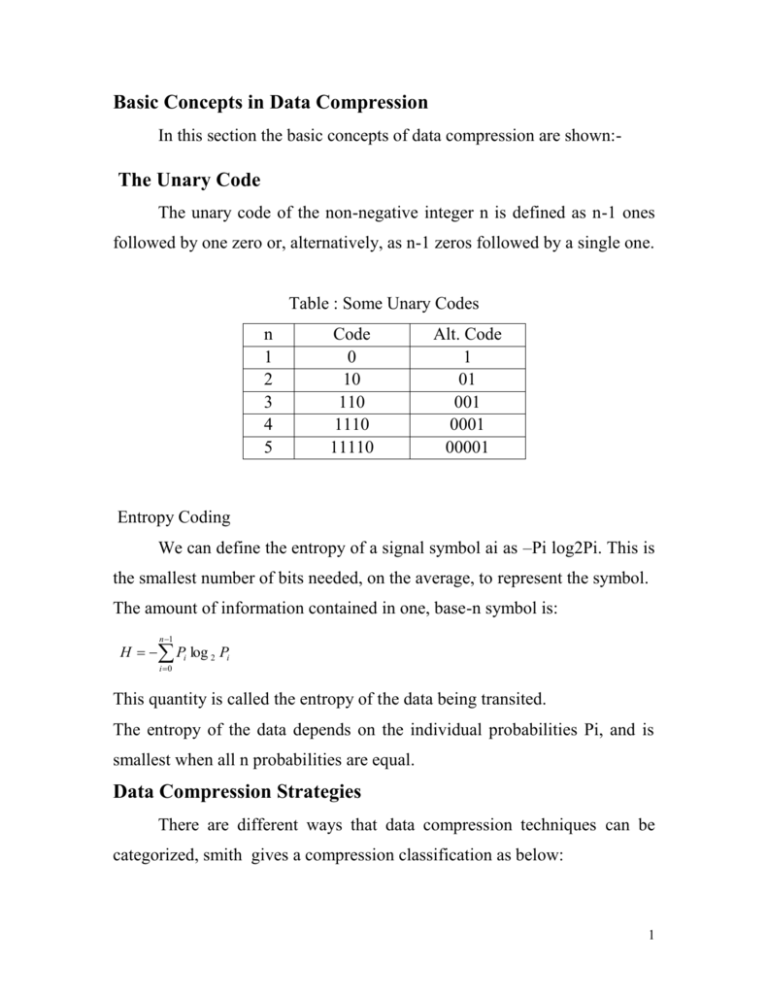

Basic Concepts in Data Compression In this section the basic concepts of data compression are shown:- The Unary Code The unary code of the non-negative integer n is defined as n-1 ones followed by one zero or, alternatively, as n-1 zeros followed by a single one. Table : Some Unary Codes n 1 2 3 4 5 Code 0 10 110 1110 11110 Alt. Code 1 01 001 0001 00001 Entropy Coding We can define the entropy of a signal symbol ai as –Pi log2Pi. This is the smallest number of bits needed, on the average, to represent the symbol. The amount of information contained in one, base-n symbol is: n 1 H Pi log 2 Pi i 0 This quantity is called the entropy of the data being transited. The entropy of the data depends on the individual probabilities Pi, and is smallest when all n probabilities are equal. Data Compression Strategies There are different ways that data compression techniques can be categorized, smith gives a compression classification as below: 1 a. Lossless or Lossy Lossless Lossy RLE JPEG Huffman MPEG Arithmetic Vector quantization Quadtree b. Fixed or variable group size Huffman Group Size Input Fixed Output Variable Arithmetic Variable Variable RLE, LZW Variable Fixed Method Most data compression programs operate by taking a group of data from the original file and compressed it in some way, and then writing the compressed group to the output file. 1.1.2 Irreversible Text Compression This method used to compress text by throwing away some information .the decompressed text will not be identical to the original, so such methods are not general purpose, they can be used in special cases. Ex1//A run of consecutive blank spaces may be replaced by a single space. Ex2// In extreme cases all text characters except letters and spaces may be thrown away, and the letters may be case flattened (converted to all lower- 2 or all uppercase). This will leave just 27 symbols, so a symbol can be encoded in 5 instead of the usual 8 bits. The compression ratio is 5/8 = .625, not bad, but the loss may normally be too great. Ad Hoc Text Compression If the text contains many spaces but they are not clustered, they may be removed and their positions indicated by a bit-string that contains a 0 for each text character that is not a space and a 1 for each space. Thus, the text Here are some ideas, is encoded as the bit-string “0000100010000100000” followed by the text Herearesomeideas. If the number of blank spaces is small, the bit-string will be sparse. Run-Length Encoding The idea behind this approach to data compression is this: If a data item d occurs n consecutive times in the input stream, replace the n occurrences with the single pair nd. The n consecutive occurrences of a data item are called a run length of n, and this approach to data compression is called run length encoding or RLE. We apply this idea first to text compression then to image compression. a) RLE Text Compression Just replacing “2._all_is_too_well” with “2._a2_is_t2_we2” will not work. Clearly, the decompressor should have a way to tell that the first “2” is part of the text while the others are repetition factors for the letters “o” and “l”. Even the string “2._a2l_is t2o_we2l” does not solve this problem (and 3 also does not provide any compression). One way to solve this problem is to precede each repetition with a special escape character. If we use the character “@” as the escape character, then the string “2._a@2l_is_t@2o we@2l” can be decompressed unambiguously. However, it is longer than the original string, since it replaces two consecutive letters with three characters. We have to adopt the convention that only three or more repetitions of the same character will be replaced with a repetition factor. Figure 1.3a is a flowchart for such a simple run-length text compressor. After reading the first character, the count is 1 and the character is saved. Subsequent characters are compared with the one already saved and, if they are identical to it, the repeat-count is incremented. When a different character is read, the operation depends on the value of the repeat count. If it is small, the saved character is written on the compressed file and the newly read character is saved. Otherwise, an “@” is written, followed by the repeat-count and the saved character. Decompression is also straightforward. It is shown in Figure 1.3b. When an “@” is read, the repetition count n and the actual character are immediately read, and the character is written n times on the output stream. 4 5 6