
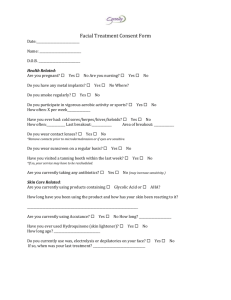

Rapid Facial Expression Classification Using Artificial Neural Networks

Nathan Cantelmo
Northwestern University
2240 Campus Drive, Rm. 2-434
Evanston, IL, 60208
1-847-467-4682
n-cantelmo@northwestern.edu

ABSTRACT

Facial expression classification is a classic example of a problem that is relatively easy for humans to solve yet difficult for computers. In this paper, the author describes an artificial neural network (ANN) approach to the problem of rapid facial expression classification. Building on prior work in the field, the present approach was able to achieve a 73.3% mean classification accuracy rate (across five trained nets) when compared with human annotators on an independent (never trained upon) testing set containing 30 grayscale images from the JAFFE facial expression data set (http://www.kasrl.org/jaffe.html).

Categories and Subject Descriptors

I.4.8 [Image Processing and Computer Vision]: Scene Analysis – object recognition, shape.

General Terms

Algorithms, Performance, Design, Reliability, Theory.

Keywords

ANNs, Artificial Neural Networks, Expression Classification, Computer Vision, Image Processing, Scene Analysis, Facial Recognition.

1. INTRODUCTION

From a social psychology standpoint, the ability of humans to correctly classify faces and facial expressions is a critical skill for effective face-to-face interactions. Recent work has indicated that the process itself is both rapid and automatic, and that people will even draw inferences about a person’s personality traits from only a short (100ms) exposure to a face [11].

But if the ability to recognize certain facial expressions is an important part of being human, it is an absolutely crucial part of being human-like. For example, consider a situation in which a human is surprised to see a human-like virtual agent appear on her computer screen.
If the agent is able to detect the human’s surprise, it will be better able to plan an appropriate greeting that explains its presence. On the other hand, if the agent can see that the computer user is visibly angry or annoyed, it may instead be best served by avoiding a face-to-face interaction altogether.

However, facial expression classification is a classic example of a problem that is relatively easy for humans to solve yet difficult for computers. Indeed, a number of factors make the implementation of an expression-recognition system a non-trivial task, including differences in facial features, lighting conditions, and poses, as well as partial occlusion and expression ambiguities [8], [12].

Earlier literature in the machine learning community speculated that the facial expression recognition process depends on a number of factors, including familiarity with the target face, experience with various expressions, attention paid to the face, and other non-visual cues [8]. More recent surveys, however, have indicated that two distinct approaches are commonly used, with varying levels of success, to address the problem. The first class of solutions involves geometric-based templates, which are applied to faces in order to identify common features. The second class of solutions focuses on deriving a stochastic model for facial expression recognition, often using multi-layer perceptron models [12].

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Conference’04, Month 1–2, 2004, City, State, Country. Copyright 2004 ACM 1-58113-000-0/00/0004…$5.00.

In this paper, the author describes an artificial neural network (ANN) approach to the problem of facial expression recognition that is capable of rapidly classifying images into one of six basic expression categories: happiness, sadness, surprise, anger, disgust, and fear. These categories are identical to those used by Zhang in his work on ANN-based expression classification [12], and are a reflection of the six primary expressions identified by Ekman and Friesen [2]. Further, and perhaps more importantly, nearly all automatic expression classification systems used today rely on these six categories [6].

In order to test the proposed approach, the author developed an ANN similar to the one described in Mitchell, chapter four [5]. The completed classifier used a multi-layer perceptron model with a single hidden layer. For the purposes of this study, two versions of the system were trained and tested: one with five hidden units and a second with ten, both in a single layer. As in the Mitchell text, the author used the backpropagation algorithm (with a momentum coefficient of 0.3) to repeatedly cross-train the system over a portion of the data set.

2. METHOD

2.1 Training & Testing Data

In order to train and test the classification system, the author used images from the Japanese Female Facial Expression (JAFFE) database, located at (http://www.kasrl.org/jaffe.html) [3]. This particular dataset is free for academic use and includes 213 grayscale images, each 256x256px in size. The dataset includes facial images of ten different female models, each assuming seven distinct poses (six basic expressions and one neutral pose). For each model/pose combination, there are (on average) three different images. This results in around 21 images per model or around 30 images per expression. Some resized sample images (with expression labels) from the JAFFE dataset are displayed below in Figure 1.

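The per-model and per-expression averages quoted for the JAFFE dataset follow directly from its totals. A quick check, using only the figures given in section 2.1 (the variable names are illustrative and do not come from the paper):

```python
# Figures taken from section 2.1 of the paper.
TOTAL_IMAGES = 213
NUM_MODELS = 10
NUM_POSES = 7  # six basic expressions plus one neutral pose

images_per_model = TOTAL_IMAGES / NUM_MODELS
images_per_pose = TOTAL_IMAGES / NUM_POSES
images_per_combination = TOTAL_IMAGES / (NUM_MODELS * NUM_POSES)

print(f"per model: {images_per_model:.1f}")                            # per model: 21.3
print(f"per pose: {images_per_pose:.1f}")                              # per pose: 30.4
print(f"per model/pose combination: {images_per_combination:.2f}")     # 3.04
```

The averages are not whole numbers because the 213 images are not spread perfectly evenly over the 70 model/pose combinations.
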
Figure 1: Sample Images from the JAFFE Database (panels labeled Happiness, Sadness, Anger, Surprise, Disgust, Fear)

The JAFFE dataset has previously been used in similar work on automatic expression recognition [3], [12], which makes it especially appealing for this study. In particular, Zhang used the same images with an ANN approach that relied upon hand-placed facial geometry points and Gabor wavelet coefficients as inputs to a multi-layer ANN [12].

Also relevant to the present study, Lyons et al. obtained perceived expression recognition values from 60 female Japanese students on each of the 213 images in the JAFFE dataset [3]. For every image, semantic ratings for all six basic facial expressions [2] were collected using a five-point scale, with higher values indicating more of a particular expression. The six mean annotated values for each image were then calculated across all 60 students and reported. Notably, no values for expression neutrality were collected during this process.

However, as the goal of the present work was to approximate the human expression recognition process, only the hand-annotated expression values were useful for training and evaluating the system (as opposed to the intended expression poses). Thus, for the purposes of this experiment (and unlike in the Zhang study, which did not rely on hand-coded expression values), all 30 images containing neutral poses were discarded.

2.2 Implementation

As mentioned, the current work involves the use of an artificial neural network (ANN) for the task of facial expression classification. The general implementation of this system followed from the example described in chapter four of Mitchell’s Machine Learning text [5]. The specifics for each portion of the system are described below.

2.2.1 System Parameters

For this work, a consistent set of system parameters was selected and maintained throughout all stages of the evaluation. Most importantly, a training rate of 0.3 was used for all training tasks. This value is argued for by Mitchell in chapter four of his text [5].

All versions of the system used a two-layer, feed-forward model with backpropagation through both of the layers. However, the size of the hidden layer was set to either five or ten units, in order to determine whether five units are sufficient to achieve the same accuracy level as a system with ten units. Results from both of these configurations are described in section 3.

2.2.2 Input Layer

Each input to the ANN used for this task was the average of the intensity values over a 32x32 pixel square. This method of image representation is identical to the approach described in chapter four of the Mitchell text [5]. The choice of this specific multi-pixel segment size is supported by some older research into the minimum resolution needed to automatically detect human faces [9].

2.2.3 Hidden Units

As described in section 2.2.1, the number of hidden units was varied across two conditions. In condition 1, ten hidden units were used. In condition 2, five hidden units were used.

2.2.4 Output Units

The output units for the ANN were determined by the problem definition. For each of the six possible facial expression categories, one output unit was required. Each of these six units produced real values ranging from 0.0 to 1.0, which (after scaling) were comparable with the perceived facial expression values reported by the human evaluators.

2.3 Training

In order to produce generalized results, 30 randomly-selected images from the training set were moved to a separate location and only ever used for evaluative purposes. Thus, the ANN was trained using the 150 remaining images from the JAFFE dataset. Each of the two system configurations under examination was trained five times over 20,000 epochs, and the mean of each training run was calculated and recorded after every 50 epochs.
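
The network shape described in sections 2.2.2 through 2.2.4 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's implementation: it assumes the 32x32 squares do not overlap (giving 8 x 8 = 64 inputs for a 256x256 image), that intensities are scaled to the range 0 to 1, that every unit is a sigmoid, and that weights start as small random values. All function names are hypothetical.

```python
import math
import random

BLOCK = 32         # side of each averaging square (section 2.2.2)
IMAGE_SIDE = 256   # JAFFE image side in pixels (section 2.1)
NUM_OUTPUTS = 6    # one output unit per expression category (section 2.2.4)


def block_average(image, block=BLOCK):
    """Average intensities over non-overlapping block x block squares.

    `image` is a square list of rows holding intensities in [0, 255].
    Non-overlapping tiling and scaling to [0, 1] are assumptions.
    """
    side = len(image)
    features = []
    for top in range(0, side, block):
        for left in range(0, side, block):
            total = sum(
                image[r][c]
                for r in range(top, top + block)
                for c in range(left, left + block)
            )
            features.append(total / (block * block * 255.0))
    return features


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_layer(num_in, num_out, rng):
    """One row per unit: a bias weight followed by num_in input weights."""
    return [[rng.uniform(-0.05, 0.05) for _ in range(num_in + 1)]
            for _ in range(num_out)]


def layer_forward(weights, inputs):
    """Apply one fully connected sigmoid layer to a list of inputs."""
    return [sigmoid(row[0] + sum(w * x for w, x in zip(row[1:], inputs)))
            for row in weights]


def forward(hidden_w, output_w, features):
    """Two-layer feed-forward pass: inputs -> hidden units -> six outputs."""
    return layer_forward(output_w, layer_forward(hidden_w, features))


rng = random.Random(0)
# Synthetic stand-in for a grayscale image; real use would load a JAFFE file.
image = [[(r + c) % 256 for c in range(IMAGE_SIDE)] for r in range(IMAGE_SIDE)]
features = block_average(image)
hidden_w = init_layer(len(features), 10, rng)   # configuration 1: ten hidden units
output_w = init_layer(10, NUM_OUTPUTS, rng)
outputs = forward(hidden_w, output_w, features)
print(len(features), len(outputs))
```

Switching the hidden layer size from 10 to 5 in the two `init_layer` calls gives the second configuration. The sketch covers only the forward pass; training by backpropagation with momentum is omitted.
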
The repeated training runs served to avoid producing spurious training results due to a convenient initial randomization of the weights.

At each epoch, the 150 training images were randomly divided into two groups. The first group, containing roughly 30% of the 150 images, was used to train the ANN. The second group, containing the other 70% of the images, was used to evaluate the updated system.

The training algorithm used was the common backpropagation approach described in Mitchell, chapter four [5]. The only variation from the base algorithm was the use of a momentum coefficient (with a value of 0.3) during the weight update process [5].

2.4 Evaluation

As in other related work [12], the current system was evaluated using a relatively strict criterion for success. Specifically, the ANN was considered to have made a correct classification if and only if its highest estimated expression value matched the highest mean expression value made by the human annotators. No credit whatsoever was given for classification results that were close to correct, and no additional consideration was given to particularly hard cases (wherein the two highest-rated expressions only differed slightly).

Arguably, using such a strict criterion may seem a bit unreasonable, especially given the inherent ambiguity in many facial expressions. Indeed, a more sensible approach might have involved some element of confidence, or relative error when compared with the human expression evaluations. However, the current approach was chosen in order to maintain consistency with related work, and thus must suffice for the present body of work.

3. RESULTS

Evaluation results for both of the system configurations are described below.

3.1 Configuration 1: N=10 Hidden Units

Results from the first system configuration are displayed in Figure 2 below. As shown, the present implementation was able to achieve a (generalized) mean classification accuracy of around 72% over the independent (testing) dataset when N=10 hidden perceptron units were used.

Notably, the classification rate for the testing data stabilized after about 5000 epochs and did not degrade, even as the accuracy over the training set continued to rise. This general trend held throughout the 20,000 epochs observed, indicating that overfitting was not a significant problem in this instance.

Figure 2: Classification Accuracy with N=10 Hidden Units

3.2 Configuration 2: N=5 Hidden Units

Results from the second system configuration are displayed in Figure 3 below. In this second training configuration a much smaller hidden perceptron layer (N=5) was used. As shown, the mean classification accuracy for the system suffered as a result, stabilizing at around 65% over the independent (testing) dataset.

Figure 3: Classification Accuracy with N=5 Hidden Units

4. DISCUSSION

As described in the preceding section, configuration 1 was clearly shown to be preferable to configuration 2 for the task at hand, given the current approach. On the whole, the generalized classification results for configuration 1 were exactly in line with what other researchers have achieved using similar methods. Zhang, for instance, achieved a near-identical outcome when training his system on a set of geometric points hand-placed on a facial expression image [12].

Unfortunately, while interesting, the generalized results for configuration 2 were ultimately less useful than the results for configuration 1. However, they do provide clear evidence in support of using larger hidden layers for ANN-based expression classification.

As mentioned in section 2.4, the meaningfulness of the reported classification accuracies is somewhat limited by the fact that they do not account for the perceived ambiguity present in many facial expression instances. Thus, future research in this area should take care to consider the potential applications when devising evaluation schemes.

5. CONCLUSION

In this paper, the author has described a stochastic solution to the problem of facial expression recognition using an artificial neural network. After motivating the problem, two configurations of the system were examined, each differing in the number of hidden units used (five vs. ten). After training and testing both configurations on a set of 180 grayscale images, the ten-unit configuration was shown to be the more effective classifier. The final system was able to achieve a 73.3% mean classification success rate when compared with the classifications made by a group of 60 human annotators.

6. ACKNOWLEDGEMENTS

My thanks to ACM SIGCHI for allowing me to modify the templates they had developed. Also, I’d like to thank Francisco Iacobelli, Jonathan Sorg, and Bryan Pardo for their invaluable advice on neural network implementation, training, and evaluation techniques.

7. REFERENCES

[1] Chellappa, R., Wilson, C. L., & Sirohey, S. (1995). Human and machine recognition of faces: A survey. Proceedings of the IEEE, 83, 705-740.

[2] Ekman, P., & Friesen, W. V. (1977). Manual for the Facial Action Coding System. Palo Alto, CA: Consulting Psychologists Press.

[3] Lyons, M., Akamatsu, S., Kamachi, M., & Gyoba, J. (1998). Coding facial expressions with Gabor wavelets. Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, IEEE Computer Society, 200-205.

[4] Minsky, M., & Papert, S. (1969). Perceptrons. Cambridge, MA: MIT Press.

[5] Mitchell, T. M. (1997). Machine Learning. New York, NY: McGraw-Hill Higher Education.

[6] Pantic, M., & Rothkrantz, J. M. (2000). Automatic analysis of facial expressions: The state of the art. IEEE Transactions on Pattern Analysis and Machine Intelligence, 22(12), 1424-1445.

[7] Rosenblatt, F. (1959). The perceptron: a probabilistic model for information storage and organization in the brain. Psychological Review, 65, 386-408.

[8] Rumelhart, D., Widrow, B., & Lehr, M. (1994). The basic ideas in neural networks. Communications of the ACM, 37(3), 87-92.

[9] Samal, A. (1991). Minimum resolution for human face detection and identification. SPIE Human Vision, Visual Processing, and Digital Display II, 1453, 81-89.

[10] Samal, A., & Iyengar, P. (1992). Automatic recognition and analysis of human faces and facial expressions: A survey. Pattern Recognition, 25, 65-77.

[11] Willis, J., & Todorov, A. (2006). First impressions: Making up your mind after a 100-ms exposure to a face. Psychological Science, 17, 592-598.

[12] Zhang, Z. (1999). Feature-based facial expression recognition: Sensitivity analysis and experiments with a multilayer perceptron. International Journal of Pattern Recognition and Artificial Intelligence, 13(6), 893-911.