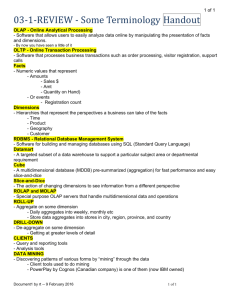

Basic Approaches Of Integration Between Data Warehouse And Data Mining

Ina Naydenova, Kalinka Kaloyanova

“St. Kliment Ohridski” University of Sofia, Faculty of Mathematics and Informatics, Sofia 1164, Bulgaria
ina@fmi.uni-sofia.bg, kkaloyanova@fmi.uni-sofia.bg

Abstract. Data Warehouse and OLAP are essential elements of decision support, which has increasingly become a focus of the database industry. Data Mining and Knowledge Discovery, in turn, is one of the fastest-growing computer science fields. Traditional query and reporting tools are used to describe and extract what is in a database: the user forms a hypothesis about a relationship and verifies it with a series of queries. Data mining can be used to generate a hypothesis. There are, however, other possibilities for collaboration between Data Warehouse and Data Mining technologies. We discuss several approaches to such collaboration at different architecture levels.

1 Introduction

The role of information in creating competitive advantage for businesses and other enterprises is now a business axiom: whoever controls critical information can leverage that knowledge for profitability. The difficulties of dealing with the mountains of data produced in businesses brought about the concept of information architecture, which has spawned projects such as Data Warehousing (DW) [1]. The goal of a data warehouse system is to provide the analyst with an integrated and consistent view of all enterprise data that are relevant to the analysis tasks.

On the other hand, Data Mining and Knowledge Discovery is one of the fastest-growing computer science fields. Its popularity is caused by an increased demand for tools that help with the analysis and understanding of huge amounts of data [3].

The question arises: “Where is the intersection of these powerful technologies of the day?” The paper provides an overview of three basic approaches to integration and interaction between them:

1. Integration on the front-end level: combining On-Line Analytical Processing (OLAP) and data mining tools into a homogeneous graphical user interface;
2. Integration on the database level: adding data mining components directly into the DBMS;
3. Interaction on the back-end level: the use of data mining techniques during the data warehouse design process.

We first briefly present the fundamentals of data warehousing, OLAP and data mining. Then we consider: (1) the main characteristics of On-Line Analytical Mining, which integrates OLAP with data mining knowledge; (2) the trend among manufacturers of popular relational DBMSs, such as Oracle, IBM and Microsoft, to include data mining engines in the DBMS; (3) the application of existing data mining techniques in support of the data warehouse building process.

2 Data Warehouse, OLAP and Data Mining

DW and OLAP are essential elements of decision support. They are complementary technologies: a DW stores and manages data, while OLAP transforms the data into possibly strategic information. Decision support usually requires consolidating data from many heterogeneous sources; these might include external sources in addition to several operational databases. The different sources might contain data of varying quality, or use inconsistent representations, codes and formats, which have to be reconciled. Since data warehouses contain consolidated data, perhaps from several operational databases, over potentially long periods of time, they tend to be orders of magnitude larger than operational databases. In data warehouses, historical and summarized data is more important than detailed data.

Many organizations want to implement an integrated enterprise warehouse that collects information about all subjects spanning the whole organization. Some organizations are settling for data marts instead, which are departmental subsets focused on selected subjects (e.g., a marketing data mart or a personnel data mart).
A popular conceptual model that influences the front-end tools, database design, and the query engines for OLAP is the multidimensional view of data in the warehouse. In a multidimensional data model, there is a set of numeric measures that are the objects of analysis. Examples of such measures are sales, revenue, and profit. Each of the numeric measures depends on a set of dimensions, which provide the context for the measure. For example, the dimensions associated with a sale amount can be the store, the product, and the date when the sale was made. The dimensions together are assumed to uniquely determine the measure. Thus, the multidimensional data model views a measure as a value in the multidimensional space of dimensions. Often, dimensions are hierarchical: time of sale may be organized as a day-month-quarter-year hierarchy, product as a product-category-industry hierarchy [4].

Typical OLAP operations, also called cubing operations, include roll-up (increasing the level of aggregation) and drill-down (decreasing the level of aggregation or increasing detail) along one or more dimension hierarchies, slicing (the extraction from a data cube of summarized data for a given dimension value), dicing (the extraction of a “sub-cube”, or intersection of several slices), and pivoting (rotating the axes of a data cube so that one may examine the cube from different angles).

Another technology that can be used for querying the warehouse is Data Mining. Knowledge Discovery (KD) is a nontrivial process of identifying valid, novel, potentially useful, and ultimately understandable patterns from large collections of data [6]. One of the KD steps is Data Mining (DM). DM is the step concerned with the actual extraction of knowledge from data, in contrast to the KD process as a whole, which is also concerned with many other things such as understanding and preparing the data, verifying the mining results, etc.
In practice, however, people use the terms DM and KD synonymously.

[Fig. 1. Cube presentation of data in the multidimensional model. Axes: STORE (Sofia, Toronto, ALL), PRODUCT (food, books, sports, clothing, ALL), TIME (2001, 2002, 2003, 2004, ALL). Example cells: (ALL, ALL, ALL), (ALL, books, Sofia), (ALL, clothing, ALL).]

The knowledge discovery goals are defined by the intended use of the system. We can distinguish two types of goals: verification and discovery. With verification, the system is limited to verifying the user’s hypothesis. That kind of functionality is mainly supported by OLAP technology. With discovery, the system autonomously finds new patterns. The two high-level primary discovery goals of data mining in practice tend to be prediction and description. Prediction involves using some variables or fields in the database to predict unknown or future values of other variables of interest, while description focuses on finding human-interpretable patterns describing the data.

Data mining involves fitting models to, or determining patterns from, observed data. Most data mining methods are based on tried and tested techniques from machine learning, pattern recognition, and statistics: classification, clustering, regression, and so on (there is an array of different algorithms under each of these headings) [5]. We briefly introduce only those that are related to the rest of the paper.

Classification is learning a function that maps (classifies) a data item into one of several predefined classes. Classification-type problems are generally those where one attempts to predict values of a categorical dependent variable (class, group membership, etc.) from one or more continuous and/or categorical predictor variables.

Regression is learning a function that maps a data item to a real-valued prediction variable. Regression-type problems are generally those where one attempts to predict the values of a continuous variable from one or more continuous and/or categorical predictor variables.
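
The difference between the two tasks can be illustrated with a minimal Python sketch. It is not taken from the paper: the function names, the single-threshold classifier and the toy data (advertising spend, profit class, revenue) are assumptions chosen only to show a categorical target next to a real-valued one.

```python
from statistics import mean


def fit_threshold_classifier(values, labels):
    """Classification: learn a cut-off on one numeric predictor that best
    separates two predefined classes ("low" / "high")."""
    best_cut, best_correct = None, -1
    for cut in sorted(set(values)):
        # Count how many training items this cut-off classifies correctly.
        correct = sum(
            ("high" if v >= cut else "low") == lab
            for v, lab in zip(values, labels)
        )
        if correct > best_correct:
            best_cut, best_correct = cut, correct
    return lambda v: "high" if v >= best_cut else "low"


def fit_linear_regression(xs, ys):
    """Regression: least-squares line y = a + b * x for a real-valued target."""
    x_bar, y_bar = mean(xs), mean(ys)
    b = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    a = y_bar - b * x_bar
    return lambda x: a + b * x


# Hypothetical training data (not from the paper).
spend = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
profit_class = ["low", "low", "low", "high", "high", "high"]  # categorical
revenue = [12.0, 14.0, 16.0, 18.0, 20.0, 22.0]                # continuous

classify = fit_threshold_classifier(spend, profit_class)
predict_revenue = fit_linear_regression(spend, revenue)
```

Here `classify` returns one of the predefined classes, whereas `predict_revenue` returns a number; real mining tools use far richer model families for both tasks.
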
There are a large number of methods that an analyst can choose from when analyzing classification or regression problems. Tree classification and regression techniques, which produce predictions based on a few logical if-then conditions, are a frequently used approach [16].

Clustering is a common descriptive task where one seeks to identify a finite set of categories or clusters to describe the data. The categories can be mutually exclusive and exhaustive, or consist of a richer representation, such as hierarchical or overlapping categories. Closely related to clustering is the task of probability density estimation, which consists of techniques for estimating from data the joint multivariate probability density function of all the variables.

Dependency modeling consists of finding a model that describes significant dependencies between variables. Dependency models exist at two levels: the structural level of the model specifies (often in graphic form) which variables are locally dependent on each other, and the quantitative level of the model specifies the strengths of the dependencies using some numeric scale (e.g., probabilistic dependency networks).

Change and deviation detection focuses on discovering the most significant changes in the data from previously measured or normative values.

Summarization involves methods for finding a compact description for a subset of data, e.g., the derivation of summary or association rules and the use of multivariate visualization techniques [6].

An association rule is an expression of the form A → B, where A and B are sets of items. Normally, the result of data mining algorithms for finding association rules consists of all rules that exceed a pre-defined minimum support (coverage) and have high confidence (accuracy). Association rule discovery techniques are generally applied to databases of transactions where each transaction consists of a set of items.
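
As an illustration of the two measures, the following Python sketch computes the support and confidence of a rule A → B over a small set of transactions. The function names and the example transactions are hypothetical, and the sketch does not implement a full rule-mining algorithm (it assumes the antecedent occurs in at least one transaction).

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Of the transactions containing A, the fraction that also contain B."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)


# Hypothetical market-basket data: each transaction is a set of items.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

# Rule {bread} -> {milk}
rule_support = support(transactions, {"bread", "milk"})          # 2 of 4
rule_confidence = confidence(transactions, {"bread"}, {"milk"})  # 2 of 3

# A rule is reported only if it clears both user-defined thresholds.
keep_rule = rule_support >= 0.4 and rule_confidence >= 0.6
```
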
In such a framework the problem is to discover all associations and correlations among data items where the presence of one set of items in a transaction implies (with a certain degree of confidence) the presence of other items. The problem of discovering sequential patterns is to find inter-transaction patterns such that the presence of a set of items is followed by another item in the time-stamp-ordered transaction set.

[Fig. 2. Typical data warehousing architecture and points of integration. Components shown: external sources and operational databases; extract, transform, load and refresh; the data warehouse and data marts, with a metadata repository and monitoring and administration; OLAP servers serving the analysis, query/reporting and data mining tools. The numbers 1, 2 and 3 mark the points of integration.]

3 Integration of OLAP with Data Mining

Most data mining tools need to work on integrated, consistent, and cleaned data, which requires costly data cleaning, data transformation, and data integration as preprocessing steps. A data warehouse constructed by such preprocessing serves as a valuable source of high-quality data for OLAP as well as for data mining. Effective data mining needs exploratory data analysis. A user will often want to traverse a database, select portions of relevant data, analyze them at different granularities, and present knowledge and results in different forms [8].

By integrating OLAP and data mining, OLAP mining (also called On-Line Analytical Mining) facilitates flexible mining of interesting knowledge in data cubes, because data mining can be performed in the multi-dimensional and multi-level abstraction space of a data cube. Cubing and mining functions can be interleaved and integrated to make data mining a highly interactive and interesting process. The desired OLAP mining functions are:

Cubing then mining: With the availability of data cubes and cubing operations, mining can be performed on any layer and any portion of a data cube.
This means that one can first perform cubing operations to select the portion of the data and set the abstraction layer (granularity level) before a data mining process starts. For example, one may first tailor a cube to a particular subset, such as “year = 2004”, and to a desired level, such as the “city level” for the dimension “store”, and then execute a prediction mining module.

Mining then cubing: This means that data mining can first be performed on a data cube, and then particular mining results can be analyzed further by cubing operations. For example, one may first perform classification on a “market” data cube according to a particular dimension or measure, such as profit made. Then, for each obtained class, such as the high-profit class, cubing operations can be performed, e.g., drilling down to detailed levels to examine its characteristics.

Cubing while mining: A flexible way to integrate mining and cubing operations is to perform similar mining operations at multiple granularities by initiating cubing operations during mining. By doing so, the same data mining operations can be performed on different portions of a cube or at different abstraction levels. For example, when mining association rules in a “market” data cube, one can drill down along a dimension, such as “time”, to find new association rules at a lower level of abstraction, such as from “year” to “month”.

Backtracking: To facilitate interactive mining, one should allow a mining process to backtrack one or a few steps, or backtrack to a preset marker, and then explore alternative mining paths. For example, one may classify market data according to profit made and then drill down along some dimension(s), such as store, to see its characteristics. Alternatively, one may like to classify the data according to another measure, cost of product, and then do the same (characterization).
This requires the miner to jump back a few steps or backtrack to some previously marked point, and redo the classification. Such flexible traversal along the cube during mining is a highly desired feature for users.

Comparative mining: A flexible data miner should allow comparative data mining, that is, the comparison of alternative data mining processes. For example, a data miner may contain several cluster analysis algorithms. One may like to compare side by side the clustering quality of different algorithms, and even examine them while performing cubing operations, such as when drilling down to detailed abstraction layers.

Other combinations are possible in OLAP mining. For example, one can perform “mining then mining”, such as first performing classification on a set of data and then finding association patterns for each class. In a large warehouse containing a huge amount of data, it is crucial to provide flexibility in data mining so that a user may traverse a data cube, select the mining space and the desired levels of abstraction, and test different mining modules and alternative mining algorithms [7].

4 An Integration of RDBMS with Data Mining

Traditionally, data mining tasks are performed assuming that the data is in memory. In the last decade, there has been an increased focus on scalability, but research work in the community has focused on scaling analysis over data that is stored in the file system. This tradition of performing data mining outside the DBMS has led to a situation where starting a data mining project requires its own separate environment. Data is dumped or sampled out of the database, and then a series of special-purpose programs is used for data preparation. This typically results in the familiar large trail of droppings in the file system, creating an entirely new data management problem outside the database.
Once the data is adequately prepared, the mining algorithms are used to generate data mining models. However, past work in data mining has barely addressed the problem of how to deploy models: once a model is generated, how to store, maintain, and refresh it as data in the warehouse is updated, how to programmatically use the model to make predictions on other data sets, and how to browse models. Over the life cycle of an enterprise application, such deployment and management of models remains one of the most important tasks [9]. The objective of integration on the database level is to alleviate problems in the deployment of models and to ease data preparation by working directly on relational data.

The business community is already trying to integrate the KD process. The biggest DBMS vendors, such as IBM, Microsoft and Oracle, have integrated some DM tools into their commercial systems. Their goal is to make the use of DM methods easier, in particular for users of their DBMS products [3].

The IBM Solution

Data mining is a key component of IBM's Information Warehouse framework. The core of IBM's data mining technology is the mining kernel. It contains modules that perform data mining functions such as classification, clustering, association, and sequential patterns [14]. IBM’s DM tool, called Intelligent Miner, is a feature of the DB2 Universal Database Data Warehouse Editions and consists of three components: Intelligent Miner Modeling, Intelligent Miner Visualization, and Intelligent Miner Scoring. Intelligent Miner Modeling is an extension of the DB2 database that enables the development of customized mining applications. This modeling tool supports statistical functions and algorithms, including clustering, tree classification, regression, and association algorithms. When new relationships are discovered, Intelligent Miner Scoring lets you apply them to new data in real time.
By isolating both the mining model and the scoring logic (scoring is the process of predicting outcomes) from the application, you can provide continuous model improvement as trends change or additional information becomes available, without disruption to the application. Data mining model analysis is available via Intelligent Miner Visualization, a Java-based results browser. Another product, DB2 Intelligent Miner for Data, provides a suite of tools that form a single framework for database mining. This framework supports the iterative process, offering data processing, statistical analysis, and results visualization to complement a variety of mining methods [15].

The Microsoft Solution

SQL Server 2000 includes Analysis Services with data mining technology, which examines data in relational data warehouses as well as in OLAP cubes to uncover areas of interest to business decision makers and other analysts. Data mining models are exposed to calling applications and reporting tools through a new OLE DB for Data Mining provider. OLE DB DM is an extension of the SQL query language that allows users to train and test DM models. It has only two notions beyond the traditional OLE DB definition: cases and models. Input data is in the form of a set of cases (a caseset). A data mining model is treated as if it were a special type of “table”: we associate a caseset with a model and additional meta-information while defining the model; when data is inserted into the data mining model, a mining algorithm processes it, and the resulting abstraction is saved instead of the data itself. Once a model is populated, it can be used for prediction, or its content can be browsed for reporting.

OLE DB DM is in fact independent of any particular provider or software and is meant to establish a uniform API. The Microsoft SQL Server database management system exposes OLE DB DM.
The “core” relational engine exposes the traditional OLE DB interface. However, the analysis server component exposes an OLE DB DM interface (along with OLE DB for OLAP) so that data mining solutions may be built on top of the analysis server [9]. OLAP Data Mining allows architects to reuse the results from data mining and incorporate the information into an OLAP cube dimension for further analysis. In addition, the Decision Support Objects (DSO) library has been extended to accommodate direct programmatic access to the data mining functionality present within OLAP services [13].

Data mining algorithms are the foundation from which mining models are created. Microsoft’s SQL Server 2000 incorporates two algorithms: classification trees and clustering. The new SQL Server 2005 (Beta) offers considerably more: classification (decision) trees, clustering, Naïve Bayes (a classification algorithm), time series (a sequential patterns algorithm), association, and neural networks (which create classification and regression mining models by constructing a multilayer network) [12].

The Oracle Solution

Oracle has integrated OLAP and data mining capabilities directly into the database server. All the data mining functionality is embedded in the Oracle9i Database, so the data, data preparation, data mining, and scoring all remain within the database. Oracle9i Data Mining (ODM) has two main components: the Data Mining API and the Data Mining Server. Application developers access Oracle Data Mining's functionality through a Java-based API. Programmatic control of all data mining functions enables automation of data preparation, model-building, and model-scoring operations [11]. The Data Mining Server is the server-side, in-database component that performs the data mining operations within the 9i database, and thus benefits from its availability and scalability.
It also provides a metadata repository consisting of mining input objects and result objects [10]. ODM supports tree classification, clustering, associations and attribute importance (in fact only Naïve Bayes). Associated analysis for preparatory data exploration and model evaluation is extended by Oracle's statistical functions and OLAP capabilities. Because these also operate within the database, they can all be incorporated into a seamless application that shares database objects. Models are stored in the database and are directly accessible for evaluation, reporting, and further analysis by tools and application functions.

Oracle also has a DM tool called Oracle Darwin, which is part of the Oracle Data Mining Suite. The Oracle Data Mining Suite has a Windows GUI and is targeted at data analysts doing “ad hoc” data mining. Oracle9i Data Mining targets Java application developers who want to automate the extraction of business intelligence and its integration into other business applications.

5 The Usage of Data Mining Techniques during the Data Warehouse Design Process

The design phase is the most costly and critical part of a data warehouse implementation. Problems arise at different steps of this process that can be solved by the use of data mining techniques. To make clear where data mining can support the design phase, the process is divided in [2] into the following steps: Data Source Analysis, Structural Integration of Data Sources, Data Cleansing, Multidimensional Data Modeling, and Physical DW Design.

The first step of the warehouse design cycle is the analysis of the data sources. They are often not adequately documented. Data mining algorithms can be used to discover implicit information about the semantics of the data structures.

Data Source Analysis

Often, the exact meaning of an attribute cannot be deduced from its name and data type.
The task of reconstructing the meaning of attributes would be optimally supported by dependency modeling using data mining techniques and by mapping this model against expert knowledge, e.g., business models. Association rules are suited for this purpose. Other data mining techniques (e.g., classification tree and rule induction) and statistical methods (e.g., multivariate regression, probabilistic networks) can also produce useful hypotheses in this context.

Many attribute values are (numerically) encoded. Identifying inter-field dependencies helps to build hypotheses about encoding schemes when the semantics of some fields are known. Encoding schemes also change over time. Data mining algorithms are useful for identifying changes in encoding schemes, the time when they took place, and the part of the code that is affected. Methods that use data sets to train a model of “normal” behavior can be adapted to the task. The model learned can be used to evaluate significant changes. A further approach would be to partition the data set, build models on these partitions by applying the same data mining algorithms, and compare the differences between these models.

Data mining and statistical methods can be used to induce integrity constraint candidates from the data. These include, for example, visualization methods that identify distributions in order to find the domains of attributes, and methods for dependency modeling. Other data mining methods can find intervals of attribute values that are rather compact and cover a high percentage of the existing values.

Once each single data source is understood, content and structural integration follows. This step involves resolving different kinds of structural and semantic conflicts. To a certain degree, data mining methods can be used to identify and resolve these conflicts.
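
The induction of inter-field dependencies and integrity constraint candidates discussed in the data source analysis step can be sketched in Python as follows. This is only a minimal illustration under stated assumptions: the record layout, the field names and the helper functions are hypothetical, and the exact check used here (one field value always co-occurring with a single value of another field) is a simple stand-in for the dependency modeling techniques referred to in [2].

```python
from itertools import permutations


def determines(records, lhs, rhs):
    """True if every value of field `lhs` co-occurs with exactly one value
    of field `rhs`, i.e. lhs -> rhs holds in the sample."""
    seen = {}
    for row in records:
        # setdefault stores the first rhs value seen for this lhs value.
        if seen.setdefault(row[lhs], row[rhs]) != row[rhs]:
            return False
    return True


def dependency_candidates(records):
    """All ordered field pairs (a, b) for which a -> b holds in the data."""
    fields = list(records[0])
    return [(a, b) for a, b in permutations(fields, 2)
            if determines(records, a, b)]


# Hypothetical source table with an undocumented numeric code.
records = [
    {"region_code": 1, "region_name": "Sofia", "amount": 10},
    {"region_code": 2, "region_name": "Toronto", "amount": 10},
    {"region_code": 1, "region_name": "Sofia", "amount": 25},
    {"region_code": 2, "region_name": "Toronto", "amount": 30},
]

candidates = dependency_candidates(records)
```

In this toy data, the code and the name determine each other, which suggests that `region_code` encodes the region. Such findings are only candidates: they hold in the sample and still have to be confirmed against expert knowledge.
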
Structural Integration of Data Sources

Data mining methods can discover functional relationships between different databases when they are not too complex. For instance, when two sources record the same quantity on different scales, a linear regression method would discover the corresponding conversion factors. If the type of functional dependency (linear, quadratic, exponential, etc.) is not known a priori, model search instead of parameter search has to be applied.

Data Cleansing

Data cleansing is a non-trivial task in data warehouse environments. The main focus is the identification of missing or incorrect data (noise) and of conflicts between data from different sources, and the correction of these problems.

Typically, missing values are indicated by either blank fields or special attribute values. One way to handle them is to replace them with the mean, the most frequent value, or the value that is most common among similar objects. More advanced data mining methods for completion could be similarity-based methods or methods for dependency modeling that produce hypotheses for the missing values.

On the other hand, data entries might be wrong or noisy. Tree or rule induction methods are appropriate for this kind of problem. If we have a model that describes dependencies between data, every transaction that deviates from the model is a hint of an error or of noise.

Different sources can contain ambiguous or inconsistent data, e.g., different values for the address of a customer or the price of the same article. Clustering methods may find records that describe the same real-world entity but differ in some attributes, which then have to be cleaned. Record linkage techniques are used to link together records that relate to the same entity (e.g., a patient or a customer) in one or more data sets where a unique identifier is not available.

Multidimensional Data Modeling

Sometimes it is not sensible to model all the fields as dimensions of the cube, as some fields are functionally dependent and other fields do not strongly influence the measures.
Data mining methods can help to rank the variables according to their importance in the domain. Non-correlated sets of attributes can be found with correlation analysis. Furthermore, using data mining methods to drive the cube design seems promising, as they can help to identify (possibly weak) functional dependencies, which indicate non-orthogonal dimension attributes.

Sparse regions should be avoided during modeling. Using special cluster methods (e.g., probability density estimation), data points can be identified as the centers of dense regions.

The multidimensional paradigm demands that dimensions be of a discrete data type. Therefore, an attribute with a continuous domain has to be mapped to discrete values if it is to be modeled as a dimension. Algorithms that find meaningful intervals in numeric attributes help to obtain discrete values.

Physical DW Design

Using OLAP tools, the business user can interactively formulate queries. Data mining algorithms can be used to identify tasks and to find query and interaction patterns that are typical of certain tasks or users. Thus, the input to the data mining process is a set of sessions (each consisting of a sequence of multidimensional queries) that can be extracted from a log file of the data warehouse system.

The first step during the data preparation phase of the knowledge discovery process is to find a formal representation of an individual query that captures the interesting properties of this query. This involves mapping different representations of conceptually equal queries to the same “query prototype”. A database administrator might be interested in patterns that concern the structural composition of queries (e.g., that certain dimensions are often queried together in certain phases of the analysis process). Mathematically, this first step corresponds to the definition of equivalence classes on the space of all queries.
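
The mapping of logged queries to query prototypes can be sketched in Python as below. The query representation (a dictionary with `measures`, `group_by` and `filters`) and the choice of what the prototype keeps are assumptions made for illustration; [2] discusses several alternative representations, and the appropriate abstraction depends on the patterns one wants to discover.

```python
from collections import defaultdict


def query_prototype(query):
    """Canonical form of a query: keeps which measures, dimension levels and
    filtered attributes are used; drops filter constants and clause order."""
    return (
        frozenset(query["measures"]),
        frozenset(query["group_by"].items()),  # (dimension, level) pairs
        frozenset(query["filters"]),           # attribute names only
    )


def equivalence_classes(queries):
    """Group logged queries that share the same prototype."""
    classes = defaultdict(list)
    for q in queries:
        classes[query_prototype(q)].append(q)
    return classes


# Hypothetical excerpt of a warehouse query log.
log = [
    {"measures": ["sales"],
     "group_by": {"time": "month", "store": "city"},
     "filters": {"year": 2003}},
    {"measures": ["sales"],
     "group_by": {"store": "city", "time": "month"},
     "filters": {"year": 2004}},
    {"measures": ["profit"],
     "group_by": {"product": "category"},
     "filters": {}},
]

classes = equivalence_classes(log)
```

The first two queries differ only in the filter constant and in the order of the grouping dimensions, so they fall into the same class; the third query forms a class of its own.
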
The actual design of the representation and abstraction function is mainly influenced by the type of patterns that should be discovered (the Metric Space, Hypertext, and Sequence of Events approaches [2]). The second step is to represent the transformed log file information as a structure that can serve as input for an existing data mining method. Summing up, an adequate representation enables the use of temporal and spatial data mining methods in order to find groups of users that are similar with respect to the time of their queries (e.g., between 8 am and 10 am), the contents of their queries, their navigational behavior, the granularity of their analysis, or the complexity of their analysis [2].

6 Conclusions

In this paper we have surveyed the basic approaches to the integration of data warehouses, OLAP and knowledge discovery. We presented the main characteristics of On-Line Analytical Mining (OLAM). OLAM integrates on-line analytical processing with data mining, which substantially enhances the power and flexibility of data mining and makes mining an interesting exploratory process. An important task for future study is the extension of OLAP mining techniques to advanced and special-purpose database systems, including extended-relational, object-oriented, text, spatial, multimedia and Internet information systems.

With multiple data mining functions available, one question that naturally arises is how to determine which data mining function is the most appropriate for a specific application. To select an appropriate data mining function, one needs to be familiar with the application problem, the data characteristics, and the roles of the different data mining functions. Sometimes one needs to perform interactive exploratory analysis to observe which function discloses the most interesting features in the database. OLAP mining provides an exploratory analysis tool; however, further study is needed on the automatic selection of data mining functions for particular applications [7].
We also examined the efforts of RDBMS manufacturers to integrate their warehousing, OLAP and mining solutions in a common framework. Such integration is a promising direction for easier and more efficient mining of data warehouses. Despite these efforts, the knowledge discovery industry is at present fragmented. The knowledge discovery community generates new solutions that are not widely accessible to a broader audience. Because of the complexity and high cost of the knowledge discovery process, knowledge discovery projects are deployed in situations where there is an urgent need for them, while many other businesses reject them because of the high costs involved [3]. The observed integration contributes to better accessibility of data mining solutions and to a reduction in project cost.

Finally, we looked at how data mining methods can be used to support the most important and costly tasks of data warehouse design. Hundreds of tools are available to automate portions of the tasks associated with auditing, cleansing, extracting, and loading data into data warehouses, but tools that use data mining techniques are still rare. Building such tools is a promising direction for more efficient implementation of the back-end steps.

References

1. Trillium Software System: Achieving Enterprise Wide Data Quality. White Paper, 2000.
2. Sapia C., Höfling G., Müller M., Hausdorf C., Stoyan H., Grimmer U.: On Supporting the Data Warehouse Design by Data Mining Techniques. Proc. GI-Workshop DM and DW, 1999.
3. Cios K. J., Kurgan L.: Trends in Data Mining and Knowledge Discovery. Knowledge Discovery in Advanced Information Systems, Springer, 2002.
4. Chaudhuri S., Dayal U.: An Overview of Data Warehousing and OLAP Technology. SIGMOD Record 26(1): 65-74, 1997.
5. Fayyad U., Piatetsky-Shapiro G., Smyth P.: From data mining to knowledge discovery in databases. AI Magazine, 17(3): 37-54, 1996.
6. Fayyad U., Piatetsky-Shapiro G., Smyth P.: Knowledge discovery and data mining - Towards a unifying framework. Proc. 2nd Int. Conf. on KDD'96, Portland, Oregon, pp. 82-88, 1996.
7. Han J.: OLAP Mining: An integration of OLAP with data mining. Proc. of IFIP ICDS, 1997.
8. Han J., Chee S., Chiang J. Y.: Issues for On-Line Analytical Mining of Data Warehouses. Proc. 1998 SIGMOD Workshop on Research Issues on Data Mining and Knowledge Discovery (DMKD'98), 1998, pp. 2:1-2:5.
9. Netz A., Chaudhuri S., Fayyad U., Bernhardt J.: Integrating data mining with SQL databases: OLE DB for data mining. ICDE 2001, pp. 379-387.
10. Oracle Corporation: Oracle9i Data Mining Concepts, Release 2 (9.2), Part No. A95961-01, 2002.
11. Oracle Corporation: Oracle9i Data Mining, www.oracle.com/technology/products/oracle9i/pdf/o9idm_bwp.pdf, 2002.
12. Paul S., MacLennan J., Tang Z., Oveson S.: Microsoft SQL Server 2005 Data Mining Tutorial. Microsoft Corporation, 2004.
13. Charran E.: Introduction to Data Mining with SQL Server (Part 2), http://www.sql-serverperformance.com/ec_data_mining2.asp, 2002.
14. IBM: Data mining: Extending the information warehouse framework. White Paper, http://www.almaden.ibm.com/cs/quest/paper/whitepaper.html, 2002.
15. IBM: IBM Software - DB2 Intelligent Miner - Family Overview, http://www306.ibm.com/software/data/iminer/, 2005.
16. StatSoft: Classification and Regression Trees (C&RT), http://www.statsoft.com/textbook/stcart.html, 2003.