hw1-sol

CS 6378, Fall 2004

Homework 1

(Due on September 14, 2004)

1. Consider a cut of a distributed computation, C = {c1, c2, c3, c4}. VTc1 = {5, 2, 4, 7}; VTc2

= {3, 6, 4, 6}; VTc3 = {4, 5, 7, 4}; VTc4 = {3, 4, 6, 8}. What is the value of VTc? Is C a

consistent cut?

Answer:

VTc = sup(VTc1, VTc2, VTc3, VTc4) = {5, 6, 7, 8}, the component-wise maximum.

C is consistent because VTc = {VTc1[1], VTc2[2], VTc3[3], VTc4[4]} = {5, 6, 7, 8}.
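The check above can be reproduced with a few lines of Python. This is only a minimal sketch: the function names `cut_timestamp` and `is_consistent` are illustrative, not from the textbook, and processes are indexed from 0 rather than 1.

```python
from typing import List


def cut_timestamp(cut_events: List[List[int]]) -> List[int]:
    """Return sup of the cut events' vector timestamps (component-wise max)."""
    return [max(component) for component in zip(*cut_events)]


def is_consistent(cut_events: List[List[int]]) -> bool:
    """A cut is consistent iff VTc[i] == VTci[i] for every process i."""
    vt_c = cut_timestamp(cut_events)
    return all(vt_c[i] == cut_events[i][i] for i in range(len(cut_events)))


# Vector timestamps of c1..c4 from Question 1.
cut = [
    [5, 2, 4, 7],
    [3, 6, 4, 6],
    [4, 5, 7, 4],
    [3, 4, 6, 8],
]
print(cut_timestamp(cut))  # [5, 6, 7, 8]
print(is_consistent(cut))  # True
```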

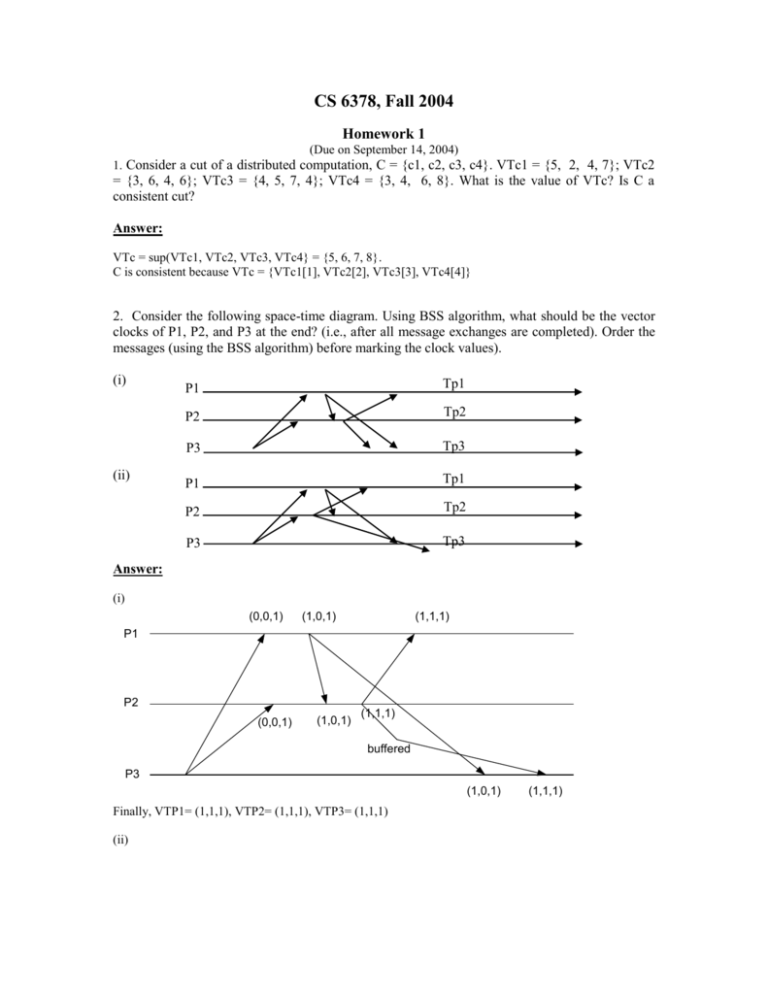

2. Consider the following space-time diagram. Using the BSS algorithm, what should be the vector

clocks of P1, P2, and P3 at the end (i.e., after all message exchanges are completed)? Order the

messages (using the BSS algorithm) before marking the clock values.

[Figure: space-time diagrams (i) and (ii), each showing processes P1, P2, and P3 with timelines Tp1, Tp2, and Tp3.]

Answer:

(i)

[Annotated diagram for (i): the timestamps marked on the timelines of P1, P2, and P3 are (0,0,1), (1,0,1), and (1,1,1); one message is marked as buffered.]

Finally, VTP1 = (1,1,1), VTP2 = (1,1,1), VTP3 = (1,1,1).

(ii)

[Annotated diagram for (ii): the timestamps marked on the timelines of P1, P2, and P3 are (0,0,1), (0,1,1), (1,0,1), and (1,1,1).]

Finally, VTP1 = (1,1,1), VTP2 = (1,1,1), VTP3 = (1,1,1).
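The buffering behaviour of BSS can be illustrated with a short Python sketch. The class `BSSProcess` and the message schedule below are assumptions made for illustration; the schedule is a hypothetical one, not a transcription of diagrams (i) or (ii). Processes are indexed from 0, and a message from sender j is delivered at a process only when VTm[j] = VT[j] + 1 and VTm[k] <= VT[k] for every k other than j.

```python
from typing import List, Tuple

Message = Tuple[int, List[int]]  # (sender index, vector timestamp)


class BSSProcess:
    """Hypothetical helper: one process running BSS causal broadcast."""

    def __init__(self, pid: int, n: int) -> None:
        self.pid = pid
        self.vt = [0] * n
        self.buffer: List[Message] = []
        self.delivered: List[Message] = []

    def broadcast(self) -> Message:
        # Only send events advance the sender's own component.
        self.vt[self.pid] += 1
        return (self.pid, list(self.vt))

    def _deliverable(self, msg: Message) -> bool:
        sender, vt_m = msg
        if vt_m[sender] != self.vt[sender] + 1:
            return False
        return all(vt_m[k] <= self.vt[k]
                   for k in range(len(vt_m)) if k != sender)

    def receive(self, msg: Message) -> None:
        # Buffer the message, then deliver everything that has become deliverable.
        self.buffer.append(msg)
        progress = True
        while progress:
            progress = False
            for m in list(self.buffer):
                if self._deliverable(m):
                    self.buffer.remove(m)
                    self.vt = [max(a, b) for a, b in zip(self.vt, m[1])]
                    self.delivered.append(m)
                    progress = True


# Assumed schedule: P3 sends m1, P1 sends m2 after delivering m1,
# and m2 overtakes m1 on its way to P2.
p1, p2, p3 = BSSProcess(0, 3), BSSProcess(1, 3), BSSProcess(2, 3)
m1 = p3.broadcast()          # timestamp [0, 0, 1]
p1.receive(m1)
m2 = p1.broadcast()          # timestamp [1, 0, 1]
p2.receive(m2)               # buffered: m1 has not been delivered at P2 yet
buffered_at_p2 = len(p2.buffer)
p2.receive(m1)               # m1 is delivered, then the buffered m2
p3.receive(m2)
m3 = p2.broadcast()          # timestamp [1, 1, 1]
p1.receive(m3)
p3.receive(m3)
print(p1.vt, p2.vt, p3.vt)   # [1, 1, 1] [1, 1, 1] [1, 1, 1]
```

In this assumed run every process ends with (1,1,1), and P2 delivers m1 before m2 even though m2 arrived first.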

3. If each process uses a different value for d in Eqs. 5.1, 5.2, and 5.3, will the logical clocks and

vector clocks schemes satisfy the total order relation => and Eq. 5.5?

Answer:

(1) Logical clocks do not satisfy Eq. 5.5, no matter what values of d are chosen, because

C(a) < C(b) does not imply a -> b (see p. 103 of the textbook).

(2) Using a different value of d at each process in Eqs. 5.1, 5.2, and 5.3 does not affect the properties

of vector clocks, so Eq. 5.5 still holds.
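A small numeric illustration of both points, as a sketch only. It assumes the usual update rules: a process adds its own d before each local event, and on a receive a vector clock takes the component-wise maximum with the message timestamp. The two-process run and the values d = 1 and d = 5 are hypothetical.

```python
from typing import List


def vt_less(u: List[int], v: List[int]) -> bool:
    """u < v for vector timestamps: u <= v component-wise and u != v."""
    return all(x <= y for x, y in zip(u, v)) and u != v


d = [1, 5]  # assumed increments: P1 uses d = 1, P2 uses d = 5

# a is the first event on P1 and b the first event on P2, with no
# messages exchanged before them, so a and b are concurrent.
c_a = 0 + d[0]            # scalar clock of a
c_b = 0 + d[1]            # scalar clock of b
vt_a = [d[0], 0]          # vector clock of a
vt_b = [0, d[1]]          # vector clock of b

# Scalar clocks: C(a) < C(b) holds although a -> b does not.
print(c_a < c_b)                                   # True

# Vector clocks: neither timestamp is smaller, so concurrency is detected.
print(vt_less(vt_a, vt_b), vt_less(vt_b, vt_a))    # False False

# c is the receive on P2 (after b) of a message sent at a, so a -> c.
vt_c = [max(vt_b[0], vt_a[0]), vt_b[1] + d[1]]
print(vt_less(vt_a, vt_c))                         # True
```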

4. In the SES protocol for causal ordering of messages, when can a pair (s, t) be deleted from the

vector maintained at a site?

Answer:

When a message M from site s is delivered, if t < Tm (the message time stamp), then the pair (s, t) can be

deleted, since the local time at s is already at least Tm and therefore greater than t.
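The deletion rule can be sketched as follows. The representation is an assumption made for illustration: the vector maintained at a site is modelled as a dictionary from site name to vector timestamp, and `prune_on_delivery` is a hypothetical helper name.

```python
from typing import Dict, List


def vt_less(u: List[int], v: List[int]) -> bool:
    """u < v for vector timestamps: u <= v component-wise and u != v."""
    return all(x <= y for x, y in zip(u, v)) and u != v


def prune_on_delivery(v_p: Dict[str, List[int]],
                      sender: str, t_m: List[int]) -> None:
    """After delivering a message from `sender` stamped t_m, drop the
    pair (sender, t) if t < t_m: the sender's clock has already passed t."""
    t = v_p.get(sender)
    if t is not None and vt_less(t, t_m):
        del v_p[sender]


# Hypothetical state at some site: pairs for sites "S" and "R".
v_p = {"S": [1, 0, 0], "R": [0, 2, 0]}
prune_on_delivery(v_p, "S", [2, 1, 0])   # [1,0,0] < [2,1,0]: pair deleted
prune_on_delivery(v_p, "R", [0, 1, 0])   # [0,2,0] is not < [0,1,0]: pair kept
print(v_p)  # {'R': [0, 2, 0]}
```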

5. Consider the causal message ordering algorithms: BSS (Birman-Schiper-Stephenson),

“original” SES (Schiper-Eggli-Sandoz), and “modified” SES. What type of message time stamps

do they use (vector of clock values OR vector of sequence numbers)? Why? (Proper reason is a

must to get credit).

Answer:

BSS: vector of sequence numbers. Reason: receiver has to figure out whether it has

received all the senders’ messages. Receive events are not counted as part of the message

vector.

“Original” SES: vector of clock values. Reason: receiver has to count the receive events

also to know whether the earlier message indicated in the dependency information has

already been received.

“Modified” SES: vector of sequence numbers. Reason: the matrix identifies the sender

and receiver uniquely, so it is sufficient to count the “sent” messages (receive events are

not counted).

6. We saw in the class that the following is true for Cuts in a distributed system. A Cut is a

consistent cut if and only if VTc[1] = VTc1[1], VTc[2] = VTc2[2],… , VTc[n] = VTcn[n]. Can

you prove/explain why this is true?

(5 Marks)

Answer:

By definition, VTc[1] = max(VTc1[1], VTc2[1], …, VTcn[1]). If VTc[1] = VTc1[1], then

VTc1[1] >= VTc2[1], …, VTcn[1]. This means the cut event c1 includes all the messages sent by

the process P1 that have been recorded/marked as having been received by other processes

P2…Pn. Hence, the cut event c1 leads to consistency. Conversely, let us assume that VTc[1] > VTc1[1]

(VTc[1] cannot be smaller, because it is the maximum). This means some other process has recorded

a receive event for a message from P1 and P1 has NOT recorded the corresponding send => the cut is

not consistent. However, we started with the fact that the cut is consistent. So

VTc[1] > VTc1[1] is NOT true => VTc[1] = VTc1[1].

Similar arguments hold for VTc[2] .. VTc[n].

7. Consider a distributed application developed using Ada. The application has 1 Remote

Procedure Call. However, at run-time, the application has 10 instances of (the same) RPC server

running actively. (The RPC server was instantiated 10 times during run-time).

The system administrator wants to distribute clients’ RPC requests among these 10 server

instances so that their “load” can be approximately uniform. (i.e., the number of clients handled

by different RPC server instances is nearly same). Suggest techniques to the distributed system

administrator to achieve this.

(5 Marks)

Answer:

Use the binding/name server: have it bind each new client to a different server instance, for example

in a round-robin manner, so that the load is distributed evenly.
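A minimal sketch of round-robin binding, written in Python rather than Ada for brevity. The class `RoundRobinBinder`, the server names, and the client identifiers are all hypothetical; a real binding server would also have to handle instances that start or stop at run-time.

```python
from collections import Counter
from itertools import cycle
from typing import Dict, Iterable


class RoundRobinBinder:
    """Hypothetical name server that hands out server instances in turn."""

    def __init__(self, instances: Iterable[str]) -> None:
        self._next_instance = cycle(list(instances))
        self.bindings: Dict[str, str] = {}

    def bind(self, client_id: str) -> str:
        # A client keeps its binding; only new clients advance the rotation.
        if client_id not in self.bindings:
            self.bindings[client_id] = next(self._next_instance)
        return self.bindings[client_id]


binder = RoundRobinBinder([f"rpc-server-{i}" for i in range(10)])
for c in range(25):
    binder.bind(f"client-{c}")

load = Counter(binder.bindings.values())
print(max(load.values()) - min(load.values()))  # 1
```

With 25 clients and 10 instances, each instance ends up with 2 or 3 clients, so the per-instance load differs by at most one.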