A short note on concepts in rational choice theory

Microeconomic theory is probably the most elaborate and well-structured theory in the social

sciences. Microeconomic theory is basically a theory of rational choice by individual actors.

Microeconomic theory in its original form includes consumer-theory, producer-theory and theory on

how consumers and producers interact in markets. However, the core concepts underlying this

theory can be, and have been, used to study a broader range of phenomena. This is due to the generality of

the core assumptions underlying the theory, and I will here speak generally about the theory of

rational choice. Not only consumers and producers, but also voters, politicians, lobbyists, social

classes and states in international affairs have been studied under the same core assumptions and by

using similar concepts as those used in microeconomic theory in a narrower sense. That being said,

the core rational choice assumptions are too general to be used fruitfully in social science research

without putting additional information about social structure and actors into the particular model of

interest. I will here dwell mostly on the general core concepts and assumptions, but from time to

time illustrate how rational choice analysts put more flesh on the skeleton of the general framework

in concrete research.1

Thin and instrumental rationality

A “deceptively simple sentence” that summarizes the theory of rational choice is, according to Jon

Elster, “When faced with several courses of action, people usually do what they believe is likely to

have the best overall outcome” (Elster, 1989:22). It is perhaps best to think of rational choice not as

one theory, but rather a more general theoretical framework or a family of related theories.

However, the notion of instrumental rationality, that individual actors choose those actions that

maximize the satisfaction of their goals, is common for this family. Rationality does not say anything

particular about the value of the goals actors pursue; rationality is therefore thin in general.

Rationality, as used in rational choice theory, relates to how actors use the means and strategies at

their disposal to follow these goals. Rationality is instrumental. However, in concrete research

settings analysts feed certain preference structures into their models, and ideally, these preference structures should resemble central elements of the goals and aims that the real world actors (which

the model is supposed to capture) actually have. Politicians might for example be motivated by

holding office, grabbing economic rents, increasing their power over society or by promoting specific

ideologies. However, the rational choice framework as such does not come with a predisposed list of

what the goals should be. Rational choice theory does not require that the actors are self-interested

either. It is possible for the actors to have altruistic or sociopathic preferences, even though self-interested actors are most common in the literature. A basic feature of rational choice theory is that

actions are chosen because of intended consequences. Actions are not valuable in their own right,

which can be contrasted for example with Kant’s Categorical Imperative.

1

I will not in this paper go into the debate on the advantages, problems and usefulness of rational choice in the

social sciences, even though the literature on the appropriateness of rational choice is large and fascinating.

This paper only aims at presenting the core theoretical framework. Let me however add that I have trouble

with general and bombastic claims on the usefulness or uselessness of rational choice theory in the social

sciences. The appropriateness of any methodology or theory hinges, among other things, on the nature of the

particular research question asked.

Requirements on preferences

There are however some requirements made on the preferences of rational actors. In order for the

theory to work, preferences must satisfy at least two criteria: completeness and transitivity.

Completeness requires that the actor must be able to rank all possible outcomes. That is, if we have

outcomes x and y, we have either that x>y, x=y (indifference) or x<y. > and < indicate a strict

preference (better than or worse than), whereas ≥ and ≤ indicate a weak preference (better than or

equal to and worse than or equal to). Transitivity requires that if x>y and y>z, then x>z. If an apple is

preferred to a banana and a banana is preferred to a kiwi, an apple must be preferred to a kiwi.

These assumptions (together with reflexivity; an outcome cannot be strictly preferred over itself)

are sufficient to utilize a rational choice framework, but as mentioned, in practical research settings

one often puts more structure on the preferences of actors.
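
The two requirements can be checked mechanically for a small set of outcomes. The following is a minimal sketch, not part of the original note: the function names, the numerical scores and the cyclical relation are all hypothetical illustrations.

```python
from itertools import product

def is_complete(outcomes, weakly_prefers):
    """Every pair can be ranked: x >= y or y >= x (both means indifference)."""
    return all(weakly_prefers(x, y) or weakly_prefers(y, x)
               for x, y in product(outcomes, repeat=2))

def is_transitive(outcomes, weakly_prefers):
    """Whenever x >= y and y >= z, x >= z must hold as well."""
    return all(weakly_prefers(x, z)
               for x, y, z in product(outcomes, repeat=3)
               if weakly_prefers(x, y) and weakly_prefers(y, z))

# Hypothetical ranking: apple > banana > kiwi (higher score = more preferred).
score = {"apple": 3, "banana": 2, "kiwi": 1}
fruit = list(score)

def rational(x, y):
    return score[x] >= score[y]

# Hypothetical cycle: apple beats banana, banana beats kiwi, kiwi beats apple.
beats = {("apple", "banana"), ("banana", "kiwi"), ("kiwi", "apple")}

def cyclical(x, y):
    return x == y or (x, y) in beats

print(is_complete(fruit, rational), is_transitive(fruit, rational))  # True True
print(is_complete(fruit, cyclical), is_transitive(fruit, cyclical))  # True False
```

The cyclical relation is complete but not transitive, so no consistent ranking of the three fruits exists for it.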

Utility functions

If we in addition to the assumptions above assume that preferences are continuous, Gerard Debreu

(1959) has shown that there exists at least one utility function that can be constructed to represent

these preferences. We can for example have a utility function that depends on income, on the

probability of remaining in office, or on location on a policy dimension (left-right, where extreme

left = 0 and extreme right = 1).

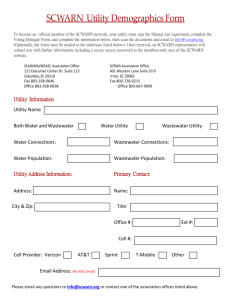

Figure 1: Hypothetical utility function, U(x), where x is income

U(x)

x

Figure 2: Hypothetical utility function, U(x), for centrist party, where x is value on left-right

dimension

U(x)

2

x

The utility functions, as long as there is no uncertainty involved, can be interpreted as ordinal. An

ordinal measurement level implies that we can only draw information about ranking, and not relative

size, from numbers. That is, if U(1) =2 (the utility of having 1 unit is 2) and U(2)= 6, we cannot with

ordinal utility functions say that the utility of receiving two units (for example Euros) is three times

the utility of receiving one. The only thing we can say is that the utility of receiving two is higher than

the utility of receiving one Euro. If we had a cardinal utility function however, the numbers would

have contained information about relative size in addition to rank.

Utility functions can take on different forms and researchers often use simple mathematical

functions to represent the preferences of actors. The simplest alternative is the linear utility function

where U(x) = a + bx. If a is equal to 0 and b is equal to 1, U(x)=x. Another alternative is using a version

of $U(x) = a + bx^c$. We can for example have that a is equal to 0, b is equal to 1, and c is equal to 1/2, so that

$U(x) = x^{1/2} = \sqrt{x}$. Another common version is U(x)=ln(x), the natural logarithm of x.

However, actors often care about more than one thing, and it is therefore common to model a utility

function with more than one objective. Let me illustrate a utility function where an actor has two

objectives x and y. Then U = U(x,y), and we can for example choose the simple, linear version U(x,y) =

x+y to represent preferences, or the common Cobb-Douglas form $U(x,y) = x^{\alpha} y^{1-\alpha}$. Let us take an

example. We have a political party that competes in two districts, and the utility of the party depends

on votes gathered in district one (x) and district two (y). The party receives 500 votes in the first

district and 700 votes in the second district. The linear utility function would then yield a utility:

U(500, 700) = 500 + 700 = 1200

When it comes to the Cobb-Douglas version, let us now make a twist and say that the party values

votes in district 1 more than votes in district two, and the α is therefore larger than ½. Let us set

α=0,7, which implies that 1- α=0,3.

This gives us:

$U(500, 700) = 500^{0{,}7} \cdot 700^{0{,}3} \approx 553$
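
The two calculations for the party example can be reproduced with a short sketch. The function names are illustrative choices and not part of the original note.

```python
def linear_utility(x, y):
    """U(x, y) = x + y."""
    return x + y

def cobb_douglas_utility(x, y, alpha):
    """U(x, y) = x**alpha * y**(1 - alpha), with 0 < alpha < 1."""
    return x ** alpha * y ** (1 - alpha)

votes_district_1, votes_district_2 = 500, 700

print(linear_utility(votes_district_1, votes_district_2))                    # 1200
print(round(cobb_douglas_utility(votes_district_1, votes_district_2, 0.7)))  # 553
```

Since the utility is ordinal here, only comparisons between vote outcomes under the same function are meaningful; the numbers 1200 and 553 should not be compared with each other.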

The choice of a specific utility function is not completely arbitrary however, and the mathematical

structure depends to a certain extent on what more assumptions we make about actor preferences

other than the very general ones presented above. I will come back to this issue, but first we need to

introduce some mathematics.

Elementary calculus

Most Norwegian students learn how to take the derivative of a function (differentiation) in high

school, but unfortunately, not much time is spent on this important topic. MA-Political science

students and many researchers have often not encountered differentiation in many years. Since

differentiation is so central to rational choice-based theory, and utilized in many political-economic

studies, we will have to indulge in a crash-course.

The derivative of a function can be thought of as the slope of the function. It is most straightforward

to think about the derivative of a linear function, for example Y= 3 + 2X. The slope of the function in

this case is always 2, as the function Y increases by 2 units as X increases by 1 unit. When it

comes to more complex functions, the derivative of a function is not necessarily constant. Take the

utility function in Figure 1. The derivative of the function is always positive, as the function increases

over the whole interval. However, the derivative decreases as the x-values become larger, because

the slope becomes less and less steep. In formal terms: U’(x)>0 (The derivative of U with respect to x

is positive). And: $U'(x_b) < U'(x_a)$ if $x_b > x_a$. (The derivative of U at point $x_b$ is smaller than at point $x_a$ if $x_b$

is larger than $x_a$). The derivative of the function is decreasing as x gets bigger. The prime (’) denotes that we

are referring to the derivative of the U function with respect to x. Alternatively, we can write this

expression as dU/dx.

Now take the utility function in Figure 2. The derivative of the function, U’(x) is positive before the

maximum point and negative after the maximum point, since the function is first sloping upwards

and then downwards. Here we come to an important point. What is the value of the derivative at the

maximum point? Consider Figure 3 below:

Figure 3: The derivative of a function in maximum

The straight line on top of the function is the tangent at the maximum point. Since the line is flat, the

slope is equal to zero. This means that at the maximum point of a function, the derivative of the

function is equal to zero (the same is true for minimum points). This is extremely important in

rational choice considerations. What does it mean for an individual to make the best choice? It can be

interpreted as maximizing utility (or optimizing). Therefore, when we search for the optimal action,

we take the derivative of a utility function and set it equal to zero. That is, we find the x-value that

satisfies the equation U’(x)=0.

We now introduce some general differentiation rules for different functions:

Derivative of powers

$f(x) = x^r \Rightarrow f'(x) = r x^{r-1}$

Example: $f(x) = x^3 \Rightarrow f'(x) = 3x^{3-1} = 3x^2$

Derivative of powers, extended

$f(x) = a x^r \Rightarrow f'(x) = r \cdot a x^{r-1}$

Example: $f(x) = 2x^4 \Rightarrow f'(x) = 4 \cdot 2x^{4-1} = 8x^3$

Remember that $x^1 = x$ and $x^0 = 1$, such that $f(x) = 2x \Rightarrow f'(x) = 1 \cdot 2x^0 = 2$. Similarly, the derivative of

$f(x) = 25x$ is equal to 25.

The derivative of a constant is equal to zero

$f(x) = 5 \Rightarrow f'(x) = 0$

The derivative of sums and differences

f(x) + g(x) differentiated is equal to f’(x) + g’(x) and f(x) - g(x) differentiated is equal to f’(x) - g’(x)

This means that the derivative of $2x^2 + 5$ is equal to the derivative of $2x^2$ plus the derivative of 5. One

differentiates the sum piecewise. The derivative of the function can be expressed as:

$(2x^2 + 5)'$ or $d(2x^2 + 5)/dx$, and it is equal to $4x + 0 = 4x$, following the rules above.
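
A quick way to convince yourself that such a rule-based result is right is to compare it with a numerical slope. The sketch below is an illustration added under that assumption; the helper `derivative` uses a central difference with a small, arbitrarily chosen step `h`.

```python
def derivative(f, x, h=1e-6):
    """Central-difference approximation of the slope f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

def f(x):
    return 2 * x**2 + 5   # the function differentiated in the text

def f_prime(x):
    return 4 * x          # result of the power, constant and sum rules

# The numerical slope and the rule-based derivative should agree closely.
for x in (0.5, 1.0, 3.0):
    print(x, round(derivative(f, x), 4), f_prime(x))
```

The same helper can be pointed at any of the other examples, for instance `derivative(lambda x: 2 * x**4, 2.0)`, which should be close to 8 * 2**3 = 64.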

At this point in time, you might want to go back and read this section one more time. Alternatively,

you might want to look up a text book in mathematics, such as Sydsæter (2000) for Norwegian

students or Gill (2006).

I will stop the discussion on derivatives so far, and go back to the interpretational aspects, but here

are some of the most used rules for differentiation. Even if you don’t remember or understand these,

you can look them up if needed when reading articles or books that use differentiation:

$f(x) = e^x \Rightarrow f'(x) = e^x$

$f(x) = \ln(x) \Rightarrow f'(x) = 1/x$

Product rule: f(x)*g(x) differentiated is equal to f’(x)*g(x)+f(x)*g’(x)

Quotient rule: f(x)/g(x) differentiated is equal to $(f'(x) g(x) - f(x) g'(x))/g(x)^2$

Chain rule: f(g(x)) differentiated is equal to f’(g(x))*g’(x)

Example of the chain rule: $f(g(x)) = (2x+3)^3$. g(x) is here 2x+3. Following the chain rule, differentiation gives

us: $d f(g(x))/dx = 3(2x+3)^2 \cdot g'(x) = 3(2x+3)^2 \cdot 2 = 6(2x+3)^2$
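
The rules listed above can be spot-checked numerically. In the sketch below, only the chain-rule example comes from the text; the product and quotient examples, the evaluation points and all names are assumptions chosen for illustration.

```python
import math

def derivative(f, x, h=1e-6):
    """Central-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

# Each entry pairs a function with the derivative obtained from the rules.
rules = {
    "exp": (math.exp, math.exp),
    "ln": (math.log, lambda x: 1 / x),
    "chain": (lambda x: (2 * x + 3) ** 3, lambda x: 6 * (2 * x + 3) ** 2),
    "product": (lambda x: x**2 * math.exp(x),
                lambda x: 2 * x * math.exp(x) + x**2 * math.exp(x)),
    "quotient": (lambda x: x**2 / (x + 1),
                 lambda x: (2 * x * (x + 1) - x**2) / (x + 1) ** 2),
}

def max_error(name, points=(0.5, 1.0, 2.0)):
    """Largest gap between the numerical slope and the rule-based derivative."""
    f, f_prime = rules[name]
    return max(abs(derivative(f, x) - f_prime(x)) for x in points)

for name in rules:
    print(name, max_error(name) < 1e-5)
```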

How to interpret U’(x): marginal utility

Remember that the derivative of a function in a point is equal to the slope of the function in that

point. The derivative of the utility function is often called marginal utility, and can be interpreted as

the increase in utility when you increase x marginally (sometimes interpreted as one unit): How

much extra utility does the actor get from one extra unit of consumption, or one extra vote.

Consider $U(x) = 8x - x^2$, which could be the utility function of beers at a Monday night student party.

Then $U'(x) = 8 - 2x$, and this is the marginal utility function.

What is the marginal utility of an extra beer if you already have had one beer?

U’(1)=8-2=6.

When should the rational actor stop drinking beers? The answer is: When she has maximized her

utility function. Remember that this requires that U’(x)=0, that is the marginal utility of beer is equal

to zero. (There are some other conditions that need to be satisfied as well called second-order

conditions, but never mind these here.)

How do we find the optimum? Simply by setting U’(x)=8-2x=0

This implies that the optimal amount of beers is 4.

What if we have a function that we would like to minimize, for example a cost function, C(x)? The

same logic applies. The bottom of the cost function is found where the derivative, C’(x)=0, that is

where the marginal cost is equal to zero. If for example $C(x) = x^2 - 4x + 16$, then $C'(x) = 2x - 4$, and

$C'(x) = 2x - 4 = 0 \Rightarrow x = 2$
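
Setting the derivative to zero finds both peaks and troughs, and the sign of the second derivative tells them apart. The sketch below applies this check to the beer and cost examples; the helper names and the numerical step `h` are assumptions made for illustration.

```python
def second_derivative(f, x, h=1e-4):
    """Central-difference approximation of f''(x)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2

def classify(f, x):
    """Label a point where f'(x) = 0 by the sign of f''(x)."""
    curvature = second_derivative(f, x)
    if curvature < 0:
        return "maximum"
    if curvature > 0:
        return "minimum"
    return "undetermined"

def beer_utility(x):
    return 8 * x - x**2        # U'(x) = 8 - 2x = 0 at x = 4

def cost(x):
    return x**2 - 4 * x + 16   # C'(x) = 2x - 4 = 0 at x = 2

print(classify(beer_utility, 4))  # maximum
print(classify(cost, 2))          # minimum
```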

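What if we have an action, x, say intensity of guerrilla warfare, which bears with it both gains, U(x),

and costs, C(x)? Then the rational guerrilla would like to optimize the expression U(x) – C(x). Let

$U(x) = x^2$ and $C(x) = 2x$.

We use the differentiation of a difference presented above and set the total expression equal to

zero:

$U'(x) - C'(x) = 0 \Rightarrow d(x^2)/dx - d(2x)/dx = 0 \Rightarrow 2x - 2 = 0 \Rightarrow x = 1$

x=1 satisfies the first-order condition in this example. Note, however, that with these particular functional forms the net gain $x^2 - 2x$ is convex, so the second-order condition mentioned above fails and x=1 is a minimum rather than a maximum. With a concave gain function, for example $U(x) = 4x - x^2$, the first-order condition $4 - 2x - 2 = 0$ also gives x=1, now as a true optimum.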

Uncertainty and expected utility

In most interesting social science questions, there are uncertain outcomes related to taking a specific

action. How does rational choice theory deal with such uncertainty? First, under uncertainty, the

analyst will have to move from an ordinal interpretation of the utility function to a cardinal, but I will

not go deeper into this issue. If actors are not under thick uncertainty, à la the Rawlsian veil of

ignorance, but are able to make predictions on probabilities of different outcomes, the rational

choice framework can easily be applied to situations of uncertainty. Actors are assumed to maximize

expected utility (EU(x)), given their beliefs about probabilities of outcome. Moreover, given certain

specific assumptions (look up a microeconomic textbook), Von Neumann and Morgenstern showed

that maximizing expected utility is equivalent to maximizing the following expression:

$EU(x) = p_1 U(x_1) + p_2 U(x_2) + \dots + p_n U(x_n)$

In this case, there are n possible outcomes, which are assigned n different probabilities, given that a

certain action is taken. Let us take an example by considering a decision on whether to go to war or

not. If the actor does not go to war, he receives utility =2 for certain, that is with a probability =1.

However, if the actor goes to war, there are three different outcomes, victory, stalemate and loss.

The utility of victory is 10, the utility of stalemate is 0 and the utility of loss is -20. Is it rational for the

actor to go to war? This depends on the probability beliefs the actor holds. If the estimated

probability of victory is 0,7, of stalemate is 0,1 and of loss is 0,2, we can easily find the expected

utility of going to war for the actor:

$EU = 0{,}7 \cdot 10 + 0{,}1 \cdot 0 + 0{,}2 \cdot (-20) = 7 + 0 - 4 = 3$

The expected utility of going to war is 3 and the expected utility (actually a certain utility) of not

going to war is 2. The rational actor chooses the action with the highest expected utility, namely war.
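
The war decision can be written as a small expected-utility comparison. This is a sketch using the probabilities and utilities from the example; the data layout and function name are illustrative assumptions.

```python
def expected_utility(lottery):
    """lottery: list of (probability, utility) pairs; probabilities must sum to 1."""
    total_probability = sum(p for p, _ in lottery)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * u for p, u in lottery)

actions = {
    "war": [(0.7, 10), (0.1, 0), (0.2, -20)],   # victory, stalemate, loss
    "no war": [(1.0, 2)],                       # certain outcome
}

for name, lottery in actions.items():
    print(name, round(expected_utility(lottery), 2))

# The rational actor picks the action with the highest expected utility.
best = max(actions, key=lambda name: expected_utility(actions[name]))
print(best)  # war
```

Changing the beliefs changes the choice: with a probability of loss of 0,3 and of victory of 0,6, the expected utility of war falls to 0 and not going to war becomes the better action.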

The main, general assumption underlying rational choice analysis under uncertainty is that actors

maximize expected utility, given their beliefs on probabilities of outcomes for specific actions. A

qualifying statement is that actors must, when they are allowed to update their beliefs after

gathering information from observations (for example in dynamic models with more than one time

period), form beliefs according to the so-called “Bayes’ rule”. Look up any book on game theory to read

more on rational belief formation. Here is one simple example: You know that you are facing either a

weak or a strong opponent in a war game, and the weak opponent never goes to war. Initially, you

believe that you face a weak opponent with a 0,4 probability and a strong with 0,6 probability. If you

observe, in the first round that the opponent goes to war, you should update your probability beliefs.

Since weak opponents never go to war, the probability of facing a weak opponent has to be 0! Then

you know that the probability of facing a strong opponent is equal to 1. You have successfully used

Bayes’ rule to update your beliefs!
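
Bayes' rule itself is a one-line calculation. In the sketch below, the priors and the fact that a weak opponent never goes to war come from the example; the probability that a strong opponent goes to war (0.5) is an assumed value, needed only to make the formula computable.

```python
def bayes_update(prior, likelihood):
    """prior: {type: P(type)}; likelihood: {type: P(observation | type)}."""
    joint = {t: prior[t] * likelihood[t] for t in prior}
    total = sum(joint.values())   # P(observation)
    return {t: joint[t] / total for t in joint}

prior = {"weak": 0.4, "strong": 0.6}

# P(war | weak) = 0 is from the text; P(war | strong) = 0.5 is an assumption.
likelihood_war = {"weak": 0.0, "strong": 0.5}

posterior = bayes_update(prior, likelihood_war)
print(posterior)  # {'weak': 0.0, 'strong': 1.0}
```

As long as the weak type never goes to war, the posterior is the same for any positive value of P(war | strong). If the weak type sometimes went to war, say with probability 0.1, the posterior belief of facing a weak opponent would fall from 0.4 to about 0.12 rather than to zero.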

The expected utility framework allows the incorporation of risk aversion among the actors. The risk

aversion can be incorporated into the utility function, and one does therefore not have to assume

risk neutrality. Interested readers can look up a textbook in microeconomics.

Optimization over time

In many rational choice models, there is a time-structure, and thereby the number of time periods is

larger than one. How do rational actors respond to such time structures? The common way to model

such dynamic settings is by presenting a utility function that is additively separable over periods; that

is, total utility can be presented as a sum of utilities gained in each period. However, most actors are

impatient and would rather take an immediate prize now than the same prize in the future, and we

incorporate this trait by discounting the utility in future periods. We then need a discount factor, β,

which tells us how much the actor values future gains (one period ahead) relative to immediate

gains. β is in the interval between 0 and 1, and the closer it is to 1, the more patient the actor. Let

subscript t be time period, and we start in period 0. We now get that total utility, U, is given by:

$U(x_t) = u(x_0) + \beta u(x_1) + \beta^2 u(x_2) + \dots + \beta^n u(x_n) = \sum_{t=0}^{n} \beta^t u(x_t)$

The ∑ (sigma) means nothing other than the sum of the components. It is used regularly as a

summation sign, so you should get acquainted with it. Usually, the first time period is written below

the sigma (t=0 here), and the last period in the sum is written on top of the sigma (n here). Notice

that as t becomes very large, $\beta^t$ goes towards zero (as long as β is smaller than 1), and this means that pay-offs far into the

future are almost neglected by the rational actors.

One special and very important case is when we have an infinite stream of payoffs, that is t → ∞. If

we have short time periods or long lived actors (states?), an infinite horizon might function well as an

approximation to the situation, even though no one or nothing lives forever. We can actually

calculate a finite discounted pay-off for the actor, even though we do it over an infinite time horizon.

The extra assumption needed for the formula below to be correct is that the size of the x is equal in

each period. The result draws on the mathematical theory of geometric series (look up a textbook in

mathematics for the proof). We have that:

$U(x_t) = u(x_0) + \beta u(x_1) + \beta^2 u(x_2) + \dots + \beta^n u(x_n) + \dots = u(x)/(1-\beta)$

If we now go back to the rational actor and his optimal choice, the rational actor would choose the

course of action that yields the highest discounted utility. Take one individual considering whether to

invest 1000 of his 1050 Euros, or consume it all right away. If he invests it, he gets an interest of 5%

a year (50 Euros), which he consumes in each period. The actor is relatively impatient with a β of 0,6.

The utility function for each period is the simple u(x)=x. Let us calculate the utility from the two

courses of action:

Consume right away:

$U(x_t) = u(1050) + \beta u(0) + \beta^2 u(0) + \dots = 1050 + 0{,}6 \cdot 0 + 0{,}6^2 \cdot 0 + \dots = 1050$

Save 1000 and live off the interest:

$U(x_t) = u(50) + \beta u(50) + \beta^2 u(50) + \dots = 50 + 0{,}6 \cdot 50 + 0{,}6^2 \cdot 50 + \dots = 50/(1 - 0{,}6) = 125$

Since 1050>125, the rational actor chooses to consume everything at once. If the actor had been very

patient, with a β of for example 0,98, he would have chosen the other course of action. Try the

calculation yourself!
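
For readers who want to check their calculation, the comparison can be sketched as follows. It assumes, as in the example, u(x) = x and a constant payoff of 50 in every period when saving; the function names are illustrative.

```python
def discounted_utility(payoffs, beta):
    """Sum of beta**t * payoff_t over a finite list of per-period payoffs."""
    return sum(beta**t * x for t, x in enumerate(payoffs))

def perpetuity(x, beta):
    """Closed form for a constant payoff x in every period, forever: x / (1 - beta)."""
    return x / (1 - beta)

def best_plan(beta):
    """Compare consuming 1050 at once with consuming 50 in every period."""
    consume_now = discounted_utility([1050], beta)
    live_off_interest = perpetuity(50, beta)
    return "consume" if consume_now > live_off_interest else "save"

print(round(perpetuity(50, 0.6), 2), best_plan(0.6))    # 125.0 consume
print(round(perpetuity(50, 0.98), 2), best_plan(0.98))  # 2500.0 save
```

With these numbers the actor is indifferent when 50/(1-β) = 1050, that is at β = 20/21, roughly 0,95; any more patient actor prefers to save.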

Extensions on calculus: Concavity and convexity, partial differentiation and optimization under constraints

In order to read some of the literature in economics and political economy, we need to make some

expansions on the calculus-apparatus that was treated above. First, we need to define two concepts

that regularly come up in the literature, namely concavity and convexity. Figure 4 shows two

examples of concave functions, one increasing concave and one decreasing concave, and figure 5

shows an increasing convex and a decreasing convex function.

Figure 4: Concave functions (curves labelled 1 and 2)

Figure 5: Convex functions (curves labelled 3 and 4)

A concave function is a function where the first order derivative decreases as the

value of x increases. Take for example the increasing and concave function, function 1 in Figure 4.

This type of function is particularly interesting for us, since most utility functions are assumed to be

increasing and concave. The marginal utility of getting one more unit is always positive, since U’(x)>0.

More is better. However, the size of the marginal utility is decreasing as we increase x. More is

better, but one unit extra is worth more in utility terms when we have little of the good to begin

with. Take money for example. An individual would always value an extra hundred Euros positively.

However, the extra hundred Euros is worth more to the person in utility terms when he is poor than

when he is rich (buying food not to starve versus buying a second bottle of Chardonnay at a fancy

club). Decreasing marginal utility means that the U’(x) function is decreasing. Mathematically, we can

express this by taking the second-order derivative: We differentiate U’(x) and get U’’(x). When a

function, f(x) is concave, f’’(x)<0. When it comes to concave utility functions, U’’(x)<0. A short way to

express an increasing concave utility function is then to say that U’(x)>0 and U’’(x)<0. Another

equivalent way to write this is: dU/dx>0 and $d^2U/dx^2 < 0$.

Convexity of a function is marked by f’’(x)>0 (or alternatively written $d^2f/dx^2 > 0$). We therefore have

the following descriptions of functions 1-4 in Figures 4 and 5:

1) f’(x)>0 and f’’(x)<0 (increasing and concave)

2) f’(x)<0 and f’’(x)<0 (decreasing and concave)

3) f’(x)>0 and f’’(x)>0 (increasing and convex)

4) f’(x)<0 and f’’(x)>0 (decreasing and convex)
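
The four cases can be illustrated with concrete functions. The examples below are hypothetical choices (they are not the curves drawn in the figures), evaluated at the arbitrary point x = 2 with numerical first and second derivatives.

```python
import math

def d1(f, x, h=1e-5):
    """Numerical first derivative."""
    return (f(x + h) - f(x - h)) / (2 * h)

def d2(f, x, h=1e-4):
    """Numerical second derivative."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2

def describe(f, x):
    direction = "increasing" if d1(f, x) > 0 else "decreasing"
    shape = "concave" if d2(f, x) < 0 else "convex"
    return f"{direction} and {shape}"

# Hypothetical example functions for cases 1) to 4), all defined for x > 0.
examples = {
    1: math.sqrt,           # f' > 0, f'' < 0
    2: lambda x: -x**2,     # f' < 0, f'' < 0
    3: lambda x: x**2,      # f' > 0, f'' > 0
    4: lambda x: 1 / x,     # f' < 0, f'' > 0
}

for case, f in examples.items():
    print(case, describe(f, 2.0))
```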

*

We have now established many of the basic tools we need to read and understand much of the

mathematically oriented social science literature, but there are some important expansions left.

What if an actor does not care only about one type of outcome, but several? A dictator might for

example care about both personal wealth and personal power. A union might for example care about

both the unemployment rate and wages. A political party might for example care about both

ideology and votes. In these cases, the utility functions must be specified so that they capture these

features. We therefore need to consider functions of multiple variables. Take the dictator. His utility

depends both on wealth, w, and power, p. We can then write his utility function as U(w,p). If we

want to express that the marginal utility of wealth is positive, it is common to write first-order

derivative expressions in the following way when we have a function of many variables: ∂U(w,p)/

∂w>0, or just ∂U/∂w>0. Similarly a positive marginal utility of power can be expressed ∂U(w,p)/

∂p>0, or ∂U/∂p>0. The expressions ∂U(w,p)/ ∂w and ∂U(w,p)/ ∂p are called partial derivatives (or

partial first-order derivatives) of the utility function. There are also other ways of writing partial

derivatives. For example $U'_w$ and $U'_p$, or just $U_w$ and $U_p$. This latter way to express partial derivatives is

for example used in Przeworski (2000).

Let us take one example of partial differentiation you are actually already familiar with! The example

is related to the multiple regression equation. What we are interested in, is often to interpret the

different regression coefficients, the βs. We say for example that $\beta_1$ is the average increase in Y when

X increases by one unit, holding all other variables constant. The operation of finding the increase

in Y when X increases, while holding other variables constant, is actually similar to finding the partial

derivative of the Y-function with respect to X.

If we had a bivariate regression function Y(X) given by Y = α + βX, then dY/dX = β. The regression

coefficient in a bivariate regression is equal to the derivative of Y with respect to X.

The logic is completely similar in a multiple regression. The only difference is that we now want to

find partial derivatives. It is important to know that when you find the partial derivative of a function

with respect to a variable, you treat all the other variables as constant when performing the

calculation. Let us define such a regression equation with three variables:

$Y = \alpha + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \varepsilon$

If we want to find $\partial Y/\partial X_1$, we use the rules of differentiation and remember to hold $X_2$ and $X_3$

constant: We get $\partial Y/\partial X_1 = 0 + \beta_1 + 0 + 0 + 0 = \beta_1$. The regression coefficients in the multiple regression

are nothing other than the partial derivatives of Y with respect to the different Xs. We look for the

increase in Y when we increase X marginally (or with one unit since we have a linear function),

holding all the other variables constant. This is how we interpret regression coefficients, and this is

exactly what we do when we take partial derivatives.
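
The link between regression coefficients and partial derivatives can be checked numerically. In this sketch the coefficient values and the evaluation point are made-up numbers, and the error term is left out since it does not depend on the Xs.

```python
def predict(x1, x2, x3, alpha=1.0, b1=2.0, b2=-0.5, b3=3.0):
    """Linear regression function with hypothetical coefficients."""
    return alpha + b1 * x1 + b2 * x2 + b3 * x3

def partial(f, point, i, h=1e-6):
    """Numerical partial derivative of f with respect to argument i,
    holding all other arguments constant."""
    up, down = list(point), list(point)
    up[i] += h
    down[i] -= h
    return (f(*up) - f(*down)) / (2 * h)

point = (4.0, 7.0, 1.0)
for i, name in enumerate(("X1", "X2", "X3")):
    print(name, round(partial(predict, point, i), 4))
```

Because the function is linear, the same three numbers come out at any other evaluation point.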

*

The dictator in our earlier example had a positive marginal utility of wealth and a positive marginal

utility of power. What if we assume that the marginal utility of power and wealth are both

decreasing (but always positive)? Then we write: $\partial^2 U(w,p)/\partial w^2 < 0$ and $\partial^2 U(w,p)/\partial p^2 < 0$. This is

completely similar to the discussion on concavity and convexity of functions of one variable. The only

difference is that we now have to explicitly state which variable we are treating when investigating

the utility function. It would have made no difference if we expanded the number of variables

beyond two; the practice is the same with regards to specifying partial first-order and second-order derivatives.

We do however have one extra expansion, and that is the so-called cross-derivative. What if the

marginal utility of wealth depends on the level of power a dictator has? We then need to investigate

the expression $\partial^2 U(w,p)/\partial w \partial p$, the cross-derivative. If $\partial^2 U(w,p)/\partial w \partial p > 0$, the marginal utility of

wealth increases in the level of power (a powerful dictator values an extra dollar more than a less

powerful dictator). If $\partial^2 U(w,p)/\partial w \partial p < 0$, the marginal utility of wealth decreases in the level of power

a dictator has. Don’t bother thinking too much about this, if you don’t comprehend it (we first

differentiate U with respect to w, and then we differentiate this new expression with respect to p).

However, it is nice to know the so-called Young’s theorem, which states that $\partial^2 U(w,p)/\partial w \partial p =$

$\partial^2 U(w,p)/\partial p \partial w$, if the second-order partial derivatives are continuous. This for example implies that if marginal utility of

wealth increases in level of power, then we automatically know that the marginal utility of power

increases in the level of wealth.
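
Young's theorem can be illustrated with a specific utility function. The sketch below assumes the hypothetical form U(w,p) = w^0.5 * p^0.5, writes down its two first-order partial derivatives by hand, and then differentiates each one numerically with respect to the other variable.

```python
def U_w(w, p):
    """Marginal utility of wealth for the assumed U = w**0.5 * p**0.5."""
    return 0.5 * w**-0.5 * p**0.5

def U_p(w, p):
    """Marginal utility of power for the same assumed utility function."""
    return 0.5 * w**0.5 * p**-0.5

def U_wp(w, p, h=1e-6):
    """First differentiate with respect to w, then numerically with respect to p."""
    return (U_w(w, p + h) - U_w(w, p - h)) / (2 * h)

def U_pw(w, p, h=1e-6):
    """First differentiate with respect to p, then numerically with respect to w."""
    return (U_p(w + h, p) - U_p(w - h, p)) / (2 * h)

w, p = 4.0, 9.0
print(round(U_wp(w, p), 6), round(U_pw(w, p), 6))
```

Both orders of differentiation give the same positive value, so in this assumed example more power raises the marginal utility of wealth and more wealth raises the marginal utility of power.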

*

An expression that often appears in rational choice literature is the marginal rate of substitution

(MRS). This concept is expressed, if we use the dictatorial utility function above, as:

(∂U/∂w)/(∂U/∂p)

Alternatively: Uw/Up

The marginal rate of substitution is therefore the ratio between the first-order partial derivatives of

the utility function. Why is this expression so important? It can be shown that the marginal rate of

substitution is equal to the amount of power a dictator is willing to give up if he achieves one unit of

wealth and utility levels are held constant. Expressed in another way: How many units of power is

the dictator willing to give up in order to achieve one extra unit of wealth. If Uw/Up =3, then the

dictator will have the same utility level if he trades three units of power for one unit of wealth. We

can describe such trade-offs between the two goods graphically by so-called indifference curves.

Remember that the utility level is held constant when “trading” wealth for power, when discussing the

MRS. Figure 6 shows such trade-offs with hypothetical indifference curves, and the utility level is

constant along each indifference curve. An indifference curve to the northeast in the diagram implies

a higher level of utility for the dictator than one to the southwest, since the dictator on this

indifference curve has more power and wealth. Do not confuse movement along an indifference

curve (trade-offs with same utility-level) with comparison of different indifference curves (different

utility-levels).

Figure 6: Indifference curves for the rational dictator (vertical axis: w; horizontal axis: p; curves labelled U=10, U=30 and U=50)

The convex shape of the indifference curve illustrates a common assumption that is often made

about an actor’s preferences, when it comes to trade-offs. We see that when the amount of power is

relatively low, the indifference curves are steep. This means that an actor needs many units of

wealth to compensate for one unit of power when the amount of power is already low. If the amount

of power is high however, the dictator needs relatively less wealth to compensate for a loss of one

unit of power. This changing trade-off implies that the MRS is not constant, but changing according

to how much of the two goods an actor has. The general rule is that an actor needs more of good 1

to compensate for a loss of good 2, if the actor already has relatively little of good 2, and vice versa.
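
How the trade-off changes along one indifference curve can be made concrete with an assumed Cobb-Douglas utility function for the dictator, U(w,p) = w^a * p^(1-a) with a = 0.5. The functional form, the utility level 10 and the function names are illustrative assumptions.

```python
def mrs(w, p, a=0.5):
    """U_w / U_p for U = w**a * p**(1 - a):
    units of power given up per extra unit of wealth, utility held constant."""
    return (a / (1 - a)) * (p / w)

def wealth_on_curve(p, u_level, a=0.5):
    """Wealth needed to reach utility u_level when power equals p."""
    return (u_level / p ** (1 - a)) ** (1 / a)

# Move along the indifference curve U = 10 from little power to much power.
for p in (2.0, 10.0, 50.0):
    w = wealth_on_curve(p, 10.0)
    wealth_per_unit_of_power = 1 / mrs(w, p)
    print(p, round(w, 2), round(wealth_per_unit_of_power, 2))
```

With little power (p = 2) the dictator needs about 25 units of wealth to make up for one unit of power; with much power (p = 50) about 0.04 units suffice.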

*

We need one last bit of mathematical knowledge important for rational choice analysts, and this bit

is related to optimization under constraints. Most political and economic decisions are not made in a

vacuum. Actors who try to make optimal choices generally face specific constraints, and they need to

take these constraints into account when they choose courses of action. Constraints can for example

be budget constraints, technological constraints, institutional constraints, or, as we will see below in

the section on game theory, constraints that are caused by other actors’ (responding) actions.

Take for example the consumer. She wants to maximize her utility from consumption, and generally

more consumption is better, that is U’(c)>0. This would alone have implied a rational choice of

infinite consumption. As we all know however, consumers face a particular constraint in the market,

namely the budget constraint. The consumer wants to optimize consumption, given that she keeps

her budget. Similarly a technological constraint binds when firms want to minimize costs. The firm

cannot go any lower in costs, given a certain target level of production, than the best available

technology allows it to.

If we therefore go back to the optimization calculus related to finding the maximum of a utility

function, we need to incorporate this feature. Let us take the consumption example, since it is

particularly illustrative. Assume that our consumer wants to maximize a utility function that depends

on the consumption of two goods x1 and x2: U(x1, x2), with Ux1 and Ux2, the first order derivatives,

both positive. However, the consumer has an exogenously given income, m, and faces exogenously

given prices, p1 and p2, in the market. The problem then boils down to:

Max U( x1, x2)

Subject to the constraint: p1 x1 + p2 x2 = m

The optimal choice of x1 and x2 can be found graphically in an indifference curve diagram, by rewriting

the budget constraint so that x2 = m/p2 - p1/p2*x1. This rewritten constraint represents a straight line,

with the slope -p1/p2. Remember now that the utility in such an indifference curve diagram increases

to the northeast, and the consumer wants to land on the highest possible utility level. The

implication is that the consumer will choose the x1 and x2 combination that coincides with the point

where the indifference curve just exactly touches the constraint. In this point the slope of the

indifference curve (which is given by the MRS) is equal to the slope of the constraint. That is where

U’1/U’2 = p1/p2. See figure 7 below:

Figure 7: Utility maximization under a budget constraint

[Figure not reproduced: x1 on the horizontal axis, x2 on the vertical axis.]

A way to find the optimum mathematically is by putting in the rewritten budget constraint, where x2

is expressed as a function of x1 into the utility function so that we get:

U( x1, x2) = U( x1, m/p2 - p1/p2*x1)

We now have a function of one variable, and we can differentiate and set the expression equal to

zero. We will then find the optimal allocation. The final answer will be equal to the solution

expressed above, namely that the MRS is equal to the price ratio. The interpretation is that in

optimum, the subjective utility tradeoff the consumer has when trading units of x1 and x2 is equal to

the relative price (p1/p2) of the units in the market. If this was not the case, and the consumer valued

x1 relatively more than the market, she would just buy more of those units, and less of x2. See

Przeworski’s (2003) chapter 2 or a microeconomics book, if you want to understand more of the

logic. For now, it is sufficient to remember that rational actors face constraints, and in optimum they

tend to adjust so that the subjective MRS, their relative evaluation of two goods at the margin, is

equal to the objective trade-offs they face between the two goods (those given by budget-market

constraints, technological constraints etc).
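
The differentiation step described above can be written out explicitly. The following is a short sketch in LaTeX notation; U_1 and U_2 are shorthand introduced here for the first order derivatives that the text calls Ux1 and Ux2. The chain rule is applied to the second argument, which depends on x1 through the rewritten budget constraint:

```latex
\begin{align*}
\frac{d}{dx_1}\, U\!\left(x_1,\ \frac{m}{p_2} - \frac{p_1}{p_2}\,x_1\right)
  &= U_1 + U_2 \cdot \left(-\frac{p_1}{p_2}\right) = 0 \\
\Longrightarrow\quad \frac{U_1}{U_2} &= \frac{p_1}{p_2}
\end{align*}
```

The left-hand side of the last line is the MRS and the right-hand side is the price ratio, which is the tangency condition found graphically.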

For the interested reader, let us take one example of a particular utility function to show the

procedure more concretely. Assume that the utility function in the example above is given by:

U( x1, x2) = x1*x2

We can further set p1 =1 and p2=2, and let m = 10.

We remember that the constraint p1 x1 + p2 x2 = m can be rewritten as x2 = m/p2 - p1/p2*x1

This gives us: x2 = 10/2 – ½* x1 = 5 - ½* x1

The procedure is now first to substitute for x2 into the utility function. We get:

U( x1, x2) = x1*(5 - ½*x1) = 5x1 - ½*(x1)^2

We remember that utility maximization was equivalent to differentiating the utility function, and

setting the resulting expression (marginal utility function) equal to zero. First we differentiate:

dU/dx1 = d(5x1 - ½*(x1)^2)/dx1 = 5 - x1

Then we set the expression equal to zero:

5 - x1 = 0, which gives x1 = 5

The optimal amount of x1 is 5. What about x2? Well, we know that x2 = 5 - ½* x1 from the rewritten

budget constraint, so that the optimal amount of x2 is equal to 5 - ½*5 = 2.5.

Let me sum up the procedure of calculating optimal choice under constraints very quickly.

1) Rewrite constraint

2) Put constraint into the utility function

3) Differentiate utility function with respect to remaining variable

4) Set the new expression equal to zero

5) Calculate optimal choice on the variable

6) Calculate the optimal choice on other variable by inserting into the constraint
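
The six steps can also be sketched numerically. The Python snippet below is a minimal illustration, not part of the original procedure: the function name solve_by_substitution is hypothetical, and steps 3 to 5 are replaced by a simple ternary search over x1, which assumes that the substituted utility function has a single peak on the budget line (true for U = x1*x2).

```python
def solve_by_substitution(utility, p1, p2, m, tol=1e-9):
    """Maximize utility(x1, x2) subject to p1*x1 + p2*x2 = m."""

    # Step 1: rewrite the constraint so that x2 is a function of x1.
    def x2_from_budget(x1):
        return m / p2 - (p1 / p2) * x1

    # Step 2: put the constraint into the utility function.
    def reduced(x1):
        return utility(x1, x2_from_budget(x1))

    # Steps 3-5: instead of differentiating by hand, search for the peak
    # of the one-variable function (assumption: it is single-peaked).
    lo, hi = 0.0, m / p1  # x1 can range from zero to spending everything on it
    while hi - lo > tol:
        a = lo + (hi - lo) / 3
        b = hi - (hi - lo) / 3
        if reduced(a) < reduced(b):
            lo = a
        else:
            hi = b
    x1 = (lo + hi) / 2

    # Step 6: insert the optimal x1 into the constraint to get x2.
    return x1, x2_from_budget(x1)


# The example from the text: U = x1*x2, p1 = 1, p2 = 2, m = 10.
x1_opt, x2_opt = solve_by_substitution(lambda x1, x2: x1 * x2, 1, 2, 10)
print(round(x1_opt, 4), round(x2_opt, 4))
```

With the numbers from the example, the search lands approximately on x1 = 5 and x2 = 2.5, the same answer as the calculation by hand.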

There is another way of calculating optimization under constraints, which is performed by

constructing a so-called Lagrange-function. When following this procedure, one arrives at the same

result as if one rewrites the constraint and puts it into the utility function. You don’t have to worry

about learning this technique. Look up a textbook in mathematics (Sydsæter, 2000; Gill, 2006) if you

are interested. Przeworski (2003) uses this technique, but don’t bother with the calculations. Just

make sure that you remember that it is optimization under constraints that is going on, and be sure

to understand the interpretation of the final result!
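
For curious readers only, here is a brief sketch of how the Lagrange approach looks for the two-good consumer problem above. The multiplier λ and the shorthand U_1, U_2 for the first order derivatives are notation introduced here; see the textbooks mentioned for a proper treatment.

```latex
\begin{align*}
\mathcal{L}(x_1, x_2, \lambda) &= U(x_1, x_2) + \lambda\,(m - p_1 x_1 - p_2 x_2) \\
\frac{\partial \mathcal{L}}{\partial x_1} &= U_1 - \lambda p_1 = 0, \qquad
\frac{\partial \mathcal{L}}{\partial x_2} = U_2 - \lambda p_2 = 0 \\
\Longrightarrow\quad \frac{U_1}{U_2} &= \frac{p_1}{p_2}
\end{align*}
```

With U = x1*x2, p1 = 1, p2 = 2 and m = 10, the two conditions give x2 = λ and x1 = 2λ. Inserting these into the budget constraint gives 2λ + 2λ = 10, so λ = 2.5, x1 = 5 and x2 = 2.5, the same result as with the substitution method.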

Game theory

We will just go through some of the concepts of game theory very quickly here, and interested

students should look up a game theory book to get a more complete picture. Very good

introductions are Watson (2002) in English and Hovi (2008) in Norwegian, and a more complex

presentation related to game theory in political science with several superb examples is McCarty and

Meirowitz (2007).

Game theory is a theoretical framework on strategic interaction between (rational) actors, also

known as players. Optimization in a game theoretical framework requires that the players think

through how other players’ actions will affect the pay-off from their own action. That is, the pay-offs

you get depend not only on your own actions, but also on what others do. A phrase that

characterizes such a setting is strategic interaction. Not only are players assumed to be rational, but

the players assume that everybody else is rational as well.

You can now look up a qualitative description of a prisoner’s dilemma or a chicken game if you don’t

remember these games. Descriptions of these games are easy to find, also on the internet, so I

will not give one here. Reading one will give you a feeling for what is going on in a game

setting. Remember however that these games are only examples of games (some students tend to

think that game theory is equivalent to the prisoner’s dilemma game).

Game theory is very general, and is widely used in political economic research. We have descriptions

of games between politicians and electorates, games between countries, games between politicians

and businesses, games between dictators and democratic movements etc. What is common is (as

mentioned) that all players are rational, and that they try to make projections of how other players

will act, and bring these beliefs into their calculations.

There are different ways of describing a game. In a static game, where we interpret the game as if

the players were moving simultaneously (or at least that they cannot observe the other player’s

action before moving), we usually model the game by using the normal form representation. Figure 8

shows a normal form game:

Figure 8: Game presented in the normal form

Player 1 \ Player 2    Left     Right
Up                     (2,0)    (3,1)
Down                   (5,2)    (2,4)

The normal form presents the players of the game, their potential strategies (Up and Down for 1 and

Left and Right for 2), and the pay-offs (utilities) for the actors given the different outcomes. The pay-off for Player 1 is the number to the left of the comma. We see that the pay-offs are not only

dependent on the player’s own strategy, but also on the other player’s choice of strategy.

A strategy is a complete plan of action for a player in a game: a strategy specifies an action

for every contingency. This is not important in the static game, since there are no contingencies (one

cannot observe what the other player does), but in a sequential game, which we will come to later,

it becomes important to have a strategy that specifies what to do in each possible

situation.

A strategy is dominant if the strategy yields higher pay-offs than any other strategy, independent of

which action the other player takes (we here assume only two players for simplicity, but

there can of course be more than two players in a game). A rational actor always chooses a dominant

strategy. In games with more than two strategies, we might have strategies that are dominated by

other strategies, even if we do not have any universal dominant strategy. A rational player never

chooses dominated strategies, so these strategies can be skipped from further consideration in an

analysis. In the prisoner’s dilemma (PD), not telling on the other prisoner is a dominated strategy,

and telling is a dominant strategy. Telling always gives a higher pay-off independent of what the

other player does, and both prisoners therefore choose to tell. Note that the outcome related to the

strategy combination {tell,tell} in the PD, is worse for both prisoners than the outcome {not tell, not

tell}.
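
The dominance check can be made concrete in a few lines of Python. Since this note does not give numerical pay-offs for the prisoner’s dilemma, the sketch below uses the pay-offs from Figure 8 instead. The dictionary layout and the function name strictly_dominates are illustrative choices made here, not standard notation.

```python
# Pay-offs from Figure 8: (Player 1 strategy, Player 2 strategy) -> (u1, u2)
PAYOFFS = {
    ("Up", "Left"): (2, 0),
    ("Up", "Right"): (3, 1),
    ("Down", "Left"): (5, 2),
    ("Down", "Right"): (2, 4),
}
ROWS = ["Up", "Down"]     # Player 1's strategies
COLS = ["Left", "Right"]  # Player 2's strategies


def strictly_dominates(player, s, t):
    """True if strategy s gives `player` a strictly higher pay-off than
    strategy t against every strategy of the other player."""
    if player == 1:
        return all(PAYOFFS[(s, c)][0] > PAYOFFS[(t, c)][0] for c in COLS)
    return all(PAYOFFS[(r, s)][1] > PAYOFFS[(r, t)][1] for r in ROWS)


right_dominates_left = strictly_dominates(2, "Right", "Left")
up_dominates_down = strictly_dominates(1, "Up", "Down")
down_dominates_up = strictly_dominates(1, "Down", "Up")
print(right_dominates_left, up_dominates_down, down_dominates_up)
```

With these pay-offs, Right is a dominant strategy for Player 2, while Player 1 has no dominant strategy: her best choice depends on what Player 2 does.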

It is not given that rational players interacting in a game are able to arrive at the best possible

solution. {tell,tell} is not Pareto optimal, since it is possible to make at least one actor better off by

moving to another situation, namely {not tell, not tell}. However, {tell, tell} is the equilibrium of the

game, since no player has an individual incentive to deviate. Make sure that you are able to separate

these concepts. Here are the two definitions of Pareto improvement and Pareto optimality:

Given a set of alternative allocations for a set of actors, a movement from one allocation to another

that can make at least one individual better off without making any other individual worse off is

called a Pareto improvement.

An allocation is Pareto optimal when no further Pareto improvements can be made, that is when no

one can be made better off without anyone else being made worse off.

These two concepts are very important in economics, and Pareto Optimality (PO) is often used as a

normative criterion to judge whether a situation is “good”, or more precisely efficient. Notice that

the concept does not say much about distribution. PO does however indicate that we do not waste

resources, since there are no alternatives that can make at least one actor better off without making

anyone else worse off. The PD equilibrium is inefficient in this sense (it is not PO).
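
To see the two definitions at work, here is a small Python sketch applied to the four outcomes of the game in Figure 8 (used because the note gives no numerical pay-offs for the PD). The function names pareto_improves and pareto_optimal are hypothetical helpers introduced for this illustration.

```python
# Pay-offs from Figure 8: (Player 1 strategy, Player 2 strategy) -> (u1, u2)
PAYOFFS = {
    ("Up", "Left"): (2, 0),
    ("Up", "Right"): (3, 1),
    ("Down", "Left"): (5, 2),
    ("Down", "Right"): (2, 4),
}


def pareto_improves(new, old):
    """Moving from `old` to `new` is a Pareto improvement if nobody is
    worse off and at least one actor is strictly better off."""
    nobody_worse = all(n >= o for n, o in zip(new, old))
    someone_better = any(n > o for n, o in zip(new, old))
    return nobody_worse and someone_better


def pareto_optimal(payoffs):
    """Outcomes from which no Pareto improvement is available."""
    return [
        outcome
        for outcome, u in payoffs.items()
        if not any(pareto_improves(v, u) for v in payoffs.values())
    ]


optimal = pareto_optimal(PAYOFFS)
print(optimal)
```

Two outcomes come out as Pareto optimal, {Down, Left} with pay-offs (5,2) and {Down, Right} with pay-offs (2,4). They distribute the pay-offs very differently, which illustrates that the criterion says little about distribution.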

The concept of equilibrium is not a normative criterion, but a description of a plausible solution to a

game (or the outcome in a market, or in a political process). The equilibrium concept entails the

notion that there are no individual forces that can push or pull the outcome away from the situation

we are in. Equilibria are assumed to have a certain degree of stability to them because of this

property. Situations that are not equilibria are assumed to be more unstable, and will ultimately

move towards an equilibrium (there can be more than one equilibrium).

There are several different specifications of the equilibrium concept, and the most commonly used in

simple game theory is the Nash equilibrium. The definition of a Nash equilibrium is that all players

have chosen their best strategy, given the other players’ strategies. That is, given the other players’

choices, no one can make themselves individually better off by deviating from their chosen strategy

in the Nash equilibrium. No one regrets their choices in a Nash equilibrium, once they observe what

the others have done. Another way to express the Nash equilibrium is by saying that all players play

their best responses to the other players’ chosen strategies.

In the game in Figure 8, {Up, Right} is the only Nash equilibrium. The reason is that Player 1 cannot

gain by switching from Up to Down given that Player 2 plays Right (she would go from 3 to 2), and Player 2

cannot gain by going from Right to Left, given that Player 1 is playing Up (she would go from 1 to 0). Notice

that {Down, Left} would have been a Pareto-improvement from {Up, Right}. Both players would have

preferred this outcome, but it is not a feasible equilibrium. Why? Well, Player 2 would now have had

an incentive to go Right, and the situation is therefore not an equilibrium.
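
The reasoning above can be checked mechanically by testing every strategy combination for profitable individual deviations. The following Python sketch does this for the game in Figure 8; it only looks for equilibria in pure strategies, and the function name nash_equilibria is an illustrative choice.

```python
from itertools import product

# Pay-offs from Figure 8: (Player 1 strategy, Player 2 strategy) -> (u1, u2)
PAYOFFS = {
    ("Up", "Left"): (2, 0),
    ("Up", "Right"): (3, 1),
    ("Down", "Left"): (5, 2),
    ("Down", "Right"): (2, 4),
}
ROWS = ["Up", "Down"]     # Player 1's strategies
COLS = ["Left", "Right"]  # Player 2's strategies


def nash_equilibria(payoffs, rows, cols):
    """Return all pure-strategy combinations where neither player can
    gain by deviating alone, given the other player's strategy."""
    equilibria = []
    for r, c in product(rows, cols):
        u1, u2 = payoffs[(r, c)]
        # Player 1 holds the column fixed and compares her rows.
        p1_best = all(payoffs[(r_alt, c)][0] <= u1 for r_alt in rows)
        # Player 2 holds the row fixed and compares her columns.
        p2_best = all(payoffs[(r, c_alt)][1] <= u2 for c_alt in cols)
        if p1_best and p2_best:
            equilibria.append((r, c))
    return equilibria


equilibria = nash_equilibria(PAYOFFS, ROWS, COLS)
print(equilibria)
```

The only combination that survives is {Up, Right}. {Down, Left} is rejected because Player 2 can raise her pay-off from 2 to 4 by switching to Right.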

When game theorists look for descriptive solutions to a game, they look for equilibria. This does not

mean that the equilibria are normatively good, or preferred by the actors themselves. In

many games the equilibria are Pareto optimal, but this is not the case in the game in Figure 8.

*

The last concept we are going to deal with is the concept of a sequential game. In a sequential game,

there is a sequence to the actions of the players, in the sense that one player can observe the other

player’s moves before making his own move. In these games, the Nash equilibrium is not a

sufficiently strong equilibrium concept, since it yields some implausible solutions. We need another,

stronger equilibrium concept, which is known as Subgame Perfect Nash Equilibrium (SPNE). I will not

go into the definition of the concept here, since it requires the understanding of what a subgame is,

but just know that this is the solution concept used in sequential games.

The reason why Nash equilibrium is too weak as a solution concept in sequential games is that it

cannot deal with non-credible threats and non-credible promises. SPNE can. Fortunately, there is a

simple way of finding solutions to sequential games, and it is known as backwards induction. The

best way to understand this procedure is by looking at a concrete example. Sequential games are

modeled by a game tree, which has a very intuitive structure, as seen in Figure 9. The circles are

called nodes and the lines are called branches. A specified player acts at a node, and the different

alternative actions are illustrated by the branches.

Figure 9: A game tree

Iran moves first:
    Peace -> (1,1)
    Bomb -> Israel moves:
        Bomb back -> (0,0)
        Passive -> (2,-1)

Pay-offs are written as (Iran, Israel).

The game tree illustrates a situation where Iran has a nuclear bomb, and must decide whether to

bomb Israel or to keep the peace. If the Iranians decide to bomb, Israel must decide between

remaining passive or bombing back. What will Iran do? The best situation for Iran is to nuke Israel if

Israel thereafter remains passive. This yields a utility (or pay-off) of 2 for Iran. However, this is not a

plausible solution to the sequential game. We can show this by backward induction. We start at the

last node, and ask what Israel will do if they are bombed. Remaining passive yields a utility of -1 and

bombing back yields a utility of 0. Israel is rational and will opt for bombing back, since this yields the

highest utility. Iran knows that Israel is a rational player, and it can therefore eliminate the option of

bombing Israel and Israel remaining passive. The only two real options are choosing peace or

choosing to engage in a mutual nuclear war. Peace yields a utility of 1 and reciprocal bombing yields

a utility of 0. Iran, knowing that Israel will bomb back if bombed, therefore chooses the peace option. The outcome of the game is peace.

We have now solved the simple sequential game by backwards induction. We start at the last node in

the game, and eliminate non-plausible branches. We then move further up the game tree and

eliminate more non-plausible actions, given that the player knows that the next player to move in

the game will choose a certain action should a particular node be reached. The players evaluate

realistic alternatives, and do not engage in wishful thinking. They are rational, and they know that

other players are rational as well.
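
Backwards induction is easy to express as a recursive procedure. The Python sketch below solves the game in Figure 9; the nested-dictionary representation of the tree and the function name backward_induction are assumptions made for this illustration, and the returned plan is kept simple by assuming that each player moves at only one node, as in this game.

```python
# Terminal nodes are pay-off tuples (Iran, Israel); decision nodes are dicts.
TREE = {
    "player": "Iran",
    "moves": {
        "Peace": (1, 1),
        "Bomb": {
            "player": "Israel",
            "moves": {"Bomb back": (0, 0), "Passive": (2, -1)},
        },
    },
}
PLAYER_INDEX = {"Iran": 0, "Israel": 1}


def backward_induction(node):
    """Solve the tree from the last nodes upwards.

    Returns (payoffs, plan): the pay-offs that result from rational play,
    and the action each player would choose at her node.
    """
    if isinstance(node, tuple):  # end of the game, nothing left to choose
        return node, {}
    idx = PLAYER_INDEX[node["player"]]
    best_action, best_payoffs, plan = None, None, {}
    for action, child in node["moves"].items():
        # First find out what would happen further down this branch.
        payoffs, sub_plan = backward_induction(child)
        plan.update(sub_plan)
        # The player at this node compares her own pay-offs only.
        if best_payoffs is None or payoffs[idx] > best_payoffs[idx]:
            best_action, best_payoffs = action, payoffs
    plan[node["player"]] = best_action
    return best_payoffs, plan


outcome, plan = backward_induction(TREE)
print(outcome, plan)
```

The procedure reports that Israel would bomb back if bombed and that Iran therefore chooses Peace, with pay-offs (1,1). Note that the plan also records Israel’s choice at a node that is never reached, which is exactly what a complete strategy requires.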

We also have games where the actors are uncertain about the other player’s type; that is, his

preference structure. In these games, the solution concept is called Bayesian Nash Equilibrium for

static games and Perfect Bayesian Equilibrium for sequential games, but I will not treat these games

and solution concepts.

Some encouraging words

If you have been able to capture a third of the knowledge in this text after the first read-through, you

should be pleased with yourself! Economists need at least a year, or maybe more, to fully grasp these

issues, so you should not expect to understand them completely. However, I advise you to take a

break, and go through the text again. Later, you can have this text on the side of your desk when you

read substantive articles using rational choice approaches. It is not important to know how to do the

calculations yourself at first, but it is important to understand the general concepts. You do not have

to perform calculations yourself to check whether scholarly articles are correct mathematically (they

probably are). However, you should have some vague sense of what is going on, and most importantly:

You should be able to understand the authors’ qualitative description of their results.

Literature

Debreu, Gerard (1959) The Theory of Value. New Haven: Yale University Press.

Elster, Jon (1989) Nuts and Bolts for the Social Sciences, Cambridge: Cambridge University Press.

Gill, Jeff (2006) Essential Mathematics for Political and Social Research. Cambridge: Cambridge University Press.

Hovi, Jon (2008) Spillteori: En innføring. Oslo: Universitetsforlaget.

McCarty, Nolan and Adam Meirowitz (2007) Political Game Theory: An Introduction. Cambridge:

Cambridge University Press.

Przeworski, Adam (2003) States and Markets – A Primer in Political Economy. Cambridge: Cambridge

University Press.

Sydsæter, Knut (2000) Matematisk analyse. Bind 1. Oslo: Gyldendal Akademisk.

Watson, Joel (2002) Strategy: An Introduction to Game Theory. New York: W.W. Norton.
