Enterprise Software - UC Berkeley School of Information

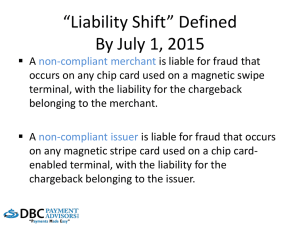

Software Liability Debate Report

Group B: DeLynn Bettencourt, Kevin Cheng, Yuval Elshtein, Fong Hui, Qintao Zhang

Introduction

Every day, thousands of computers around the world are illegally breached because of software security flaws. This situation will have negative repercussions if security flaws in software are not found and fixed. For example, virus creators exploit these security flaws with internet worms,[1] resulting in fraud, the release of confidential information, and the loss of business due to downtime. The consequences of such exploits are damages to businesses, organizations, and the general public. The National Institute of Standards and Technology issued a study in July 2002 that claimed poor software quality costs the industry nearly $60 billion a year, with customers bearing about two-thirds of that cost.

The question of who is responsible has led to raging debates between parties with opposing interests. This report provides an overview of this debate and presents issues and statistics from both sides. Please note that some end users require customized IT software solutions (i.e., mission-critical and enterprise solutions) that are agreed upon via complex contracts, but we have chosen to focus our argument on software product providers, whose relation to the market is closer to that of a product supplier than that of a solution provider.

Pro-Liability: Vendors should be liable for software security issues

The majority of security holes are due to poor programming and a lack of quality control. Software manufacturers usually place far more emphasis on getting a new system out of the door with profitable features than on ensuring that the system is sufficiently secure before it’s released.
Because of this, enterprise software users have to spend extra resources to identify and track security flaws during the deployment process.[2] There are no other consumer products for which customers have to do so much to ensure product safety.

If software manufacturers do not suffer sufficiently weighty negative consequences for putting out bad software, then they will only respond to the benefits of getting software out faster, and they will continue to produce insecure software. The computer industry has no real economic incentive to improve software safety in those areas where its customers lack choice; in other words, there is no incentive to fix security flaws when customers suffer from lock-in.[3] Oracle, for instance, controls the market for enterprise database software. Its users, with a huge amount of data on the system, are so reliant on the product that they have no way to threaten Oracle with shifting to another provider.

Software security issues are often exacerbated by a lack of transparency of the system to the customer: not enough information about the system is disclosed, so the customer is not informed enough to know how to handle some security issues. Additionally, customers often have no real way of evaluating the software before signing the contract with the vendors.
To make matters even worse, Oracle, Microsoft, and other software manufacturers include provisions in their licenses that forbid criticism of their software without permission.[5] These End User License Agreements (EULAs),[6] commonly called “shrink wrap” or “click wrap” contracts, eliminate consumers’ ability to seek compensation if the software does not perform as expected. Some firms even threaten researchers who publish security holes with civil and criminal litigation under copyright law. These privileges enable software vendors to hide known software flaws and escape liability for software security issues.

In most markets, manufacturers are held liable for products that are predictably dangerous. When manufacturers in industrial markets are held responsible for the failure of their products, their safety records often improve. For instance, imposing liability and creating manufacturing standards for cars has greatly improved cars’ safety. If software vendors are held liable for security holes, then a similar improvement (in the form of adequate testing for security holes or flaws) can be expected.[7] If software manufacturers are forced by the shadow cost of security to improve the security of their products, then at the very least they will reap the benefits of doing things right the first time by avoiding the large expense of patching, damage to their reputation, and potential loss of sales of their software.

[1] For a good definition, see: http://en.wikipedia.org/wiki/Computer_worm
[2] Source: “Manufacturers should be liable when computer bugs leave consumers in the lurch” http://www.bos.frb.org/economic/nerr/rr2002/q3/perspective.pdf
[3] Source: “Manufacturers should be liable when computer bugs leave consumers in the lurch” http://www.bos.frb.org/economic/nerr/rr2002/q3/perspective.pdf
[5] Source: “Manufacturers should be liable when computer bugs leave consumers in the lurch” http://www.bos.frb.org/economic/nerr/rr2002/q3/perspective.pdf
Summary of supporting points (Pro-liability):

- There is an economic incentive for companies to focus on getting software out faster and to neglect the quality of the software. If software manufacturers do not suffer any negative consequences for putting out bad software, then they will only respond to the benefits of getting software out faster, and they will continue to produce bad software.
- Market forces are not adequate to motivate companies to invest in software security, so the government needs to step in to regulate; holding vendors financially liable is the only way to economically motivate them to fix security flaws in software before distribution.
- The government should be involved because software affects entities in the public domain (for example, the Internet), and any potential threat to public infrastructure is a matter the government has an interest in regulating.
- The expectation that end users are responsible for security issues within software code is faulty. At best, users can be expected to have basic knowledge of security breach prevention (such as firewalls and anti-virus applications) and to patch their systems, but they should not be expected to detect and fix flaws in the software code itself. The analogy is that a car owner is expected to service and maintain his or her vehicle but is not expected to be able to fix the car when there is a mechanical failure. Responsibility for fixing security flaws should rest with IT professionals or the software providers.
- The lack of transparency of software to the user often makes it difficult for the user to handle software security issues without the help of the software provider.
- EULAs, commonly known as “shrink wrap” or “click wrap” contracts, eliminate consumers’ ability to seek compensation if the software does not perform as expected.
These privileges enable software vendors to hide known software flaws and escape liability for software security issues. If these EULAs hold up in court, then software vendors will not be held responsible if they ship programs without adequately testing for security holes.[8]
- If users are allowed to sue software manufacturers for damages caused by security vulnerabilities, then the shadow cost of producing insecure software will rise drastically for software manufacturers, making it economically viable to devote extra resources to ensuring software security.
- The costs of producing more secure software will be passed on to users, but the increased cost of software should be offset by the fact that the software will cause fewer problems, which are costly for the end user to remedy.

[6] End-User License Agreements
[7] Source: “Manufacturers should be liable when computer bugs leave consumers in the lurch” http://www.bos.frb.org/economic/nerr/rr2002/q3/perspective.pdf

Con: Vendors should not be responsible for software security issues

There is a question of whether holding software vendors liable for product quality (in terms of security) can realistically help eliminate security flaws. Not only may vendor liability not be the appropriate tool for reducing the number and severity of software security holes, but holding vendors liable may also cause negative side effects on the software industry, such as the elimination of small-to-medium scale software vendors due to the heightened shadow cost that vendor liability imposes.

Software is different from other consumer products, such as cars. Therefore, their liability models cannot be compared. The biggest difference is that people don't purposely abuse cars seeking defects as they do with software. There are simply no real “hackers” for cars, while there are large groups of people finding security flaws in widely used products such as Microsoft Windows.
In order for software to be compromised, a third-party attacker is necessary, and he is “aided and abetted” by those who find bugs and security flaws. How can software providers be wholly responsible when they are not the ones with the malicious intent to exploit users’ machines? Moreover, no software vendors intentionally create defects, and at the same time, it is impossible to create absolutely perfect software.

Finally, a liability case could take years to move through the courts, while software has a relatively short life cycle and could even become obsolete before the case comes to trial. Thus, even if software liability is confirmed, other than the potential for financial damages to be awarded to the plaintiff, the outcome could be essentially moot. Also, this would burden the already backed-up legal system with more cases.

[8] Source: “Manufacturers should be liable when computer bugs leave consumers in the lurch” http://www.bos.frb.org/economic/nerr/rr2002/q3/perspective.pdf

Summary of opposing (con) points (Anti-liability):

- Software is a uniquely complex product that will always have some defects. It is impossible to make error-free software. It is, however, possible that software developers don’t have all the skills they need to develop secure code and that they need to be trained better.
- According to research published in Technology Strategy and Management magazine, the median level of defects reported by customers in the year after release was 0.15 per thousand lines of code during 2002-2003, compared with 0.6 in 1990. This shows that software vendors have made remarkable progress, even if software is still far from perfect.
- The Internet is an open system. Enterprise software is designed for large-scale data sharing and data transactions, and it has to run on the open Internet to fulfill its functions efficiently.
Therefore, because we have an open system, we must deal with the inconveniences of hacker activities.
- Software manufacturers cannot predict how software will be used or where and how it will be installed. This lack of information makes it impossible for manufacturers to guarantee software for fitness of use.
- Users can adapt enterprise software through configuration. Users demand this flexibility, but this characteristic of enterprise software makes the testing process more complicated, so securing this software becomes more difficult.
- Many small-to-medium software manufacturers would leave the business if faced with liability suits, leaving a few remaining large manufacturers to serve a large and growing marketplace. In other words, only the largest software manufacturers would be able to afford the risk imposed by the added liability, thus eliminating the competition.
- Under threat of litigation, software vendors might consider it too risky to release a new product or write new functions, and customers would not be able to enjoy the benefits of these new software products and features. Innovation would slow or be destroyed completely, because it would not be worth taking the risk to develop new functions or applications.
- An alternative to litigation is to require software companies to provide a "software safety data sheet" that includes detailed information about how their software operates, with the same information that host intrusion-prevention solutions (HIPS) currently discover in the aftermarket. This way, consumers would have more knowledge about the quality of the products and be able to defend properly against hackers’ attacks.
- Litigation will make people reluctant to integrate their software with anyone else’s, since they cannot be sure how the software will be used and whether its security will be compromised after the integration.
Lock-in will become more prevalent because open file formats, plug-ins, and other interoperable functions mean that control is lost over some inputs or program operations, and software manufacturers won’t want to risk that.
- Exposure to more lawsuits does not fully guarantee that software vendors will properly address security testing.
- Wider use of more popular software allows more security issues to be reported, so the liability costs borne by its vendors will be disproportionate to those borne by vendors of less widely used software; the rate at which flaws are found is a function of how many people are using the software.
- The unleashing of a virus that turns software holes into security damage for computer users is a criminal act. No matter how careful software vendors might be, hackers will most likely be able to find ways to break the systems. There is no such thing as an absolutely secure piece of software. Holding software vendors wholly responsible would place the blame onto a third party, instead of on the real criminals.
- So far, only commercially produced software has been addressed. Open source software has the advantage that freely available source code encourages peer review, which in turn makes software less buggy because there are more pairs of eyes finding bugs. Since open source software is free and the code is provided, consumers should be able to test the software quality and modify it as needed. There is no reason to place liability on those open source providers who do not receive income from their software.

Interesting Facts

The current statistics on flawed software:[9]

- The Sustainable Computing Consortium reported that there can be as many as 20 to 30 bugs per 1,000 lines of software code in most software applications.
- The Cutter Consortium claimed that of the software firms it polled, 32 percent admitted releasing software with too many defects, 38 percent said their software lacked an adequate quality assurance program, and 27 percent said they do not conduct formal quality reviews.
- In his book titled Software Quality: Analysis and Guidelines for Success, author T. Capers Jones reported that:
  o No method of removing software defects or errors is 100 percent effective
  o Formal design and code inspections average about 65 percent in defect-removal efficiency
  o Most forms of testing are less than 30 percent efficient
- Few companies have a system of peer code review in place, yet the Institute of Electrical and Electronics Engineers (IEEE) concluded that peer reviews of software will catch 60 percent of all coding defects.

Conclusion

We understand that the issues with flawed software are grave and that it’s necessary to reassess our methods of addressing software security. However, we also feel that there is no clear-cut way of defining security in software. The reason is that even a system that meets the customer’s specification is still not secure if it doesn't adequately control access, protect integrity, provide availability, and otherwise meet security policy goals. Software is by nature a very complex entity that caters to a wide spectrum of users, and it is practically impossible to write flawless software code. The general attitude of software providers toward security, that they will provide users with patches to fix security flaws, is not adequate. Rather, adequate measures include having good policies, the appropriate tools, and trained administrators who can create a dependable and secure computing environment.
In general, the main players in security are humans, and by nature humans have flaws. As a result of the role human nature plays in this whole ordeal, software security has become a very vague, not to mention controversial, topic around the world. Hence, regulating the vague notion of software security by holding only one party, the software manufacturers, liable is a flawed proposition. Group B hopes to argue the “con” side: that software providers should not be legally liable for security flaws and vulnerabilities.

[9] Source: “Can Software Kill You?” http://www.technewsworld.com/story/33398.html