Data Preprocessing for Automotive Engine Tune-up

Chi-man VONG, Io-weng CHAN, Chio-pang CHANG, Wai-kei LEONG

{cmvong, da11229, da11220, da11324}@umac.mo

Department of Computer and Information Science, Faculty of Science and Technology, University of Macau

Abstract: Data preprocessing [4, 6, 7] is an important procedure for mathematical modelling. A mathematical model estimated from a training data set performs better if the data set has been properly preprocessed before being passed to the modelling procedure. In this paper, different preprocessing methods are examined on automotive engine data. The data sets produced by the different preprocessing methods are passed to neural networks for model estimation. The generalization of each estimated model is then verified on a test set, which reveals the effect of each preprocessing method. The results of the preprocessing methods for automotive engine data are presented in the paper.

Key words: Automotive engine setup, PCA, CCA, Kernel PCA, Kernel CCA, Data preprocessing

1. Introduction

Mathematical modelling [1, 2, 3] is very common in many applications because of its capability of estimating an unknown and complex mathematical model covering the application data. However, there is a natural law, GIGO (Garbage In, Garbage Out): no matter how good the modelling tool is, if garbage data is passed in, then garbage results are returned. Hence data preprocessing is a must for high accuracy of modelling results. Traditional statistical methods concentrate on data redistribution and data sampling in order to provide consistency within the data. However, most statistical methods are not capable of handling high data dimensionality. To overcome this problem, dimensionality reduction is usually applied. Yet removing input features may cause information loss because the input features themselves are highly (and perhaps nonlinearly) correlated. For this reason, several preprocessing methods from machine learning, support vector machines (SVM) and statistics are compared to verify their ability to handle the issues of high dimensionality and nonlinear correlation.

In the comparison, a testing application of automotive engine tune-up is selected since it involves a moderate number of dimensions (> 30) and the input features are nonlinearly correlated.

2. Data Preprocessing

Formally, data preprocessing is a procedure to clean and transform the data before it is passed to another modelling procedure. Data cleaning involves removing the noise and outliers in the data set, while data transformation tries to reduce the number of irrelevant inputs, i.e., to reduce the dimensionality of the input space. As data cleaning is straightforward, applying the standard process of "zero mean and unit variance", the focus here is on data transformation. The following subsections introduce the common data transformation methods [6, 7].
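
The "zero mean and unit variance" step mentioned above can be sketched as follows. This is a minimal illustration in Python with NumPy, not the authors' MATLAB code; the function name `standardize`, the guard for constant columns and the toy data are assumptions made for the example.

```python
import numpy as np

def standardize(X, eps=1e-12):
    """Scale each column of X to zero mean and unit (sample) variance."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    std = X.std(axis=0, ddof=1)           # sample standard deviation
    std = np.where(std < eps, 1.0, std)   # assumption: leave constant columns unscaled
    return (X - mean) / std, mean, std

# Hypothetical toy data: 5 samples, 2 features on very different scales.
X_demo = np.array([[1000.0, 0.1], [2000.0, 0.3], [3000.0, 0.2],
                   [4000.0, 0.5], [5000.0, 0.4]])
Z_demo, mu_demo, sd_demo = standardize(X_demo)
```

The returned `mean` and `std` would be reused to scale any test data, so that training and test inputs share the same transformation.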

2.1 Principal Component Analysis

A well-known and frequently used technique for dimensionality reduction of the input space is linear Principal Component Analysis (PCA). Suppose one wants to map vectors $x \in \mathbb{R}^n$ into lower-dimensional vectors $z \in \mathbb{R}^m$ with $m < n$. One proceeds by estimating the covariance matrix

$$\hat{\Sigma}_{xx} = \frac{1}{N-1} \sum_{k=1}^{N} (x_k - \bar{x})(x_k - \bar{x})^T \tag{1}$$

where $\bar{x} = \frac{1}{N} \sum_{k=1}^{N} x_k$ and $x_k$ is the vector of the $k$th data point in the training set, and then computing the eigenvalue decomposition

$$\hat{\Sigma}_{xx} u_i = \lambda_i u_i \tag{2}$$

where $u_i$ is the $i$th eigenvector of $\hat{\Sigma}_{xx}$ and $\lambda_i$ is the $i$th eigenvalue of $\hat{\Sigma}_{xx}$. By selecting the $m$ largest eigenvalues and the corresponding eigenvectors, one obtains the $m$ transformed variables (score variables):

$$z_i = u_i^T (x - \bar{x}) \tag{3}$$

The remaining $n - m$ variables are neglected because they contribute little to the variance of the data. Note that the transformed variables are no longer real physical variables.
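
As an illustration of Eqs. (1) to (3), the following Python/NumPy sketch estimates the covariance matrix, keeps the $m$ leading eigenvectors and computes the score variables. The function names and the synthetic data are hypothetical and are not taken from the authors' implementation.

```python
import numpy as np

def pca_fit(X, m):
    """Return (x_bar, U, lam): mean, top-m eigenvectors as columns, eigenvalues."""
    X = np.asarray(X, dtype=float)
    x_bar = X.mean(axis=0)
    Xc = X - x_bar
    cov = Xc.T @ Xc / (X.shape[0] - 1)      # Eq. (1)
    lam, U = np.linalg.eigh(cov)            # Eq. (2), eigenvalues in ascending order
    order = np.argsort(lam)[::-1][:m]       # indices of the m largest eigenvalues
    return x_bar, U[:, order], lam[order]

def pca_transform(X, x_bar, U):
    """Score variables z_i = u_i^T (x - x_bar), Eq. (3), one row per sample."""
    return (np.asarray(X, dtype=float) - x_bar) @ U

rng = np.random.default_rng(0)
# Hypothetical data: 200 samples in R^3 whose third feature is almost a
# linear combination of the first two, so two components suffice.
A = rng.normal(size=(200, 2))
X_demo = np.column_stack([A, A @ [0.5, -1.0] + 0.01 * rng.normal(size=200)])
x_bar, U, lam = pca_fit(X_demo, m=2)
Z = pca_transform(X_demo, x_bar, U)
```

Because the eigenvectors are orthonormal, the score variables in `Z` are uncorrelated and their variances equal the retained eigenvalues.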

2.2 Kernel Principal Component Analysis

Linear PCA performs well in dimensionality reduction when the input features are linearly correlated. In the nonlinear case, however, PCA cannot give good performance. Hence PCA is extended to a nonlinear version under the SVM formulation, called Kernel PCA (KPCA). KPCA involves solving the following system of equations in $\alpha$:

$$\Omega \alpha = \lambda \alpha \tag{4}$$

where $\Omega_{kl} = K(x_k, x_l)$ for $k, l = 1, \ldots, N$. The kernel function $K$ is chosen as the RBF (Radial Basis Function), i.e., $K(x, y) = \exp(-\|x - y\|^2 / 2\sigma^2)$, with $\sigma$ the user-predefined standard deviation. The vector of variables $\alpha = [\alpha_1; \ldots; \alpha_N]$ is an eigenvector of the problem and $\lambda \in \mathbb{R}$ is the corresponding eigenvalue. In order to obtain the maximal variance one selects the eigenvector corresponding to the largest eigenvalue. The transformed variables become

$$z_i(x) = \sum_{l=1}^{N} \alpha_{i,l} K(x_l, x) \tag{5}$$

where $\alpha_i = [\alpha_{i,1}; \ldots; \alpha_{i,N}]$ is the eigenvector corresponding to the $i$th largest eigenvalue, $i = 1, 2, \ldots, p$, and $p$ is the largest number such that the eigenvalue $\lambda_p$ of the eigenvector $\alpha_p$ is nonzero. One more point to note is that the eigenvectors $\alpha_i$ should satisfy the following normalization condition, which gives the corresponding directions in feature space unit length:

$$\alpha_i^T \alpha_i = \frac{1}{\lambda_i}, \quad i = 1, 2, \ldots, p \tag{6}$$

where $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_p > 0$, i.e., nonzero.
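
A minimal Python/NumPy sketch of the KPCA steps above is given below. It follows the eigenvalue problem as written in this section, without centring the kernel matrix, and the function names, tolerance `tol` and random data are assumptions for illustration only.

```python
import numpy as np

def rbf_kernel(A, B, sigma):
    """K(x, y) = exp(-||x - y||^2 / (2 sigma^2)) for every pair of rows."""
    sq = (np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :]
          - 2.0 * A @ B.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def kpca_fit(X, sigma, tol=1e-10):
    """Solve Omega alpha = lambda alpha and rescale so alpha_i^T alpha_i = 1/lambda_i."""
    Omega = rbf_kernel(X, X, sigma)
    lam, alpha = np.linalg.eigh(Omega)           # ascending order
    lam, alpha = lam[::-1], alpha[:, ::-1]       # largest eigenvalue first
    keep = lam > tol                             # the p nonzero eigenvalues
    lam, alpha = lam[keep], alpha[:, keep]
    alpha = alpha / np.sqrt(lam)                 # normalization condition
    return lam, alpha

def kpca_transform(X_new, X_train, alpha, sigma, m):
    """z_i(x) = sum_l alpha_{i,l} K(x_l, x) for the first m components."""
    return rbf_kernel(X_new, X_train, sigma) @ alpha[:, :m]

rng = np.random.default_rng(0)
X_demo = rng.normal(size=(30, 4))                # hypothetical training inputs
lam, alpha = kpca_fit(X_demo, sigma=1.0)
Z_demo = kpca_transform(X_demo, X_demo, alpha, sigma=1.0, m=2)
```

The kernel width `sigma` has to be chosen by the user; the value 1.0 here is arbitrary.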

2.3 Canonical Correlation Analysis

In canonical correlation analysis (CCA), one is interested in finding the maximal correlation between the projected variables $z_x = w^T x$ and $z_y = v^T y$, where $x \in \mathbb{R}^n$, $y \in \mathbb{R}^m$ denote given random vectors with zero mean. CCA also involves an eigenvalue problem, which is solved for the eigenvectors $w$, $v$:

$$C_{xx}^{-1} C_{xy} C_{yy}^{-1} C_{yx} w = \rho^2 w$$

$$C_{yy}^{-1} C_{yx} C_{xx}^{-1} C_{xy} v = \rho^2 v \tag{6}$$

where $C_{xx} = E[xx^T]$, $C_{yy} = E[yy^T]$, $C_{xy} = E[xy^T]$, $C_{yx} = C_{xy}^T$, and the eigenvalues $\rho^2$ are the squared canonical correlations.

Only one of the eigenvalue equations needs to be solved since the solutions are related by

$$C_{xy} v = \rho \lambda_x C_{xx} w$$

$$C_{yx} w = \rho \lambda_y C_{yy} v \tag{7}$$

where

$$\lambda_x = \lambda_y^{-1} = \sqrt{\frac{v^T C_{yy} v}{w^T C_{xx} w}} \tag{8}$$
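
The CCA eigenvalue problem can be illustrated with the following Python/NumPy sketch, which solves only the equation for $w$ and recovers $v$ from the relation between the two solutions. The function name and the synthetic data are hypothetical, and sample covariances stand in for the expectations.

```python
import numpy as np

def cca_first_pair(X, Y):
    """Largest canonical correlation rho and its projection vectors w, v."""
    X = X - X.mean(axis=0)                  # the text assumes zero-mean x and y
    Y = Y - Y.mean(axis=0)
    N = X.shape[0]
    Cxx, Cyy, Cxy = X.T @ X / N, Y.T @ Y / N, X.T @ Y / N
    # Cxx^{-1} Cxy Cyy^{-1} Cyx w = rho^2 w
    M = np.linalg.solve(Cxx, Cxy) @ np.linalg.solve(Cyy, Cxy.T)
    evals, evecs = np.linalg.eig(M)
    k = np.argmax(evals.real)
    rho = np.sqrt(max(evals[k].real, 0.0))
    w = evecs[:, k].real
    # v is proportional to Cyy^{-1} Cyx w, so the second equation is not solved
    v = np.linalg.solve(Cyy, Cxy.T @ w)
    return rho, w, v

rng = np.random.default_rng(1)
# Hypothetical data: the first output is a noisy copy of the first input.
X_demo = rng.normal(size=(500, 3))
Y_demo = np.column_stack([X_demo[:, 0] + 0.1 * rng.normal(size=500),
                          rng.normal(size=500)])
rho, w, v = cca_first_pair(X_demo, Y_demo)
corr = np.corrcoef(X_demo @ w, Y_demo @ v)[0, 1]
```

In this sketch `corr`, the sample correlation of the two projected variables, agrees with `rho` up to rounding, which is a convenient sanity check on the solution.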

2.4 Kernel Canonical Correlation Analysis

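In kernel canonical correlation analysis (KCCA), the formulation is similar to CCA except that the kernel trick is applied. The kernel chosen is again the RBF. One solves the following system in $\alpha$, $\beta$, which serve as the projection vectors:

$$\begin{bmatrix} 0 & \Omega_{c,2} \\ \Omega_{c,1} & 0 \end{bmatrix} \begin{bmatrix} \alpha \\ \beta \end{bmatrix} = \lambda \begin{bmatrix} \nu_1 \Omega_{c,1} + I & 0 \\ 0 & \nu_2 \Omega_{c,2} + I \end{bmatrix} \begin{bmatrix} \alpha \\ \beta \end{bmatrix} \tag{9}$$

where

$$\Omega_{c,1} = M_c \Omega_1 M_c, \quad \Omega_{c,2} = M_c \Omega_2 M_c, \quad M_c = I - 1_v 1_v^T / N$$

$$\Omega_{1,kl} = \varphi(x_k)^T \varphi(x_l) = K(x_k, x_l), \quad \Omega_{2,kl} = \varphi(y_k)^T \varphi(y_l) = K(y_k, y_l) \quad \text{for } k, l = 1, \ldots, N \tag{10}$$

Here $\alpha$, $\beta$ are the Lagrange multipliers and $\nu_1$, $\nu_2$ are the regularization constants of the formulation in [7], $\varphi$ is the feature map induced by the kernel, $I$ is an identity matrix, and $1_v \in \mathbb{R}^N$ is a vector of ones.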
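
The KCCA system can be sketched in Python/NumPy as below: the two kernel matrices are centred, the block matrices of the generalized eigenvalue problem are assembled, and the problem is solved by reducing it to an ordinary eigenvalue problem. The function names, the parameter values and the choice of the eigenpair with the largest real eigenvalue as the leading pair are assumptions of this sketch, not details confirmed by the paper.

```python
import numpy as np

def rbf_gram(A, sigma):
    """RBF kernel matrix exp(-||a_k - a_l||^2 / (2 sigma^2))."""
    sq = np.sum(A**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * A @ A.T, 0.0)
    return np.exp(-d2 / (2.0 * sigma**2))

def kcca_matrices(X, Y, sigma=1.0, nu1=1.0, nu2=1.0):
    """Left- and right-hand block matrices of the KCCA system."""
    N = X.shape[0]
    I = np.eye(N)
    Mc = I - np.ones((N, N)) / N              # centring matrix M_c
    O1 = Mc @ rbf_gram(X, sigma) @ Mc         # Omega_{c,1}
    O2 = Mc @ rbf_gram(Y, sigma) @ Mc         # Omega_{c,2}
    Z = np.zeros((N, N))
    A = np.block([[Z, O2], [O1, Z]])
    B = np.block([[nu1 * O1 + I, Z], [Z, nu2 * O2 + I]])
    return A, B

def kcca_first_pair(A, B):
    """Eigenpair of A z = lambda B z with the largest real eigenvalue."""
    evals, evecs = np.linalg.eig(np.linalg.solve(B, A))
    k = np.argmax(evals.real)
    z = evecs[:, k].real
    N = z.size // 2
    return evals[k].real, z[:N], z[N:]        # lambda, alpha, beta

rng = np.random.default_rng(2)
X_demo = rng.normal(size=(20, 3))             # hypothetical inputs
Y_demo = np.sin(X_demo[:, :1]) + 0.05 * rng.normal(size=(20, 1))
A, B = kcca_matrices(X_demo, Y_demo)
lam, alpha, beta = kcca_first_pair(A, B)
```

Since the right-hand matrix is positive definite for positive `nu1`, `nu2`, multiplying by its inverse is well defined; a dedicated generalized eigenvalue solver would be the more usual choice for larger $N$.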

3. Automotive Engine Tune-up

Modern automotive petrol engines are controlled by the electronic control unit (ECU). The engine performance, such as power output, torque, brake specific fuel consumption and emission level, is significantly affected by the setup of control parameters in the ECU. Many parameters are stored in the ECU in a look-up table format. Normally, the car engine performance is obtained through dynamometer tests. Traditionally, the setup of the ECU is done by the vehicle manufacturer. However, in recent years programmable ECUs and ECU read-only memory (ROM) editors have been widely adopted for many passenger cars. These devices allow non-OEM engineers to tune up their engines according to different add-on components and drivers' requirements.

Current practice of engine tune-up relies on the experience of the automotive engineer, who has to handle a huge number of combinations of engine control parameters. The relationship between the input and output parameters of a modern car engine is a complex multi-variable nonlinear function, which is very difficult to determine because a modern petrol engine is an integration of thermo-fluid, electromechanical and computer control systems. Consequently, engine tune-up is usually done by trial and error. Vehicle manufacturers normally spend many months tuning an ECU optimally for a new car model. Moreover, the performance function is engine dependent as well. Knowing the performance function/model lets the automotive engineer predict whether a new engine setup yields a gain or a loss in performance, and the function can also help the engineer to set up the ECU optimally.

In order to acquire the performance model for an engine, modelling techniques such as neural networks, support vector machines and statistical regression could be employed. No matter which method is used for modelling, the data must be preprocessed.

4. Experiment Setup

In order to compare the previous methods, a set of 300 training data points is acquired through the dynamometer. In practice, there are many input control parameters, and they are also ECU and engine dependent. Moreover, the engine horsepower and torque curves are normally obtained at full-load condition. The following common adjustable engine parameters and environmental parameters are selected to be the input (i.e., engine setup) at engine full-load condition.

x = < Ir, O, tr, f, Jr, d, a, p > and y = <Tr>

where

r: Engine speed (RPM) and r = {1000, 1500, 2000, 2500, …, 8000}

Ir: Ignition spark advance at the corresponding engine speed r (degree before top dead centre)

O: Overall ignition trim (degree before top dead centre)

tr: Fuel injection time at the corresponding engine speed r (millisecond)

f: Overall fuel trim (%)

Jr: Timing for stopping the fuel injection at the corresponding engine speed r (degree before top dead centre)

d: Ignition dwell time at 15V (millisecond)

a: Air temperature (°C)

p: Fuel pressure (Bar)

Tr: Engine torque at the corresponding engine speed r (Nm)
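
The size of the input vector follows from the definitions above. The short Python sketch below counts the entries of x and y, assuming that the engine speeds run from 1000 to 8000 RPM in steps of 500 and that each quantity with subscript r contributes one value per speed; the column names are hypothetical. Under these assumptions the input dimension is 50, which agrees with the original dimension reported in Table 1.

```python
# Engine speeds r = 1000, 1500, ..., 8000 RPM (step of 500 is an assumption)
speeds = list(range(1000, 8001, 500))

# Order follows x = <Ir, O, tr, f, Jr, d, a, p>; True marks per-speed quantities.
layout = [("I", True), ("O", False), ("t", True), ("f", False),
          ("J", True), ("d", False), ("a", False), ("p", False)]

x_columns = []
for name, per_speed in layout:
    if per_speed:
        x_columns += [f"{name}_{r}" for r in speeds]   # one entry per engine speed
    else:
        x_columns.append(name)                          # single scalar entry

y_columns = [f"T_{r}" for r in speeds]                  # torque at each speed

print(len(speeds), len(x_columns), len(y_columns))      # 15 50 15
```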

After the training data are acquired, they are passed to each of the previously mentioned preprocessing methods to determine which method is best suited to automotive engine data. The methods are implemented in a commercial computing package (MATLAB) under Windows XP.

5. Results

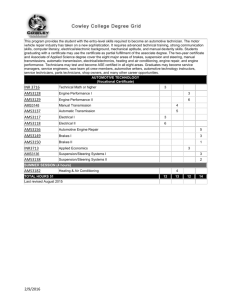

Results are separated into two parts: dimensionality reduction and retained accuracy. Table 1 shows the effect of dimensionality reduction for the different methods at 5% information loss, i.e., the dimensions that together contribute only 5% of the information in the training data set are discarded.

| Method | Original Dimension | Reduced Dimension |
|--------|--------------------|-------------------|
| PCA    | 50                 | 45                |
| KPCA   | 50                 | 41                |
| CCA    | 50                 | 46                |
| KCCA   | 50                 | 43                |

Table 1. Comparison of dimensionality reduction for different methods
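
One way to apply a 5% information-loss rule is to keep the smallest number of components whose eigenvalues account for at least 95% of the eigenvalue sum. The paper does not state how "information" is measured, so treating it as the eigenvalue share is an assumption of the Python sketch below, and the eigenvalue spectrum is invented for illustration.

```python
def components_to_keep(eigenvalues, max_loss=0.05):
    """Smallest m whose m largest eigenvalues retain at least 1 - max_loss of the total."""
    lam = sorted(eigenvalues, reverse=True)
    total = sum(lam)
    kept = 0.0
    for m, value in enumerate(lam, start=1):
        kept += value
        if kept >= (1.0 - max_loss) * total:
            return m
    return len(lam)

# Hypothetical eigenvalue spectrum (not the paper's data).
demo_eigenvalues = [5.0, 3.0, 1.0, 0.6, 0.3, 0.1]
m_demo = components_to_keep(demo_eigenvalues)   # 4: the last two carry 4% of the total
```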

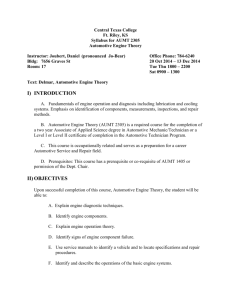

Another result is the accuracy retained, i.e., the generalization on unseen inputs of the models built using the reduced-dimension data sets. To compare the retained accuracy, one mathematical model is built on the original data set and four additional models are built on the reduced data sets, giving five mathematical models in total. In our case, neural networks [1, 3, 8] are used as the modelling tool because they are mature and built into MATLAB. The generalization of the five models is tested on a common test set of 20 cases that are also acquired from the dynamometer. Table 2 shows the accuracy results. The results show that KPCA performs best among all preprocessing (or no preprocessing) methods.

| Method           | Accuracy on test set |
|------------------|----------------------|
| No preprocessing | 92.2%                |
| PCA              | 86.3%                |
| KPCA             | 93.1%                |
| CCA              | 89.1%                |
| KCCA             | 91.2%                |

Table 2. Comparison of generalization of models built upon the reduced training sets

6. Conclusions

Data preprocessing is a useful procedure for data transformation, especially when the dimensionality of a training data set is high. With lower dimensions, the computational burden is eased and the models estimated from the reduced training set may even perform better, not only in training accuracy but also in generalization.

In this paper, different preprocessing methods are tested and their results are compared. In the application domain of automotive engine setup, KPCA is found to be the best among the methods tested.

References

[1] Bishop C., 1995. Neural Networks for Pattern Recognition. Oxford University Press.

[2] Borowiak D., 1989. Model Discrimination for Nonlinear Regression Models. Marcel Dekker.

[3] Haykin S., 1999. Neural Networks: A Comprehensive Foundation. Prentice Hall.

[4] Pyle D., 1999. Data Preparation for Data Mining. Morgan Kaufmann.

[5] Seeger M., 2004. Gaussian processes for machine learning. International Journal of Neural Systems, 14(2):1-38.

[6] Smola A., Burges C., Drucker H., Golowich S., Van Hemmen L., Muller K., Scholkopf B., Vapnik V., 1996. Regression Estimation with Support Vector Learning Machines, available at http://www.first.gmd.de/~smola

[7] Suykens J., Gestel T., De Brabanter J., De Moor B. and Vandewalle J., 2002. Least Squares Support Vector Machines. World Scientific.

[8] Traver M., Atkinson R. and Atkinson C., 1999. Neural Network-based Diesel Engine Emissions Prediction Using In-Cylinder Combustion Pressure. SAE Paper 1999-01-1532.