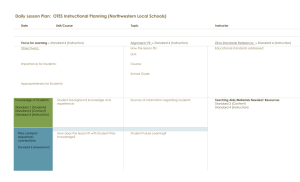

Using Machine Learning in Instructional Planning
