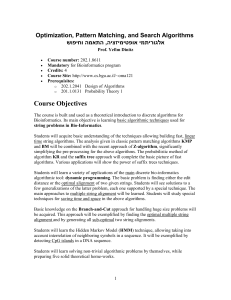

Chapter 9 The Wide Window Approach

Chapter 9 A Review of the Exact String Matching Algorithms

We have presented and discussed many algorithms. It is time for us to review them.

First of all, we must note that we should not try to rank all of these algorithms. Every algorithm has its own strengths as well as its weaknesses, and different algorithms suit different conditions. It is meaningless to say that there is a best string matching algorithm.

Let us imagine that our pattern string is rather short and our text is not too long either. In this case, we may simply use Chu’s Algorithm introduced in Section 4.3, the elimination oriented algorithm introduced in Section 2.10, or the convolution algorithm introduced in Chapter 2.

Let us consider the case where T = catgacgctagt and P = gatc. Suppose we use Chu’s Algorithm. Although it was introduced to find LSP(X, Y), we can easily modify it so that it can be used as an exact string matching algorithm. It proceeds as follows, where each row shows the vector D:

| Position | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| T | c | a | t | g | a | c | g | c | t | a | g | t |
| Initially | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Consider p1 = g | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |
| Consider p2 = a | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Consider p3 = t | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

Since no 1 exists in D, we report “No”.

Suppose we use the elimination oriented method. We would proceed as follows:

Consider p1 = g. D = (4, 7, 11).

Consider p2 = a. D = (5).

Consider p3 = t. D = ().

We may now report “No”.

Let us use the convolution method with T = catgacgctagt and the pattern written in reverse, ctag. The incidence vectors of the first three pattern characters in T are:

| Character | Incidence vector in T |
|---|---|
| g | 000100100010 |
| a | 010010000100 |
| t | 001000001001 |

After the shift and logical “and” operations, the result is the zero vector. We report “No”.

We now discuss the convolution approach. The biggest advantage of convolution is that no window is produced. It is easy to program and, besides, we may conveniently use the bit-parallel approach. That is, we perform a pre-processing on the text string to produce all of the incidence vectors.
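The bit-parallel idea can be sketched in Python as follows. This is only an illustrative sketch under stated assumptions, not the implementation from Chapter 2: the function names `incidence_vectors`, `as_bits` and `find_all` are hypothetical, positions are 0-based, and Python's unbounded integers stand in for machine words. Bit i of a vector corresponds to text position i, so the left shift of a printed vector becomes a right shift of the integer.

```python
def incidence_vectors(text: str) -> dict[str, int]:
    """Pre-process the text: bit i of vectors[ch] is 1 iff text[i] == ch."""
    vectors: dict[str, int] = {}
    for i, ch in enumerate(text):
        vectors[ch] = vectors.get(ch, 0) | (1 << i)
    return vectors


def as_bits(vector: int, n: int) -> str:
    """Print a vector with text position 0 on the left, as in the trace above."""
    return "".join("1" if (vector >> i) & 1 else "0" for i in range(n))


def find_all(vectors: dict[str, int], n: int, pattern: str) -> list[int]:
    """Return all 0-based start positions of pattern in a text of length n."""
    m = len(pattern)
    if m == 0 or m > n:
        return []
    acc = (1 << (n - m + 1)) - 1  # every valid start position is a candidate
    for j, ch in enumerate(pattern):
        # Bit i of (vector >> j) is 1 iff text[i + j] == ch.
        acc &= vectors.get(ch, 0) >> j
        if acc == 0:
            return []  # no candidate survives, so stop early
    return [i for i in range(n - m + 1) if (acc >> i) & 1]


text = "catgacgctagt"
vectors = incidence_vectors(text)       # computed once per text
print(as_bits(vectors["g"], len(text)))  # 000100100010
print(find_all(vectors, len(text), "gatc"))  # []
```

Because the vectors depend only on the text, the same `vectors` dictionary can be reused for any number of patterns.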
Suppose the size of the vocabulary set is 4. We only need 4 incidence vectors. Then we just perform some left shift and logical “and” operations. Of course, the size of the text string must not be too large; otherwise, the bit-parallel approach cannot be used. This is its major disadvantage.

If we use the bit-parallel approach, the pre-processing is performed on the text string, which means that it remains useful for different pattern strings later. This is an advantage of the convolution approach.

If the convolution approach is used, we should use the early termination approach. Actually, this is physically equivalent to prefix finding. If the size of the vocabulary is very large, this early termination approach is quite efficient.

As for the suffix tree approach, we must admit that it is not very practical because it is hard to write a program to do the tree searching. Note that the suffix tree is not a binary tree. There is a linear algorithm to construct a suffix tree, but it is rather complicated. The advantage of using the suffix tree is that the pre-processing, namely the construction of the suffix tree, is performed on the text string, which means that this pre-processing is useful for different pattern strings. Another reason to be familiar with suffix trees is that they are academically interesting. There is a large body of research results on suffix trees, and any scholar should have some knowledge of them.

Although it is not very practical to use the suffix tree approach in exact string matching, it is practical to use the suffix array. Having a suffix array, we may use binary search or hashing to solve the exact string matching problem.

We now discuss the Reverse Factor Algorithm. We would like to point out that this is indeed a very good exact string matching algorithm. Our main job is to find LSP(W, P), where W is the window.
If LSP(W, P) is long, we cannot shift very far. But probability theory tells us that it is quite unlikely that LSP(W, P) is long. The reader may take a pen, randomly generate two strings X and Y, and then find LSP(X, Y). You will almost always find that LSP(X, Y) is a short one. Thus we can often perform a long shift.

The reader may be worried that LSP(W, P) is found at run-time and thus the algorithm may not be very efficient. But, as we pointed out in the above paragraph, LSP(W, P) is usually very short. Thus, it usually takes only a few steps to finish the process of finding LSP(W, P).

As to the algorithms to find LSP(W, P), we introduced four of them. Most of them are easy to implement and efficient. Let us consider a case here: W = actggctatgac and P = gtatgcaccatg. Suppose we use Chu’s Algorithm.

Consider w12 = c. D = (000001011000).

Consider w11 = a. D = (000000100000).

Consider w10 = g. D = (000000000000).

We report that the length of LSP(W, P) is 0, and we may shift 12 steps.

If we use the bit-parallel method, we do a pre-processing on the pattern string. Since the Reverse Factor Algorithm is a window sliding method, this pre-processing is useful for all windows.

In the chapter discussing the Reverse Factor Algorithm, we introduced the filtering concept. This filtering is quite straightforward. We test whether a suffix of W with a particular length appears in P or not. If it does not, we ignore this window. This is actually an exact string matching problem. So, how are we going to solve the problem? Do we have to use any sophisticated algorithm in this case? Note that the suffix will be a short one, say with length at most 4, and the pattern is also relatively short. Therefore, it is quite easy to solve the problem. For example, suppose the data are W = actggctatgac and P = gtatgcaccatg, and we want to see whether the suffix tgac of W appears in P. As shown in the above discussion, it only takes 3 steps to conclude the search.
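The trace above can be reproduced with a short Python sketch. It is an illustration under stated assumptions rather than Chu’s Algorithm as defined in Section 4.3: the function name `lsp_length` is hypothetical, a set of 0-based start positions plays the role of the vector D, and the shift is assumed to be the pattern length minus the length of LSP(W, P).

```python
def lsp_length(window: str, pattern: str) -> tuple[int, int]:
    """Scan the window from right to left.

    Returns (length of the longest suffix of window that is a prefix of
    pattern, number of window characters examined).
    """
    m = len(pattern)
    # starts holds every position in pattern where the suffix scanned so far
    # begins. Before any character is read, the empty suffix "begins" at 1..m.
    starts = set(range(1, m + 1))
    best = 0
    steps = 0
    for length, ch in enumerate(reversed(window), start=1):
        steps += 1
        # Extend each occurrence one character to the left.
        starts = {i - 1 for i in starts if i > 0 and pattern[i - 1] == ch}
        if not starts:
            break  # the current suffix is not a factor of pattern
        if 0 in starts:
            best = length  # the current suffix is also a prefix of pattern
    return best, steps


W = "actggctatgac"
P = "gtatgcaccatg"
best, steps = lsp_length(W, P)
print(best, steps)     # 0 3
print(len(P) - best)   # 12, the assumed shift
```

The same scan answers the filtering question: it stops after 3 characters because gac does not occur in P, so the longer suffix tgac cannot occur in P either.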
Although filtering was introduced in the Reverse Factor Algorithm, it can be used in almost any algorithm. The reader should remember this.

We now come to the Horspool Algorithm. The greatest advantage of this algorithm is that it is easy to implement. Besides, it is a constant space algorithm because the result of pre-processing, namely the location table, has length equal to the size of the alphabet set, which is considered a constant.

But there is a fundamental problem with this algorithm: the average shift is short. The reader is encouraged to write down any string and then construct the location table. You will find that the chance that there is a large number in the table is very small. Consider the case presented above where P = gtatgcaccatg. Only when the last character of W is g do we get a long shift. We recommend that the reader use Liu’s Algorithm, which performs much better than the Horspool Algorithm.

The KMP Algorithm is perhaps the most famous exact string matching algorithm. Researchers also like to compare their algorithms with it. But this algorithm suffers from a disadvantage: it scans from left to right and stops as soon as a mismatch occurs. Let us assume that the mismatch occurs after j steps. Then we can shift at most j steps. Unfortunately, j is usually quite small because it is unlikely that there exists a long prefix of the window which is exactly equal to a prefix of the pattern. If j = 4, the probability of having such a prefix is roughly 0.0004. Because of this, we cannot expect the KMP Algorithm to be efficient, since we have to open a large number of windows. Yet the KMP Algorithm is academically interesting because, in the worst case, its time-complexity is O(n).

In the original KMP Algorithm paper, it was mentioned that a filtering mechanism can be used. We wonder why researchers seldom mention that mechanism. Actually, the KMP Algorithm should include that part.
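To make the earlier remark about the Horspool location table concrete, here is a small Python sketch. It assumes the standard Horspool shift rule: the shift for a character is the distance from its rightmost occurrence among the first m - 1 pattern characters to the end of the pattern, and m for characters that do not occur there. This may differ in presentation from the location table defined earlier in the book, and the function name `horspool_shifts` is hypothetical.

```python
def horspool_shifts(pattern: str, alphabet: str) -> dict[str, int]:
    """Shift table indexed by the last character of the window (assumed rule)."""
    m = len(pattern)
    shifts = {ch: m for ch in alphabet}  # default: character absent from prefix
    # The last pattern character is excluded, so every shift is at least 1.
    for i, ch in enumerate(pattern[:-1]):
        shifts[ch] = m - 1 - i  # later occurrences overwrite earlier ones
    return shifts


table = horspool_shifts("gtatgcaccatg", "acgt")
print(table)  # {'a': 2, 'c': 3, 'g': 7, 't': 1}
print(sum(table.values()) / len(table))  # 3.25
```

For this pattern of length 12, only a window ending in g yields a shift longer than 3, and the unweighted average over the four characters is 3.25.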
The filtering mechanism improves the KMP Algorithm to a large degree. Consider Fig. 9.1.

Fig. 9.1 An illustration of the KMP Algorithm

From the above figure, we can see that a good substring U is a short one. In fact, the best situation is that there is no suffix of P(1, j) equal to a prefix of P(1, j). This leads to the proposal of the Boyer and Moore Algorithm.

The Boyer and Moore Algorithm scans from right to left. Suppose a suffix U of the window W is found to be exactly the same as a suffix of P. If U also appears in P to the left of this suffix, we can move P as shown in Fig. 9.2.

Fig. 9.2 U appearing to the left of it in P

Suppose U is unique in P. Then we may find a suffix V of P, contained in U, which is a prefix of P, and we can move P as shown in Fig. 9.3.

Fig. 9.3 U being unique

Since the Boyer and Moore Algorithm scans from right to left, it is more efficient than the KMP Algorithm. Yet it suffers from one disadvantage: its pre-processing is much more complicated. As can be seen, if U is unique in P, we can shift P much farther to the right.

Both the KMP and the Boyer and Moore Algorithms utilize such a substring U in P which exactly matches a substring in W. Ideally, U should be unique and short. But U is not pre-determined. Since it is obtained at run-time, this is not guaranteed. Many algorithms are therefore what we call “selective scanning order” algorithms. They scan neither from left to right nor from right to left. They have a mechanism to determine a scanning order in the hope that the resulting slide is a long one.