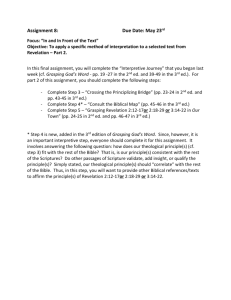

The Brain's Concepts: - EECS Instructional Support Group Home Page
