MS Word document - cognition research

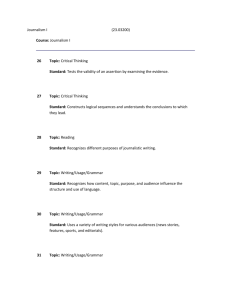
