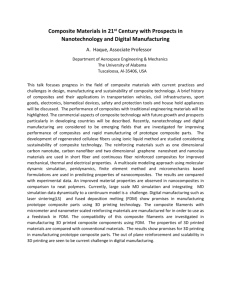

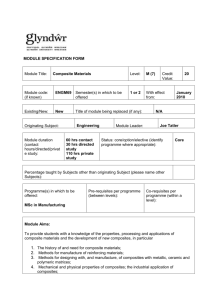

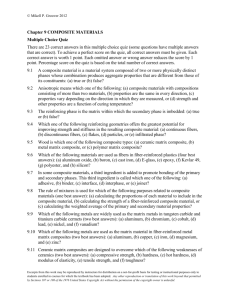

The psychology of face construction: giving evolution a helping hand

Charlie D. Frowd (1*), Melanie Pitchford (2), Vicki Bruce (3), Sam Jackson (1), Gemma Hepton (1), Maria Greenall (1), Alex H. McIntyre (4), Peter J.B. Hancock (4)

(1) School of Psychology, University of Central Lancashire, PR1 2HE

(2) Department of Psychology, University of Lancaster, LA1 4YF

(3) School of Psychology, Newcastle University, NE1 7RU

(4) Department of Psychology, University of Stirling, FK9 4LA

*Corresponding author: Charlie Frowd, Department of Psychology, University of Central Lancashire, Preston PR1 2HE, UK. Email: cfrowd@uclan.ac.uk. Phone: (01772) 893439.

Running head: Evolving human faces

Applied Cognitive Psychology

Abstract

Face construction by selecting individual facial features rarely produces recognisable images. We have been developing a system called EvoFIT that works by the repeated selection and breeding of complete faces. Here, we explored two techniques. The first blurred the external parts of the face, to help users focus on the important central facial region. The second manipulated an evolved face using psychologically-useful ‘holistic’ scales: age, masculinity, honesty, etc. Using face construction procedures that mirrored police work, a large benefit emerged for the holistic scales; the benefit of blurring accumulated over the construction process. Performance was best using both techniques: EvoFITs were correctly named 24.5% of the time on average, compared to 4.2% for faces constructed using a typical ‘feature’ system. It is now possible, therefore, to evolve a fairly recognisable composite from a 2 day memory of a face, the norm for real witnesses. A plausible model to account for the findings is introduced.

Acknowledgements

The research was gratefully supported by grants from the Engineering and Physical Sciences Research Council (EP/C522893/1) and Crime Solutions at the University of Central Lancashire, Preston, UK.

Keywords: facial composite, EvoFIT, PRO-fit, recall, recognition.

Witnesses to and victims of crime are confronted with a series of daunting tasks to bring a criminal to justice. They normally are required to describe the crime and those involved. If the police have a suspect, witnesses (and victims) may be asked to try to identify the offender from an identity parade. If not, and in the absence of other evidence such as DNA or CCTV footage, they may be asked to build a likeness of the offender’s face. This is a visual representation known as a facial composite and is traditionally produced by witnesses describing the offender’s face and then selecting individual facial features from a kit of parts: hair, face shape, eyes, nose, mouth, etc.

There are several methods that the police use to construct composites in this way. These include sketch artists, people skilled in portraiture who use pencils and crayons to draw the face by hand, and computer software systems such as E-FIT and PRO-fit in the UK, and FACES and Identikit 2000 in the US. In all these methods, witnesses select from a collection of facial features to build the face. The techniques have been the subject of considerable research. The general conclusion is that they are capable of producing good likenesses of a face in certain circumstances when a person works directly from a photograph (Davies et al., 2000; Frowd et al., 2007b). When relying on a fairly recent memory of a face, composite quality is worse and the ability to name such images is about 20% correct (Brace et al., 2000; Bruce et al., 2002; Davies et al., 2000; Frowd et al., 2004, 2005b, 2007b). Image quality is considerably worse, however, when the memory is several days old, which is the normal situation for real witnesses; composite naming levels are typically only a few percent correct (Frowd et al., 2005a, 2005c, 2007b, 2007c). The reason for this poor performance has been known for over 30 years (Davies, Shepherd & Ellis, 1978).
Face recognition essentially emerges from the parallel processing of individual facial features and their spatial relations on the face (see Bruce & Young, 1986, for a review). In contrast, face production is traditionally based more on the recall of information: the description and selection of individual features. While we are excellent at recognising a familiar face, and quite good at recognising an unfamiliar one, we are generally poor at describing individual features and selecting facial parts (for a recent review, see Frowd et al., 2008a). Several research laboratories have been working on alternative software systems. These include EFIT-V (Gibson et al., 2003) and EvoFIT (Frowd et al., 2004) in the UK, and ID in South Africa (Tredoux et al., 2006). The basic operation of these ‘recognition-based’ systems is similar. They present users with a range of complete faces to select. The selected faces are then ‘bred’ together, to combine characteristics, and produce more faces for selection. When repeated a few times, the systems converge on a specific identity and a composite is ‘evolved’ using a procedure that is fairly easy to do: the selection of complete faces. One problem with the evolutionary systems is the complexity of the search space. They contain a set of face models, each capable of generating plausible but different looking faces. The models, which are described in detail in Frowd et al. (2004), capture two aspects of human faces: shape information, the outline of features and head shape, and pixel intensity or texture, the greyscale colouring of the individual features and overall skin tone. The number of faces that can be generated from these models is huge, as is the search space. The goal then is to converge on an appropriate region of space before a user is fatigued by being presented with too many faces. 
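
The select-and-breed cycle described above can be illustrated with a toy genetic algorithm. This is only a minimal sketch under stated assumptions, not EvoFIT's implementation: each face is reduced to a hypothetical list of model coefficients, the witness's choices are simulated by a distance to a known target vector, and the crossover, mutation and keep-the-best settings are illustrative values that do not come from the paper.

```python
import random


def breed(parent_a, parent_b, rng, mutation_rate=0.1, mutation_sd=0.3):
    """Uniform crossover of two coefficient vectors, then Gaussian mutation."""
    child = [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]
    return [g + rng.gauss(0.0, mutation_sd) if rng.random() < mutation_rate else g
            for g in child]


def evolve(target, screen_size=18, n_select=6, generations=3, seed=0):
    """Evolve coefficient vectors towards `target`; return (best face, history).

    `history` holds the best squared distance before evolving and after each
    generation. The distance function is a stand-in for a human witness.
    """
    rng = random.Random(seed)

    def distance(face):
        return sum((f - t) ** 2 for f, t in zip(face, target))

    # Initial screen of random faces (hypothetical standard-normal coefficients).
    population = [[rng.gauss(0.0, 1.0) for _ in target] for _ in range(screen_size)]
    history = [min(distance(face) for face in population)]

    for _ in range(generations):
        # Simulated selection: the faces closest to the target are "chosen".
        selected = sorted(population, key=distance)[:n_select]
        # Keep the current best face (an assumption) and breed the rest.
        children = [breed(*rng.sample(selected, 2), rng)
                    for _ in range(screen_size - 1)]
        population = selected[:1] + children
        history.append(min(distance(face) for face in population))

    return min(population, key=distance), history


if __name__ == "__main__":
    best, history = evolve(target=[0.5] * 10)
    print("best distance per generation:", [round(d, 2) for d in history])
```

Because the best face is carried forward unchanged, the best distance can never get worse from one generation to the next; with a real witness there is no such guarantee, which is one reason the size of the search space matters.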
The current authors have been working on one of these ‘recognition’ based systems called EvoFIT that presents users with screens of 18 such faces. Users select from screens of face shape, facial textures, and then combinations thereof before the selected faces are bred together using a Genetic Algorithm, to produce more faces for selection. This process is normally repeated twice more to allow a composite to be ‘evolved’.

This version of EvoFIT was compared with a standard ‘feature’ system, PRO-fit, in the laboratory under simulated real life procedures (Frowd et al., 2007b). People were recruited to act as ‘witnesses’ and shown an unfamiliar face, in this case a UK footballer. Two days later, they described the face and used either EvoFIT or PRO-fit. The resulting composites were given to football fans to name. Composites from EvoFIT were correctly named at 11%, those from PRO-fit at 5%.

Since then, two main improvements to EvoFIT have been proposed and evaluated in separate studies. The first was based on research that has found fundamental differences in our perception of familiar and unfamiliar faces (Ellis et al., 1979; Young et al., 1985). This research compared the internal facial features (the region including eyes, brows, nose and mouth) with the external facial features (hair, face shape, ears and neck). For a familiar face, the internal facial features were more important than the external; when unfamiliar, the external part played a more equal role. The consequence is less accurate face recognition for unfamiliar faces (for a review, see Hancock et al., 2000). The implication of this research is that witnesses will tend to focus too much on the external features during the construction of an offender, which is a problem since the internal features are more important when the composite is shown later to other people for recognition (Frowd et al., 2007a).
Therefore, different facial regions have different salience depending on whether the task is to construct or to recognise a composite. Our solution was to reduce the perceptual impact of external features, to allow people building the face to make choices more on the internal region (Frowd et al., 2008b). This should allow that part of the face to be more accurately constructed, and produce an overall more recognisable image. A Gaussian or low-pass filter was applied to the faces’ external features at 8 cycles per face width, a level that renders recognition difficult when applied to the whole image (Thomas & Jordan, 2002). While it is possible to mask the external features completely, this would remove the context for selecting the inner face – the importance of which is established for unfamiliar face recognition (e.g. Davies & Milne, 1982; Malpass, 1986; Memon & Bruce, 1983, 1985; Tanaka & Farah, 1993) – and probably hinder recognition. In practice, the blurring was applied at the start of face construction with EvoFIT, following the initial selection of hair, and disabled at the end, prior to saving the composite to disk. We note that distorting selective regions of a face like this has been carried out previously (Campbell et al., 1999). Using a design similar to Frowd et al. (2007b), blurring was evaluated: participants looked at a photograph of a target face and 3 to 4 hours later constructed a composite with EvoFIT with or without external features blurring. Frowd et al. (2008b) found that composites evolved using blurred externals were correctly named significantly more often than those that were not, with a small-to-medium effect size. Sometimes a user may think their composite could be improved, for example by changing its apparent age. The second development was to design a set of ‘holistic’ tools to alter a face, for example to make it look older or plumper, without changing apparent identity. 
Rather than leaving this entirely to the users, however, they were shown the effects of each facial transform in turn on their evolved face. This addresses a theoretical and practical problem: a user might know that something was inaccurate with the face, but could not verbalise what, making it hard to improve the likeness (Frowd et al., 2005a). This issue is one of face recall, and particularly affects ‘feature’ systems. In practice, users adjust each scale in turn searching for a better likeness, with the best face being carried forward. As with EvoFIT as a whole, this allows users to engage in face recognition rather than face recall to improve the likenesses. The holistic manipulations chosen were those thought to be most useful: age, face weight, attractiveness, extroversion, health, honesty, masculinity and threatening. The development of the scales is described fully in Frowd et al. (2006). The evaluation in that work did not involve participant-witnesses constructing faces from memory, but it did confirm that the scales appeared to be operating appropriately and that a more identifiable face could be produced from their use.

The current work tested these two developments under more realistic construction procedures. The performance of EvoFIT was compared with and without external features blurring and holistic tools, and against the PRO-fit feature system. The expectation was that both techniques would be effective on their own, but would be best when used in conjunction with each other; also, that EvoFIT would be superior to PRO-fit. If the developments were effective, they could be used with real witnesses when evolving an EvoFIT and/or as part of other composite systems.

Experiment

The experiment required two stages. In the first, participants constructed a single composite of an unfamiliar target face using EvoFIT, with or without external features blur, or with a standard ‘feature’ system.
In the second, different participants evaluated the quality of the composites by attempting to name them.

Stage 1: Composite Construction

Design

Participants were randomly assigned to one of three conditions to construct a single composite. In the first two conditions, they used EvoFIT either with or without external features blurring; all used holistic tools at the end of evolving the face. Participants in the third group constructed a face using the PRO-fit ‘feature’ system. While it would have been preferable to implement a balanced experimental design, with blurring and holistic tools used throughout, this was not possible due to the absence of such functionality in a feature system. For the EvoFITs constructed, the design was within-subjects for holistic tools and between-subjects for blur. Composite system was between-subjects (EvoFIT with blur / EvoFIT without blur / PRO-fit).

Frowd et al. (2005a) developed a ‘gold standard’ for evaluating facial composite systems. This standard involved procedures that mirror construction by eyewitnesses as far as possible in the laboratory; because of its forensic value, it was adopted here. One of the fundamental design elements is that the faces used as targets should be unfamiliar to those who construct the composites, but familiar to other people who would later evaluate them. Here, photographs of international snooker players were chosen as targets; this would enable people who did not follow the game to construct the composites, and snooker fans to evaluate them.

A second important consideration is the time interval between a person seeing a target face and constructing a composite. As mentioned above, when this delay is short, up to a few hours in duration, all systems appear to perform fairly well (e.g. Brace et al., 2000; Bruce et al., 2002; Frowd et al., 2005b). When the delay is much longer, for example 2 days, performance is much worse, especially for the ‘feature’ systems.
In the current design, 2 days was chosen to mirror police work.

Thirdly, participants should be interviewed using a Cognitive Interview (CI) to elicit the most accurate recall of the target face. The CI is particularly important for the traditional systems, since the description of the face produced is used to locate a subset of facial features within the system itself, for presentation to the witness (or the participant here). The CI is normally administered by the police interviewer, or in this case the Experimenter, immediately before starting to construct the face. A good review of the CI may be found in Wells et al. (2007). Here, to mirror police work, we follow the ‘gold’ standard procedure specified in Frowd et al. (2005a).

Fourthly, composite systems should be used as specified by manufacturers. For PRO-fit, this includes the opportunity for enhancement using an artwork package, to add shading, lines, wrinkles, etc., and a software tool called PRO-warp to manipulate feature shapes. These enhancement tools are used in police work and were made available here. The same opportunities were made available to participants who used EvoFIT, achieved by transferring the final EvoFIT image into PRO-fit for rework. To address this design issue, the software was controlled by a suitably experienced experimenter (the second author on this paper). This person was trained ‘in house’ and had previously made in excess of 30 composites with participant-witnesses. We note that her role was to guide participant-witnesses in the face construction process and to control the software under their direction; she was unaware of the identity of the composite being constructed.

On a final note, EvoFIT’s holistic tools have been expanded since Frowd et al. (2006), to allow adjustment of greyscale colourings of individual features, thereby potentially reducing the amount of artwork required.
It is possible now to lighten and darken the irises, eyebrows, mouth and laughter lines; also, to add a beard, moustache, overall stubble and deep-set eyes. While the new scales are more ‘featural’ in nature, for simplicity, we refer to the complete set under the umbrella term ‘holistic tools’.

Materials

Twelve front face photographs of international snooker players, ranked in the top 40 players in the world during the 2007-8 season, were located on the Internet. These individuals were white males, photographed clean shaven (or with minor stubble) and without jewellery or spectacles. Included were Mark Allen, Ken Doherty, Peter Ebdon, John Higgins, Stephen Maguire, Alan McManus, Shaun Murphy, Ronnie O’Sullivan, Joe Perry, Neil Robertson, Mark Selby and Mark Williams. Three sets of 12 photographs were printed at approximately 8 cm (wide) x 10 cm (high) on A4 paper in colour using a good quality printer. Each set was given a different random order and placed in separate envelopes for presentation to participant-witnesses. In a G*Power analysis, this number of targets is sufficient in 2x2 repeated-measures by-item analyses (of the type planned to be carried out) to detect practically useful, medium effect sizes, f = 0.28. This was based on the following parameter settings: power, 1 – β = .8; α = .05; and correlation among measures, r = 0.7. Verbal description sheets were used to record participants’ recall of targets. These contained prompts for each feature (overall observations, hair, face shape, brows, eyes, nose, mouth and ears) with space underneath for making written notes. Software versions were 1.3 for EvoFIT and 3.4 for PRO-fit.

Participants

Participants were 31 female and 5 male staff and students at the University of Central Lancashire. Their age ranged from 19 to 61 (M = 27.6, SD = 10.4) years. Recruitment was via a global email requesting non-snooker fans to construct a composite for a £10 reward.
It was specified that no one should have constructed a composite previously.

Procedure

Participant-witnesses made two visits to the laboratory. In the first, the Experimenter explained that they would be shown a picture of a snooker player to be used to construct a composite two days later. She also explained that, to mirror real life, she must not see the targets. Participants were randomly assigned to construct a composite using EvoFIT with blur, EvoFIT without blur or PRO-fit, and were given an envelope containing the relevant set of target photographs. With the Experimenter’s back turned, each participant was instructed to remove a photograph and say if the face was familiar. All targets were reported to be unfamiliar (if not, they would have been asked to select another). They were then given 60 seconds to inspect the face. Participants wrote their initials on the back of the photograph along with the date, for future identification, and placed it in a second (‘used targets’) envelope (i.e. non-replacement sampling was used).

Each participant returned to the laboratory after 46 to 50 hours. An initial briefing was given explaining that two stages would follow. Firstly, they would be asked to describe the appearance of their target face. To do this, a Cognitive Interview would be used, a set of techniques to help them recall as much accurate information as possible about the face. Secondly, a composite would be constructed. For those assigned to EvoFIT, they would repeatedly select from arrays of alternative faces and a composite would be ‘evolved’; for PRO-fit, this would involve selecting facial features to build up a likeness of the face. Participants were encouraged to ask questions throughout.

When ready, the session moved on to the Cognitive Interview. Participants were told that, in a few moments’ time, they would be asked to think back to when the target had been seen and to visualise the face. They would then describe the face in as much detail as possible.
While doing this, the Experimenter would not interrupt, but would take notes. This procedure was carried out to recover a description of the face. Next, the Experimenter repeated the description given for each facial feature and prompted for more information: e.g., “You mentioned that the brows were brown, can you recall anything further?”

The Experimenter explained that a composite would be constructed next. For those assigned to PRO-fit, it was explained that the facial description would be entered into PRO-fit, to enable appropriate features to be located. They would then be able to select the best examples, and resize and reposition each on the face to create the best likeness. Also, as the features were cut from photographs, an exact likeness was unlikely, but an artwork paint package was available to add shading, wrinkles, etc. Such changes were normally carried out towards the end of the session, along with the PRO-warp tool to manipulate the shape of any feature. The Experimenter entered the description into PRO-fit, to locate about 20 examples per facial feature, and presented an ‘initial’ composite, a face with features to match the description. Using this face, the Experimenter demonstrated how features were selected, resized and positioned on the face, along with the effect of changing the feature brightness and contrast levels. It was explained that witnesses could work on any facial feature at a time, though most people elected to start with the hair and face shape. The Experimenter thus assisted participant-witnesses to construct a composite using this procedure, including the use of the artwork package and PRO-warp if required. When complete, the final image was saved to disk as the composite.

Participants assigned to construct an EvoFIT (in either of the blur conditions) were told that the correct age database for their target would be selected and then an appropriate hairstyle.
Afterwards, they would be shown four screens of facial shape, and select two per screen up to a maximum of six, and four screens of facial texture, or colourings, and similarly select six. They would next select the best combination of shape and texture, and all selected faces would be bred together to combine facial characteristics. This process would be repeated once or twice more to allow a composite to be evolved. Afterwards, the face would be enhanced using ‘holistic tools’, to change the age, weight, and other aspects of the face to improve the overall likeness. Finally, an artwork package would be made available to add shading and wrinkles, and a further software tool called PRO-warp to change the shape of features.

A composite was evolved using this procedure. This was the same for all participants, except for those assigned to the blurred condition. These participants were informed that faces would be seen with the hair, ears and neck blurred, to allow them to focus on the central part of the face that is important for later recognition of the composite; also, after evolving, the blurring would be removed prior to holistic tool use. At the end of the second and third generation, all witnesses were given the opportunity to resize and reposition features on their ‘best’ face using EvoFIT’s Shape Tool. Composites were saved at three stages during construction: at the end of evolving, after holistic tool use, and after any artwork and PRO-warp changes. For consistency, artwork and warp changes were carried out in PRO-fit. Composites took about 1 hour to construct using PRO-fit and 1.5 hours using EvoFIT. Example constructions are presented in Fig. 1.

Fig. 1 about here

Stage 2: Composite Evaluation

Evaluation of the composites was planned in two parts, to coincide with two major snooker championships in the UK. Evaluation involved asking attendees of these tournaments to name a set of composites.
A fairly arbitrary decision was made to evaluate intermediate images first, then final composites. Thus, the first part considered the effectiveness of blurring and holistic tools, and used intermediate (non-final) images created by the blur and non-blur groups before and after holistic tool use. The second part used finished images, those resulting from artwork and feature warp. This part is important as it assesses the effectiveness of finished images (facial composites) as would be produced in police work. It also considers the effectiveness of artwork/warping, the effect of which is unknown for EvoFIT.

The first part contained 4 sets of 12 images: with and without blurring, and before and after holistic tools. They were divided into four booklets of 12 composites, with each containing one composite of each snooker player, with three examples taken from each condition and rotated around booklets. As participants inspected images from each condition, the design was within-subjects for blur and holistic tools. The design for the second part was also within-subjects and the 36 final composites (3 sets of 12) were similarly rotated around three booklets.

In both tasks, it was important that participants were very familiar with the snooker targets; if not, then poor composite naming would result. To control for this, participants were asked to name the target photographs after naming the composites. An a priori rule was applied such that data were only included from participants who correctly named 10 or more targets. As a result, twice as many people were recruited as are recorded in the Participants section below.

Materials

EvoFIT and PRO-fit images were printed at approximately 8 cm (wide) by 10 cm (high) on A4 paper in greyscale using a good quality printer. A set of target photographs was also printed (the same as for the participant-witnesses).
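
The rotation of composites around booklets described in the design can be sketched as a simple Latin-square style assignment. This is a minimal illustration under stated assumptions: the paper does not give the exact allocation rule, so the modular rotation, the target labels and the condition names below are hypothetical.

```python
# Hypothetical labels for the four intermediate-image conditions.
CONDITIONS = [
    "no blur, before holistic tools",
    "no blur, after holistic tools",
    "blur, before holistic tools",
    "blur, after holistic tools",
]


def build_booklets(targets, conditions):
    """Return one booklet per condition.

    Each booklet lists every target exactly once, and the condition shown
    for a target shifts by one position from booklet to booklet (an assumed
    rotation rule, not taken from the paper).
    """
    n = len(conditions)
    return [
        [(target, conditions[(i + b) % n]) for i, target in enumerate(targets)]
        for b in range(n)
    ]


if __name__ == "__main__":
    targets = [f"target {i}" for i in range(1, 13)]  # 12 snooker players
    for number, booklet in enumerate(build_booklets(targets, CONDITIONS), start=1):
        print(f"Booklet {number}: {booklet[:2]} ...")
```

With 12 targets and four conditions, every booklet holds three composites per condition, and every composite appears in exactly one booklet. Passing three conditions instead gives the three-booklet arrangement used for the 36 finished composites.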
Participants

Participants were volunteer snooker fans attending the Snooker World Championship at the Crucible Theatre in Sheffield and the Snooker Grand Prix at the SECC in Glasgow. The evaluation using intermediate images involved 4 females and 28 males, from 17 to 67 (M = 38.8, SD = 14.2) years. For the finished composites, there were 1 female and 23 males, from 25 to 55 (M = 40.1, SD = 9.7) years.

Procedure

Participants were tested individually and told that facial composites would be seen of snooker players ranked in the top 40 during the 2007-8 season. Their task was to name as many as possible. For those in the first part of the evaluation, they were randomly assigned to one of four booklets, with equal sampling, each of which contained 12 images from EvoFIT (see Design for details). Composites were presented sequentially and participants provided a name where possible (but they were not coerced to do so). Afterwards, the target photographs were similarly presented and participants attempted to name those. The task was self-paced and the order of presentation of composites was randomised for each person. Participants in the second part followed the same procedure, except that only three sets of booklets were used which contained the final EvoFITs from the blur and non-blur conditions, and the PRO-fits.

Results

Participant responses for composites and target photographs were scored correct or incorrect with reference to the relevant identity. ‘Conditional’ naming scores were used to analyse composite quality, as in Frowd et al. (2005a). These by-item scores were calculated by dividing the number of times a composite was correctly named by the number of times the relevant target photograph was correctly named.

Three main analyses are presented below. Firstly, the effect of blur and holistic tools (intermediate EvoFIT images). Secondly, the effect of blurring at the final construction stage as well as ‘finishing’ techniques, warp and artwork.
This used intermediate images after holistic tools and final EvoFIT composites. Note that while a combined analysis would be preferable for all intermediate and finished EvoFITs, the design at face construction was not fully crossed (to do so would have necessitated artwork/warping applied directly to images after evolving). Thirdly, finished EvoFITs constructed under the optimal blur condition and finished PRO-fits.

Parametric statistics were used throughout, an established method for analysing composite data (e.g. Brace et al., 2006; Davies et al., 2000; Frowd et al., 2005b). By-items analyses are presented as we are more interested in results that generalise across composites than across observers (by-subjects analyses do show the same pattern of results). Due to the a priori screening, naming of the target photographs was appropriately very high, specifically at over 90% in all cells of the design for both intermediate images and finished composites.

Blur and holistic tools (intermediate EvoFITs).

Item means for naming of the intermediate images are presented to the left and centre of Fig. 2. Correct naming was slightly higher with blurring (M = 9.5%, SD = 14.3%) than without (M = 6.3%, SD = 8.4%); holistic tools substantially increased naming both without blur (M = 11.9%, SD = 15.5%) and with blur (M = 20.1%, SD = 21.5%). Naming was thus maximal when both techniques were used. The conditional naming scores were analysed using a two-way repeated-measures Analysis of Variance (ANOVA). This was significant for holistic tools, F(1, 11) = 9.1, p = .012, ηp² = 0.45, but not blur, F(1, 11) = 1.6, p = .24, ηp² = 0.13, or the interaction, F(1, 11) = 0.9, p = .37, ηp² = 0.07. An analysis was conducted on the incorrect naming data, to provide an indication of guessing (bias). In this case, these data differed little by condition and were not reliable, Fs < 2.2, ps > .17.

Fig. 2 about here

Blurring and finished EvoFITs.

As illustrated in Fig. 2 (right), finishing (artwork and warp) made little difference overall to correct naming scores (Before: M = 16.0%, SD = 14.7%; After: M = 14.3%, SD = 21.1%). However, naming was much higher with blurring (M = 22.3%, SD = 20.3%) than without (M = 8.0%, SD = 9.2%). The ANOVA was significant for blur, F(1, 11) = 5.4, p = .041, ηp² = 0.33, not significant for finishing, F(1, 11) = 0.2, p = .68, ηp² = 0.02, but marginally significant for the interaction, F(1, 11) = 4.1, p = .068, ηp² = 0.27. Simple main effects revealed that blurring was only effective for finished composites, p = .014; other contrasts were ns.

The above analyses suggest that blurring has a benefit on correct naming at the end of the process. As Fig. 2 illustrates, however, the increase in naming with blur is small after evolution (M = 3.3%, SD = 11.0%), larger after holistic tools (M = 8.2%, SD = 23.3%) and largest after warp/artwork (M = 20.3%, SD = 24.1%). Simple contrasts of an ANOVA of these difference data confirm the reliability of a linear trend, F(1, 11) = 8.6, p = .014, indicating the increasing value of this technique as the construction process unfolds.

Incorrect naming scores of EvoFITs before finishing (M = 44.8%, SD = 12.7%) were somewhat higher than those after (M = 32.8%, SD = 17.3%); and similarly, somewhat higher without blur (M = 44.3%, SD = 12.9%) than with (M = 33.3%, SD = 19.5%). The ANOVA was significant for finishing, F(1, 11) = 6.3, p = .029, ηp² = 0.26, approached significance for blur, F(1, 11) = 3.3, p = .099, ηp² = 0.23, and was not significant for the interaction, F(1, 11) = 0.61, p = .45, ηp² = 0.05.

Finished composites.

This part compares conditional naming scores of PRO-fits (M = 4.2%, SD = 8.1%) with finished EvoFITs in the optimal condition, blur and holistic tools (M = 24.5%, SD = 22.8%). These data clearly favour the EvoFITs, as confirmed by a paired-samples t-test, t(11) = 2.6, p = .024, d = 0.75. The incorrect naming data were not significant by system, t(11) = 0.58, p = .58.

Table 1 about here

Scale use.

Referring to Table 1, 15 out of the 17 holistic scales were used at some stage by participants using EvoFIT; only the moustache and beard scales were not, reflecting the absence of these features in the targets. Overall, each scale changed the face to some extent on 52.2% of occasions, features scales on 25.2%; there was little change in scale use for composites constructed with and without blur (M = 40.7% vs. 37.5% respectively). Greatest use was for weight, masculinity and brow-colouring.

Discussion

Composite construction by selecting individual features results in faces that are rarely recognised. Recent alternatives are based on the more natural process of selecting and breeding complete faces. For EvoFIT, this basic method did not reliably converge on a specific identity (Frowd et al., 2004, 2005a, 2007b), but two recent developments emerging from the psychology of face perception offer promise (Frowd et al., 2006, 2008b). While one development blurred the external parts of faces presented to users, the other built a set of image manipulation scales to rework an evolved face. Here, we explored the effectiveness of these developments using a design that reflected real-life construction procedures in the laboratory. Participant-witnesses looked at a photograph of an unknown snooker player and 2 days later constructed a single composite using either EvoFIT, with or without blurring, and with holistic tools, or PRO-fit. The resulting composites were named by snooker fans. The correct naming data revealed a clear benefit for holistic tools and an increasingly important one for blurring during the construction process; final artwork/warping changes also reduced the number of incorrect names elicited from the EvoFITs.

In Frowd et al. 
(2008b), participants inspected a target face and evolved a composite 3 to 4 hours later; images were correctly named more often when a Gaussian blur filter had been applied to the external features during construction than when it had not. The current work used the same number of composites per condition, but the delay was longer. In the evaluation using intermediate images, blurring increased naming scores during evolution, but not significantly. A failure to replicate this effect is likely to be an issue of experimental power; for the small effect size found here, the power was not sufficient to detect a reliable benefit for blurring (1 − β = 0.21). While not significant, the effect is of somewhat similar magnitude to, and in the same direction as, that reported before. Holistic tools, though, produced a significant increase in correct naming scores, and with a large effect size. The data thus provide evidence for the benefit of holistic scales to face production; they lend some support to the previous studies (Frowd et al., 2006, 2008b).

The second part of the evaluation found that artwork and feature warping techniques did not reliably increase correct naming scores. They did, though, decrease the number of incorrect names produced. These additional changes tended to involve the warp tool (rather than the artwork package), but were quite subtle, including shortening of the brows, enlarging the nostrils and increasing the down-turn of a nose; see Fig. 1 for example hairline changes. The consequence is an overall more accurate image: one that is not more recognisable as the target (given the non-significant difference in correct naming) but that looks less like other potential targets, in this case, other snooker players. Incorrect names tend to waste police time, since they lead to the investigation of an innocent suspect, and a reduction thereof is of practical value.
The analysis also revealed that external-features blurring had an increasingly important role in correct identification of composites over the three stages of construction: evolving, holistic tool use and finishing. In the last stage, this benefit was statistically reliable, and there was also a trend towards fewer incorrect names (the effect of blur on incorrect naming approached significance). This is a curious finding, as blurring was turned off after evolving the face: participant-witnesses assigned to the blur condition saw intact (non-blurred) faces thereafter. Their composites continued to improve in quality relative to the non-blur group, but why should this be? There appear to be two explanations, which are not mutually exclusive. The first is based on process: having focused on the internal features during evolution, witnesses continued to do so. This is simply a carry-over effect, of the type seen in repeated-measures designs, of which face construction is an example. The other is related to memory: blurring helped to recover a mental image of the internal features, which was then of value for the ensuing tasks. The data available to date support the former explanation; they come from research in which participants built pairs of composites (rather than just one). In the initial work for Frowd et al. (2008b), an EvoFIT constructed after another EvoFIT was inferior if the initial face had been made without blur rather than with it. In more recent work, currently in preparation for journal submission, PRO-fits constructed after EvoFITs were superior, but naming levels from a person’s composites did not significantly correlate with each other, also indicating a process effect. Further research is currently exploring this issue.

The next part of the evaluation involved finished composites: PRO-fits and EvoFITs constructed with blur.
Correct naming was found to be low for the former, at 4.2% correct, and substantially lower than for the EvoFITs, at 24.5%; the effect was reliable and large in size. The data clearly indicate that the combination of blur and holistic tools, with subsequent image enhancement, produces composites with fairly good naming levels. The result is likely to be of interest to police practitioners who are involved with composite construction: the two developments together produce composites with correct naming levels that are useful practically. Theoretically, it supports the notion that EvoFIT, together with these techniques, forms an appropriate interface to human memory. It also supports the accumulating research that indicates poor performance for feature systems when the delay to construction is several days in duration (e.g. Frowd et al., 2005a, 2005c, 2007b, 2007c).

A potentially surprising result was the apparent decrease in correct naming scores for EvoFITs made without blur between the holistic-tools stage (M = 11.9%) and finishing (M = 4.2%). For these composites, artwork and feature warp enhancements had been carried out on 11 of the 12 composites, but note that this difference was not significant, and is thus consistent with random variation. There was, however, a marginally significant decrease in incorrect names produced, and therefore the overall representation of the face was more accurate.

A Gaussian blur of 8 cycles/face width was used, as this level, when applied to the entire face, renders face recognition difficult (Thomas & Jordan, 2002). But was this setting optimal? Clearly, a less intense level of blur is likely to reveal the external features to a greater extent, and be potentially more distracting for a user; a more intense blur is likely to lessen the context in which the internal features are perceived.
Past research has shown that removing the external features completely reduces unfamiliar face recognition relative to a complete face (e.g. Ellis et al., 1979), so this would argue for preserving some contextual information in the exterior face. Recent work has found that very accurate hair helps users to produce superior quality EvoFITs relative to hair that is only slightly inferior (Frowd et al., in press), which argues for a less intense distortion. Ongoing research is exploring this issue, along with the effectiveness of blurring for the traditional feature systems.

The holistic tools were designed originally to allow users to describe what aspects of their evolved face needed changing, and to easily implement those changes on the face. The procedure has since changed: all holistic scales are presented in sequence and recall is no longer required; instead, users are asked to recognise when a more identifiable face is seen. So, how much of the current utility relies on face recall and how much on face recognition? There is perhaps some anecdotal evidence to start to answer this question. While the majority of our EvoFIT participants did comment upon the need to change the age and/or weight of their evolved face, none mentioned changes to other holistic properties, yet they frequently used the corresponding scales (refer to Table 1). It may simply be that some transforms are fairly easy to verbalise, such as age and weight, while others are more difficult. Note that the ‘feature’ scales do require recall, and seven of those were fairly well-used, especially for iris and brow colour, and so recall is still potentially of value here. Ongoing research is exploring this issue, along with the applicability of the scales to other composite systems.

On a practical note, it is perhaps worth commenting on the degree to which the size of the potential pool of faces might influence the evaluation of the composites, that is, for the participants evaluating the composites.
Recall that these participants were told that the snooker players were in the top 40, but could this knowledge have inflated naming responses? This is of course possible, but likely to be somewhat limited, since the composites produced in the PRO-fit condition were only named at 4.2% correct. Thus, in spite of knowledge of the target set, correct naming was still very poor; the levels are also very similar to other composite research that has used target sets with a similar or larger pool of candidates (e.g. Frowd et al., 2005a, 2007b).

The benefit of blurring and holistic tools to the process of face construction can be explained by an exemplar-based (absolute-coding) model of face recognition originally proposed by Valentine (1991). In such models, faces are stored and retrieved within a multi-dimensional space, which is similar to that constructed for an EvoFIT face model. Somewhat average-looking faces tend to have small values along each dimension and are thus stored close to the centre of the space (i.e. near the origin); more distinctive faces have larger values and are located more distally. There are good candidates for what these dimensions might be. Examples include hair, face shape and age, all of which are important when processing an unfamiliar face (e.g. Ellis, 1986). Similarly, there is evidence for the importance of the eye and brow region (e.g. Shepherd, Davies & Ellis, 1981) and the spatial relation between features (e.g. Davies & Christie, 1982; Leder & Bruce, 1998; Tanaka & Farah, 1993). Thus, some dimensions appear relevant to the external features (hair, face shape) while others appear relevant to the internal features (age, spatial relationships, eye/brow region). The current finding, involving blurring of the external features, can be explained in an exemplar-based model by assuming that the dimensions responsible for the external features are suppressed in the cognitive system of the composite constructor.
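The suppression account can be illustrated with a toy weighted-distance calculation. This is a minimal sketch, not the EvoFIT face model: the four dimensions, the coordinates and the weight values are all hypothetical, chosen only to show how down-weighting external-feature dimensions can change which candidate face lies closest to a remembered target.

```python
import math

# Hypothetical coordinates: (hair, face_shape, eye_brow, feature_spacing).
# The first two dimensions stand in for external features, the last two for internal ones.
FACES = {
    "target": (0.9, -0.4, 1.2, 0.8),
    "candidate_a": (0.8, -0.5, 0.2, 0.0),   # similar external features to the target
    "candidate_b": (-0.7, 0.9, 1.1, 0.7),   # similar internal features to the target
}

EQUAL_WEIGHTS = (1.0, 1.0, 1.0, 1.0)
EXTERNAL_SUPPRESSED = (0.1, 0.1, 1.0, 1.0)  # assumed effect of blurring


def weighted_distance(a, b, weights):
    """Euclidean distance with a separate weight on each dimension."""
    return math.sqrt(sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b)))


def closest_to_target(weights):
    """Name of the candidate face nearest the target under the given weights."""
    target = FACES["target"]
    candidates = [name for name in FACES if name != "target"]
    return min(candidates, key=lambda n: weighted_distance(FACES[n], target, weights))


if __name__ == "__main__":
    print(closest_to_target(EQUAL_WEIGHTS))
    print(closest_to_target(EXTERNAL_SUPPRESSED))
```

With equal weights, the candidate that shares the target's external features is the nearest; once the external dimensions are down-weighted, the candidate with matching internal features becomes the nearest. That shift is the kind of outcome expected if external-feature dimensions are suppressed.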
Such suppression would allow dimensions for the internal features to exert a greater weight in the model’s (or user’s) response, thereby improving the effectiveness of unfamiliar face selection and the ensuing tasks. For the holistic tools, manipulating a face along multiple dimensions (for both internal and external features) is likely, some of the time, to provide a probe that is closer to a target face, thus offering the potential to improve the accuracy of the representation.

In summary, it is difficult to externalise an unfamiliar face seen several days previously. The traditional method involves the selection of individual facial features and does not work well. Our EvoFIT alternative asks users to repeatedly select complete faces from an array, which are then bred together. Two recent developments are potentially valuable to face construction: face selection with external-features blurring, and face manipulation using a set of psychologically-useful scales. In the current paper, both developments were effective at improving the quality of EvoFIT composites. While the latter successfully improved the correct naming levels of an evolved composite, the former had an accumulating benefit over the whole procedure. It was argued that blurring resulted in users continuing to focus on the internal facial features. When the new developments were used together, the resulting images were named about 25% correct, compared to about 5% for those constructed with a feature system. The work reveals that it is now possible to construct a composite with a fairly good naming level after a 2 day retention interval. The version of EvoFIT with blur and holistic tools would appear to be valuable for detecting those who commit crime.

References

Brace, N., Pike, G.E., Allen, P., & Kemp, R. (2006). Identifying composites of famous faces: investigating memory, language and system issues. Psychology, Crime and Law, 12, 351-366.

Brace, N., Pike, G., & Kemp, R. (2000). Investigating E-FIT using famous faces. In A.
Czerederecka, T. Jaskiewicz-Obydzinska & J. Wojcikiewicz (Eds.). Forensic Psychology and Law (pp. 272-276). Krakow: Institute of Forensic Research Publishers.

Bruce, V. (1986). Influences of familiarity on the processing of faces. Perception, 15, 387-397.

Bruce, V., Ness, H., Hancock, P.J.B., Newman, C., & Rarity, J. (2002). Four heads are better than one. Combining face composites yields improvements in face likeness. Journal of Applied Psychology, 87, 894-902.

Bruce, V., & Young, A.W. (1986). Understanding face recognition. British Journal of Psychology, 77, 305-327.

Campbell, R., Coleman, M., Walker, J., Benson, P.J., Wallace, S., Michelotti, J., & Baron-Cohen, S. (1999). When does the inner-face advantage in familiar face recognition arise and why? Visual Cognition, 6, 197-216.

Davies, G.M., & Christie, D. (1982). Face recall: an examination of some factors limiting composite production accuracy. Journal of Applied Psychology, 67, 103-109.

Davies, G.M., & Milne, A. (1982). Recognizing faces in and out of context. Current Psychological Research, 2, 235-246.

Davies, G.M., Shepherd, J., & Ellis, H. (1978). Remembering faces: acknowledging our limitations. Journal of Forensic Science, 18, 19-24.

Davies, G.M., van der Willik, P., & Morrison, L.J. (2000). Facial Composite Production: A Comparison of Mechanical and Computer-Driven Systems. Journal of Applied Psychology, 85, 119-124.

Ellis, H.D. (1986). Face recall: A psychological perspective. Human Learning, 5, 1-8.

Ellis, H.D., Shepherd, J., & Davies, G.M. (1979). Identification of familiar and unfamiliar faces from internal and external features: some implications for theories of face recognition. Perception, 8, 431-439.

Frowd, C.D., Bruce, V., McIntyre, A., & Hancock, P.J.B. (2007a). The relative importance of external and internal features of facial composites. British Journal of Psychology, 98, 61-77.

Frowd, C.D., Bruce, V., McIntyre, A., Ross, D., Fields, S., Plenderleith, Y., & Hancock, P.J.B. (2006).
Implementing holistic dimensions for a facial composite system. Journal of Multimedia, 1, 42-51.

Frowd, C.D., Bruce, V., Ness, H., Bowie, L., Thomson-Bogner, C., Paterson, J., McIntyre, A., & Hancock, P.J.B. (2007b). Parallel approaches to composite production. Ergonomics, 50, 562-585.

Frowd, C.D., Bruce, V., & Hancock, P.J.B. (2008a). Changing the face of criminal identification. The Psychologist, 21, 670-672.

Frowd, C.D., Carson, D., Ness, H., McQuiston, D., Richardson, J., Baldwin, H., & Hancock, P.J.B. (2005a). Contemporary Composite Techniques: the impact of a forensically-relevant target delay. Legal & Criminological Psychology, 10, 63-81.

Frowd, C.D., Carson, D., Ness, H., Richardson, J., Morrison, L., McLanaghan, S., & Hancock, P.J.B. (2005b). A forensically valid comparison of facial composite systems. Psychology, Crime & Law, 11, 33-52.

Frowd, C.D., Hancock, P.J.B., & Carson, D. (2004). EvoFIT: A holistic, evolutionary facial imaging technique for creating composites. ACM Transactions on Applied Perception (TAP), 1, 1-21.

Frowd, C.D., & Hepton, G. (in press). The benefit of hair for the construction of facial composite images. British Journal of Forensic Practice.

Frowd, C.D., McQuiston-Surrett, D., Anandaciva, S., Ireland, C.E., & Hancock, P.J.B. (2007c). An evaluation of US systems for facial composite production. Ergonomics, 50, 1987-1998.

Frowd, C.D., McQuiston-Surrett, D., Kirkland, I., & Hancock, P.J.B. (2005c). The process of facial composite production. In A. Czerederecka, T. Jaskiewicz-Obydzinska, R. Roesch & J. Wojcikiewicz (Eds.). Forensic Psychology and Law (pp. 140-152). Krakow: Institute of Forensic Research Publishers.

Frowd, C.D., Park, J., McIntyre, A., Bruce, V., Pitchford, M., Fields, S., Kenirons, M., & Hancock, P.J.B. (2008b). Effecting an improvement to the fitness function. How to evolve a more identifiable face. In A. Stoica, T. Arslan, D. Howard, T. Higuchi, and A. El-Rayis (Eds.)
2008 ECSIS Symposium on Bio-inspired, Learning, and Intelligent Systems for Security (pp. 3-10). NJ: CPS. (Edinburgh).

Gibson, S.J., Solomon, C.J., & Pallares-Bejarano, A. (2003). Synthesis of photographic quality facial composites using evolutionary algorithms. In R. Harvey and J.A. Bangham (Eds.) Proceedings of the British Machine Vision Conference (pp. 221-230).

Hancock, P.J.B., Bruce, V., & Burton, A.M. (2000). Recognition of unfamiliar faces. Trends in Cognitive Sciences, 4(9), 330-337.

Leder, H., & Bruce, V. (1998). Local and relational aspects of face distinctiveness. Quarterly Journal of Experimental Psychology, 51A, 449-473.

Memon, A., & Bruce, V. (1983). The effects of encoding strategy and context change on face recognition. Human Learning, 2, 313-326.

Memon, A., & Bruce, V. (1985). Context effects in episodic studies of verbal and facial memory: A review. Current Psychological Research & Reviews, Winter 1985-86, 349-369.

O'Donnell, C., & Bruce, V. (2001). Familiarisation with faces selectively enhances sensitivity to changes made to the eyes. Perception, 30, 755-764.

Malpass, R.S. (1996). Enhancing eyewitness memory. In S.L. Sporer, R.S. Malpass & G. Koehnken (Eds.). Psychological issues in eyewitness identification (pp. 177-204). Hillsdale, NJ: Lawrence Erlbaum.

Rhodes, G., Carey, S., Byatt, G., & Proffitt, F. (1998). Coding spatial variations in faces and simple shapes: a test of two models. Vision Research, 38, 2307-2321.

Shepherd, J.W., Davies, G.M., & Ellis, H.D. (1981). Studies of cue saliency. In G. Davies, E. Hadyn, J. Shepherd (Eds). Perceiving and Remembering Faces (pp. 105-132). London: Academic Press.

Tanaka, J.W., & Farah, M.J. (1993). Parts and wholes in face recognition. Quarterly Journal of Experimental Psychology: Human Experimental Psychology, 46A, 225-245.

Thomas, S.M., & Jordan, T.R. (2002). Determining the influence of Gaussian blurring on inversion effects with talking faces. Perception & Psychophysics, 64, 932-944.
Tredoux, C.G., Nunez, D.T., Oxtoby, O., & Prag, B. (2006). An evaluation of ID: an eigenface based construction system. South African Computer Journal, 37, 1-9.

Valentine, T. (1991). A unified account of the effects of distinctiveness, inversion and race in face recognition. Quarterly Journal of Experimental Psychology, 43A, 161-204.

Wells, G., Memon, A., & Penrod, S.D. (2007). Eyewitness evidence: improving its probative value. Psychological Science in the Public Interest, 7, 45-75.

Young, A.W., Hay, D.C., McWeeny, K.H., Flude, B.M., & Ellis, A.W. (1985). Matching familiar and unfamiliar faces on internal and external features. Perception, 14, 737-746.

Figure captions

Fig. 1. Composites constructed in the current study of the snooker player, Mark Williams. EvoFITs are presented on the first two rows: the left image is after evolution; centre is after holistic tool use; right is after final enhancements with the artwork package and/or feature warp. The images on the top row were constructed using EvoFIT with blur; those on the second row using EvoFIT without blur; the PRO-fit is shown on the third row.

Fig. 2. Correct composite naming when evolving using EvoFIT with and without blur, at each stage in the experiment. Levels are calculated as the number of correct names given for the composites divided by the relevant correct names for the targets (expressed in percent). Error bars are SE of the means. [Plot: x-axis, construction stage (after evolving, after holistic tools, after artwork (finished composite)); y-axis, correct naming (percent), 0 to 30; series, No blur and Blur.]

Table 1. Holistic tool use for the holistic scales (left) and for the feature scales (right). Figures are overall percent scale use for all participant-witnesses who constructed an EvoFIT.

| Holistic scale | Use (%) | Feature scale | Use (%) |
|---|---|---|---|
| Age | 50.0 | Brows | 72.7 |
| Weight | 68.2 | Iris | 59.1 |
| Attractiveness | 36.4 | Deepset eyes | 50.0 |
| Extroversion | 54.5 | Eyebags | 36.4 |
| Health | 45.5 | Laughter lines | 45.5 |
| Honesty | 63.6 | Mouth | 31.8 |
| Masculinity | 77.3 | Stubble | 31.8 |
| Threatening | 22.7 | Moustache | 0.0 |
| | | Beard | 0.0 |
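The scale-use statements made in the Results can be checked against the Table 1 figures with a short script. This is a sketch only: the grouping of scales into holistic and feature sets is inferred from the scale names rather than stated explicitly in the table, and the function and variable names are illustrative.

```python
# Percent scale use from Table 1; the holistic/feature grouping is an inference.
HOLISTIC = {
    "Age": 50.0, "Weight": 68.2, "Attractiveness": 36.4, "Extroversion": 54.5,
    "Health": 45.5, "Honesty": 63.6, "Masculinity": 77.3, "Threatening": 22.7,
}
FEATURE = {
    "Brows": 72.7, "Iris": 59.1, "Deepset eyes": 50.0, "Eyebags": 36.4,
    "Laughter lines": 45.5, "Mouth": 31.8, "Stubble": 31.8,
    "Moustache": 0.0, "Beard": 0.0,
}


def summarise(holistic, feature, top_n=3):
    """Return the most-used scales, the unused scales and the count of used scales."""
    all_scales = {**holistic, **feature}
    ranked = sorted(all_scales, key=all_scales.get, reverse=True)
    unused = [name for name, pct in all_scales.items() if pct == 0.0]
    return ranked[:top_n], unused, len(all_scales) - len(unused)


if __name__ == "__main__":
    top, unused, n_used = summarise(HOLISTIC, FEATURE)
    print("Most used:", top)
    print("Unused:", unused)
    print("Scales used:", n_used, "of", len(HOLISTIC) + len(FEATURE))
```

Run on these figures, the summary matches the text: masculinity, brows and weight are the most used scales, and 15 of the 17 scales were used, with only moustache and beard unused.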