ARC_test_plan_v0.5


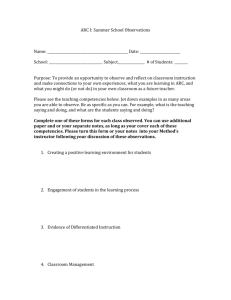

TITLE: Test plan for ARC products
Date: 3.10.2011

EUROPEAN MIDDLEWARE INITIATIVE

TEST PLAN FOR ARC PRODUCTS

Document Version: 0.5.0
EMI Component Version: 01/01/00
Date: 03/10/11

This work is co-funded by the EC EMI project under the FP7 Collaborative Projects Grant Agreement Nr. INFSO-RI-261611.

Contents

1. SOFTWARE TESTING TERMS AND DEFINITIONS
2. TESTING/ARC-CORE
  2.1. REGRESSION TESTS
  2.2. FUNCTIONALITY TESTS
  2.3. PERFORMANCE TESTS
  2.4. SCALABILITY TESTS
  2.5. STANDARD COMPLIANCE/CONFORMANCE TESTS
  2.6. INTER-COMPONENT TESTS
3. TESTING/ARC-CE
  3.1. REGRESSION TESTS
  3.2. FUNCTIONALITY TESTS
  3.3. SCALABILITY TESTS
  3.4. STANDARD COMPLIANCE/CONFORMANCE TESTS
  3.5. INTER-COMPONENT TESTS
4. TESTING/ARC-CLIENT
  4.1. REGRESSION TESTS
  4.2. FUNCTIONALITY TESTS
    Test name: F1: direct job submission
    Test name: F8: proxy renewal for an active job
    Test name: F9: job failure recovery
    Test name: F10: job list population via job discovery
    Test name: F11: test utility check
  4.3. PERFORMANCE TESTS
    Test name: P1: load test of compute cli
    Test name: P2: reliability test of compute cli
  4.4. SCALABILITY TESTS
  4.5. STANDARD COMPLIANCE/CONFORMANCE TESTS
    Test name: STD1: JSDL for compute client
  4.6. INTER-COMPONENT TESTS
5. TESTING/ARC-INFOSYS
  5.1. REGRESSION TESTS
  5.2. FUNCTIONALITY TESTS
  5.3. PERFORMANCE TESTS
  5.4. SCALABILITY TESTS
  5.5. STANDARD COMPLIANCE/CONFORMANCE TESTS
  5.6. INTER-COMPONENT TESTS
6. TESTING ARC-GRIDFTP
  6.1. REGRESSION TESTS
  6.2. FUNCTIONALITY TESTS
  6.3. PERFORMANCE TESTS
  6.4. SCALABILITY TESTS
  6.5. STANDARD COMPLIANCE/CONFORMANCE TESTS
  6.6. INTER-COMPONENT TESTS
7. DEPLOYMENT TESTS
8. PLANS TOWARD EMI-2
  8.1. TESTING FOR EMI 1.0.1 UPDATE
9. LARGE SCALABILITY TESTS
10. CONCLUSIONS

1. SOFTWARE TESTING TERMS AND DEFINITIONS

The basic terms used in the ARC test plan agree with the EMI-approved testing policy: https://twiki.cern.ch/twiki/bin/view/EMI/EmiSa2TestPolicy

This document proposes a test plan for all ARC product teams in the EMI project (2010-2013). Testing is organized on a short-term and a long-term basis.

Short-term testing consists of automatic code quality tests using tools from Nordugrid and the mandatory EMI tool ETICS. The Nordugrid project tools are operated by two EMI project partners: nightly builds for several platforms (Copenhagen), and revision and functional test tools (Kosice). These tools make it possible to monitor code quality at an early stage of the development or release process. For example, builds are produced after each developer commit as well as nightly, and a set of functional tests is run daily.

Certification testing of a release, or of an update to a release, is a short-term organized activity. The goal is to test release candidates to ensure the final functionality and quality of the distribution. This testing is part of the EMI release activity. The outcomes are test reports for each ARC component. The structure of the planned tests is exactly the one required by the EMI testing policies.

Long-term testing includes activities that need more effort. Typical examples are the development of new tools used during testing, or setting up a new infrastructure for performance and reliability tests. At present, performance tests are carried out manually for a limited set of components and functionality. Preparing new tests and analyses is considered a very important activity. Feedback on test results is systematically collected on the Nordugrid project wiki: http://wiki.nordugrid.org/index.php/Testing
Tests performed during EMI-1: code analysis, unit tests, regression tests, functional tests, and a limited set of performance tests. The test scenarios are collected on the wiki, in the database of the automatic tools, or are listed in this plan. The following chapters present the test scenarios used during the EMI 1.0.0 release. Unordered scenarios and test results are collected on the project wiki: http://wiki.nordugrid.org/index.php/Testing

2. TESTING/ARC-CORE

Testing Core components and modules: HED, HED LIDI, HED security, HED language bindings, DMCs, Configuration, all the common utilities

Overview of tests:

Regression tests
Functionality tests
  F1: ARCHED daemon
    F1.1: Without TLS
    F1.2: Without TLS and Plexer
    F1.3: With TLS
    F1.4: With TLS and without Plexer
    F1.5: Username token authentication together - unexisting username/password file
    F1.6: Username token authentication together - wrong username/password
    F1.7: Username token authentication together - with valid credentials
    F1.8: Username token authentication together without Plexer
    F1.9: Username token authentication together without TLS
    F1.10: Username token authentication together without TLS and Plexer
  F2: arched command line options
    F2.1: Dump generated XML config
    F2.2: Show help options
    F2.3: Run daemon in foreground
    F2.4: full path of XML config file
    F2.5: full path of Ini config file
    F2.6: full path of pid file
    F2.7: user name
    F2.8: group name
    F2.9: full path of XML schema file
    F2.10: version
Performance tests
  P1: service reliability
Scalability tests
  S1: Perftest utility used to test the stability and throughput of running Echo service without the TLS MCC
  S2: Perftest utility used to test the stability and throughput of running
Standard compliance/conformance tests
Inter-component tests

2.1. REGRESSION TESTS

Regression tests are based on the bugs associated with the component.
The recommended structure is as follows:

Test name:
Name of scenario:
Description:
Command:
Expected result:

2.2. FUNCTIONALITY TESTS

Test name: F1: ARCHED daemon
Name of scenario: F1.1: Without TLS
Description of the test: We create the MCC chain tcp->http->soap->plexer->echo on the server side and then use the arcecho command on the client side.
Command:
  arcecho http://localhost:66000/Echo message
Expected result: We get the following response from the server:
  [message]

Test name: F1: ARCHED daemon
Name of scenario: F1.2: Without TLS and Plexer
Description of the test: We create the MCC chain tcp->http->soap->echo on the server side and then use the arcecho command on the client side.
Command:
  arcecho http://localhost:66000/Echo message
  arcecho http://localhost:66000/ message
Expected result: We get the following responses from the server:
  [message]
  [message]

Test name: F1: ARCHED daemon
Name of scenario: F1.3: With TLS
Description of the test: We create the MCC chain tcp->tls->http->soap->plexer->echo on the server side and then use the arcecho command on the client side.
Command:
  arcecho https://localhost:66000/Echo message
Expected result: After authentication, we get the following response from the server:
  ERROR: Can not access proxy file: /tmp/x509up_u500
  Enter PEM pass phrase:
  [message]

Test name: F1: ARCHED daemon
Name of scenario: F1.4: With TLS and without Plexer
Description of the test: We create the MCC chain tcp->tls->http->soap->echo on the server side and then use the arcecho command on the client side.
Command:
  arcecho https://localhost:66000/Echo message
  arcecho https://localhost:66000/ message
Expected result: For each command:
  ERROR: Can not access proxy file: /tmp/x509up_u500
  Enter PEM pass phrase:
  [message]

Test name: F1: ARCHED daemon
Name of scenario: F1.5: Username token authentication together - unexisting username/password file
Description of the test: We create the MCC chain tcp->tls->http->soap->plexer->echo on the server side and then use the arcecho command on the client side. The username/password file is configured but does not exist. (With and without TLS.)
Command:
  arcecho https://localhost:66000/Echo message
Expected result: After authentication, we get the following response from the server:
  ERROR: Can not access proxy file: /tmp/x509up_u500
  Enter PEM pass phrase:
  Not existing credentials.

Test name: F1: ARCHED daemon
Name of scenario: F1.6: Username token authentication together - wrong username/password
Description of the test: We create the MCC chain tcp->tls->http->soap->plexer->echo on the server side and then use the arcecho command on the client side. The username/password file is configured and exists, but the username/password is invalid. (With and without TLS.)
Command:
  arcecho https://localhost:66000/Echo message
Expected result: After authentication, we get the following response from the server:
  ERROR: Can not access proxy file: /tmp/x509up_u500
  Enter PEM pass phrase:
  Invalid username/password.

Test name: F1: ARCHED daemon
Name of scenario: F1.7: Username token authentication together - with valid credentials
Description of the test: We create the MCC chain tcp->tls->http->soap->plexer->echo on the server side and then use the arcecho command on the client side.
Command:
  arcecho https://localhost:66000/Echo message
Expected result: After authentication, we get the following response from the server:
  ERROR: Can not access proxy file: /tmp/x509up_u500
  Enter PEM pass phrase:
  [message]

Test name: F1: ARCHED daemon
Name of scenario: F1.8: Username token authentication together without Plexer
Description of the test: We create the MCC chain tcp->tls->http->soap->echo on the server side and then use the arcecho command on the client side.
Command:
  arcecho https://localhost:66000/ message
Expected result: We get the following response from the server:
  ERROR: Can not access proxy file: /tmp/x509up_u500
  Enter PEM pass phrase:
  [message]

Test name: F1: ARCHED daemon
Name of scenario: F1.9: Username token authentication together without TLS
Description of the test: We create the MCC chain tcp->http->soap->plexer->echo on the server side and then use the arcecho command on the client side.
Command:
  arcecho http://localhost:66000/Echo message
Expected result: We get the following response from the server:
  [message]

Test name: F1: ARCHED daemon
Name of scenario: F1.10: Username token authentication together without TLS and Plexer
Description of the test: We create the MCC chain tcp->http->soap->echo on the server side and then use the arcecho command on the client side.
Command:
  arcecho http://localhost:66000/ message
Expected result: We get the following response from the server:
  [message]

Test name: F2: arched command line options
Name of scenario: F2.1: Dump generated XML config
Description of the test: Create ini files for all services available in /usr/share/arc/profiles/general.xml. Check that the correct XML config is dumped.
Command:
Expected result: The dumped XML config can be used to start the corresponding service.

Test name: F2: arched command line options
Name of scenario: F2.2: Show help options
Description of the test: Run arched with the help option.
Command:
Expected result: The help is shown with both the short and the long option. The help options correspond to the man page.
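The plain-HTTP echo scenarios above (F1.1, F1.2, F1.9, F1.10) expect the bracketed message as the server response, so they lend themselves to a scripted check. The following is a minimal sketch in Python, not part of the ARC tooling: the function names `run_command` and `echo_scenario_passes` and the injectable `runner` parameter are hypothetical, and the only thing taken from the plan is the `arcecho <url> <message>` invocation and the `[message]` response. The TLS scenarios prompt for a pass phrase and would need additional handling that is not shown here.

```python
import subprocess
from typing import Callable, Sequence

# A runner takes an argument list and returns the command output as text.
Runner = Callable[[Sequence[str]], str]


def run_command(args: Sequence[str]) -> str:
    """Run a command and return stdout and stderr merged into one string."""
    completed = subprocess.run(
        list(args),
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        text=True,
        check=False,
    )
    return completed.stdout


def echo_scenario_passes(url: str, message: str, runner: Runner = run_command) -> bool:
    """Return True if the output ends with the bracketed message, e.g. "[message]".

    The pass criterion (output ends with "[<message>]") is an assumption
    derived from the expected results listed in the plan.
    """
    output = runner(["arcecho", url, message])
    return output.strip().endswith(f"[{message}]")
```

With an arched instance configured as in F1.1, the check could be called as `echo_scenario_passes("http://localhost:66000/Echo", "message")`. Passing a different `runner` allows the check itself to be exercised without a running service.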
Test name: F2: arched command line options
Name of scenario: F2.3: Run daemon in foreground
Description of the test: Run arched with the foreground option.
Command:
Expected result: arched runs in the foreground with both the long and the short option.

Test name: F2: arched command line options
Name of scenario: F2.4: full path of XML config file
Description of the test: Run arched with the XML config file option. Give an XML file as input.
Command:
Expected result: arched starts what is described in the given XML file and not in the default XML file.

Test name: F2: arched command line options
Name of scenario: F2.5: full path of Ini config file
Description of the test: Run arched with the Ini config file option. Give an Ini file as input.
Command:
Expected result: arched starts what is described in the given Ini file and not in the default Ini file.

Test name: F2: arched command line options
Name of scenario: F2.6: full path of pid file
Description of the test: Run arched with the pid file option.
Command:
Expected result: Starting arched creates the pid file at the given path.

Test name: F2: arched command line options
Name of scenario: F2.7: user name
Description of the test: Run arched with the user name option.
Command:
Expected result: The arched process is owned by the given user.

Test name: F2: arched command line options
Name of scenario: F2.8: group name
Description of the test: Run arched with the group name option.
Command:
Expected result: The arched process is owned by the given group.

Test name: F2: arched command line options
Name of scenario: F2.9: full path of XML schema file
Description of the test: Run arched with the XML schema option on the echo XML config.
Command:
Expected result: The given schema file is used and the XML config is validated.

Test name: F2: arched command line options
Name of scenario: F2.10: version
Description of the test: Run arched with the version option.
Command:
Expected result: Version 1.0.0b4 is printed and arched is not running.

2.3. PERFORMANCE TESTS

Test name: P1: service reliability
Description of the test: First, measure the time needed to return 100 arcecho requests (simultaneous and sequential). Then repeat the following cycle for three days: every 5 minutes, run the perftest utility with the input parameters 50 threads and 30 seconds. After each day, measure again the time needed to return 100 arcecho requests (simultaneous and sequential) and compare it with the times from the beginning of the test.
Command:
Expected result:

2.4. SCALABILITY TESTS

Test name: S1: ws-layer response
Name of scenario:
Description:
Command:
Expected result:

2.5. STANDARD COMPLIANCE/CONFORMANCE TESTS

Test name:
Name of scenario:
Description:
Command:
Expected result:

2.6. INTER-COMPONENT TESTS

Test name:
Name of scenario:
Description:
Command:
Expected result:

3. TESTING/ARC-CE

Testing Computing Element components and modules: A-REX, ARC Grid Manager, CE-Cache, CE-staging, LRMS modules, infoproviders, Janitor, JURA, nordugridmap

Overview of tests:

Regression tests
  R1: Uploaders are hanging and using 100% CPU
  R2: Downloader checksum mismatch between calculated checksum and source checksum
Functionality tests
  F1: job management with invalid input (WS interface)
    F1.1: job status retrieval using not existing jobID
  F2: job management with invalid credentials
    F2.1: job submission using expired grid proxy certificate
    F2.2: job submission using expired voms proxy certificate
    F2.3: job submission using grid proxy certificate of not authorized DN
    F2.4: job submission using voms proxy certificate of not authorized VO
  F3: simple job execution
    F3.1: simple JSDL job submission arcsub
    F3.2: simple JSDL job submission ngsub
    F3.3: simple XRSL job submission arcsub
    F3.4: simple XRSL job submission ngsub
  F4: data stage-in job
    F4.1: submission of a JSDL job uploading one file
    F4.2: submission of a JSDL job staging in one file from Unixacl SE
    F4.3: caching of uploaded files
    F4.4: caching of staged in files
  F5: data stage-out job
    F5.1: submission of a JSDL job downloading one file
    F5.2: submission of a JSDL job staging out one file to Unixacl SE
  F6: job management via pre-WS
  F7: parallel job support
  F9: job management through WS-interface
    F9.1: Submission of simple job described in JDL
    F9.2: Submission of simple job described in XRSL
    F9.3: Submission of simple job described in JSDL
  F10: LRMS support
  F11: Janitor tests
  F12: gridmapfile
  F13: infopublishing: nordugrid schema
  F14: infopublishing: glue1.2 schema
  F15: infopublishing: glue2 LDAP schema
  F16: infopublishing: glue2 xml schema
Performance tests
  P1: service reliability
  P2: load test
    P2.1: load test 1
    P2.2: load test 2
  P3: job submission failure rate
Scalability tests
Standard compliance/conformance tests
Inter-component tests

3.1. REGRESSION TESTS

Test name: R1: Uploaders are hanging and using 100% CPU
Name of scenario:
Scenario description: Submit 10 jobs, each staging out 10 files to the Unixacl SE.
Command:
Expected result: All staged-out files shall be transferred and the uploader processes on the ARC CE shall return to the idle state.

Test name: R2: Downloader checksum mismatch between calculated checksum and source checksum
Name of scenario:
Scenario description: Upload a file to the Unixacl SE and then submit a job that stages in the uploaded file.
Command:
Expected result: The job shall end in the FINISHED state (without any errors).

3.2. FUNCTIONALITY TESTS

Test name: F1: job management with invalid input (WS interface)
Name of scenario: F1.1: job status retrieval using not existing jobID
Description of the test: Run the arcls command with a non-existent jobID.
Command:
Expected result: The ARC CE shall return “ERROR: Failed to obtain stat from ftp” (when querying Classic ARC CE) or “ERROR: Failed listing files” (when querying WS-ARC CE).

Test name: F2: job management with invalid credentials
Name of scenario: F2.1: job submission using expired grid proxy certificate
Description of the test: We try to submit a job using an expired grid proxy certificate.
Command:
Expected result: The ARC CE shall return “Failed authentication: an end-of-file was reached/globus_xio: An end of file occurred” (when querying Classic ARC CE) or “DEBUG: SSL error: 336151573 – SSL routines:SSL3_READ_BYTES:sslv3 alert certificate expired” (when querying WS-ARC CE).

Test name: F2: job management with invalid credentials
Name of scenario: F2.2: job submission using expired voms proxy certificate
Description of the test: We try to submit a job using an expired voms proxy certificate.
Command:
Expected result: The ARC CE shall return “Failed authentication: an end-of-file was reached/globus_xio: An end of file occurred” (when querying Classic ARC CE) or “DEBUG: SSL error: 336151573 – SSL routines:SSL3_READ_BYTES:sslv3 alert certificate expired” (when querying WS-ARC CE).

Test name: F2: job management with invalid credentials
Name of scenario: F2.3: job submission using grid proxy certificate of not authorized DN
Description of the test: We try to submit a job using the grid proxy certificate of an unauthorized DN.
Command:
Expected result: The ARC CE shall return “VERBOSE: Connect: Failed authentication: 535 Not allowed” (when querying Classic ARC CE) or “Job submission aborted because no resource returned any information” (when querying WS-ARC CE).

Test name: F2: job management with invalid credentials
Name of scenario: F2.4: job submission using voms proxy certificate of not authorized VO
Description of the test: We try to submit a job using the voms proxy certificate of an unauthorized VO.
Command:
Expected result: The ARC CE shall return “VERBOSE: Connect: Failed authentication: 535 Not allowed” (when querying Classic ARC CE) or “Job submission aborted because no resource returned any information” (when querying WS-ARC CE).

Test name: F3: simple job execution
Name of scenario: F3.1: simple JSDL job submission
Description of the test: We try to submit a JSDL job to the ARC CE using the arcsub command.
Command:
Expected result: The job is successfully submitted.

Test name: F3: simple job execution
Name of scenario: F3.2: simple JSDL job submission
Description of the test: We try to submit a JSDL job to the ARC CE using the ngsub command.
Command:
Expected result: The job is successfully submitted.

Test name: F3: simple job execution
Name of scenario: F3.3: simple XRSL job submission
Description of the test: We try to submit an XRSL job to the ARC CE using the arcsub command.
Command:
Expected result: The job is successfully submitted.

Test name: F3: simple job execution
Name of scenario: F3.4: simple XRSL job submission
Description of the test: We try to submit an XRSL job to the ARC CE using the ngsub command.
Command:
Expected result: The job is successfully submitted.

Test name: F4: data stage-in job
Name of scenario: F4.1: submission of a JSDL job uploading one file
Description of the test: We try to submit a JSDL job with one input file to the ARC CE using the arcsub command.
Command:
Expected result: The job is successfully submitted.

Test name: F4: data stage-in job
Name of scenario: F4.2: submission of a JSDL job staging in one file from Unixacl SE
Description of the test: We try to submit a JSDL job with one input file (staged in from the Unixacl SE) to the ARC CE using the arcsub command.
Command:
Expected result: The job finishes successfully.

Test name: F4: data stage-in job
Name of scenario: F4.3: caching of uploaded files
Description of the test: We try to submit a JSDL job with one input file to the ARC CE using the arcsub command. After the job has finished successfully, we submit the same job again.
Command:
Expected result: When processing the second job, the ARC CE uses the cached file (cached when the first job was submitted).

Test name: F4: data stage-in job
Name of scenario: F4.4: caching of staged in files
Description of the test: We try to submit a JSDL job with one input file (staged in from the Unixacl SE) to the ARC CE using the arcsub command. After the job has finished successfully, we submit the same job again.
Command:
Expected result: When processing the second job, the ARC CE uses the cached file (cached when the first job was submitted).

Test name: F5: data stage-out job
Name of scenario: F5.1: submission of a JSDL job downloading one file
Description of the test: We try to submit a JSDL job with one output file to the ARC CE using the arcsub command.
Command:
Expected result: After the job has finished successfully, the retrieved job output contains the file described in the JSDL job description.

Test name: F5: data stage-out job
Name of scenario: F5.2: submission of a JSDL job staging out one file to Unixacl SE
Description of the test: We try to submit a JSDL job with one output file (staged out to the Unixacl SE) to the ARC CE using the arcsub command.
Command:
Expected result: The job finishes successfully.

Test name: F6: job management via pre-WS
Name of scenario:
Description: Check the main operations of the ARC-CE production interface: cancel, kill, and so on. Use ordinary and VOMS proxies.
simple job submission simple job migration migration of job with input files job status retrieval job catenate retrieval killing job 19 T ITLE Test plan for ARC products Date: 3.10.2011 job cleaning job results retrieval (retrieve of job with one output file) Command: Expected result: Test name: F7: parallel job support Name of scenario: Description: Check that more than one slots are request-able and allocated to a job when the corresponding job description element is used. Command: Expected result: Test name: F9: job management through WS-interface Name of scenario: F9.1: Submission of simple job described in JDL Description: Command: Expected result: Test name: F9: job management through WS-interface Name of scenario: F9.2: Submission of simple job described in XRSL Description: Command: Expected result: Test name: F9: job management through WS-interface Name of scenario: F9.3: Submission of simple job described in JSDL Description: Command: Expected result: Test name: F10: LRMS support 20 T ITLE Test plan for ARC products Date: 3.10.2011 Name of scenario: Description: Test pbs/maui, SGE, Condor, SLURM, fork, ..... correct job status identification correct identification of running/pending/queueing jobs correct CE information propagation (part of Glue2 tests) Command: Expected result: Test name: F11: Janitor tests Name of scenario: Description: Static Dynamic using Janitor component classic RTE tests submission of jobs requiring different types of RTEs Command: Expected result: Test name: F12: gridmapfile Name of scenario: Description: retrieval of proper DN lists example authorization scenarios (vo groups) Command: Expected result: Test name: F13: infopublishing: nordugrid schema Name of scenario: Description: 1. Setup the testbed as described above using the given arc.conf, restart a-rex and grid-infosys; 2. 
From a remote machine with ng* or arc* clients installed, submit at least 4 jobs 21 T ITLE Test plan for ARC products Date: 3.10.2011 using testldap.xrsl, wait until the jobs are in any INLRMS:Q and INLRMS:R status. 3. from a remote machine, run the command: ldapsearch -h gridtest.hep.lu.se -p 2135 -x -b 'Mds-Vo-Name=local,o=grid' > nordugrid_ldif.txt 4. in the same directory as the file generated above, place attached testngs.pl and nsnames.txt 5. run ./testngs.pl Command: Expected result: the output of testngs.pl should contain at least 89 published objects, and these should be at least: nordugrid-job-reqcputime nordugrid-queue-maxqueuable nordugrid-cluster-support nordugrid-job-stdin nordugrid-info-group nordugrid-job-execcluster nordugridqueue-architecture nordugrid-cluster-lrms-type nordugrid-authuser-sn nordugridqueue-localqueued nordugrid-cluster-architecture nordugrid-cluster-sessiondirlifetime nordugrid-job-gmlog nordugrid-cluster-runtimeenvironment nordugridqueue-totalcpus nordugrid-cluster-name nordugrid-queue-defaultcputime nordugridcluster-cache-total nordugrid-job-cpucount nordugrid-queue-name nordugridcluster-sessiondir-total nordugrid-cluster-prelrmsqueued nordugrid-clusterissuerca-hash nordugrid-cluster-opsys nordugrid-queue-maxwalltime nordugridcluster-sessiondir-free nordugrid-cluster-comment nordugrid-queue-gridrunning nordugrid-job-completiontime nordugrid-queue-comment nordugrid-queueprelrmsqueued nordugrid-queue-nodememory nordugrid-authuser-diskspace nordugrid-cluster-cpudistribution nordugrid-job-usedmem nordugrid-jobsubmissionui nordugrid-cluster-middleware nordugrid-queue nordugrid-job-status nordugrid-queue-homogeneity nordugrid-queue-defaultwalltime nordugrid-clustertrustedca nordugrid-job-sessiondirerasetime nordugrid-job-usedwalltime nordugridcluster-issuerca nordugrid-queue-mincputime nordugrid-cluster-owner nordugridqueue-gridqueued nordugrid-cluster-nodecpu nordugrid-job-reqwalltime nordugridcluster-contactstring 
nordugrid-cluster-localse
nordugrid-info-group-name
nordugrid-cluster-benchmark
nordugrid-job-stdout
nordugrid-job-executionnodes
nordugrid-queue-running
nordugrid-cluster-usedcpus
nordugrid-job-globalid
nordugrid-cluster-totaljobs
nordugrid-queue-opsys
nordugrid-job-stderr
nordugrid-cluster
nordugrid-authuser-name
nordugrid-queue-maxrunning
nordugrid-queue-status
nordugrid-job-runtimeenvironment
nordugrid-queue-nodecpu
nordugrid-cluster-credentialexpirationtime
nordugrid-authuser
nordugrid-job-proxyexpirationtime
nordugrid-job-globalowner
nordugrid-cluster-totalcpus
nordugrid-queue-benchmark
nordugrid-job-execqueue
nordugrid-cluster-cache-free
nordugrid-authuser-queuelength
nordugrid-cluster-homogeneity
nordugrid-job-usedcputime
nordugrid-job-exitcode
nordugrid-job-queuerank
nordugrid-queue-maxcputime
nordugrid-queue-schedulingpolicy
nordugrid-authuser-freecpus
nordugrid-job-jobname
nordugrid-cluster-lrms-version
nordugrid-job
nordugrid-cluster-aliasname
nordugrid-job-submissiontime

Test name: F14: infopublishing: glue1.2 schema
Name of scenario:
Description:
1. Set up the testbed using the given arc.conf, restart a-rex and grid-infosys;
2. On a remote machine, set up the EMI glue validator, which can be found here: [1]
3. From a remote machine with ng* or arc* clients installed, submit at least 4 jobs using testldap.xrsl, and wait until the jobs are in INLRMS:Q or INLRMS:R status.
4. From a remote machine, run the command:
ldapsearch -h gridtest.hep.lu.se -p 2135 -x -b 'Mds-Vo-Name=resource,o=grid' > glue12_ldif.txt
5. Run the glue validator on the resulting file:
glue-validator -t glue1 -f glue12_ldif.txt
Command:
Expected result: glue12_ldif.txt validates with no relevant errors using the EMI validator.

Test name: F15: infopublishing: glue2 LDAP schema
Name of scenario:
Description:
1. Set up the testbed as described above using the given arc.conf, restart a-rex and grid-infosys;
2.
On a remote machine, set up the EMI glue validator, which can be found here: [2]
3. From a remote machine with ng* or arc* clients installed, submit at least 4 jobs using testldap.xrsl, and wait until the jobs are in INLRMS:Q or INLRMS:R status.
4. From a remote machine, run the command:
ldapsearch -h gridtest.hep.lu.se -p 2135 -x -b 'o=glue' > glue2_ldif.txt
5. Run the glue validator on the resulting file:
glue-validator -t glue2 -f glue2_ldif.txt
Command:
Expected result: glue2_ldif.txt validates with no relevant errors using the EMI validator.

Test name: F16: infopublishing: glue2 xml schema
Name of scenario:
Description:
Command:
Expected result:

Test name: P1: service reliability
Description of the test: After a fresh restart of the ARC CE we measure the time to submit a simple job. Then we repeat the following job management cycle:
- submit five simple jobs simultaneously
- wait 2 minutes
- all jobs status retrieval
- submit five simple jobs sequentially (separated by a 1 minute pause)
- all jobs status retrieval
- all job results retrieval
This cycle is repeated over three days. After the third day we measure the time to submit a simple job to that ARC CE.
Command:
Expected result: The times to submit a job at the beginning and at the end shall not differ significantly.

Test name: P2: Load test
Name of scenario: P2.1: load test 1
Description of the test: After a fresh restart of the ARC CE we submit 1000 simple jobs synchronously. After the synchronous job submission is finished, we submit one simple job.
Command:
Expected result: The simple job submitted last ends in FINISHED state.

Test name: P2: Load test
Name of scenario: P2.2: load test 2
Description of the test: After a fresh restart of the ARC CE we run 1000 info queries synchronously. After the synchronous queries are finished, we run a single info query.
Command:
Expected result: The single info query is retrieved successfully.
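Both P2 scenarios above have the same shape: a burst of 1000 operations followed by a single probe whose outcome decides the result. The following Python sketch illustrates that shape only. The `operation` callable is a hypothetical stand-in for whatever submits a job or runs an info query (the plan leaves the Command field empty), and the worker count is an assumption, since the plan does not say how many requests may be in flight at once.

```python
from concurrent.futures import ThreadPoolExecutor


def burst_then_probe(operation, burst_size=1000, workers=10):
    """Run `operation` burst_size times, then once more as the probe.

    `operation` is a hypothetical zero-argument callable returning True on
    success (for example a wrapper around a job submission or info query).
    Returns (burst_successes, probe_ok).
    """
    def safe_call(_):
        # A raised exception counts as a failed operation, not a harness crash.
        try:
            return bool(operation())
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=workers) as pool:
        burst_successes = sum(pool.map(safe_call, range(burst_size)))

    # The probe runs only after the whole burst has completed.
    probe_ok = safe_call(None)
    return burst_successes, probe_ok
```

Setting `workers=1` runs the burst strictly one operation after another, which may be closer to what "synchronously" means in the scenario descriptions.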
Test name: P3: job submission failure rate
Name of scenario:
Description of the test: After a fresh restart of the ARC CE we submit 1000 simple jobs sequentially.
Command:
Expected result: The submission success ratio is at least 90% and all successfully submitted jobs end up in FINISHED state.

3.3. SCALABILITY TESTS
Test name:
Name of scenario:
Description:
Command:
Expected result:

3.4. STANDARD COMPLIANCE/CONFORMANCE TESTS
Test name:
Name of scenario:
Description:
Command:
Expected result:

3.5. INTER-COMPONENT TESTS
Test name:
Name of scenario:
Description:
Command:
Expected result:

4. TESTING/ARC-CLIENT
Testing Client components and modules: ng*, arc*, arcproxy, libarcclient, libarcdata2
Overview of tests:
Regression tests
R1 Python bindings are broken since operator = is not handled correctly
R2 Incompatibility between ngsub and arcsub XRSL parser
R3 Occasional seg faults at the end of arcstat
R4 Configuration issues with arc* commands when using ARC0 target
R5 Cryptic error when trying to access ARC0 resource using arc* tools
R6
Functionality tests
F1: direct job submission
F1.1: Job submission to pre-WS ARC CE using arcsub and XRSL job description language
F1.2: Job submission to pre-WS ARC CE using arcsub and JSDL job description language
F1.3: Job submission to pre-WS ARC CE using arcsub and JDL job description language
F1.4: Job submission to pre-WS ARC CE using ngsub and XRSL job description language
F1.5: Job submission to pre-WS ARC CE using ngsub and JSDL job description language
F2: job migration
F2.1: Query the job status submitted to pre-WS ARC CE using arcstat command
F2.2: Query the job status submitted to pre-WS ARC CE using ngstat command
F3: job and resource info querying
F3.1: Query the job status submitted to pre-WS ARC CE using arcstat command
F3.2: Query the job status submitted to pre-WS ARC CE using ngstat command
F4: retrieval of job results/output
F4.1:
Retrieve the results of successfully finished job submitted to pre-WS ARC CE using arcget command
F4.2: Retrieve the results of successfully finished job submitted to pre-WS ARC CE using ngget command
F5: access to stdout/stderr or server logs
F6: removal of jobs from CE
F6.1: Removal of finished job from pre-WS ARC CE using arcclean command
F6.2: Removal of finished job from pre-WS ARC CE using ngclean command
F7: termination of active jobs
F8: proxy renewal for an active job
F9: job failure recovery
F10: job list population via job discovery
F11: test utility check
ngtest
arctest
F12: job description support
test XRSL
test JSDL
test JDL
F13: brokering
F14: data clis: access to EMI storage elements
F15: proxy manipulation
Performance tests
P1: load test of compute cli
P2: reliability test of compute cli
P3: load test of data cli
P3.1: Download/upload 5GB file using file:// protocol
P3.2: Upload 5GB file using https:// protocol
P3.3: Download 5GB file using https:// protocol
P3.4: Upload 5GB file using gsiftp:// protocol
P3.5: Download 5GB file using gsiftp:// protocol
P3.6: Download/upload many files using file:// protocol
P3.7: Upload many files using https:// protocol
P3.8: Download many files using https:// protocol
P3.9: Upload many files using srm:// protocol
P3.10: Download many files using srm:// protocol
P3.11: Upload many files using gsiftp:// protocol
P3.12: Download many files using gsiftp:// protocol
P4: reliability test of data cli
Scalability tests
S1: jobs submission ratio
S2: data transfer ratio
Standard compliance/conformance tests
STD1: JSDL for compute client
S2: data standards
Inter-component tests

4.1.
REGRESSION TESTS
Test name: R1: Python bindings are broken since operator = is not handled correctly
Name of scenario:
Description: Run the python script proposed in the bug report.
Command:
Expected result: The python script executes successfully.

Test name: R2: Incompatibility between ngsub and arcsub XRSL parser
Name of scenario:
Description: Create an XRSL job description using the stdout, stderr and join options. Dryrun the job description using the ngsub and arcsub commands.
Command:
Expected result: The dryrun shall report a parse error.

Test name: R3: Occasional seg faults at the end of arcstat
Name of scenario:
Description: Repeat the following cycle 10 times:
- submit 5 simple jobs
- wait 120 seconds
- query the job status
Command:
Expected result: The arcstat command shall always return correct job statuses and no seg faults shall be observed.

Test name: R4: Configuration issues with arc* commands when using ARC0 target
Name of scenario:
Description: Move the usercert, userkey and ca certificate to a non-standard directory. Update the client.conf file according to the new locations of usercert, userkey and client.conf. Generate a proxy certificate. Submit a simple job to pre-WS ARC CE.
Command:
Expected result: The job is submitted successfully and no request to enter a password is displayed.

Test name: R5: Cryptic error when trying to access ARC0 resource using arc* tools
Name of scenario:
Description: Submit a simple job to pre-WS ARC CE using the arcsub command. Query the status using arcstat and retrieve the results using arcget.
Command:
Expected result: All actions shall end successfully.

Test name: R6:
Name of scenario:
Description:
Command:
Expected result:

4.2.
FUNCTIONALITY TESTS
Test name: F1: direct job submission
ngsub arcsub
test pre-WS, WS, CREAM, Unicore
Name of scenario: F1.1: Job submission to pre-WS ARC CE using arcsub and XRSL job description language
Description of the test: Create a simple job in XRSL format and submit it to pre-WS ARC CE using the arcsub command (manual test). The XRSL job description can be found at http://vls.grid.upjs.sk/testing/job_descriptions/get_hostname_xrsl.html
Command:
Expected result: The job is successfully submitted.

Test name: F1: direct job submission
ngsub arcsub
test pre-WS, WS, CREAM, Unicore
Name of scenario: F1.2: Job submission to pre-WS ARC CE using arcsub and JSDL job description language
Description of the test: Create a simple job in JSDL format and submit it to pre-WS ARC CE using the arcsub command (manual test). The JSDL job description can be found at http://vls.grid.upjs.sk/testing/job_descriptions/get_hostname.html
Command:
Expected result: The job is successfully submitted.

Test name: F1: direct job submission
ngsub arcsub
test pre-WS, WS, CREAM, Unicore
Name of scenario: F1.3: Job submission to pre-WS ARC CE using arcsub and JDL job description language
Description of the test: Create a simple job in JDL format and submit it to pre-WS ARC CE using the arcsub command (manual test). The JDL job description can be found at http://vls.grid.upjs.sk/testing/job_descriptions/get_hostname_jdl.html
Command:
Expected result: The job is successfully submitted.

Test name: F1: direct job submission
ngsub arcsub
test pre-WS, WS, CREAM, Unicore
Name of scenario: F1.4: Job submission to pre-WS ARC CE using ngsub and XRSL job description language
Description of the test: Create simple job in XRSL format and submit it to pre-WS ARC CE using ngsub command (manual test).
Command:
Expected result: The job is successfully submitted.

Test name: F1: direct job submission
ngsub arcsub
test pre-WS, WS, CREAM, Unicore
Name of scenario: F1.5: Job submission to pre-WS ARC CE using ngsub and JSDL job description language
Description of the test: Create a simple job in JSDL format and submit it to pre-WS ARC CE using the ngsub command (manual test).
Command:
Expected result: The job is successfully submitted.

Test name: F2: Job migration
arcmigrate
Name of scenario:
Description:
Command:
Expected result:

Test name: F3: job and resource info querying
ngstat arcstat arcinfo
Name of scenario: F3.1: Query the job status submitted to pre-WS ARC CE using arcstat command
Description of the test: Create a simple job in XRSL format and submit it to pre-WS ARC CE using the arcsub command. Wait 120 seconds. Query the job status using the arcstat command.
Command:
Expected result: The job is successfully queried.

Test name: F3: job and resource info querying
ngstat arcstat arcinfo
Name of scenario: F3.2: Query the job status submitted to pre-WS ARC CE using ngstat command
Description of the test: Create a simple job in XRSL format and submit it to pre-WS ARC CE using the ngsub command. Wait 120 seconds. Query the job status using the ngstat command.
Command:
Expected result: The job is successfully queried.

Test name: F4: retrieval of job results/output
ngget arcget
Name of scenario: F4.1: Retrieve the results of successfully finished job submitted to pre-WS ARC CE using arcget command
Description of the test: Create a simple job in XRSL format and submit it to pre-WS ARC CE using the arcsub command. Wait until the job is successfully finished (verify it using the arcstat command). Retrieve the results of finished job using arcget command.
Command:
Expected result: The job results are successfully retrieved.

Test name: F4: retrieval of job results/output
ngget arcget
Name of scenario: F4.2: Retrieve the results of successfully finished job submitted to pre-WS ARC CE using ngget command
Description of the test: Create a simple job in XRSL format and submit it to pre-WS ARC CE using the ngsub command. Wait until the job is successfully finished (verify it using the ngstat command). Retrieve the results of the finished job using the ngget command.
Command:
Expected result: The job results are successfully retrieved.

Test name: F5: access to stdout/stderr or server logs
ngcat arccat
Name of scenario: F5.1 ngcat
Description:
Command:
Expected result:

Test name: F5: access to stdout/stderr or server logs
ngcat arccat
Name of scenario: F5.2 arccat
Description:
Command:
Expected result:

Test name: F6: removal of jobs from CE
ngclean arcclean
Name of scenario: F6.1: Removal of finished job from pre-WS ARC CE using arcclean command
Description of the test: Create a simple job in XRSL format and submit it to pre-WS ARC CE using the arcsub command. Wait until the job is successfully finished (verify it using the arcstat command). Issue an arcclean command with the jobID as parameter. Query the job status using the arcstat command.
Command:
Expected result: The second job status query shall return a warning that the job is no longer available.

Test name: F6: removal of jobs from CE
ngclean arcclean
Name of scenario: F6.2: Removal of finished job from pre-WS ARC CE using ngclean command
Description of the test: Create a simple job in XRSL format and submit it to pre-WS ARC CE using the ngsub command. Wait until the job is successfully finished (verify it using the ngstat command). Issue an ngclean command with jobID as parameter.
Query the job status using the ngstat command.
Command:
Expected result: The second job status query shall return a warning that the job is no longer available.

Test name: F7: termination of active jobs
ngkill arckill
Name of scenario: F7.1 ngkill
Description:
Command:
Expected result:

Test name: F7: termination of active jobs
ngkill arckill
Name of scenario: F7.2 arckill
Description:
Command:
Expected result:

Test name: F8: proxy renewal for an active job
ngrenew arcrenew
Name of scenario: F8.1 ngrenew
Description:
Command:
Expected result:

Test name: F8: proxy renewal for an active job
ngrenew arcrenew
Name of scenario: F8.2 arcrenew
Description:
Command:
Expected result:

Test name: F9: job failure recovery
ngresume arcresume
Name of scenario: F9.1 ngresume
Description:
Command:
Expected result:

Test name: F9: job failure recovery
ngresume arcresume
Name of scenario: F9.2 arcresume
Description:
Command:
Expected result:

Test name: F10: job list population via job discovery
ngsync arcsync
Name of scenario: F10.1 ngsync
Description:
Command:
Expected result:

Test name: F10: job list population via job discovery
ngsync arcsync
Name of scenario: F10.2 arcsync
Description:
Command:
Expected result:

Test name: F11: test utility check
Name of scenario: F11.1: ngtest
Description:
Command:
Expected result:

Test name: F11: test utility check
Name of scenario: F11.2: arctest
Description:
Command:
Expected result:

Test name: F12: job description support
Name of scenario: F12.1: test XRSL
Description:
Command:
Expected result:

Test name: F12: job description support
Name of scenario: F12.2: test JSDL
Description:
Command:
Expected result:

Test name: F12: job description support
Name of scenario: F12.3: test JDL
Description:
Command:
Expected result:

Test name: F13: brokering
test resource discovery, use a JDL that matches "everything"
test some of the brokering algorithms
Name of scenario:
Description:
Command:
Expected result:

Test name: F14: data clis: access to EMI storage elements
arcls arccp arcrm ngls ngcp ngrm
Name of scenario:
Description:
Command:
Expected result:

Test name: F15: proxy manipulation
Name of scenario:
Description:
Command:
Expected result:

4.3. PERFORMANCE TESTS
Test name: P1: load test of compute cli
Name of scenario:
Description:
Command:
Expected result:

Test name: P2: reliability test of compute cli
Name of scenario:
Description:
Command:
Expected result:

Test name: P3: load test of data cli
Name of scenario: P3.1: Download/upload 5GB file using file:// protocol
Description of the test: Download/upload a 5GB file using the file:// protocol as source and target.
Command:
Expected result: The file specified as target shall exist and be of size 5GB.

Test name: P3: load test of data cli
Name of scenario: P3.2: Upload 5GB file using https:// protocol
Description of the test: Upload a 5GB file using the https:// protocol as target.
Command:
Expected result: The file specified as target shall exist and be of size 5GB.

Test name: P3: load test of data cli
Name of scenario: P3.3: Download 5GB file using https:// protocol
Description of the test: Download a 5GB file using the https:// protocol as source.
Command:
Expected result: The file specified as target shall exist and be of size 5GB.

Test name: P3: load test of data cli
Name of scenario: P3.4: Upload 5GB file using gsiftp:// protocol
Description of the test: Upload a 5GB file using the gsiftp:// protocol as target.
Command:
Expected result: The file specified as target shall exist and be of size 5GB.
Test name: P3: load test of data cli
Name of scenario: P3.5: Download 5GB file using gsiftp:// protocol
Description of the test: Download a 5GB file using the gsiftp:// protocol as source.
Command:
Expected result: The file specified as target shall exist and be of size 5GB.

Test name: P3: load test of data cli
Name of scenario: P3.6: Download/upload many files using file:// protocol
Description of the test: Download/upload 1000 1kB files using the file:// protocol as source and target.
Command:
Expected result: The 1000 files specified as target shall exist and be of size 1kB.
Observed: got 100 segmentation faults, but all files exist with correct size.

Test name: P3: load test of data cli
Name of scenario: P3.7: Upload many files using https:// protocol
Description of the test: Upload 1000 1kB files using the https:// protocol as target.
Command:
Expected result: The 1000 files specified as target shall exist and be of size 1kB.

Test name: P3: load test of data cli
Name of scenario: P3.8: Download many files using https:// protocol
Description of the test: Download 1000 1kB files using the https:// protocol as source.
Command:
Expected result: The 1000 files specified as target shall exist and be of size 1kB.

Test name: P3: load test of data cli
Name of scenario: P3.9: Upload many files using srm:// protocol
Description of the test: Upload 1000 1kB files using the srm:// protocol as target.
Command:
Expected result: The 1000 files specified as target shall exist and be of size 1kB.

Test name: P3: load test of data cli
Name of scenario: P3.10: Download many files using srm:// protocol
Description of the test: Download 1000 1kB files using the srm:// protocol as source.
Command:
Expected result: The 1000 files specified as target shall exist and be of size 1kB.
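The "many files" scenarios above need a set of 1000 small input files and a check that every target exists with the right size. A minimal Python sketch of both steps follows, for targets that are visible on the local file system. The file naming scheme is hypothetical, and "1kB" is read as 1024 bytes, which the plan does not specify, so the size is a parameter. Passing a different size covers the single 5GB file cases as well.

```python
import os


def make_small_files(directory, count=1000, size=1024):
    """Create `count` files of `size` bytes each and return their names.

    The names (file_0000.dat, ...) are a hypothetical convention.
    """
    os.makedirs(directory, exist_ok=True)
    names = []
    for i in range(count):
        name = f"file_{i:04d}.dat"
        with open(os.path.join(directory, name), "wb") as handle:
            handle.write(b"\0" * size)
        names.append(name)
    return names


def wrong_or_missing(directory, names, size=1024):
    """Return the names that are absent from `directory` or have the wrong size."""
    bad = []
    for name in names:
        path = os.path.join(directory, name)
        if not os.path.isfile(path) or os.path.getsize(path) != size:
            bad.append(name)
    return bad
```

An empty list from `wrong_or_missing` corresponds to the expected result; a non-empty list names the files to investigate.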
Test name: P3: load test of data cli
Name of scenario: P3.11: Upload many files using gsiftp:// protocol
Description of the test: Upload 1000 1kB files using the gsiftp:// protocol as target.
Command:
Expected result: The 1000 files specified as target shall exist and be of size 1kB.

Test name: P3: load test of data cli
Name of scenario: P3.12: Download many files using gsiftp:// protocol
Description of the test: Download 1000 1kB files using the gsiftp:// protocol as source.
Command:
Expected result: The 1000 files specified as target shall exist and be of size 1kB.

Test name: P4: reliability test of data cli
Name of scenario:
Description:
Command:
Expected result:

4.4. SCALABILITY TESTS
Test name: S1: jobs submission ratio
Name of scenario:
Description:
Command:
Expected result:

Test name: S2: data transfer ratio
Name of scenario:
Description:
Command:
Expected result:

4.5. STANDARD COMPLIANCE/CONFORMANCE TESTS
Test name: STD1: JSDL for compute client
Name of scenario:
Description: Note that JSDL compliance is also tested in the ARCJSDLParserTest unit test located in the source tree at arc1/trunk/src/hed/acc/JobDescriptionParser/ARCJSDLParserTest.cpp.
Command:
Expected result:

Test name: S2: data transfer ratio
Name of scenario: S2: data standards
Description:
Command:
Expected result:

4.6. INTER-COMPONENT TESTS
Test name:
Name of scenario:
Description:
Command:
Expected result:

5.
TESTING/ARC-INFOSYS
Testing Information System components and modules: ARIS, EGIIS, ARC Grid Monitor
Overview of tests:
Regression tests
R1 ARC1 infosys does not start
Functionality tests
F1: ARIS
F2: EGIIS
F2.1: registering ARC CE to EGIIS
F2.2: de-registering ARC CE from EGIIS
F3: Grid Monitor
F3.1: Check registered ARC CE to EGIIS using Grid Monitor
F3.2: Check de-registered ARC CE from EGIIS using Grid Monitor
Performance tests
P1: ARIS service reliability
P2: ARIS load test
P3: EGIIS service reliability
P4: EGIIS load test
Scalability tests
S1: ARIS
Standard compliance/conformance tests
Inter-component tests

5.1. REGRESSION TESTS
Test name: R1: ARC1 infosys does not start
Name of scenario:
Description: Install EMI1 RC1 and try to start the a-rex-grid-infosys daemon.
Command:
Expected result: The daemon is started successfully and the Infosys can be queried using the ldapsearch command with proper parameters.

5.2. FUNCTIONALITY TESTS
Test name: F1: ARIS
Name of scenario:
Description of the test: Configure and distcleanstart the ARC CE and its Infosys. Submit a simple job to the ARC CE, wait 120 seconds and query the local Infosys using the ldapsearch command with proper parameters. Repeat the submission and query five times.
Command:
Expected result: After each query the produced output shall contain information about one additional job submitted to that ARC CE (after the first query there shall be one job recorded, after the second there shall be two, etc.).

Test name: F2: EGIIS
Name of scenario: F2.1: registering ARC CE to EGIIS
Description of the test: Configure and start the EGIIS. Configure the ARC CE's Infosys so that it registers to the running EGIIS. Query the EGIIS using the ldapsearch command with proper parameters.
Command:
Expected result: The output produced by ldapsearch shall contain the information about the registered ARC CE.
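Checking that ldapsearch output "contains the information about the registered ARC CE" can be done by eye, or with a small script over the saved LDIF. The Python sketch below splits LDIF text into entries and returns those that mention a given host name in any value. It is an illustration under assumptions: the plan does not say which attribute carries the CE name in the EGIIS reply, so every value is searched, and base64-encoded values (`attr:: ...`) are not decoded.

```python
def parse_ldif(text):
    """Split LDIF text into entries, each a list of (attribute, value) pairs."""
    entries, current = [], []
    for raw in text.splitlines():
        if not raw.strip():
            # A blank line closes the current entry.
            if current:
                entries.append(current)
                current = []
        elif raw.startswith("#"):
            continue  # comment line
        elif raw.startswith(" ") and current:
            # Continuation line: glue it onto the previous value.
            attr, value = current[-1]
            current[-1] = (attr, value + raw[1:])
        elif ":" in raw:
            attr, _, value = raw.partition(":")
            current.append((attr.strip(), value.strip()))
    if current:
        entries.append(current)
    return entries


def entries_mentioning(text, host):
    """Return the entries in which any value contains `host` (case-insensitive)."""
    needle = host.lower()
    return [
        entry for entry in parse_ldif(text)
        if any(needle in value.lower() for _, value in entry)
    ]
```

For the registration scenario a non-empty result is the pass condition; for a de-registration check the same call is expected to return an empty list.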
Test name: F2: EGIIS
Name of scenario: F2.2: de-registering ARC CE from EGIIS
Description of the test: Configure and start the EGIIS. Configure the ARC CE's Infosys so that it registers to the running EGIIS. Query the EGIIS using the ldapsearch command with proper parameters to check that the ARC CE is registered. Stop the ARC CE's Infosys and query the EGIIS again using the ldapsearch command with proper parameters.
Command:
Expected result: The output produced by ldapsearch shall not contain any information about the previously running ARC CE.

Test name: F3: Ldap Grid Monitor
Name of scenario: F3.1: Check registered ARC CE to EGIIS using Grid Monitor
Description of the test: Configure and start the EGIIS. Configure the ARC CE's Infosys so that it registers to the running EGIIS. Query the EGIIS using the ldapsearch command with proper parameters to check that the ARC CE is registered. Configure and start the Grid Monitor and check the details about registered CEs.
Command:
Expected result: The Grid Monitor shall include correct information about the ARC CE registered to EGIIS. The information shall be identical to the output produced by the ldapsearch command.

Test name: F3: Ldap Grid Monitor
Name of scenario: F3.2: Check de-registered ARC CE from EGIIS using Grid Monitor
Description of the test: Configure and start the EGIIS. Configure the ARC CE's Infosys so that it registers to the running EGIIS. Query the EGIIS using the ldapsearch command with proper parameters to check that the ARC CE is registered. Check the details in the Grid Monitor to make sure the ARC CE is listed there properly. Stop the ARC CE's Infosys and check the Grid Monitor again.
Command:
Expected result: No information about the ARC CE shall be found in the Grid Monitor.

5.3. PERFORMANCE TESTS
Test name: P1: ARIS service reliability
Description of the test: After fresh restart of ARC CE and its local infosys we submit 100 simple jobs to that CE.
After the jobs are finished we measure the time to query the Infosys using the ldapsearch command and record the memory usage of the running infosys. Then we repeat the following cycle:
- all job results retrieval
- submit 10 simple jobs sequentially (separated by a 1 minute pause)
- all jobs status retrieval
- wait 20 seconds
- all job results retrieval
This cycle is repeated over three days. After the third day we measure the time to query the Infosys using the ldapsearch command and record the memory usage of the running infosys.
Command:
Expected result: The times at the beginning and at the end shall not differ significantly, and the memory usage of the running Infosys at the end shall not be higher than 2xINITIAL_ECHO_SERVICE_MEMORY_USAGE.

Test name: P2: ARIS load test
Name of scenario:
Description of the test: After a fresh restart of the ARC CE and its local infosys we submit 100 simple jobs to that CE. After the jobs are finished we submit 1000 concurrent queries to the Infosys using the ldapsearch command (using & so that the commands run in the background). After the queries are finished we submit a single ldapsearch query to the Infosys.
Command:
Expected result: The single ldapsearch query shall return information about 100 finished jobs.

Test name: P3: EGIIS service reliability
Name of scenario:
Description of the test: After a fresh restart of the EGIIS we register two ARC CEs to it. Submit 100 simple jobs over the EGIIS service (the index, not the cluster, is used as the source for arcsub). After the jobs are finished we measure the time to query the EGIIS using the ldapsearch command and record the memory usage of the running EGIIS. Then we repeat the following cycle:
- all job results retrieval
- submit 10 simple jobs sequentially (separated by a 1 minute pause) over the EGIIS service
- all jobs status retrieval
- wait 20 seconds
- all job results retrieval
This cycle will be repeated over three days.
After the third day we measure the time to query the EGIIS using the ldapsearch command and record the memory usage of the running EGIIS.
Command:
Expected result: The times at the beginning and at the end shall not differ significantly, and the memory usage of the running EGIIS at the end shall not be higher than 2xINITIAL_ECHO_SERVICE_MEMORY_USAGE.

Test name: P4: EGIIS load test
Name of scenario:
Description of the test: After a fresh restart of the EGIIS we register two ARC CEs to it. Submit 100 simple jobs over the EGIIS service (the index, not the cluster, is used as the source for arcsub). After the jobs are finished we submit 1000 concurrent queries to the EGIIS using the ldapsearch command (using & so that the commands run in the background). After the queries are finished we submit a single ldapsearch query to the EGIIS.
Command:
Expected result: The single ldapsearch query shall return information about two CEs and 100 finished jobs.

5.4. SCALABILITY TESTS
Test name: S1: ARIS scalability test
Name of scenario:
Description of the test: After a fresh distcleanstart of the ARC CE and its local infosys we submit 1 simple job to that CE. After the job is finished we measure the time to query the Infosys using the ldapsearch command and record the memory usage of the running Infosys (stdout redirected to a file). Then we repeat the following cycle:
- submit 50 simple jobs sequentially
- wait until the jobs are finished
- measure the time to query the Infosys using the ldapsearch command
Repeat this cycle until there are 1000 finished jobs in the cluster.
Command:
Expected result: The last recorded time shall not be more than five times the first recorded time.

5.5. STANDARD COMPLIANCE/CONFORMANCE TESTS
Test name:
Name of scenario:
Description:
Command:
Expected result:

5.6. INTER-COMPONENT TESTS
Test name:
Name of scenario:
Description:
Command:
Expected result:

6.
TESTING/ARC-GRIDFTP
Modules and Components: ARC gridftp server
Overview of tests:
Regression tests
Functionality tests
F1: SE with unixacl
F2: SE with gacl control
Performance tests
P1: service reliability
Scalability tests
Standard compliance/conformance tests
STD1: gridftp interface
STD1.1: Upload local file to GridFTP service
STD1.2: Download file from GridFTP service
STD1.3: Copy file from GridFTP service to third-party GridFTP service
Inter-component tests

6.1. REGRESSION TESTS
Test name:
Name of scenario:
Description:
Command:
Expected result:

6.2. FUNCTIONALITY TESTS
Test name: F1: SE with unixacl
Name of scenario: F1.1: Listing files in unixacl SE
Description of the test: Configure and start the unixacl SE, place 10 files with different file sizes in the unixacl SE directory, record the file sizes and use the arcls command with the -l option to list the content of the unixacl SE.
Command:
Expected result: The arcls command returns a list of 10 files, and the listed file sizes are identical to the recorded file sizes.

Test name: F1: SE with unixacl
Name of scenario: F1.2: Uploading files to unixacl SE
Description of the test: Configure and start the unixacl SE and use the arccp command to upload 100 files sequentially.
Command:
Expected result: The arcls command lists all uploaded files.

Test name: F1: SE with unixacl
Name of scenario: F1.3: Downloading files from unixacl SE
Description of the test: Configure and start the unixacl SE and place 100 files in the unixacl SE directory. Use the arccp command to download all 100 files.
Command:
Expected result: All 100 files were downloaded successfully.

Test name: F1: SE with unixacl
Name of scenario: F1.4: Deleting files from unixacl SE
Description of the test: Configure and start the unixacl SE and place 100 files in the unixacl SE directory. Use the arcrm command to delete all 100 files.
Command:
Expected result: The arcls command lists an empty SE.
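The listing scenario compares file sizes recorded before the test with the sizes reported by the SE. A minimal Python sketch of that comparison follows. It takes two mappings from file name to size in bytes; turning `arcls -l` output into such a mapping is deliberately left out, because the plan does not describe the column layout of that output.

```python
def compare_sizes(recorded, listed):
    """Compare two {file name: size in bytes} mappings.

    Returns (missing, unexpected, mismatched):
      missing    - names recorded but not listed
      unexpected - names listed but not recorded
      mismatched - {name: (recorded size, listed size)} where sizes differ
    """
    missing = sorted(set(recorded) - set(listed))
    unexpected = sorted(set(listed) - set(recorded))
    mismatched = {
        name: (recorded[name], listed[name])
        for name in sorted(set(recorded) & set(listed))
        if recorded[name] != listed[name]
    }
    return missing, unexpected, mismatched
```

The expected result corresponds to all three return values being empty. The same function covers the upload and delete scenarios: after the upload nothing may be missing, and after the delete the listed mapping is expected to be empty.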
Test name: F2: SE with gacl control
Name of scenario: F2.1: Listing files in gacl controlled SE by not authorized user
Description of the test: Configure and start the gacl controlled SE for a particular DN, place 10 files with different file sizes in the gacl controlled SE directory, record the file sizes and generate a grid proxy certificate using user credentials different from the DN used for configuring the SE. Use the arcls command with the -l option to list the content of the gacl controlled SE.
Command:
Expected result: The arcls command returns an XXX error message.

Test name: F2: SE with gacl control
Name of scenario: F2.2: Listing files in gacl controlled SE by authorized user
Description of the test: Configure and start the gacl controlled SE for a particular DN, place 10 files with different file sizes in the gacl controlled SE directory, record the file sizes and generate a grid proxy certificate using user credentials identical to the DN used for configuring the SE. Use the arcls command with the -l option to list the content of the gacl controlled SE.
Command:
Expected result: The arcls command returns a list of 10 files, and the listed file sizes are identical to the recorded file sizes.

Test name: F2: SE with gacl control
Name of scenario: F2.3: Uploading files to gacl controlled SE
Description of the test: Configure and start the gacl controlled SE and use the arccp command to upload 100 files sequentially.
Command:
Expected result: The arcls command lists all uploaded files.

Test name: F2: SE with gacl control
Name of scenario: F2.4: Downloading files from gacl controlled SE
Description of the test: Configure and start the gacl controlled SE and place 100 files in the gacl controlled SE directory. Use the arccp command to download all 100 files.
Command:
Expected result: All 100 files were downloaded successfully.
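The upload scenarios call for 100 sequential arccp transfers, but the Command field is empty. One way to prepare them, sketched in Python under assumptions, is to build one `arccp <source> <destination>` argument list per file. The SE URL and the choice to keep each file's base name on the SE are hypothetical; no arccp options are used because the plan names none.

```python
import os


def upload_commands(local_paths, se_url):
    """Build one arccp argument list per local file.

    `se_url` is a hypothetical SE directory URL; each file keeps its base
    name at the destination.
    """
    base = se_url.rstrip("/")
    return [
        ["arccp", path, f"{base}/{os.path.basename(path)}"]
        for path in local_paths
    ]
```

Passing each list in turn to `subprocess.run(cmd, check=True)` runs the transfers one after another and stops at the first failure, which matches the "sequentially" wording of the scenario.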
Test name: F2: SE with gacl control
Name of scenario: F2.5: Deleting files from gacl controlled SE
Description of the test: Configure and start the gacl controlled SE and place 100 files in the gacl controlled SE directory. Use the arcrm command to delete all 100 files.
Command:
Expected result: The arcls command lists an empty SE.

6.3. PERFORMANCE TESTS

Test name: P1: Unixacl SE reliability
Name of scenario:
Description of the test: After a fresh restart of the unixacl SE we measure the time to upload 100 files sequentially and record the memory usage of the running unixacl SE. Then we repeat the following cycle:
 - upload 100 files simultaneously
 - wait 20 seconds
 - list the content of the SE
 - download 100 files
 - remove 100 files from the SE
This cycle is repeated over three days. After the third day we measure the time to upload 100 files sequentially again.
Command:
Expected result: The times at the beginning and at the end shall not differ significantly, and the memory usage of the running unixacl SE at the end shall not be higher than twice the memory usage recorded after the fresh restart (2xINITIAL_ECHO_SERVICE_MEMORY_USAGE).

Test name: P2: Reliability of SE with gacl control
Name of scenario:
Description of the test: After a fresh restart of the gacl controlled SE we measure the time to upload 100 files sequentially and record the memory usage of the running gacl controlled SE. Then we repeat the following cycle:
 - upload 100 files simultaneously
 - wait 20 seconds
 - list the content of the SE
 - download 100 files
 - remove 100 files from the SE
This cycle is repeated over three days. After the third day we measure the time to upload 100 files sequentially again.
Command:
Expected result: The times at the beginning and at the end shall not differ significantly, and the memory usage of the running gacl controlled SE at the end shall not be higher than twice the memory usage recorded after the fresh restart (2xINITIAL_ECHO_SERVICE_MEMORY_USAGE).

6.4. SCALABILITY TESTS

Test name:
Name of scenario:
Description:
Command:
Expected result:

6.5.
STANDARD COMPLIANCE/CONFORMANCE TESTS

Test name: STD1: gridftp interface
Name of scenario: STD1.1: Upload local file to GridFTP service
Description of the test: Upload a file to the GridFTP service using a third-party client.
Command:
Expected result: The file is copied to the GridFTP service.

Test name: STD1: gridftp interface
Name of scenario: STD1.2: Download file from GridFTP service
Description of the test: Download a file from the GridFTP service using a third-party client.
Command:
Expected result: The file is copied from the GridFTP service.

Test name: STD1: gridftp interface
Name of scenario: STD1.3: Copy file from GridFTP service to third-party GridFTP service
Description of the test: Copy a file from the GridFTP service to an external GridFTP service.
Command:
Expected result: The file is copied to the external GridFTP service.

6.6. INTER-COMPONENT TESTS

Test name:
Name of scenario:
Description:
Command:
Expected result:

The tests performed for the 1.0.1 release update were carried out in two rounds, testing 1.0.1 RC1 and 1.0.1 RC4. The set of mandatory tests was carefully selected to ensure that the main shortcomings of the software were corrected and that the final product offers better functionality and performance than the previous version 1.0.0. The results are collected in the same place:
http://wiki.nordugrid.org/index.php/Testing_for_update_EMI_1.0.1rc4 (RC4)
http://wiki.nordugrid.org/index.php/Testing_for_update_EMI_1.0.1 (RC1)
To communicate observations during testing, testers usually used dedicated wiki pages, Bugzilla, e-mail or meetings. After careful analysis, the test results are communicated to the developers.

7. DEPLOYMENT TESTS

These tests are carried out during certification of the software. To ensure higher quality, the binaries are installed on the integration testbed and each negative observation must be reported.

8.
PLANS TOWARD EMI-2

The short-term activities depend on the EMI release plan. We expect to participate in the certification testing of each update or regular release in accordance with the release plan. All EMI mandatory tests are planned for all short-term activities. We are able to perform "ad hoc" testing at the urgent request of developers; however, such cases are not covered by this plan. On the other hand, all testing activities will be recorded on the Nordugrid project wiki in an effort to build a knowledge database.

The main goal for the second year of the project is to maintain the standard of testing already reached. We expect to increase the total number of tasks and the amount of data transferred during tests. For example, in the previous period we tested 1, 10 and 100 tasks or events; the plan is to increase this number to 1000, 10000 or more events in one test. The planned long-term activity is to obtain reliable data about the performance parameters of all main components, i.e. server and client.

During certification of a major release we plan to use all scenarios used during certification of the EMI-1 release. New scenarios are in the planning phase, mainly for scenarios which were not performed during the EMI-1 phase. New requests from developers will probably lead to further new scenarios. Update testing is specific because it is driven by the effort to solve urgent issues.

Performance tests provide comprehensive information about all software components under conditions which should be close to their limits. We will test performance with stress tests, for example submission of an extremely high number of jobs, transfer duration of big data files, etc. The role of these tests is to determine the limits of the software components and the resulting hardware requirements, for example memory, CPU and network bandwidth. Detailed analysis of the results can be very valuable because it helps us to identify weak components or hidden errors from the previous phases (for example in the design or its implementation).
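The stepwise increase of the workload described above (1, 10 and 100 events so far, 1000 and 10000 planned) can be sketched as a small timing harness in Python. This is an illustration under stated assumptions, not the project's test suite: the function names are hypothetical, and the operation passed in stands for whatever a single task or event is in a given test (for example one job submission or one file transfer).

```python
import time


def time_workload(operation, count):
    """Run operation(i) for i in range(count) and return the elapsed seconds."""
    start = time.perf_counter()
    for i in range(count):
        operation(i)
    return time.perf_counter() - start


def scaling_series(operation, sizes=(1, 10, 100, 1000, 10000)):
    """Elapsed time for each workload size, keyed by the number of events."""
    return {n: time_workload(operation, n) for n in sizes}


def per_item_cost(series):
    """Average time per event for each workload size.

    A per-event cost that grows with the workload size hints that a
    component is approaching its limit.
    """
    return {n: elapsed / n for n, elapsed in series.items()}
```

Memory and CPU usage of the tested service would have to be sampled separately; the sketch covers only the response time on the client side.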
Preparation, execution and analysis of performance tests of components is an expensive activity, mainly if many steps need tester intervention. This is one of the reasons why these tests are performed rarely, for example after the release of EMI-1 and EMI-2. Our strategy in the EMI project is to carry out two main performance tests, after the releases of EMI-1 and EMI-2. The tests will focus on client and server behavior in extreme conditions. We will measure memory and CPU usage, and the system response under high workload.

Activity: Development and realization of simple automatic performance test suite
Status (February 2011): Beginning phase specification, initial ideas
Start: 20th February 2011
End: 30th September 2011

Activity: Deployment of automatic performance test suite
Status (February 2011):
Start: 1st October 2011
End: End of the EMI project

Activity: Increasing number of performance test cases
Status (February 2011):
Start: 1st January 2012
End: End of the EMI project

Planned long-term activities:
 - Maintenance of automatic revision test tool
 - Maintenance of automatic functional tool (increase number of scenarios)

8.1. TESTING FOR EMI 1.0.1 UPDATE

A limited set of tests was proposed. We focused on the BDII issue and on performance and reliability tests. The results are collected on the following pages:
http://wiki.nordugrid.org/index.php/Testing_for_update_EMI_1.0.1
http://wiki.nordugrid.org/index.php/Testing_for_update_EMI_1.0.1rc4

8.2. TESTING FOR EMI 1.1.0 UPDATE

Testing period: 19 September 2011 - 3 October
EMI EMI update 8

Information for coordinating tester activities is shared on a common wiki page:
http://wiki.nordugrid.org/index.php/Testing_for_update_EMI_1.1.0
The page contains a list of bugs and their status, which is used to monitor the progress of testing. The plan is to verify many of the listed bugs, taking their relevance into account. Performance and reliability tests with 1000 job submissions provide quick and comprehensive information about the overall status of the update and cover the basic functionality of all components.
An additional set of carefully selected functional tests (from the previous chapters) is applied to give a detailed view of the functionality of the release update for each ARC component. The test reports are better organized than in the previous testing. A database application with a web interface is used to store all test reports: http://arc-emi.grid.upjs.sk/tests.php (Static test results recording, Search static test results). The new solution enables us to monitor testing activities better and to identify PASSED/FAILED tests more easily. We plan to produce test reports compliant with EMI policies automatically.

9. LARGE SCALABILITY TESTS

To participate in the large scalability testing we expect to use our own currently available virtualization-based resources. Scalability testing will be carried out within the SA2.6 task and tightly coordinated with the SA2.6 team.

10. CONCLUSIONS

The plan will be updated to reflect actual demands.