There are a number of central issues involved in Artificial Intelligence.

1. Complexity

2. Reasoning

3. Knowledge Representation

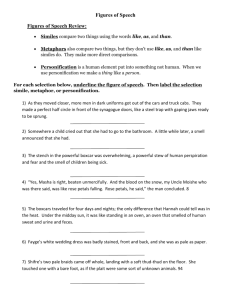

Complexity

This is about how difficult it is to solve problems. It involves the size of the search space that must be explored.

Reasoning

This is the ability to create new knowledge from existing knowledge.

Knowledge Representation

This is central to the above two concerns. Classical representation schemes include:

1. Logic

2. Procedural

3. Semantic Nets

4. Frames

5. Direct

6. Production Schemes

Each representation scheme has a reasoning mechanism built in, so we will treat these two issues together and follow this with a discussion of Complexity.

Knowledge Representation.

Introduction.

The objective of research into intelligent machines is to produce systems which can reason with available knowledge and so behave intelligently. One of the major issues, then, is how to incorporate knowledge into these systems. How is the whole abstract concept of knowledge reduced to forms which can be written into a computer's memory? This is called the problem of Knowledge Representation.

The concept of Knowledge is central to a number of fields of established academic study, including Philosophy, Psychology, Logic, and Education. Even in Mathematics and Physics, people like Isaac Newton and Leibniz reflected that, since physics has its foundations in a mathematical formalism, the laws of all nature should be similarly described.

The eighteenth-century philosopher Immanuel Kant wrote in his landmark “Critique of Pure Reason” that the mind has a priori principles and makes things outside conform to those principles. In other words, as we absorb knowledge we impose some internal structure on it and tend to view the world in terms of this structure. The theories of the great twentieth-century educationalist Jean Piaget conform to this view.

With the exception of Logic, however, efforts to formalize a knowledge representation tended to be disjointed and infrequent in the days before computers.

The revolutionary impact of the advent of computers proved a new motivating force, and many researchers are now addressing the problem of Knowledge Representation.

The following are some of the major representation schemes which have resulted from their labours:

Logic

Formal Logic is a classical approach to representing knowledge. It was developed by philosophers and mathematicians as a calculus of the process of making inferences from facts.

The simplest logic formalism is the Propositional Calculus, which is effectively equivalent to Boolean Logic, the basis of computing. We can make statements or propositions which are either atomic (i.e. they can stand alone) or composite, that is, sentences constructed from atomic statements joined by logical connectives such as AND, represented by ∧, and OR, represented by ∨. The following is an example of a composite sentence:

Fred_is_Big ∧ Fred_is_Strong

The semantics of Propositional Logic is based on truth assignments. Statements can have either of the values TRUE or FALSE assigned to them. We can also have rules of inference. An inference rule allows for the deduction of new sentences from existing sentences. For example, given the sentence

Substance_Can_Pour → Substance_is_a_Liquid

if we know Substance_Can_Pour is true then we can infer that Substance_is_a_Liquid is true under the inference rule Modus Ponens.
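The Modus Ponens step above can be sketched in a few lines of Python. This is only an illustration, assuming that every rule has a single atomic premise and a single atomic conclusion; the function name modus_ponens and the pair encoding of rules are inventions for this sketch.

```python
def modus_ponens(facts, rules):
    """Repeatedly apply Modus Ponens: from P and P -> Q, conclude Q.

    facts: set of atomic propositions known to be TRUE.
    rules: list of (premise, conclusion) pairs, each read as premise -> conclusion.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            if premise in known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


rules = [("Substance_Can_Pour", "Substance_is_a_Liquid")]
derived = modus_ponens({"Substance_Can_Pour"}, rules)
print(sorted(derived))
```

Note that nothing is concluded when the premise is not known to be true; the rule on its own asserts neither proposition.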

Propositional Logic is limited in its application to Knowledge Representation. We need not only to be able to express TRUE or FALSE propositions, but also to be able to speak of objects, to postulate relationships between objects and to generalize these relationships over classes of objects. We turn to the logic of Predicate Calculus to realize these objectives.

First Order Predicate Calculus (FOPC) is an extension of the notions of the propositional calculus. The basic notions of statements and logical connectives are retained but certain new features are allowed.

These include assignments of specific objects in the Domain of Interpretation in addition to TRUE and FALSE and also the notion of predicates and functions.

A predicate consists of a predicate symbol and a number of arguments; the number of arguments is called the arity of the predicate. For example, is_red(X) is a predicate of arity 1, with the predicate symbol is_red and the single argument X. A predicate is assigned the value TRUE or FALSE under an interpretation, depending on the values of its arguments under the same interpretation.

We often have occasion to refer to facts that we know to be true for all or some members of a class. For this we use quantification and the quantifiers For All, ∀, and There Exists, ∃. An example of a quantified sentence:

∀X. playing_card(X) → is_red(X)
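Over a finite Domain of Interpretation, quantified sentences can be checked simply by enumerating the objects. The sketch below assumes a hypothetical domain of three cards; the data and the helper implies are illustrative only, while playing_card and is_red are the predicates used above.

```python
# Hypothetical finite domain of interpretation: three cards.
cards = [
    {"name": "ace_of_hearts", "suit": "hearts"},
    {"name": "two_of_diamonds", "suit": "diamonds"},
    {"name": "king_of_spades", "suit": "spades"},
]


def playing_card(x):
    # Predicate of arity 1: TRUE for every object in this small domain.
    return "suit" in x


def is_red(x):
    # Predicate of arity 1: TRUE only for hearts and diamonds.
    return x["suit"] in ("hearts", "diamonds")


def implies(a, b):
    # Truth table of implication: A -> B is the same as (not A) or B.
    return (not a) or b


# "For all X, playing_card(X) implies is_red(X)"
for_all = all(implies(playing_card(x), is_red(x)) for x in cards)

# "There exists X such that playing_card(X) and is_red(X)"
there_exists = any(playing_card(x) and is_red(x) for x in cards)

print(for_all, there_exists)  # False True
```

The universally quantified sentence is FALSE under this interpretation because of the single black card, while the existentially quantified one is TRUE.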

The inference rules of Propositional Logic are also retained. However, they are extended to include new rules for quantifiers. Predicate Calculus also allows for functions, which are similar to predicates except that they can return values other than TRUE or FALSE.

A calculus is said to be first order if it allows quantification over terms but not over predicates or functions. First Order Logic is both sound (it is impossible to prove a statement that is not logically valid) and complete (every logically valid statement has a proof). These properties make FOPC an attractive representation scheme.

Deduction and Term Rewriting:

This is a form of reasoning where predicate calculus expressions are replaced by new ones using logical equivalences. For example

X pupil(X)

latin(X)

X

pupil(X)

Irish(X). can be replaced by the

[latin(X)

Irish(X)] using the equivalence A → B ≡ ¬A ∨ B.

Resolution.

Resolution theorem proving works by showing that the negation of the theorem to be proved cannot be true. This is called proof by refutation.

To use resolution, the axioms involved in the proof must be expressed in a logic form called clause form. Logic sentences in clause form contain no quantifiers, implications or conjunctions.

Clause 1: Not(Hairy(X)) Or Dog(X)

is an example of a sentence in clause form.

Resolution theorem proving works by producing resolvents.

Two clauses can be resolved to produce a new clause (i.e. their resolvent) if one clause contains the negation of a literal in the other clause.

The resolvent consists of the literals of both clauses less the opposing literals.

For example, the clause

Clause 2: Hairy(X)

could be resolved with Clause 1 to produce the resolvent Dog(X).

It might be that two literals are not quite direct opposites but only can be matched by appropriate substitutions. Finding appropriate substitutions is called unification and the substitution is called a unifier.

For example, if clause 2 were Hairy(Y) then it could only be resolved with clause 1 using the unifier {Y / X}, i.e. Y replaced by X.

To illustrate resolution theorem proving we use clause 1 and the latter version of clause 2, i.e. Hairy(Y).

The theorem we want to prove is that X is a dog, i.e. Dog(X). So we negate this clause to produce clause 3, Not(Dog(X)).

Resolving clause 1 and clause 2 using the unifier {Y / X} we get clause 4, Dog(X).

Adding this and clause 3 to the set of clauses we get:

Clause 1: Not(Hairy(X)) Or Dog(X).

Clause 2: Hairy(Y).

Clause 3: Not(Dog(X)).

Clause 4: Dog(X).

Now, all these clauses are supposed to be true, but we have a contradiction between clause 3 and clause 4, so the negation of the theorem, i.e. clause 3, is inconsistent with the original set of clauses. Therefore we can conclude that the theorem is proved.
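The refutation above can be traced with a small Python sketch. It is a simplification rather than a full theorem prover: literals are assumed to have flat argument lists (no nested function terms), variables are assumed to be the names starting with an upper-case letter, and the clauses are not renamed apart before resolving. The tuple encoding and the function names are hypothetical.

```python
def is_var(term):
    # Assumption for this sketch: variables start with an upper-case letter.
    return isinstance(term, str) and term[:1].isupper()


def walk(term, subst):
    # Follow the substitution until the term is no longer bound.
    while term in subst:
        term = subst[term]
    return term


def unify_args(args1, args2):
    """Return a unifier (dict) for two flat argument tuples, or None."""
    if len(args1) != len(args2):
        return None
    subst = {}
    for a, b in zip(args1, args2):
        a, b = walk(a, subst), walk(b, subst)
        if a == b:
            continue
        if is_var(a):
            subst[a] = b
        elif is_var(b):
            subst[b] = a
        else:
            return None  # two different constants cannot be matched
    return subst


def substitute(literal, subst):
    positive, predicate, args = literal
    return (positive, predicate, tuple(walk(a, subst) for a in args))


def resolve(c1, c2):
    """Return every resolvent of two clauses (frozensets of literals)."""
    resolvents = []
    for l1 in c1:
        for l2 in c2:
            # Opposing literals: same predicate, opposite sign.
            if l1[0] != l2[0] and l1[1] == l2[1]:
                subst = unify_args(l2[2], l1[2])
                if subst is not None:
                    rest = (c1 - {l1}) | (c2 - {l2})
                    resolvents.append(
                        frozenset(substitute(l, subst) for l in rest))
    return resolvents


# A literal is (is_positive, predicate_symbol, arguments).
clause1 = frozenset({(False, "Hairy", ("X",)), (True, "Dog", ("X",))})
clause2 = frozenset({(True, "Hairy", ("Y",))})
clause3 = frozenset({(False, "Dog", ("X",))})  # negated theorem

clause4 = resolve(clause1, clause2)[0]         # Dog(X), using {Y / X}
contradiction = frozenset() in resolve(clause3, clause4)
print(clause4, contradiction)
```

Resolving clause 3 with clause 4 leaves the empty clause, which is how the contradiction shows up in this encoding.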

Summary

Logic is a natural way to express certain notions. The expression of a problem in logic corresponds to our intuitive understanding of a domain. This gives a dimension of clarity to the representation.

Another advantage of Logic is that it is precise. There are standard methods of establishing semantics of an expression in a logical scheme.

Incorporating knowledge into a system is a long and changeable process. It is important that modifications be easily made to the knowledge base. In this respect the modularity and flexibility of logic represents a significant advantage.

The major disadvantage of logic is that proofs for any real problems tend to be computationally infeasible. So its reasoning power is limited by practical constraints.

Its main weakness as an expressive representation scheme is its failure to adequately represent time or the higher order concepts needed in analogical reasoning, generalization and learning.

The following are the major kinds of reasoning employed with Predicate Calculus

Procedural Representation.

Logic is what is known as a declarative representation, in that it expresses (declares) knowledge without specifying how it is to be used. Opponents of the declarative approach adhere to the notion of procedural representation, where knowledge is intrinsically bound up in the routines and procedures which use it. These procedures and routines know how to do a particular task which would be regarded as intelligent.

A landmark Artificial Intelligence system which uses procedural knowledge is Winograd's famous blocks world system SHRDLU. The knowledge of this system is represented in the PLANNER procedural language. The procedures of SHRDLU know how to recognize other instances of a specific concept, the status of that concept in a given sentence and such things as the conditions in which that concept exists and the consequence of that existence.

The major advantage of procedural representation schemes is their ability to use knowledge. These systems have a marked directness in their approach to problem solving. They solve problems directly without wasting much time searching the problem space. Consequently they are usually much more efficient in dealing with the problems to which they are applied than other representations.

Procedural representation lends itself to encoding using common programming languages. This avoids the need for system developers to concern themselves with a whole battery of difficult issues from theorem proving to problem space traversal.

Hence the development process tends to be quicker and the skills pool inclusive of a wider range of professionals.

Two major problems which arise in a procedural approach concern completeness and consistency. Many procedural systems are not complete, meaning there are cases in which a system could know all the facts required to reach a certain conclusion but not be powerful enough to make the required deductions.

Secondly, a deductive system is consistent if all its deductions are correct. However, the use of default reasoning with procedural representation can introduce inconsistencies into the deductive process.

However, completeness and consistency are not always fully desirable in AI systems, because we humans often work with incomplete knowledge and are willing to make exceptions in certain cases.

Modularity is another feature that is sacrificed in procedural representation. It is not easy to modify procedural knowledge because it is usually intrinsically bound up in very complicated code.

The flow of execution of a procedural system is often unclear, and as such it becomes quite difficult to chart the development of a solution to a particular problem. Because of this it is often difficult to explain the knowledge and reasoning that went into making a particular decision. Many applications, e.g. expert systems, require explanations, so procedural knowledge is at a big disadvantage in this respect.

The pros and cons of the declarative versus procedural approaches were the substance of one of the great debates in AI. However, this row gave the whole concept of Knowledge Representation huge impetus and led many researchers to focus their attention on the problem.

Finally more recent research efforts tend to combine the best aspects of both approaches.

Semantic Nets

The ideas of association, relationships, inheritance and so on are central to the concept of knowledge. Semantic networks are a popular scheme which elegantly reflects these ideas.

A network consists of nodes representing objects, concepts and events, and links between the nodes representing their interrelations. Taking the example "Birds have wings", a typical semantic network representation joins a node for birds to a node for wings by a link expressing the "have" relation.

The development of semantic networks had its origins in psychology. Ross Quillian in 1968 designed two semantic network based systems that were intended primarily as psychological models of associative memory.

Semantic networks quickly found application in AI. B. Raphael's SIR system, also 1968, was one of the first programs to use this type of representation scheme. SIR was a question answering system and could answer questions requiring a variety of simple reasoning tasks and relationships.

Semantic networks are a good example of how psychological ideas and methods can have application in AI. The node and link structure captures something essential about symbols and pointers in symbolic computation and also about association in the psychology of memory. The semantics of net structures, however, depends solely on the program that manipulates them, and there are no fixed conventions about their meaning. A wide variety of network based systems have been implemented that use totally different procedures for making inferences.

One example of a network reasoning procedure was Quillian's "spreading activation" method. He wanted to make inferences about a pair of concepts by finding connections between their nodes. The method begins with the two nodes and activates all nodes connected to each of them. Then all nodes connected to each of the newly activated nodes are activated, forming an expanding sphere of activation around each of the original concepts. When some concept is activated simultaneously from two directions, a connection is established.
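Spreading activation can be read as a search that expands outward from both concepts in turn until the two spheres of activation meet. The following is a minimal sketch of that reading, not Quillian's actual program; the network contents and the function name spreading_activation are made up for illustration, and links are treated as undirected and unlabelled.

```python
def spreading_activation(graph, start_a, start_b):
    """Expand activation from both nodes, one layer at a time.

    graph: dict mapping each node to the list of nodes it is linked to.
    Returns the first node activated from both directions, or None.
    """
    if start_a == start_b:
        return start_a
    activated = {start_a: {start_a}, start_b: {start_b}}
    frontier = {start_a: [start_a], start_b: [start_b]}
    while frontier[start_a] or frontier[start_b]:
        for origin, other in ((start_a, start_b), (start_b, start_a)):
            next_frontier = []
            for node in frontier[origin]:
                for neighbour in graph.get(node, ()):
                    if neighbour in activated[origin]:
                        continue
                    activated[origin].add(neighbour)
                    if neighbour in activated[other]:
                        return neighbour  # the two spheres have met
                    next_frontier.append(neighbour)
            frontier[origin] = next_frontier
    return None


# Hypothetical network; each link is listed in both directions.
graph = {
    "canary": ["bird"],
    "bird": ["canary", "animal", "wings"],
    "animal": ["bird", "fish"],
    "fish": ["animal", "fins"],
    "wings": ["bird"],
    "fins": ["fish"],
}

meeting_point = spreading_activation(graph, "canary", "fish")
print(meeting_point)  # animal
```

The node where the two activations meet ("animal" in this toy network) is the connection between the two concepts.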

A more common reasoning procedure is based on matching network structures. A network fragment is constructed representing a sought-for object and then matched against the network database to see if such an object exists. Variable nodes in fragments are bound in the matching process to the values they must have to make the match perfect. The matcher can make inferences during the matching process to create a structure not explicitly present in the network.

One of the major problems with the early semantic networks was that there was no distinction between individuals and classes of objects. Some things are meant to apply to all in the class and others only to some. There are a number of ways of dealing with this problem. One is to have distinct nodes to represent classes and individuals respectively. This approach is used by Fahlman in his NETL system to represent real world knowledge.

Another solution, due to Hendrix, is that of partitioned semantic networks. Nodes are put into partitions. Some partitions represent the general situation and others one specific instance of a concept within its boundaries.

The great advantage of semantic nets is of course that important associations can be made explicitly and succinctly. Relevant facts about nodes can be inferred from other nodes to which they are linked. The visual aspect of semantic net graphs is another great advantage and is one reason why they have been adopted by the Object Oriented community as a design notational tool e.g. the Object Model in OMT.

The ease with which knowledge can be added to or deleted from a network is dependent on the knowledge itself. For example, removing a node from the network might also result in the removal of relationships which are vital to another node. However, the ease with which networks can be graphically represented should make it easier to see the consequences of adding or deleting a piece of knowledge.

One of the big disadvantages of this representation scheme is that there are no standard conventions about the formal interpretation of semantic networks. Because of this inferences drawn by manipulating the net are not assuredly valid in the sense that they are assured in logic based systems.

Computational problems can arise when network databases become large enough to represent non-trivial amounts of knowledge.

Frames:

The representation of knowledge about objects and events typical to specific situations has been the focus of research into a concept called frames. Frames were originally proposed in 1975 by Minsky as a basis for understanding visual perception, natural language dialogues and other complex behaviours.

A frame is a composite data structure which consists of a number of slots corresponding to various aspects of the object being represented. For example, slots in the frame could contain information such as:

1: Frame identification information.

2: The relationship of this frame to other frames.

3: Descriptors of the requirements for a frame match. For example, the chair frame might specify a flat base on four vertical supports with a back support at 90 degrees to the base.

4: Procedural information on the use of the structure, held in procedures called demons.

5: Frame default information. For example, by default a chair has four legs.

6: New instance information. Many frame slots are left unspecified until a particular instance of the structure is identified. For example, the colour of the chair might not be filled in until a particular instance is identified.

Frames support inheritance. Class types are represented by frames which omit the detail of objects of the class. For example, the frame representing a three legged chair object could inherit the slots of a class frame representing an abstract chair and augment these with its own particular detail to describe the new object.

The big problem with frames is controlling their invocation, particularly when a number of them are applicable to a situation. For example, in the sentence "The man broke a leg when he fell onto the chair", would the man frame or the chair frame take precedence?

Frames, then, are data structures for representing composite entities. Attached to each frame are several different kinds of knowledge. Some knowledge is about how to use the frame, some is about the different elements of the frame, some is about what to expect in given situations and some is about what to do if the specified conditions do not materialise.

Frames contain a representation mechanism called a slot. Knowledge which is relevant to part of a given situation can be entered into a slot. Thus the composite pieces of knowledge that go to make up the larger entity can be represented as slots within the larger structure of a frame.

The top levels of a frame are fixed and represent things that are always true about a supposed situation. The top levels therefore give a high level abstract view of the situation. Only the details are missing.

The lower levels have many slots that must be filled by specific instances or data. That is, the details of the specific situation can be added at these levels.

The slot mechanism is a modular concept. New knowledge pertaining to a frame can be added simply by creating new slots. Conversely, redundant knowledge may be deleted by removing slots from a frame.

The idea of having fixed higher and variable lower levels is entirely suitable for representing different versions of the one context. This is so because different instances of the same context differ only in detail. For example any room has walls, a ceiling and a floor but one instance of a room differs from another in what it contains and how these contents are arranged i.e. in the specific details of that room.

Many everyday situations are stereotyped or conform to a familiar pattern. Waking or opening a door are examples of stereotyped situations. Most of the events associated with these situations are expected. The slots of a frame are therefore filled with default values to mirror these expected events. However, if we are trying to represent new or uncertain situations then default values tend not to work very well. More sophisticated inference is generally needed.

The notion of using stereotypes would reflect thinking among psychologists that we use previous experiences to deal with situations that we have never met before.

Procedures called demons can be attached to slots of a frame. Thus algorithmic knowledge can be incorporated into a frame based system. One interesting function of these demons is to activate other appropriate frames.
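The slot, default, inheritance and demon mechanisms described above can be sketched as a small Python class. This is an illustration under simplifying assumptions (single inheritance, one demon per slot, demons run only when a slot is filled); the class Frame and its method names are hypothetical and not taken from any particular frame system.

```python
class Frame:
    """A frame with named slots, an optional parent frame and demons."""

    def __init__(self, name, parent=None, **slots):
        self.name = name
        self.parent = parent
        self.slots = dict(slots)
        self.demons = {}  # slot name -> procedure run when the slot is filled

    def get(self, slot):
        # Look in this frame first, then inherit from the parent chain.
        frame = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        return None

    def add_demon(self, slot, procedure):
        self.demons[slot] = procedure

    def fill(self, slot, value):
        self.slots[slot] = value
        # Run the nearest demon attached to this slot, if any.
        frame = self
        while frame is not None:
            if slot in frame.demons:
                frame.demons[slot](self, value)
                break
            frame = frame.parent


# Class frame with default values.
chair = Frame("chair", legs=4, has_back=True)

# A more specific frame overrides one default and inherits the rest.
three_legged_chair = Frame("three_legged_chair", parent=chair, legs=3)

# An instance whose colour slot is left unfilled until it is identified.
my_chair = Frame("my_chair", parent=chair)

log = []
chair.add_demon("colour", lambda frame, value: log.append(
    f"{frame.name} is now {value}"))
my_chair.fill("colour", "red")

print(three_legged_chair.get("legs"), my_chair.get("legs"), log)
```

Here the demon only records a message; in a fuller system it could equally activate another frame, as described above.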

There are three major problems involved in the use of frames.

1: When should a frame be activated i.e. when does it become relevant.

2: When should a frame be de-activated i.e. when does it become irrelevant.

3: What happens if a situation arises in which no frames are relevant.

When Minsky first published his ideas on frames, it started a flurry of research into their use.

A side development, but one that is very similar, is that of Object Oriented programming paradigms, a huge area in its own right.

Modern AI research is considering the notion of flavors, i.e. a frame based structure which introduces a concept by presenting a stereotypical flavor of it. One major application Minsky proposed for frame based structures is the idea of scripts.

Scripts

A script is a structured representation describing a stereotyped sequence of events in a particular context. The script was originally designed by Schank and Abelson (1977).

A typical script is the restaurant script. The customer recognizes the restaurant by a sign on the door. They are met at the door and directed to an appropriate table by the waiter. They are brought menus. They order the meal, which is brought by the waiter. They eat the meal, pay and leave.

This is a fairly typical scenario and comfortably describes the average trip to a restaurant.

To represent scenarios like this a script has a number of components.

1: Entry Conditions, or descriptors of the world that must be true for the script to be called. In a restaurant script these might be, for example, a restaurant that is open and a customer who is hungry.

2: Results or facts that are true when the script has terminated, for example the customer is full and the money has been paid.

3: Props, or the things that make up the content of the script, including the cast, for example menu, money, tables, waiters, customers and so on.

4: Roles are the actions that the individual participants perform, for example the waiter shows the customer to their seat, brings the menu and food and accepts the money at the end.

5: Scenes. These provide a temporal partitioning of the script, for example in the restaurant the scenes and their order might be: entering, ordering, eating, paying, leaving.

Scripts can be used to answer questions about particular situations. For example, it would be easy to answer questions like "Who brought the food?", "With what did the customer pay?" etc.

They can be used to resolve referential ambiguities because each prop has a specific role.
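The five components listed above map naturally onto a record structure. The sketch below is one possible encoding of the restaurant script, assuming roles are stored as a mapping from participant to the actions they perform; the class Script, its field names and the two query methods are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Script:
    name: str
    entry_conditions: list
    results: list
    props: list
    roles: dict   # participant -> list of actions that participant performs
    scenes: list  # scene names in temporal order

    def who_performs(self, action):
        # Answer "who did X?" by looking the action up in the roles.
        for participant, actions in self.roles.items():
            if action in actions:
                return participant
        return None

    def scene_after(self, scene):
        # Use the temporal ordering to say what is expected next.
        i = self.scenes.index(scene)
        return self.scenes[i + 1] if i + 1 < len(self.scenes) else None


restaurant = Script(
    name="restaurant",
    entry_conditions=["restaurant is open", "customer is hungry"],
    results=["customer is full", "money has been paid"],
    props=["menu", "money", "tables"],
    roles={
        "waiter": ["shows customer to seat", "brings menu",
                   "brings food", "accepts money"],
        "customer": ["orders meal", "eats meal", "pays"],
    },
    scenes=["entering", "ordering", "eating", "paying", "leaving"],
)

print(restaurant.who_performs("brings food"))  # waiter
print(restaurant.scene_after("ordering"))      # eating
```

A story that never mentions who brought the food can still be queried, because the script supplies the expected participant.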

Of the disadvantages of scripts the biggest problem is when two or more scripts are applicable to a given situation.

Consider for example the text

"John visited his favourite restaurant on the way to the concert. He was pleased by the bill because he liked Mozart"

In this case, which should take precedence: a concert script or a restaurant script?

Exact meaning can still present problems because of the idiosyncratic ambiguities in English.

For example, the following text will present problems.

"John was eating dinner at his favourite restaurant when a large piece of plaster fell from the ceiling and landed on his date"

Was the date on his plate or was he gazing into her eyes?

Direct Reasoning.

There is a kind of representation scheme called analogical or direct representation. This class of scheme, which includes representations such as maps, models, diagrams and sheet music, can represent knowledge about certain aspects of the world in especially natural ways.

A street map, depicting any town or city, is a typical example of direct representation because a street on the map corresponds in size and orientation to the real street it represents. Also, the distance between any two points on the map corresponds to the distance between the places they represent in the city.

Correspondence is the key requirement in direct representations. There must be a correspondence between the important relations in the representing data structure and the relations in the represented situation.

Direct representations do not represent everything in a given situation. They are only direct with respect to certain things. For example, a street map is direct with respect to location and distance but not usually to elevation.

For some problems direct representation is particularly advantageous. For one, the problem of updating the representation to reflect changes in the world is usually far simpler than in other representations. For example, to add a new town to a map we simply put it in the right place. It is not necessary to state explicitly its distance from the towns that are already there, since distance on the map corresponds to distance in the real world. Another of the advantages of direct representations over their counterparts relates to the difference between observation and deduction.

In some situations observation can be accomplished relatively cheaply in terms of computation using direct representation. For example, it would be easier to see if three points are collinear using the direct representation of a diagram rather than geometric calculations.
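The map example can be mimicked in Python by storing nothing but positions. This is a rough sketch with made-up town names and coordinates; the point it illustrates is that adding a town is a single update, and its distance to every existing town can then be read off rather than stated.

```python
import math

# Hypothetical map: each town is stored only as a position (in km).
town_map = {
    "Ayton": (0.0, 0.0),
    "Beeford": (3.0, 4.0),
}


def distance(a, b):
    # Distance is observed from the representation, never stored as a fact.
    (x1, y1), (x2, y2) = town_map[a], town_map[b]
    return math.hypot(x2 - x1, y2 - y1)


# Adding a new town: just put it in the right place.
town_map["Ceeby"] = (6.0, 8.0)

print(distance("Ayton", "Beeford"))  # 5.0
print(distance("Ayton", "Ceeby"))    # 10.0
```

In a purely declarative scheme the same update would need a separate distance fact for each existing town.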

The use of direct representation can facilitate search constraints in the problem situation. Constraints are represented by constraints on the types of transformations applied to the representation so that impossible strategies are rejected immediately.

However the advantages of efficiency and convenience during the actual processing of a problem must be weighed against the problems of setting up a direct representation in the first place.

Direct representation schemes tend to represent specific instances, and there are times when generality is needed. For example, one map might show a town which has a university. However, from that there is no way to say whether this is a property of all towns.

There is the possibility, too, that some features in a direct representation might not hold in the actual situation, and we might not know which ones these are. Mountains on a map, for instance, might be coloured bright yellow. It would be wrong to infer that the actual mountains are this colour. All this is part of the problem of knowing the limits of the representation scheme.

Direct representations become unwieldy when we have to make inferences to fill in gaps in the knowledge. Sometimes we only know indirectly where something is to be entered into a direct representation and need to make complicated inferences to find the exact location. In such cases the power of direct representation is diminished.

To conclude, then, direct representations are useful in some situations only. In others the problems which arise far outweigh the benefits.

Conclusion.

The choice of representation scheme is one of the most important issues concerning any intelligent system. This is so because a bad representation scheme can create many problems in both the design and execution of the system. The following are some general features to look for in a representation scheme.

It is important that the scheme chosen should be suited to the particular problem domain for which the program is designed.

The representation should reflect the nature of knowledge associated with the problem domain and it should be easy to express this knowledge in the representation formalism.

It is required that the representation scheme be modular.

The representation scheme should be flexible enough to represent the many diverse forms of knowledge required in problem solving.

The representation scheme should be mathematically sound and complete to guarantee the veracity of its inferences and its ability to make them.

It is important that the path to a solution be clearly understood for explanation purposes, and the representation scheme should not hinder this.

Efficient traversal of the problem search space is obviously central and the representation scheme should facilitate this.

A system must know how to use its knowledge and the representation scheme must accommodate this.

It may be that one single scheme will not provide all of these for a particular problem domain and a mixture of schemes must be used.

Overview of Knowledge Base Systems including production rule representation.

Production Systems

Introduction

These are a form of knowledge representation which has found widespread application in AI, particularly in the area of Expert Systems. Production systems consist of three parts: a) a rule base consisting of a set of production rules, b) the data, and c) an interpreter which controls the system's activity.

A production rule can be thought of as a condition-action pair. Rules take the form

IF condition holds THEN do action

for example

IF traffic light is red THEN stop car.

A production rule whose conditions are satisfied can fire, i.e. the associated actions can be performed. Conditions are satisfied or not according to what data is currently available.

Existing data may be modified as production rules are fired. Changes in data can lead to new conditions being satisfied. New production rules may then be fired. The decision as to which production rule to fire next is taken by a program known as the interpreter. The interpreter therefore controls the system's decisions and actions and must know how to do this.
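The three parts of a production system can be sketched as follows. This is a toy interpreter, assuming the data is a set of simple facts, each rule adds and deletes facts, and conflicts are settled by firing the first applicable rule in rule-base order; none of these choices is prescribed by the description above, and the rule names are invented.

```python
def run(rules, data, max_cycles=100):
    """Interpreter: repeatedly fire the first applicable rule.

    rules: list of (name, condition, additions, deletions), all sets of facts.
    A rule is applicable when its condition holds in the data and firing it
    would still change the data. Returns the final data and the firing order.
    """
    data = set(data)
    fired = []
    for _ in range(max_cycles):
        for name, condition, additions, deletions in rules:
            would_change = (not additions <= data) or bool(deletions & data)
            if condition <= data and would_change:
                data |= additions
                data -= deletions
                fired.append(name)
                break
        else:
            break  # no rule could fire: stop
    return data, fired


# Rule base: IF condition THEN add some facts and delete others.
rules = [
    ("stop_at_red", {"light_is_red"}, {"car_stopped"}, {"car_moving"}),
    ("go_at_green", {"light_is_green", "car_stopped"},
     {"car_moving"}, {"car_stopped"}),
]

final_data, fired = run(rules, {"light_is_red", "car_moving"})
print(sorted(final_data), fired)
```

With the light red, only the first rule fires; once the car is stopped, no rule changes the data and the interpreter halts.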

Production systems were first proposed in 1943 by Post. Present day systems, however, bear little resemblance to those earlier ones. Newell and Simon in 1972 used the idea of production systems for their models of human cognition, and AI in general has found widespread use for them.

They are a particularly popular form of representation in the area of expert systems. This is because one of an expert's main activities involves proposing suitable actions for particular situations.

There is another important reason for their popularity. In the development of intelligent systems the knowledge being used generally has to be modified many times as the understanding of the problem domain grows. Since each rule can represent a distinct piece of knowledge, rules can be added to or deleted from the knowledge base with considerable ease. This ease of modification and modularity is an advantage in any representation scheme.

Another general attribute of production systems is the uniform structure imposed on the knowledge in the rule base. Since all knowledge is encoded in the rigid form of production rules it can often be more easily understood by another person than is the case with the freer form of semantic nets and procedures.

The biggest disadvantage of production rules is that they are inefficient. There are high overheads involved with matching current data with the conditions of a rule.

With large systems especially it has been the experience that they run very slowly.

A second major disadvantage is that it is hard to follow the flow of control in problem solving. That is, although situation - action knowledge is expressed naturally in production rule form, algorithmic knowledge is not.

Davis and King (1977) proposed the following criteria for problem domains where production rules are suitable.

1: Domains in which knowledge is diffuse, consisting of many different strands of knowledge, rather than domains based on a unified theory like Physics.

2: Domains in which processes can be represented as a set of independent actions, as opposed to domains with dependent subprocesses.

3: Domains in which knowledge can be easily separated from the manner in which it is to be used as opposed to cases where representation and control are merged.

Knowledge Based Systems.

This section provides a brief introduction to knowledge based systems in general and in particular to other knowledge based systems used in program construction.

Knowledge Base systems are intended to perform tasks which require some specialized knowledge and reasoning. Medical diagnosis, geological analysis, and chemical compound identification are examples of tasks to which Knowledge Base systems have been applied.

Knowledge Base systems are often called expert systems because the problems in their application domain are usually solved by human experts. For example medical diagnosis is usually performed by a doctor.

Knowledge Base systems consist of four major parts: the Knowledge Base, the Inference Engine, the User Interface and the Explainer.

The knowledge to which the Knowledge Base system has access is stored in the Knowledge Base (hence the name).

The Inference Engine is the part of a Knowledge Base system which is responsible for using its knowledge in a productive way. The Knowledge Base system's reasoning mechanisms are built into the Inference Engine. Most Knowledge Base systems employ deductive reasoning mechanisms.

The Knowledge Base system communicates with the user through the User Interface.

In many applications the Knowledge Base system is required to explain its reasoning to the user. This is particularly true in situations such as the identification of chemical structures where new results must be verified.

Obviously the knowledge to which a Knowledge Base system has access is no good to it if it cannot use it to solve the problems in the application domain.

Therefore the system's knowledge must be represented in a form which can be manipulated by the reasoning mechanisms of the Inference Engine.

In many Knowledge Base systems most of the Knowledge is represented as production rules.

These are logical sentences of the form "If A then B", which are often referred to as condition-action pairs. A is the condition which must hold before action B can be applied to a given problem. Because standard mechanical reasoning techniques like resolution can be applied to production rules, their use as a knowledge representation scheme is widespread.
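As a rough illustration of condition-action pairs, the sketch below fires "If A then B" rules over a set of known facts until nothing new can be added. It is a minimal sketch only: the function name `forward_chain`, the rule encoding and the facts A to D and X are all hypothetical, and forward chaining is just one possible control strategy, chosen here for brevity rather than because any particular system uses it.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is a (conditions, conclusion) pair: IF all conditions hold THEN conclusion.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(rules: List[Rule], facts: Set[str]) -> Set[str]:
    """Fire rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when every condition is already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rule base: A -> B, B -> C, (C and X) -> D.
rules = [
    (frozenset({"A"}), "B"),
    (frozenset({"B"}), "C"),
    (frozenset({"C", "X"}), "D"),
]
derived = forward_chain(rules, {"A"})
print(sorted(derived))
```

Note that the third rule never fires here because X is not known, which shows how each rule stays an independent piece of knowledge that only acts when its own condition holds.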

The User Interface is also a very important part of the Knowledge Base system.

There are a number of reasons why a Knowledge Base system needs to communicate with the user.

Often it is necessary to extract information about the specific task to be performed from the user. For example, if a medical diagnosis is to be made the user must supply details of the patient's symptoms.

The system must also present whatever results it produces to the user.

Many Knowledge Base systems are semi-automated rather than fully automated. They need the user to guide their reasoning to some extent. For example, the Knowledge Base system might ask the user to choose between a number of possible options in circumstances where conflicts arise in the problem solving process.

While the Knowledge Base, the Inference Engine and the User Interface are essential components, in many Knowledge Base systems there are also facilities to help in the acquisition of new knowledge. TEIRESIAS, [Davis '76], which is used in association with MYCIN, [Shortliffe '76, Davis '76], is an example of such a system. It elicits high level information from the user which it converts into structured knowledge for its Knowledge Base. It also performs consistency checks on the updated knowledge base.

The ability to acquire new knowledge is important since the amount of knowledge to which a system has access determines the range of problems which it can solve.

Applications of Knowledge Base systems

Knowledge Base systems have been applied to many diverse problem domains, such as the following.

Diagnostic Aids such as MYCIN, [Shortliffe '76, Davis '76], which diagnoses bacterial blood infections, and PUFF, [Kunz et al '78], which diagnoses pulmonary disorders.

Aids to Design and Manufacture such as R1, [McDermott '82], which configures computers.

Teaching Aids such as SCHOLAR [Carbonell '70], which gives Geography tutorials, and SOPHIE, [Brown et al '82], which teaches how to detect breakdowns in electrical circuits.

Problem Solving

Recognition of forms, e.g. DENDRAL, [Buchanan and Feigenbaum '78, Lindsay et al '80], which recognizes the structures of chemical compounds.

Robotics, e.g. SHRDLU, [Winograd '73], which manipulates blocks in a restricted environment.

Game playing systems such as Waterman's Poker Player, [Waterman '70], and automatic theorem provers such as AM, [Lenat '82].

[Hayes-Roth et al '83, Handbook A.I. '82, Waterman '86] describe some more categories than those mentioned above. These include Planning systems such as NOAH, [Sacerdoti '75] and MOLGEN, [Friedland '75], and Prediction systems such as Political Forecasting Systems, [Schrodt '86], based on the Holland Classifier, [Holland '86].

MYCIN was a joint venture between the Department of Computer Science and the Medical School of Stanford University.

Much of the work took place in the 1970s.

MYCIN was designed to solve the problem of diagnosing and recommending treatments for meningitis and bacteremia (blood infections).

Knowledge Representation in MYCIN.

MYCIN is a rule-based expert system which employs probabilistic reasoning. Because of this, the rules in MYCIN take the following form:

Premise : Consequence

The consequence is given a probabilistic value which is a measure of the certainty of the consequence given the associated premise.

Inference Engine.

Because probabilistic rule-based reasoning is used, the basis of inference is an extension of the modus ponens idea to include certainty discrimination.

That is, given two rules A -> B and B -> C then C is considered to be a consequence of A within acceptable levels of certainty if its computed probability exceeds a given threshold.

The combined probability of rules is computed using Bayes theorem. It provides a way of computing the probability of a hypothesis following from a particular piece of evidence, given only the probability with which the evidence follows from its actual causes (hypotheses).

Bayes theorem:

P(Hi|E) = (P(E|Hi) * P(Hi)) / SUM over k = 1..n of (P(E|Hk) * P(Hk))

where P(Hi|E) is the probability that Hi is true given evidence E.

P(Hi) is the probability that Hi is true overall.

P(E|Hi) is the probability of observing evidence E when Hi is true.

n is the number of possible hypotheses.
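The quantities just defined can be turned into a small calculation: multiply each prior P(Hi) by the likelihood P(E|Hi), then divide by the sum of those products over all n hypotheses. The sketch below does exactly that. The function name `posterior` and the three prior and likelihood values are made up purely for illustration and do not come from MYCIN.

```python
from typing import List


def posterior(priors: List[float], likelihoods: List[float]) -> List[float]:
    """Return P(Hi|E) for every hypothesis, given P(Hi) and P(E|Hi)."""
    if len(priors) != len(likelihoods):
        raise ValueError("one likelihood is needed per hypothesis")
    # Numerators of Bayes theorem: P(E|Hi) * P(Hi).
    joint = [p * l for p, l in zip(priors, likelihoods)]
    # Denominator: the sum of the numerators over all n hypotheses.
    total = sum(joint)
    if total == 0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return [j / total for j in joint]


# Hypothetical values for n = 3 competing hypotheses.
priors = [0.5, 0.3, 0.2]
likelihoods = [0.1, 0.6, 0.3]
post = posterior(priors, likelihoods)
print([round(p, 3) for p in post])
```

Because the denominator is shared, the resulting posteriors always sum to 1, which is a quick sanity check on any set of inputs.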

Certainty theory makes some simple assumptions for creating confidence measures and has some equally simple rules for combining these confidences as the program moves towards its conclusions. The first assumption is to split confidence for from confidence against.

Call MB(H|E) the measure of belief of a hypothesis H given evidence E.

Call MD(H|E) the measure of disbelief of a hypothesis H given evidence E.

Now either:

1 > MB(H|E) > 0 while MD(H|E) = 0

or

1 > MD(H|E) > 0 while MB(H|E) = 0

The two measures constrain each other in that a given piece of evidence is either for or against a particular hypothesis. This is an important difference between certainty theory and probability theory. Once the link between measures of belief and disbelief has been established, they may be tied together again with the certainty factor calculation:

CF(H|E) = MB(H|E) - MD(H|E)

As the certainty factor CF approaches 1 the evidence is stronger for a hypothesis; as CF approaches -1 the confidence against the hypothesis gets stronger; and a CF around 0 indicates that there is little evidence either for or against the hypothesis.

When experts put together the rule base they must agree on a CF to go with each rule.

This CF reflects their confidence in the rule's reliability. Certainty measures may be adjusted to tune the system's performance, although slight variations in this confidence measure tend to have little effect on the overall running of the system.

The premises for each rule are formed of the AND and OR of a number of facts.

When a production rule is used, the certainty factors that are associated with each condition of the premise are combined to produce a certainty measure for the overall premise in the following manner.

For P1 and P2 premises of the rule:

CF(P1 AND P2) = MIN(CF(P1),CF(P2))

CF(P1 OR P2) = MAX(CF(P1),CF(P2))

The combined CF of the premises using the above combining rules is then multiplied by the CF of the rule to get the CF for the conclusions of the rule.
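A minimal sketch of these premise-combining rules follows. The helper names `cf_and`, `cf_or` and `conclusion_cf` are hypothetical, as are the CF values and the shape of the example rule; only the MIN, MAX and multiplication steps come from the text.

```python
def cf_and(*cfs: float) -> float:
    """CF of a conjunction: the weakest condition limits the whole premise."""
    return min(cfs)


def cf_or(*cfs: float) -> float:
    """CF of a disjunction: the strongest condition carries the premise."""
    return max(cfs)


def conclusion_cf(premise_cf: float, rule_cf: float) -> float:
    """Scale the premise CF by the CF the experts attached to the rule."""
    return premise_cf * rule_cf


# Hypothetical rule: (P1 AND P2) OR P3 => conclusion, with rule CF 0.8.
premise = cf_or(cf_and(0.9, 0.6), 0.4)
result = conclusion_cf(premise, 0.8)
print(premise, round(result, 2))
```

Taking the minimum for AND means a rule is never more certain than its least certain condition, which is why a single weak fact can hold back an otherwise well supported conclusion.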

One further measure is required: how to combine multiple CF's when two or more rules support the same result R. This is the certainty theory analog of the probability theory procedure of multiplying the probability measures to combine independent evidence. By using this rule repeatedly one can combine the results of any number of rules that are used for determining result R. Suppose CF(R1) is the present certainty factor associated with result R and a previously unused rule produces result R (again) with CF(R2); then the new CF of R is calculated by:

CF(R1) + CF(R2) - (CF(R1)*CF(R2)) when CF(R1) and CF(R2) are positive.

CF(R1) + CF(R2) + (CF(R1)*CF(R2)) when CF(R1) and CF(R2) are negative.

(CF(R1) + CF(R2)) / (1 - MIN(|CF(R1)|, |CF(R2)|)) otherwise, where |X| is the absolute value of X.

Besides being easy to compute, these equations have other desirable properties. First, the combined CF's always lie between -1 and 1. Second, the result of combining contradictory CF's is that they cancel each other out, as would be desired. Finally, the combined CF measure is a monotonically increasing function in the manner one would expect for combining evidence.
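The three cases for combining two certainty factors for the same result can be sketched as a single function. The name `combine_cf` and the sample values are illustrative assumptions. The guard against combining +1 with -1 is also an addition of this sketch, since the mixed-sign formula would otherwise divide by zero.

```python
def combine_cf(cf1: float, cf2: float) -> float:
    """Combine two certainty factors that support the same result R."""
    if cf1 > 0 and cf2 > 0:
        # Both rules support R: confidence grows but stays at or below 1.
        return cf1 + cf2 - cf1 * cf2
    if cf1 < 0 and cf2 < 0:
        # Both rules oppose R: confidence against grows but stays at or above -1.
        return cf1 + cf2 + cf1 * cf2
    # Mixed signs (or a zero): the weaker factor discounts the stronger one.
    denominator = 1 - min(abs(cf1), abs(cf2))
    if denominator == 0:
        raise ValueError("cannot combine total belief with total disbelief")
    return (cf1 + cf2) / denominator


print(combine_cf(0.5, 0.5))    # two supporting rules
print(combine_cf(-0.5, -0.5))  # two opposing rules
print(combine_cf(0.4, -0.4))   # equal and opposite evidence
```

Applying the function repeatedly, feeding each result back in as `cf1`, combines any number of rules that bear on the same result.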

Example

Consider the following rule:

Rule 1:(car_red or car_big (0.7)) and car_4_door (0.3) => car_sedan (0.5)

Now one rule produces car_red with CF (+0.3) and another produces car_red with CF (-0.2). What is the CF of the conclusion of Rule 1 given this scenario?

CF ~ Certainty Factor.

CF of car_red = (0.3 + (-0.2)) / (1 - MIN(|0.3|, |-0.2|)) = 0.1 / (1 - 0.2) = 0.1 / 0.8 = 0.125

Adding this to Rule 1 we get

Rule 1:(car_red (0.125) or car_big (0.7)) and car_4_door (0.3) => car_sedan (0.5)

Combining CF's

CF of (car_red (0.125) or car_big (0.7)) = Max (0.7, 0.125) = 0.7

CF of (car_red (0.125) or car_big (0.7)) and car_4_door (0.3) =

Min(0.7, 0.3) = 0.3

Finally, the CF of the conclusion of Rule 1 is 0.3 * the rule's CF for car_sedan = 0.3 * 0.5 = 0.15

Answer 0.15.
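The arithmetic of this example can be checked end to end with a few lines of code. This is only a sketch: the variable names mirror the facts in Rule 1, while `combine_cf` is a hypothetical helper implementing the three-case combining rule for two CF's that support the same result.

```python
def combine_cf(cf1: float, cf2: float) -> float:
    """Combine two certainty factors that were produced for the same fact."""
    if cf1 > 0 and cf2 > 0:
        return cf1 + cf2 - cf1 * cf2
    if cf1 < 0 and cf2 < 0:
        return cf1 + cf2 + cf1 * cf2
    return (cf1 + cf2) / (1 - min(abs(cf1), abs(cf2)))


# Two rules produced car_red, one for (+0.3) and one against (-0.2).
car_red = combine_cf(0.3, -0.2)
car_big = 0.7
car_4_door = 0.3
rule_cf = 0.5  # CF attached to the conclusion car_sedan in Rule 1

# (car_red OR car_big) AND car_4_door: MAX for OR, MIN for AND.
premise = min(max(car_red, car_big), car_4_door)
car_sedan = premise * rule_cf

print(round(car_red, 3), premise, round(car_sedan, 2))
```

Because car_4_door has the lowest CF in the conjunction, the conflicting evidence about car_red has no effect on the final answer in this particular scenario.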

TEIRESIAS

In order for an expert system to be an effective tool people must be able to interact with it easily.

An important aspect of this is the ability to explain any conclusions drawn.

TEIRESIAS is a system that works in association with MYCIN and acts as its explainer.

An important premise underlying TEIRESIAS's approach to providing explanations is that the behaviour of a program can be explained simply by referring to a trace of the rules used in reaching the system's ultimate conclusion.

For example, if the rules

If A then B,

If B then C and

If C then D

were used in coming to conclusion D, then TEIRESIAS would return A, B, C as its explanation.
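The idea of explaining a conclusion by tracing back through the rules that fired can be sketched as follows. This is a simplified illustration and not the actual mechanism of the system: the function name `explain` and the dictionary `fired` are hypothetical, and each conclusion is assumed to have been established by a single one-premise rule.

```python
from typing import Dict, List


def explain(conclusion: str, fired: Dict[str, str]) -> List[str]:
    """Trace back from a conclusion and return the premises that led to it."""
    chain: List[str] = []
    seen = {conclusion}
    current = conclusion
    while current in fired:
        current = fired[current]
        if current in seen:
            # Guard against a circular rule trace.
            raise ValueError("rule trace contains a cycle")
        seen.add(current)
        chain.append(current)
    chain.reverse()  # present the chain from first premise to last
    return chain


# Record of rules used: each conclusion maps to the premise that established it.
fired = {"B": "A", "C": "B", "D": "C"}
print(explain("D", fired))
```

A conclusion with no entry in the trace is treated as given information, so asking for its explanation returns an empty chain.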

TEIRESIAS also helps in the acquisition of knowledge.

It does this by asking the user to provide explanations of any new knowledge.

If the user supplies a premise that is in conflict with any premise of MYCIN's knowledge base, TEIRESIAS will present its chain of conclusions in establishing that premise and ask the user to explain the conflict.

The behaviour of TEIRESIAS may be explained somewhat by the following piece of dialogue. In it the system has asked the doctor to supply some missing information.

The Doctor asks why she should.

TEIRESIAS:

Is the patient's illness with Organism-1 a hospital acquired infection?

Doctor: Why?

(i.e. Why do you need to know)

TEIRESIAS:

This will aid in determining the category of Organism-1:

It has already been established that:

(2.1) the gram stain of Organism-1 is gramneg and

(2.2) the morphology of Organism-1 is rod and

(2.3) the aerobicity of Organism-1 is facultative

Therefore if (2.4) the infection with Organism-1 was not acquired while the patient was hospitalized then there is strongly suggestive evidence (.8) that the category of Organism-1 is enterobacteriaceae.

Doctor: How (2.3)?

(i.e. how was it established (2.3) that the aerobicity of Organism-1 is facultative?)

TEIRESIAS:

The following were used:

(3.1) Rule027 indicated that there was strongly suggestive evidence (0.8) that the aerobicity of Organism-1 was facultative

(3.2) Rule033 weakly suggested (0.2) that the aerobicity of Organism-1 was not facultative.

This gives a strong suggestion (0.6) that Organism-1 has a facultative aerobicity.