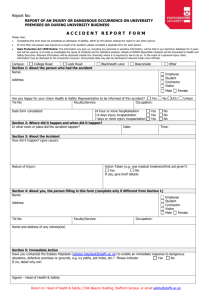

Word Doc.
