Uses of Ensemble Output

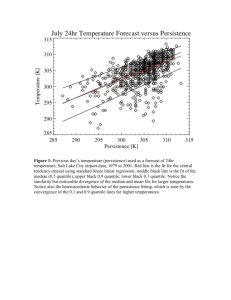

advertisement

STATISTICAL INTERPRETATION METHODS APPLIED TO ENSEMBLE FORECASTS USES AND INTERPRETATION OF ENSEMBLE OUTPUT (Laurence Wilson, Environment Canada) Abstract: It could possibly be argued that ensemble prediction systems (EPS) have been more useful to the modeling and data assimilation community than to the operational forecasting community. There are a few possible reasons for this. First, the expectation that an ensemble system would permit a confidence estimate to be issued with each operational forecast has not been fully realized. Second, EPSs produce enormous quantities of output, which must be further processed before it can be effectively used in operations. Over the years since EPSs became operational, various processing methods have been tried and evaluated, with varying degrees of success. One can also think of many ways of interpreting EPS forecasts for operational use, which have not yet been tried. In this talk various aspects of interpretation of ensemble output will be discussed, focusing on those methods which should be most promising for operational use of ensembles. The discussion will begin with the search for a relationship between the ensemble spread and the skill of the forecast, which is a prerequisite to the ability to predict forecast skill. Then, following a brief review of existing statistical interpretation methods, a survey will be presented of statistical interpretation efforts that have been applied to ensemble forecasts. The presentation will also include some suggestions for new methods of interpretation of ensemble output. 1. Introduction It is probably fair to say that the output of ensemble systems has not been as widely used and accepted in the operational meteorological community as one would have hoped. It can also be said that ensemble systems have been quite useful to numerical weather prediction centers, as an aid to the evaluation of models and especially data assimilation systems. Now that ensembles have been run operationally for about eight years, it is worthwhile to examine the impediments to their operational use, and to search for and identify ways to encourage their use by forecasters, in countries which run ensemble systems as well as in countries which have access to the output of ensemble systems run elsewhere. To help stimulate thinking about the use of ensembles in short and medium range forecasting, this paper proposes reasons for the slow adoption of ensemble output in operations, and discusses by example ways of overcoming these problems. Several examples of the interpretation and processing of ensemble output are discussed. Included are some methods that have already been tried and tested on ensemble output, and some post-processing techniques that have been applied successfully to deterministic forecasts but have not yet been tried with ensemble output. In all cases, the examples have been selected for their potential to facilitate the interpretation by forecasters of the very large volumes of data generated by ensemble systems. 2. Challenges to the use of ensembles in forecasting The output of an ensemble system consists of a set of alternative forecast values of each model variable at each forecast projection time, which is usually interpreted as a representation of the probability distribution of each model variable. As such, ensembles always produce an enormous quantity of data, linearly related to the number of members in the ensemble. This presents the first challenge: How to summarize or otherwise process the data so it can be interpreted and used in operational forecasting, that is, how to process the data so that it yields meaningful information. Since forecasters normally already have available to them a full range of forecast guidance from the full resolution model, and perhaps also from statistical interpretation of that model output, ensemble output is likely to be used only if it is demonstrated that it offers additional value or information over existing products. If, for example, it is shown that ensemble forecasts are superior to the available model output, or it could be shown that the ensemble gives reliable 2 information on the expected skill of the full resolution forecast, then potential users might be persuaded to search the ensemble output for these added benefits. A third challenge to the use of ensembles in forecasting is related to the stochastic nature of the output. Forecaster may not be used to evaluating and using probabilistic forecasts. If the issued forecast products must be deterministic or categorical, the forecaster must convert the probabilistic ensemble output into a categorical forecast. In short, dealing with forecasts in probabilistic format may require some basic change in the forecasting process, which might be resisted, especially if the final forecast is not probabilistic. 3. Presentation and processing of ensemble forecast output for use in operational forecasting There are three general types of products that are produced from ensemble systems: Forecasts of forecast skill or confidence Graphical displays of output Probability forecasts. These are described in this section with examples. 3.1 Forecasts of forecast skill One of the potential benefits of ensemble prediction that was recognized right from the beginning is the ability to use the spread of the ensemble as an indication of the relative confidence in the forecast. It would be expected that a large spread in the ensemble, as represented for example by its standard deviation, would be associated with greater errors in the forecast, either the forecast from the ensemble model or the full resolution version of the model. The search for a strong “spread-skill” relationship has continued for many years, and it has proven somewhat elusive. However, more recent efforts have met with more success and some centers, including the UK Met Office and NCEP, are now issuing confidence factors along with the forecast output. The spread-skill relationship can be represented in various ways and several have been tried in experiments. The spread of the ensemble is usually represented either by the variance or by its square root, the standard deviation. The spread can be taken with respect to the ensemble mean or the unperturbed control forecast. The spread about the control will always be greater or equal to the spread about the ensemble mean. Forecast skill may be expressed in many ways; the most common are the anomaly correlation and the root mean square error (rmse). Probably the most relevant to forecasters would be to compare the skill of the full resolution model with the ensemble spread, but the comparison is often made with the skill of the unperturbed control forecast. The relationship may be examined via a simple scatter plot, or by means of 2 by 2 contingency tables, where the skill and spread are each characterized by above- and below-average categories, and total occurrences are tallied in each of the four categories, 1. high skill-high spread; 2. high skilllow spread; 3. low skill-high spread, and 4. low skill-low spread. In such a format, a strong spreadskill relationship is indicated by large counts in categories 2 and 3, and low counts in categories 1 and 4. While studies of the spread-skill relationship have typically been carried out over relatively large domains, it may well be true that a local spread-skill relationship could be found, even if a more general relationship does not exist. This should be investigated. Figure 1. A plot of ensemble spread in terms of anomaly correlation vs. Skill of the control forecast, for the ECMWF ensemble, August to October, 1995. Forecasts are for day 7 500 mb geopotential height. The different symbols refer to the months of the verified season. (After Buizza, 1997) Figure 1 shows an example of a spread-skill plot graph, for an earlier version of the ECMWF ensemble system. In this example, the data are presented in terms of the anomaly correlation coefficient, and the relationship sought is between the skill of the control forecast and the 3 ensemble spread, both expressed as an anomaly correlation. The figure is graphically divided into four quadrants by the vertical and horizontal lines indicating the mean skill and mean spread respectively. If a strong relationship existed, it would show up as an elongation of the cloud of points in the direction of the positive diagonal on the graph, with more points in the upper right and lower left quadrants of the graph, and fewer points in the upper left and lower right quadrants. This is indeed the case here, but there is also considerable scatter, and the relationship cannot be considered particularly strong. Fig. 2, from a more recent assessment of the spread-skill relationship for the ECMWF ensemble, appears more encouraging; the plotted points for each forecast projection do tend to line up nearly parallel to the diagonal, especially for the longer projections. However, averaging over several cases to obtain each point has eliminated most of the scatter. It is difficult to know from this graph whether one could reliably estimate the skill from the ensemble spread for a particular case. Figure 2. Relationship between the ensemble standard deviation and the control error standard deviation for the ECMWF ensemble forecasts during winter 1996/97. The sample for each 24 h projection is divided into 10 equally populated categories, represented by the points on the graph. (After Atger, 1999a). Although a completely reliable spread-skill relationship has proven somewhat elusive, it remains tempting to use the ensemble output in this way, and at least two centers, the UK Met Office and NCEP in the U.S., regularly give out confidence estimates with their forecasts, based on the ensemble output. More evaluation of the spread-skill relationship is needed, and it would be best if it were done with respect to the operational model, since that is what forecasters already have available. If the ensemble spread is related to the forecast error of the full-resolution model, this information would certainly be of use in operational forecasting. In Canada an experiment has just started to determine a relationship between the ensemble spread and the skill of the operational model, but in terms of surface weather element forecasts. If successful, this information will be used directly to affect the terminology used in our computer-generated worded forecasts. 3.2 Graphical displays of output To help with the interpretation of the distribution of forecasts, ensemble output is frequently displayed in various graphical forms, particularly “postage stamp” maps, “spaghetti” diagrams and “plume” diagrams. Postage stamp maps consist of forecast maps for all of the ensemble members, presented on one page, covering a limited domain of interest, and at a particular level. They most often depict the 500 mb or surface fields, for a particular forecast projection. These present enormous amounts of information, and may be hard to read and interpret, especially if the ensemble has many members. To help with the interpretation of spatial fields from the ensemble, the forecasts may be processed to help group “similar” patterns or, alternatively, identify patterns that are markedly different from the others. Two such processing methods in operational use are clustering and tubing (Atger, 1999b). Clustering involves calculating the differences among all pairs of ensemble members, grouping together those for which differences are small, and identifying as separate clusters those members with larger differences. Tubing involves identifying a central cluster which contains the higher density part of the ensemble distribution, then identifying “outliers” - extreme departures from the centroid of the main cluster. The line joining the centroid of the main cluster and each outlier then defines the axis of a tube, which extends in the direction of the outlier from the main cluster. The tubes may be interpreted as an indication of the directions in which the forecast may differ from the ensemble mode. Whatever the similarities and differences between these two methods of categorizing or grouping the members of the ensemble distribution, the aim is the same: To organize the massive information content of the ensemble to become more easily interpretable by forecasters. Figure 3. An example of a plume diagram, as issued operationally at ECMWF. Panels are for 2 m temperature (top), total precipitation over 12 h periods (middle), and 500 mb heights (bottom). The full resolution forecast, control forecast, ensemble mean and verifying analysis are included on the top and bottom panels. 4 Spaghetti plots represent a sampling of the full ensemble output in a different way. In a spaghetti plot, a single 500 mb contour is plotted for all the ensemble members. Thus the main ridge and trough features of the 500 mb flow can be seen along with the way in which they are forecast by each ensemble member. Areas where there is greater uncertainty immediately stand out as large scatter in the position of the contour. One must be cautious in the interpretation of the chart, however, because large spatial scatter may not be significant when it happens in areas of flat gradient. For this reason, a measure of the ensemble spread such as the standard deviation is usually plotted on the map. Then, areas of large apparent uncertainty in the 500 mb contour forecast can be checked to verify that they also coincide with larger ensemble spread. An example of a spaghetti plot is shown in Wilson (2001a) elsewhere in this volume. Plume diagrams are equivalent to spaghetti plots. Instead of plotting the spatial distribution of the ensemble member forecasts at a particular forecast projection, the distribution of the ensemble forecasts is plotted for a particular location as a function of projection time. Plume diagrams are most often prepared for weather elements such as temperature, for specific stations. For ease of interpretation, the probability density of the ensemble distribution might be contoured on a plume chart. Fig. 3 is an example of a plume diagram as prepared and run operationally at ECMWF, showing plumes for temperature, total precipitation and 500 mb heights for Reading UK. The temperature and 500 mb height graphs are contoured to show probability density in four intervals, and the ensemble mean, control, full resolution forecast and verifying analysis (not the observations) are included. The plumes indicate graphically the increasing ensemble spread with increasing projection time and show clearly where the full resolution forecast lies with respect to the ensemble for a specific location. In the example shown, the mode of the ensemble remains close to the verifying value and the full resolution forecast also agrees. Only once, at about day 5 on the 500 mb forecast, does the verifying value lie outside the plume and therefore outside the ensemble. The precipitation graph clearly shows where there are timing differences among the precipitation events, and facilitates comparison of the ensemble forecast with the full resolution forecast. Figure 4. “Box-and-whisker” depiction of ensemble forecasts of cloud amount (top), total precipitation (2nd from top), wind speed (2nd from bottom) and 2 m temperature (bottom), for Beijing, based on ECMWF ensemble forecasts of January 30, 2001. The control forecast (red) and the full resolution forecast (blue) are also shown. Another format in which single-location ensemble output is presented is shown in Fig. 4. These are “box-and-whisker” plots of direct model output weather element forecasts. The boxes shown at each 6 h indicate the range of values forecast by the ensemble between the 25th and 75th centile (the middle 50% of the distribution), while the median of the ensemble is indicated by the horizontal line in the box. The ends of the lines extending out of both ends of the box (the “whiskers”) indicate the maximum and minimum values forecast by the ensemble. These kinds of plots are an effective graphical way of depicting the essential characteristics of a distribution. Asymmetries and the spread of the distribution can be immediately seen, and variations over the period of the forecast are also immediately apparent. 3.3 Probability forecasts Aside from the ability to predict the skill of the forecast in advance, the most important use of ensemble forecasts is to estimate probabilities of events of particular interest. Specifically, probabilities of the occurrence of severe or extreme weather events are usually of greatest interest. Prior to the advent of ensemble forecasts, the only way to obtain probability forecasts was to carry out statistical interpretation of the output from a model (MOS or Perfect Prog techniques - see below). These statistical methods depend on the availability of enough observational data to obtain stable statistical relationships, which is difficult to achieve for extremes. If the ensemble model is capable of predicting extreme events reliably, and provided the perturbations can represent the uncertainty reasonably well, then it should be possible to obtain reliable estimates of the probability of an extreme event from the ensemble. 5 The procedure usually used for estimating probabilities is straightforward. First, a threshold is chosen to describe the event of interest, and to divide the variable into two categories. This might be “temperature anomaly greater than 8 degrees” for example, where the threshold of (mean + 8) is used to separate temperatures which are extremely warm from all other temperatures. As another example, extreme rainfall events might be delineated by “12h precipitation accumulation greater than 25 mm”. Once the event is objectively defined, the probability may be estimated simply by determining the percentage of the members of the ensemble for which the forecast satisfies the criterion defined by the threshold. Table 1. Suggested distributions to fit ensemble weather element forecasts when the ensemble is small. Weather Element Temperature, geopotential height, upper air temperatures Precipitation (QPF) Distribution Normal Gamma Kappa (cube-root normal) Wind speed Weibull Cloud amount Beta Visibility Lognormal Characteristics Two-parameter, mean and standard deviation Symmetric, bell-shaped Gamma: Two-parameter - "shape and spread"; positively skewed. Applies to variables bounded below, approaches normal when well away from lower bound Kappa: Similar to gamma in form, but not as well known. Cube-root normal: The cube root of precipitation amount has been found to be approximately normally distributed. Two-parameter; negatively skewed; applies to variables bounded below. Two-parameter; a family of distributions including the uniform and U-shaped as special cases. Intended for variables which are bounded above and below. Negatively or positively skewed, depending on parameters. Normal distribution with logarithmic x-axis; applies to positive-definite variables. Figure 5. Schematic of the process of probability estimation from the ensemble. Either the raw ensemble distribution or a fitted distribution may be used to estimate probabilities. Probability estimation is shown schematically in Fig. 5, where the threshold of the variable X is 1 and we are interested in the probability of the event (X>1). Fig. 5 shows a normal distribution; it is usually the empirical distribution of the ensemble values that is used to estimate probabilities. This is preferable, except for small ensembles (perhaps less than 30 members), when fitting a distribution to the ensemble might lead better resolution in the probability estimates. Table 1 indicates theoretical distributions that have been shown to fit empirical climatological distributions of some weather elements quite well. It should be noted that probability estimation represents a form of interpretation of the ensemble forecast. The predicted distribution is sampled at a specific value, then integrated to a single probability of occurrence of the chosen event. Details of the probability distribution are lost in the process. Some of these details can be kept by choosing multiple categories of interest using several thresholds, for example 1,2, 5, 10 and 25 mm of precipitation, and determining probabilities for each category. However, this still amounts to sampling the distribution. If the number of categories becomes a substantial fraction of the ensemble size, e.g. more than 1/5, it is preferable to fit a distribution in order to obtain stable probability estimates. Once probabilities have been estimated for particular events, they can be presented as probability maps. Several examples of such maps are contained elsewhere in this volume. If it is probability of an anomaly 6 over a specific threshold that is plotted, one should be aware of the source of the climatological information, i.e., observations or analyses. 4. Statistical interpretation applied to ensembles Statistical interpretation methods can be applied to ensemble forecasts just as they have been applied to deterministic forecasts from operational models. There are, however, a number of factors that must be considered in choosing the statistical interpretation method. There are also many possibilities for further application. This section describes several statistical processing techniques that have been applied or could be used with ensemble forecasts. Some examples are also discussed. 4.1 PPM and MOS Perfect prog (PPM) and model output statistics (MOS) both involve the development of statistical relationships between surface observations of a weather element of interest (the predictand) and various predictors, which may come from analyses (PPM) or from model output (MOS). Some details of the characteristics of the two methods are described in tables 2 and 3. Both methods can be used with a variety of statistical techniques, including regression, discriminant analysis, and non-linear techniques such as Classification and regression trees or Neural nets. PPM and MOS refer to the way in which predictors are obtained; the statistical technique determines the form of the relationship that is sought. Table 2. Comparison of Perfect Prog and MOS Classical Development of Equations Application in operational forecast mode Comments Perfect Prog MOS PredictandObserved at T0 PredictorsObserved (Anal) at T0 -dT PredictandObserved at T0 PredictorsObserved (Anal) at T0 PredictandObserved at T0 PredictorsForecast values valid at T0 from prog issued at T0 -dT Predictor values observed not (T0) to give Predictor values valid for T0 +dT from prog issued now to give Predictor values valid for T0 +dT from prog issued now to give Forecast valid at T0 -dT Forecast valid at T0 +dT Forecast valid at T0 +dT dT less than or equal to 6 hours preferable unless persistence works well. Time lag built into equaitions dT can take any value for which forecast predictors are available Sample application as PPM but separate equations used for each dT One might apply PPM and MOS development to ensemble forecasts in different ways. The simplest would be to take an existing set of PPM equations, developed perhaps for deterministic forecasts, and simply apply them to all the members of the ensemble. In this way, an ensemble of interpreted weather element forecasts can be obtained. For MOS, one must ensure that the 7 development model is the same as the model on which the equations are run. Therefore it would likely be necessary to develop a new set of MOS equations using output from the ensemble model. Furthermore, if the model itself is perturbed (as in the Canadian system), different equations would need to be developed for each different version of the model. The extra development cost is very likely prohibitive, which means that PPM is the method of choice for use on ensembles except where MOS equations exist for the ensemble model. Table 3. Other characteristics of MOS and Perfect Prog Classical Perfect Prog MOS Potential for good fit Relationships weaken rapidly as predictor predictand time lag increases Relationships strong because only observed data concurrent in time is used Relationships weaken with increasing projection time due to increasing model error variance Model dependency Model-independent Model-independent Model-independent does not use model output does not account for model biasmodel errors decrease accuracy accounts for model bias Large development sample possible Large development sample possible Generally small development samples depends on frequency of model changes Access to observed or analysed variables Access to observed or analysed variables Access to model output variables that may not be observed Model bias Potential sample size Potential Predictors The simultaneous production of many forecasts leads to the possibility of using a collection of ensemble forecasts as a development sample for MOS. One might argue that ensembles provide a means of obtaining a large enough dataset for MOS equation development much sooner than would be possible with only a single model run. While a MOS development sample collected in this way might represent some improvement on a MOS sample collected over the same period for a single model, the main effect is to increase the unexplainable variance in the data. Each member of a specific ensemble forecast will be matched with only one value of the predictand. The variation within the ensemble represents error variance with respect to the predictand value, which will be a minimum when the observation coincides with the ensemble mean, but will not be explainable by the predictors. Thus, a MOS development sample composed of pooled ensemble forecasts is not likely to lead to a significantly better fit than a MOS development sample based on a single full resolution model. The ensemble mean has been shown in many evaluations to perform better than both the control forecast and the full resolution model in terms of the mean square error, mainly because the ensemble mean tends not to predict extreme conditions. Although the ensemble mean is a statistic of the ensemble and shouldn’t be used or evaluated as a single forecast, it is likely to work 8 well as a MOS predictor, especially a “least-squares” regression-based system which seeks to minimize the squared difference between the predictors and the predictand. Another option for the use of MOS would be to design a system which builds on what already exists. If a MOS system exists for the full resolution model, then MOS predictors from any ensemble model(s) could be added to the existing system. This would be a way of determining whether the ensemble forecasts add information to the forecast that is not already available from the full resolution model. Hybrid systems may also include perfect prog predictors; there are no restrictions on the predictors that can be used as long as they are available to run the statistical forecasts after development. Figure 6. Example of single station MOS forecasts produced by running MOS equations on all members of the U.S. NCEP ensemble. Forecasts are for maximum and minimum temperature (top), 12h precipitation (middle) and 24h precipitation (bottom). The ensemble mean and the full resolution model forecast are also indicated. In summary, the easiest way to link ensemble forecasts to observations via statistical interpretation is to run an existing PPM system on all the ensemble members, to obtain forecast distributions of weather elements.. MOS forecasts require more effort to obtain, but the may be worthwhile, especially for single-model ensemble systems. In the interest of determining what information the ensemble might contribute to what is already available from probability forecasts from statistical interpretation systems, it would be useful to compare the characteristics of MOS forecasts on the full resolution model with those directly from the ensemble. Some such comparisons have been done (See, for example, Hammill and Colucci, 1998), but more comparisons are needed. Hamill and Colucci (1998) found that the ensemble forecasts could perform better than MOS for higher precipitation thresholds, but the ensemble system could not outperform MOS on forecasts at lower precipitation thresholds. They also were not able to find a spread-skill relationship using surface parameters; evidently such a relationship is even more elusive than it is for middle atmosphere, medium range forecasts. An example of PPM maximum-minimum temperature forecasts computed from the Canadian ensembles is shown in another paper in this volume, Wilson (2001a). These forecasts used the same regression equations that have been used for many years with the operational model output, and are run daily for eight Canadian stations. As another example, NCEP has test-run existing MOS equations on their ensemble system, presenting the output in the form of box and whisker plots (Fig. 6). These forecasts are not currently operational. 4.2 Analogue forecasts The analogue method has been applied to deterministic forecasts for a long time. The idea is to search for past situations which are “best matches” to the current forecast, then to use the weather associated with the analogue cases as the forecast. Usually matches are sought with the 500 mb pattern, but the surface map or the thickness may also be used. “Best” is usually defined in terms of the correlation or the Euclidean distance. The forecast is then given by a weighted function of the weather conditions associated with the analogue maps. Analogue methods are a form of PPM, since previous analyses are used (rather than model output) to define the analogue cases. The analogue method requires a large historical dataset to work well, and the more detail that is demanded in the matching process, the harder it is to find close matches. It would seem that the analogue method would be attractive for use with ensembles. For example, one could find the best analogue cases for all members of the ensemble individually using a reanalysis database. If a few matches were chosen for each ensemble member, these cases could be taken as an ensemble of actual outcomes that resemble most closely the ensemble forecast. Such an analogue ensemble could be used to evaluate and interpret the ensemble forecast in several ways: 9 To validate the ensemble forecast on individual cases, by comparing the model and occurrence distributions. To define clusters, for example if the same analogue matches several of the ensemble members. To produce PPM estimates of probabilities, from the weather associated with the analogue ensemble, and comparing with the probability estimates directly from the ensemble. To check the realism of the outcomes predicted by the ensemble members, by comparing the goodness of fit with analogue cases. Figure 7. Assessment of combination full resolution model/eps-based MOS forecasts (“MOS”), vs. Full resolution (“no eps”) and eps-based (“DMO eps”) forecasts of probability of remperature anomaly greater than +2 degrees. Verification in terms of ROC area (top) and Brier score (bottom) for one summer of independent data for De Bilt. (after Kok, 1999). Some interesting questions come to mind, also: Is there more or less variance in the analogues that correspond to forecasts from the unperturbed control, compared to analogues for the ensemble members. Is there any consistency in the evolution of the ensemble with respect to the evolution of the analogue cases? The analogue method has not been used in this way with ensembles yet, to my knowledge, except in connection with standard PPM and MOS methods. Some MOS development experiments using analogue predictors were carried out in the Netherlands (Kok, 1999), applied to the ECMWF ensemble forecasts. Two tests were done to determine the impact of the ensemble forecasts: First, MOS forecast equations were developed for 2 m temperature at De Bilt using direct model output predictors and analogue predictors based on the full-resolution model and 2 m temperature forecasts from the eps. (Indicated “MOS” on Fig. 7) A second set of equations was developed without eps predictors (“no eps” on Fig. 7). Both these sets of equations were developed on two summers of data, and tested on a third summer. Probabilities of temperature anomalies exceeding various thresholds were computed and evaluated using the ROC and Brier score (See Wilson, 2001b elsewhere in this volume). Fig. 7 shows sample results from this comparison. It can be seen that, in terms of both the Brier score and ROC, the “no eps” equations perform better than the ensemble alone (“DMO eps”) at all forecast ranges out to day 7, and are about equal in performance after day 7. The “MOS” forecasts, however, show improvement over both the other sets of forecasts at all ranges. Improvements over the eps alone are largest at day 3 and smallest at day 10, while improvements over the “no eps” are marginal until day 7. These results suggest that the eps does add information to what is available from the full resolution model, but that it does not contribute significantly except after day 7. This may be partly because the full resolution model gives sharper forecasts (higher resolution), which are subject to larger errors and lower reliability at longer ranges than the ensemble forecasts. 4.3 Neural Networks A neural network is a non-linear statistical interpretation method that seeks to classify or organize predictand data into a set of response nodes which are triggered by specific characteristics of the stimulus or predictor data. A particular form of neural nets, called self-organizing maps (Kohonen, 1997) has been shown by Eckert et al (1996) to be useful to the operational interpretation of ensemble forecasts. Eckert et al (1996) used three years of 10 by 10 grids of 500 mb height analyses to train the network. The result of this was an organized 8 by 8 array of 64 nodes, each of which represents a particular map type for the area of interest around Switzerland. The 64 maps are organized in the sense that maps which are close to each other in the 8 by 8 array are 10 similar, and those which are far apart are markedly different. Fig. 8 shows the bottom right hand corner of the array, 16 of the maps. It can be seen, for example that the top row is characterized by a ridge over the area, which decreases in amplitude from left to right. Moving downward along the left side of the array, the ridge becomes displaced more to the west, and a low located to the east becomes more intense. Figure 8. Sixteen of the 64 map types determined from three years of data, for the Eckert at al (1996) application of Neural nets to the interpretation of ECMWF ensemble forecasts. Arrows indicate the direction and intensity of the geostrophic wind over Switzerland. (After Eckert et al, 1996). Once the array is trained on the development data, the ensemble members for a particular forecast can be compared to the array of map types. The RMS distance of each ensemble member from each node of the array is computed, and the ensemble members are classified into corresponding map types by choosing the closest (lowest RMS difference). Fig. 9 shows an example of the classification result, for three different cases, for 4, 6 and 10 days all verifying at the same times, using the ECMWF ensemble forecasts. The spread in the ensemble forecast is immediately apparent by the spread of the triggered nodes on each display. The top case is an example of little ensemble spread, the second case (middle) shows a forecast where the ensemble changes its idea as the verification time approaches, and the third case shows a bimodal ensemble forecast, with the ensemble split nearly equally between two different map types at all three forecast projections. Figure 9. Three examples of application of the Kohonen Neural net to ensemble forecasts from the ECMWF system, expressed as an 8 by 8 grid of gray-shaded squares. The intensity of the shading indicates the number of ensemble members that are closest to each map type, the map type that fits the full resolution model is shown by a square, and the analysis type is indicated by a cross. Four day (left), 6 day (center) and 10 day (right) forecasts are shown. The self-organizing maps were used to verify the mode of the ensemble vs. The full resolution model forecast, against the analysis. That is, the map type which matched the greatest number of ensemble members was compared with the map type that matched the full resolution model forecast, and both were compared with the map type that fit the analysis. The verification sample consisted of 8 months; the 4 day and 6 day projections were verified. For the 96h projection, it was found that when the spread was small, the full resolution model coincided with the ensemble mode usually, but the mode was more accurate when the two didn’t correspond. Overall accuracy was above average when the spread was small, quality was low when the spread was large. When the ensemble spread was large, the full resolution model was superior. For 144h, the spread-skill relationship was the same as for 96h, but the ensemble mode was more accurate than the full resolution model, regardless of the spread. These results tend to confirm the existence of a spread-skill relationship, using a synoptically-oriented verification, but it is also clear that the relationship is not strong. Neural nets, especially the version described here, are similar in many ways to the analogue method. Instead of using a compete set of archive cases as in the analogue method, the selforganizing maps represent a way of pooling the historical cases into a set of map types, a synoptically-organized form of clustering the historical cases. With only three years of historical data, map typing is needed to eliminate some of the variance in the dataset. If a full reanalysis dataset were to be used as a training sample for a Kohonen self-organizing map, it should be possible not only to increase the number of nodes, but to stratify by season and to perhaps use additional predictors to train the network. This is potentially a very powerful method for extracting synoptically meaningful information from ensemble forecasts and deserves additional development. In practical terms, the use of a network of maps also eliminates the need to search the entire history for matching cases, as is required in the analogue method, which is another advantage of neural nets. 5. Concluding remarks 11 This paper has presented a discussion of the challenges faced by those who wish to use the output of ensemble prediction systems in operational forecasting, along with a description of some graphical and statistical methods which can be used to overcome these challenges. The most important issue concerns the need to extract the information that is relevant to the forecasting process from the vast quantities of data that are produced by ensemble systems, and to present that information in ways which facilitate and encourage its use. Secondly, since ensemble output is presented as additional information to other model output, it is necessary to demonstrate that the ensembles add value to that output. And thirdly, in those countries where probability forecasts are not in general use, some adaptation of the forecasting process to prepare and interpret probability forecasts will be necessary. Several ways of graphically sampling the ensemble distribution have been described, along with statistical processing methods that summarize the information in the ensemble. These include clustering, tubing and probability estimation. Following the success of statistical interpretation of deterministic model output, there should be considerable potential for the adaptation of statistical interpretation methods to ensembles. The application of PPM and MOS formulations to ensembles has been discussed, and a few examples presented. Finally, a rather promising application of neural nets has been described. It is clear that ensemble forecasts show great potential for effective use in forecasting, which is only beginning to be tapped. The ability to forecast the skill of the forecast in advance, and the possibility of obtaining reliable forecasts of extreme weather are only two of the most exciting uses of ensemble forecasts. To realize this potential, much development remains to be done. Let’s get on with it! 6. References Atger, F., 1999a: The skill of ensemble prediction systems. Mon. Wea. Rev., 127, 1941-1953. Atger, F., 1999b: Tubing: An alternative to clustering for the classification of ensemble forecasts. Wea. Forecasting, 14, 741-757. Buizza, R., 1997: Potential forecast skill of ensemble prediction and spread and skill distributions of the ECMWF ensemble prediction system. Mon. Wea. Rev., 125, 99-119. Eckert, P., D. Cattani, and J. Ambuhl, 1996: Classification of ensemble forecasts by means of an artificial neural network. Meteor. Appl., 3, 169-178. Hamill, T.M., and S.J. Colucci, 1998: Evaluation of Eta-RSM ensemble probabilistic precipitation forecasts. Mon. Wea. Rev., 126, 711-724. Kohonen, T., 1997: Self-organizing maps, Second edition. Springer-Verlag, New York, 426pp. Kok, C.J., 1999: Statistical post-processing on EPS. Unpublished manuscript, presented at the ECMWF expert meeting on EPS, 1999, 15pp. Wilson, L.J., 2001a: The Canadian Meteorological Center Ensemble Prediction System. WMO, Proceedings of the Workshop on Ensemble Prediction, Beijing, in press. Wilson, L.J., 2001b: Strategies for the verification of ensemble forecasts. WMO, Proceedings of the Workshop on Ensemble Prediction, Beijing, in press.