Academic Science, International Journal of Computer


FACE EXPRESSION RECOGNITION USING ANN

Ramya Avabhrith

M Tech, Dept of CSE, Nitte Meenakshi Institute of Technology, Yelahanka, Bangalore, Karnataka, India

ramyaavabrath@gmail.com

Dr. Jharna Majumdhar

Dean R&D, Professor and Head, Dept of CSE (PG), Nitte Meenakshi Institute of Technology, Yelahanka, Bangalore, Karnataka, India

Shilpa Ankalaki

M Tech, Dept of CSE, Nitte Meenakshi Institute of Technology, Yelahanka, Bangalore, Karnataka, India

Classification of human facial expressions by computer is an important problem in developing automatic facial expression recognition systems in the vision community.

This paper presents an approach to extracting facial features from a still, frontal, posed image and to classifying the facial expression, and hence the emotion and mood, of a person. A feed-forward back-propagation neural network [3] is used as the classifier to assign the expression of a supplied face to one of four basic categories: happy, sad, surprise, and neutral. Face portion segmentation uses basic image processing operations such as projection and a skin-color polynomial model. Facial features such as the eyes, eyebrows, and lips are extracted using projection and a fine search method. A neural network is used for the facial expression classification for efficient classification.

ABSTRACT

Facial Expression Recognition (FER) is a rapidly growing and evergreen research field in Computer Vision, Artificial Intelligence, and Automation. Many applications use facial expressions to evaluate human nature, feelings, judgment, and opinion. Recognizing human facial expressions is not a simple task because of factors such as illumination, facial occlusions, and face color and shape. Our goal is to categorize the facial expression in a given image into four basic emotional states: Happy, Sad, Neutral, and Surprise. Our method consists of three steps: face detection, facial feature extraction, and emotion recognition. First, face detection is performed using a novel skin-color extraction, and the locations of facial components such as the eyes and mouth are found using a knowledge-based approach and projection. Next, the distances between facial features are extracted by employing an iterative search algorithm on the edge information of the localized face region in grayscale. Finally, emotion recognition is performed by feeding the eleven extracted facial features as inputs to a feed-forward neural network trained by back-propagation.

PREVIOUS WORK

Facial expressions provide the building blocks with which to understand emotion. To use facial expressions effectively, it is necessary to understand how to interpret them, and it is also important to study what others have done in the past. Fasel and Luettin performed an in-depth study in an attempt to understand the sources that drive expressions [4]. Their results indicate that using the Facial Action Coding System (FACS) may incorporate other sources of emotional stimulus, including non-emotional mental and physiological aspects used in generating emotional expressions.

Much previous work has used FACS as a framework for classification. In addition, according to Fasel and Luettin [4], previous studies have traditionally taken two approaches to emotion classification: a judgment-based approach and a sign-based approach. The judgment-based approach defines the categories of emotion in advance, such as the traditional six universal emotions. The sign-based approach uses FACS [5], encoding action units in order to categorize an expression based on its constituents. This approach assumes no categories, but rather assigns an emotional value to a face using a combination of the key action units that create the expression.

Keywords

ANN, Expression classification, Facial features, FACS, Projection, Perceptron, Skin colour extraction.

Shilpaa336@gmail.com

INTRODUCTION

Computer vision is the branch of artificial intelligence that focuses on making computers emulate human vision, including learning, making inferences, and performing cognitive actions based on visual inputs, i.e., images. Computer vision also plays a major role in Human Computer Intelligent Interaction (HCII), which provides natural ways for humans to use computers as aids. HCI would be much more effective and useful if computers could predict the emotional state, and hence the mood, of a person from supplied images on the basis of facial expressions.

Mehrabian [1] pointed out that 7% of human communication information is conveyed by linguistic language (the verbal part), 38% by paralanguage (the vocal part), and 55% by facial expression. Facial expressions are therefore the most important source of information for perceiving emotions in face-to-face communication.

Ekman and Friesen [2] identified six basic facial expressions (emotions), and recognition of facial expressions has since become a major modality. For example, if smart devices such as computers or robots can sense and understand a person's intention from their expression, the system can assist them by giving suggestions or proposals according to their needs. Automatic recognition of facial expressions and facial AUs (Action Units) has attracted much attention in recent years because of its potential applications. To classify facial expressions into different categories, it is necessary to extract the important facial features that contribute to identifying particular expressions.

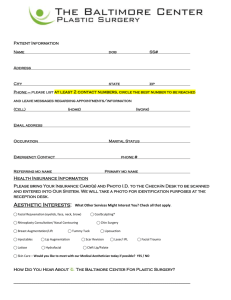

DATA COLLECTION

The data for the experiments were taken from our college's NMIT database, which was used for both training and testing the classifiers. The database contains 40 color images of 4 facial expressions (Happy, Sad, Surprise, and Neutral) posed by ten different NMIT students, and each image has been rated on the 4 emotion adjectives. A few samples are shown in Figure 1.

[Figure 1 panels labeled: Neutral, Smile, Sad, Surprise]

Figure 1. A few samples of the subjects' facial expressions

PROPOSED METHOD

A real-time face detection algorithm is used for locating faces in images and videos. The algorithm starts by extracting skin pixels based on rules derived from a simple quadratic polynomial model [6]. This has two advantages: first, much computation time is saved; second, both extraction processes can be performed simultaneously in one scan of the image or video frame.

An overview of the proposed work contains three major modules: (i) face detection, (ii) facial feature extraction, and (iii) emotion recognition from the extracted features. The first step, face detection, is performed on a color image containing the frontal view of a human subject.

[Figure 2 block labels: Preprocessing, Face detection, Feature extraction, Classification; outputs: Happy, Sad, Surprise, Neutral]

Figure 2. Block diagram of the expression recognition system

Face detection

Instead of using probabilistic models, we use a quadratic polynomial model for the color of skin pixels to reduce the computation time [6].

Skin-color region extraction: In the chromatic color space, each pixel is represented by two values, denoted (r, g). The conversion from the conventional RGB color space to the chromatic color space is defined as follows:

$$ r = \frac{R}{R+G+B}, \qquad g = \frac{G}{R+G+B} $$

where R, G, and B denote the pixel intensities in the red, green, and blue channels, respectively.

The skin locus, formed by the distribution of skin pixels on the r-g plane, is bounded by two quadratic polynomials: an upper bound $F_1(r)$ and a lower bound $F_2(r)$. The skin-color pixels that fall within the area between these two polynomials can be extracted by checking the following rule on their (r, g) values:

R1: $g < F_1(r)$ and $g > F_2(r)$

To exclude the bright white pixels, which fall around the point (r, g) = (0.33, 0.33) in the r-g plane, a second rule is used:

R2: $W = (r - 0.33)^2 + (g - 0.33)^2 > 0.0004$

Rules R1 and R2 are applied simultaneously for skin pixel extraction. To improve the extraction results, two additional simple rules are used:

R3: R > G > B

R4: R − G ≥ 45

Rule R3 is derived from the observation that skin pixels tend to be red and yellow; with Rule R3, pixels tending to be blue can be effectively removed. Rule R4 is defined to remove yellow-green pixels. A pixel is then labeled as

$$ S = \begin{cases} 1 & \text{if R1, R2, R3, and R4 are all true} \\ 0 & \text{otherwise} \end{cases} $$

where S = 1 means that the examined pixel is a skin pixel.

To improve the compactness of the resulting skin-color regions, projection is applied to extract the correct face part, and the face part is then cropped around the detected face centroid.

Facial Feature Extraction

Lip detection:

Observing the pixels of the lips, we find that lip colors range from dark red to purple under normal lighting conditions. Two discriminant functions are defined for lip pixels on the r-g plane, one of which is denoted l(r). During the discrimination using l(r), the darker pixels on faces, which are usually the eyes, eyebrows, or nostrils, also need to be excluded; this is done by requiring R ≥ 20, G ≥ 20, and B ≥ 20. The rule for detecting lip pixels is:

$$ L = \begin{cases} 1 & \text{if } (r, g) \text{ satisfies the two lip discriminant functions and } R \ge 20,\ G \ge 20,\ B \ge 20 \\ 0 & \text{otherwise} \end{cases} $$

where L = 1 indicates that the examined pixel is a lip pixel.
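The pixel-level skin and lip rules above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' Visual C++ implementation. The coefficients of the two quadratic polynomials bounding the skin locus (the upper bound F1 and lower bound F2) are not given in this paper, so `ASSUMED_F1` and `ASSUMED_F2` hold values commonly cited for the model of [6] and should be checked against that paper before use. Because the explicit lip discriminant functions are also not given, `is_lip` takes the (r, g) test as a caller-supplied function (`in_lip_band`, a hypothetical parameter) and implements only the dark-pixel exclusion around it.

```python
from typing import Callable, List, Tuple

Poly = Tuple[float, float, float]  # coefficients (a, b, c) of a*r**2 + b*r + c

# ASSUMPTION: not given in this paper; values commonly cited for the model of [6].
ASSUMED_F1: Poly = (-1.376, 1.0743, 0.2)   # upper bound of the skin locus
ASSUMED_F2: Poly = (-0.776, 0.5601, 0.18)  # lower bound of the skin locus


def poly(p: Poly, r: float) -> float:
    """Evaluate a quadratic a*r^2 + b*r + c."""
    a, b, c = p
    return a * r * r + b * r + c


def chromatic(R: int, G: int, B: int) -> Tuple[float, float]:
    """Map RGB to chromatic (r, g); a black pixel is mapped to (0, 0)."""
    total = R + G + B
    if total == 0:
        return 0.0, 0.0
    return R / total, G / total


def is_skin(R: int, G: int, B: int,
            f1: Poly = ASSUMED_F1, f2: Poly = ASSUMED_F2) -> bool:
    """S = 1 only if rules R1-R4 all hold."""
    r, g = chromatic(R, G, B)
    r1 = poly(f2, r) < g < poly(f1, r)               # R1: inside the skin locus
    r2 = (r - 0.33) ** 2 + (g - 0.33) ** 2 > 0.0004  # R2: away from the white point
    r3 = R > G > B                                   # R3: reddish/yellowish pixels
    r4 = R - G >= 45                                 # R4: drop yellow-green pixels
    return r1 and r2 and r3 and r4


def skin_mask(image: List[List[Tuple[int, int, int]]]) -> List[List[int]]:
    """Apply the S rule to every (R, G, B) pixel of an image given as rows."""
    return [[1 if is_skin(*px) else 0 for px in row] for row in image]


def is_lip(R: int, G: int, B: int,
           in_lip_band: Callable[[float, float], bool]) -> bool:
    """L rule; in_lip_band is a hypothetical stand-in for the lip discriminant test."""
    if R < 20 or G < 20 or B < 20:  # exclude dark pixels (eyes, eyebrows, nostrils)
        return False
    r, g = chromatic(R, G, B)
    return in_lip_band(r, g)
```

For example, `is_skin(200, 140, 110)` accepts a typical skin tone, while a pure white pixel fails R2 (and R3) and is rejected. Evaluating all four rules per pixel keeps the whole test to a single scan of the frame, in line with the speed argument made for the polynomial model.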

The above rule may sometimes fail because of other dark features such as the eyes. To test for the lip part, the face image is cropped [7] into two equal halves; the lower half contains the lips, and applying the same rule to it yields only the lip part.

The lip region may contain considerable noise, so projections are applied to extract the lip distances accurately [8]. The projection profile is high only where pixels are concentrated. The distance between the two corners of the lips can be identified by applying horizontal projection. Similarly, the distance between the upper and lower lip regions can be identified by vertical projection.
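A minimal sketch of this lip-measurement step, assuming the lip pixels have already been marked in a binary mask of the cropped face (for example, with the L rule). Here "horizontal projection" is taken to mean summing each column, so the profile lies along the horizontal axis and its extent gives the corner-to-corner width, while row sums give the upper-to-lower lip height. Projection naming conventions vary between authors, so this orientation and the `min_count` noise threshold are assumptions.

```python
from typing import List, Tuple

Mask = List[List[int]]  # binary image: 1 = lip pixel, 0 = background


def lower_half(mask: Mask) -> Mask:
    """Keep the lower half of a cropped face mask, where the lips are expected."""
    return mask[len(mask) // 2:]


def projections(mask: Mask) -> Tuple[List[int], List[int]]:
    """Return (per-column sums, per-row sums) of a binary mask."""
    cols = [sum(col) for col in zip(*mask)]
    rows = [sum(row) for row in mask]
    return cols, rows


def extent(profile: List[int], min_count: int = 1) -> int:
    """Span between the first and last bins holding at least min_count pixels."""
    hits = [i for i, v in enumerate(profile) if v >= min_count]
    return hits[-1] - hits[0] + 1 if hits else 0


def lip_distances(face_mask: Mask, min_count: int = 1) -> Tuple[int, int]:
    """(corner-to-corner width, upper-to-lower lip height) in pixels."""
    cols, rows = projections(lower_half(face_mask))
    return extent(cols, min_count), extent(rows, min_count)
```

Raising `min_count` discards sparse noise at the edges of the lip blob, which is the reason for measuring on projection profiles rather than on the raw mask.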

Figure 3. Face component projections: a) horizontal and vertical projections of the lip; b) horizontal and vertical projections of the eye.

Eye detection:

The eye components are extracted through a threshold operation (e.g., threshold = 20) on the histogram-equalized grayscale image converted from the original color image.

The correct eye part is verified by cropping the eye region: after the face part is detected, an edge detector is applied to convert the image into binary form, and horizontal and vertical projections are computed on the binary image. The peak values of the projections give the location of an eye in the face, and the eye region is cropped [8][9] based on these peaks, as shown in Figure 3 b). From the cropped region, the distance between the eye and the eyebrow, as well as the iris, can be found.

Ratio of Areas of features:

The midpoint of the left eyebrow (A1), the midpoint of the right eyebrow (A2), and the lower lip point (A3) form a triangle whose area is A.

The upper peak point of the left eye (B1), the upper peak point of the right eye (B2), and the lower lip point (B3) form a triangle whose area is B.

The lower peak point of the left eye (C1), the lower peak point of the right eye (C2), and the lower lip point (C3) form a triangle whose area is C.

Facial Feature distance Ratio:

A total of eleven features are extracted based on the projection mechanism.

i. Ratio of eyebrow distance, using the labeled points a, b, c, and d:
$$ \frac{\text{distance between points } b \text{ and } c}{\text{distance between points } a \text{ and } d} $$

ii. Ratio of the number of skin and hair pixels:
$$ \frac{\text{No. of skin pixels}}{\text{No. of hair pixels}} $$

iii. Ratio of eye and lip distance. The distance between the corner of the left eye and the corner of the right eye is E, and the distance between the corners of the mouth is F:
$$ \frac{E}{F} $$

iv. Ratio of the distance between the left eye and eyebrow to that of both (left and right) eyes and eyebrows. The distance between the left eyebrow midpoint and the left eye midpoint is G, and the distance between the right eyebrow midpoint and the right eye midpoint is H:
$$ LE = \frac{G}{G+H} $$

v. Ratio of the distance between the right eye and eyebrow to that of both (left and right) eyes and eyebrows, with G and H defined as in iv:
$$ RE = \frac{H}{G+H} $$

vi. Ratio of the area of the eyebrow-lip triangle to the total area of the eye, eyebrow, and lip triangles:
$$ R_1 = \frac{A}{A+B+C} $$

vii. Ratio of the area of the upper-eye-peak triangle to the total area:
$$ R_2 = \frac{B}{A+B+C} $$

viii. Ratio of the area of the lower-eye-peak triangle to the total area:
$$ R_3 = \frac{C}{A+B+C} $$

ix. Distance between the upper and lower lips.

x. Distance between the two corners of the lips.

xi. Distance across the eye only, i.e., between the upper peak and the lower peak of an eye.
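The eye thresholding and the eleven features can be sketched as below, as an illustration only. The histogram equalization is the standard cumulative-histogram mapping, and treating pixels at or below the threshold of 20 as eye candidates assumes the eyes are the darkest regions after equalization. The landmark dictionary keys (`l_brow_mid`, `lip_bottom`, and so on) are hypothetical names for the points defined above; feature ix assumes an upper lip point `lip_top`, and feature xi is measured on the left eye. Triangle areas use the shoelace formula.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def equalize(gray: List[List[int]]) -> List[List[int]]:
    """Histogram-equalize an 8-bit grayscale image given as a list of rows."""
    hist = [0] * 256
    for row in gray:
        for p in row:
            hist[p] += 1
    cdf, acc = [], 0
    for count in hist:
        acc += count
        cdf.append(acc)
    cdf_min = next(c for c in cdf if c > 0)
    if acc == cdf_min:  # single grey level: nothing to stretch
        return [row[:] for row in gray]
    lut = [max(0, round((c - cdf_min) * 255 / (acc - cdf_min))) for c in cdf]
    return [[lut[p] for p in row] for row in gray]


def eye_candidates(gray: List[List[int]], threshold: int = 20) -> List[List[int]]:
    """1 where the equalized intensity is <= threshold (assumed dark eye pixels)."""
    return [[1 if p <= threshold else 0 for p in row] for row in equalize(gray)]


def dist(p: Point, q: Point) -> float:
    return math.hypot(p[0] - q[0], p[1] - q[1])


def tri_area(p1: Point, p2: Point, p3: Point) -> float:
    """Triangle area by the shoelace formula."""
    return abs((p2[0] - p1[0]) * (p3[1] - p1[1])
               - (p3[0] - p1[0]) * (p2[1] - p1[1])) / 2.0


def feature_vector(pts: Dict[str, Point], skin_px: int, hair_px: int) -> List[float]:
    """Features i..xi computed from hypothetical landmark names."""
    g = dist(pts["l_brow_mid"], pts["l_eye_mid"])  # G
    h = dist(pts["r_brow_mid"], pts["r_eye_mid"])  # H
    lip = pts["lip_bottom"]                        # lower lip point (A3 = B3 = C3)
    area_a = tri_area(pts["l_brow_mid"], pts["r_brow_mid"], lip)
    area_b = tri_area(pts["l_eye_top"], pts["r_eye_top"], lip)
    area_c = tri_area(pts["l_eye_bot"], pts["r_eye_bot"], lip)
    total = area_a + area_b + area_c
    mouth_width = dist(pts["mouth_l"], pts["mouth_r"])  # F
    return [
        dist(pts["b"], pts["c"]) / dist(pts["a"], pts["d"]),          # i
        skin_px / hair_px,                                             # ii
        dist(pts["l_eye_corner"], pts["r_eye_corner"]) / mouth_width,  # iii E/F
        g / (g + h),                                                   # iv LE
        h / (g + h),                                                   # v RE
        area_a / total,                                                # vi R1
        area_b / total,                                                # vii R2
        area_c / total,                                                # viii R3
        dist(pts["lip_top"], pts["lip_bottom"]),                       # ix
        mouth_width,                                                   # x
        dist(pts["l_eye_top"], pts["l_eye_bot"]),                      # xi
    ]
```

Features iv and v always sum to 1, as do vi to viii, so they describe relative geometry that is less sensitive to face size than the raw distances in ix to xi.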

Emotion Recognition

After extracting the facial features, the expression can be classified based on the following measures:

a. Happiness (smile):

Corners of the lips are drawn back and up. The mouth may or may not be parted, with teeth exposed. Cheeks are raised, and crow's feet appear near the outside of the eyes.

b. Sadness:

Inner corners of the eyebrows are drawn up. Corners of the lips are drawn down. The lower lip pouts out.

c. Surprise:

The brows are raised and curved. Eyelids are opened, with the white of the eye showing above and below. The jaw drops open and the teeth are parted, but there is no tension or stretching of the mouth.

d. Neutral:

Inner corners of the eyebrows are normal. Corners of the lips are stretched. Eyelids are partially opened.

Artificial Neural Network classifier

An Artificial Neural Network (ANN), often just called a neural network, is a mathematical model inspired by biological neural networks. A neural network consists of an interconnected group of artificial neurons, and it processes information using a connectionist approach to computation. In most cases a neural network is an adaptive system that changes its structure during a learning phase. Neural networks are used to model complex relationships between inputs and outputs or to find patterns in data.

Neural computing has re-emerged as an important programming paradigm that attempts to mimic the functionality of the human brain. This area has been developed to solve demanding pattern-processing problems such as speech and image processing. These networks have demonstrated their ability to deliver simple and powerful solutions in areas that for many years have challenged conventional computing approaches. A neural network is represented by weighted interconnections between processing elements (PEs). These weights are the parameters that actually define the non-linear function performed by the neural network.

Perceptron: The perceptron is a type of artificial neural network. It can be seen as the simplest kind of feed-forward neural network: a linear classifier. The perceptron is a binary classifier that maps its input x (a real-valued vector) to an output value f(x) (a single binary value):

$$ f(x) = \begin{cases} 1 & \text{if } w \cdot x + b > 0 \\ 0 & \text{otherwise} \end{cases} $$

where w is a vector of real-valued weights, w · x is the dot product (which computes the weighted sum), and b is the bias, a constant term that does not depend on any input value. The value of f(x) (0 or 1) is used to classify x as either a positive or a negative instance in a binary classification problem. The bias can be thought of as offsetting the activation function, or as giving the output neuron a base level of activity. If b is negative, the weighted combination of inputs must produce a positive value greater than −b in order to push the classifier neuron over the 0 threshold.

Back-propagation networks are among the most widely used neural network algorithms because of their simplicity together with their universal approximation capacity. The back-propagation algorithm defines a systematic way to update the synaptic weights of multi-layer perceptron (MLP) networks [3][10]. Supervised learning is based on the gradient descent method, minimizing the global error on the output layer. The learning algorithm is performed in two stages: feed-forward and feed-backward. In the first phase, the inputs are propagated through the layers of processing elements, generating an output pattern in response to the input pattern presented. In the second phase, the errors calculated in the output layer are back-propagated to the hidden layers, where the synaptic weights are updated to reduce the error. This learning process is repeated until the output error values for all patterns in the training set are below a specified value.

Back-propagation, however, has two major limitations: a very long training process, with problems such as local minima and network paralysis, and the restriction to learning only static input-output mappings. The ANN also requires a large processing time for large networks.

The network is trained in a supervised manner using inputs and targets. Here the targets are user-defined for the four expressions: [1 0 0 0] for neutral, [0 1 0 0] for happy, [0 0 1 0] for sad, and [0 0 0 1] for surprise. The eleven facial feature values are given as inputs to the neural network. The model uses an input layer, 11 hidden neurons, and 4 output neurons, as shown in Figure 4.

[Figure 4: network diagram; input: facial features; outputs: NEUTRAL, SMILE, SAD, SURPRISE]

Figure 4. Neural network
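A compact sketch of the classifier described above: a one-hidden-layer perceptron network with sigmoid units, trained by back-propagation on squared error with online (per-pattern) gradient descent. The 11-11-4 layer sizes and the one-hot targets follow the text; the sigmoid activation, learning rate, weight initialization, epoch limit, and stopping tolerance are assumptions, and pure Python is used only to keep the example self-contained.

```python
import math
import random
from typing import List, Sequence, Tuple

LABELS = ["neutral", "happy", "sad", "surprise"]  # order of the one-hot targets


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class MLP:
    """One-hidden-layer perceptron network trained by back-propagation (sketch)."""

    def __init__(self, n_in: int = 11, n_hidden: int = 11, n_out: int = 4, seed: int = 0):
        rnd = random.Random(seed)
        # Each weight row ends with a bias weight (input fixed at 1.0).
        self.w1 = [[rnd.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
        self.w2 = [[rnd.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]

    def forward(self, x: Sequence[float]) -> Tuple[List[float], List[float], List[float]]:
        """Feed-forward phase: returns (input+bias, hidden+bias, outputs)."""
        xb = list(x) + [1.0]
        hb = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1] + [1.0]
        y = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w2]
        return xb, hb, y

    def train_step(self, x: Sequence[float], t: Sequence[float], lr: float) -> float:
        """One forward pass, then back-propagate the output error; returns its squared error."""
        xb, hb, y = self.forward(x)
        d_out = [(yk - tk) * yk * (1 - yk) for yk, tk in zip(y, t)]
        # Hidden deltas use the output weights before they are updated.
        d_hid = [hb[j] * (1 - hb[j]) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                 for j in range(len(self.w1))]
        for k, dk in enumerate(d_out):
            for j, hj in enumerate(hb):
                self.w2[k][j] -= lr * dk * hj
        for j, dj in enumerate(d_hid):
            for i, xi in enumerate(xb):
                self.w1[j][i] -= lr * dj * xi
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, t))

    def fit(self, X, T, lr: float = 0.5, epochs: int = 2000, tol: float = 1e-3) -> float:
        """Repeat epochs until the summed training error falls below tol (or epochs run out)."""
        err = float("inf")
        for _ in range(epochs):
            err = sum(self.train_step(x, t, lr) for x, t in zip(X, T))
            if err < tol:
                break
        return err

    def predict(self, x: Sequence[float]) -> str:
        """Label of the largest output unit."""
        _, _, y = self.forward(x)
        return LABELS[max(range(len(y)), key=y.__getitem__)]
```

In use, each input vector would hold the eleven feature values of one face, and `predict` returns the expression whose output unit responds most strongly. Online updates are used here for brevity; a batch variant that accumulates gradients over all training samples before each update would also fit the description of gradient descent on the global error.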

EXPERIMENTAL RESULTS AND ANALYSIS

In this paper, expressions are recognized on the NMIT college face database of frontal-view facial images. Fig. 5 shows the results of facial feature extraction. Face portion segmentation is first performed with the skin polynomial model, and the accurate face part is then extracted by projection.

The cropped facial image is divided into two regions based on the centre of the image, and the locations of the permanent facial features are found by the projection mechanism. Figure 4 shows the localized permanent facial features, which are used as input to the classifier.

For ANN classification:

Training Phase: In this work, supervised learning is used to train the back-propagation neural network. The training samples are taken from the NMIT college database; 18 training samples covering all the expressions were used.

Testing Phase: The proposed system is tested on the NMIT college database, using a total of 40 sample images covering all the facial expressions. Fig. 6 shows the GUI that displays the facial expression results. The success rates are given in TABLE I.

Figure 5. Face feature extraction: a) input face part, b) face part extraction, c) cropped face part, d) left eye part, e) right eye part, f) lip part, g) final feature part.

Figure 6. GUI for classification

TABLE I. ANN classification

| Expression | Number of images tested | Number correctly recognized | Success rate |
|---|---|---|---|
| Happy | 10 | 10 | 100% |
| Sad | 10 | 9 | 90% |
| Surprise | 10 | 10 | 100% |
| Neutral | 10 | 9 | 90% |
| Total | 40 | 38 | 95% |

The system was implemented in Microsoft Visual C++ 6.0, installed on the Windows 7 OS.

CONCLUSION

Faces are tangible projector panels of the mechanisms which govern our emotional and social behaviors. The automation of the entire process of facial expression recognition is, therefore, a highly intriguing problem, the solution to which would be enormously beneficial for fields as diverse as medicine, law, communication, education, and computing. In the current work, the skin-based polynomial model reduces the computation time, and the extracted features are very effective for classifying the expression. The back-propagation neural network is also efficient for recognizing facial expressions. This aspect of machine analysis of facial expressions forms the main focus of current and future research in the field.

REFERENCES

[1] A. Mehrabian, "Communication without Words," Psychology Today, vol. 2, no. 4, pp. 53–56, 1968.

[2] P. Ekman and W. V. Friesen, "Constants across Cultures in the Face and Emotion," J. Pers. Soc. Psychol., vol. 17, no. 2, pp. 124–129, 1971.

[3] S. P. Khandait, R. C. Thool, and P. D. Khandait, "Automatic Facial Feature Extraction and Expression Recognition based on Neural Network," International Journal of Advanced Computer Science and Applications (IJACSA), vol. 2, no. 1, Jan. 2011.

[4] B. Fasel and J. Luettin, "Automatic facial expression analysis: A survey," Pattern Recognition, 2003.

[5] Ying-li Tian, Takeo Kanade, and Jeffrey F. Cohn, "Recognizing Action Units for Facial Expression Analysis," IEEE Transactions on Pattern Analysis and Machine Intelligence, 2001.

[6] Cheng-Chin Chiang, Wen-Kai Tai, Mau-Tsuen Yang, Yi-Ting Huang, and Chi-Jaung Huang, "A novel method for detecting lips, eyes and faces in real time," Elsevier, 2003.

[7] Rajesh A. Patil, Vineet Sahula, and A. S. Mandal, "Automatic Detection of Facial Feature Points in Image Sequences," IEEE.

[8] W. K. Teo, Liyanage C. De Silva, and Prahlad Vadakkepat, "Facial Expression Detection and Recognition System," Journal of The Institution of Engineers, Singapore, vol. 44, no. 3, 2004.

[9] Yepeng Guan, "Robust Eye Detection from Facial Image based on Multi-cue Facial Information," IEEE, Guangzhou, China, 2007.

[10] Le Hoang Thai, Nguyen Do Thai Nguyen, and Tran Son Hai, "A Facial Expression Classification System Integrating Canny, Principal Component Analysis and Artificial Neural Network," International Journal of Machine Learning and Computing, vol. 1, no. 4, Oct. 2011.

[11] Akshat Garg and Vishakha Choudhary, "Facial Expression Recognition Using Principal Component Analysis," International Journal of Scientific Research Engineering & Technology (IJSRET), vol. 1, no. 4, pp. 039–042, Jul. 2012.

[12] Aryuanto Soetedjo, "Eye Detection Based-on Color and Shape Features," International Journal of Advanced Computer Science and Applications (IJACSA), vol. 3, no. 5, 2011.

[13] S.-H. Leung, S.-L. Wang, and W.-H. Lau, "Lip image segmentation using fuzzy clustering incorporating an elliptic shape function," IEEE Transactions on Image Processing, vol. 13, no. 1, pp. 51–62, 2004.