Survey on Multiversion and Replicated Database

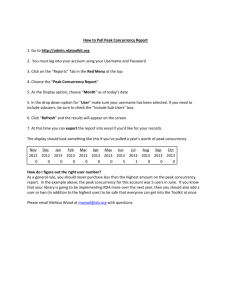

Survey of Multiversion and Replicated Databases

Xinyan Feng

December, 99

1. Introduction

Concurrency control protocols are the part of a database system that ensures the correctness of the database when transactions execute concurrently. Conventional single-version concurrency control mechanisms fall into one of the following three categories:

Locking based protocols such as Two-phase Locking (2PL)

Timestamp based protocols such as Timestamp Ordering (TSO)

Optimistic protocols such as Kung & Robinson Optimism (OP)

In a single-version system, the same data is used for both read and write operations, so the rate of conflicts between operations can be fairly high. Many protocols have been proposed to increase the level of concurrency. Among them, multiversion techniques and data replication come in a wide range of variants.

Multiversioning and data replication address different problems. A multiversion database provides slightly older versions of data items for transactions to read, while write operations create new versions. It can be used in a centralized or a distributed environment. Replicated data, on the other hand, gives a system higher data availability by keeping the same data at multiple sites. Hence, this mechanism is used in distributed database environments to increase concurrency and data availability.

However, both mechanisms try to increase the level of concurrency by providing data redundancy, and in that respect they have a lot in common. This survey looks into developments in both areas. Since multiversion databases and distributed databases with data replication also raise other issues related to concurrency control, some of those issues are addressed as well.

This survey is organized into the following sections. Section 2 reviews the concepts of a single-version centralized database, its concurrency control mechanisms in particular. Section 3 discusses multiversion databases, including their concurrency control mechanisms, their performance, their interaction with the object-oriented paradigm, their interaction with the notion of time validity in real-time systems, and version control issues. Section 4 discusses replicated databases, addressing issues such as concurrency control and replication control. Section 5 gives a short summary of the survey.

2. Single Version Concurrency Control Protocols

2.1 Two-phase Locking

Locking-based concurrency control algorithms are the most popular ones in the commercial world. A lock is a variable associated with a data object that describes the status of the object with respect to the operations that can be applied to it. Common locks include read locks and write locks. Two-phase locking (2PL) restricts when a transaction may acquire and release locks so that the resulting schedules are serializable. It has a growing phase and a shrinking phase. During the growing phase, new locks on database items can be acquired but no lock can be released. During the shrinking phase, previously acquired locks are released and no new locks are granted. Two-phase locking preserves serializability.

Nonetheless, deadlocks can still occur and must be handled by deadlock detection or prevention mechanisms. Another disadvantage of two-phase locking is that it limits the concurrency level of a system.
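
The growing and shrinking phases can be illustrated with a minimal Python sketch. The class name `Transaction2PL`, the shared `lock_table` dictionary and the "R"/"W" mode strings are assumptions made for illustration only. Waiting and deadlock handling are left out, so a conflicting request simply returns False.

```python
class TwoPhaseViolation(Exception):
    """Raised when a transaction asks for a lock after it has released one."""


class Transaction2PL:
    def __init__(self, name, lock_table):
        self.name = name
        self.lock_table = lock_table   # shared: item -> [mode, set of holder names]
        self.shrinking = False         # becomes True at the first unlock

    def lock(self, item, mode):
        """Try to acquire a read ("R") or write ("W") lock; False means 'must wait'."""
        if self.shrinking:
            raise TwoPhaseViolation(f"{self.name} is already in its shrinking phase")
        entry = self.lock_table.setdefault(item, ["R", set()])
        held_mode, holders = entry
        others = holders - {self.name}
        if others and (mode == "W" or held_mode == "W"):
            return False               # conflicts with a lock held by another transaction
        if mode == "W":
            entry[0] = "W"             # new write lock, or upgrade by the sole holder
        holders.add(self.name)
        return True

    def unlock(self, item):
        """Release a lock; this starts the shrinking phase."""
        self.shrinking = True
        entry = self.lock_table[item]
        entry[1].discard(self.name)
        if not entry[1]:
            del self.lock_table[item]
```

In this sketch two readers can share an item, a writer has to wait for them, and any `lock` call made after the first `unlock` raises `TwoPhaseViolation`.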

2.2 Timestamp Ordering

Under Timestamp Ordering, each data item has an associated read timestamp and write timestamp, which are used to schedule conflicting data accesses. Deadlocks cannot happen, because conflicts are resolved by aborting transactions rather than by making them wait. However, starvation can occur: a transaction may be started and aborted many times before it finishes. Another disadvantage of Timestamp Ordering is cascading aborts. When a transaction aborts, all transactions that have read values written by it have to abort too.
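
The read and write rules of basic timestamp ordering can be sketched as follows. This is a minimal illustration, not a complete scheduler: the names `Item`, `tso_read` and `tso_write` are hypothetical, timestamps are assumed to be positive integers, and a rejected operation only reports that the transaction must abort and restart.

```python
class Item:
    def __init__(self, value=None):
        self.value = value
        self.read_ts = 0    # timestamp of the youngest transaction that read the item
        self.write_ts = 0   # timestamp of the youngest transaction that wrote the item


def tso_read(item, ts):
    """Return (granted, value). A read is rejected if a younger transaction already wrote."""
    if ts < item.write_ts:
        return False, None
    item.read_ts = max(item.read_ts, ts)
    return True, item.value


def tso_write(item, ts, value):
    """Return True if granted. A write is rejected if a younger transaction read or wrote."""
    if ts < item.read_ts or ts < item.write_ts:
        return False
    item.value = value
    item.write_ts = ts
    return True
```

Because a rejected transaction restarts instead of waiting, no wait-for cycle can form, but the same transaction may be rejected repeatedly.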

2.3 Optimistic Concurrency Control

Optimistic Concurrency Control is designed to reduce the overhead of locking. No locks are involved in this mechanism, and therefore it is deadlock free. The protocol consists of three phases. The first is the read phase, in which a transaction reads database items and performs preparatory writes in its local workspace. Next comes the validation phase, in which the serializability of the transaction is checked. If the transaction passes the check, it enters the write phase, in which all of its updates are applied to the database items. Otherwise, its local updates are discarded and the transaction is restarted.

During the validation phase for transaction $T_i$, the system checks $T_i$ against each transaction $T_j$ that is either committed or in its validation phase. $T_i$ is validated if, for each such $T_j$, one of the following three conditions holds:

1. $T_i$ and $T_j$ do not overlap in time

2. $\mathrm{Read\_Set}(T_i) \cap \mathrm{Write\_Set}(T_j) = \emptyset$, and their write phases do not overlap in time

3. $(\mathrm{Read\_Set}(T_i) \cup \mathrm{Write\_Set}(T_i)) \cap \mathrm{Write\_Set}(T_j) = \emptyset$, otherwise.

Otherwise, the transaction is aborted and restarted later.
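
The three validation conditions can be written down as a short Python sketch. The `Txn` record and its fields (`start`, `write_start`, `finish`) are assumptions introduced here to make "overlap in time" concrete; a transaction that has not finished its write phase is given `finish = float("inf")`.

```python
from dataclasses import dataclass


@dataclass
class Txn:
    start: float         # start of the read phase
    write_start: float   # time at which the write phase starts (or would start)
    finish: float        # end of the write phase; float("inf") if not finished yet
    read_set: set
    write_set: set


def validate(ti, others):
    """Check the validating transaction ti against every committed or validating tj."""
    for tj in others:
        # Condition 1: tj finished before ti started, so they do not overlap.
        if tj.finish <= ti.start:
            continue
        # Condition 2: ti read nothing tj wrote, and tj's write phase ended
        # before ti's write phase begins.
        if not (ti.read_set & tj.write_set) and tj.finish <= ti.write_start:
            continue
        # Condition 3: ti neither read nor writes anything that tj wrote.
        if not ((ti.read_set | ti.write_set) & tj.write_set):
            continue
        return False     # no condition holds: ti is aborted and restarted
    return True
```

For example, a transaction that read an item written by an overlapping committed transaction fails all three conditions and is rejected.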

The efficiency of Optimistic Concurrency Control largely depends on the level of conflict between transactions. Under a low conflict rate it performs well, because it has less overhead than the other algorithms. When conflict rates are high, however, throughput is low because of the higher restart rate.

3. Multiple Versions

Multiple versions of data are used in a database system to support higher transaction concurrency and system recovery. Concurrency is higher because read requests can be served from older versions of data. These algorithms are particularly effective for long queries, which otherwise might not finish because of the high probability of conflict with other transactions. Note, however, that a long-running query may still have to be aborted if some of the versions it needs have been garbage collected prematurely.

Serializability theory has been extended into this area and research work has proved that

multiversion algorithms are able to provide strictly more concurrency than single version

algorithms.

The basic idea behind multiversion concurrency control is to maintain one or more old

versions of data in the database in order to allow work to proceed using both the latest

version of the data and some old versions. Some algorithms proposed two versions, a

current version and an older one. Other algorithms keep many old versions around. We

are going to put emphasis on the latter.

In a multiversion database, each write operation on an object produces a new version. A read operation on the object is performed by returning the value of an appropriate version from the list of versions. Note that the existence of multiple versions is visible only to the scheduler, not to the user transactions that refer to the object. In other words, it is transparent to user applications.

3.1 Multiversion History

In a multiversion database, an object $x$ has versions $x_i, x_j, \ldots$, where the subscripts $i, j$ are version numbers, which often correspond to the index or transaction number of the transactions that wrote the versions.

A multiversion (MV) history H represents a sequence of operations on versions of data items. Each write operation $w_i[x]$ in an MV history is mapped into $w_i[x_i]$, and each read operation $r_i[x]$ into $r_i[x_j]$ for some $j$, where $x_j$ is the version of $x$ created by transaction $T_j$. The notion of final writes can be omitted, since every write creates a new entity in the database.

Correctness of a multiversion database is determined by one-copy (1C) serializability. An

MV history is one-copy serializable if it is equivalent to a serial history over the same set

of transactions executed over a single version database.

The serialization graph of an MV history H, SG(H), is a directed graph whose nodes represent transactions and whose edges are all $T_i \rightarrow T_j$ such that one of $T_i$'s operations precedes and conflicts with one of $T_j$'s operations. Unlike in a single-version database, SG(H) by itself does not contain enough information to determine whether H is one-copy serializable. To determine whether an MV history is one-copy serializable, a modified serialization graph is used.

Given an MV history H, a multiversion serialization graph (MVSG(H)) is SG(H) with

additional edges such that the following conditions hold:

1. For each object $x$, MVSG(H) has a total order (denoted $\ll_x$) on all transactions that write $x$, and

2. For each object $x$, if $T_j$ reads $x$ from $T_i$ and if $T_i \ll_x T_k$, then MVSG(H) has an edge from $T_j$ to $T_k$ (i.e., $T_j \rightarrow T_k$); otherwise, if $T_k \ll_x T_i$, then MVSG(H) has an edge from $T_k$ to $T_i$ (i.e., $T_k \rightarrow T_i$).

The additional edges are called version order edges. An MV history H is one-copy serializable if MVSG(H) is acyclic.
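
The construction of MVSG(H) and the acyclicity test can be sketched in Python. The input format is hypothetical: `reads_from` is a list of (reader, object, writer) triples, and `version_order` maps each object to the list of its writers in version order. The sketch assumes that the conflict edges of SG(H) in an MV history are exactly the reads-from edges, and that a transaction reading its own write contributes no edge.

```python
def mvsg_edges(reads_from, version_order):
    """Return the edge set of MVSG(H) as (source, target) pairs."""
    edges = set()
    for reader, obj, writer in reads_from:
        if reader == writer:
            continue                          # reading one's own write adds no edge
        edges.add((writer, reader))           # SG(H) edge: writer -> reader
        order = version_order[obj]
        position = {t: k for k, t in enumerate(order)}
        for other in order:
            if other in (writer, reader):
                continue
            if position[writer] < position[other]:
                edges.add((reader, other))    # version order edge T_j -> T_k
            else:
                edges.add((other, writer))    # version order edge T_k -> T_i
    return edges


def is_acyclic(edges):
    """Kahn's algorithm: the graph is acyclic if every node can be removed."""
    nodes = {n for edge in edges for n in edge}
    indegree = {n: 0 for n in nodes}
    for _, target in edges:
        indegree[target] += 1
    ready = [n for n in nodes if indegree[n] == 0]
    removed = 0
    while ready:
        node = ready.pop()
        removed += 1
        for source, target in edges:
            if source == node:
                indegree[target] -= 1
                if indegree[target] == 0:
                    ready.append(target)
    return removed == len(nodes)
```

As a usage example, take T1 reading x from T0 and writing y, and T2 reading y from T0 and writing x, with version orders x: T0, T2 and y: T0, T1. The version order edges T1 → T2 and T2 → T1 form a cycle, so `is_acyclic` returns False for this version order.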

3.2 Multiversion Concurrency Control Protocols

To support versioning and improve database performance, multiversion concurrency control protocols have been developed as extensions of the basic single-version protocols. For single-version databases, we have Two-phase Locking, Timestamp Ordering and Optimistic Concurrency Control. Correspondingly, for multiversion databases, there are Multiversion Two-phase Locking (MV2PL), Multiversion Timestamp Ordering (MVTSO), and Multiversion Optimistic Concurrency Control.

3.2.1 Multiversion Two-phase Locking

MV2PL is an extension of single-version two-phase locking. One implementation variant is the CCA version pool algorithm. It works as follows. Each transaction T is assigned a startup timestamp S-TS(T) when it begins running and a commit timestamp C-TS(T) when it reaches its commit point. Transactions are classified as read-only or update. When an update transaction reads or writes a data item, it locks the item, and it reads or writes the most recent version. When an item is written, a new version of the item is created. Every version of an item is stamped with the commit timestamp of its creator.

Since the timestamp associated with a version is the commit timestamp of its writer, a

read-only transaction T is made to read versions written by transactions that committed

before T starts. T is serialized after all transactions that committed prior to its startup, but

before all transactions that are active during its life.
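
How a read-only transaction picks a version by timestamp can be sketched as follows. This is an illustration only, not the CCA implementation: the class `VersionedItem` and its methods are hypothetical, and versions are kept in a list sorted by the commit timestamp of their creator.

```python
import bisect


class VersionedItem:
    def __init__(self):
        self.commit_ts = []   # commit timestamps of the creators, ascending
        self.values = []      # values[k] was written by the creator with commit_ts[k]

    def install(self, commit_ts, value):
        """Add the version written by an update transaction that has committed."""
        k = bisect.bisect_right(self.commit_ts, commit_ts)
        self.commit_ts.insert(k, commit_ts)
        self.values.insert(k, value)

    def read_as_of(self, startup_ts):
        """Value a read-only transaction with this startup timestamp sees, or None."""
        k = bisect.bisect_left(self.commit_ts, startup_ts)   # versions committed before start
        if k == 0:
            return None
        return self.values[k - 1]
```

A read-only transaction therefore never locks anything; it only needs its startup timestamp to locate the newest version committed before it began.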

Another variant keeps a completed transaction list, which is a list of all update transactions that have committed successfully up to that time. One drawback of this algorithm is the maintenance and use of the completed transaction list. Executing a read operation of a read-only transaction involves finding the version of the object with the largest timestamp smaller than the startup timestamp of the transaction, and ensuring that the creator of that version appears in the transaction's copy of the completed transaction list. This approach is cumbersome and complex.

Another drawback exists in the extension to distributed databases. Although this protocol

guarantees a consistent view to a read-only transaction, it does not guarantee global

serializability for read-only transactions. The protocol also requires that a read-only

transaction have a priori knowledge of the set of sites where it is going to perform reads.

It is necessary to construct a global completed transaction list from copies of the local

completed transaction lists at the respective sites before the read-only transaction begins

execution. Thus, the complexity of the protocol increases when used in a distributed

database environment.

MV2PL has been proved to preserve serializability. However, deadlocks can still occur. They can be detected with the same techniques used in single-version databases. To break a deadlock, however, some transactions must be aborted, and cascading aborts may occur.

3.2.2 Partitioned Two-phase Locking

Multiversion Two-phase Locking gives decent performance in many situations. Note, however, that these algorithms only distinguish read-only transactions from update transactions. Some update transactions perform read-only access to certain portions of the database and write into other portions. A concurrency control algorithm that only distinguishes update transactions from read-only transactions treats such transactions as regular update transactions and subjects them to the usual two-phase locking protocol.

The hierarchical timestamp (HTS) approach was proposed to take advantage of such a decomposition. HTS allows a transaction that writes into one data partition but reads from other, higher data partitions (because of the read-write dependencies) to read from the latter using a timestamp different from the transaction's initiation timestamp. In essence, this ensures that the transaction does not interfere with concurrent updates that other transactions perform on the higher data partitions. Building on the idea behind HTS, partitioned two-phase locking extends the version pool method.

Concurrency control for synchronizing update transactions in partitioned two-phase locking is composed of two protocols: one for intraclass access and one for read-only access to higher data partitions. The former is equivalent to multiversion two-phase locking, where both read and write accesses lock the accessed data element. The latter grants a particular version of the data to the requesting transaction and requires no locking.

However, although the paper mentions MV2PL, it is not clear why multiple versions are involved: the available analysis appears to cover a partitioned distributed database, and its emphasis does not seem to be on versioning.

3.2.3 Multiversion Timestamp Ordering

In multiversion timestamp ordering, old versions of data are used to speed the process of

read requests. Different variations of the algorithm treat write requests differently.

However, all of them are based on basic TSO.

Write requests are synchronized using basic timestamp ordering on the most recent versions of data items. Read requests, on the other hand, are always granted, possibly using older versions of objects. Each transaction T has a startup timestamp S-TS(T), which is issued when T begins execution. The most recent version of an item X has a read timestamp R-TS(X) and a write timestamp W-TS(X), which record the startup timestamps of the latest reader and of the writer of this version. A write request is granted if S-TS(T) >= R-TS(X) and S-TS(T) >= W-TS(X). Transactions whose write requests are not granted are restarted. When a read or write request is made for an object with a pending write request from an older transaction, the request is blocked until the older transaction either commits or aborts.
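
The write test and the always-granted read can be sketched as follows. The class `MVItem` is hypothetical, blocking on pending writes is omitted, and initializing the read timestamp of a new version to its writer's timestamp is an assumption of this sketch rather than something stated above.

```python
class MVItem:
    def __init__(self, initial):
        self.versions = [(0, initial)]   # (write timestamp, value), ascending
        self.read_ts = 0                 # R-TS of the most recent version

    def read(self, ts):
        """Always granted: return the newest version written at or before ts."""
        latest_write_ts = self.versions[-1][0]
        for write_ts, value in reversed(self.versions):
            if write_ts <= ts:
                if write_ts == latest_write_ts:
                    self.read_ts = max(self.read_ts, ts)
                return value
        return None                      # no version is old enough

    def write(self, ts, value):
        """Granted only if S-TS(T) >= R-TS(X) and S-TS(T) >= W-TS(X)."""
        latest_write_ts = self.versions[-1][0]
        if ts >= self.read_ts and ts >= latest_write_ts:
            self.versions.append((ts, value))
            self.read_ts = ts            # assumption: a new version starts with R-TS = W-TS
            return True
        return False                     # the writing transaction is restarted
```

A reader with an old timestamp is served from an older version instead of being rejected, while a writer that arrives after a younger reader of the latest version is restarted.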

Another algorithm creates a new version for every write. Read mechanism is similar to

the one mentioned above.

MVTSO ensures serializability. Like TSO, it is subject to the cyclic restart problem, and cascading aborts may occur. A variant of multiversion timestamp ordering described in the literature avoids cascading aborts by using the realistic recovery protocol.

Read requests receive better service because they are never rejected. However, several

drawbacks exist. First, read operations issued by read-only transactions may still be

blocked due to a pending write. Second, read-only operations have a significant

concurrency control overhead since they must update certain information associated with

versions. Two-phase commit may be used for distributed read transactions. It may also

result in a read-only transaction causing an abort of an update transaction.

3.2.4 Multiversion Optimistic Concurrency Control

One mechanism is called Multiversion Serial Validation (MVSV). Each transaction T is assigned a startup timestamp S-TS(T) at startup time and a commit timestamp C-TS(T) when it enters its commit phase. A write timestamp TS(X) is maintained for each data item X; it is the commit timestamp of the most recent writer of X. A transaction T is validated at commit time if S-TS(T) > TS(X) for each object X in its read set. It then sets TS(X) equal to C-TS(T) for all data items X in its write set.
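
The validation test translates almost directly into code. In this minimal sketch the function name `mvsv_commit` and the dictionary `write_ts` (mapping each item X to TS(X)) are hypothetical; items that were never written are treated as having timestamp 0, and timestamps are assumed to be positive.

```python
def mvsv_commit(write_ts, startup_ts, commit_ts, read_set, write_set):
    """Validate a transaction at commit time; return True if it may commit."""
    # Validation: S-TS(T) > TS(X) must hold for every X in the read set.
    for x in read_set:
        if startup_ts <= write_ts.get(x, 0):
            return False                 # X was overwritten after T started
    # Commit: stamp every item in the write set with C-TS(T).
    for x in write_set:
        write_ts[x] = commit_ts
    return True
```

A transaction that fails the test leaves `write_ts` untouched and is restarted as a whole.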

Another example of multiversion optimistic concurrency control is called Time Warp

(TW). Transactions communicate through timestamped messages. Active processes

(transactions) are allowed to process incoming messages without concerns for conflict,

much like what the optimistic mechanism does. However, a conflict will occur when a

process receives a message from another process whose timestamp is less than the

timestamp of the process. Whenever this happens, the transaction is rolled back to a time

earlier than the timestamp of the message.
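
The rollback behaviour can be illustrated with a heavily simplified Python sketch. It models a single process whose state is just the tuple of payloads applied so far, saves a state after every message, and replays later messages after a rollback. The class `TimeWarpProcess` and its fields are hypothetical, and messages sent to other processes (and their cancellation) are not modelled.

```python
import bisect


class TimeWarpProcess:
    def __init__(self):
        self.times = []       # timestamps of processed messages, ascending
        self.payloads = []    # payloads, in the same order
        self.states = [()]    # states[k] = state after the first k messages
        self.rollbacks = 0

    @property
    def clock(self):
        """Timestamp of the process: that of the latest processed message."""
        return self.times[-1] if self.times else 0

    @property
    def state(self):
        return self.states[-1]

    def receive(self, ts, payload):
        k = bisect.bisect_right(self.times, ts)
        if k < len(self.times):
            # The message is older than the process clock: roll back to the
            # state saved just before timestamp ts.
            self.rollbacks += 1
            del self.states[k + 1:]
        self.times.insert(k, ts)
        self.payloads.insert(k, payload)
        # Apply the new message, then replay any messages that were undone.
        for p in self.payloads[k:]:
            self.states.append(self.states[-1] + (p,))
```

Only the steps later than the late message are undone and redone; work done before that timestamp is kept.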

Comparing these two mechanisms, the difference lies in the unit of rollback. In MVSV, the unit is the whole transaction. In contrast, TW does not roll back the whole transaction but only one step. As a result, the total time lost in the rollback process is smaller.

3.3 Experiments

3.3.1 About Simulation Model and Results

There are three major parts of a concurrency control performance model: a database

system model, a user model, and a transaction model. The database system model

captures the relevant characteristics of the system’s hardware and software, including the

physical resources and their associated schedulers, the characteristics of the database, the

load control mechanism for controlling the number of active transactions, and the

concurrency control algorithm itself. The user model captures the arrival processes for

users and the nature of user’s transactions (for example, interactive or batch). Finally, the

transaction model captures the reference behavior and processing requirements of the

transactions in the workload. A concurrency control performance model that fails to include any of these three major parts is in some sense incomplete. In addition, if the workload contains more than one class of transactions with different features, the transaction model must specify the mix of transaction classes.

Simulation experiments also commonly make several simplifying assumptions about the system. The first is the assumption of infinite resources. Some studies compare concurrency control algorithms assuming that transactions progress at a rate independent of the number of active transactions. In other words, transactions proceed in parallel, which is only possible in a system with enough resources that transactions never have to wait for CPU or I/O service.

The second assumption is fake restart. Several models assume that a restarted transaction is replaced by a new, independent transaction, rather than the same transaction being run again. In particular, this assumption is nearly always made in analytical models in order to make the modeling of restarts tractable.

The third assumption is about the write-lock acquisition. A number of studies that

distinguish between read and write locks assume that read locks are set on read-only

items and that write locks are set on the items to be updated when they are first read. In

reality, however, transactions often acquire a read lock on an item, then examine the item,

and then request to upgrade it to a write lock only when they want to change the value.

These simplifying assumptions give researchers more flexibility to focus on whatever they want to investigate. Nonetheless, they make it harder to compare one simulation with another, and even harder to apply the results to a real-life scenario.

3.3.2 Experiment Results

Many experiments comparing single-version and multiversion concurrency control performance have been conducted and published. However, because of the many different simulation models and assumptions used, the results often conflict with each other.

Simulation studies have been carried out on multiversion databases to evaluate the performance of various concurrency control algorithms. They also address questions such as the CPU and I/O costs of locating and accessing old versions and the overall cost of maintaining multiple versions of data in the database. A centralized setting is used in order to isolate the effects of multiple versions on performance. Several metrics are used to analyze system performance. The most common ones are throughput, average response time, number of disk accesses per read, work wasted due to restarts, and space required for old versions.

One experiment compares the performance of 2PL, TSO, OP, MV2PL, MVTSO and TW. In its simulation model, all reads, writes, aborts and commits are uniformly distributed. A transaction is restarted immediately after it is aborted, and a new transaction immediately replaces a committed one. The system variables are concurrency level, read-write ratio and transaction size. The performance measures are the number of aborts, blocks and partial rollbacks, the mean number of transactions in execution, the mean wait time, the conflict probability, and the throughput of the system.

The results show that all multiversion protocols outperform their single-version counterparts. In order from highest to lowest performance, the protocols rank TW, MV2PL, MVTSO, TSO, 2PL, and OP. The improvement is particularly impressive for MV2PL: under a medium workload, its throughput is 277% higher than that of its single-version counterpart. However, MVTSO outperforms MV2PL in the high-write scenario, and TW is more sensitive to the write ratio than the other multiversion concurrency control algorithms.

The difference in performance between multiversion protocols and single version

protocols is bigger when the concurrency level increases. This makes multiversion

protocols desirable candidates for a real intensive system. For a system operating under

low-write scenario, TW would be the best choice. For a system operating under a

medium or high-write ratio, TW can also be the choice if the concurrency level is not too

high. MV2PL would be a good concurrency control protocol under the high concurrency,

medium-write scenario, whereas MVTSO performs better under the high-write situation.

The result of the study also showed that the relative performance of different protocols

did vary with variables such as concurrency level, read-write ratio and the like.

Other work compared the performance of 2PL, TSO, OP, MV2PL, MVTSO and MVSV and reached similar conclusions. The results show that, for both read-only transactions and small update transactions, the three multiversion algorithms provide almost identical throughput. The reason is that, given the small size of the update transactions, almost all conflicts are between read-only and update transactions. All three multiversion algorithms eliminate this source of conflicts by allowing read-only transactions to execute on older versions of objects, so that update transactions compete only among themselves for access to the objects in the database. On the other hand, the three single-version algorithms offer quite different performance tradeoffs between the two transaction classes. 2PL provides good throughput for the large read-only transactions but the worst throughput for the update transactions. TSO and OP provide better performance for update transactions at the expense of the large read-only transactions.

Both TSO and OP are biased against large read-only transactions because of their conflict resolution mechanisms. OP restarts a transaction at the end of its execution if any of the objects in its read set has been updated during its lifetime, which is likely for large transactions. Such a transaction might be restarted many times before it can commit. Similarly, TSO restarts a transaction whenever it attempts to read an object with a timestamp newer than its startup timestamp, meaning that the object has been updated by a transaction that started running after this transaction did. Again, this becomes very likely as the read-only transaction size increases. 2PL has the opposite problem. If read-only transactions are large, they can set and hold locks on a considerable number of objects in the database for a long time before releasing any of them. Update transactions that wish to update locked objects must wait a long time to lock and update these objects. This is why the throughput of update transactions decreases significantly as the size of read-only transactions increases.

Besides the overall performance improvement provided by the multiversion concurrency control algorithms, MV2PL remedies a problem of 2PL: for medium to large read-only transaction sizes, the response time of update transactions degrades quickly as the read-only transaction size increases. Similarly, MVSV and MVTSO alleviate a problem shared by OP and TSO: as read-only transaction sizes increase, the large read-only transactions quickly begin to starve because of updates made by the update transactions in the workload. As a result, all three multiversion algorithms give more even performance across both kinds of transactions.

Other research examined the CPU, I/O, and storage costs resulting from the use of multiple versions. Multiple versions incur additional disk accesses for reading old data from disk. The storage overhead of maintaining old versions is not very large under most circumstances. However, it is important for version maintenance to be efficient, as otherwise the maintenance cost could outweigh the concurrency benefits. Read-only transactions incur no additional concurrency control costs in MV2PL and MVSV, but read-only transactions in MVTSO and update transactions in all three algorithms incur costs for setting locks, checking timestamps, or performing validation tests, depending on the algorithm.

3.4 Multiversion Concurrency Control in Distributed and Partially Replicated Environment

The above research results use a relatively simplified simulation model. They do not

consider data distribution. Communication delay is simplified by combining together

CPU processing time, communication delays and I/O processing time for each

transaction. However, in a distributed database system, the message overhead, data

distribution and transaction type and size are some of the most important parameters that

have significant effect on performance.

Some research has investigated the behavior of multiversion concurrency control algorithms in partitioned and partially replicated databases. A read is scheduled on only one copy of a particular version. A write, on the other hand, creates new versions of all copies of the data item.

When a transaction enters the system, it is divided into subtransactions, each of which is sent to the relevant node. All requests of a subtransaction are satisfied at one node, and if a subtransaction fails, the entire transaction is rolled back. The system implements a read-one-write-all policy. The model also uses the two-phase commit protocol. The simulation results show that the effect of message overhead declines as the MPL (multiprogramming level) increases.
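
The read-one-write-all policy over versioned copies can be sketched as follows. The class `ReplicatedItem`, the node names and the `reachable` argument are hypothetical, and the two-phase commit exchange is reduced to a single all-or-nothing check.

```python
class ReplicatedItem:
    def __init__(self, nodes, initial):
        # One version list per copy; every copy starts with the same initial version.
        self.copies = {node: [initial] for node in nodes}

    def read(self, node, version=-1):
        """Read one copy only; by default the newest version stored at that node."""
        return self.copies[node][version]

    def write(self, value, reachable):
        """Create a new version at every copy, or do nothing if a copy is unreachable."""
        if not set(self.copies) <= set(reachable):
            return False                  # the writing transaction is rolled back
        for versions in self.copies.values():
            versions.append(value)
        return True
```

Reads stay cheap because any single copy suffices, while every write pays the cost of contacting all copies.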

This research appears to be preliminary. It offers little in-depth discussion of the interaction between multiple versions and data distribution and replication. It is also not clear why the simulation model was designed the way it was for the purpose it is meant to serve.

3.5 Version Control

Note that each proposed multiversion concurrency control algorithm uses a different approach to integrate multiple versions of data with the desired concurrency control protocol. The version control components are very closely tied to the concurrency control units. In contrast, protocols for replicated data are clearly divided into two units: the concurrency control component and the replication control component. A similar division between version control and concurrency control would allow modular development of new protocols and simplify the task of proving correctness. It should also make it easier to extend these protocols to a distributed environment.

Version control mechanisms have been proposed that can be integrated with any conflict-based concurrency control protocol. One such modular version control mechanism integrates with an abstract concurrency control component. The basic assumptions about the system are as follows. First, transactions are classified into read-only and read-write (update) transactions, with update as the default. Second, the execution of update transactions is assumed to be synchronized by a concurrency control protocol that guarantees some serial order. A read-write transaction T is assigned a transaction number tn(T), which is unique and corresponds to the serial order. (It can be proved that conflict-based concurrency control protocols can be modified to assign such numbers to transactions.)

The most interesting feature of this mechanism is that here read-only transactions are

independent of the underlying concurrency control protocol. These transactions do not

interact with the concurrency control module at all, and make only one call to the version

control module in the beginning. Afterwards, their existence remains transparent to both

the concurrency control and version control modules. Therefore, there is almost

negligible overhead associated with read-only transactions in this scheme.

Unlike multiversion timestamp ordering, the version control mechanism guarantees that a read-only transaction cannot delay or abort read-write transactions. Execution of a read-only transaction is relatively simple compared with that in multiversion two-phase locking. This mechanism is also easy to integrate with garbage collection of old versions. In this scheme, the concurrency control, version control and garbage collection units are relatively independent of each other. The garbage collection scheme does not interact with the read-write transactions, and the concurrency control component does not interact with the read-only transactions.

However, the version control mechanism achieves these advantages at some cost in system performance. Several techniques have been proposed to address this problem.

3.6 Interaction with Object Oriented Paradigm

Multiversion Object Oriented Databases (MOODB) support all traditional database functions, such as concurrency control, recovery and user authorization, as well as the object-oriented paradigm, including object encapsulation and identification, class inheritance, and object version derivation. An operation on an inheritance subtree may be a schema change or a query. Examples of schema changes include adding or deleting an attribute or a method to/from a particular class, changing the domain of an attribute, or changing the inheritance hierarchy. In other words, a schema change works at the meta-information level.

In a multiversion object-oriented database, once a class instance, i.e., an object, has been created, versions of it may be created. New versions may be derived from existing ones, and these in turn may become the parents of further versions. Versions of an object are organized as a hierarchy, called the version derivation hierarchy. It captures the evolution of the object and partially orders its versions.

The problem of concurrency control in a multiversion object-oriented database cannot be solved with traditional methods. The reason is that traditional concurrency control mechanisms do not address the semantic relationships between classes, objects and object versions, i.e., class instantiation, inheritance, and version derivation. In the object-oriented paradigm, a transaction accessing a particular class virtually accesses all its instances and all its subclasses. The virtual access to an object by a transaction does not mean that the object is read or modified by it. It simply means that a concurrent update of the object by another transaction needs to be excluded.

Another problem relates to update of an object version that is not a leaf of the derivation

hierarchy. If the update has to be propagated to all the derived object versions, the access

to the whole derivation subtree by other transactions has to be excluded.

Early work in this area concerned the inheritance hierarchy but not the version derivation

hierarchy. One work applies hierarchical locking to two types of granules only: a class

and its instances. If an access to a class concerns also its subclasses, all of them have to

be locked explicitly. Another work applies hierarchical locking to the class-instance

hierarchy and the class inheritance hierarchy. Before the basic locking of a class or a

class with its subclasses, all its superclasses have to be intentionally locked. When a

transaction accesses the leaves of the inheritance hierarchy, this leads to a great number of

intentional locks being set. One can imagine that it would be hard to apply this

hierarchical locking mechanism to a version hierarchy, because the number of levels could be very

large.

Another way to deal with the problem is proposed as stamp locking, which concerns both

inheritance and version derivation hierarchies. The main idea behind it is to extend the

notion of a lock in such a way that it contains information on the position of a locked

subtree in the whole hierarchy.

A stamp lock is a pair: SL = (lm, ns), where lm denotes a lock mode and ns denotes a

node stamp. Lock modes describe the properties of stamp locks. Shared and exclusive

locks are used. A node stamp is a sequence of numbers constructed in such a way that all

its ancestors are identifiable. If a node is the n-th child of its parent whose node stamp is

p, then the child node has the stamp p.n. The root node is stamped 0.

In this scenario, two stamp locks are compatible if and only if their lock modes are

compatible or their scopes have no common nodes. To examine the relationship between

the scopes of two stamp locks, it is sufficient to compare the node stamps.
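
The compatibility test can be sketched in a few lines of Python. This is only an illustration under stated assumptions: node stamps are modeled as integer tuples, the scope of a stamp lock is taken to be the subtree rooted at the stamped node, and the names `StampLock`, `child_stamp`, `scopes_overlap` and `compatible` are hypothetical, not taken from the stamp locking proposal.

```python
from typing import NamedTuple, Tuple

Stamp = Tuple[int, ...]  # (0, 2, 1) stands for the node stamp "0.2.1"


class StampLock(NamedTuple):
    mode: str     # "S" (shared) or "X" (exclusive)
    stamp: Stamp  # stamp of the root of the locked subtree (assumed scope)


def child_stamp(parent: Stamp, n: int) -> Stamp:
    """Stamp of the n-th child of the node stamped `parent`."""
    return parent + (n,)


def scopes_overlap(a: Stamp, b: Stamp) -> bool:
    """Two subtrees share nodes exactly when one root is an ancestor of
    (or equal to) the other, i.e. when one stamp is a prefix of the other."""
    shorter, longer = sorted((a, b), key=len)
    return longer[:len(shorter)] == shorter


def modes_compatible(m1: str, m2: str) -> bool:
    """Only two shared locks are mode-compatible."""
    return m1 == "S" and m2 == "S"


def compatible(l1: StampLock, l2: StampLock) -> bool:
    """Compatible iff the modes are compatible or the scopes are disjoint."""
    return modes_compatible(l1.mode, l2.mode) or not scopes_overlap(l1.stamp, l2.stamp)
```

With a root stamped `(0,)`, exclusive locks on the siblings `(0, 1)` and `(0, 2)` are compatible, while an exclusive lock on `(0, 1)` conflicts with any lock on its descendant `(0, 1, 1)`. No tree traversal is needed; the comparison uses the stamps alone.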

So far only one hierarchy is considered. The concept, however, may be extended to more

than one hierarchy, provided that they are orthogonal to each other. To enable

simultaneous locking in many orthogonal hierarchies, however, it is necessary to extend

the notion of the stamp locking in such a way that it contains multiple node stamps for

different hierarchies. And of course the logic to determine the compatibility of stamp

locks is also extended. In particular, when both version and class hierarchies are

considered, the stamp lock definition is extended to the following tuple: SL = (lm, ns1, ns2),

where lm is a stamp lock mode, and ns1, ns2 are node stamps concerning the first and the

second hierarchy.

In some multiversion databases, updates of data items lead to a new version of the whole

database. To create a new object version, a new database version has to be created, where

the new object version appears in the context of versions of all the other objects and

respects the consistency constraints imposed. A stamp locking method could apply

explicitly to two hierarchies: the database version derivation and the inheritance

hierarchy, and implicitly to the object version derivation hierarchy. The inheritance

hierarchy, composed of classes, is orthogonal to both the database derivation hierarchy and

the object version derivation hierarchy, which are composed of database and object

versions.

Ten notions of granules are used, from the whole multiversion database to a single

version of a single object. A stamp lock set on the multiversion database is defined as a

triple (lm, vs, is), while a stamp lock set on multiversion object is defined as a pair (lm,

vs). Here vs is a version stamp of the database version subtree or object version subtree;

is is an inheritance stamp of the class of which all or particular instances are locked.

Depending on the lock mode lm, stamp locks may be grouped in four ways. All together,

there are 12 kinds of stamp locks that could be set on the multiversion databases. A

compatibility matrix is also proposed among the 12 kinds of locks.

3.7 Application in Real Time Paradigm

Unlike traditional database transactions, real-time jobs have deadlines, beyond which

their results have little value. Furthermore, many real-time jobs may have to produce

timely results as well, where more recent data are preferred over older data.

Traditional database models do not take time or timeliness into consideration.

Consequently, single-version concurrency control algorithms tend to postpone a job too

much into the future by blocking or aborting it due to conflicts, causing it to miss its

deadline. At the same time, multiversion concurrency control may push a job too much

into the past (read an old version, for example) in an effort to avoid conflicts, thus

producing obsolete results.

In order to deal with the timeliness of the transactions, real time transactions may tolerate

some limited amount of inconsistency, which in a degree sacrifices the serializability of a

database system, although transactions prefer to read data versions that are still valid. In

these situations, classic multiversion concurrency control algorithms become inadequate,

since they do not support the notion of data timeliness.

One way to deal with the tradeoff of timeliness and data consistency is the conservative

scheduling of data access. Data consistency is maintained by scheduling transactions

under SR. Data timeliness is maintained explicitly by application programs. In addition,

the system must schedule the jobs carefully to guarantee that real-time transactions will

meet their deadlines. But this conservative approach is too restrictive, since SR may not

be required in many real-time applications, where sufficiently close versions may be

more important than strict SR. In other words, queries may read inconsistent data as

long as the data versions being read are close enough to serialization in data value and

timeliness.

3.7.1 Multiversion Epsilon Serializability (ESR)

Epsilon Serializability (ESR) has been proposed to manage and control inconsistency. It

relaxes SR’s consistency constraints. In ESR, each epsilon transaction (ET) has a

specification of inconsistency (fuzziness) allowed in its execution. ESR increases

transaction system concurrency by tolerating a bounded amount of inconsistency.

Fuzziness is formally defined as the distance between a potentially inconsistent state and

a known consistent state. For example, the time fuzziness for the non-SR execution of

a query operation is defined as the distance in time between the version a query ET reads

in an MVESR execution and the version that would have been read in a serializable (SR)

execution. In ESR, each transaction has a specification of the fuzziness allowed in its

execution, called the epsilon specification ($\epsilon$-spec). When it is 0, an ET (epsilon

transaction) is reduced to a classic transaction and ESR is reduced to SR.

When ESR is applied to a database system that maintains multiple versions of data, it is

called multiversion epsilon serializability (MVESR). Multiversion divergence control

algorithms guarantee epsilon serializability of a multiversion database. MVESR is

well suited to the use of multiversion databases for real-time applications that may trade a

limited degree of data inconsistency for more data recency.

The addition of the time dimension to multiversion concurrency control bounds both

value fuzziness of the query results and the timeliness of data. Non-serializable

executions make it possible for queries to access more recent versions. As a result,

version management can be greatly simplified since only the few most recent versions need

to be maintained.

3.7.2 Multiversion Divergence Control for Time and Value Fuzziness

Two MVESR algorithms were presented in this research work: one based on timestamp ordering

multiversion concurrency control and the other based on two-phase locking multiversion

concurrency control.

In the ESR model, update transactions are serializable with each other, while queries (read-only transactions) need not be serializable with update transactions. Fuzziness is

allowed only between update ETs ($U^{ET}$) and read-only ETs ($Q^{ET}$). The $\epsilon$-spec of a

$U^{ET}$ refers to the amount of fuzziness allowed to be exported by the $U^{ET}$, while the

$\epsilon$-spec of a $Q^{ET}$ refers to the amount of fuzziness allowed to be imported by the $Q^{ET}$.

The time interval $[ts(T_i) - \epsilon\text{-spec},\ ts(T_i) + \epsilon\text{-spec}]$ defines all the legitimate versions

accessible by $T_i$, where $ts(T_i)$ denotes the timestamp of transaction $T_i$. A version can be

accessed by transaction $T_i$ if its timestamp is within $T_i$’s $\epsilon$-spec.

Accumulating time fuzziness is not a simple summation of two time

intervals, which may underestimate or overestimate the total time fuzziness. A new

operation on time intervals is proposed, called TimeUnion:

$\mathrm{TimeUnion}([\alpha_1, \beta_1], [\alpha_2, \beta_2]) = [\min(\alpha_1, \alpha_2), \max(\beta_1, \beta_2)]$. The TimeUnion operator can be used to

accurately accumulate the total time fuzziness of different intervals.
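
A minimal Python sketch of the operator is given here for illustration. Intervals are modeled as `(start, end)` pairs, and the helper name `length` is an assumption introduced only for the example.

```python
from typing import Tuple

Interval = Tuple[float, float]  # closed time interval (start, end)


def time_union(a: Interval, b: Interval) -> Interval:
    """Smallest interval covering both a and b: [min of starts, max of ends]."""
    return (min(a[0], b[0]), max(a[1], b[1]))


def length(iv: Interval) -> float:
    """Width of an interval (hypothetical helper for the example)."""
    return iv[1] - iv[0]


# Two overlapping intervals: adding their widths counts the overlap twice.
first, second = (10.0, 14.0), (12.0, 18.0)
combined = time_union(first, second)
```

Here `combined` is `(10.0, 18.0)` with width 8, whereas summing the two widths gives 10. That is the overestimate that motivates the operator.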

Meanwhile, $import\_time\_fuzziness_{Q_i}$ denotes the accumulated amount of time

fuzziness that has been imported by a query $Q_i$, and $export\_time\_fuzziness_{U_j}$ is the

accumulated time fuzziness exported to other queries by $U_j$. The objective of an

MVESR algorithm is to maintain the following invariants for all $Q_i$ and $U_j$:

$import\_time\_fuzziness_{Q_i} \le import\_time\_limit_{Q_i}$;

$export\_time\_fuzziness_{U_j} \le export\_time\_limit_{U_j}$

Several tradeoffs are involved in the choice of an appropriate version of data for a given

situation. One could assume that $\epsilon$-spec = 0 and choose the version that makes a

transaction serializable (it is a generalization of the version selection function of a

traditional multiversion concurrency control algorithm). Or one could always choose the

most recently committed version of the data item being accessed.

The third choice tries to minimize the fuzziness by approximating the classic

multiversion concurrency control. It chooses the newest version $x_j$ of data item $x$ such

that $ts(x_j) < ts(T_i)$. If such an $x_j$ is no longer available because it has been garbage

collected, then an available version $x_k$ would be chosen such that

$ts(x_j) < ts(x_k) \le ts(T_i) + \epsilon\text{-spec}$. If it is not available for any other reason, a version

$x_m$ may be chosen such that $ts(x_m) < ts(x_j)$ and $ts(T_i) - \epsilon\text{-spec} \le ts(x_m) \le ts(T_i)$.

3.7.3 Timestamp Ordering Multiversion Divergence Control Algorithm

A timestamp ordering multiversion divergence control algorithm with an emphasis on

bounding time fuzziness is proposed. In this model, the $\epsilon$-specs of other dimensions are

assumed to be infinitely large.

The extension stage of a multiversion divergence control algorithm identifies the two

conditions under which a non-SR operation may be allowed. The first is a situation where the

serializable version does not exist or cannot satisfy the recency requirement. The

second is that a late-arriving update transaction may still create a new version that is non-SR with respect to some previously arrived query transactions.

Also notice that for a query transaction, a read operation can be processed by choosing a

proper version. However, for an update transaction, a read operation is restricted to a

serializable data version in order to maintain the overall database consistency.

In the relaxation stage, time fuzziness is accumulated using the TimeUnion operation for

$Q_i$ and $U_j$. If the resulting accumulated time fuzziness does not exceed the

corresponding $\epsilon$-spec, then the non-SR operation can be allowed. Otherwise, it will be

rejected. Also, the time fuzziness incurred by a write operation $w_i(x)$ (of $U_i$) being

processed even after a $q_j(x_k)$ (from $Q_j$) with $ts(x_k) < ts(U_i) < ts(Q_j)$ has been

completed, is the time distance between $ts(U_k)$ and $ts(U_i)$, where $U_k$ is the update

transaction that has created $x_k$. This time fuzziness is accumulated into $U_i$’s and $Q_j$’s

time fuzziness using the TimeUnion operation.
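
The relaxation check can be sketched as follows. This is a simplified illustration, not the published algorithm. It assumes that accumulated time fuzziness is measured as the width of the accumulated interval, and the names `EpsilonTransaction`, `time_limit` and `try_non_sr_operation` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Interval = Tuple[float, float]


def time_union(acc: Optional[Interval], iv: Interval) -> Interval:
    """TimeUnion that also accepts an empty accumulator (None)."""
    if acc is None:
        return iv
    return (min(acc[0], iv[0]), max(acc[1], iv[1]))


@dataclass
class EpsilonTransaction:
    name: str
    time_limit: float                     # time bound from the epsilon specification
    fuzziness: Optional[Interval] = None  # accumulated time fuzziness so far


def try_non_sr_operation(query: EpsilonTransaction,
                         update: EpsilonTransaction,
                         incurred: Interval) -> bool:
    """Allow a non-SR operation only if both the importing query and the
    exporting update stay within their limits after accumulation."""
    new_q = time_union(query.fuzziness, incurred)
    new_u = time_union(update.fuzziness, incurred)
    # Assumption: fuzziness is the width of the accumulated interval.
    if new_q[1] - new_q[0] > query.time_limit:
        return False
    if new_u[1] - new_u[0] > update.time_limit:
        return False
    query.fuzziness, update.fuzziness = new_q, new_u
    return True
```

A rejected operation leaves both accumulators unchanged, so the transaction can still fall back to a serializable version.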

3.7.4 Two-phase Locking Multiversion Divergence Control Algorithm

This algorithm tries to bound both time and value fuzziness. Value fuzziness is calculated

by taking the difference between values from the non-SR version and the SR version. The

accumulation is calculated by adding together the value fuzziness caused by each non-SR

operation.

Thus, instead of the two requirements on time fuzziness mentioned in the previous

section, four requirements need to be met here:

$import\_value\_fuzziness_{Q_i} \le import\_value\_limit_{Q_i}$;

$export\_value\_fuzziness_{U_j} \le export\_value\_limit_{U_j}$;

$import\_time\_fuzziness_{Q_i} \le import\_time\_limit_{Q_i}$;

$export\_time\_fuzziness_{U_j} \le export\_time\_limit_{U_j}$

In applications where rates of change of data values are constant, the value distance

could be calculated by using the timestamps of data versions.
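
As a worked illustration of the constant-rate case, suppose a data item changes at a fixed rate $r$. The symbol $r$ and the version labels below are introduced here for the example and do not appear in the original work. The value distance between the non-SR version actually read and the SR version then follows from the timestamps alone:

```latex
\[
  \text{value\_fuzziness}(x_{\text{nonSR}}, x_{\text{SR}})
    = |r| \cdot \bigl| ts(x_{\text{nonSR}}) - ts(x_{\text{SR}}) \bigr|
\]
```

For instance, with $r = 2$ units per second and two versions whose timestamps are 3 seconds apart, the value fuzziness is $2 \cdot 3 = 6$ units.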

When an epsilon transaction successfully sets all the locks and is ready to certify, the two

corresponding conditions required for certification are extended as follows. First, any

data version returned by the version selection function may be accepted for a query read,

but the data version for an update transaction is restricted to the latest certified one.

Second, for each data item x that an update transaction wrote, all the update transactions

that have read a certified data version of x have to be certified before this update

transaction could be certified.

Each query transaction has associated with it an import_time_fuzziness and an

import_value_fuzziness, and each update transaction has an export_time_fuzziness and an

export_value_fuzziness. Each uncertified data version, when created by a write operator,

is associated with a Conflict_Q list which is initialized to null. The Conflict_Q list is used

to remember all queries that have read that uncertified data version, and the time and

value fuzziness for these queries are calculated at the time this data version is certified.

However, for applications that cannot afford to delay the certification, one can use

external information to estimate the timestamp and value of the data version that is

serializable to it, and then accumulate the fuzziness to the total time and value fuzziness

of the corresponding epsilon transaction.

3.7.5 Discussion

The multiversion divergence control algorithms were not combined with any CPU

scheduling algorithms, such as EDF or RM. More research work needs to be done to help

to understand the interaction between divergence control and transaction scheduling.

3.8 Other Notions of Versioning

There are three common kinds of explicit versioning in the literature. One is called historical

versions. Users create historical versions when they want to keep a record of histories of

data. The changes of data could also be viewed as revisions, and users may want to

examine earlier versions of the data. The only difference between a historical version and

a revised version is that the former models a logical time, but the latter models a physical

time. Finally, variables can have different values based on different assumptions or

design criteria. These different values are alternative versions.

All these three kinds of versioning can be modeled with the notion of annotations. The

advantages of doing it include higher generality, extensibility and flexibility. Versions

can be combined arbitrarily with other annotations and with other versions. A new kind

of versioning can be implemented by defining the appropriate annotation class.

One aspect of research work involves multiversioning techniques for concurrency control.

However, the relationship between concurrency control and annotation is not clear.

There, the system creates versions for the purpose of ensuring serializability; these

versions are subsequently removed when they are no longer needed. This form of

versioning is different from the three types of versioning mentioned in this section,

because the versions in a multiversion system are only visible to the system. It is unclear

whether multi-versioning can be integrated with the annotation framework.

4. Replicated Data

Data are often replicated to improve transaction performance and system availability.

Replication reduces the need for expensive remote accesses, thus enhancing performance.

A replicated database can also tolerate failures, hence providing greater availability than

a single-site database.

An important issue of replicated data management is to ensure correct data access in spite

of concurrency, failures, and update propagation. Data copies should be accessed by

different transactions in a serializable way both locally and globally. Therefore, two

conflicting transactions should access replicated data in the same serialization order at

all sites.

Common practice in the field is to use concurrency control protocols to ensure data

consistency, replication control protocols to ensure mutual consistency, and atomic

commitment protocols to ensure that changes to either none or all copies of replicated

data are made permanent. One popular combination would be Two-phase Locking (2PL)

for concurrency control, read-one-write-all (ROWA) for replication control and Two-phase Commit (2PC) for atomic commitment.

4.1 The Structure of Distributed Transactions

An example distributed database works as follows: Each transaction has a master

(coordinator) process running at the site where it originated. The coordinator, in turn, sets

up a collection of cohort processes to perform the actual processing involved in running

the transaction. Transactions access data at the sites where the data reside instead of through remote

access. There is at least one cohort at each site where data will be accessed by the

transaction. Each cohort that updates a replicated data item is assumed to have one or

more remote update processes associated with it at other sites. It communicates with its

remote update processes for both concurrency purposes and value update.

The most common commit protocol used in a distributed environment is the two-phase

commit protocol, with the coordinator process controlling the protocol. With no data

replication, the protocol works as follows: When a cohort finishes executing its portion of

a query, it sends an execution complete message to the coordinator. When the coordinator

has received this message from every cohort, it initiates the commit protocol by sending a

prepare message to all cohorts. Assuming that a cohort wishes to commit, it responds to

this message by forcing a prepare record to its log and then sending a prepared message

back to the coordinator. After receiving prepared messages from all cohorts, the

coordinator forces a commit record to its log and sends a commit message to each

cohort. Upon receipt of this message, each cohort forces a commit record to

its log and sends a committed message back to the coordinator. Finally, once the

coordinator collects committed messages from each cohort, it records the completion of

the protocol by logging a transaction end record. If any cohort is unable to commit, it will

log an abort record and return an abort message instead of a prepared message in the first

phase, causing the coordinator to log an abort record and to send abort instead of commit

messages in the second phase of the protocol. Each aborted cohort reports back to the

coordinator once the abort procedure has been completed. When remote updates are

present, the commit protocol becomes a hierarchical two-phase commit protocol.
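
The message flow described above can be sketched as a small single-process simulation. It is an illustration only: there is no networking, failure handling, or real log forcing, and the class and method names (`Cohort`, `on_prepare`, `two_phase_commit`) are hypothetical.

```python
from typing import List


class Cohort:
    """A participant that appends log records and answers the coordinator."""

    def __init__(self, name: str, can_commit: bool = True) -> None:
        self.name = name
        self.can_commit = can_commit
        self.log: List[str] = []

    def on_prepare(self) -> str:
        # Phase 1: log a prepare (or abort) record, then vote.
        if self.can_commit:
            self.log.append("prepare")
            return "prepared"
        self.log.append("abort")
        return "abort"

    def on_commit(self) -> str:
        # Phase 2 (commit): log a commit record, then acknowledge.
        self.log.append("commit")
        return "committed"

    def on_abort(self) -> str:
        # Phase 2 (abort): a cohort that already aborted does not log twice.
        if "abort" not in self.log:
            self.log.append("abort")
        return "aborted"


def two_phase_commit(cohorts: List[Cohort], coordinator_log: List[str]) -> str:
    """Run both phases and return the global decision."""
    votes = [c.on_prepare() for c in cohorts]
    if all(v == "prepared" for v in votes):
        coordinator_log.append("commit")
        acks = [c.on_commit() for c in cohorts]
        if all(a == "committed" for a in acks):
            coordinator_log.append("end")  # transaction end record
        return "commit"
    coordinator_log.append("abort")
    for c in cohorts:
        c.on_abort()
    return "abort"
```

A single cohort voting "abort" in the first phase is enough to make the coordinator log an abort record and send abort messages to everyone in the second phase.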

4.2 Replicated Data History

A replicated database is considered to be correct if it is one-copy serializable. Here, we

have two concepts: replicated data history and one-copy history. Replicated data

histories reflect the database execution, while one-copy history is the interpretation of

transaction executions from a user’s single copy point of view. An execution of

transactions is correct if the replicated data history is equivalent to a serial one-copy

history.

A complete replicated data (RD) history $H$ over $T = \{T_0, \ldots, T_n\}$ is a partial order with

ordering relation $<$ where

1. $H = h(\bigcup_{i=0}^{n} T_i)$ for some translation function $h$;

2. for each $T_i$ and all operations $p_i$, $q_i$ in $T_i$, if $p_i <_i q_i$, then every operation in $h(p_i)$

is related by $<$ to every operation in $h(q_i)$;

3. for every $r_j[x_A]$, there is at least one $w_i[x_A] < r_j[x_A]$;

4. all pairs of conflicting operations are related by $<$, where two operations conflict if

they operate on the same copy and at least one of them is a write; and

5. if $w_i[x] <_i r_i[x]$ and $h(r_i[x]) = r_i[x_A]$, then $w_i[x_A] \in h(w_i[x])$.

An RD history $H$ over $T$ is equivalent to a 1C history $H_{1C}$ over $T$ if

1. $H$ and $H_{1C}$ have the same reads-from relationships on data items, i.e., $T_j$ reads from

$T_i$ in $H$ if and only if the same holds in $H_{1C}$; and

2. for each final write $w_i[x]$ in $H_{1C}$, $w_i[x_A]$ is a final write in $H$ for some copy $x_A$ of

data item $x$.

4.3 Replicated Concurrency Control Protocols

For the protocols listed here, we assume a read-one-copy, write-all-copies scenario.

4.3.1 Distributed Two-phase Locking

To read an item, it suffices to set a read lock on any copy of the item. To update an item,

write locks are required on all copies. Write locks are obtained as the transaction

executes, with the transaction blocking on the write request until all of the copies to be

updated have been locked. All locks are held until the transaction has committed or

aborted.

Deadlocks are possible. Global deadlock detection is done by a snooping process, which

periodically requests wait-for information from all sites and then checks for and resolves

any global deadlocks using the same victim selection criteria as for local deadlocks. The

snoop responsibility could be dedicated to a particular site or rotated among sites in a

round-robin fashion.
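
The snooping step can be sketched as follows. This is a minimal illustration: each site reports its wait-for edges as `(waiter, holder)` pairs, the snooper merges them and searches for cycles. The victim rule used here (abort the youngest transaction in the cycle) is an assumption, since the text only says that the local victim selection criteria are reused. The function names are hypothetical.

```python
from typing import Dict, Iterable, List, Optional, Set, Tuple

Edge = Tuple[str, str]  # (waiting transaction, transaction holding the lock)


def find_cycle(edges: Iterable[Edge]) -> Optional[List[str]]:
    """Return the transactions on one cycle of the wait-for graph, or None."""
    graph: Dict[str, Set[str]] = {}
    for waiter, holder in edges:
        graph.setdefault(waiter, set()).add(holder)
        graph.setdefault(holder, set())
    state: Dict[str, int] = {}  # absent = unvisited, 1 = on path, 2 = finished
    path: List[str] = []

    def dfs(node: str) -> Optional[List[str]]:
        state[node] = 1
        path.append(node)
        for nxt in sorted(graph[node]):
            if state.get(nxt) == 1:           # back edge closes a cycle
                return path[path.index(nxt):]
            if nxt not in state:
                cycle = dfs(nxt)
                if cycle:
                    return cycle
        path.pop()
        state[node] = 2
        return None

    for node in sorted(graph):
        if node not in state:
            cycle = dfs(node)
            if cycle:
                return cycle
    return None


def snoop(site_reports: List[List[Edge]], start_ts: Dict[str, int]) -> List[str]:
    """Merge the per-site wait-for edges and pick victims until no cycle remains."""
    edges = {edge for report in site_reports for edge in report}
    victims: List[str] = []
    while True:
        cycle = find_cycle(edges)
        if cycle is None:
            return victims
        victim = max(cycle, key=lambda t: start_ts[t])  # assumed rule: youngest
        victims.append(victim)
        edges = {(w, h) for (w, h) in edges if victim not in (w, h)}
```

A deadlock such as T1 waiting for T2 at one site, T2 for T3 at a second, and T3 for T1 at a third is invisible to every local detector, because no single site sees a cycle. It appears only in the merged graph.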

A variation of the above algorithm is called wound-wait (WW). The difference between WW

and 2PL is the way they deal with deadlock. Rather than maintaining wait-for information

and checking for local and global deadlocks, deadlocks are prevented by the use of

timestamps. Every transaction in the system has a start-up timestamp, and younger

transactions are prevented from making older transactions wait. Whenever there is a data

conflict, the younger transaction is aborted unless it is in the second phase of its commit

protocol.
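
The decision rule can be written as a single function. The sketch follows the usual textbook formulation of wound-wait, in which a younger requester simply waits for an older lock holder. That detail is not spelled out in the text above and is labeled as an assumption in the code. The function name and return values are hypothetical.

```python
def resolve_conflict(requester_ts: int, holder_ts: int,
                     holder_in_second_phase: bool) -> str:
    """Decide what happens when `requester` conflicts with the lock `holder`.

    A smaller start-up timestamp means an older transaction.
    Returns "wound" (abort the holder) or "wait" (block the requester).
    """
    requester_is_older = requester_ts < holder_ts
    if requester_is_older:
        # An older transaction never waits for a younger one, unless the
        # younger one is already in the second phase of its commit protocol.
        if holder_in_second_phase:
            return "wait"
        return "wound"
    # Assumption (standard wound-wait): a younger requester waits for the
    # older holder.
    return "wait"
```

Because waits only ever go from younger to older transactions, or to a transaction that is about to finish, no cycle of waiting transactions can form.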

There is also a notion of Optimistic Two-phase Locking (O2PL). The difference between

O2PL and normal 2PL is that O2PL handles replicated data as OP does. When a cohort

updates a data item, it immediately requests a write lock on the local copy of the item, but

it defers requesting of the write locks on the remote copies until the beginning of the

commit phase. So the idea behind O2PL is to set locks immediately within the local site, where

doing so is cheap, while taking a more optimistic, less message-intensive approach across site boundaries. Since O2PL waits until the end of the transaction

to obtain write locks on remote copies, both blocking and deadlocks happen late.

Nonetheless, if deadlocks occur, some transactions have to be aborted eventually.

4.3.2 Distributed Timestamp Ordering

The basic notion behind distributed timestamp ordering is the same as that for centralized

basic timestamp ordering. However, with replicated data, a read request is processed

using the local copy of the requested data item. A write request must be approved at all

copies before the transaction proceeds. Writers keep their updates in a private workspace

until commit time. At the same time, granted writes for a given data item are queued in

timestamp order, without blocking the writers, until they are ready to commit, at which

point their writes are dequeued and processed in order. Accepted read requests for such a

pending write must be queued as well, thus blocking readers, as readers cannot be

permitted to proceed until the update becomes visible. Effectively, a write request locks

out any subsequent read requests with later timestamps until the corresponding write

actually takes place. This happens when the writing transaction is ready to commit and its writes are

dequeued and processed.

4.3.3 Distributed Optimistic Concurrency Control

Some variations of distributed optimistic concurrency control borrow the idea of

timestamps to exchange certification information during the commit phase. Each data item has a

read timestamp and a write timestamp. Transactions may read and update data items

freely. Updates are stored temporarily into a local workspace until commit time. Read

operations need to remember the write timestamp of the versions they read. When all of

the transaction’s cohorts have completed their work and reported to the coordinator, the

transaction is assigned a unique timestamp. This timestamp is sent to each cohort, and is

used to certify locally all of the cohort’s reads and writes. If the version that the cohort

read is still the current version, the read request is locally certified. A write request will be

certified if no later reads have been certified. A transaction will be certified globally only

if local certifications are passed for all cohorts.
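
The two local certification tests can be sketched as follows. This is a simplified illustration: each cohort is modeled as a dictionary of items at one site, writes are installed at certification time rather than in a separate commit step, and the names (`Item`, `certify_cohort`, `certify_transaction`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Item:
    read_ts: int = 0   # timestamp of the latest certified read
    write_ts: int = 0  # timestamp of the current version


Store = Dict[str, Item]
# One cohort's work: (site store, {item: write_ts seen when read}, items written)
CohortWork = Tuple[Store, Dict[str, int], List[str]]


def certify_cohort(store: Store, reads: Dict[str, int],
                   writes: List[str], ts: int) -> bool:
    """Local certification of one cohort's reads and writes."""
    for name, seen_write_ts in reads.items():
        if store[name].write_ts != seen_write_ts:  # version read is no longer current
            return False
    for name in writes:
        if store[name].read_ts > ts:               # a later read was already certified
            return False
    return True


def certify_transaction(cohorts: List[CohortWork], ts: int) -> bool:
    """Global certification: every cohort must pass its local check."""
    if not all(certify_cohort(s, r, w, ts) for s, r, w in cohorts):
        return False
    # Simplification: install the effects immediately after certification.
    for store, reads, writes in cohorts:
        for name in reads:
            store[name].read_ts = max(store[name].read_ts, ts)
        for name in writes:
            store[name].write_ts = ts
    return True
```

A transaction that fails at any single cohort changes nothing at the other sites, which corresponds to the all-or-nothing outcome of the commit protocol.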

In the replicated situation, remote updaters are considered in certification. Updaters must

locally certify the set of writes they received at commit time, and the necessary

communication can be accomplished by passing information in the message of the

commit protocol.

4.3.4 Quorum Based Concurrency Control Algorithms

When copies of the same data reside on several computers, the system becomes more

reliable and available. However, it becomes much harder to keep the copies

mutually consistent. Several popular methods for replicated data concurrency control are

based on the quorum consensus (QC) class of algorithms.

The common feature of the QC algorithm family is that each site is assigned a vote. To

perform a read or a write operation, a transaction must assemble a read or write quorum

of sites such that the votes of all of the sites in the quorum add up to a predefined read or

write threshold. The basic principle is that the sum of these two thresholds must exceed

the total sum of all votes, and the write threshold must be strictly larger than half of the

sum of all votes. These two conditions are known as the quorum intersection invariants.

The former ensures that a read operation and a write operation do not take place

simultaneously, while the latter prevents two simultaneous write operations on the same

object. It is important to note that the above two invariants do not enforce unique values

upon the size of the read and write quorums, or even the individual site votes. The read-one-write-all method can be viewed as a special case of the QC method with each site

assigned a vote of 1. The read quorum is 1, and the write quorum is set to the sum of all

votes. This assignment decision leads to better performance for queries at the expense of

poorer performance for updates.
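
The two quorum intersection invariants are easy to check mechanically. The following sketch uses hypothetical function names and three sites with one vote each; it is an illustration, not part of any specific QC protocol.

```python
from typing import Dict, Iterable


def valid_thresholds(votes: Dict[str, int], read_threshold: int,
                     write_threshold: int) -> bool:
    """Check both quorum intersection invariants."""
    total = sum(votes.values())
    reads_meet_writes = read_threshold + write_threshold > total
    writes_meet_writes = 2 * write_threshold > total
    return reads_meet_writes and writes_meet_writes


def has_quorum(votes: Dict[str, int], sites: Iterable[str], threshold: int) -> bool:
    """True if the votes of the given sites reach the threshold."""
    return sum(votes[s] for s in sites) >= threshold


votes = {"A": 1, "B": 1, "C": 1}
rowa_ok = valid_thresholds(votes, read_threshold=1, write_threshold=3)      # read-one-write-all
majority_ok = valid_thresholds(votes, read_threshold=2, write_threshold=2)  # majority quorums
too_weak = valid_thresholds(votes, read_threshold=1, write_threshold=2)     # 1 + 2 is not > 3
```

With three single-vote sites, read-one-write-all and majority quorums both satisfy the invariants, while a read threshold of 1 with a write threshold of 2 does not, because a read quorum could then miss the latest write.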

The basic QC algorithms are static because the votes and quorum sizes are fixed a priori.

In dynamic algorithms, votes and quorum sizes are adjustable as sites fail and recover.

Examples of dynamic algorithms include the missing writes method and the virtual partition

method. In the missing writes method, the read-one-write-all policy is used when all of

the sites in the network are up; however, if any site goes down, the size of the read

quorum is increased and that of the write quorum is decreased. After the failure is

repaired, the system reverts to the old scheme. In the virtual partition algorithm, each site

maintains a view consisting of all the sites with which it can communicate, and within

this view, the read-one-write-all method is performed.

Another dynamic algorithm is the available copies method. There, query transactions can

read from any single site, while update transactions must write to all of the sites that are

up. Each transaction must perform two additional steps called missing writes validation

and access validation. The primary copy algorithm is based on designating one copy as

primary copy and requiring each transaction to update it before committing. The update is

subsequently spooled to the other copies. If the primary copy fails, then a new primary is

selected.

Network partitioning due to site or communication link failures is addressed by some

protocols. Some algorithms ensure consistency at the price of reduced availability. They

permit at most one partition to process updates at any given time. Dynamic voting algorithms

permit updates in a partition provided it contains more than half of the up-to-date copies

of the replicated data. They are dynamic because the order in which past partitions were

created plays a role in the selection of the next distinguished partition.

The basic operations of dynamic voting work as follows. When a site S receives an

update request, it sends a message to all other sites. Those sites belonging to the partition

P where S currently belongs (that is, those sites with which S can communicate at the

moment) lock their copies of the data and reply to the inquiry sent by S. From the

replies, S learns the biggest (most recent) version number VN among the copies in

partition P, and the update sites cardinality SC of the copies with that version number.

Partition P is the distinguished partition if it contains more than half of the SC

sites with version number VN. If partition P is the distinguished partition, then S commits

the update and sends a message to the other sites in P, telling them to commit the update

and unlock their copies of the data. A two-phase commit protocol is used to ensure that

transactions are atomic.
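
The distinguished-partition test can be sketched as follows. Each reachable site is modeled by its `(VN, SC)` pair. The bookkeeping after a successful update (increment VN and set SC to the number of sites that took part) follows the usual description of dynamic voting and is an assumption here, since the text above does not spell it out. Locking and the two-phase commit are omitted, and the function names are hypothetical.

```python
from typing import Dict, Tuple

# site name -> (version number VN, update sites cardinality SC)
Partition = Dict[str, Tuple[int, int]]


def is_distinguished(partition: Partition) -> bool:
    """True if the partition holds more than half of the SC sites that
    carry the most recent version number VN seen in the partition."""
    vn = max(v for v, _ in partition.values())
    current = [sc for v, sc in partition.values() if v == vn]
    sc = current[0]  # copies with the same VN share the same SC
    return 2 * len(current) > sc


def commit_update(partition: Partition) -> bool:
    """Apply an update in the partition if it is distinguished."""
    if not is_distinguished(partition):
        return False
    vn = max(v for v, _ in partition.values())
    # Assumed bookkeeping: new version everywhere in the partition, and SC
    # becomes the number of sites that participated in this update.
    new_state = (vn + 1, len(partition))
    for site in partition:
        partition[site] = new_state
    return True
```

Starting from five sites at `(1, 5)`, a partition of three sites may update and moves to `(2, 3)`. If that group later splits, two of its members still form a distinguished partition (2 out of 3), whereas the two sites left at `(1, 5)` never do. This shows how the history of past partitions determines the next distinguished one.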

The vote assignment method is important to this family of algorithms because the

assignment of votes to sites and the settings of quorum values will greatly affect the

transaction overhead and availability. In some research work, techniques are developed to

optimize the assignment of votes and quorums in a static algorithm. Three optimization

models are addressed. Their primary objective is to minimize cost during regular

operations. These three models are classified into several problems, some of which are

solved optimally and others heuristically.

Processing costs are likely to be reduced using these methods. The reason is that in QC

algorithms, a major component of the processing cost is proportional to the number of

sites participating in a quorum of a transaction. When the unit communication costs are

equal, the overall communication cost is directly related to the size of a transaction’s

quorum. Hence, minimizing communication cost is equivalent to minimizing the number

of sites that participate in the transaction. On the other hand, however, if the unit

communication costs are unequal, the relationship between the number of sites and the

total communication cost is more complex, depending on other factors such as the

variance in the unit costs.

4.4 Experiments

In a distributed environment, besides throughput as an important metric for

evaluation, the number of messages sent is also worth consideration. Some work

focuses on the update cost for distributed concurrency control. Various

system loads and different levels of data replication were chosen to test the performance of

various replicated concurrency control algorithms.

Among the replicated distributed concurrency control protocols, 2PL and O2PL

provide the best performance, followed by WW (wound-wait) and TSO; OP performs

the worst. Here 2PL and O2PL perform similarly because they differ only in their

handling of replicated data. The difference in performance arises because

2PL and O2PL have the lowest restart ratios and as a result waste the smallest fraction of

the system’s resources. WW and TSO have higher restart ratios. Between these two,

however, although WW has a higher ratio of restarts to commits, it always selects a

younger transaction to abort, making its individual restarts less costly than those of TSO.

OP has the highest restart ratio among these protocols. These results indicate the

importance of restart ratios and the age of aborted transactions as performance

determinants.

When the number of copies increases, both the amount of I/O involved in updating the

database and the level of synchronization-related message traffic are increased. However,

the differences between algorithms decrease as the level of replication increases. The

reason is again restart-related. Successfully completing a transaction in the presence of

replication involves the work of updating remote copies of data. Since remote updates occur

only after a successful commit, the relative cost of a restart decreases as the number of

copies increases. However, 2PL, WW and TSO suffer a bit more as the level of

replication is increased.

One interesting result is that O2PL actually performs a little worse than 2PL because of

the way conflicts are detected and resolved in O2PL. Unlike in 2PL, it is possible in

O2PL that, for a specific data item, each copy site has an update transaction that

obtains locks locally and discovers the existence of competing update transactions at other

sites only after executing successfully at its own site; it must then either be aborted or

abort the others. Hence O2PL has a higher transaction restart ratio than the 2PL scheme.

In contrast, OP is insensitive to the number of active copies because it checks for

conflicts only at the end of transactions, so it matters little whether the conflicts occur at

one copy site or multiple copy sites; all copies are involved in the validation

process anyway. In fact, restart cost becomes less serious for OP (as well as for O2PL):

since remote copy updates are only performed after a successful commit, they are not

done when OP restarts a transaction.

As far as message cost goes, O2PL and OP require significantly fewer messages than the

other algorithms, because they delay the necessary inter-site conflict check until just before

the transactions commit. Between these two, O2PL retains its performance advantage

over OP due to its much lower reliance on restarts to resolve conflicts. If message

cost is high, OP might actually outperform the three others (2PL, TSO, WW). Among the

subset of algorithms that communicate with remote copies on a per-write basis, however,

2PL is still the best performer, followed by WW and TSO.

4.5 Increasing Concurrency in a Replicated System

Serializability is the traditional standard for correctness in a database system. For a

distributed replicated system, the notion of correctness is one-copy serializability.

However, it might not be practical in high-performance applications since the restriction

it imposes on concurrency control is too stringent.

In classical serializability theory, two operations on a data item conflict unless they are

both reads. One technique for improving concurrency utilizes the semantics of transaction

operations. The purpose is to restrict the notion of conflicts. For instance, in an

airfare ticket system, two commutative operations do not conflict, even if both update the

same data item (although whether or not two operations commute might depend on the

state of the database). More research work needs to be done to further exploit the

inherent concurrency in such a situation.
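
A minimal Python sketch can make the state dependence of commutativity concrete. The seat-reservation operations and the helper `commute` are hypothetical illustrations, and the check compares only the final database state, ignoring the values returned to the caller, which is a simplification.

```python
def reserve(seats_free, n):
    """Reserve n seats if enough are free; otherwise leave the state unchanged."""
    return seats_free - n if seats_free >= n else seats_free

def commute(op1, op2, state):
    """True if op1-then-op2 and op2-then-op1 lead to the same final state."""
    return op2(op1(state)) == op1(op2(state))

def reserve_two(seats_free):
    return reserve(seats_free, 2)

def reserve_three(seats_free):
    return reserve(seats_free, 3)

# With plenty of seats, both orders leave 5 seats free.
plenty = commute(reserve_two, reserve_three, 10)

# With only 4 seats, whichever reservation runs first blocks the other,
# so the final state depends on the order.
scarce = commute(reserve_two, reserve_three, 4)
```

Here `plenty` is true and `scarce` is false: the same pair of update operations commutes in one database state and conflicts in another.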

When serializability is relaxed, the integrity constraints describing the data may be

violated. By allowing bounded violation of the integrity constraints, however, it is

possible to increase the concurrency of transactions in a replicated environment.

In one solution, transactions are partitioned into disjoint subsets called types. Each

transaction is a sequence of atomic steps. Each type, y, is associated with a compatibility

set whose elements are the types, y’, such that the atomic steps of y and y’ may be

interleaved arbitrarily without violating consistency. Interleaved schedules are not

necessarily serializable. This idea has also been generalized in different ways to give a

specification of allowable interleaving at each transaction breakpoint with respect to

other transactions.
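
As a rough sketch of how compatibility sets might be consulted, the Python fragment below stores one set per transaction type. The type names, the table contents, and the function `may_interleave` are hypothetical; requiring each type to list the other is an assumption of this sketch, not something the surveyed work states.

```python
# Hypothetical transaction types and their compatibility sets.
COMPATIBLE = {
    "deposit": {"deposit", "audit_log"},
    "audit_log": {"deposit", "audit_log"},
    "transfer": set(),
}

def may_interleave(type_a, type_b, compatible=COMPATIBLE):
    """True if the atomic steps of the two types may be interleaved arbitrarily.

    Assumption of this sketch: each type must appear in the other's set.
    """
    return (type_b in compatible.get(type_a, set())
            and type_a in compatible.get(type_b, set()))

deposit_with_log = may_interleave("deposit", "audit_log")
deposit_with_transfer = may_interleave("deposit", "transfer")
```

A scheduler built on such a table would interleave steps freely when the check succeeds and fall back to conventional concurrency control otherwise.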

Most approaches in the literature are concerned with maintaining the integrity constraints of

the database, even though serializability is not preserved. Other work is interested

in application scenarios that have stringent performance requirements but which can

permit bounded violations of the integrity constraints. A notion of set-wise serializability

is introduced where the database is decomposed into atomic data sets, and serializability

is guaranteed within a single data set. For applications where replicas are allowed to

diverge in a controlled fashion, an up-to-date central copy is present which is used to

detect divergences in other copies and thus triggers appropriate operations. However, it is

not clear how this method could be extended to a completely distributed environment.

Another example is the notion of k-completeness introduced in the SHARD system. A total

order is imposed on transactions executed in a run. A transaction is k-complete if it sees

the results of all but at most k of the preceding transactions in the order. Because there

was no algorithm proposed to enforce a particular value of k, the extent of ignorance can

only be estimated in a probabilistic sense. Also, a transaction which has executed at one

site may be unknown to other sites even after they have executed a large number of

subsequent transactions. This is a major drawback since it implies that a particular site

might remain indefinitely unaware of an earlier transaction at another site.
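
The definition of k-completeness can be stated as a short Python check. The transaction names, the `seen` mapping, and both function names are hypothetical. The sketch only tests the property after the fact and does not enforce it, consistent with the observation that no enforcement algorithm was proposed.

```python
def ignorance(order, seen, txn):
    """Count the transactions preceding `txn` in the total order whose results it did not see."""
    preceding = order[:order.index(txn)]
    return sum(1 for t in preceding if t not in seen[txn])

def is_k_complete(order, seen, txn, k):
    """True if `txn` saw the results of all but at most k preceding transactions."""
    return ignorance(order, seen, txn) <= k

# Hypothetical run: a total order and, for each transaction, the earlier ones it saw.
order = ["T1", "T2", "T3", "T4"]
seen = {
    "T1": set(),
    "T2": {"T1"},
    "T3": {"T1"},        # T3 did not see T2
    "T4": {"T2", "T3"},  # T4 did not see T1
}

missed_by_t4 = ignorance(order, seen, "T4")
```

In this assumed run, T4 misses exactly one preceding transaction, so it is 1-complete but not 0-complete.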

Epsilon serializability is also proposed for replicated database systems. An epsilon

transaction is allowed to import and export a limited amount of inconsistency. It is not

clear, however, how the global integrity constraints of the database are affected if

transactions can both import and export inconsistency.

4.5.1 N-ignorance

N-ignorance utilizes the relaxation of correctness in order to increase concurrency. An N-ignorant transaction is a transaction that may be ignorant of the results of at most N prior

transactions. A system in which all transactions are N-ignorant can have an N+1-fold

increase in concurrency over serializable systems, at the expense of bounded violations of

its integrity constraints.

Quorum locking and gossip messages are integrated to obtain a control algorithm for an

N-ignorant system. The messages that are propagated in the system as part of the quorum

algorithm are lock request, lock grant, lock release, and lock deny. Gossip

messages are piggy-backed onto these quorum messages. They may also be transmitted

periodically from a site, independent of the quorum messages.

Gossip messages are point-to-point background messages which can be used to broadcast

information. In the database model here, a gossip message sent by site i to j may contain

updates known to i (these need not be only updates of transactions initiated at site i).

Gossip messages can ensure that a site learns of updates in a happened-before order. At

most one of the following can occur at a time t at a particular site i: the execution of a

transaction, the sending of a gossip message, or the receipt of a gossip message.

Consider a transaction T submitted at site i. If site j is in QT, then j is said to participate

in T. Once QT is established, the sites in QT cannot participate in another transaction

until the execution of T is completed. However, the number of sites in the quorum (|Q|)

need not be greater than half of the total number of sites (M/2). When T is initiated, site i

knows of all updates known to each quorum site before the quorum site sent its lock

grant response to i. When T terminates, each quorum site learns of all updates that i

knows about. Hence, a tight coupling exists among these sites. The condition for

non-quorum sites is more relaxed: site i is allowed to initiate T as long as non-quorum sites

are ignorant of a bounded number of updates that i knows about. By adjusting |Q| and the

amount of allowed difference between the knowledge states of the initiator site and

non-quorum sites, different algorithms with different values of N are developed.
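
The relaxed condition for non-quorum sites can be sketched in Python as follows. The `known_by` table (the initiator's view of which updates each site knows), the parameter `bound`, and the function `can_initiate` are all hypothetical. This is a sketch of the stated condition under those assumptions, not the timetable mechanism of the surveyed algorithms.

```python
def can_initiate(known_by, initiator, quorum, bound):
    """Check the relaxed condition for non-quorum sites.

    known_by maps each site to the set of update ids that, in the initiator's
    view, the site knows about (a hypothetical stand-in for a timetable).
    The initiator may start a transaction if every non-quorum site is ignorant
    of at most `bound` updates known to the initiator.
    """
    mine = known_by[initiator]
    for site, theirs in known_by.items():
        if site == initiator or site in quorum:
            continue  # quorum sites are synchronized through the lock messages
        if len(mine - theirs) > bound:
            return False
    return True

# Hypothetical knowledge state as seen from site "i".
known_by = {
    "i": {1, 2, 3, 4},
    "j": {1, 2, 3, 4},
    "k": {1, 2},
    "m": {1, 2, 3},
}

loose = can_initiate(known_by, "i", quorum={"j"}, bound=2)
tight = can_initiate(known_by, "i", quorum={"j"}, bound=1)
larger_quorum = can_initiate(known_by, "i", quorum={"j", "k"}, bound=1)
```

With these assumed values, site k lags by two updates, so the transaction may start when the bound is 2 but not when it is 1, unless k is moved into the quorum. This mirrors the trade-off between |Q| and the allowed knowledge difference described above.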

Two algorithms are actually introduced. Algorithm A does the timetable check first and

then locks a quorum. Algorithm B does the operations in the reverse order. In algorithm B,

replicated data at quorum sites are therefore locked while a site is waiting for a more

restrictive timetable condition to be satisfied. Hence, less concurrency is permitted.

If serializability can be relaxed, |Q| can be reduced and performance improves, both

because it takes less time to gather a quorum and because conflicting transactions can

execute concurrently.

The algorithms are fault tolerant in the presence of site crashes, message delays, and

communication link failures, provided that a standard commit protocol is used to abort

transactions interrupted by failures of quorum sites. They can tolerate up to |Q|-1

non-quorum site failures, and transactions can still be initiated and executed successfully

at the sites that have not failed.

4.5.2 Discussion of N-ignorance

This work makes two major contributions. First, the authors formalize

the violation of the integrity constraints as a function of the number, N, of conflicting

transactions that can execute concurrently. In order to compute the extent to which the

constraints can be violated, they also provide a systematic analysis of the reachable states

of the system.

Secondly, they deal with a replicated database. The emphasis is on reducing the number

of sites a site has to communicate with. They attempt to increase the autonomy of sites in

executing transactions, which results in a shorter response time.

N-ignorance has the property that in any run R, the update of a transaction T is unknown

to at most N transactions. This property is referred to as Locality of N-ignorance.

N-ignorance is most useful when many transaction types conflict. To improve

concurrency when few transaction types conflict, the algorithms are extended to permit

the use of a matrix of ignorance, so that the allowable ignorance of each transaction type

with respect to each other type can be individually specified.
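
One way to picture a matrix of ignorance is as a nested table indexed by transaction type. In the Python sketch below, the type names, the bounds in `allowed`, the function `within_matrix`, and the default bound of zero for unlisted pairs are all assumptions made for illustration.

```python
from collections import Counter

# allowed[a][b]: how many prior transactions of type b a transaction of type a
# may be ignorant of (hypothetical values).
allowed = {
    "reserve": {"reserve": 2, "cancel": 5},
    "cancel": {"reserve": 5, "cancel": 5},
}

def within_matrix(txn_type, missed_types, allowed):
    """True if the per-type ignorance of a new transaction stays within the matrix.

    missed_types lists the types of the prior transactions whose results the
    new transaction has not seen. Unlisted pairs default to a bound of 0.
    """
    missed = Counter(missed_types)
    row = allowed.get(txn_type, {})
    return all(count <= row.get(t, 0) for t, count in missed.items())

ok = within_matrix("reserve", ["reserve", "reserve", "cancel"], allowed)
too_many = within_matrix("reserve", ["reserve", "reserve", "reserve"], allowed)
```

A tight bound between conflicting types (here, two reservations) and a loose bound between types that rarely conflict lets the specification reflect which pairs actually threaten the integrity constraints.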

Several problems need further research effort. Among them, one is the question of how to

fit compensation transactions into this model. A compensating transaction is normally

used to bring a system that does not satisfy the constraints back to a good state. It is