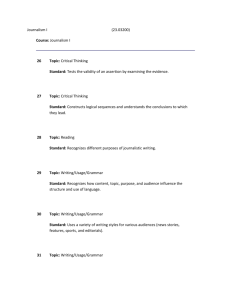

Systemic Functional Grammar
