david makinson


CONDITIONAL STATEMENTS AND DIRECTIVES

DAVID MAKINSON

OUTLINE

Conditional assertions are used in many different ways in ordinary language, with great versatility. Corresponding to their different jobs, they have different logical behaviour. For this reason, there is not just one logic of conditionals, but many. In this brief overview, we describe some of the main kinds of conditional to be found in common discourse, and how logicians have sought to model them.

In the first part, we recall the pure and simple notion of the truth-functional (alias material) conditional and some of its more complex elaborations. We also explain the subtle distinction between conditional probability and the probability of a conditional.

In the second part, we explain the difference between a conditional proposition and a conditional directive. The logical analysis of directives has lagged behind that of propositions, and we outline the recently developed concept of an input/output operation for that purpose.

1. CONDITIONAL PROPOSITIONS

1.1. What is a Conditional Proposition?

Suppose that in your house the telephone and the internet are both accessed by the same line, but the telephone is in the living room on the ground floor, and the computer is upstairs. You explain to a guest:

If the telephone is in use then the computer cannot access the internet.

This is an example of a conditional proposition. Verbally, it is of the form if…then…, which is perhaps the most basic and common form for conditionals in English, although, as we will see, there are many others. We make such conditional propositions in daily life, as well as in mathematics and the sciences.

1.2. What is the Truth-functional Conditional?

The simplest of all models for stand-alone conditionals is the truth-functional one, also often known as material implication. Its very simplicity, so much less subtle than ordinary language, hid it from view for a long time. The idea of a truth-table dates from the beginning of the twentieth century, two thousand years after Aristotle first began codifying the discipline called logic. After the event, it was realized that in antiquity certain Stoic philosopher-logicians had grasped the concept of material implication although, as far as anybody knows, they did not use the graphic device of a table. But their ideas never became part of mainstream thinking in logic, which until the late nineteenth century was dominated by the Aristotelian tradition.

To make a truth-table, whether for conditionals or for other particles such as ‘and’, ‘or’ and ‘not’, we need a simplifying assumption. We need to assume that the assertions we are dealing with are always either true or false, but never both; as it is usually put, that they are two-valued. It is also assumed that if a proposition appears more than once in the context under study, its truth-value is the same in each instance. The truth-values are written as T, F or, more commonly, 1, 0.

Certain ways of forming complex propositions out of simpler ones are called truth-functional, in the sense that the truth-value of the complex item is a function (in the usual mathematical sense) of the truth-values of its components. In the case of the material conditional, this may be expressed by the following table, where the right-hand column lists the values of a function of two variables, whose arguments are listed in the left-hand columns.

| $p$ | $q$ | $p \to q$ |
|:---:|:---:|:---:|
| 1 | 1 | 1 |
| 1 | 0 | 0 |
| 0 | 1 | 1 |
| 0 | 0 | 1 |

In other words: when p is true and q is false, the material conditional $p \to q$ is false, but in the other three cases $p \to q$ is true. As simple as that.
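For readers who want to experiment, here is a minimal Python sketch that encodes the table above as a function, with 1 and 0 standing for truth and falsehood. Python is our own choice, since the chapter itself uses no code, and the name `implies` is our own.

```python
def implies(p: int, q: int) -> int:
    """Material conditional: 0 (false) only when p is 1 and q is 0."""
    return int((not p) or q)

# Print the rows in the same order as the table above.
for p, q in [(1, 1), (1, 0), (0, 1), (0, 0)]:
    print(p, q, implies(p, q))
```

The printed rows reproduce the table: `1 1 1`, `1 0 0`, `0 1 1`, `0 0 1`.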

1.3. Some Odd Properties of the Material Conditional


Most of the properties of the truth-functional conditional are very natural. For example, it is reflexive (the conditional proposition $p \to p$ is always true, for any proposition p) and also transitive ($p \to r$ is true whenever $p \to q$ and $q \to r$ are). But there are others, reflecting the simple definition, that are less natural. Among them are the following, often known as the paradoxes of material implication:

Any conditional $p \to q$ with a false antecedent p is true, no matter what the consequent q is, and no matter whether there is any kind of link between the two. For example, the proposition ‘If Sydney is the capital of Australia then Shakespeare wrote Hamlet’ is true, simply because of the falsehood of its antecedent (the capital is in fact Canberra). We could replace the consequent by any other proposition, even its own negation, and the material conditional would remain true.

Any conditional $p \to q$ with a true consequent q is true, no matter what the antecedent p is, and whether or not there is any kind of link between the two. For example, the proposition ‘If the average temperature of the earth’s atmosphere is rising then wombats are marsupials’ is true, simply because of the truth of its consequent. We could replace the antecedent by any other proposition, even its own negation, without affecting the truth of the entire material conditional.

Given any two propositions p and q whatsoever, either the conditional $p \to q$ or its converse $q \to p$ is true. For example, either it is true that if my car is in the garage then your computer is turned on, or conversely it is true that if your computer is turned on then my car is in the garage, when these propositions are understood truth-functionally.
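All of the properties just listed, both the natural ones and the paradoxes, can be confirmed by brute force over every assignment of truth-values. The sketch below is our own illustration in Python, and its variable names are hypothetical.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q

VALUES = (True, False)

# Reflexivity: p -> p holds under every assignment.
reflexive = all(implies(p, p) for p in VALUES)
# Transitivity: whenever p -> q and q -> r are true, so is p -> r.
transitive = all(implies(p, r) for p, q, r in product(VALUES, repeat=3)
                 if implies(p, q) and implies(q, r))
# First paradox: a false antecedent makes the conditional true, whatever q is.
false_antecedent = all(implies(False, q) for q in VALUES)
# Second paradox: a true consequent makes the conditional true, whatever p is.
true_consequent = all(implies(p, True) for p in VALUES)
# Third paradox: for any p and q, either p -> q or its converse q -> p is true.
either_direction = all(implies(p, q) or implies(q, p)
                       for p, q in product(VALUES, repeat=2))

print(reflexive, transitive, false_antecedent, true_consequent, either_direction)
# True True True True True
```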

Of the entries in the right-hand column of the truth-table for $\to$, the one in the second row appears to be incontestable. But the first may be a little suspicious, while the entries in the third and fourth rows can appear quite arbitrary. Now, as we will see in the following sections, it cannot be pretended that the truth-functional conditional captures all the subtleties of content of the conditionals of everyday discourse. Nevertheless, the table has its rationale and a certain inevitability. A story told by Dov Gabbay illustrates this.

A shop on the high street is selling electronic goods and, to promote sales, offers a free printer to anyone who buys more than £200 worth in a single purchase. The manager puts a sign in the window: ‘If you buy more than £200 in electronic goods here in a single purchase, we give you a free printer’. You are on the fraud squad, and you suspect this shop manager of making false claims. You try to nail him by sending inspectors disguised as little old ladies, teenage punks, etc., to make purchases and ask for the free printer. The first inspector buys for £250, and is given the printer. So far, no grounds for charging the manager. The second inspector buys for only £150, asks for the free printer, and is refused. Still no grounds for a charge. The third inspector buys for £190, asks for a free printer and, because the manager likes the colour of her eyes, is given one. Still no grounds for a charge of fraud. There is only one way of showing that the shop-window conditional is false: getting an instance where the customer buys for more than £200 but is not offered the printer.

What is the moral of this story? If we want our connective to be truth-functional, in other words to be determined by some truth-table, then there is only one table that can do the job acceptably: the one that we have chosen.
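The story can be replayed mechanically. In the sketch below, the first three purchases come from the story. The fourth purchase (£300, no printer) is a hypothetical case of the only kind that would falsify the sign. The names `fraud_shown` and `implies` are our own.

```python
def implies(p: bool, q: bool) -> bool:
    return (not p) or q

def fraud_shown(amount: int, got_printer: bool) -> bool:
    """Grounds for a charge: a purchase of more than £200 with no free printer."""
    return amount > 200 and not got_printer

# The three inspectors from the story, plus a hypothetical fourth case that would nail the manager.
purchases = [(250, True), (150, False), (190, True), (300, False)]

for amount, got_printer in purchases:
    antecedent = amount > 200
    sign_true = not fraud_shown(amount, got_printer)
    # In every case the verdict on the sign coincides with the material conditional.
    assert sign_true == implies(antecedent, got_printer)
    print(int(antecedent), int(got_printer), int(sign_true))
```

The four purchases cover the four rows of the truth-table, printing `1 1 1`, `0 0 1`, `0 1 1` and `1 0 0`. Only the last row comes out false.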

This said, it would be very misleading to say that the truth-table gives us a full analysis of the conditional propositions of everyday language, for they are normally used to convey much more information than is given in the table. In fact, it is fair to say that the truth-functional conditional almost never occurs in daily language in its pure form. We now look at some of the ways in which it is elaborated.

1.4. Implicit Generalization

You tell a student: ‘if a relation is acyclic then it is irreflexive’. What kind of conditional is this? In effect, you are implicitly making a universal quantification. You are saying that for every relation r, if r is acyclic, then it is irreflexive. There is an implicit claim of generality.

For those who have already seen the notation of quantifiers in logic, the statement says that $\forall r\,(A(r) \to I(r))$, where $\to$ is material implication, $\forall$ is the universal quantifier, and the letters A, I stand for the corresponding predicates, with A(r) for ‘r is acyclic’ and I(r) for ‘r is irreflexive’. The truth-functional connective is present, but it is not working alone.

To show that this proposition is false, you would have to find at least one relation that is acyclic but not irreflexive, i.e. that satisfies the antecedent A(r) but falsifies the consequent I(r); briefly, one that gives the combination (1,0) for antecedent and consequent. In fact, in this example, the combinations (1,1), (0,1), (0,0) all arise for suitable relations r, but that does not affect the truth of the conditional. The combination (1,0) does not exist for any relation r, and that is enough to count the conditional as true.
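The claim about the four combinations can be checked exhaustively on a small universe. The sketch below rests on two assumptions of our own. First, it looks only at the 512 binary relations on the three-element set {0, 1, 2}. Second, it counts a relation as acyclic when no element reaches itself in its transitive closure.

```python
from itertools import product

# Hypothetical finite universe: all binary relations on the three-element set {0, 1, 2}.
ELEMENTS = range(3)
PAIRS = [(u, v) for u in ELEMENTS for v in ELEMENTS]

def transitive_closure(rel):
    closure = set(rel)
    while True:
        new = {(u, w) for (u, v1) in closure for (v2, w) in closure if v1 == v2}
        if new <= closure:
            return closure
        closure |= new

def is_acyclic(rel):
    """No element reaches itself by following the relation one or more times."""
    closure = transitive_closure(rel)
    return all((u, u) not in closure for u in ELEMENTS)

def is_irreflexive(rel):
    return all((u, u) not in rel for u in ELEMENTS)

def all_relations():
    for bits in product([False, True], repeat=len(PAIRS)):
        yield frozenset(pair for pair, keep in zip(PAIRS, bits) if keep)

# Which (antecedent, consequent) combinations (A(r), I(r)) actually occur?
combos = {(int(is_acyclic(r)), int(is_irreflexive(r))) for r in all_relations()}
print(sorted(combos))  # [(0, 0), (0, 1), (1, 1)]: the falsifying (1, 0) never occurs
```

Because the combination (1, 0) never appears, $\forall r\,(A(r) \to I(r))$ holds on this universe. Witnesses for the other combinations are the empty relation for (1, 1), the relation {(0, 1), (1, 0)} for (0, 1), and {(0, 0)} for (0, 0).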

We can generalize on the example. In pure mathematics, the if…then… construction is typically used as a universally quantified material conditional, with the quantification often left implicit. The same happens in daily language. In the example from the electronics shop, the conditional in the window is implicitly generalizing, or as we say quantifying, over all customers and sales. In the telephone/internet example, we are quantifying over times or occasions, saying something like ‘whenever the telephone is in use, the computer cannot access the internet’, i.e. ‘at any time t, if the house telephone is in use at time t then the computer cannot access the internet at t’.

Again in the notation of logic, this may be written as $\forall t\,(T(t) \to A(t))$, where the letters T, A serve as predicates, i.e. T(t) means ‘the telephone is in use at time t’, and A(t) means ‘the computer cannot access the internet at time t’.

But for ordinary discourse, even this level of simplicity is exceptional. Suppose you are working on your computer without virus protection. Your friend advises: ‘if you open an attachment with a virus, it will damage your computer’. In the spirit of the previous example, we can see this as a universal quantification over times or occasions, with an embedded material implication. Thus, as a first approximation, we have: for every time t (within a certain range left implicit), if you open an attachment with a virus at time t, then your computer will be damaged, i.e. $\forall t\,(A(t) \to D(t))$.

But this representation sins by omission, for it leaves unmentioned two aspects of the advice:

Futurity. Your hard disk will not necessarily be damaged immediately, even if it is immediately infected. There may be a time lapse.

Causality. The introduction of the virus is causally responsible for the damage.

The representation also sins by commission, for it says more than we probably mean. It says always, when we may mean something a bit less. Ordinary language conditionals often have the property of:

Defeasibility. Your friend may not wish to say that such an ill-advised action will always lead to damage, but that it will do so usually, probably, under natural assumptions, or barring exceptional circumstances.

All three dimensions are pervasive in the conditionals of everyday life. Logicians have attempted to tackle all of them. We will say a few words on each.

1.5. Futurity

Success is most apparent in the case of temporal futurity and other forms of temporal cross-reference. Since the 1950s, logicians have developed a range of what are known as temporal logics. Roughly speaking, there are two main kinds of approach.

One is to remain within the framework of material implication and quantification over moments of time, but to recognize additional layers of quantificational complexity. In the example, the representation becomes something like $\forall t\,(A(t) \to \exists t'\,(t \leq t' \wedge D(t')))$, although this still omits any indication of the vague upper temporal bound on the range of the second, existential, quantifier.

Another approach is to introduce non-truth-functional connectives on propositions to do the same job. These are called temporal operators, and belong to a broad class of non-truth-functional connectives called modal operators. Writing $\Box x$ for ‘it will always be the case that x’, the representation becomes $\Box(a \to \Diamond d)$, where $\Diamond x$ abbreviates $\neg\Box\neg x$ (‘it will at some time be the case that x’), the predicates A(t), D(t) are replaced by propositions a, d, and appropriate principles are devised to govern the temporal propositional operator $\Box$. The study of such temporal logics is now a recognized and relatively stable affair.
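The two representations can be compared on finite traces. This Python sketch assumes, for simplicity, that time is a finite sequence of steps, an assumption the text does not make. It evaluates at the first step both the quantified formula ∀t (A(t) → ∃t′ (t ≤ t′ ∧ D(t′))) and the operator form □(a → ◊d), reading □ as ‘at every step from now on’ and ◊ as ‘at some step from now on’. The traces and function names are hypothetical.

```python
from typing import Sequence

# Hypothetical finite trace: opened[t] / damaged[t] say whether A(t) / D(t) holds at step t.

def quantified(opened: Sequence[bool], damaged: Sequence[bool]) -> bool:
    """First-order reading: for all t, A(t) -> there is t' >= t with D(t')."""
    n = len(opened)
    return all(not opened[t] or any(damaged[u] for u in range(t, n)) for t in range(n))

def eventually(prop: Sequence[bool], i: int) -> bool:
    """Diamond at position i: prop holds at some position j >= i."""
    return any(prop[j] for j in range(i, len(prop)))

def always(prop: Sequence[bool], i: int) -> bool:
    """Box at position i: prop holds at every position j >= i."""
    return all(prop[j] for j in range(i, len(prop)))

def modal(opened: Sequence[bool], damaged: Sequence[bool]) -> bool:
    """Operator reading: Box(a -> Diamond d), evaluated at the first position."""
    inner = [(not opened[i]) or eventually(damaged, i) for i in range(len(opened))]
    return always(inner, 0)

late_damage = ([False, True, False, False], [False, False, False, True])
early_damage = ([False, False, True], [True, False, False])
for trace in (late_damage, early_damage):
    print(quantified(*trace), modal(*trace))   # True True, then False False
```

In the second trace the damage precedes the opening, so both readings count the advice as violated there.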

1.6. Causality

The treatment of causality as an element of conditionals has not met with the same success, despite some attempts that also date back to the middle of the twentieth century. The reason for this is that, to be honest, we do not have a satisfying idea of what causality is. Part of its meaning lies in the idea of regular or probable association, and to that extent causality can be considered a form of implicit quantification. But it is difficult to accept, as the eighteenth-century philosopher David Hume did, that this is all there is to the concept, and even more difficult to specify clearly what is missing. There is, as yet, no generally accepted way of representing causality within a proposition, although there are many suggestions.


1.7. Defeasibility and Probability

The analysis of defeasibility has reached a state somewhere between those of futurity and causality: not as settled as the former, but much more advanced than the latter. It is currently a very lively area of investigation. Two general lines of attack emerge: a quantitative one using probability, and a non-quantitative one using ideas that are not so familiar to the general scientific public. The non-quantitative approach is reviewed at length in a companion chapter in this volume (‘Bridges between classical and nonmonotonic logic’), and so we will leave it aside here, making only some remarks on the quantitative approach.

The use of probability to represent uncertainty is several centuries old. For a while, in the nineteenth century, some logicians were actively involved in the enterprise; for example, Boole himself wrote extensively on logic and probability. But in the twentieth century, the two communities tended to drift apart, with little contact.

From the point of view of probability theory, the standard way of representing uncertainty in conditional contexts is via the notion of conditional probability. Given any probability distribution P on a language, and any proposition a of the language such that $P(a) \neq 0$, one defines the function $P_a$ by the equality $P_a(x) = P(a \wedge x)/P(a)$, where the slash is ordinary division. In the limiting case that $P(a) = 0$, $P_a$ is left undefined. When defined, $P_a$ is itself a probability distribution on the language, called the conditionalization of P on a.
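Conditionalization is easy to compute on a finite set of possible worlds. The following Python sketch makes several assumptions of its own. The language is a toy one with two elementary letters x, y, and the distribution P is an arbitrary hypothetical one. Propositions are modelled as functions from worlds to truth-values, and exact fractions avoid rounding.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy language: two letters x, y; a world is a pair of truth-values (x, y).
WORLDS = list(product([True, False], repeat=2))

def prob(P, prop):
    """P(prop): the total weight of the worlds at which prop is true."""
    return sum((P[w] for w in WORLDS if prop(w)), Fraction(0))

def conditionalize(P, a):
    """P_a, defined by P_a(w) = P(w)/P(a) on worlds where a holds and 0 elsewhere."""
    pa = prob(P, a)
    if pa == 0:
        raise ValueError("P_a is undefined when P(a) = 0")
    return {w: (P[w] / pa if a(w) else Fraction(0)) for w in WORLDS}

def is_x(w): return w[0]
def is_y(w): return w[1]
def x_and_y(w): return w[0] and w[1]

P = {(True, True): Fraction(1, 8), (True, False): Fraction(3, 8),
     (False, True): Fraction(1, 4), (False, False): Fraction(1, 4)}

P_x = conditionalize(P, is_x)
print(prob(P_x, is_y))                    # 1/4, i.e. P_x(y)
print(prob(P, x_and_y) / prob(P, is_x))   # 1/4 again, i.e. P(x ∧ y)/P(x)
print(sum(P_x.values()))                  # 1: P_x is again a probability distribution
```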

This concept may then be used to give probabilistic truth-conditions for defeasible conditionals. In particular, the notion of a threshold probabilistic conditional may be defined as follows. Let P be any probability distribution on the language under consideration, and let t be a fixed real in the interval [0,1]. Suppose a, x are propositions of the language on which the probability distribution is defined, and $P(a) \neq 0$. We say that a probabilistically implies x (under the distribution P, modulo the threshold t), and we write $a \mathrel{|\!\sim}_{P,t} x$, iff $P_a(x) \geq t$, i.e. iff $P(a \wedge x)/P(a) \geq t$.

Thus there is not one probabilistic conditional relation $\mathrel{|\!\sim}_{P,t}$ but a family of them, one for each choice of a probability distribution P and threshold value t. Each such relation is defined only for premises a such that $P(a) \neq 0$, for it is only then that the ratio $P(a \wedge x)/P(a)$ is well-defined in conventional arithmetic. There is some discussion whether it is useful to extend the definition with a special clause to cover the limiting case that $P(a) = 0$, but that is a question which we need not consider.
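A threshold relation can be sketched as a single function. The toy assumptions here are our own: two letters x, y, a hypothetical distribution P, and exact fractions. Under them, the verdict for one and the same pair of propositions changes with the threshold t. The name `prob_implies` is our own.

```python
from fractions import Fraction
from itertools import product

WORLDS = list(product([True, False], repeat=2))  # hypothetical worlds: truth-values of (x, y)

def prob(P, prop):
    return sum((P[w] for w in WORLDS if prop(w)), Fraction(0))

def prob_implies(P, t, a, x):
    """a |~_{P,t} x iff P(a ∧ x)/P(a) >= t; only defined when P(a) != 0."""
    pa = prob(P, a)
    if pa == 0:
        raise ValueError("a |~_{P,t} x is undefined when P(a) = 0")
    return prob(P, lambda w: a(w) and x(w)) / pa >= t

def is_x(w): return w[0]
def is_not_y(w): return not w[1]

P = {(True, True): Fraction(1, 8), (True, False): Fraction(3, 8),
     (False, True): Fraction(1, 4), (False, False): Fraction(1, 4)}

# Here P_x(not-y) = (3/8)/(1/2) = 3/4, so the verdict depends on the threshold chosen.
print(prob_implies(P, Fraction(7, 10), is_x, is_not_y))  # True
print(prob_implies(P, Fraction(4, 5), is_x, is_not_y))   # False
```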

From a logician’s point of view, this is a rather badly behaved kind of relation. It is in general nonmonotonic, in the sense that we may have $a \mathrel{|\!\sim}_{P,t} x$ but not $a \wedge b \mathrel{|\!\sim}_{P,t} x$. This is only to be expected, given that we are trying to represent a notion of uncertainty; but worse is the fact that it is badly behaved with respect to conjunction of conclusions. That is, we may have $a \mathrel{|\!\sim}_{P,t} x$ and $a \mathrel{|\!\sim}_{P,t} y$ but not $a \mathrel{|\!\sim}_{P,t} x \wedge y$. Essentially for this reason, the relation also fails a number of other properties, notably one known as cumulative transitivity alias cut. But from a practical point of view, it is a relation that has been used to represent uncertain conditionality in a number of applied domains.
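Both failures can be exhibited with a very small model. The sketch makes some hypothetical choices of its own. It uses a uniform distribution over the four valuations of two letters x, y and the threshold t = 1/2. The premise a is the tautology, and the extra premise b is ¬(x ∧ y).

```python
from fractions import Fraction
from itertools import product

WORLDS = list(product([True, False], repeat=2))   # hypothetical worlds: truth-values of (x, y)
UNIFORM = {w: Fraction(1, 4) for w in WORLDS}     # each valuation equally likely
T = Fraction(1, 2)                                # hypothetical threshold

def prob(P, prop):
    return sum((P[w] for w in WORLDS if prop(w)), Fraction(0))

def prob_implies(P, t, a, x):
    pa = prob(P, a)
    if pa == 0:
        raise ValueError("undefined when P(a) = 0")
    return prob(P, lambda w: a(w) and x(w)) / pa >= t

def top(w): return True                                          # premise a: a tautology
def is_x(w): return w[0]
def is_y(w): return w[1]
def x_and_y(w): return w[0] and w[1]
def top_and_not_both(w): return top(w) and not (w[0] and w[1])  # a ∧ b with b = ¬(x ∧ y)

# Conjunction of conclusions fails: P(x) = P(y) = 1/2 but P(x ∧ y) = 1/4.
print(prob_implies(UNIFORM, T, top, is_x),
      prob_implies(UNIFORM, T, top, is_y),
      prob_implies(UNIFORM, T, top, x_and_y))            # True True False
# Monotony fails: P(x | ¬(x ∧ y)) = (1/4)/(3/4) = 1/3 < 1/2.
print(prob_implies(UNIFORM, T, top_and_not_both, is_x))  # False
```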

1.8. Conditional Probability versus Probability of a Conditional

Note that the conditional assertion $a \mathrel{|\!\sim}_{P,t} x$ tells us that the conditional probability $P_a(x)$ is suitably high, but it does not tell us that the probability of any conditional proposition is high. Underlying this is the fact that while $P_a(x)$ is the conditional probability of x given a, it is not the probability of any conditional proposition. This is a subtle conceptual distinction, but an important one; we will attempt to explain it in this section.

To begin with, $P_a(x)$ cannot be identified with $P(a \to x)$, where $\to$ is material implication. For $a \to x$ is classically equivalent to $\neg a \vee x$, and so is highly probable whenever a is highly improbable, even when x is less probable in the presence of a than in its absence.
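A small numerical instance makes the gap visible. In the hypothetical distribution below, a has probability 1/10 and makes x unlikely, yet the material conditional is highly probable. The numbers are our own choice.

```python
from fractions import Fraction
from itertools import product

WORLDS = list(product([True, False], repeat=2))   # hypothetical worlds: truth-values of (a, x)

def prob(P, prop):
    return sum((P[w] for w in WORLDS if prop(w)), Fraction(0))

P = {(True, True): Fraction(1, 100), (True, False): Fraction(9, 100),
     (False, True): Fraction(45, 100), (False, False): Fraction(45, 100)}

def a(w): return w[0]
def x(w): return w[1]

conditional = prob(P, lambda w: a(w) and x(w)) / prob(P, a)      # P_a(x)
material = prob(P, lambda w: (not a(w)) or x(w))                 # P(a -> x) = P(¬a ∨ x)
x_given_not_a = prob(P, lambda w: (not a(w)) and x(w)) / prob(P, lambda w: not a(w))

print(conditional, material, x_given_not_a)   # 1/10 91/100 1/2
```

So $P_a(x) = 1/10$ while $P(a \to x) = 91/100$, even though x is five times more probable without a than with it.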

This suggests the question whether there is any kind of conditional connective, call it $\Rightarrow$, such that $P_a(x) = P(a \Rightarrow x)$ whenever the left-hand side is defined. For a long time it was vaguely presumed that there must be some such ‘probability conditional’ somewhere; but in a celebrated paper of 1976 David Lewis showed that this cannot be the case. The theorem is a little technical, but is worth stating explicitly (without proof), even in a general review like the present one.

Consider any propositional language $L$ with at least the usual truth-functional connectives (or, equivalently, consider its quotient structure under logical equivalence, which is a Boolean algebra). Take any class $\mathcal{P}$ of probability distributions on $L$. Suppose that $\mathcal{P}$ is closed under conditionalization, that is, whenever $P \in \mathcal{P}$ and $a \in L$ with $P(a) \neq 0$, then $P_a \in \mathcal{P}$. Suppose finally that there are $a, b, c \in L$ and $P \in \mathcal{P}$ such that a, b, c are pairwise inconsistent under classical logic, while each separately has non-zero probability under P. This is a condition that is satisfied in all but the most trivial of examples. Then, the theorem tells us, there is no function $\Rightarrow$ from $L^2$ into $L$ such that $P_x(y) = P(x \Rightarrow y)$ for all $P \in \mathcal{P}$ and all $x, y \in L$ with $P(x) \neq 0$, i.e. whenever the left-hand side is defined.

An impressive feature of this result is that it does not depend on making any apparently innocuous but ultimately questionable assumptions about properties of the conditional $\Rightarrow$. The only hypothesis made on the connective is that it is a function from $L^2$ into $L$. Indeed, an analysis of the proof shows that even this hypothesis can be weakened. Let $L_0$ be the purely Boolean part of $L$, i.e. the part built up with just the truth-functional connectives from elementary letters. Then there is no function even from $L_0^2$ into $L$ that satisfies the property that $P_x(y) = P(x \Rightarrow y)$ for all $P \in \mathcal{P}$ and all $x, y \in L_0$ with $P(x) \neq 0$. In other words, the result does not depend on iteration of the conditional connective in the language. Interestingly, however, it does depend on iteration of the operation of conditionalization in the sense of probability theory: the proof requires consideration of a conditionalization $(P_x)_y$ of certain conditionalizations $P_x$ of a probability distribution P.
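The following is a finite brute-force illustration of the theorem on one small model, not Lewis’s proof. We take three worlds, so that the cells {0}, {1}, {2} play the role of the pairwise inconsistent a, b, c. The base distribution is a hypothetical uniform one, and the class consists of all its conditionalizations, which is closed under conditionalization. For each pair (x, y) of the eight Boolean propositions, the sketch searches for a proposition z with P(z) = P_x(y) for every P in the class such that P(x) ≠ 0. If some pair has no candidate, no function ⇒ can exist on this model.

```python
from fractions import Fraction
from itertools import combinations

WORLDS = (0, 1, 2)                          # three cells, playing the roles of a, b, c
BASE = {w: Fraction(1, 3) for w in WORLDS}  # hypothetical P giving each cell non-zero probability

# The purely Boolean propositions, identified with sets of worlds: 2^3 = 8 of them.
PROPS = [frozenset(c) for r in range(len(WORLDS) + 1) for c in combinations(WORLDS, r)]

def prob(P, prop):
    return sum((P[w] for w in prop), Fraction(0))

def conditionalize(P, a):
    pa = prob(P, a)
    return {w: (P[w] / pa if w in a else Fraction(0)) for w in P}

# BASE and all its conditionalizations. The class is closed under conditionalization,
# since conditionalizing on a and then on b equals conditionalizing on a ∧ b.
CLASS = [conditionalize(BASE, s) for s in PROPS if prob(BASE, s) != 0]

def candidates(x, y):
    """Every z with P(z) == P_x(y) for all P in CLASS such that P(x) != 0."""
    relevant = [P for P in CLASS if prob(P, x) != 0]
    return [z for z in PROPS
            if all(prob(P, z) == prob(P, x & y) / prob(P, x) for P in relevant)]

failures = [(set(x), set(y)) for x in PROPS for y in PROPS if not candidates(x, y)]
print(len(failures) > 0, ({0, 1}, {0}) in failures)   # True True
```

For x = {0, 1} and y = {0}, the three single-cell distributions force z = {0, 2}. The uniform distribution then requires P(z) = 1/2, but P(z) = 2/3, so no value of x ⇒ y works.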

Lewis’ impossibility theorem does not show us that there is anything wrong with the notion of conditional probability. Nor does it show us that there is anything incorrect about classical logic. It shows that there is a difference between conditional probability on the one hand and the probability of a conditional proposition on the other, no matter what kind of conditional proposition we have in mind. There is no way in which the former concept may be reduced to the latter.

Some logicians have argued that if we are prepared to abandon the classical basis of logic, conditional probability can be identified with the probability of a suitable conditional. Specifically, if we abandon the two-valued interpretation of the Boolean connectives between propositions, pass to a suitable three-valued interpretation, and at the same time reconstruct probability theory so that probability distributions have real intervals rather than real points as values, then we can arrange matters so that the two coincide. There does not appear to be a consensus on this question: whether it can be made to work, what conceptual costs it entails, and whether it gives added value for the additional complexity. For an entry into the literature, see the “Guide to further reading”.


1.9. Counterfactual conditionals

Returning again to daily language, we cannot resist mentioning a very strange kind of conditional. We have all heard statements like ‘If I were you, I would not sign that contract’, ‘If you had been on the road only a minute earlier, you would have been involved in the accident’, or ‘If the Argentine army had succeeded in its invasion of the Falklands, the military dictatorship would have lasted much longer’.

These are called counterfactual conditionals, because it is presumed by the speaker that, as a matter of common knowledge, the antecedent condition is in fact false (I am not you, you were not in fact on the road a minute earlier, the invasion did not succeed). Grammatically, in English at least, they are often signalled by the subjunctive mood in the antecedent and the auxiliary ‘would’ in the consequent. Notoriously, they cannot be represented as material conditionals, for if they were, the falsehood of the antecedent would make them all true (which, by the way, is another counterfactual). They may sometimes be intended as assertions without exceptions, more often as defeasible ones. They are occasionally used in informal mathematical discourse, especially on the oral level, but never in formal pure mathematics.

Logicians have been working on the representation of counterfactual conditionals for several decades, and have developed some fascinating mathematical constructions to model them. To describe these constructions would take us too far from our main thread; once again we refer to the “Guide” at the end of this paper.

1.10. So Why Work with the Truth-Functional Conditional?

Given that the conditional statements of ordinary language usually say much more than is contained in the truth-functional analysis, why should we bother with it? There are several reasons. From a pragmatic point of view:

If you are doing computer science, then the truth-functional conditional will give you a great deal of mileage. It forms the basis of any language that communicates with a machine.

If you are doing pure mathematics, the truth-functional conditional will also serve your purposes perfectly well, provided that you recognize that it is usually used with an implicit universal quantification.


From a more theoretical point of view, there are even more important reasons:

The truth-functional conditional is the simplest possible kind of conditional. And in general, it is a good strategy to begin any formal modelling in the simplest way, elaborating it later if needed.

Experience has shown that when we do begin analysing any of the other kinds of conditional mentioned above (including even such a maverick one as the counterfactual), the truth-functional one turns up in one form or another, hidden inside. It is impossible even to begin an analysis of any of these more complex kinds of conditional unless you have a clear understanding of the truth-functional one.

Thus, despite its eccentricities and limitations, the material conditional should not be thrown away. It gives us the kernel of conditionality, even if not the whole fruit.

2. CONDITIONAL DIRECTIVES

2.1. The problem

So far, we have been looking at conditional propositions, where a proposition is something that can be regarded as true or false. But when we come to consider conditional directives, we are faced with a new problem.

By a conditional directive we mean a statement that tells us what to do in a given situation. It may be expressed in the imperative mood, as in “if you take the rubbish out for collection, put it in a plastic bag”, or in the indicative mood, as in “if you take the rubbish out for collection, you should put it in a plastic bag”. The former has a purely directive function, whereas the latter can be used to issue a directive, to report the fact that such a directive has been made (or is implicit in one made), to express acceptance of the directive, or (most commonly) some combination of the three. In what follows, we focus on the purely directive function, ignoring the elements of report and acquiescence. We also abstract from the grammatical mood in which the directive is expressed, indicative or imperative.

Philosophically, it is widely accepted that directives and propositions differ in a fundamental respect. Propositions may bear truth-values, in other words may be true or false; but directives are items of another kind. They may be respected (or not), and may also be assessed from the standpoint of other directives as, for example, when a legal requirement is judged from a moral point of view (or vice versa). But it makes no sense to describe directives as true or as false.

If this is the case, how is it possible to construct a logic of directives, and in particular of conditional directives? The whole of classical logic revolves around the distinction between truth and falsehood. In this section, we will sketch one approach to this problem, recently developed by the present author with Leendert van der Torre. Called input/output logic, it provides a means of representing conditional directives and determining their consequences, without treating them as bearing truth-values.

2.2. Simple-minded output

For simplicity, we write a conditional directive ‘in condition a, do x’ as the ordered pair (a,x). To break old habits, we emphasise again that we are not trying to formulate conditions under which (a,x) is true, for as we have noted, directives are neither true nor false. Our task is a different one. Given a set G of conditional directives, which we call a code, we wish to formulate criteria under which a conditional directive (a,x) is implicit in G.

Another way of putting this is that we would like to define an operation out(.), such that out(G) consists of all the conditional directives (a,x) that are implicit in those already in G. Equivalently, we want an operation out(.,.) such that out(G,a) is the set of all propositions x such that (a,x) is implicit in G. These are equivalent because, given either one, we can define the other by the rule $x \in out(G,a)$ iff $(a,x) \in out(G)$.

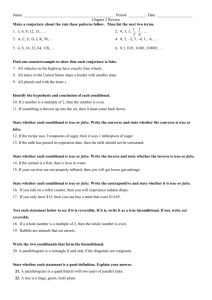

The simplest kind of input/output operation is depicted in Figure 1. It has two arguments G and a, where G is a code and a is an input proposition. The operation has three phases. First, the input a is expanded to its classical closure $Cn(a)$, i.e. the set of all propositions y that are consequences of a under classical (truth-functional) logic. Next, this set $Cn(a)$ is ‘passed through’ G, which delivers the corresponding immediate output $G(Cn(a))$. Here $G(X)$ is defined in the standard set-theoretic manner as the image of a set under a relation, so that $G(Cn(a)) = \{x : (b,x) \in G \text{ for some } b \in Cn(a)\}$. Finally, this is expanded by classical closure again to $out_1(G,a) = Cn(G(Cn(a)))$. We call this simple-minded output.

Figure 1: Simple-Minded Output. Diagram: the input $a$ is expanded to $Cn(a)$, passed through $G$ to give $G(Cn(a))$, and expanded again to $out_1(G,a) = Cn(G(Cn(a)))$.
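Simple-minded output can be computed directly when the language is finite. The Python sketch below is our own illustration under stated assumptions, not an implementation from the input/output logic literature. It assumes a language with a few hypothetical elementary letters and models propositions as Boolean functions of a valuation. Membership in $Cn$ is decided by checking every valuation.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Valuation = Dict[str, bool]
Prop = Callable[[Valuation], bool]   # a proposition, given by its truth-value at each valuation
Directive = Tuple[Prop, Prop]        # (body, head): 'in condition body, do head'

ATOMS = ("b", "c", "x", "y")         # hypothetical elementary letters

def valuations() -> List[Valuation]:
    return [dict(zip(ATOMS, bits)) for bits in product([True, False], repeat=len(ATOMS))]

def atom(name: str) -> Prop:
    return lambda v: v[name]

def entails(premises: List[Prop], conclusion: Prop) -> bool:
    """conclusion ∈ Cn(premises): true at every valuation that makes all the premises true."""
    return all(conclusion(v) for v in valuations() if all(p(v) for p in premises))

def in_out1(G: List[Directive], a: Prop, x: Prop) -> bool:
    """Decide whether x ∈ out1(G, a) = Cn(G(Cn(a)))."""
    # G(Cn(a)): the heads of those directives whose body is a classical consequence of a.
    heads = [head for body, head in G if entails([a], body)]
    return entails(heads, x)

b, c, x, y = (atom(name) for name in ATOMS)
G = [(b, x)]                                       # the code: 'in condition b, do x'

print(in_out1(G, lambda v: b(v) and c(v), x))    # True: b follows from b ∧ c
print(in_out1(G, c, x))                          # False: b does not follow from c
print(in_out1(G, b, lambda v: x(v) or y(v)))     # True: outputs are closed under Cn
```

The design choice is to never build $Cn(a)$ explicitly, which is infinite. It is enough to ask which bodies in G follow from a, and then whether the resulting heads entail the candidate output.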

Despite its simplicity, this is already an interesting operation. It gives us the implicit content of an explicitly given code of conditional directives, without treating the directives themselves as propositions: only the items serving as input and as output are so treated. It should be noted that the operation $out_1$ does not satisfy the principle of identity, which in this context is called throughput. That is, in general we do not have $a \in out_1(G,a)$. In the parallel notation, we do not have $(a,a) \in out_1(G)$. It also fails contraposition. That is, in general $x \in out_1(G,a)$ does not imply $\neg a \in out_1(G,\neg x)$. In the parallel notation, we can have $(a,x) \in out_1(G)$ without having $(\neg x,\neg a) \in out_1(G)$. Reflection on how we think of conditional directives in real life indicates that this is how it should be.

As an example, let the code G consist of just three conditional directives: (b,x), (c,y), (d,z). We call b, c, d the bodies of these directives, and x, y, z their respective heads. Let the input a be the conjunction $b \wedge (c \vee d) \wedge e \wedge \neg d$. Then the only bodies of elements of G that are consequences of a are b and c (c follows from $c \vee d$ together with $\neg d$, while d does not follow), so that $G(Cn(a)) = \{x, y\}$ and thus $out_1(G,a) = Cn(G(Cn(a))) = Cn(x,y)$. In the other notation, we can say that for any proposition w, we have $(a,w) \in out_1(G)$ iff $w \in Cn(x,y)$.
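The example can be checked mechanically. The sketch reads the input as $b \wedge (c \vee d) \wedge e \wedge \neg d$, which is our reconstruction of the formula, and decides consequence by brute force over valuations. It also confirms on this example that throughput and contraposition fail.

```python
from itertools import product

ATOMS = ("b", "c", "d", "e", "x", "y", "z")   # the letters of the example

def valuations():
    return [dict(zip(ATOMS, bits)) for bits in product([True, False], repeat=len(ATOMS))]

def atom(name):
    return lambda v: v[name]

def entails(premises, conclusion):
    """conclusion ∈ Cn(premises), checked over all 128 valuations."""
    return all(conclusion(v) for v in valuations() if all(p(v) for p in premises))

def in_out1(G, inp, out):
    """out ∈ out1(G, inp) = Cn(G(Cn(inp)))."""
    heads = [head for body, head in G if entails([inp], body)]
    return entails(heads, out)

b, c, d, e, x, y, z = (atom(name) for name in ATOMS)
G = [(b, x), (c, y), (d, z)]

def a(v):
    """The input, read here as b ∧ (c ∨ d) ∧ e ∧ ¬d."""
    return b(v) and (c(v) or d(v)) and e(v) and not d(v)

print(in_out1(G, a, lambda v: x(v) and y(v)))              # True:  x ∧ y ∈ Cn(x, y)
print(in_out1(G, a, z))                                    # False: z ∉ Cn(x, y)
print(in_out1(G, a, a))                                    # False: no throughput
print(in_out1(G, lambda v: not x(v), lambda v: not a(v)))  # False: no contraposition
```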


It can easily be shown that simple-minded output is fully characterized by just three rules. When formulating these rules it is convenient to use the notation $(a,x) \in out_1(G)$; indeed, as G is held constant in all the rules, we drop the unvarying part ‘$\in out_1(G)$’ from each of them. These are notational conventions to make the formulations easier to read. The rules characterizing simple-minded output are three:

Strengthening Input (SI): from (a,x) to (b,x) whenever $a \in Cn(b)$

Conjoining Output (AND): from (a,x), (a,y) to $(a, x \wedge y)$

Weakening Output (WO): from (a,x) to (a,y) whenever $y \in Cn(x)$.

It can be shown that these three rules suffice to provide passage from a code G to any element (a,x) of $out_1(G)$, by means of a derivation tree with leaves in $G \cup \{(t,t)\}$, where t is any classical tautology, and with root the desired element.
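Soundness of the three rules, i.e. that they never lead from elements of $out_1(G)$ to non-elements, can be spot-checked by brute force. The sketch below does this for one hypothetical code over a two-letter language, identifying each proposition with its set of models. It says nothing about completeness, which is the harder half of the characterization.

```python
from itertools import combinations, product

# Hypothetical two-letter language (p, q); a proposition is identified with its set of models.
WORLDS = frozenset(product([True, False], repeat=2))
PROPS = [frozenset(s) for r in range(len(WORLDS) + 1)
         for s in combinations(sorted(WORLDS), r)]        # all 16 propositions

def conj(props):
    """Models of a conjunction; the empty conjunction is the tautology."""
    models = WORLDS
    for prop in props:
        models = models & prop
    return models

def in_out1(G, a, x):
    heads = [head for body, head in G if a <= body]   # body ∈ Cn(a) iff models(a) ⊆ models(body)
    return conj(heads) <= x                            # x ∈ Cn(heads)

P = frozenset(w for w in WORLDS if w[0])
Q = frozenset(w for w in WORLDS if w[1])
G = [(P, Q), (Q, WORLDS - P)]                          # hypothetical code: (p, q) and (q, ¬p)

triples = list(product(PROPS, repeat=3))
# SI: a ∈ Cn(b) means models(b) ⊆ models(a).
si = all(in_out1(G, b, x) for a, b, x in triples if in_out1(G, a, x) and b <= a)
and_ = all(in_out1(G, a, x & y) for a, x, y in triples
           if in_out1(G, a, x) and in_out1(G, a, y))
wo = all(in_out1(G, a, y) for a, x, y in triples if in_out1(G, a, x) and x <= y)
print(si, and_, wo)   # True True True
```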

2.3. Stronger output operations

Simple-minded output lacks certain features that may be appropriate for some kinds of directive. In the first place, the treatment of disjunctive inputs is not very sophisticated. Consider two inputs a and b. By classical logic, we know that if $x \in Cn(a)$ and $x \in Cn(b)$ then $x \in Cn(a \vee b)$. But there is nothing to tell us that if $x \in out_1(G,a) = Cn(G(Cn(a)))$ and $x \in out_1(G,b) = Cn(G(Cn(b)))$ then $x \in out_1(G,a \vee b) = Cn(G(Cn(a \vee b)))$, essentially because G is an arbitrary set of ordered pairs of propositions. In the second place, even when we do not want inputs to be automatically carried through as outputs, we may still want outputs to be reusable as inputs, which is quite a different matter.
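Both shortcomings show up in tiny codes. The sketch below uses hypothetical letters a, b, x, y and brute-force evaluation over valuations. The first code gives x for input a and for input b, but not for $a \vee b$. The second gives x from a and y from x, but not y from a.

```python
from itertools import product

ATOMS = ("a", "b", "x", "y")   # hypothetical elementary letters

def valuations():
    return [dict(zip(ATOMS, bits)) for bits in product([True, False], repeat=len(ATOMS))]

def atom(name):
    return lambda v: v[name]

def entails(premises, conclusion):
    return all(conclusion(v) for v in valuations() if all(p(v) for p in premises))

def in_out1(G, inp, out):
    """out ∈ out1(G, inp) = Cn(G(Cn(inp)))."""
    heads = [head for body, head in G if entails([inp], body)]
    return entails(heads, out)

a, b, x, y = (atom(name) for name in ATOMS)

def a_or_b(v):
    return a(v) or b(v)

G1 = [(a, x), (b, x)]   # disjunctive input is not handled
print(in_out1(G1, a, x), in_out1(G1, b, x), in_out1(G1, a_or_b, x))   # True True False

G2 = [(a, x), (x, y)]   # outputs are not reused as inputs
print(in_out1(G2, a, x), in_out1(G2, x, y), in_out1(G2, a, y))        # True True False
```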

Operations satisfying each of these two features can be provided with explicit definitions, pictured by diagrams in the same spirit as that for simple-minded output. They too can be characterized by straightforward rules. We thus have four very natural systems of input/output, which are labelled as follows: simple-minded alias $out_1$ (as above), basic (simple-minded plus input disjunction: $out_2$), reusable (simple-minded plus reusability: $out_3$), and reusable basic (all together: $out_4$).

For example, reusable basic output $out_4$ may be given a diagram and definition as in Figure 2. The definition tells us that $out_4(G,a)$ consists of just those propositions that are in every set of the form $Cn(G(V))$, where V ranges over the complete sets of propositions that both contain a and are closed under G. Here, a complete set is one that is either maximally consistent or equal to the set of all formulae.

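Figure 2: Reusable Basic Output. Diagram: the input $a$ lies in complete sets such as $V_1$ and $V_2$, each closed under $G$; passing each through $G$ gives $G(V_1)$ and $G(V_2)$, whose closures $Cn(G(V_1))$ and $Cn(G(V_2))$ are intersected:

$out_4(G,a) = \bigcap\{Cn(G(V)) : a \in V \supseteq G(V),\ V \text{ complete}\}$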

It can be shown that these three stronger systems may be characterized by adding one or both of

the following rules to those for simple-minded output:

Disjoining input (OR):

From (a,x), (b,x) to (a∨b,x)

Cumulative transitivity (CT):

From (a,x), (a∧x,y) to (a,y).
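The effect of each rule can be seen on two small codes. The self-contained Python sketch below is an illustration, not the source's method: it assumes a finite language over hypothetical atoms a, b, x, y, with propositions represented as sets of valuations, and computes out1 and out4 directly from their definitions (prop, entails, out1 and out4 are illustrative names). For G1 = {(a,x), (b,x)}, OR takes us from (a,x) and (b,x) to (a∨b,x). For G2 = {(a,x), (x,y)}, strengthening the input of (x,y) gives (a∧x,y), and CT then takes us from (a,x) and (a∧x,y) to (a,y). In both cases out4 delivers the conclusion while out1 does not.

```python
from itertools import product

# Assumption: a finite language over four atoms; a proposition is the frozenset
# of (indices of) valuations that make it true, so a∨b is the union of sets.
ATOMS = ("a", "b", "x", "y")
VALUATIONS = [dict(zip(ATOMS, bits)) for bits in product([False, True], repeat=len(ATOMS))]
ALL = frozenset(range(len(VALUATIONS)))


def prop(test):
    """Build a proposition from a predicate on valuations."""
    return frozenset(i for i, v in enumerate(VALUATIONS) if test(v))


def entails(premises, conclusion):
    """conclusion ∈ Cn(premises), checked by truth tables."""
    models = ALL
    for p in premises:
        models = models & p
    return models <= conclusion


def out1(G, a, x):
    """Simple-minded output: x ∈ Cn(G(Cn(a)))."""
    return entails([head for body, head in G if entails([a], body)], x)


def out4(G, a, x):
    """Reusable basic output: x ∈ Cn(G(V)) for every complete V with a ∈ V ⊇ G(V)."""
    if not entails([head for _, head in G], x):  # V = the set of all formulae
        return False
    for i in range(len(VALUATIONS)):  # V = propositions true at valuation i
        g_of_v = [head for body, head in G if i in body]
        if i in a and all(i in head for head in g_of_v) and not entails(g_of_v, x):
            return False
    return True


A, B, X, Y = (prop(lambda v, p=p: v[p]) for p in ATOMS)

G1 = {(A, X), (B, X)}  # OR: from (a,x), (b,x) to (a∨b,x)
G2 = {(A, X), (X, Y)}  # CT: from (a,x), (a∧x,y) to (a,y)

print(out1(G1, A | B, X), out4(G1, A | B, X))  # False True
print(out1(G2, A, Y), out4(G2, A, Y))          # False True
```

Note that out1 already contains (a∧x,y) for G2, since Cn(a∧x) includes x; what it lacks is the step of reusing the output x as input, which CT supplies.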

There is a great deal more to input/output logics than we have sketched here. In particular, there is the problem, which must be faced by any approach to the logic of directives, of dealing adequately with what are called contrary-to-duty conditional directives. In general terms, the problem may be put as follows: given a set of norms, how should we determine which obligations are operative in a situation that already violates some among them? In the context of input/output logics, one way of approaching this question is via the imposition of consistency constraints on the application of input/output operations. Another question of interest is that of understanding conditional permissions. Here again, the input/output approach provides a convenient platform for clarifying the well-known contrast between positive and negative permission, as well as for distinguishing between different kinds of positive permission. However, in this brief review we leave these questions aside, directing the reader to the guide to further reading below.

SUMMARY

There are many kinds of conditional in human discourse. They can be used to assert, and they can be used to direct. On the level of assertion, the simplest kind of conditional is the truth-functional, alias material, conditional. It almost never occurs in pure form in daily language, but provides the kernel for a range of more complex kinds of conditional assertion, involving such features as universal quantification, temporal cross-reference, causal attribution, and defeasibility. Unlike conditional assertions, conditional directives cannot be described as true or false, and their logic has to be approached in a more circumspect manner. Input/output logic does this by examining the notion of one conditional directive being implicit in a code of such directives, bringing the force of classical logic to bear on the analysis without ever assuming that the directives themselves carry truth-values.

GUIDE TO FURTHER READING

The truth-functional conditional

All elementary textbooks of modern logic present and discuss the truth-functional conditional. A well-known text that carries the discussion further than most is W.V.O. Quine, Methods of Logic, fourth edition, Harvard University Press, 1982.

Temporal conditionals

The pioneering work on temporal logics was A.N. Prior, Time and Modality, Greenwood Publishing, 1979. For an introductory review, see e.g. Johan van Benthem “Temporal logic”, in Handbook of Logic in Artificial Intelligence and Logic Programming, vol. 4, ed. Gabbay, Hogger and Robinson, Oxford University Press, 1995, pp. 241-350, or Yde Venema “Temporal logic”, in The Blackwell Guide to Philosophical Logic, ed. Lou Goble, Blackwell, Oxford, 2001.


Defeasible conditionals: quantitative approaches

All elementary textbooks of probability discuss conditional probability. There is a short but clear introduction, with pointers to the literature, in chapter 14 of Stuart J. Russell and Peter Norvig, Artificial Intelligence: A Modern Approach, Prentice Hall, Upper Saddle River NJ, 1995. David Lewis’ impossibility result was established in his paper “Probabilities of conditionals and conditional probabilities”, The Philosophical Review 85: 297-315, 1976, reprinted with a postscript in his Philosophical Papers, Oxford University Press, 1987, pp. 133-156. On the attempt to bypass this result by falling back onto a three-valued logic and a modified probability theory, see e.g. Didier Dubois and Henri Prade “Possibility theory, probability theory and multiple-valued logics: a clarification”, Annals of Mathematics and Artificial Intelligence 32: 35-66, 2001.

Defeasible conditionals: non-quantitative approaches

The pioneering classics, in chronological order, are: R. Reiter “A logic for default reasoning”, Artificial Intelligence 13: 81-132, 1980, reprinted in M. Ginsberg ed., Readings in Nonmonotonic Reasoning, Morgan Kaufmann, Los Altos CA, 1987, pp. 68-93; David Poole “A logical framework for default reasoning”, Artificial Intelligence 36: 27-47, 1988; Yoav Shoham, Reasoning About Change, MIT Press, Cambridge MA, 1988. For a more recent overview of the literature, see David Makinson “General Patterns in Nonmonotonic Reasoning”, in Handbook of Logic in Artificial Intelligence and Logic Programming, vol. 3, ed. Gabbay, Hogger and Robinson, Oxford University Press, 1994, pp. 35-110. The chapter “Bridges between classical and nonmonotonic logic” in the present volume shows how these nonmonotonic relations emerge naturally from classical consequence.

Counterfactual conditionals

The best place to begin is probably still the classic presentation: David K. Lewis, Counterfactuals, Blackwell, Oxford, 1973. For a comparative review of the different uses of minimization in the semantics of counterfactuals, preferential conditionals, belief revision, update and deontic logic, see David Makinson “Five faces of minimality”, Studia Logica 52: 339-379, 1993.

Conditional directives


For an introduction, see David Makinson and Leendert van der Torre “What is input/output logic?”, in Foundations of the Formal Sciences II: Applications of Mathematical Logic in Philosophy and Linguistics, Trends in Logic Series, Kluwer, Dordrecht (to appear, 2002). For details, see the following three papers by the same authors: “Input/output logics”, Journal of Philosophical Logic 29: 383-408, 2000; “Constraints for input/output logics”, Journal of Philosophical Logic 30: 155-185, 2001; and “Permission from an input/output perspective” (to appear).

Last revised 03.10.02
