
Text Alignment Based on the Correlation of

Surface Lexical Sequences

IASON DEMIROS (1,2), CHRISTOS MALAVAZOS (2,3), IOANNIS TRIANTAFYLLOU (1,2)

(1) Institute for Language and Speech Processing, Artemidos & Epidavrou, 151 25, Athens

(2) National Technical University of Athens

(3) Hypertech S.A., 125-127 Kifissias Ave., 11524, Athens

GREECE

Abstract: - This paper addresses the problem of accurate and robust alignment across different languages. The

proposed method is inspired by automatic biomolecular sequencers and string matching algorithms. Lexical

information produced by a text handler and sentence recognizer is transformed into a lexical sequence, encoded in

a specific alphabet, in both languages. The algorithm uses a quantification of the similarity between two

sequences at the sentence level and a set of constraints, in order to identify isolated regions of high similarity that

yield robust alignments. Residues are subsequently aligned in a dynamic programming framework. The method is

fast, accurate and language independent and produces a success rate of ~95%.

Keywords: - alignment, lexical sequences, similarity metrics, sequence correlation, dynamic programming.

1

Introduction

Real texts reflect the current phenomena, usages and tendencies of language at a particular place and time. Recent years have seen a surge of interest in

bilingual and multilingual corpora, i.e. corpora

composed of a source text along with translations of

that text in different languages. One very useful

organization of bilingual corpora requires that

different versions of the same text be aligned. Given

a text and its translation, an alignment is a

segmentation of the two texts such that the n-th

segment of one text is the translation of the n-th

segment of the other. As a special case, empty

segments are allowed and correspond to translator’s

omissions or additions.

The deployment of learning and matching techniques in machine translation, first advocated in the early 1980s as “Translation by Analogy” (Nagao 1984), and the return to statistical methods in the early 1990s (Brown 1993) have given rise to much discussion as to the architecture and constituency of modern machine translation systems. Bilingual text processing, and in particular text alignment with the resulting exploitation of information extracted from the examples thus derived, created a new wave in machine translation (MT).

In this paper, we describe a text alignment

algorithm that was inspired by the recognition of

relationships and the detection of similarities in

protein and nucleic acid sequences, which are

crucial methods in the biologist’s armamentarium. A

preprocessing stage transforms the series of surface

linguistic phenomena such as dates, numbers,

punctuation, enumerations and abbreviations, into

lexical sequences. The algorithm performs pairwise

sequence alignment based on a local similarity

measure, a sequence block correlation measure and a

set of constraints. Residues of the algorithm are

subsequently aligned via Dynamic Programming.

The Aligner has been tested on various text types in

several languages, with exceptionally good results.

2

Background

Several different approaches have been proposed to tackle the alignment problem at various

levels. Catizone's technique (Catizone 1989) was to

link regions of text according to the regularity of

word co-occurrences across texts. (Brown 1991)

described a method based on the number of words

that sentences contain.

(Gale 1991) proposed a method that relies on a

simple statistical model of character lengths. The

model is based on the observation that lengths of

corresponding sentences between two languages are

highly correlated. Although the apparent efficacy of

the Gale-Church algorithm is undeniable and

validated on different pairs of languages (English-

German-French-Czech-Italian), it seems to be

awkward when handling complex alignments.

Given the availability in electronic form of texts

translated into many languages, an application of

potential interest is the automatic extraction of word

equivalencies from these texts. (Kay 1991) has

presented an algorithm for aligning bilingual texts

on the basis of internal evidence only. This

algorithm can be used to produce both sentence

alignments and word alignments.

(Simard 1992) argues that a small amount of

linguistic information is necessary in order to

overcome the inherent weaknesses of the Gale-Church method. He proposed using cognates, which

are pairs of tokens across different languages that

share "obvious" phonological or orthographic and

semantic properties, since these are likely to be used

as mutual translations.

(Papageorgiou 1994) proposed a generic

alignment scheme invoking surface linguistic

information coupled with information about possible

unit delimiters depending on the level at which

alignment is sought.

Many algorithms were developed for sequence

alignment in molecular biology. The Needleman-Wunsch method (Needleman 1970) is a brute-force

approach performing global alignment on a dynamic

programming basis. Wilbur and Lipman (Wilbur

1983) introduced the concept of k-tuples in order to

focus only on areas of identity between two

sequences. (Smith 1981) describes a variation of the

Needleman-Wunsch algorithm that yields local

alignment and is known as the Smith-Waterman

algorithm. Both FASTA (Pearson 1988) and BLAST

(Altschul 1990) place restrictions on the Smith-Waterman model by employing a set of heuristics

and are widely used in BioInformatics.

3

Alignment Framework

3.1

Alignment, the Basis for Sequence Comparison

Aligning two sequences is the cornerstone of

BioInformatics. It may be fairly said that sequence

alignment is the operation upon which everything

else is built. Sequence alignments are the starting

points for predicting the secondary structure of

proteins, for the estimation of the total number of

different types of protein folds and for inferring

phylogenetic trees and resolving questions of

ancestry between species. This power of sequence

alignments stems from the empirical finding, that if

two biological sequences are sufficiently similar,

almost invariably they have similar biological

functions and will be descended from a common

ancestor. This is a non-trivial statement. It implies two important facts about the syntax and the semantics of the encoding of function in sequences: (i) function is encoded into sequence, which means that the sequence provides the syntax, and (ii) there is a redundancy in the encoding: many positions in the sequence may change without perceptible changes in the function, thus the semantics of the encoding is robust.

3.2

Lexical Information, Alphabets and

Sequences

Generally speaking, an alphabet is a set of

symbols or a set of characters, from which

sequences are composed. The Central Dogma of

Molecular Biology describes how the genetic

information we inherit from our parents is stored in

DNA, and how that information is used to make

identical copies of that DNA and is also transferred

from DNA to RNA to protein. DNA is a linear

polymer of 4 nucleotides and the DNA alphabet

consists of their initials, {A, C, G, T}.

The text alignment architecture that we propose

is a quantification of the similarity between two

sequences, where the model of nucleotide sequences

that is used in molecular biology is replaced by a

model of lexical sequences derived from the

identification of surface linguistic phenomena in

parallel texts.

Inspired by the facts presented in 3.1, about

encoding function in sequences, we convert the text

alignment problem into a problem of aligning two

sequences encoding surface lexical information

characterizing the underlying parallel data. Yet,

although Descartes' reductionism has turned out to be

a home run in molecular biology, we understand

that, by and large, global, complete solutions are not

available for a pairwise sequence alignment, and we

try to constrain the problem with information that

can be inferred from the nature of the text alignment

problem.

3.3

Handling Texts

Recognizing and labelling surface phenomena

in the text is a necessary prerequisite for most

Natural Language Processing (NLP) systems. At this

stage, texts are rendered into an internal

representation that facilitates further processing.

Basic text handling is performed by a MULTEXT-like tokenizer (Di Christo 1995) that identifies word boundaries, sentence boundaries, abbreviations, numbers, dates, enumerations and punctuation. The

tokenizer makes use of a regular-expression based

definition of words, coupled with downstream

precompiled lists for abbreviations in many

languages and simple heuristics. This proves to be

quite successful in effectively recognizing sentences

and words, with accuracy up to 96%. The text

Handler is responsible for transforming the parallel

texts from the original form in which they are found

into a form suitable for the manipulation required by

the application. Although its role is often considered

as trivial, in fact it is crucial for subsequent steps

that heavily rely on correct word boundary

identification and sentence recognition. The lexical

resources of the handler are constantly enriched with

abbreviations, compounding lists, dates, number and

enumeration formats for new languages.

A certain level of interactivity with the user is

supported, by indirect manipulation of pre-fixed

sentence splitting patterns such as a new line

character followed by a word starting with an

uppercase character. The text handler has been

ported to the Unicode standard and the number of

treated and tested languages has reached 14.

3.4

Sequences of lexical symbols

The streams of characters are read into the

handler from the parallel texts and then successively

processed through the different components to

produce streams of higher level objects, that we call

tokens. Streams of tokens are converted into

sequences according to mapping rules that are

presented in Table 1.

| Origin | Symbol |
|---|---|
| abbreviation | a |
| number | d |
| date | h |
| enumeration | e |
| initials | i |
| right parenthesis ¹ | k |
| left parenthesis | m |
| punctuation ² | u |
| delimiters ³ | t |
| period | p |

Table 1: Summary of sequences alphabet

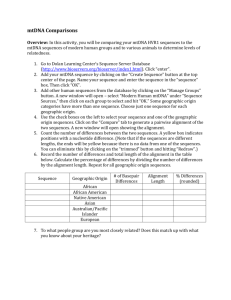

¹ Including all right brackets such as ( , { , [ , « , etc. Equivalently, left parenthesis includes all left brackets such as ) , } , ] , » , etc.

² Such as comma (,), star (*), plus (+), etc.

³ Such as colon (:), exclamation (!), semicolon (;), etc.

Figure 1 details an extract of 5 sentence sequences that were created by a conversion of lexical information according to the rules presented in Table 1. For instance, the fourth sentence of the extract contains an enumeration symbol (e), a number symbol (d), four punctuation symbols (u) and finally a semicolon (t) that functions as sentence delimiter. The corresponding sentence index precedes each sentence sequence.

1:d

2:

3:eukdmuuuuut

4:eduuuut

5:uuut

Figure 1: Example of lexical sequences
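The conversion from token streams to lexical sequences can be illustrated with a minimal Python sketch. The symbols are those of Table 1; the token-kind names used as dictionary keys, the `encode_sentence` helper and the sample token list are illustrative assumptions, not the handler's actual interface.

```python
# Symbol alphabet of Table 1. The key names are hypothetical labels for the
# token kinds a tokenizer might emit; only the symbols come from the paper.
SYMBOLS = {
    "abbreviation": "a",
    "number": "d",
    "date": "h",
    "enumeration": "e",
    "initials": "i",
    "right_parenthesis": "k",
    "left_parenthesis": "m",
    "punctuation": "u",
    "delimiter": "t",
    "period": "p",
}


def encode_sentence(token_kinds):
    """Map the token kinds of one sentence to its lexical sequence.

    Kinds without a symbol (for example plain words) are skipped, so a
    sentence with no surface phenomena yields the empty sequence.
    """
    return "".join(SYMBOLS[kind] for kind in token_kinds if kind in SYMBOLS)


# A made-up sentence whose token kinds reproduce sequence 4 of Figure 1.
kinds = ["enumeration", "word", "number", "punctuation", "word",
         "punctuation", "punctuation", "punctuation", "delimiter"]
print(encode_sentence(kinds))  # eduuuut
```

Plain words carry no symbol in this sketch, which is why a sentence without dates, numbers or punctuation maps to an empty sequence, as in line 2 of Figure 1.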

4

Algorithm Description

4.1

Problem Formulation

A = {a, d, h, e, i, k, m, u, t, p} is the symbol

alphabet.

Each sentence of the parallel texts is

transformed into one sequence, according to Table 1.

Empty sequences are valid sequences.

S is the set of all finite sequences of characters

from A, having their origin in the Source text.

T is the set of all finite sequences of characters

from A, having their origin in the Target text.

$S_i$ denotes the i-th sequence in S, corresponding to the i-th sentence of the Source text.

$T_j$ denotes the j-th sequence in T, corresponding to the j-th sentence of the Target text.

4.2

Methodology

The basic presupposition is that parallel files present local structural similarity. A certain degree of structural divergence is expected at the level of sentence sequences, due to translational divergences, as well as at the level of blocks of sentence sequences, due to asymmetric m:n alignments between source and target language blocks.

We argue that parallel files contain a

significant amount of source and target language

blocks of surface lexical sequences, of variable

length, that display a high degree of correlation.

These blocks can be captured, in a highly reliable

manner, based solely on internal evidence and local

alignments can be established that play the role of

anchors in any typical alignment process.

To this end, similarity is sought along two

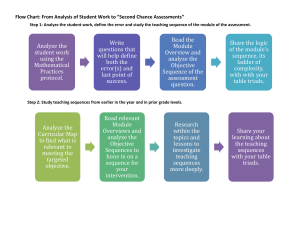

different dimensions (Figure 2): a)Horizontal or

Intra-sentential, where corresponding source and

target language sentence sequences are compared on

the basis of the elements they contain, and

b)Vertical or Inter-sentential, where corresponding

source and target language blocks of sentence

sequences, of variable length, are associated, based

on the similarity of their constituent sentence

sequences.

Inter-Sentential

IntraSentential

At each iteration, the search space of potential

source anchors is reduced (Search Space Reduction)

by examining only those source points n that could

constitute the starting sequence of an anchor block.

In order for a point ns to be considered as such, it

should meet the following criteria :

Target File Point nt :

n-w

SIM(Sns,Tnt) CCS,

n

(1)

SIM(Sns,Tnt) - SIM(Sns-1,Tnt) CCD (2)

SIM(Sns,Tnt) - SIM(Sns,Tnt-1) CCD (3)

n+w

Figure 2: Intra/Inter-Sentential

similarity

The overall alignment process flow is depicted

in Figure 3. At each iteration, candidate blocks of

sequences of variable size (N), starting at source file

point n, are examined against the target file blocks

of sequences, through a crosscorrelation process

described in detail in the following section.

Crosscorrelation is a mathematical operation, widely

used in discrete systems, that measures the degree to

which two sequences of symbols or two signals are

similar. As shown in Figure 2, only pairs with maximum normalized deviation less than $w = O(\sqrt{\mathit{file\_length}})$, a threshold originally introduced by (Kay 1991), are considered, where file_length is the mean value of the number of sentences contained in the parallel texts (Compute Target Search Window).

Figure 3. General Process Flow: Search Space Reduction (Find Next Candidate Starting Point n) → Compute Target Search Window [n-w, n+w] → Crosscorrelation Process → Store Anchor Block.

In criteria (1)-(3), SIM( ) is the intra-sentential similarity measure, and CCS and CCD are the similarity threshold and deviation threshold respectively, described in detail in the following sections.

At the end of each iteration, the best matching

anchor area is stored in the global set of anchor

points.
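The search space reduction step can be sketched as follows. This is a minimal illustration under stated assumptions: criteria (1)-(3) are read as "greater than or equal" tests, the window half-width w is taken as the rounded-up square root of the mean sentence count, and the exact-match `exact` function is only a stand-in for the intra-sentential measure SIM. The function names are hypothetical.

```python
import math


def window_half_width(n_source, n_target):
    """Half-width w of the target window (assumed: ceil of sqrt of mean length)."""
    return math.ceil(math.sqrt((n_source + n_target) / 2))


def is_candidate_start(ns, source, target, sim, ccs=0.8, ccd=0.8):
    """True if some target point nt in [ns-w, ns+w] satisfies criteria (1)-(3)."""
    w = window_half_width(len(source), len(target))
    first = max(0, ns - w)
    last = min(len(target) - 1, ns + w)
    for nt in range(first, last + 1):
        here = sim(source[ns], target[nt])
        # Neighbours outside the file are treated as having similarity 0.
        prev_source = sim(source[ns - 1], target[nt]) if ns > 0 else 0.0
        prev_target = sim(source[ns], target[nt - 1]) if nt > 0 else 0.0
        if (here >= ccs
                and here - prev_source >= ccd
                and here - prev_target >= ccd):
            return True
    return False


def exact(a, b):
    """Stand-in similarity: 1.0 for identical sequences, 0.0 otherwise."""
    return 1.0 if a == b else 0.0


source = ["d", "eduuuut", "uuut", "uuut"]
target = ["d", "eduuuut", "uuut", "uuut"]
flags = [is_candidate_start(i, source, target, exact) for i in range(len(source))]
print(flags)
```

In this toy input the last source point is rejected because the preceding source sequence matches the same target points equally well, so the deviation tests (2) and (3) fail. This is the intended effect of the criteria: only points where similarity rises sharply can start an anchor block.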

4.3

Intra-sentential Comparison

Sentences are encoded into one-dimensional

sequences based on their respective surface lexical

elements. However, during translation certain

elements act as optional, thus introducing a

significant amount of noise into the corresponding

target language sequences. In order to reduce the

effect of such distortion on the pattern matching

process, sequences are normalized (k, m optional, d+ → d, u+ → u, dud → dd) and comparison is also

performed on the normalized forms. The final

similarity measure is produced by averaging the two

individual scores. We have experimented with two

alternative methods of intra-sentential comparison,

Edit Distance and shared N-gram matching,

described below. The latter proved to be more efficient, since it performs equally well while requiring 30 times less processing time (Willman 1994).

4.3.1

Edit Distance

An "enhanced" edit distance between source and target language sequences is computed, that is, the minimum number of editing actions (insertions, deletions, substitutions, movements and transpositions) required to transform one sequence into the other. The process was implemented through a dynamic programming framework that treats pattern matching as a problem of traversing a directed acyclic graph. The method originally proposed in (Wagner 1974) was enriched by introducing additional actions (movements, transpositions) that frequently occur in the translation process.
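As a rough illustration, the sketch below computes a Wagner-Fischer edit distance extended with adjacent transpositions (the "optimal string alignment" variant). It is not the enhanced distance described above: movements of symbols over longer spans are not modelled, and the conversion of a distance into a similarity score in `edit_similarity` is an assumption made for this example.

```python
def edit_distance(a, b):
    """Edit distance with insertions, deletions, substitutions and
    transpositions of adjacent symbols, computed by dynamic programming."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i  # delete all of a[:i]
    for j in range(cols):
        d[0][j] = j  # insert all of b[:j]
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # match or substitution
            if (i > 1 and j > 1
                    and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]):
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # transposition
    return d[-1][-1]


def edit_similarity(a, b):
    """Assumed normalisation: 1 minus distance over the longer length."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - edit_distance(a, b) / longest


print(edit_distance("eduuuut", "eduuut"))  # 1
print(edit_distance("ud", "du"))           # 1
```

The table has one cell per pair of prefixes, so the cost grows with the product of the two sequence lengths, which is consistent with the n-gram method of the next section being cheaper.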

4.3.2

Shared n-gram method

The similarity of two patterns is assumed to be

a function of the number of common n-grams they

contain (Adamson 1974). In the proposed

framework association measures between sequences

are calculated based on the Dice Coefficient of

shared unique overlapping n-grams. We have

experimented with bigrams (n=2) and trigrams (n=3)

as the units of comparison and discovered that

bigrams performed better.
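A minimal sketch of the Dice coefficient over unique overlapping n-grams is given below. The handling of sequences shorter than n (no n-grams on either side) is an assumption of this example, as the text does not specify it.

```python
def ngrams(seq, n=2):
    """Set of unique overlapping n-grams of a lexical sequence."""
    return {seq[i:i + n] for i in range(len(seq) - n + 1)}


def dice(a, b, n=2):
    """Dice coefficient: twice the shared n-grams over the total n-grams."""
    grams_a, grams_b = ngrams(a, n), ngrams(b, n)
    if not grams_a and not grams_b:
        # Assumption: two sequences too short to have n-grams are
        # similar only when they are identical.
        return 1.0 if a == b else 0.0
    return 2 * len(grams_a & grams_b) / (len(grams_a) + len(grams_b))


print(dice("eduuuut", "eduuut"))                # 1.0
print(round(dice("eukdmuuuuut", "eduuuut"), 3)) # 0.364
```

Because n-grams are counted once each, runs of repeated punctuation symbols of different length (`uuuu` versus `uuu`) do not lower the score, which gives the measure some tolerance to the optional elements discussed in Section 4.3.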

4.4

Inter-Sentential Comparison

(Crosscorrelation)

Alignment between blocks of source and target

language sentence sequences is performed based on

the structural similarity of consecutive sequences.

Let Sn and Tk be the source and target language

sequences at position n and k, respectively and

L = k-n be the lag between the respective file

offsets, in number of sentences (see Figure 1). Then,

the crosscorrelation (CC) between blocks of size N,

starting at position n and k (= n+L ) is given by the

following formula :

$$CC(n, L, N) = \frac{1}{N} \sum_{i=0}^{N-1} sim\big(x(n+i),\ y(n+L+i)\big)$$

Since the total sum of similarity scores between corresponding sequences increases with the size N of the respective blocks, longer blocks tend to produce higher similarity values. For that reason, the total value is normalised over the size of the blocks, in number of sequences under comparison.

Alignment is performed through an iterative process. For every source file position n, blocks of variable size N starting at this position are matched against target blocks of the same size (Figure 2). As already mentioned, for each source pattern starting at position n, only target patterns in the window [n-w, n+w] are examined, where $w = O(\sqrt{\mathit{file\_length}})$ is the maximum deviation threshold.

Our goal is to discover the source points n, along with the particular L (target block lag) and N (block size) values, for which we get the highest degree of correlation between the respective source and target blocks (starting at positions n and n+L respectively).

More specifically, we define as an anchor each tuple <n,L,N> for which the crosscorrelation value CC(n,L,N) is above a predefined similarity threshold (CCS) and also the deviation from the crosscorrelation values between the current source block and the preceding and following target blocks is above a deviation threshold (CCD). Therefore, a tuple <n,L,N> constitutes a candidate anchor when the following conditions are met:

$$CC(n, L, N) \geq CCS \qquad (4)$$

$$CC_{preceding} \geq CCD \qquad (5)$$

$$CC_{following} \geq CCD \qquad (6)$$

where

$$CC_{preceding} = CC(n, L, N) - CC(n, L-1, N)$$

$$CC_{following} = CC(n, L, N) - CC(n, L+1, N)$$

We have experimented with a wide range of threshold values (60-90% for CCS and 70-90% for CCD) and measured respective recall and precision values. A maximum occurred when both thresholds were set to approximately 80%.

Crosscorrelation Process: The correlation process is presented in detail in Figure 4. For each candidate start point n found by the previous process:

Step-1: The process examines blocks continuously increasing in size (N), starting at point n, until no candidate anchor block is found.

Step-2: For each candidate source anchor

sequence, the respective target file area (Lmin,Lmax) is

computed, based on the deviation threshold w,

within which the corresponding target sequence will

be sought:

Lmin = n – w

Lmax = n + w – N + 1

Step-3: The crosscorrelation CC(n,L,N)

between the current source block of size N, starting

at point n and each target block of the same size,

within the previously specified area (Lmin,Lmax), is

computed.

Step-4: (Condition [A]) If conditions (4), (5), and (6) are met, then the current tuple is stored in the local set of candidate anchors (Step-5); otherwise it is ignored and the process continues with Step-6.

Step-5: The tuple <n,L,N> is stored in the set

of local candidates, along with the respective

correlation measures (CC, CCpreceding,CCfollowing).

The best candidate tuple will be selected at the end,

before examining the next candidate starting point n.

Step-6: If there are still target blocks to

examine, go to Step-7, else go to Step-8.

Step-7: Move to the next target candidate

sequence by increasing the lag (L=L+1) and return

to Step-3 (Crosscorrelation).

Step-8: (Condition [B]) If a candidate has been found, then return to Step-1 and continue the search for larger sequences. If there was no candidate found during the last iteration, then end the process. Find the best candidate anchor tuple <n,L,N> from the local set of candidates (if any) and store it in the set of global anchor blocks.

The best candidate for each point n is selected from the set of all candidates based on the following maximization criteria, listed in order of importance:

$$N > CC(n,L,N) > (CC_{preceding} + CC_{following})/2 > (1/L) \qquad (7)$$

Figure 4: Crosscorrelation for source start point n. Flowchart steps: Increase Sequence Size (N = N+1); Compute lag (Lmin, Lmax); CC(Source Starting Point, L, N); Condition [A] (YES: Store Candidate Anchor Block); L < Lmax (YES: Increase lag, L = L+1); Condition [B] (YES: increase sequence size; NO: Select Best Candidate Anchor Block).

4.5

Example

In Figure 5 we present the Crosscorrelation results for 3 successive block size values of candidate blocks starting at the same $n_s$ position (dashed line for N=3, continuous line for N=4, dotted line for N=5). The horizontal axis represents the lag value L, where Lmin=0, while the vertical axis represents the respective Crosscorrelation (CC) values. The only tuple that meets the required criteria (7), thus producing an acceptable anchor block, is the one for block size N=4: <ns,L,4>.

Figure 5: 2-D CrossCorrelation Plot for 3 different values of the anchor block size N (3,4,5)

Figure 6: 3-D CrossCorrelation Plot for 3 different values of the anchor block size N (3,4,5)

Figure 6 is a 3-dimensional representation of the

same space defined by all tuple values <CC, L,N>

corresponding to the previous blocks of sequences.

Through this diagram we obtain a more complete

and thorough view of the crosscorrelation variation

with respect to the lag (L) and block size (N) values.
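The crosscorrelation of Section 4.4 and the anchor conditions (4)-(6) can be sketched in a few lines of Python. Several points are assumptions of this sketch rather than details given in the paper: the conditions are read as "greater than or equal" tests, the lag is scanned over [-w, w] instead of the (Lmin, Lmax) bounds of Step-2, blocks that fall outside a file receive a crosscorrelation of 0, and the last criterion of (7), 1/L, is approximated by preferring the smallest |L|. The exact-match `exact` function stands in for the intra-sentential measure.

```python
def cc(source, target, n, lag, size, sim):
    """CC(n, L, N): mean similarity of `size` aligned sentence pairs.

    Returns 0.0 when either block would leave its file (an assumption).
    """
    k = n + lag
    if n < 0 or k < 0 or n + size > len(source) or k + size > len(target):
        return 0.0
    return sum(sim(source[n + i], target[k + i]) for i in range(size)) / size


def candidate_anchors(source, target, n, size, w, sim, ccs=0.8, ccd=0.8):
    """Tuples (n, L, N, CC, CC_preceding, CC_following) meeting (4)-(6)."""
    found = []
    for lag in range(-w, w + 1):
        k = n + lag
        if k < 0 or k + size > len(target) or n + size > len(source):
            continue  # the block does not fit inside the files
        value = cc(source, target, n, lag, size, sim)
        preceding = value - cc(source, target, n, lag - 1, size, sim)
        following = value - cc(source, target, n, lag + 1, size, sim)
        if value >= ccs and preceding >= ccd and following >= ccd:
            found.append((n, lag, size, value, preceding, following))
    return found


def best_anchor(candidates):
    """Rank by N, then CC, then mean deviation, then small |L| (cf. (7))."""
    if not candidates:
        return None
    return max(candidates,
               key=lambda c: (c[2], c[3], (c[4] + c[5]) / 2, -abs(c[1])))


def exact(a, b):
    """Stand-in similarity: 1.0 for identical sequences, 0.0 otherwise."""
    return 1.0 if a == b else 0.0


# The target has one extra leading sentence, so the true lag is L = 1.
source = ["d", "", "eukdmuuuuut", "eduuuut", "uuut"]
target = ["p", "d", "", "eukdmuuuuut", "eduuuut", "uuut"]
anchors = candidate_anchors(source, target, n=2, size=2, w=2, sim=exact)
print(best_anchor(anchors))  # (2, 1, 2, 1.0, 1.0, 1.0)
```

The deviation tests are what make an anchor robust: a block is accepted only if shifting the target by one sentence in either direction makes the correlation drop sharply.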

4.6

Residues Alignment by Dynamic

Programming

The sentence-level alignment of residues (regions

between anchor blocks that remain unaligned), is

based on the simple but effective statistical model of

character lengths introduced by (Gale 1991). The

model makes use of the fact that longer sentences in

one language tend to be translated into longer

sentences in the other language, and that shorter

sentences tend to be translated into shorter

sentences. A probabilistic score is assigned to each proposed sentence pair, based on the ratio of the lengths of the two sentences (in number of characters). The score is computed by integrating a

normal distribution. This probabilistic score is used

in a dynamic programming framework in order to

find the Viterbi alignment of sentences.
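The length-based residue alignment can be sketched as below, in the spirit of (Gale 1991). The parameter values (`C`, `S2`, `PRIORS`) follow those reported by Gale and Church and should be treated as assumptions to be re-estimated per language pair; the function names and the bead representation are illustrative only.

```python
import math

C, S2 = 1.0, 6.8  # expected length ratio and variance (assumed values)
PRIORS = {(1, 1): 0.89, (1, 0): 0.0099, (0, 1): 0.0099,
          (2, 1): 0.089, (1, 2): 0.089, (2, 2): 0.011}


def length_cost(len1, len2, prior):
    """Negative log probability of pairing texts of len1 and len2 characters."""
    if len1 == 0 and len2 == 0:
        return 0.0
    mean = (len1 + len2 / C) / 2
    delta = (len2 - len1 * C) / math.sqrt(mean * S2)
    # Two-sided tail of the standard normal distribution.
    tail = 2 * (1 - 0.5 * (1 + math.erf(abs(delta) / math.sqrt(2))))
    return -math.log(max(tail, 1e-300) * prior)


def align(src_lens, tgt_lens):
    """Minimum-cost alignment as beads ((s_from, s_to), (t_from, t_to))."""
    n, m = len(src_lens), len(tgt_lens)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    back = [[None] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(n + 1):
        for j in range(m + 1):
            if cost[i][j] == inf:
                continue
            for (di, dj), prior in PRIORS.items():
                ii, jj = i + di, j + dj
                if ii > n or jj > m:
                    continue
                step = length_cost(sum(src_lens[i:ii]),
                                   sum(tgt_lens[j:jj]), prior)
                if cost[i][j] + step < cost[ii][jj]:
                    cost[ii][jj] = cost[i][j] + step
                    back[ii][jj] = (i, j)
    beads, i, j = [], n, m
    while (i, j) != (0, 0):  # follow back-pointers from the end
        pi, pj = back[i][j]
        beads.append(((pi, i), (pj, j)))
        i, j = pi, pj
    return beads[::-1]


# Character lengths of three source and four target sentences (made up).
beads = align([20, 55, 30], [22, 25, 28, 31])
print(beads)
```

In this made-up residue the second source sentence (55 characters) is paired with the second and third target sentences (25 + 28 characters), a 1:2 bead, because that choice keeps every length ratio close to the expected one.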

5

Experimental Results and

Ongoing Work

We have evaluated our algorithm in a Translation

Memory environment that is designed to enhance the

translator’s work by making use of previously

translated and stored text. Dealing with users’ real

world documents has proved much different than

aligning the Hansards or the Celex directives. We

have aligned texts where the lexical unit and

sentence recognition error rate varied from very low

to very high - almost 50% - due to the peculiar

format of the input documents. The testing corpus

comprised user manuals, laws, conventions and

directives in 11 languages. Handler errors were left

intact and the whole process was fully automated.

We have tested the method on a European

Convention document that was aligned in 11

languages and contained 750 alignments in each

pair, manually inspected and corrected. The portion

of the bitext that was aligned by the algorithm was,

on average 20%. An error of 5% was reported while

the error in the residues that were aligned via

dynamic programming was approximately 10%. For

a user manual alignment in English and French

where the dynamic programming framework yielded

a 20% error, due to sentence boundaries

identification errors, our algorithm was able to

identify stable regions of robust alignment, resulting in a 10% error. In general, we have been able to

identify homology between surface lexical

sequences by isolating regions of similarity, that

constitute significant diagonals in a dot-plot, by

using minimal information.

Another important characteristic of the method is

the low running time and the small amount of

memory space required by the computations, since

the core strategies of the algorithm are pattern

matching and window sliding. There are several

important questions that are not answered by the

paper, such as the existence of crossings in the

alignment and large omissions in one of the parallel

texts. Yet, we do believe that using surface linguistic

information for a robust sentence alignment is a very

promising idea that we aim at further exploiting in

the future.

References:

[1] G. Adamson, J. Boreham. 1974. The use of an

association measure based on character

structure to identify semantically related pairs

of words and document titles, In Information

Storage and Retrieval, 10:253-260.

[2] S. F. Altschul, W. Gish, W. Miller, E. W.

Myers, D. J. Lipman. 1990. Basic local

alignment search tool. In Journal of Molecular

Biology,215:403-410.

[3] P. F. Brown, Stephen A. Della Pietra, Vincent

J. Della Pietra, Robert L. Mercer. 1993. The

Mathematics of Statistical Machine Translation:

Parameter Estimation. In Computational

Linguistics, 19(2), 1993.

[4] P. F. Brown, J. C. Lai, R. L. Mercer. 1991.

Aligning Sentences in Parallel Corpora. In

Proceedings of the 29th Annual Meeting of the

ACL, pp 169-176, 1991.

[5] R. Catizone, G. Russell, S. Warwick. 1989.

Deriving translation data from bilingual texts.

In Proceedings of the First Lexical Acquisition

Workshop, Detroit 1989.

[6] Di Christo. 1995. Set of programs for

segmentation and lexical look up, MULTEXT

LRE 62-050 project Deliverable 2.2.1, 1995.

[7] W. A. Gale and K. W. Church. 1991. A

Program for Aligning Sentences in Bilingual

Corpora. In Proceedings of the 29th Annual

Meeting of the ACL., pp 177-184, 1991.

[8] M. Kay, M. Roscheisen. 1991. Text-Translation

Alignment. In Computational Linguistics 19(1),

1991.

[9] M. Nagao. 1984. A framework of a mechanical

translation between Japanese and English by

analogy principle. In Artificial and Human

Intelligence, ed. Elithorn A. and Banerji R.,

North-Holland, pp 173-180, 1984.

[10] S. B. Needleman, C. D. Wunsch. 1970. A

general method applicable to the search for

similarities in the amino acid sequences of two

proteins. In Journal of Molecular Biology, 48,

443-453.

[11] H. Papageorgiou, L. Cranias and S. Piperidis.

1994. Automatic alignment in parallel corpora.

In Proceedings of the 32nd Annual Meeting of

the ACL, 1994.

[12] W. R. Pearson, D. J. Lipman. 1988. Improved

tools for Biological Sequence Comparison. In

Proceedings Natl. Acad. Sci. USA 1988,

85:2444-2448.

[13] M. Simard, G. Foster and P. Isabelle. 1992.

Using cognates to align sentences in bilingual

corpora. In Proceedings of TMI, 1992.

[14] T. F. Smith, M. S. Waterman. 1981.

Identification

of

common

molecular

subsequences. In Journal of Molecular Biology,

147:195-197.

[15] R. A. Wagner, M. J. Fischer. 1974. The string-to-string correction problem, In Journal of ACM,

21:168-173.

[16] W. J. Wilbur, D. J. Lipman. 1983. Rapid

similarity searches of nucleic acid and protein

data banks. In Proceedings Natl. Acad. Sci.

USA 1983; 80:726-730.

[17] N. Willman. 1994. A prototype Information

Retrieval System to perform a Best-Match

Search for Names, In RIAO94, Conference

Proceedings, 1994.